Relative Error-based Time-limited Model Order Reduction via Oblique Projection

Abstract

In time-limited model order reduction, a reduced-order approximation of the original high-order model is obtained that accurately approximates the original model within the desired limited time interval. Accuracy outside that time interval is not that important. The error incurred when a reduced-order model is used as a surrogate for the original model can be quantified in absolute or relative terms to access the performance of the model reduction algorithm. The relative error is generally more meaningful than an absolute error because if the original and reduced systems’ responses are of small magnitude, the absolute error is small in magnitude as well. However, this does not necessarily mean that the reduced model is accurate. The relative error in such scenarios is useful and meaningful as it quantifies percentage error irrespective of the magnitude of the system’s response. In this paper, the necessary conditions for a local optimum of the time-limited norm of the relative error system are derived. Inspired by these conditions, an oblique projection algorithm is proposed that ensures small -norm relative error within the desired time interval. Unlike the existing relative error-based model reduction algorithms, the proposed algorithm does not require solutions of large-scale Lyapunov and Riccati equations. The proposed algorithm is compared with time-limited balanced truncation, time-limited balanced stochastic truncation, and time-limited iterative Rational Krylov algorithm. Numerical results confirm the superiority of the proposed algorithm over these existing algorithms.

keywords:

norm, model order reduction, oblique projection, optimal, relative error, time-limited1 Introduction

Computer-aided simulation and analysis have been a major driver in scientific innovation and progress for the last several decades. The dynamical systems and processes, which can be natural or artificial, are mathematically modeled to be used in a computer program that can simulate and analyze that system for endless possible inputs, settings, and scenarios. During the mathematical modeling process, the behavior of the system or process is generally described by partial differential equations (PDEs). To utilize the mathematical tools available in dynamical system theory, the PDEs are converted into ordinary differential equations (ODEs), which are then expressed as a state-space model [1, 2].

Let be the inputs, be the outputs, and be the states of the state-space model of the dynamical system . Then the state-space equations of can be written as

wherein , , , and . The state-space realization has the following equivalence with the transfer function of

Note that the simulation of requires the solution of differential equations. The computing power of modern-day computers has been exponentially increasing following Moore’s law, and memory resources have been increasing tremendously [2]. However, the complexity of modern-day systems and processes has also been increasing tremendously following the scientific and technological advancements enabled by high-speed chip manufacturing. Therefore, the value of in most modern-day systems and processes is normally several thousand. The simulation and analysis of such a high-order model remain a computational challenge, which has been a consistent motivation for developing efficient model order reduction (MOR) algorithms [3, 4, 5]. MOR is a process of obtaining a reduced-order model (ROM) that has similar properties and behavior but can be simulated cheaply without exhausting the available computational resources. The ROM can then be used as a surrogate for the original model with tolerable numerical error.

Let us denote the ROM as . Further, let us denote the states and outputs of as and , respectively. Then the state-space equations of can be written as

wherein , , , , and . The state-space realization has the following equivalence with the transfer function of

Ideally, the MOR algorithm should have three main properties:

-

1.

should closely mimic in the frequency and/or time domains.

-

2.

should preserve important system properties like stability, passivity, contractivity, etc.

-

3.

The computational cost required to obtain should be way less than that required to simulate .

No MOR algorithm has all these properties; nevertheless, the significance of a MOR algorithm is generally accessed against these three standards. Mathematically, two possible ways to express the MOR problem are the following:

-

1.

.

-

2.

.

Most of the MOR algorithms use additive error as their performance criterion, i.e., they minimize . The quality of approximation in these algorithms is accessed by computing system norms (like norm and norm) of . If the output responses of and are inherently small, a small output response of can be a misleading notion of accuracy. The relative error is a better approximation criterion as it reveals percentage error. However, is a less used approximation criterion because most of the algorithms that use this criterion are computationally expensive due to the inherent nature of the problem, which will be discussed later. Various performance specifications and specific properties to be preserved lead to various MOR families. Within the family, several algorithms with different discourses are available, each having some distinct features. In the sequel, a brief literature survey of the important MOR algorithms is presented.

Balanced truncation (BT) is one of the most famous MOR techniques due to its useful theoretical properties, like stability preservation and supreme accuracy [6]. BT has led to several important MOR algorithms constituting a rich family of algorithms known as balancing- or gramian-based MOR techniques in the literature. The ROM produced by BT is often suboptimal in the norm, i.e., a suboptimum of . An apriori upper bound on for the ROM generated by BT was proposed in [7]. Most of the algorithms in the BT family offer a similar apriori upper bound, which is a distinct and important feature of balancing-based MOR algorithms. BT is a computationally expensive algorithm as it requires the solution of two large-scale Lyapunov equations, which limits its applicability to medium-scale systems. To overcome this problem, several numerical algorithms are reported that compute low-rank solutions of these Lyapunov equations, which are cheaper to compute [8, 9, 10, 11]. By using these low-rank solutions, the applicability of BT is extended to large-scale systems [12]. Some other numerical methods to avoid ill-conditioning in the BT algorithm are also reported, like [13, 14]. BT has been extended to preserve other system properties like passivity and contractivity in [15, 16, 17] and [18], respectively. Further, its applicability has also been extended to more general classes of linear and nonlinear systems, cf.[19, 20, 21, 22]. A closely related yet different MOR method known as optimal Hankel norm approximation (OHNA) is proposed in [23]. The ROM produced by OHNA is optimal in the Hankel norm, and an apriori upper bound on , which is half of that in the case of BT, also holds [24]. An important extension to BT, which is relevant to the problem considered in this paper, is the balanced stochastic truncation (BST) [25]. It minimizes , and an apriori upper bound on also exists [26]. If the original model is a stable minimum phase system, BST guarantees that the ROM preserves this property.

In a large-scale setting, the computation of the norm is expensive. Moreover, the MOR algorithms that tend to reduce the norm of error are generally also expensive [27, 28, 29]. The norm, on the other hand, can be computed cheaply due to its relation with the system gramians, for which several efficient low-rank algorithms are available. Interestingly, the MOR algorithms that seek to ensure less error in the norm happen to be the most computationally efficient ones in the available literature [30]. The selection of the norm as a performance specification in this paper is motivated by this factor. A locally optimal solution in the norm is also possible, and several efficient algorithms are available that achieve the local optimum upon convergence. The necessary conditions for a local optimum of (known as Wilson’s conditions) are related to interpolation conditions, i.e., interpolates at selected points in the -plane, cf. [31, 32, 33, 34]. The iterative rational Krylov algorithm (IRKA) is the pioneer and most famous interpolation algorithm for single-input single-output (SISO) systems that can achieve the local optimum of upon convergence [32]. IRKA is generalized for multi-input multi-output (MIMO) systems in [33]. A more general algorithm that does not assume that and have simple poles is presented in [34] that can capture the local optimum of upon convergence. This algorithm is based on the Sylvester equations and uses the connection between the Sylvester equations and projection established in [35]. Its numerical properties are further improved in [36], and a more robust algorithm is presented. To speed up convergence in IRKA, some trust region-based techniques are proposed in [37]. Some other -optimal algorithms based on manifold theory are also reported, like [38, 39, 40, 41]. To guarantee stability, some suboptimal solutions to are reported, like [42, 43, 44].

The simulation of a model does not encompass the entire time horizon since no practical system is run over the entire time range. Thus it is reasonable to ensure good accuracy in the actual time range of operation, which is often limited to a small interval. For instance, low-frequency oscillations in interconnected power systems only last for 15 seconds, after which these are successfully damped by the power system stabilizers and damping controllers. The first 15 seconds thus become important in small-signal stability analysis of interconnected power systems [45, 46, 47]. Similarly, in the time-limited optimal control problem, the behavior of the plant in the desired time interval is important [48]. This motivates time-limited MOR, wherein it is focused to ensure supreme accuracy in the desired time interval rather than trying to achieve good accuracy over the entire time range. BT was generalized for the time-limited MOR problem in [49] to obtain a time-limited BT (TLBT) algorithm. TLBT does not retain the stability preservation and apriori error bound properties of BT. However, an norm-based upper bound on is proposed in [50, 51] for TLBT. Some ad hoc approaches to guarantee stability in TLBT are also reported, like [52]; however, these are quite inaccurate and computationally expensive. TLBT, like BT, is computationally expensive in a large-scale setting and requires solutions of two large-scale Lyapunov equations. In [53], these Lyapunov equations are replaced with their low-rank solutions to extend the applicability of TLBT to large-scale systems. TLBT is further generalized to preserve more properties like passivity and to extend its applicability to a more general class of linear and nonlinear systems in the literature, cf. [54, 55, 56, 57, 58]. BST is extended to the time-limited MOR scenario in [59]; however, an upper bound on does not exist for time-limited BST (TLBST).

In [60], a definition for the time-limited norm is presented, and the necessary conditions for a local optimum of (wherein represents the time-limited norm) are derived. Then, IRKA is heuristically extended to time-limited IRKA (TLIRKA) for achieving a local optimum of . However, TLIRKA does not satisfy the necessary conditions for a local optimum. In [61], interpolation-based necessary conditions for a local optimum of are derived, and a nonlinear optimization-based algorithm is proposed to construct the local optimum. This algorithm, however, is computationally expensive, and its applicability is limited to the order of a few hundred. Another nonlinear optimization-based algorithm for this problem is reported in [62], which is very similar to that given in [61]. The equivalence between gramian-based conditions and interpolation conditions is established in [63, 64]. A stability-preserving interpolation algorithm that achieves a subset of the necessary conditions is proposed in [65]. To the best of the authors’ knowledge, there is no algorithm in the literature that seeks to reduce , which has motivated the results obtained in this paper.

In this paper, necessary conditions for the local optimum of are derived. It is shown that it is inherently not possible to achieve a local optimum of in an oblique projection framework. Nevertheless, inspired by these necessary conditions, a computationally efficient oblique projection-based algorithm is proposed that seeks to obtain the local optimum of and offers high fidelity. Unlike TLBST, the proposed algorithm does not require the solutions of large-scale Lyapunov and Ricatti equations. The proposed algorithm is numerically compared to TLBT, TLBST, and TLIRKA by considering benchmark examples. The numerical results confirm that the proposed algorithm offers a small relative error within the desired time interval.

2 Preliminaries

Let the original high-order system be an -th order square linear time-invariant system and be its state-space realization. Further, let us denote the impulse response of as . The time-limited controllability gramian and time-limited observability gramian of the state-space realization within the desired time interval sec solve the following Lyapunov equations

cf. [49].

Definition 2.1.

2.1 Problem Formulation

In an oblique projection framework, the state-space matrices of the ROM are obtained as

wherein , , , the columns of span an -dimensional subspace along with the kernels of , and is an oblique projector [66].

In time-limited MOR, it is aimed to ensure that the difference is small within the desired time interval sec. The -norm is an effective measure to quantify the error in the desired time interval [60, 61]. The problem of relative error-based time-limited MOR via oblique projection is to construct the reduction matrices and such that the ROM obtained with these matrices ensures a small -norm of the relative error , i.e.,

2.2 Existing Oblique Projection Techniques

In this subsection, three important oblique projection algorithms for time-limited MOR are briefly reviewed. These algorithms are the most relevant to the new results obtained in this paper.

2.2.1 Time-limited Balanced Truncation (TLBT)

In TLBT [49], the reduction matrices are computed from the contragradient transformation of and as where . The resultant ROM offers a small additive error in the desired time interval sec.

2.2.2 Time-limited Balanced Stochastic Truncation (TLBST)

The controllability gramian of the pair solves the following Lyapunov equation

Define and as the following

Now, let solves the following Riccati equation

The time-limited observability gramian of the pair solves the following Lyapunov equation

In TLBST [59], the reduction matrices are computed from the contragradient transformation of and as where . The resultant ROM offers a small relative error in the desired time interval sec.

2.2.3 Time-limited Iterative Rational Krylov Algorithm (TLIRKA)

TLIRKA starts with an arbitrary guess of the ROM’s state-space matrices , wherein has simple eigenvalues [60]. Let be the eigenvalue decomposition of . Further, let and satisfy the following Sylvester equations

| (1) | ||||

| (2) |

The reduction matrices are computed as and , wherein and represent orthogonal basis and Hermitian, respectively. The ROM is updated using these reduction matrices, and the process is repeated until the relative change in the eigenvalues of stagnates. Upon convergence, a ROM that offers a small is achieved.

3 Main Results

In this section, first, a state-space realization and an expression for the norm of are obtained. Second, necessary conditions for the local optimum of are derived. Third, an oblique projection algorithm is presented, which seeks to satisfy these conditions. Fourth, the algorithmic and computational aspects of the proposed algorithm are discussed.

3.1 State-space Realization and norm of

Let us assume that is invertible and stable, i.e., is a stable minimum phase transfer function. Then, it can readily be noted that can be expressed as . Further, if the matrix is full rank, the following state-space realization exists for

cf. [67, 68]. Then, a possible state-space realization of is given as

Due to the triangular structure of , the matrix exponential can be expressed as

cf. [69]. Since , cf. [70], the following holds

Thus and solve the following Sylvester equations

| (3) | ||||

| (4) |

Proposition 3.1.

.

Proof.

Let be the time-limited controllability gramian of the pair , which solves the following Lyapunov equation

| (5) |

By expanding the equation (5), it can be noted that , , , and solve the following linear matrix equations

| (6) | ||||

| (7) | ||||

| (8) | ||||

| (9) |

wherein

Let be the time-limited observability gramian of the pair , which solves the following Lyapunov equation

| (10) |

By expanding the equation (10), it can be noted that , , , , , and solve the following linear matrix equations

| (11) | ||||

| (12) | ||||

| (13) | ||||

| (14) | ||||

| (15) | ||||

| (16) |

wherein

Proposition 3.2.

and .

Proof.

From the definition of the norm, can be written as

3.2 Necessary Conditions for the Local optimum of

The derivation of the necessary conditions for a local optimum of requires several new variables to be defined. For clarity, we define new variables before presenting the optimality conditions. Let us define , which solves the following Sylvester equation

| (17) |

where

will be used in deriving the partial derivative of with respect to . Next, let us define , , , , and , which solve the following linear matrix equations

| (18) | ||||

| (19) | ||||

| (20) | ||||

| (21) | ||||

| (22) | ||||

| (23) |

These variables will be used in deriving the partial derivative of with respect to and . Next, let us define , which solves the following Sylvester equation

| (24) |

where

will be used in deriving the partial derivative of with respect to . Next, let us define , , , and , which solve the following linear matrix equations

| (25) | ||||

| (26) | ||||

| (27) | ||||

| (28) |

wherein

These variables will be used in deriving the partial derivative of with respect to .

The following properties of trace will also be used repeatedly in the derivation of the optimality conditions:

-

1.

Addition: .

-

2.

Transpose: .

-

3.

Cyclic permutation: .

-

4.

Partial derivative: where is the first-order derivative of with respect to , and is the differential of ,

cf. [71].

Lastly, the following lemma will also be used repeatedly in the derivation.

Lemma 3.3.

Let and satisfy the following Sylvester equations

Then .

Proof.

See the proof of Lemma 4.1 in [72]. ∎

We are now in a position to state the necessary conditions, which a local optimum ROM must satisfy, in the following theorem.

Theorem 3.4.

Suppose is a stable minimum phase system, and the matrix is full rank. Then, the local optimum of must satisfy the following optimality conditions

| (29) | ||||

| (30) | ||||

| (31) |

wherein

Proof.

The proof is long and tedious and can be found in the appendix given at the end. ∎

3.3 An Oblique Projection Algorithm

In this subsection, we sketch an oblique projection algorithm from the optimality conditions derived in the last subsection. Some numerical and computational issues related to the applicability of the algorithm are also discussed.

3.3.1 Limitation in the Oblique Projection Framework

Let us assume that and are invertible. Then, the optimal choices of and are given as

If the ROM is obtained via oblique projection, this suggests selecting and as and , respectively. Due to the oblique projection condition , we get

To compute the deviation in the satisfaction of optimality conditions with this choice of reduction subspaces, let us express the optimality condition (29) a bit differently. Note that

| and |

Then, the optimality condition (29) can be rewritten as

where

It is now clear that by selecting the reduction matrices as and , deviations in the satisfaction of the optimality conditions (29), (30), and (31) are given by , , and , respectively. In general, , , and . Thus it is inherently not possible to obtain a local optimum of in an oblique projection framework. Nevertheless, the reduction matrices and target the optimality conditions and seek to construct a local optimum.

3.3.2 Algorithm

The appropriate reduction matrices for the problem under consideration have so far been identified from the optimality conditions. We now proceed in sketching an algorithm with this choice of reduction matrices. The matrices , , , and depend on the ROM, which is unknown. Thus an iterative solution seems inevitable. Note that and span the same subspace, as and only change the basis, cf. [35]. The condition on invertibility of and in every iteration can be restrictive and cause numerical ill-conditioning or even failure. Therefore, and seem a better choice for the reduction matrices. Further, note that and can be seen as two coupled systems, i.e.,

| and |

The problem of computing the ROM can be seen as a stationary point problem, i.e.,

with an additional constraint that . Starting with an arbitrary guess of , the process can be continued until convergence to obtain the stationary points. There are still two issues to be addressed before sketching a stationary point solution algorithm. Note that it is so far assumed that the matrix is full rank, which essentially ensures exists. If the matrix is not full rank, we can use a remedy employed in BST, i.e., the so-called -regularization. The rank deficient can be replaced with , wherein is a small positive number enough to ensure the invertibility of [73]. In the final ROM, the original is plugged back in. This is an effective approach and is used in MATLAB’s built-in function for BST. The second assumption we used is that a stable realization of exists because is a stable minimum phase system. Ensuring this condition in every iteration can be a serious limitation in the applicability of the proposed stationary point iteration algorithm. A solution to this problem is discussed in the sequel.

The classical norm of can be expressed in the frequency domain as the following

Let be a minimum phase right spectral factor of , such that . Then can be rewritten as

Let us denote the impulse response of as . Then can be represented in the time domain as

Finally, can be written as

It can readily be noted that can be replaced with without affecting . The advantage, however, is that can be defined even when is not a stable minimum phase system, i.e., by replacing with a stable . Next, we construct a state-space realization of on similar lines with BST, wherein a similar state-space realization is constructed.

The observability gramian of the pair solves the following Lyapunov equation

Let us define and as the following

Let solves the following Riccati equation

| (32) |

Then, a minimum phase realization of is given as

| (33) |

cf. [68]. Further, a stable realization of is given as

| (34) |

We are now in a position to state the algorithm. The proposed algorithm is named “Time-limited Relative-error MOR Algorithm (TLRHMORA)”. The pseudo-code of TLRHMORA is given in Algorithm 1. Step 1 replaces with a full rank matrix if is rank deficient. Steps 3-4 compute a stable realization of . Steps 5-7 compute the reduction matrices. Steps 8-12 ensure the oblique projection condition using the bi-orthogonal Gram-Schmidt method, cf. [36]. Step 13 updates the ROM.

Input: Original system: ; Desired time: sec; Initial guess: ; Allowable iteration: .

Output: ROM .

3.3.3 General Time Interval

So far, we restricted ourselves for simplicity to the case when the desired time interval starts from sec. The problem can be generalized to any time interval sec by making a few changes. Note that for the general time interval case, and solve the following Lyapunov equations

cf. [49]. Accordingly, the reduction matrices in TLRHMORA for the general time interval case can be computed by solving the following equations in each iteration

wherein and can be computed by solving the following Sylvester equations

3.3.4 Stopping Criterion

Most of the MOR algorithms are iterative methods with no guarantee of convergence. The same is the case with TLRHMORA. Further, it has been already discussed that even if TLRHMORA converges, it generally cannot achieve a local optimum due to the inherent construction of the oblique projection framework. Therefore, it is reasonable to stop the algorithm prematurely if it does not converge within an allowable number of iterations. This stopping criterion can help in keeping the computational cost in check, especially in a large-scale setting.

3.3.5 Selection of the Initial Guess

Iterative methods generally improve as the number of iterations progresses. However, a good initial guess can significantly improve the final outcome in terms of accuracy and convergence. It is established in [74, 75, 76] that to ensure a small weighted additive error in the norm, the initial guess should have the dominant poles (with large residues) of the original model and the frequency weight. By following this guideline, the initial guess for TLRHMORA should have dominant poles and/or zeros of the original model. Such an initial guess can be constructed by using computationally efficient algorithms proposed in [77] and [78]. Although there is no guarantee that the final ROM will preserve these poles and zeros, it ensures that is small to start with.

3.3.6 Computational Aspects

The computation of and can be done cheaply, as it only involves small matrices. Similarly, the matrix exponentials , , and can also be computed cheaply, as it involves small matrices. Further, the computation of and is again cheap owing to the small-sized matrices involved. However, the matrix exponential can be a computational challenge if is a large-scale matrix. Note that we need this matrix exponential’s products and for the computation of , , , and . In [53], computationally efficient Krylov subspace-based projection methods are proposed that can accurately approximate and in a large-scale setting as and , respectively. When is a large-scale matrix, these methods can be used to keep TLRHMORA computationally viable.

Once the matrix exponential’s products and are computed, the most computationally demanding step in TLRHOMRA is to compute , , , and by solving their respective Sylvester equations. These Sylvester equations have the following special structure

wherein , , , , and . The big matrices and in this type of Sylvester equation are sparse because the mathematically modeling of most modern-day systems ends up in a large-scale but sparse state-space model. The small matrices and are dense; hence, giving this equation the name “sparse-dense Sylvester equation”. It frequently arises in MOR algorithms, and an efficient solution for this type of Sylvester equation is proposed in [36]. It is highlighted in [36] that the computational time required to solve this equation can be reduced further by using sparse linear matrix solvers, like [79] and [80]. Further, it is noted in [30] that the computational cost of solving a “sparse-dense Sylvester equation” is almost the same as that of constructing a rational Krylov subspace, which is well known to be computationally efficient in a large-scale setting; also see [81]. In short, the solution of a “sparse-dense Sylvester equation” is viable even in a large-scale setting due to the availability of efficient solvers in the literature. The remaining steps in TLRHMORA only involve simple operations that can be executed cheaply. Moreover, by using a maximum number of allowable iterations as a stopping criterion, the number of times these Sylvester equations need to be solved can be limited in case TLRHMORA does not converge quickly.

4 Numerical Results

In this section, TLRHMORA is applied to three models taken from the benchmark collection for testing MOR algorithms, cf. [82], and its performance is compared with TLBT, TLBST, and TLIRKA. The first two models are SISO systems, while the third model is a MIMO system. All the tests are performed using MATLAB R2016a on a laptop with 16GB memory and a 2GHZ Intel i7 processor. All the Lyapunov and Sylvester equations are solved using MATLAB’s “lyap” command. The Riccati equations are solved using MATLAB’s “care” command. The matrix exponentials are computed using MATLAB’s “expm()” command. The maximum number of iterations in TLRHMORA is set to 50. Since all the models considered in this section have zero -matrix, it is replaced with in TLBST and TLRHMORA. For fairness, TLIRKA and TLRHMORA are initialized with the same arbitrary initial guess. The desired time interval is chosen arbitrarily for demonstration purposes.

4.1 Clamped Beam

The clamped beam model is a order SISO system taken from the benchmark collection of [82]. The desired time interval in this example is selected as sec for demonstration purposes. ROMs of orders are generated using TLBT, TLBST, TLIRKA, and TLRHMORA. The relative errors are tabulated in Table 1, and it can be seen that TLRHMORA offers supreme accuracy.

| Order | TLBT | TLBST | TLIRKA | TLRHMORA |

|---|---|---|---|---|

| 5 | 56.6676 | 43.4164 | 45.0637 | 22.7069 |

| 6 | 56.3121 | 75.4351 | 38.5722 | 9.4143 |

| 7 | 15.6814 | 10.4421 | 14.6589 | 5.5747 |

| 8 | 5.2759 | 26.6056 | 4.5883 | 2.0863 |

| 9 | 6.3876 | 17.7543 | 7.1781 | 1.9531 |

| 10 | 0.5775 | 1.2804 | 0.5691 | 0.5256 |

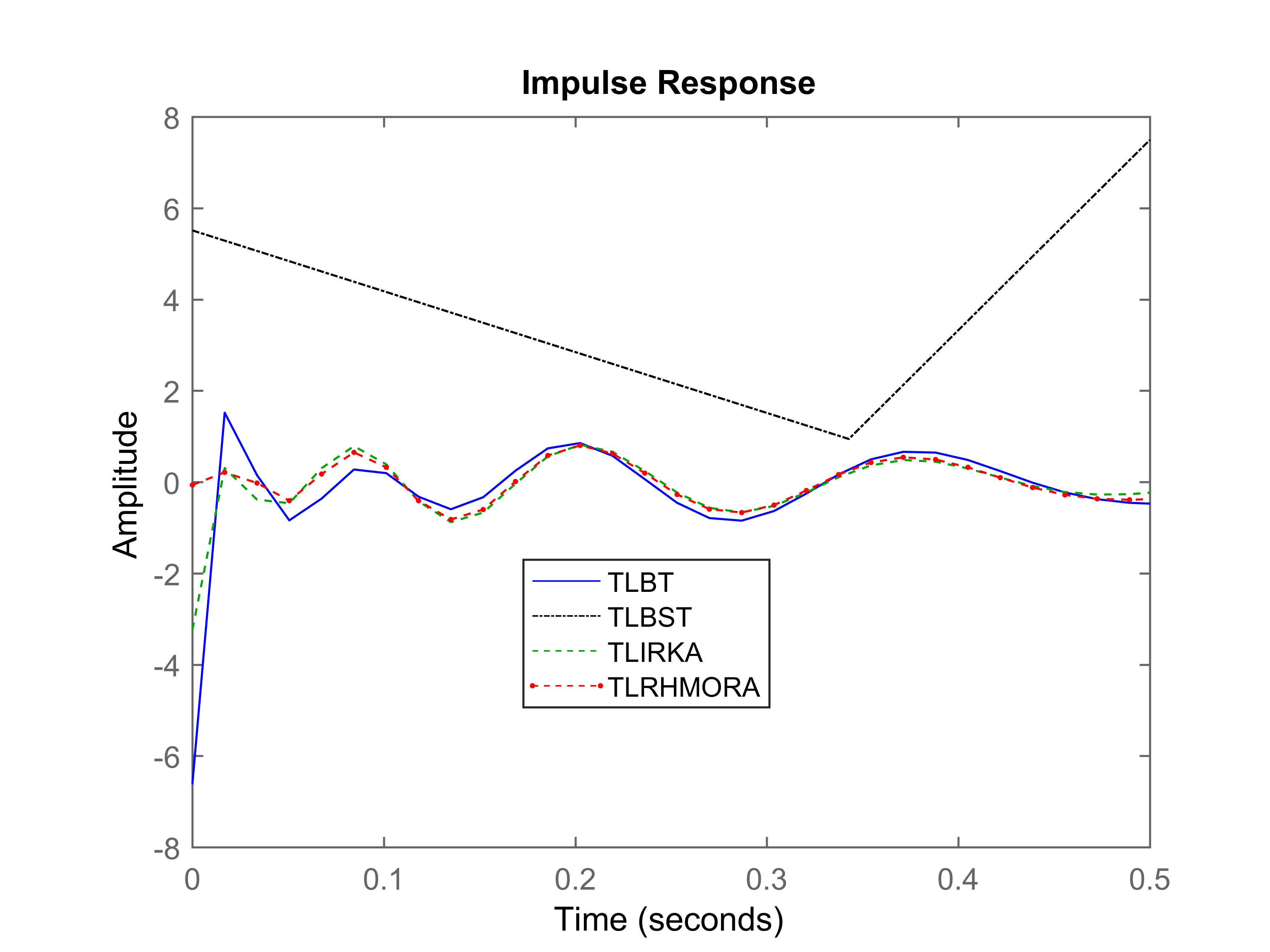

To visually analyze what these numbers mean in terms of accuracy, the impulse responses of for order ROMs within the desired time interval sec are plotted in Figure 1.

It can be seen that TLRHMORA offers the best performance. The highest error in this case is incurred by TLBST, and the impulse response plot corresponds accordingly to the numerical error given in Table 1. TLBT, TLIRKA, and TLRHMORA, in this case, have offered comparable accuracy for the most part of the desired time interval. However, TLBT and TLIRKA have incurred large numerical errors near 0 sec. This is the reason why for TLBT and TIRKA is significantly larger than that for TLRHMORA. Note, TLIRKA and TLRHMORA are both MOR algorithms but with different cost functions, i.e., TLIRKA minimizes , while TLRHMORA minimizes . Although both algorithms are initialized with the same initial guess, it can be seen in Table 1 and Figure 1 that TLRHMORA ensures superior accuracy due to its cost function.

4.2 Artificial Dynamical System

The artificial dynamical model is a order SISO system taken from the benchmark collection of [82]. The desired time interval in this example is selected as sec for demonstration purposes. ROMs of orders are generated using TLBT, TLBST, TLIRKA, and TLRHMORA. The relative errors are tabulated in Table 2, and it can be seen that TLRHMORA offers supreme accuracy.

| Order | TLBT | TLBST | TLIRKA | TLRHMORA |

|---|---|---|---|---|

| 11 | 0.8117 | 0.1098 | 0.5251 | 0.0488 |

| 12 | 0.2270 | 0.2858 | 0.1885 | 0.0511 |

| 13 | 0.2250 | 4.9610 | 4.5675 | 0.0317 |

| 14 | 0.1760 | 0.2748 | 2.6495 | 0.0218 |

| 15 | 0.1392 | 0.1342 | 0.8801 | 0.0188 |

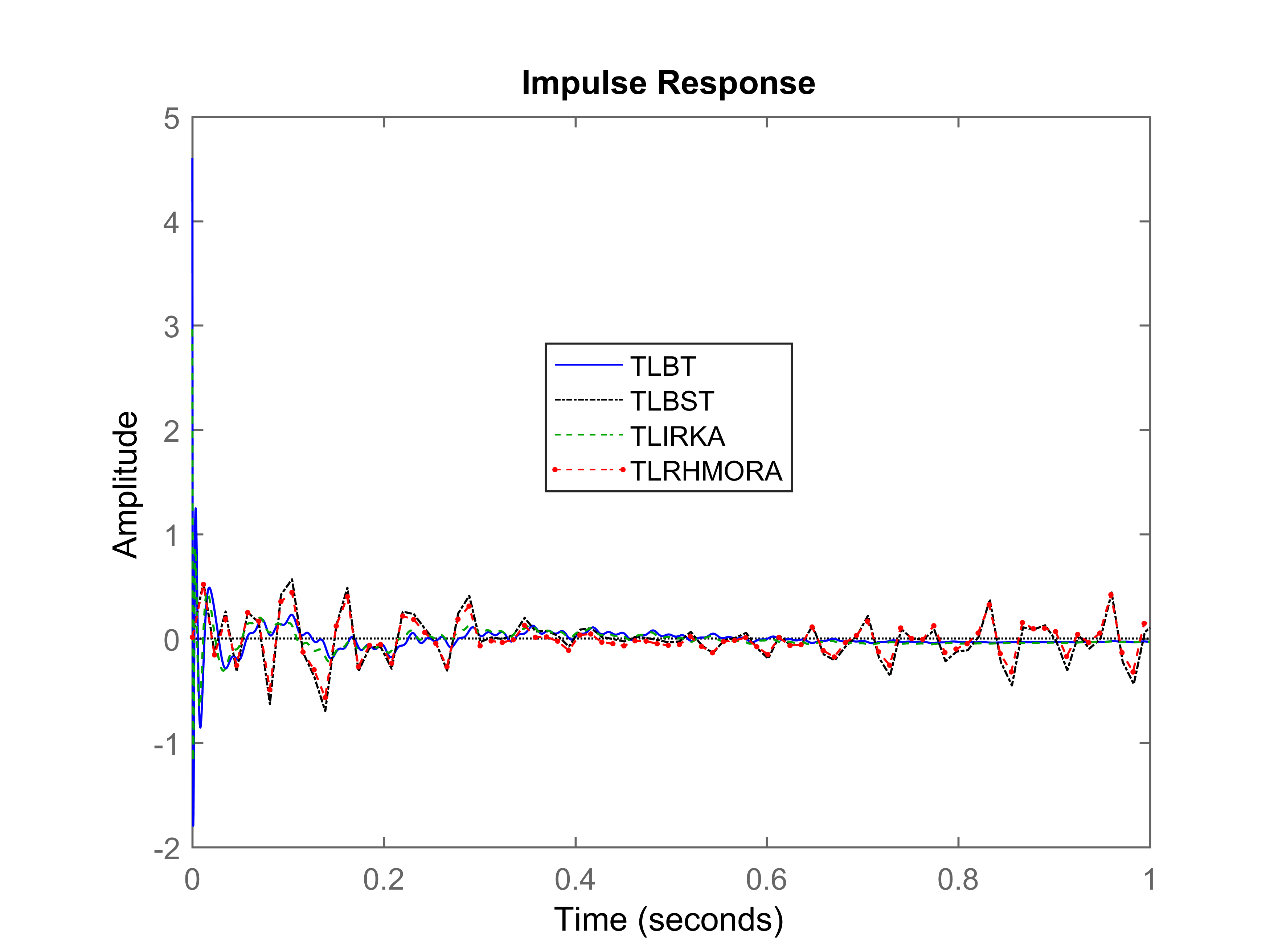

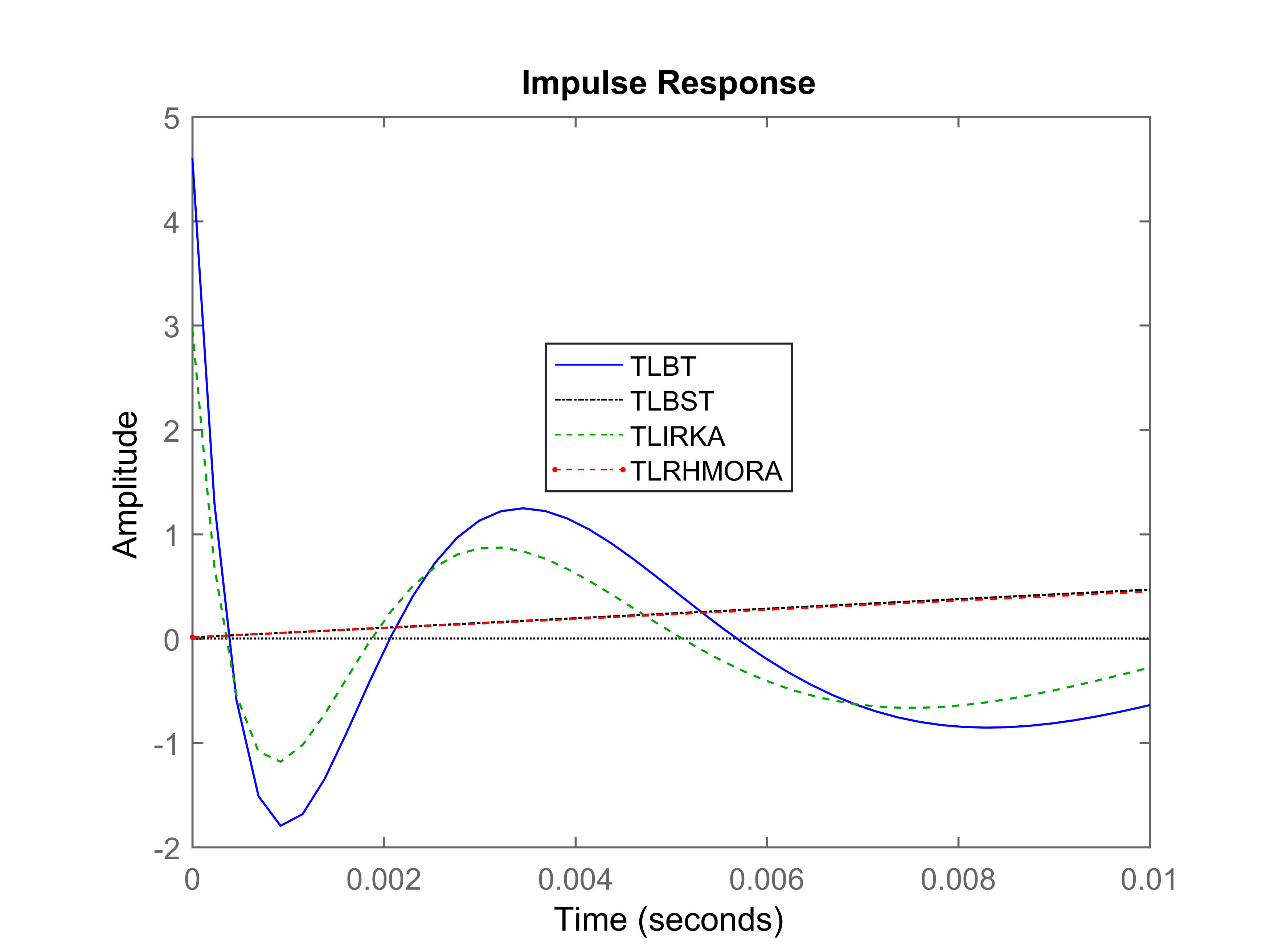

To visually analyze what these numbers mean in terms of accuracy, the impulse responses of for order ROMs within the desired time interval sec are plotted in Figure 2.

It can be seen that TLRHMORA offers the best performance. TLBT, TLBST, TLIRKA, and TLRHMORA, in this case, have offered comparable accuracy for the most part of the desired time interval. However, TLBT, TLBST, and TLIRKA have incurred large numerical errors near 0 sec, which can be seen in Figure 3 (a magnified version of Figure 2).

This is the reason why for TLBT, TLBST, and TIRKA is significantly larger than that for TLRHMORA.

4.3 International Space Station

The international space station model is a order MIMO system taken from the benchmark collection of [82]. It has three inputs and three outputs. The desired time interval in this example is selected as sec for demonstration purposes. ROMs of orders are generated using TLBT, TLBST, TLIRKA, and TLRHMORA. The relative errors are tabulated in Table 3, and it can be seen that TLRHMORA offers supreme accuracy.

| Order | TLBT | TLBST | TLIRKA | TLRHMORA |

|---|---|---|---|---|

| 5 | 2.0787 | 2.1599 | 2.4186 | 1.9615 |

| 6 | 2.0865 | 1.9597 | 1.9485 | 1.9367 |

| 7 | 2.0040 | 1.9317 | 2.5756 | 1.5787 |

| 8 | 1.6106 | 1.8876 | 1.8760 | 1.5636 |

| 9 | 1.6166 | 1.8859 | 1.9060 | 1.0615 |

There are nine impulse response plots of for this example. Therefore, we refrain from showing these for the economy of space.

5 Conclusion

The problem of time-limited MOR based on relative error expression is considered. The necessary conditions for a local optimum are derived for the case when the ROM is a stable minimum phase system. Based on these conditions, the reduction matrices for an oblique projection algorithm are identified. Then, a generalized iterative algorithm, which does not require the ROM to be a stable minimum phase system in every iteration, is proposed. Unlike TLBST, the proposed algorithm does not require the solutions of large-scale Lyapunov and Riccati equations. Instead, solutions of small-scale Lyapunov and Riccati equations are required that can be computed cheaply. The numerical results confirm that the proposed algorithm is accurate and offers high fidelity.

Appendix

Let us define the cost function as and denote the first-order derivatives of , , , , , , , and with respect to as , , , , , , , and , respectively. Further, let us denote the differential of as . By differentiating with respect to , we get

By differentiating the equations (11), (12), and (14) with respect to , we get

| (35) | ||||

| (36) | ||||

| (37) |

wherein

By applying Lemma 3.3 on the equations (35) and (18), (36) and (19), and (37) and (21), we get

Thus

By differentiating the equations (13), (15), (16), and (3) with respect to , we get

| (38) | ||||

| (39) | ||||

| (40) | ||||

| (41) |

wherein

By applying Lemma 3.3 on the equations (38) and (20), and (39) and (22), we get

Thus

By applying Lemma 3.3 on the equations (40) and (23), we get

Thus

By applying Lemma 3.3 on the equations (41) and (17), we get

Thus

Since , we get

Hence,

and

is a necessary condition for the local optimum of .

Let us denote the first-order derivatives of , , , , , , , and with respect to as , , , , , , , and , respectively. Further, let us denote the differential of as . By differentiating with respect to , we get

By differentiating the equations (11), (12), and (14) with respect to , we get

| (42) | ||||

| (43) | ||||

| (44) |

wherein

By applying Lemma 3.3 on the equations (42) and (18), (43) and (19), and (44) and (21), we get

Thus

By differentiating the equations (13), (15), and (16) with respect to , we get

| (45) | ||||

| (46) | ||||

| (47) |

wherein

By applying Lemma 3.3 on the equations (45) and (20), and (46) and (22), we get

Thus

By applying Lemma 3.3 on the equations (47) and (23), we get

Thus

By differentiating the equation (3) with respect to , we get

| (48) |

where

By applying Lemma 3.3 on the equations (48) and (24), we get

Thus

Since , we get

Hence,

and

is a necessary condition for the local optimum of .

Let us denote the first-order derivatives of , , , , and with respect to as , , , , and , respectively. Further, let us denote the differential of as . By taking differentiation of with respect to , we get

By taking differentiation of the equations (6), (8), (9), and (3) with respect to , we get

| (49) | ||||

| (50) | ||||

| (51) | ||||

| (52) |

wherein

By applying Lemma 3.3 on the equations (49) and (25), (50) and (26), and (51) and (27), we get

Thus

By applying Lemma 3.3 on the equations (52) and (28), we get

Thus

Hence,

and

is a necessary condition for the local optimum of . This completes the proof.

Acknowledgment

This work is supported by the National Natural Science Foundation of China under Grants No. 61873336 and 61803046, the International Corporation Project of Shanghai Science and Technology Commission under Grant 21190780300, in part by The National Key Research and Development Program No. 2020YFB1708200 , and in part by the High-end foreign expert program No. G2021013008L granted by the State Administration of Foreign Experts Affairs (SAFEA).

Competing Interest Statement

The authors declare no conflict of interest.

References

- [1] W. H. Schilders, H. A. Van der Vorst, J. Rommes, Model order reduction: theory, research aspects and applications, Vol. 13, Springer, 2008.

- [2] P. Benner, M. Hinze, E. J. W. Ter Maten, Model reduction for circuit simulation, Vol. 74, Springer, 2011.

- [3] P. Benner, V. Mehrmann, D. C. Sorensen, Dimension reduction of large-scale systems, Vol. 45, Springer, 2005.

- [4] G. Obinata, B. D. Anderson, Model reduction for control system design, Springer Science & Business Media, 2012.

- [5] P. Benner, W. Schilders, S. Grivet-Talocia, A. Quarteroni, G. Rozza, L. Miguel Silveira, Model order reduction: Volume 3 applications, De Gruyter, 2020.

- [6] B. Moore, Principal component analysis in linear systems: Controllability, observability, and model reduction, IEEE transactions on automatic control 26 (1) (1981) 17–32.

- [7] D. F. Enns, Model reduction with balanced realizations: An error bound and a frequency weighted generalization, in: The 23rd IEEE conference on decision and control, IEEE, 1984, pp. 127–132.

- [8] S. Gugercin, J.-R. Li, Smith-type methods for balanced truncation of large sparse systems, in: Dimension reduction of large-scale systems, Springer, 2005, pp. 49–82.

- [9] J.-R. Li, J. White, Low rank solution of Lyapunov equations, SIAM Journal on Matrix Analysis and Applications 24 (1) (2002) 260–280.

- [10] T. Penzl, A cyclic low-rank Smith method for large sparse Lyapunov equations, SIAM Journal on Scientific Computing 21 (4) (1999) 1401–1418.

- [11] P. Kürschner, M. A. Freitag, Inexact methods for the low rank solution to large scale Lyapunov equations, BIT Numerical Mathematics 60 (4) (2020) 1221–1259.

- [12] V. Mehrmann, T. Stykel, Balanced truncation model reduction for large-scale systems in descriptor form, in: Dimension Reduction of Large-Scale Systems, Springer, 2005, pp. 83–115.

- [13] M. S. Tombs, I. Postlethwaite, Truncated balanced realization of a stable non-minimal state-space system, International Journal of Control 46 (4) (1987) 1319–1330.

- [14] M. G. Safonov, R. Chiang, A schur method for balanced-truncation model reduction, IEEE Transactions on Automatic Control 34 (7) (1989) 729–733.

- [15] J. R. Phillips, L. Daniel, L. M. Silveira, Guaranteed passive balancing transformations for model order reduction, IEEE Transactions on Computer-Aided Design of Integrated Circuits and Systems 22 (8) (2003) 1027–1041.

- [16] B. Yan, S. X.-D. Tan, B. McGaughy, Second-order balanced truncation for passive-order reduction of RLCK circuits, IEEE Transactions on Circuits and Systems II: Express Briefs 55 (9) (2008) 942–946.

- [17] J. R. Phillips, L. M. Silveira, Poor man’s TBR: A simple model reduction scheme, IEEE transactions on computer-aided design of integrated circuits and systems 24 (1) (2004) 43–55.

- [18] T. Reis, T. Stykel, Positive real and bounded real balancing for model reduction of descriptor systems, International Journal of Control 83 (1) (2010) 74–88.

- [19] T. Reis, T. Stykel, Balanced truncation model reduction of second-order systems, Mathematical and Computer Modelling of Dynamical Systems 14 (5) (2008) 391–406.

- [20] X. Wang, Q. Wang, Z. Zhang, Q. Chen, N. Wong, Balanced truncation for time-delay systems via approximate gramians, in: 16th Asia and South Pacific Design Automation Conference (ASP-DAC 2011), IEEE, 2011, pp. 55–60.

- [21] L. Zhang, J. Lam, B. Huang, G.-H. Yang, On gramians and balanced truncation of discrete-time bilinear systems, International Journal of Control 76 (4) (2003) 414–427.

- [22] S. Lall, J. E. Marsden, S. Glavaški, A subspace approach to balanced truncation for model reduction of nonlinear control systems, International Journal of Robust and Nonlinear Control: IFAC-Affiliated Journal 12 (6) (2002) 519–535.

- [23] K. Glover, All optimal Hankel-norm approximations of linear multivariable systems and their -error bounds, International journal of control 39 (6) (1984) 1115–1193.

- [24] K. Glover, A tutorial on Hankel-norm approximation, From data to model (1989) 26–48.

- [25] M. Green, Balanced stochastic realizations, Linear Algebra and its Applications 98 (1988) 211–247.

- [26] M. Green, A relative error bound for balanced stochastic truncation, IEEE Transactions on Automatic Control 33 (10) (1988) 961–965.

- [27] G. M. Flagg, S. Gugercin, C. A. Beattie, An interpolation-based approach to model reduction of dynamical systems, in: 49th IEEE Conference on Decision and Control (CDC), IEEE, 2010, pp. 6791–6796.

- [28] G. Flagg, C. A. Beattie, S. Gugercin, Interpolatory model reduction, Systems & Control Letters 62 (7) (2013) 567–574.

- [29] A. Castagnotto, C. Beattie, S. Gugercin, Interpolatory methods for model reduction of multi-input/multi-output systems, in: Model Reduction of Parametrized Systems, Springer, 2017, pp. 349–365.

- [30] T. Wolf, pseudo-optimal model order reduction, Ph.D. thesis, Technische Universität München (2014).

- [31] D. Wilson, Optimum solution of model-reduction problem, in: Proceedings of the Institution of Electrical Engineers, Vol. 117, IET, 1970, pp. 1161–1165.

- [32] S. Gugercin, A. C. Antoulas, C. Beattie, model reduction for large-scale linear dynamical systems, SIAM journal on matrix analysis and applications 30 (2) (2008) 609–638.

- [33] P. Van Dooren, K. A. Gallivan, P.-A. Absil, -optimal model reduction of MIMO systems, Applied Mathematics Letters 21 (12) (2008) 1267–1273.

- [34] Y. Xu, T. Zeng, Optimal model reduction for large scale MIMO systems via tangential interpolation., International Journal of Numerical Analysis & Modeling 8 (1) (2011).

- [35] K. Gallivan, A. Vandendorpe, P. Van Dooren, Sylvester equations and projection-based model reduction, Journal of Computational and Applied Mathematics 162 (1) (2004) 213–229.

- [36] P. Benner, M. Køhler, J. Saak, Sparse-dense Sylvester equations in -model order reduction., MPI. Magdeburg preprints MPIMD/11-11 (2011).

- [37] C. A. Beattie, S. Gugercin, A trust region method for optimal model reduction, in: Proceedings of the 48h IEEE Conference on Decision and Control (CDC) held jointly with 2009 28th Chinese Control Conference, IEEE, 2009, pp. 5370–5375.

- [38] Y. Jiang, K. Xu, optimal reduced models of general MIMO LTI systems via the cross gramian on the Stiefel manifold, Journal of the Franklin Institute 354 (8) (2017) 3210–3224.

- [39] K. Sato, H. Sato, Structure-preserving optimal model reduction based on the Riemannian trust-region method, IEEE Transactions on Automatic Control 63 (2) (2017) 505–512.

- [40] H. Sato, K. Sato, Riemannian trust-region methods for optimal model reduction, in: 2015 54th IEEE Conference on Decision and Control (CDC), IEEE, 2015, pp. 4648–4655.

- [41] P. Yang, Y.-L. Jiang, K.-L. Xu, A trust-region method for model reduction of bilinear systems on the Stiefel manifold, Journal of the Franklin Institute 356 (4) (2019) 2258–2273.

- [42] H. K. Panzer, S. Jaensch, T. Wolf, B. Lohmann, A greedy rational Krylov method for -pseudooptimal model order reduction with preservation of stability, in: 2013 American Control Conference, IEEE, 2013, pp. 5512–5517.

- [43] T. Wolf, H. K. Panzer, B. Lohmann, pseudo-optimality in model order reduction by Krylov subspace methods, in: 2013 European control conference (ECC), IEEE, 2013, pp. 3427–3432.

- [44] S. Ibrir, A projection-based algorithm for model-order reduction with performance: A convex-optimization setting, Automatica 93 (2018) 510–519.

- [45] P. Kundur, N. J. Balu, M. G. Lauby, Power system stability and control, Vol. 7, McGraw-hill New York, 1994.

- [46] B. Pal, B. Chaudhuri, Robust control in power systems, Springer Science & Business Media, 2006.

- [47] G. Rogers, Power system oscillations, Springer Science & Business Media, 2012.

- [48] M. Grimble, Solution of finite-time optimal control problems with mixed end constraints in the s-domain, IEEE Transactions on Automatic Control 24 (1) (1979) 100–108.

- [49] W. Gawronski, J.-N. Juang, Model reduction in limited time and frequency intervals, International Journal of Systems Science 21 (2) (1990) 349–376.

- [50] M. Redmann, P. Kürschner, An output error bound for time-limited balanced truncation, Systems & Control Letters 121 (2018) 1–6.

- [51] M. Redmann, An -error bound for time-limited balanced truncation, Systems & Control Letters 136 (2020) 104620.

- [52] S. Gugercin, A. C. Antoulas, A survey of model reduction by balanced truncation and some new results, International Journal of Control 77 (8) (2004) 748–766.

- [53] P. Kürschner, Balanced truncation model order reduction in limited time intervals for large systems, Advances in Computational Mathematics 44 (6) (2018) 1821–1844.

- [54] U. Zulfiqar, M. Imran, A. Ghafoor, M. Liaqat, Time/frequency-limited positive-real truncated balanced realizations, IMA Journal of Mathematical Control and Information 37 (1) (2020) 64–81.

- [55] P. Benner, S. W. Werner, Frequency-and time-limited balanced truncation for large-scale second-order systems, Linear Algebra and its Applications 623 (2021) 68–103.

- [56] D. Kumar, A. Jazlan, V. Sreeram, Generalized time limited gramian based model reduction, in: 2017 Australian and New Zealand Control Conference (ANZCC), IEEE, 2017, pp. 47–49.

- [57] M. Imran, M. Imran, Development of time-limited model reduction technique for one-dimensional and two-dimensional systems with error bound via balanced structure, Journal of the Franklin Institute (2022).

- [58] H. R. Shaker, M. Tahavori, Time-interval model reduction of bilinear systems, International Journal of Control 87 (8) (2014) 1487–1495.

- [59] M. Tahavori, H. R. Shaker, Model reduction via time-interval balanced stochastic truncation for linear time invariant systems, International Journal of Systems Science 44 (3) (2013) 493–501.

- [60] P. Goyal, M. Redmann, Time-limited -optimal model order reduction, Applied Mathematics and Computation 355 (2019) 184–197.

- [61] K. Sinani, S. Gugercin, optimality conditions for a finite-time horizon, Automatica 110 (2019) 108604.

- [62] K. Das, S. Krishnaswamy, S. Majhi, optimal model order reduction over a finite time interval, IEEE Control Systems Letters 6 (2022) 2467–2472.

- [63] U. Zulfiqar, V. Sreeram, X. Du, On frequency-and time-limited -optimal model order reduction, arXiv preprint arXiv:2102.03603.

- [64] U. Zulfiqar, near-optimal projection methods for finite-horizon model order reduction, Ph.D. thesis, The University of Western Australia, Perth, Australia (2021).

- [65] U. Zulfiqar, V. Sreeram, X. Du, Time-limited pseudo-optimal -model order reduction, IET Control Theory & Applications 14 (14) (2020) 1995–2007.

- [66] A. C. Antoulas, Approximation of large-scale dynamical systems, SIAM, 2005.

- [67] K. Zhou, Frequency-weighted norm and optimal Hankel norm model reduction, IEEE Transactions on Automatic Control 40 (10) (1995) 1687–1699.

- [68] K. Zhou, J. Doyle, K. Glover, Robust and optimal control, Control Engineering Practice 4 (8) (1996) 1189–1190.

- [69] D. A. Bini, S. Dendievel, G. Latouche, B. Meini, Computing the exponential of large block-triangular block-toeplitz matrices encountered in fluid queues, Linear Algebra and its Applications 502 (2016) 387–419.

- [70] N. N. Smalls, The exponential function of matrices, Master thesis, Georgia State University (2007).

- [71] K. B. Petersen, M. S. Pedersen, et al., The matrix cookbook, Technical University of Denmark 7 (15) (2008) 510.

- [72] D. Petersson, A nonlinear optimization approach to -optimal modeling and control, Ph.D. thesis, Linköping University Electronic Press (2013).

- [73] P. Benner, E. S. Quintana-Ortí, G. Quintana-Ortí, Efficient numerical algorithms for balanced stochastic truncation, International Journal of Applied Mathematics and Computer Science 11 (5) (2001) 1123-1150.

- [74] B. Anić, C. Beattie, S. Gugercin, A. C. Antoulas, Interpolatory weighted- model reduction, Automatica 49 (5) (2013) 1275–1280.

- [75] T. Breiten, C. Beattie, S. Gugercin, Near-optimal frequency-weighted interpolatory model reduction, Systems & Control Letters 78 (2015) 8–18.

- [76] U. Zulfiqar, V. Sreeram, M. I. Ahmad, X. Du, Frequency-weighted -optimal model order reduction via oblique projection, International Journal of Systems Science 53 (1) (2022) 182–198.

- [77] J. Rommes, N. Martins, Efficient computation of multivariable transfer function dominant poles using subspace acceleration, IEEE transactions on power systems 21 (4) (2006) 1471–1483.

- [78] N. Martins, P. C. Pellanda, J. Rommes, Computation of transfer function dominant zeros with applications to oscillation damping control of large power systems, IEEE transactions on power systems 22 (4) (2007) 1657–1664.

- [79] T. A. Davis, Algorithm 832: UMFPACK v4. 3—an unsymmetric-pattern multifrontal method, ACM Transactions on Mathematical Software (TOMS) 30 (2) (2004) 196–199.

- [80] J. W. Demmel, S. C. Eisenstat, J. R. Gilbert, X. S. Li, J. W. Liu, A supernodal approach to sparse partial pivoting, SIAM Journal on Matrix Analysis and Applications 20 (3) (1999) 720–755.

- [81] H. K. Panzer, Model order reduction by Krylov subspace methods with global error bounds and automatic choice of parameters, Ph.D. thesis, Technische Universität München (2014).

- [82] Y. Chahlaoui, P. V. Dooren, Benchmark examples for model reduction of linear time-invariant dynamical systems, in: Dimension reduction of large-scale systems, Springer, 2005, pp. 379–392.