Relation Matters: Foreground-aware Graph-based Relational Reasoning for Domain Adaptive Object Detection

Abstract

Domain Adaptive Object Detection (DAOD) focuses on improving the generalization ability of object detectors via knowledge transfer. Recent advances in DAOD strive to change the emphasis of the adaptation process from global to local in virtue of fine-grained feature alignment methods. However, both the global and local alignment approaches fail to capture the topological relations among different foreground objects as the explicit dependencies and interactions between and within domains are neglected. In this case, only seeking one-vs-one alignment does not necessarily ensure the precise knowledge transfer. Moreover, conventional alignment-based approaches may be vulnerable to catastrophic overfitting regarding those less transferable regions (e.g. backgrounds) due to the accumulation of inaccurate localization results in the target domain. To remedy these issues, we first formulate DAOD as an open-set domain adaptation problem, in which the foregrounds and backgrounds are seen as the “known classes” and “unknown class” respectively. Accordingly, we propose a new and general framework for DAOD, named Foreground-aware Graph-based Relational Reasoning (FGRR), which incorporates graph structures into the detection pipeline to explicitly model the intra- and inter-domain foreground object relations on both pixel and semantic spaces, thereby endowing the DAOD model with the capability of relational reasoning beyond the popular alignment-based paradigm. FGRR first identifies the foreground pixels and regions by searching reliable correspondence and cross-domain similarity regularization respectively. The inter-domain visual and semantic correlations are hierarchically modeled via bipartite graph structures, and the intra-domain relations are encoded via graph attention mechanisms. Through message-passing, each node aggregates semantic and contextual information from the same and opposite domain to substantially enhance its expressive power. Empirical results demonstrate that the proposed FGRR exceeds the state-of-the-art performance on four DAOD benchmarks.

Index Terms:

Domain adaptive object detection, foreground-aware, relational reasoning, graph structure, intra- and inter-domain.1 Introduction

As one of the most fundamental problems in computer vision, object detection has gained a great surge of development in the past decade, owing to the renaissance in deep learning and the explosive increase of labeled training data. Nevertheless, the impressive performance gains rely on a strong pre-assumption that the training and test data are drawn from identical distribution, which is challenged to be satisfied in many real-world applications, such as autonomous driving and medical image analysis. Moreover, manually collecting large-scale instance-level annotated data in various domains is labor-intensive and impractical. An intuitive solution is to directly apply the off-the-shelf object detection models trained on the source domain to a new target domain. However, domain shift [1, 2] hinders the deployment of models and emerges as an inevitable challenge. Such a dilemma has inspired the research on Unsupervised Domain Adaptation (UDA) [3], which aims at mitigating the domain disparity via transferring knowledge from a label-rich domain to a new fully unlabeled domain.

The mainstream paradigm for UDA is to learn domain-invariant features by minimizing the discrepancy of source and target feature distributions. Current UDA approaches can be roughly divided into two categories: 1) statistics matching, which targets on aligning feature representations across domains in virtue of statistical distribution divergence [4, 5, 6, 7, 8, 9]; 2) adversarial learning, which adversarially learns domain-invariant representations based on the two-player game between domain discriminator and feature extractor [10, 11, 12, 13, 14, 15]. By doing so, UDA has shown remarkable progress in image classification and semantic segmentation problems.

Compared to the conventional problems of UDA, object detection is a more challenging problem as it requires one to simultaneously achieve adaptation under the classification and regression settings. In this paper, our objective is to investigate the UDA techniques for object detection, namely, Domain Adaptive Object Detection (DAOD). In light of the local nature of detection tasks, state-of-the-art DAOD approaches [16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26] are dedicated to change the focus of adaptation process from global to local by means of fine-grained feature alignment methods regarding the foreground objects. Typically, they incorporate the adversarial feature training within de facto detection frameworks [27, 28] to form the baseline model. Then, elaborate local alignment modules, such as mining foreground regions via attention-like mechanism [21, 23, 25], are imposed on the baseline model to extract and align the discriminative features at different feature levels, i.e., local-level, global-level, and instance-level.

However, both the domain and region alignment approaches in the literature, such as weak global alignment [18], multi-adversarial alignment [20], feature calibration [21], and coarse-to-fine alignment [23], fail to capture the topological relations among foreground objects during the adaptation process. In this regard, simply seeking explicit one-vs-one matching no matter in image-level or instance-level cannot ensure the precise knowledge transfer since they do not model the explicit dependencies and interactions between and within domains. Moreover, such strict alignment may be prone to result in catastrophic overfitting regarding those less transferable regions (e.g. backgrounds) due to the accumulation of inaccurate localization results in the target domain. Consequently, existing DAOD methods may be sensitive to the size of domain discrepancy, i.e., when the domain discrepancy is large, the unstable alignment process will significantly affect the adaptation performance. How to explore the relationships among different foreground objects is vital for a holistic understanding of the scene in each domain and the precise knowledge transfer between domains, but remains out of reach for current approaches.

Remedying these issues, we first propose to formulate DAOD as an Open Set Domain Adaptation (OSDA) problem [29]. Unlike the closed set domain adaptation, wherein the source and target domains contain the same classes, OSDA should explicitly identify and isolate the unknown class before reducing the distributional disparity of known classes across domains. In the task of DAOD, we argue that both the so-called known and unknown classes refer to the category of a pixel or region rather than the whole image. As shown in Fig. 1, we observe that the backgrounds are distinct (non-transferable) across domains (e.g., “billboard” in the source domain and “tree” in the target domain) and can be regarded as unknown class, while the foregrounds possess more common features across domains (e.g., “dog” in both domains has four legs and one head) and can be regarded as known class. Compared to classification based OSDA, the decision boundaries among features of target known and unknown classes in the detection pipeline are ambiguous and these features may be highly entangled, impeding the DAOD model to learn object-wise invariant representations across domains. This motivates us to design DAOD algorithms in the following two steps: (1) Make a distinction between foreground and background feature representations in an unsupervised manner. (2) Perform relational reasoning to model the foreground object interactions and align the corresponding categories in both domains.

Grounded on these findings, we propose a Foreground-aware Graph-based Relational Reasoning (FGRR) framework for DAOD to explicitly model the intra- and inter-domain foreground object interactions on both visual (pixel-level) and semantic spaces, thereby endowing the detection model with the capability of relational reasoning beyond the mainstream one-vs-one feature alignment paradigm. To be specific, FGRR consists of two key components, pixel-level and semantic-level relational reasoning (cf. Figure 1). For pixel-level relational reasoning, we search pixel-wise correspondence to find out foreground pixels via mutual nearest neighbor constraint. The searched pixels is the graph nodes, and then we model the inter-domain relations via bipartite graph learning and the intra-domain relations via graph attention mechanism. Through message-passing and feature aggregation, each foreground node can be aware of the contextual information within domain and the topological interactions across domains. For semantic-level relational reasoning, we discriminate the target instance-level features to define graph nodes by choosing proposals with high-confidence prediction. When reasoning in the high-level semantic space, we devise a cross-domain similarity regularization strategy, which penalizes the ones of nodes that are more likely to be backgrounds and enhance the connections between foreground nodes, to further identify and isolate the backgrounds so as to construct semantic-level bipartite graph. In addition, the intra-domain graph edges are characterized from two aspects: geometric constraints and semantic similarities. By doing so, the semantic correlations are propagated through nodes methodically, helping the DAOD model understand the high-level semantic concepts and reason their relationships.

Noting that the proposed FGRR embraces the complementary strengths of the inter- and intra-domain reasoning modules. On the one hand, we hierarchically learn the relational structures between domains via the proposed inter-domain graph reasoning modules, which helps the detection model capture more informative commonalities for constructing intra-domain relation graphs. On the other hand, we use intra-domain relation graphs to abstract away from dense feature maps and model the intra-domain foreground pixel/object interactions, which provide high-quality relational structures for inter-domain bipartite graph learning. In addition, upon observing that the cross-domain object-level variations based on the image-level features are prone to result in negative transfer but difficult to capture, we develop an object-aware reweighting mechanism to regularize image-level adaptation by explicitly accounting for the object-level variations from two complementary aspects.

The contributions of our work are summarized as follows:

-

•

We formulate DAOD as an OSDA problem, which has not been explored by the literature and provides a new and general perspective to mitigate the domain shift for adapting object detectors.

-

•

We make the first attempt to reason the foreground object relationships and interactions via graph-based structures rather than following the mainstream one-vs-one feature alignment paradigm.

-

•

We propose a novel DAOD framework, named FGRR, which seamlessly incorporates the inter- and intra-domain relational reasoning modules into the detection pipeline. These modules are imposed to the pixel-wise and instance-wise features to jointly learn visual and semantic relationships.

-

•

We conduct extensive experiments based on modern object detectors. Experimental results reveal that our FGRR models have achieved state-of-the-art results on four DAOD benchmarks: real-to-artistic, normal-to-foggy, and synthetic-to-real datasets, as well as cross-site mammogram mass detection (from public to in-house datasets).

A preliminary version of this paper was presented in [30]. We have made substantial changes to that conference paper. (1) Besides modeling the inter-domain relations, our FGRR further explores the complementary effect of intra-domain relations to help the source and target graph nodes attend and reason over their intra-domain neighborhoods’ features instead of treating each pixel or region separately in each domain, thereby capturing the internal object structures and contextual information. (2) An image-level object-aware reweighting mechanism is introduced to deal with the object-level variations based on image-level features, which are difficult to be estimated since the object information here is implicit and ambiguous. This mechanism is simple yet effective by only utilizing the searched foreground pixels to compute the weight of input image pairs during image-level adaptation. (3) We improve the mechanisms of defining graph nodes to find out more reliable foreground pixels and regions. (4) We additionally conduct experiments on two widely-used DAOD benchmarks (normal-to-foggy and synthetic-to-real) to comprehensively evaluate the effectiveness of the proposed FGRR. In addition, we also evaluate our FGRR on a practical application, i.e., cross-site mammogram mass detection. More ablation studies and analyses are provided to demonstrate the contributions of newly proposed components. (5) We provide additional insights on relational reasoning for DAOD, more complete introduction and analysis, as well as more elaborated literature review and technical details regarding the proposed method.

2 Related Works

2.1 Unsupervised Domain Adaptation (UDA)

In the literature, UDA has achieved remarkable success for bridging the domain disparity between two different distributions in image classification, semantic segmentation, and object detection problems. Specifically, a typical solution for UDA is to align the source and target feature representations in the shared latent space by incorporating well-defined divergence measures into deep architectures. For example, DDC [31] and DAN [6] resort to Maximum Mean Discrepancy (MMD) [32] for measuring the domain discrepancy in the task-specific layers of deep networks. Correlation Alignment (CORAL) [33] aligns the second-order statistics of source and target distributions. Central Moment Discrepancy (CMD) [8] matches higher order central moments of probability distributions via the means of order-wise moment differences. Margin Disparity Discrepancy (MDD) [34], which characterizes the disagreements of multi-class scoring hypotheses, extends previous UDA theories to the case of multiclass classification.

Another line of research was inspired by the essence of Generative Adversarial Nets (GAN) [35]. DANN [36] presents a domain-adversarial training strategy to learn domain-invariant representations by adversarially confusing a domain discriminator with the help of a Gradient Reversal Layer (GRL), and this work motivates a number of follow-up methods due to its strong efficacy and scalability [13, 37, 14, 38, 39]. For example, ADDA [11] improves DANN by separating the source and target feature extractors to learn target representations in an asymmetric way. Saito et al. [40] utilizes two task-specific classifiers as discriminators, and a feature extractor learns to fool the classifiers. CDAN [12] conditions the adversarial training on categorical information conveyed in the label classifier. In addition, the works of [41, 42, 43, 44, 45, 46] directly utilize the GAN-based image-to-image translation techniques, e.g., CycleGAN [47], to generate source-like target images or/and target-like source images to achieve pixel-level adaptation. Other representative approaches to UDA include self-ensembling [48], similarity learning [49], pseudo-labeling [50, 51, 52], structurally regularized deep clustering [53], to name a few.

Particularly, several UDA works attempt to explore intrinsic characteristics of source and target data in addition to domain and category label information. To be specific, Ma et al. [54] incorporate GCN into the adaptation pipeline to exploit the data structure for bridging source and target domains. Luo et al. [55] introduce an adversarial bipartite graph learning algorithm to model the source-target interactions for video-based UDA. Kang et al. [56] delve into the pixel-wise one-to-one association problem for domain adaptive semantic segmentation. These methods, however, fail to explore the topological correspondence across domains and thus cannot endow the UDA model with the capability of cross-domain relation reasoning. More importantly, existing UDA methods focus on the closed set adaptation and cannot generalize to OSDA [29] scenarios. Moreover, state-of-the-art OSDA methods [57, 58, 59, 60] are tailored for classification problems and cannot be applied to solve the detection tasks, where the foreground objects and backgrounds may come from the same image and can be naturally regarded as the known and unknown classes.

2.2 Domain Adaptive Object Detection (DAOD)

2.2.1 Object Detection

Object detection, one of the most longstanding and challenging problems in computer vision, aims at localizing and classifying all object instances of given categories in natural images [61]. The current state-of-the-art is shaped by deep learning based approaches. This paper focuses on exploring the adaptability of modern object detectors, hence we briefly review a few representative object detectors. The series of region-based convolutional networks, such as R-CNN [62], Fast R-CNN [63], and Faster R-CNN [27], have demonstrated strong capability in achieving higher detection precision. These methods first get a set of object proposal candidates generated by the region proposal mechanisms, and then the proposals are further classified and their locations are refined by regression in the second stage. The other mainstream pipeline is one-stage detector, such as SSD [28], YOLO [64, 65], and RetinaNet [66], which directly conducts the category confidence prediction and the bounding box regression in a single-shot manner. By doing so, these detectors have shown a clear superiority in terms of inference speed. In addition, anchor-free one-stage object detectors, such as CornerNet [67] and FCOS [68], eliminate the need for anchor boxes and thus result in a much simpler training process.

2.2.2 Domain Adaptive Object Detection (DAOD)

DAOD [69, 70] aims to mitigate the distributional shift problem in object detection. Domain Adaptive Faster R-CNN [16] pioneers this line of research by adversarially learning domain-invariant representations on both image-level and instance-level within the Faster R-CNN framework. In view of the local nature of object detection task, most existing DAOD methods [17, 18, 19, 20, 21, 22, 23, 24, 25, 71, 72, 73, 74, 26, 75, 76] resort to learning the local feature patterns in the target domain in virtue of elaborate fine-grained feature alignment modules.

Specifically, Zhu et al. [17] design a region mining strategy to identify the discriminative regions, and then utilize the source region proposals to reweight the target ones to induce better local alignment; Xu et al. [22] and Zheng et al. [23] rely on the cross-domain prototype alignment [13, 14] to align the foreground objects with the same class label across domains. He et al. [77] propose an asymmetric tri-way Faster-RCNN to solve the labeling unfairness between domains. Wu et al. [26] explicitly extract domain-invariant instance-level features based on a progressive disentangled mechanism. Wang et al. [78] and Chen et al. [79] additionally incorporate the object scale into adversarial training networks. Hsu et al. [25] and VS et al. [74] introduce specific networks to generate foreground-aware attention maps, which are used to achieve category-wise adversarial feature alignment. Although our work have the similar motivation about creating foreground-background awareness compared to some of local alignment methods, these prior efforts still focus on pairwise alignment and usually require additional attention modules.

In addition to the series of works that adapt Faster R-CNN, Kim et al. [80] aims to adapt SSD via weak self-training and adversarial background score regularization. DA-DETR [81] develops a hybrid attention module with a domain discriminator on the top of Deformable DETR [82] to highlight and align the foreground features in adversarial training. Transformer-based methods [83] globally model the long-range dependencies among all encoded features while our FGRR locally models the relations based on the constructed graphs (a sparse version of encoded features). Here, the difference between global and local is that whether the self-attention operation is applied to all encoded features.

Despite their strong efficacy on adapting certain detectors, state-of-the-art methods cannot be readily applied to distinct detection pipelines and thus fail to establish a general DAOD approach. More importantly, how to effectively perform inter- and intra-domain relational reasoning to capture the topological relationships among foreground objects is vital for enhancing the discriminability of feature representations in DAOD but remains the boundary to explore.

3 Methodology

In DAOD, we have access to a source domain (, ) of labeled samples, and a target domain of unlabeled samples. and are sampled from distinct data distributions, but share an identical label space ( classes in all). The objective of DAOD is to transfer knowledge from to for improving the detection performance in .

The overall architecture of FGRR is illustrated in Figure 2, which involves three modules: pixel-level relational reasoning (low-level features), semantic-level relational reasoning (high-level features), and image-level object-aware reweighting (image-level features). FGRR targets on endowing the DAOD model with inter- and intra-domain relational reasoning ability. (1) We filter out source background pixels within the bounding boxes and search target foreground pixels via mutual nearest neighbor constraint (Sec. 3.1.1). The searched pixels is represented as the graph nodes, and the inter- and intra-domain relations are learned via bipartite graph learning (Sec. 3.1.2) and graph attention mechanisms (Sec. 3.1.3). (2) When reasoning over relations between high-level visual concepts, we discriminate the target instance-level features to define graph nodes (Sec. 3.2.1), and then a cross-domain similarity regularization strategy (Sec. 3.2.2) is introduced to identify and isolate the backgrounds for constructing the semantic-level bipartite graph. In addition, the intra-domain graph edges are characterized from two aspects: geometric constraints and semantic similarities (Sec. 3.2.3). (3) The object-aware reweighting (Sec. 3.3) is introduced to capture the object-level variations by learning appropriate importance weights for different input images. The motivation and technical details of each proposed module are successively demonstrated in this section.

3.1 Pixel-Level Relational Reasoning

Deep features in the standard CNNs must eventually transition from general to specific along the network [84]. Low-level features, obtained from the shallow layers of CNNs, have less semantics but with rich local details, e.g., have more clear edges and corners. For low-level features, state-of-the-art DAOD methods to date mostly resort to global domain alignment via adversarial feature adaptation [16, 18, 20] or trying to coarsely derive the foreground regions via attention-related strategies [21, 23, 25], such as class activation maps (CAMs) [85] and the entropy of domain discriminator outputs [21]. Despite their efficacy for significantly reducing the domain disparity, current DAOD approaches for adapting low-level features still suffer from three key challenges. First, they cannot capture the cross-domain foreground object relations (such as object co-occurrence), and thus fail to enable fine-grained and more precise knowledge transfer. Second, globally matching features between domains is error-prone since the extracted features from shallow layers may be dominated by background noise, i.e., the foreground and background features are highly entangled. Third, relations between distant foreground regions on the feature map, which are crucial for depicting intra-domain topological structures, cannot be captured on the shallow layers with small receptive field in conventional DAOD pipelines.

To tackle the aforementioned challenges, we propose to endow the shallow layers of a DAOD model with relational reasoning ability to achieve a holistic understanding of foreground objects with the following two steps. First, we need to find out the discriminative foreground pixels by searching reliable pixel-level correspondence between domains. Second, based on the selected foreground pixels, we propose to model and reason over these pixels in both inter-domain and intra-domain by harnessing two complementary graph-based relational reasoning modules.

3.1.1 Searching Reliable Pixel-Level Correspondence

Assume that we are given the source and target 3D feature maps extracted from shallow layer of the backbone network, i.e., the extracted features are low-level features. First of all, we aim to find source foreground pixels by using source annotations, including category labels and bounding boxes. In object detection, the semantic label of a certain source pixel is determined by the scope of its bounding box, which inevitably includes many background pixels. Fortunately, we can observe that the foreground pixels are meaningful and coherent compared to the uninformative background content within the bounding boxes.

Technically, we first compute the centroid for each source category in the feature space, which represents the mean feature vector of pixels belonging to the same object category within a source image. The formulation is defined as follows,

| (1) |

where is the pixel index, and denotes the set of pixels annotated with category in . Then, we utilize the calculated to select foreground pixels in that satisfy the following two requirements:

-

•

For each source pixel in , its similarity to the class centroid should larger than a certain value, i.e., , where denotes the cosine similarity and is a threshold.

-

•

To mitigate the influence of pixels that are located near the bounding box, the centerness [68] of pixel point should also larger than a certain value, i.e., , where , , , and denote the normalized distances from the location of to the four sides of the bounding box, and is a threshold.

If the above requirements are all satisfied, is added into , where is the selected foreground pixel set annotated with class . The overall source selected foreground pixel set is denoted by .

Next, we aim to find pixels in that are more likely to be foreground pixels via cross-domain nearest neighbor search. We present the searching process below. For a source pixel in , we searched its nearest neighbor in the target domain. In turn, is the nearest neighbor of target pixel in the source domain. If also belongs to the category , we will assign the target pixel with pseudo-label . Similarly, we traversal all pixels in to find out pixel pairs between domains that are mutual nearest neighbors. In this way, we can obtain two set of selected pixels in both domains, i.e., and .

3.1.2 Inter-domain Relational Reasoning

Given the searched foreground pixels in both source and target domains, we can easily project these visual features into node space, where each node corresponds to a foreground pixel in the feature map. Then, we aim to learn inter-domain visual dependencies in pixel level by introducing a bipartite graph convolutional module (BGCM) that can be inserted to detection model in a plug-and-play manner. To be specific, BGCM utilize a bipartite graph to indicate the relations among foreground nodes across domains and enhance their expressive power by aggregating information from its neighborhoods on the opposite domain. and are two group of bipartite graph nodes projected from and respectively. Bipartite graph edges represent the similarities between and . To mitigate the impact of noisy background pixels and relations, we let the edge weights be learnable. Formally, for nodes and in and , we have,

| (2) |

where stands for the sigmoid function, and are the features of nodes and , and is the learnable parameter.

To perform graph convolution on the constructed bipartite graph , we augment its original form as follows,

| (3) |

| (4) |

After that, the augmented bipartite graph can be learned by leveraging the modern Graph Convolutional Networks (GCN) [86]. In practice, we stack multiple graph convolution layers to form the BGCM. To be specific, the graph convolution is recursively conducted as: , where is the parameter matrix, are the hidden features of the -th layer (where ), and is the adjacency matrix.

To improve the discriminability of node features, we conduct node classification (i.e. multi-class classification) based on the bipartite graph. Note that the selected source pixels have ground-truth labels, and we utilize the pixel-wise correspondence to assign pseudo-labels to the selected target pixels. Formally, the last layer of pixel-level bipartite graph (BGCM) predicts the label using a classifier and can be formulated as follows,

| (5) |

where denotes the predicted label, denotes a fully-connected layer, and is the feature of source or target nodes. The node classification loss is represented by .

3.1.3 Intra-domain Relational Reasoning

Although BGCM models the correlations between domains, the intra-domain topological structure is neglected. We argue that relations within per domain are complementary to the inter-domain relations and should be explicitly taken into consideration. To solve this issue, we introduce a graph attention module (GAM), which allows nodes to attend over their neighborhoods features by specifying different weights to different neighbors, to perform intra-domain relational reasoning and capture the long-range dependencies between distant regions on each input image.

Specifically, we directly construct the pixel-level intra-domain graph on the source or target selected pixels ( or ) to reduce the computational cost. Each node in represents a selected pixel, and each edge in encodes the relationships between nodes. Then, we conduct self-attention on the nodes [87], which computes the hidden representations that characterize the relationships between a source/target selected pixel and its neighborhoods within the domain. Given two nodes and in , their edge weights are defined as follows,

| (6) |

where stands for the neighborhood of node in the graph, is a learnable weight vector, is a weight matrix that is applied to every node, and is the concatenation operation.

Discussion. Technically, graph self-attention is a type of local attention, while self-attention in transformer [83] is a type of global attention. The constructed intra-domain relation graphs are sparse in the feature space. In that sense, local attention (based on the constructed graphs) will significantly reduce the potential of being biased towards those non-transferable nodes (pixels or regions), while global attention (based on the complete feature maps) is more likely to result in wrong feature aggregation and even negative transfer.

3.2 Semantic-Level Relational Reasoning

Instance-level (high-level) feature adaptation, which refers to aligning the ROI-based feature representations in the second stage of two-stage detectors, is a central step for learning semantic representations and mitigating high-level differences between domains, such as geometric variations (position, size, viewpoint, etc), the number of objects, and the type of objects. In this regard, numerous instance-wise alignment modules has been proposed, such as vanilla adversarial alignment [16], weighted adversarial alignment [17, 20, 21, 24], and prototype-guided alignment [22, 23]. However, these semantic alignment approaches suffer from two critical limitations. First, the alignment performance heavily relies on the quality of instance-level features, which are noisy and unpredictable in the target domain due to the lack of ground-truth annotations to distinguish foreground and background proposals during the ROI-pooling. Moreover, instance-level features are sensitive to the high-level variances, thereby hindering the deployment of direct alignment. Second, category alignment (one-vs-one) is insufficient to reason the foreground object dependencies since the graphical structure hidden in the semantic space (many-vs-many) lacks thorough investigation. Meanwhile, such high-level features are instance-informative yet domain-specific, requiring an elaborate calibration step to alleviate the feature noise.

To overcome the above challenges, we semantically explore the intra- and inter-domain object relations to enhance the reasoning ability of DAOD model by aggregating semantic and global context information via message-passing. In this section, we take Faster R-CNN as an exemplar to illustrate the technical details, which can be readily applied to other modern detectors in experiments (cf. Section 5.4).

3.2.1 Graph Nodes

Semantic-level graph nodes are introduced to represent the inherent characteristics of high-level visual concepts (object/instance/category). Regarding semantic-level graph, an intuitive idea is to utilize the proposals generated by RPN as graph nodes. Unfortunately, these proposals may be insufficient to stand for an instance and cannot directly feed them into the relational reasoning procedure in view of the incomplete categorical information problems in the target domain, such as the deviation of bounding boxes, occlusions, and class ambiguities. Moreover, due to the absence of target ground-truth bounding boxes, most existing DAOD works randomly sample target candidate proposals for ROI-pooling and fail to distinguish the positive and negative samples. To solve this problem, we propose to use pseudo-labels for discriminating the target foreground instance-level features.

The workflow of Faster R-CNN can be divided into three components: backbone network, region proposal network (RPN), and ROI-wise classifier (RC). RPN generates and sends candidate object proposals to the head of RC for ROI-pooling based on the feature map extracted from the backbone network, then RC predicts the category labels and box coordinates of the pooled instance-level features. Both RPN and RC include a classification loss and a regression loss. Specifically, we utilize the detection results of RC to generate pseudo-labels for the target proposals. At the second stage, assume that we have target proposals filtered by RPN. The classification and regression outputs of RC are denoted by and , where and represents the proposal feature. If , this result will be added into class . Then, we sort the proposals within each class in descending order by their classification probabilities. A portion of high-confidence detection results in each class are selected as our pseudo labels, which are denoted by . In this way, we can ensure that the sampling process works in a class-balanced manner, implicitly highlighting the under-represented categories. Finally, we utilize the pseudo-labeled bounding boxes to obtain corresponding proposal features from RPN, i.e., . These discriminative ROI-based instance-level features are the representations of semantic-level graph nodes, and the constructed graph can regarded be sparse representation of all region proposals. Note that the target negative samples (backgrounds) are still randomly sampled from the remaining proposals.

3.2.2 Inter-domain Relational Learning

Next, we propose a semantic-level bipartite graph module (SBGM) to model semantic relations and constraints with respect to the foreground objects across domains. The bipartite graph is defined as . and are the source and target vertex sets, where and is the ROI-based instance-level features generated by RPN, is the number of proposals, is the node feature dimension, and denotes the set of edges.

Given the graph nodes, SBGM aims to characterize the correspondence between and . A natural idea is to traverse all possible pairs between and to compute their similarity, and node pairs with higher similarity should be assigned larger edge weights. Whilst we have enhanced the discriminability of foreground proposals, the instance-level features still contain positive and negative samples, and the distinction between known and unknown classes is yet to be explicitly constrained in light of the asymmetry of OSDA problem, making the target nodes be risky to aggregate biased or even wrong semantic information during the message-passing process.

Consequently, to identify and isolate the background proposals, we design a Cross-Domain Similarity Regularization (CDSR) strategy to generate reliable node pairs across domains. Our key idea is to adjust the cross-domain similarity measure to ensure that the nearest neighbor of a source node, in the target domain, is more likely to have as a nearest neighbor this particular source node, i.e., assign large edge weights to nodes from and that are mutual nearest neighbors. However, compared to the low-level feature space, we found that the nearest neighbors may be asymmetric in the high-level embedding space: (we omit the subscripts and for simplicity) being a -NN of does not assure that is a -NN of , which also refer to the hubness problem [88, 89]. In high-level semantic space, some nodes are more likely to be the nearest neighbors of many other nodes (e.g., easy negatives), but some others may be not nearest neighbors of any node (e.g., hard positives). For the bipartite graph , we denote the neighborhood of a source node as . All elements of are nodes from . Likewise, the neighborhood associated with a target node is represented by . All elements of are nodes from . The average similarity of a source node to its target neighborhood is represented by,

| (7) |

Similarly, the average similarity of a target node to its source neighborhood is represented by . Based on the computed and , the cross-domain similarity measure is formulated as follows,

| (8) |

Then, we can utilize Eq. (8) to calculate the adjacency matrix , which associates each edge with its element . Finally, we use Eq. (3)-(4) to augment as such that the modern graph convolution techniques can be conducted based on .

To further enhance the semantic correlations among foreground objects between domains, we propose a Category-aware Domain Alignment (CDA) loss term on top of to enable domain alignment on all foreground categories. Technically, we contrastively align the source and target prototypes to achieve domain alignment. Formally, we define the source and target class prototypes as follows,

| (9) |

where stands for the nodes in that belongs to category (). The target graph nodes can be clustered into classes by using the target pseudo-labels. Formally, the formulation of CDA loss is defined as follows,

| (10) |

where is the margin term and set as 1 in our experiments.

3.2.3 Intra-domain Relational Learning

The semantic-level intra-domain graph are represented by . The graph nodes are inherited from the SBGM. Each edge in stands for the semantic correlations between and . To derive the intra-domain graph edge representations, we regularize the semantic relation from two aspects, i.e., geometric constraints and semantic similarities. Specifically, intra-domain graph edge is represented by an adjacency matrix , which includes a spatial graph and a semantic graph.

For the spatial graph, we introduce and to characterize the spatial interactions between nodes, where and are two region proposals associated with two RoI-pooled instance-level features, denotes the distance between and , and measures their interactions in terms of Intersection over Union (IoU). Formally, when or , and will be connected with a spatial graph edge (i.e. ); otherwise, these two nodes will not be connected (). By doing so, we can define the spatial adjacency matrix .

For the semantic graph, we directly measure their cosine similarity to characterize the semantic interactions between nodes. when , and will be connected with a semantic graph edge (i.e. ); otherwise, these two nodes will not be connected (). By doing so, we can define the spatial adjacency matrix .

We integrate the spatial graph with the semantic graph to formulated our complete semantic-level intra-domain graph,

| (11) |

where stands for the element-wise dot. Following Eq. (6), we conduct graph attention operation based on .

3.3 Image-level Object-aware Reweighting

In addition to the low-level (pixel-wise) and high-level (semantic-wise) features, we contend that the adaptation between global image-level features should be simultaneously considered now that image-level features, which contain rich information regarding the foreground objects, backgrounds, and scene layout, may be distinct across domains, i.e., the image-level features are not equally transferable. Conventional DAOD methods [16, 20, 22, 23] commonly resort to fully align the whole image-level feature distributions of source and target data. Recent work of [18, 21] started to explore the adaptation difficulty of different images by assigning larger training weights to those easy-to-adapt images during the process of image-level adaptation.

However, existing methods cannot deal with the object-level variations across domains based on the image-level features, such as the amount and category of foreground objects and the object co-occurrence, which is prone to result in severe feature misalignment or even negative transfer. Moreover, as we usually utilize mini-batch SGD for training, the categorical information in each batch is insufficient to cover all objects. Thus, the object-level variations depend on the input image pairs and should be handled case-by-case. Intuitively, we need to enlarge the training weights when the source and target samples contain similar content in the current batch; otherwise, the training weights will be reduced. Unfortunately, it is difficult to identify the category of objects in the target domain based on image-level features due to the absence of ground-truth annotations.

To remedy these issues, we propose a simple yet effective mechanism to characterize the degree of between-domain object-level variations from two aspects, i.e., the similarity of prototype representations and the number of identical object categories. The larger the object-level variation is, the smaller the training weight value is. Given an input source and target image pair and , we resort to the searched pixel-level correspondence in Section 3.1.1 to compute these two terms. First, the weight regarding the similarity of prototype representations is formulated as follows,

| (12) |

where denotes the number of identical object categories between and based on the searched pixels, and and are the source and target prototype representations of category . Second, the weight with respect to the number of identical object categories is defined by,

| (13) |

where is the number of classes and . Considering that the input image is treated as a whole in this step, no graph structure will be used here. Thus, we decide to incorporate the computed training weights of images into a modern image-level adaptation objective. In this paper, we adopt the popular domain adversarial training loss [16, 18, 21], which is formulated as follows,

| (14) |

where is the backbone feature extractor and denotes image-wise domain discriminator.

3.4 Overall Objective

The detection loss contains a classification loss and a regression loss . measures the classification accuracy of detector, and measures the degree of overlap between the ground-truth and predicted bounding boxes. Formally, the overall objective function for the proposed FGRR is defined as follows,

| (15) |

where , , and are hyper-parameters for balancing the weights of different modules.

4 Theoretical Insight

In this section, we investigate the connections between our approach and the theoretical upper bound of OSDA problem, making using of statistical learning theory of domain adaptation [90, 91, 92]. First, we provide the notations and problem setting of OSDA, and the definition of source and target risks.

Definition 1.

Open-Set Domain Adaptation (OSDA). Suppose that we have a source domain of labeled samples and a target domain of unlabeled samples. and are drawn from and , . The source and target label spaces share known classes and individually include a unknown class and , which is different in both domains (i.e., ). The goal of OSDA is to learn an optimal target classifier .

Definition 2.

Source and Target Risks. The source risk and target risk of w.r.t. under source distribution and target distribution are defined as

where and are class-prior probabilities of and . Then, we have

Given the hypothesis space with a condition that constant function , for , the expected error on target samples is bounded as,

| (16) |

where the shared error , , and . We show the derivation of Inequality (16) in the supplementary material. From the inequality, we can see that the target error is bounded by the following four terms: (1) expected error on the known classes of source domain ; (2) domain discrepancy ; (3) shared error of the ideal joint hypothesis ; (4) target open set risk .

Remark 1.

, which can be optimized by using the source ground-truth annotations, is expected to be small. , which measures the discrepancy between source and target data distributions, can be optimized by using any modern domain alignment approaches. , which reflects the category-level conditional shift, is considered to be sufficiently small since our method explicitly model the semantic correlations. The target open set risk is prone to be large when an approach does not make a distinction between the target foregrounds (known classes) and backgrounds (unknown class). By contrast, our approach optimize this term by discriminating the foreground pixels and regions and performing foreground-aware relational reasoning. To summarize, the proposed FGRR is capable of effectively optimizing the upper bound of expected target error by simultaneously minimizing the above four terms.

5 Experiments

In this section, we conduct experiments on four domain shifts including seven natural image datasets and two medical image datasets, i.e., real-to-artistic (Pascal VOC Clipart, Pascal VOC Watercolor, and Pascal VOC Comic), normal-to-foggy (Cityscapes Foggy-Cityscapes), synthetic-to-real (Sim10k Cityscapes), and cross-site mammogram mass detection (Public In-house).

5.1 Dataset

Real-to-Artistic. In this case, we combine the Pascal VOC2007-trainval and VOC2012-trainval datasets as the source domain, and use Clipart1k, Watercolor2k, and Comic2k as the target domains respectively. The Pascal VOC [93] is a real-world image dataset, which contains 16,551 images with 20 object classes. Clipart1k, Watercolor2k, and Comic2k, which are collected from a website called Behance and annotated by Inoue et al. for cross-domain object detection tasks, consist of 1,000, 2,000, and 2,000 images respectively. Clipart1k has the same 20 object categories as Pascal VOC, and Watercolor2k and Comic2k share 6 identical object classes with the Clipart1k dataset, i.e., bicycle, bird, cat, car, dog, and person. For Pascal VOC Clipart, we use all images of Clipart1k as the target domain for both training and testing by following mainstream DAOD works [18, 21]. For Pascal VOC Watercolor and Pascal VOC Comic, we leverage the train set (1K images) for training and the test set (1K images) is held out for evaluation.

Normal-to-Foggy. The images in Cityscapes [94] are captured from the street scenes of different cities in normal weather conditions via a car-mounted video camera. Since the dataset has dense pixel-level labels, we use the rectangle of instance mask to derive ground-truth bounding boxes for our DAOD tasks. Foggy-Cityscapes [94] are rendered from Cityscapes by using the depth maps to simulate the foggy scenes, and the bounding box annotations are naturally inherited from the original Cityscapes dataset. Both datasets own 2,975 images in the training set and 500 images in the validation set. Note that although images in Cityscapes and Foggy-Cityscapes have a one-to-one correspondence, we do not use this information when training DAOD models.

Synthetic-to-Real. Sim10K [95] is a driving scene dataset that was produced based on the computer game Grand Theft Auto V (GTA V). It consists of 10,000 synthetic images with 58,071 bounding boxes of the car. We use all images in Sim10K as the source domain. In addition, the Cityscapes dataset, which contains real road scene images, is used as the target domain. Sim10K and Cityscapes share one identical object class, i.e., car.

Cross-Site Mammogram Mass Detection. We further evaluate our model on two mammograms datasets with labeled masses: a public dataset (INbreast [96]) and an in-house dataset. INbreast, which includes 107 low-quality mammography cases with 112 labeled masses, is one of the most broadly-used mammogram mass detection datasets. The in-house dataset, which includes 297 high-quality cases with 448 labeled masses, is collected from a local hospital. The annotations are labeled by two radiologists with strong expertise. We use these two datasets to build a DAOD task: Public In-house.

5.2 Implementation Details

We follow the same setting in mainstream DAOD methods [16, 18, 21] that utilize Faster-RCNN [27] with VGG-16 [97] or ResNet-101 [98] architectures as the detection model. We fine-tune VGG-16 and ResNet-101 pretrained on ImageNet. In all experiments, the shorter side of the image is resized to 600. The batch size is selected as 2 (one image per domain) to fit the GPU memory. In the testing phase, we report mean average precision (mAP) with a IoU threshold of 0.5 on the target domain to evaluate the adaptation performance. We train the proposed model using stochastic gradient descent (SGD) optimizer with an initial learning rate of 0.001 and momentum 0.9. The learning rate is decreased to 0.0001 after 5 epochs. For the hyper-parameters , , and in Eq. (15), We set and in all experiments considering that we do not have access to the target labels in both model training and selection phase. The experiments are implemented based on PyTorch deep learning framework.

| Methods | backbone |

aero |

bcycle |

bird |

boat |

bottle |

bus |

car |

cat |

chair |

cow |

table |

dog |

hrs |

bike |

prsn |

plnt |

sheep |

sofa |

train |

tv |

mAP | mAP* | Gain |

| SA-DA-Faster [79] | ResNet-50-FPN | 29.4 | 56.8 | 30.6 | 34.0 | 49.5 | 50.5 | 47.7 | 18.7 | 48.5 | 64.4 | 20.3 | 29.0 | 42.3 | 84.1 | 73.4 | 37.4 | 20.5 | 39.8 | 41.2 | 48.0 | 43.3 | 28.8 | 14.5 |

| DA-Faster [16] | ResNet-101 | 15.0 | 34.6 | 12.4 | 11.9 | 19.8 | 21.1 | 23.2 | 3.1 | 22.1 | 26.3 | 10.6 | 10.0 | 19.6 | 39.4 | 34.6 | 29.3 | 1.0 | 17.1 | 19.7 | 24.8 | 19.8 | 27.8 | -8.0 |

| MAF [20] | ResNet-101 | 38.1 | 61.1 | 25.8 | 43.9 | 40.3 | 41.6 | 40.3 | 9.2 | 37.1 | 48.4 | 24.2 | 13.4 | 36.4 | 52.7 | 57.0 | 52.5 | 18.2 | 24.3 | 32.9 | 39.3 | 36.8 | 27.8 | 9.0 |

| SWDA [18] | ResNet-101 | 26.2 | 48.5 | 32.6 | 33.7 | 38.5 | 54.3 | 37.1 | 18.6 | 34.8 | 58.3 | 17.0 | 12.5 | 33.8 | 65.5 | 61.6 | 52.0 | 9.3 | 24.9 | 54.1 | 49.1 | 38.1 | 27.8 | 10.3 |

| ICR-CCR [24] | ResNet-101 | 28.7 | 55.3 | 31.8 | 26.0 | 40.1 | 63.6 | 36.6 | 9.4 | 38.7 | 49.3 | 17.6 | 14.1 | 33.3 | 74.3 | 61.3 | 46.3 | 22.3 | 24.3 | 49.1 | 44.3 | 38.3 | 27.0 | 11.3 |

| HTCN [21] | ResNet-101 | 33.6 | 58.9 | 34.0 | 23.4 | 45.6 | 57.0 | 39.8 | 12.0 | 39.7 | 51.3 | 21.1 | 20.1 | 39.1 | 72.8 | 63.0 | 43.1 | 19.3 | 30.1 | 50.2 | 51.8 | 40.3 | 27.8 | 12.5 |

| CDTD [99] | ResNet-101 | 44.7 | 50.0 | 33.6 | 27.4 | 42.2 | 55.6 | 38.3 | 19.2 | 37.9 | 69.0 | 30.1 | 26.3 | 34.4 | 67.3 | 61.0 | 47.9 | 21.4 | 26.3 | 50.1 | 47.3 | 41.5 | 27.8 | 13.7 |

| ATF [77] | ResNet-101 | 41.9 | 67.0 | 27.4 | 36.4 | 41.0 | 48.5 | 42.0 | 13.1 | 39.2 | 75.1 | 33.4 | 7.9 | 41.2 | 56.2 | 61.4 | 50.6 | 42.0 | 25.0 | 53.1 | 39.1 | 42.1 | 27.8 | 14.3 |

| PD [26] | ResNet-101 | 41.5 | 52.7 | 34.5 | 28.1 | 43.7 | 58.5 | 41.8 | 15.3 | 40.1 | 54.4 | 26.7 | 28.5 | 37.7 | 75.4 | 63.7 | 48.7 | 16.5 | 30.8 | 54.5 | 48.7 | 42.1 | 27.8 | 14.3 |

| DBGL [30] | ResNet-101 | 28.5 | 52.3 | 34.3 | 32.8 | 38.6 | 66.4 | 38.2 | 25.3 | 39.9 | 47.4 | 23.9 | 17.9 | 38.9 | 78.3 | 61.2 | 51.7 | 26.2 | 28.9 | 56.8 | 44.5 | 41.6 | 27.8 | 13.8 |

| FGRR (Ours) | ResNet-101 | 30.8 | 52.1 | 35.1 | 32.4 | 42.2 | 62.8 | 42.6 | 21.4 | 42.8 | 58.6 | 33.5 | 20.8 | 37.2 | 81.4 | 66.2 | 50.3 | 21.5 | 29.3 | 58.2 | 47.0 | 43.3 | 27.8 | 15.5 |

| Methods | Backbone | Person | Rider | Car | Truck | Bus | Train | Motorbike | Bicycle | mAP | mAP* | Gain |

| MTOR [19] | ResNet-50 | 30.6 | 41.4 | 44.0 | 21.9 | 38.6 | 40.6 | 28.3 | 35.6 | 35.1 | 26.9 | 8.2 |

| GPA [22] | ResNet-50 | 32.9 | 46.7 | 54.1 | 24.7 | 45.7 | 41.1 | 32.4 | 38.7 | 39.5 | 26.9 | 12.6 |

| PD [26] | ResNet-101 | 32.8 | 44.4 | 49.6 | 33.0 | 46.1 | 38.0 | 29.9 | 35.3 | 38.6 | 25.6 | 13.0 |

| AFAN [78] | ResNet-50-FPN | 42.5 | 44.6 | 57.0 | 26.4 | 48.0 | 28.3 | 33.2 | 37.1 | 39.6 | 26.1 | 13.5 |

| SA-DA-Faster [79] | ResNet-50-FPN | 50.3 | 45.4 | 62.1 | 32.4 | 48.5 | 52.6 | 31.5 | 29.5 | 44.0 | 30.3 | 13.7 |

| SCDA [17] | VGG-16 | 33.5 | 38.0 | 48.5 | 26.5 | 39.0 | 23.3 | 28.0 | 33.6 | 33.8 | 26.2 | 7.6 |

| DA-Faster [16] | VGG-16 | 25.0 | 31.0 | 40.5 | 22.1 | 35.3 | 20.2 | 20.0 | 27.1 | 27.6 | 18.8 | 8.8 |

| CT [72] | VGG-16 | 32.7 | 44.4 | 50.1 | 21.7 | 45.6 | 25.4 | 30.1 | 36.8 | 35.9 | 26.2 | 9.7 |

| CDN [71] | VGG-16 | 35.8 | 45.7 | 50.9 | 30.1 | 42.5 | 29.8 | 30.8 | 36.5 | 36.6 | 26.1 | 10.5 |

| PD [26] | VGG-16 | 33.1 | 43.4 | 49.6 | 22.0 | 45.8 | 32.0 | 29.6 | 37.1 | 36.6 | 22.8 | 13.8 |

| SWDA [18] | VGG-16 | 29.9 | 42.3 | 43.5 | 24.5 | 36.2 | 32.6 | 30.0 | 35.3 | 34.3 | 20.3 | 14.0 |

| PAL [73] | VGG-16 | 36.4 | 47.3 | 51.7 | 22.8 | 47.6 | 34.1 | 36.0 | 38.7 | 39.3 | 24.4 | 14.9 |

| MAF [20] | VGG-16 | 28.2 | 39.5 | 43.9 | 23.8 | 39.9 | 33.3 | 29.2 | 33.9 | 34.0 | 18.8 | 15.2 |

| ICR-CCR [24] | VGG-16 | 45.1 | 34.6 | 49.2 | 30.3 | 32.9 | 43.8 | 36.4 | 27.2 | 37.4 | 22.0 | 15.4 |

| DD-MRL [100] | VGG-16 | 30.8 | 40.5 | 44.3 | 27.2 | 38.4 | 34.5 | 28.4 | 32.2 | 34.6 | 17.9 | 16.7 |

| VDD [76] | VGG-16 | 33.4 | 44.0 | 51.7 | 33.9 | 52.0 | 34.7 | 34.2 | 36.8 | 40.0 | 22.8 | 17.2 |

| MeGA-CDA [74] | VGG-16 | 37.7 | 49.0 | 52.4 | 25.4 | 49.2 | 46.9 | 34.5 | 39.0 | 41.8 | 24.4 | 17.4 |

| CDTD [99] | VGG-16 | 31.6 | 44.0 | 44.8 | 30.4 | 41.8 | 40.7 | 33.6 | 36.2 | 37.9 | 20.3 | 17.6 |

| C2F [23] | VGG-16 | 43.2 | 37.4 | 52.1 | 34.7 | 34.0 | 46.9 | 29.9 | 30.8 | 38.6 | 20.8 | 17.8 |

| ATF [77] | VGG-16 | 34.6 | 47.0 | 50.0 | 23.7 | 43.3 | 38.7 | 33.4 | 38.8 | 38.7 | 20.3 | 18.4 |

| HTCN [21] | VGG-16 | 33.2 | 47.5 | 47.9 | 31.6 | 47.4 | 40.9 | 32.3 | 37.1 | 39.8 | 20.3 | 19.5 |

| DBGL [30] | VGG-16 | 33.5 | 46.4 | 49.7 | 28.2 | 45.9 | 39.7 | 34.8 | 38.3 | 39.6 | 20.3 | 19.3 |

| FGRR (Ours) | VGG-16 | 34.4 | 47.6 | 51.3 | 30.0 | 46.8 | 42.3 | 35.1 | 38.9 | 40.8 | 20.3 | 20.5 |

| Methods | Backbone | bike | bird | car | cat | dog | person | mAP | mAP* | Gain |

| AFAN [78] | ResNet-50-FPN | 87.0 | 46.4 | 47.3 | 33.1 | 30.0 | 60.1 | 50.6 | 43.1 | 7.5 |

| BDC-Faster | ResNet-101 | 68.6 | 48.3 | 47.2 | 26.5 | 21.7 | 60.5 | 45.5 | 44.6 | 0.9 |

| DA-Faster [16] | ResNet-101 | 75.2 | 40.6 | 48.0 | 31.5 | 20.6 | 60.0 | 46.0 | 44.6 | 1.4 |

| MAF [20] | ResNet-101 | 73.4 | 55.7 | 46.4 | 36.8 | 28.9 | 60.8 | 50.3 | 44.6 | 5.7 |

| SWDA [18] | ResNet-101 | 82.3 | 55.9 | 46.5 | 32.7 | 35.5 | 66.7 | 53.3 | 44.6 | 8.7 |

| ATF [77] | ResNet-101 | 78.8 | 59.9 | 47.9 | 41.0 | 34.8 | 66.9 | 54.9 | 44.6 | 10.3 |

| CDTD [99] | ResNet-101 | 82.2 | 55.1 | 51.8 | 39.6 | 38.4 | 64.0 | 55.2 | 44.6 | 10.6 |

| DBGL [30] | ResNet-101 | 83.1 | 49.3 | 50.6 | 39.8 | 38.7 | 61.3 | 53.8 | 44.6 | 9.2 |

| FGRR (Ours) | ResNet-101 | 86.1 | 54.8 | 48.9 | 36.6 | 40.4 | 67.5 | 55.7 | 44.6 | 11.1 |

| Methods |

bike |

bird |

car |

cat |

dog |

person |

mAP | mAP* | Gains |

|---|---|---|---|---|---|---|---|---|---|

| SWDA [18] | 36.0 | 18.3 | 29.3 | 9.3 | 22.9 | 48.4 | 27.4 | 24.4 | 3.0 |

| DBGL [30] | 35.6 | 20.3 | 33.9 | 16.4 | 26.6 | 45.3 | 29.7 | 24.4 | 5.3 |

| FGRR (Ours) | 42.2 | 21.1 | 30.2 | 21.9 | 30.0 | 50.5 | 32.7 | 24.4 | 8.3 |

| Methods | Backbone | AP on car | AP* on car | Gain |

|---|---|---|---|---|

| MTOR [19] | ResNet-50 | 46.6 | 39.4 | 7.2 |

| AFAN [78] | ResNet-50-FPN | 45.5 | 32.9 | 12.6 |

| SA-DA-Faster [79] | ResNet-50-FPN | 55.8 | 36.7 | 19.1 |

| DA-Faster [16] | VGG-16 | 38.9 | 34.6 | 4.3 |

| SWDA [18] | VGG-16 | 40.1 | 34.6 | 5.5 |

| HTCN [21] | VGG-16 | 42.5 | 34.6 | 7.9 |

| CDTD [99] | VGG-16 | 42.6 | 34.6 | 8.0 |

| ATF [77] | VGG-16 | 42.8 | 34.6 | 8.2 |

| UMT [75] | VGG-16 | 43.1 | 34.3 | 8.8 |

| MeGA-CDA [74] | VGG-16 | 44.8 | 34.3 | 10.5 |

| MAF [20] | VGG-16 | 41.1 | 30.1 | 11.0 |

| Noise Labeling [101] | VGG-16 | 42.6 | 31.1 | 11.5 |

| DBGL [30] | VGG-16 | 42.7 | 34.6 | 8.1 |

| Ours | VGG-16 | 44.5 | 34.6 | 9.9 |

5.3 Comparisons with State-of-the-Arts

5.3.1 Comparison Methods

We compare the proposed FGRR with state-of-the-art DAOD methods. (1) Domain alignment: Domain adaptive Faster-RCNN (DA-Faster) [16], Strong-Weak Distribution Alignment (SWDA) [18], Domain Diversification and Multi-domain-invariant Representation Learning (DD-MRL) [100], Collaborative Training (CT) [72], Augmented Feature Alignment Network (AFAN) [78], CDTD [99], and Scale-Aware DA-Faster (SA-DA-Faster) [79]. (2) Local alignment: Mean Teacher with Object Relations (MTOR) [19], Selective Cross-Domain Alignment (SCDA) [17], Multi-Adversarial Faster-RCNN (MAF) [20], Conditional Domain Normalization (CDN) [71], Progressive Disentanglement (PD) [26], Image-level Categorical Regularization and Categorical Consistency Regularization (ICR-CCR) [24], Coarse-to-Fine (C2F) [23], Asymmetric Tri-way Faster-RCNN (ATF) [77], Graph-induced Prototype Alignment (GPA) [22], Hierarchical Transferability Calibration Network (HTCN) [21], Prior-Adversarial Loss (PAL) [73], Memory Guided Attention for Category-Aware Domain Adaptation (MeGA-CDA) [74], Vector-Decomposed Disentanglement (VDD) [76], and Dual Bipartite Graph Learning (DBGL) [30].

For all the aforementioned methods, we cite the quantitative results from their original papers. Source Only stands for the model that is trained only using source images and directly evaluated on the target domain without any adaptation. To facilitate a fair comparison, we utilize the gain of performance compared to the Source Only model as the main evaluation metric by following [23].

5.3.2 Results and Discussion

Real-to-Artistic. Table I, Table III, and Table IV report the adaptation results of Pascal VOC Clipart, Pascal VOC Watercolor, and Pascal VOC Comic. The proposed FGRR significantly outperforms all comparison methods on different real-to-artistic adaptation tasks. In particular, compared to the preliminary version (DBGL), the proposed FGRR improves over its results by +2.2% on average (41.6% 43.3%, 53.8% 55.7%, and 29.7% 32.7%), which clearly demonstrates the significance of our improvements. In contrast to adversarial-based domain alignment (e.g., DA-Faster and SWDA) and local alignment approaches (e.g., MAF, HTCN, MeGA-CDA, and VDD), FGRR additionally explore the topological interactions on both intra- and inter-domains to enable fine-grained knowledge transfer. Our better performance over them can explicitly testify the effectiveness of these two components. ATF resorts to the tri-training strategy to solve the labeling unfairness problem between source and target domains, and thus is complementary to our FGRR. That is to say, our work focuses on adapting internal features without requiring model ensemble (e.g., mean-teacher [19] and tri-training [77]). The better performance of FGRR further verifies the importance of endowing DAOD model with the relational reasoning ability. In addition, since the proposed model explicitly consider the imbalance problem in DAOD, the per-class adaptation performance is more balanced. For instance, in Table IV, the performances of “bird”, “car” “cat”, and “dog” are balanced, and their average performance is substantially improved at the same time.

Normal-to-Foggy. Table II displays the results on adaptation from Cityscapes to Foggy-Cityscapes. This scenario is the most widely used DAOD datasets, and contains distinct object co-occurrence, backgrounds, and weather. The source and target images are originally the same one, which makes this task more suitable for explicit local feature alignment approaches, such as HTCN [21] and MeGA-CDA [74]. As can be seen, the proposed FGRR achieves a remarkable increase of +20.5% over the Source Only model on adaptation between these two similar domains, verifying the robustness and effectiveness of FGRR on the different DAOD scenario. The results also reveal several interesting observations.

-

1.

The series of holistic feature alignment approaches (such as DA-Faster, SWDA, and DD-MRL) demonstrate inferior performance since they do not consider the local nature of object detection problem. In other words, the objects of interest only take a small portion of the whole image, and thus globally aligning features between domains is prone to result in negative transfer.

-

2.

Local alignment approaches (such as SCDA, C2F, MeGA-CDA, HTCN, and VDD) change the focus of the adaptation from holistic to local by virtue of elaborate local alignment methods regarding the foreground objects. In this way, they substantially improve the adaptation performance compared to holistic alignment approaches.

-

3.

MTOR explores the object relation within and between domains in the context of a mean-teacher framework. However, they focus on optimizing consistency regularization without delving into the interactions and dependencies of different foreground objects. By contrast, the proposed FGRR utilizes the bipartite graph to model the cross-domain relations where two set of features are aggregated over the spatial and semantic spaces. Noting that FGRR significantly outperforms the result of MTOR by 5.7% (from 35.1% to 40.8%).

-

4.

C2F and GPA aim to achieve the cross-domain semantic consistency based on prototype alignment. In particular, GPA utilizes the graph-based information propagation to obtain the prototype representation of each category. However, these two approaches heavily rely on the region proposal step to generate instance-level features, which may be noisy due to the error accumulation problem. More importantly, they neglect the valuable low-level features and the rich interactions among objects of different categories. Our better performance over them (from 38.6% and 39.5% to 40.8%) further reveals the importance of relational reasoning for DAOD tasks.

-

5.

In essence, the classical one-vs-one alignment (such as adversarial training) can be seen as the simplest case of relational reasoning. Most conventional domain adaptation methods assume that perfect alignment equals to precise knowledge transfer, while the many-vs-many relations between different entities are ignored. Moreover, alignment-based approaches naturally neglect the intra-domain relations as no entities can be explicitly aligned within each domain. In this regard, our work gives a hint to bridge the gap between alignment-based and relational reasoning based DAOD.

Synthetic-to-Real. Table V presents the results on adaptation from Sim10K to Cityscapes. From the table, we can observe that the proposed FGRR algorithm significantly improves the baseline methods. The domain disparity of this adaptation task mainly stems from the difference of image style, making it more sophisticated than normal-to-foggy adaptation where only weather changes. In that case, we argue that FGRR explicitly extract the source and target foreground pixels and regions to promote the positive interactions of foregrounds via relational reasoning procedure as well as mitigate the negative influence of background noises. By comparison, prior efforts, which aim at either holistic image alignment or local region alignment, cannot ensure the precise knowledge transfer among foreground objects across domains. In particular, methods introducing a FPN architecture would significantly improve over the baseline models. The justification is that this adaptation task focuses on detecting cars which have large scale variances across different scenes, naturally fitting the feature pyramid property of FPN architecture. However, most existing works do not integrate the FPN into their detection backbone, and it may be non-trivial to combine them especially when the DAOD methods have adaptation modules within the backbone networks. To facilitate a fair comparison, we follow the mainstream settings.

Cross-Site Mammogram Mass Detection. Table VI provides the experimental results on mammogram mass detection, which targets on testing the scalability and efficacy of the proposed FGRR on real-world applications. Cross-site mammogram mass detection substantially differs from adaptation based on nature images due to the particular challenges of sparse distribution and tiny size of lesions, difference of imaging quality as well as existence of hard mimics. According to the table, we can see that the proposed FGRR outperforms all comparison methods by a large margin on this challenging adaptation task (from 38.4% to 43.9%), clearly demonstrating the effect of our approach on highlighting foreground pixels versus regions and explicitly modeling foreground interactions on both intra- and inter-domains. Moreover, such practical application reveal the potential of DAOD model for improving complex problems.

| Methods |

aero |

bcycle |

bird |

boat |

bottle |

bus |

car |

cat |

chair |

cow |

table |

dog |

hrs |

bike |

prsn |

plnt |

sheep |

sofa |

train |

tv |

mAP | mAP* | Gain |

| DANN [36] | 24.1 | 52.6 | 27.5 | 18.5 | 20.3 | 59.3 | 37.4 | 3.8 | 35.1 | 32.6 | 23.9 | 13.8 | 22.5 | 50.9 | 49.9 | 36.3 | 11.6 | 31.3 | 48.0 | 35.8 | 31.8 | 26.7 | 5.1 |

| DT+PL w/o label [102] | 16.8 | 53.7 | 19.7 | 31.9 | 21.3 | 39.3 | 39.8 | 2.2 | 42.7 | 46.3 | 24.5 | 13.0 | 42.8 | 50.4 | 53.3 | 38.5 | 14.9 | 25.1 | 41.5 | 37.3 | 32.7 | 26.7 | 6.0 |

| WST [80] | 30.8 | 65.5 | 18.7 | 23.0 | 24.9 | 57.5 | 40.2 | 10.9 | 38.0 | 25.9 | 36.0 | 15.6 | 22.6 | 66.8 | 52.1 | 35.3 | 1.0 | 34.6 | 38.1 | 39.4 | 33.8 | 26.7 | 7.1 |

| BSR [80] | 26.3 | 56.8 | 21.9 | 20.0 | 24.7 | 55.3 | 42.9 | 11.4 | 40.5 | 30.5 | 25.7 | 17.3 | 23.2 | 66.9 | 50.9 | 35.2 | 11.0 | 33.2 | 47.1 | 38.7 | 34.0 | 26.7 | 7.3 |

| BSR+WST [80] | 28.0 | 64.5 | 23.9 | 19.0 | 21.9 | 64.3 | 43.5 | 16.4 | 42.2 | 25.9 | 30.5 | 7.9 | 25.5 | 67.6 | 54.5 | 36.4 | 10.3 | 31.2 | 57.4 | 43.5 | 35.7 | 26.7 | 9.0 |

| I3Net [103] | 30.0 | 67.0 | 32.5 | 21.8 | 29.2 | 62.5 | 41.3 | 11.6 | 37.1 | 39.4 | 27.4 | 19.3 | 25.0 | 67.4 | 55.2 | 42.9 | 19.5 | 36.2 | 50.7 | 39.3 | 37.8 | 26.7 | 11.1 |

| DBGL [30] | 23.2 | 65.5 | 30.1 | 18.3 | 24.6 | 67.6 | 43.9 | 15.1 | 38.7 | 36.4 | 31.3 | 20.2 | 25.0 | 74.3 | 55.1 | 38.2 | 12.5 | 41.0 | 49.1 | 43.9 | 37.7 | 26.7 | 11.0 |

| FGRR (Ours) | 33.4 | 69.5 | 26.4 | 20.8 | 27.4 | 58.1 | 42.3 | 14.2 | 42.0 | 39.7 | 30.4 | 19.6 | 28.7 | 76.4 | 56.7 | 40.6 | 8.5 | 41.3 | 52.1 | 44.5 | 38.6 | 26.7 | 11.9 |

| Source Domain | Pascal VOC | Pascal VOC | Pascal VOC | Cityscapes | Sim10k | Public | Avg |

|---|---|---|---|---|---|---|---|

| Target Domain | Clipart1k | Watercolor2k | Comic2k | Foggy-Cityscapes | Cityscapes | In-house | |

| Source Only | 27.8 | 44.6 | 24.4 | 20.3 | 34.6 | 12.0 | 27.3 |

| FGRR w/o PRR | 41.0 | 53.6 | 30.2 | 38.6 | 42.5 | 40.0 | 41.0 |

| FGRR w/o SRR | 41.4 | 53.0 | 30.9 | 37.8 | 41.9 | 39.5 | 40.8 |

| FGRR w/o IOR | 42.4 | 54.8 | 31.4 | 40.5 | 43.3 | 41.6 | 42.3 |

| PRR w/o inter | 41.8 | 54.0 | 30.9 | 39.8 | 42.7 | 41.6 | 41.8 |

| PRR w/o intra | 42.5 | 54.4 | 31.0 | 40.1 | 43.2 | 42.6 | 42.3 |

| SRR w/o inter | 41.2 | 53.7 | 30.2 | 39.4 | 42.6 | 42.1 | 41.5 |

| SRR w/o intra | 42.4 | 54.5 | 30.7 | 39.9 | 43.0 | 42.4 | 42.1 |

| PRR w/o BGL | 41.4 | 53.9 | 31.9 | 39.6 | 42.9 | 43.0 | 42.1 |

| PRR w/ random link | 39.1 | 52.6 | 28.5 | 37.7 | 40.1 | 39.8 | 39.6 |

| SRR w/o BGL | 40.9 | 53.3 | 31.4 | 39.4 | 43.0 | 40.4 | 41.4 |

| SRR w/o CDA | 42.3 | 54.8 | 31.5 | 40.0 | 43.1 | 42.3 | 42.3 |

| FGRR (Full) | 43.3 | 55.7 | 32.7 | 40.8 | 44.5 | 43.9 | 43.5 |

| Methods | bike | bird | car | cat | dog | person | mAP | mAP* | Gain |

| DANN [36] | 73.4 | 41.0 | 32.4 | 28.6 | 22.1 | 51.4 | 41.5 | 47.1 | -5.6 |

| BSR [80] | 82.8 | 43.2 | 49.8 | 29.6 | 27.6 | 58.4 | 48.6 | 47.1 | 1.5 |

| WST [80] | 77.8 | 48.0 | 45.2 | 30.4 | 29.5 | 64.2 | 49.2 | 47.1 | 2.1 |

| BSR+WST [80] | 75.6 | 45.8 | 49.3 | 34.1 | 30.3 | 64.1 | 49.9 | 47.1 | 2.8 |

| I3Net [103] | 81.1 | 49.3 | 46.2 | 35.0 | 31.9 | 65.7 | 51.5 | 47.1 | 4.4 |

| DBGL [30] | 84.0 | 46.7 | 45.5 | 36.2 | 35.7 | 63.7 | 52.0 | 47.1 | 4.9 |

| FGRR (Ours) | 81.1 | 41.4 | 53.5 | 40.7 | 35.0 | 67.5 | 53.2 | 47.1 | 6.1 |

| Methods | bike | bird | car | cat | dog | person | mAP | mAP* | Gain |

| DANN [36] | 33.3 | 11.3 | 19.7 | 13.4 | 19.6 | 37.4 | 22.5 | 21.9 | 0.6 |

| BSR [80] | 45.2 | 15.8 | 26.3 | 9.9 | 15.8 | 39.7 | 25.5 | 21.9 | 3.6 |

| WST [80] | 45.7 | 9.3 | 30.4 | 9.1 | 10.9 | 46.9 | 25.4 | 21.9 | 3.5 |

| BSR+WST [80] | 50.6 | 13.6 | 31.0 | 7.5 | 16.4 | 41.4 | 26.8 | 21.9 | 4.9 |

| I3Net [103] | 47.5 | 19.9 | 33.2 | 11.4 | 19.4 | 49.1 | 30.1 | 21.9 | 8.2 |

| DBGL [30] | 45.4 | 15.9 | 24.8 | 11.5 | 29.4 | 55.1 | 30.4 | 21.9 | 8.5 |

| FGRR (Ours) | 49.8 | 18.0 | 29.3 | 13.8 | 25.5 | 55.2 | 31.9 | 21.9 | 10.0 |

5.4 FGRR for Adapting One-Stage Object Detector

Most existing DAOD approaches are built on the top of Faster R-CNN detection framework and, consequently, how to adapting one-stage object detectors is yet to be thoroughly studied. In this section, we generalize the proposed FGRR algorithm for adapting one-stage detectors. Following the only two works [80, 103] that have tackled the task of adapting one-stage detectors, we adopt SSD300 [28] framework with VGG-16 [97] architectures. To facilitate a fair comparison, we also follow them [80, 103] to conduct experiments on three adaptation tasks, i.e., PASCAL VOC Clipart, PASCAL VOC Watercolor2k, and Pascal VOC Comic2k. In experiments, we resize the input images to 300 300 and conduct data augmentations by following [28, 80]. The batch size is set as 16 to fit the GPU memory.

The adaptation results are presented in Table VII, Table IX, and Table X respectively. The proposed FGRR outperforms all the baseline methods in terms of mAP. It is noteworthy that FGRR improves over the results of DBGL by +1.2% on average (37.7% 38.6%, 52.0% 53.2%, and 30.4% 31.9%). DANN stands for the method that embeds the vanilla adversarial feature adaptation mechanism into the detection pipeline. From the result, we can see that simply incorporating the alignment module with one-stage detectors achieves inferior performance. For example, in the task of PASCAL VOC Clipart, the Source Only model unexpectedly outperforms DANN by a large margin (from 41.5% to 47.1%), implying that DANN is prone to cause negative transfer in this case. By comparison, vanilla adversarial adaptation module could substantially improve the Source Only baseline when adapting Faster R-CNN (such as DA-Faster [16]). This phenomenon indicates that it is non-trivial to extend a DAOD algorithm to adapting one-stage detectors. WST+BSR [80] is tailored for SSD framework and thus demonstrates strong efficacy. In particular, I3Net implicitly learn instance-invariant features by changing the emphasis of adaptation from holistic to local, i.e., attending to discriminative regions versus samples and aligning semantic-level representations. However, joining the practice of prior two-stage DAOD methods, this work focuses on one-to-one matching and neglects the significance of exploring many-to-many relationships. In this regard, the proposed FGRR not only highlights the foreground pixels and regions without introducing any special modules but also tackles the intra- and inter-domain relational reasoning problems via graph-based structures in the context of DAOD. The better performance achieved by our method verifies this point.

5.5 Further Empirical Analysis

5.5.1 Ablation Study

We investigate the individual effects of each proposed module by performing in-depth and complete ablation studies. We evaluate several variants of FGRR: (1) FGRR w/o PRR, FGRR w/o SRR, and FGRR w/o IOR: remove the pixel-level and semantic relational reasoning modules as well as the image-level object-aware reweighting module from the full FGRR model respectively. (2) PRR w/o inter and PRR w/o intra: remove the inter- and intra-domain reasoning modules from the PRR respectively. SRR w/o inter and SRR w/o intra: remove the inter- and intra-domain reasoning modules from the SRR respectively. (3) PRR w/o BGL: directly learn the cross-domain one-vs-one correspondence based on searched foreground pixel pairs without introducing bipartite graph. PRR w/ random link: randomly select pixels to construct bipartite graph. (4) SRR w/o BGL: directly learn the cross-domain one-vs-one correspondence based on foreground proposals without introducing bipartite graph.. SRR w/o CDA: remove the category-aware domain alignment module from SRR. The results of ablation study are summarized in Table VIII. From the table, we have the following observations. (1) Respectively removing the PRR and SRR components from the full FGRR model will lead to substantial performance degeneration, clearly revealing their individual effects and the complementary effect between intra- and inter-domain relational reasoning. (2) The results of FGRR w/o IOR verify that estimating the object-level variations based on image-level features can harmonize the adaptation process and thus provide better knowledge transfer. (3) Inter-domain and intra-domain relational reasoning modules make almost equal contributions to the final performance, highlighting the necessity of considering them in a unified framework. (4) The performances of PRR w/o BGL and SRR w/o BGL drop significantly compared to the full FGRR model, demonstrating the importance of performing relational reasoning compared to conventional local and global alignment approaches. (5) The results of PRR w/ random link reveal that randomly linking graph node will bring in a number of noisy connections and thereby cause significant performance degeneration, showing the superiority of graph construction strategies in our method.

5.5.2 Influence of IoU threshold

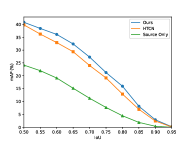

Considering that the IoU threshold is an important factor that affects the detection results, we study the adaptation performance of three models (i.e., Source Only, HTCN [21], and FGRR) with different IoU threshold on Cityscapes Foggy-Cityscapes and Pascal VOC Clipart1k. The results are reported in Figure 3, where we can see that 1) the mAP gradually decreases with the increasing of IoU threshold and approaches zero at the end, and 2) the proposed FGRR consistently outperforms the comparison models on different threshold, revealing the effectiveness of our FGRR on providing robust and precise bounding boxes prediction.

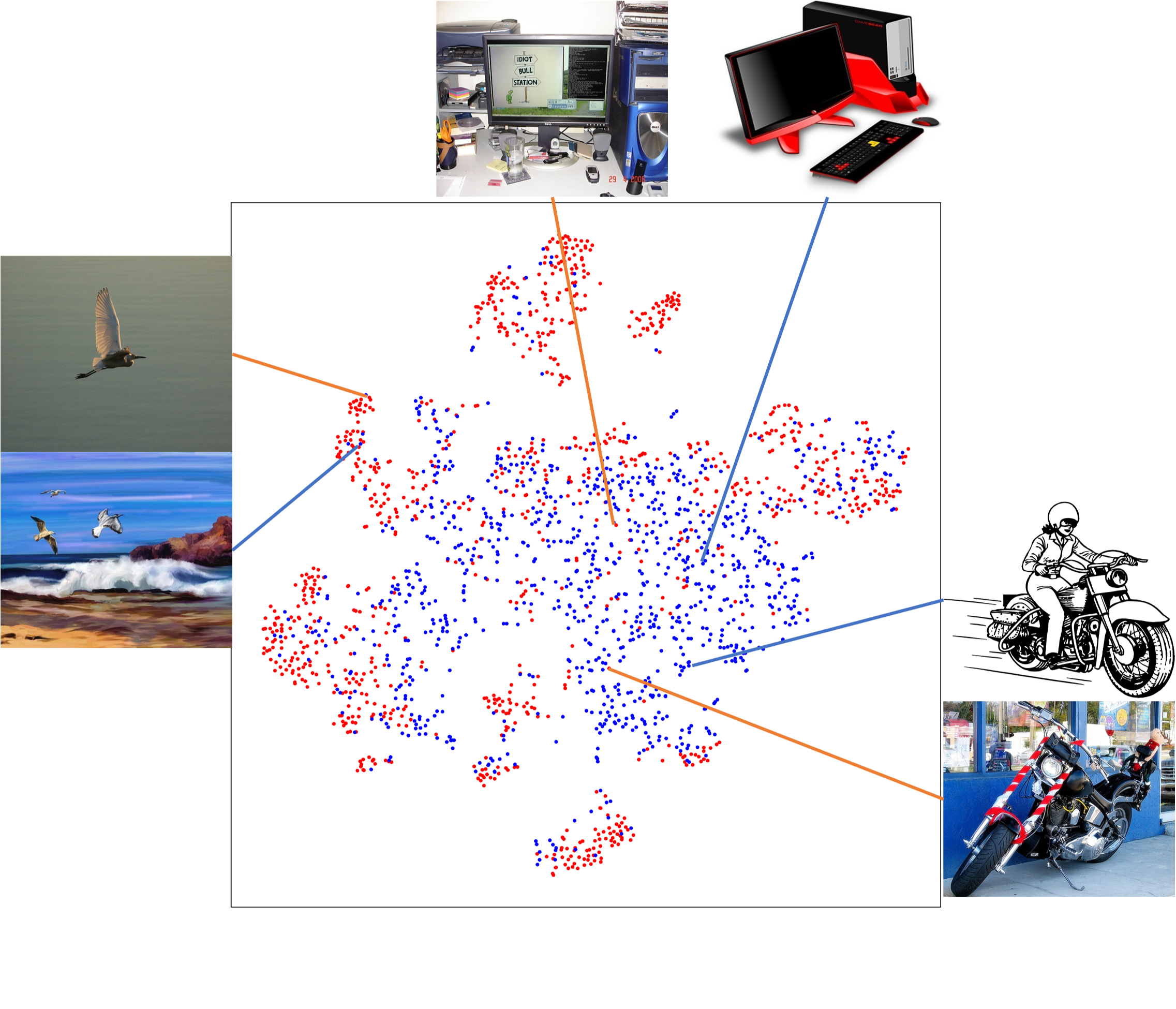

5.5.3 Feature Visualization

Figure 4 visualizes the image-level features generated by Source Only, HTCN and our FGRR using t-SNE algorithm on adaptation task Pascal VOC Clipart1k. As can be seen, for our FGRR model, images that have similar semantic information will get much closer in the feature space compared to the other models. It is noteworthy that when compared to Source Only and HTCN, FGRR does not match the source and target features more compact, but improves the adaptation performance by a large margin in terms of mAP, implying that globally matching features cannot ensure the fine-grained knowledge transfer. This result also verifies the hypothesis in [18] that weak global alignment helps the adaptation of object detectors.

5.5.4 Qualitative Detection Results

Figure 5, Figure 6, and Figure 7 provide some detection results on the target domain based on different DAOD methods, i.e., Source Only, HTCN [21], and FGRR. From the figures, we can see that the proposed FGRR is significantly better than the compared methods in terms of giving accurate boxes regression and object classification. More specifically, FGRR is capable of precisely detecting those sample-scarce or/and hard-to-classify categories, obscured or/and tiny foreground objects, as well as largely reducing the false positive results.

6 Conclusion

In this paper, we delve into the relational reasoning problem for domain adaptive object detection. A Foreground-aware Graph-based Relational Reasoning (FGRR) framework is introduced to explicitly endow the detection models with the capability of reasoning over relations between foreground objects on both pixel-level and semantic-level. The proposed FGRR first identifies the foreground pixels and regions to represent graph nodes. Then, the graph edges are constructed by regularizing the affinity between nodes, and finally, the inter- and intra-domain relations is learned separately via bipartite graph learning and graph attention mechanisms. Experiments on four DAOD benchmarks validated the effectiveness of the proposed FGRR.

References

- [1] J. Quionero-Candela, M. Sugiyama, A. Schwaighofer, and N. D. Lawrence, Dataset shift in machine learning. The MIT Press, 2009.

- [2] A. Torralba and A. A. Efros, “Unbiased look at dataset bias,” in CVPR, 2011, pp. 1521–1528.

- [3] S. J. Pan and Q. Yang, “A survey on transfer learning,” IEEE Transactions on knowledge and data engineering, vol. 22, no. 10, pp. 1345–1359, 2010.

- [4] B. Gong, Y. Shi, F. Sha, and K. Grauman, “Geodesic flow kernel for unsupervised domain adaptation,” in CVPR, 2012, pp. 2066–2073.