Relating-Up: Advancing Graph Neural Networks through Inter-Graph Relationships

Abstract

Graph Neural Networks (GNNs) have excelled in learning from graph-structured data, especially in understanding the relationships within a single graph, i.e., intra-graph relationships. Despite their successes, GNNs are limited by neglecting the context of relationships across graphs, i.e., inter-graph relationships. Recognizing the potential to extend this capability, we introduce Relating-Up, a plug-and-play module that enhances GNNs by exploiting inter-graph relationships. This module incorporates a relation-aware encoder and a feedback training strategy. The former enables GNNs to capture relationships across graphs, enriching relation-aware graph representation through collective context. The latter utilizes a feedback loop mechanism for the recursively refinement of these representations, leveraging insights from refining inter-graph dynamics to conduct feedback loop. The synergy between these two innovations results in a robust and versatile module. Relating-Up enhances the expressiveness of GNNs, enabling them to encapsulate a wider spectrum of graph relationships with greater precision. Our evaluations across 16 benchmark datasets demonstrate that integrating Relating-Up into GNN architectures substantially improves performance, positioning Relating-Up as a formidable choice for a broad spectrum of graph representation learning tasks.

1 Introduction

Graph Neural Networks (GNNs) have emerged as a specialized tool for graph representation learning, achieving remarkable success in node classification [1], link predictions [2], as well as graph classification [3, 4, 5] and graph clustering [6, 7] tasks, etc. The prevailing designs of modern GNNs employ message-passing strategies, excelling in capturing node relationships within a single graph by recursively aggregating information from neighbors to refine node representation. As depicted in Figure 1, despiting the effectiveness of this representation learning paradigm [8, 9], existing GNN architectures is limited focusing to single graph, i.e., intra-graph relationships, the exploration of insightful relationships across graphs, i.e., inter-graph relationships, has neglected. For instance, consider the challenge in chemistry of distinguishing chiral molecules. While these molecules may seem similar in structure or atomic composition, their unique interactions in ligand or enzyme-catalyzed reactions highlight distinct differences. Thus, there is a need to move beyond single graph and devise method to extract relationships spanning graphs.

Capturing and utilizing inter-graph relationships remains an challenging problem. A straightforward approach relies on heuristical design, utilizing data augmentation [10, 11] to generate variation graphs from a vicinity around training graphs to enriching the training distribution. Other methods, such as contrastive learning [12], by emphasizing intra-consistency and inter-differences, aspire to group similar entities while distancing diverse entities. However, these methods remain inherently bound to the paradigm of intra-graph focus, limiting their ability in explicitly unveiling inter-graph relationships.

In this paper, we introduce Relating-Up, a novel module designed to enhance existing GNN architectures by dynamically utilizing inter-graph relationships. Unlike previous efforts that seek performance improvements through complex and specialized techniques, Relating-Up offers a straightforward yet effective approach to enhance GNNs by tapping into underutilized context of relationships between graphs. Specifically, Relating-Up incorporates a feedback loop mechanism that enriches graph representation learning by bridging conventional graph representation with an innovative relation-aware representation. Through continuous mutual refinement, these representation spaces significantly improve the expressiveness and accuracy of graph representations, deepening our understanding of inter-graph dynamics. Our assessments on various benchmark datasets reveal that incorporating the Relating-Up module into GNN architectures significantly improves their effectiveness. This positions Relating-Up as a strong option for a wide range of tasks in graph representation learning.

In summary, our contributions are highlighted as follows:

-

1.

Relating-Up introduces the concept of inter-graph dynamics into GNN architectures, enabling these models to understand and leverage the contextual information present across graphs.

-

2.

We propose an feedback training strategy that iteratively refines graph representations by leveraging insights from inter-graph dynamics, ensuring that the learning process is not static but evolves dynamically, leading to more robust and generalizable graph models.

-

3.

Relating-Up is designed to be a versatile, plug-and-play module that can be integrated seamlessly with existing GNN architectures, ensuring compatibility across a wide range of models and expanding the applicability of GNNs.

2 Related Work

2.1 Graph Neural Networks

GNNs attempt to exploit the relational information in real-world, thereby enhancing various downstream fields, such as molecules[13], recommendation[14], and social networks[15, 16]. Pioneering architectures such as GCNs [1], GraphSAGE [17], GATs [18], GIN [8] have ingeniously introduced the convolution operation to graphs. The foundational philosophy behind these designs is the recursive aggregation of information from neighboring nodes [19, 8], epitomizing the classic GNN paradigm that emphasizes neighborhood aggregation to discern local intra-graph relationships. In the specific field of graph classification, pooling is usually used to obtain the representation of the entire graph [20, 3, 21]. These methods form efficient representation of the entire graph by shrinking graph into different scales. Although many variants of GNNs with different neighborhood aggregation and graph-level pooling schemes have been proposed, their main focus remains on intra-graph, mapping the representation of a graph by summarizing node information enhanced through intra-graph structure, inadvertently neglecting inter-graph relations.

2.2 Graph Data Augmentation

Despite the exceptional prowess of GNNs in theoretical settings, their practical efficacy on real-world graphs often remains precarious [22]. To address the overreliance on labeled data and intrinsic oversomoothing issue, graph data augmentation has emerged as a pivotal technique to enhance the robustness and generalization of GNNs[23]. Graph data augmentation typically involves creating synthetic graphs [11] or modifying existing ones [24] to reflect potential relationships within a transformation neighborhood, encouraging the model to learn more generalizable patterns. While these approaches internally exploit the relationships among variants graphs within a transformation neighborhood [25], they do not explicitly facilitate learning inter-graph relationships, which is a critical aspect of comprehensively understanding graph data.

2.3 Graph Contrastive Learning

Graph contrastive learning is a burgeoning area in graph representation learning, focused on extracting meaningful patterns from graphs by emphasizing contrasts between predefined graph relationships. Graph contrastive learning aim to maximizing the similarity between representations of similar graphs while minimizing it for dissimilar ones [12, 26]. However, this approach encounters a critical limitation in its inability to adaptively handle inter-graph relationships. The effectiveness of graph contrastive learning is contingent upon predefined priors knowledge, which limits its scope of application. It often struggles to autonomously uncover hidden or implicit relationships across graphs, thereby overlooking insightful connections.

2.4 Advancement in Inter-graph Relationships

Recent advancements have utilized semi-supervised learning and class-aware refinements for graph classification, such as SEAL [15] and CARE [27]. Diverging from these methods, our proposed module introduces a dynamic mechanism that continuously evolves graph representations by leveraging inter-graph relationships, enhancing robustness and contextual awareness. Unlike SEAL, Relating-Up adaptively uncovers complex relationships across graphs. Furthermore, it extends the scope of graph representation by exploring the varied structural and feature-based relationships across graphs, beyond traditional class labels.

Overall, our proposed Relating-Up addresses these shortcomings by inherently leveraging inter-graph relationships. It adaptively and dynamically learns the complex relationships across graphs, offering a more comprehensive and insightful perspective of graph data.

3 Preliminaries

In this section, we introduce the fundamental concepts and notations that underpin our work, providing a groundwork for understanding our proposed Relating-Up module.

3.1 Notations

In the context of graph representation learning tasks, we consider a graph denoted as , where represents the set of nodes and signifies the set of edges. Each node is characterized by a feature vector , and the total number of nodes is given by . The edges, , play a pivotal role as they define the intra-graph relationships between the nodes.

The primary objective in graph representation learning tasks is to learn a function . The is responsible for encoding the graph into an informative representation space , which is instrumental for various downstream tasks. Specifically, for graph classification tasks, this involves combining a classification head trained on a set of graphs to facilitate accurate classification.

3.2 Graph Encoder

To effectively capture the intricate relationships among nodes, GNN architectures recursively updating node representations according to the intra-graph relationships among neighboring nodes using a message passing strategy, formalized as follows:

| (1) |

where represents the node representation of node after the layer, with initialized as . The message-passing strategy for each GNN layer simplifies to , where denotes the neighborhood of node , representing intra-graph relationships. The functions and are parametric functions designed to aggregate neighborhood information and combine them to update node representation.

By stacking multiple message-passing layers, a set of node representations is produced. The overall graph representation, , is then obtained through a function as defined by:

| (2) |

where is a permutation invariance function to capture the structural nuances of the graph.

3.3 Graph Classification

For a given graph , the graph encoder transform individual graph structures and proprieties into fixed-dimensional representations . Subsequently, is fed into the classification head, denoted as , to obtain class probabilities. The loss function is typically defined as the CrossEntropy of the predictions over the labels:

| (3) |

where denotes the predicted probability for graph belonging to class , and is the ground truth label.

4 The Proposed Methods

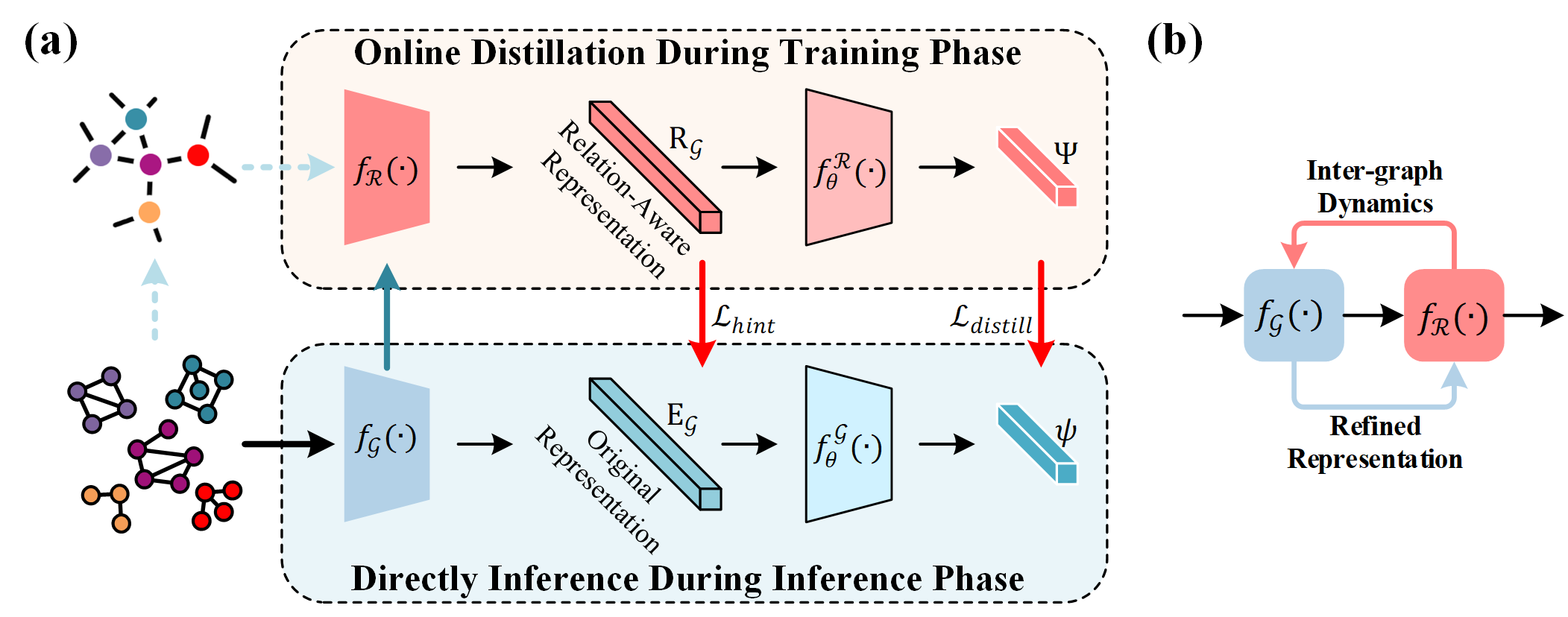

In this section, we describe the proposed Relating-Up module. As shown in in Figure 2, Relating-Up consisting of three key components: a graph encoder, a relation encoder, and a feedback training strategy. By focusing on the extension of GNNs rather than introducing an entirely new framework, Relating-Up respects the foundational strengths of GNNs while addressing their limitations in considering inter-graph relationships.

4.1 Graph Encoder

In Relating-Up, we implement graph encoder by stacking multiple GNN layers, which extracts a fixed-dimensional representation for each graph . Notably, Relating-Up is designed for integrate seamlessly with various GNN backbones without altering their fundamental operations.

4.2 Relation Encoder

Upon deriving the graph representations, the relation encoder, denoted as , dynamically interprets the relationships between these graphs. Specifically, it processes a set of graph representations, symbolized as . Each layer of consists of a multi-head self-attention (MSA) mechanism and a feed-forward network (FFN) block, each followed by a layer normalization (LN). The MSA, pivotal to the encoder, dynamically computing inter-graph relationships as:

| (4) |

where , , represent queries, keys, and values, respectively, derived from the graph representations. is the batch size during the training phase. Each head in the MSA captures unique relationship patterns, with their outputs concatenated and linearly transformed for comprehensive relationship encoding:

| (5) |

where the operations for parallel projections are parameter , , and , where stands for the number of attention heads. The output projection matrix is . Then, the inter-graph relation-aware representation at -th relation layer is formulated as:

| (6) |

where denotes the refined relation-aware representations, now enriched with inter-graph relationship information. This approach allows Relating-Up to dynamically and adaptively learns the nuanced relationships across graphs, which is crucial for tasks that require a comparative understanding of the inter-graphs relationships.

4.3 Feedback Training Strategy

We introduce an innovative feedback training strategy as a pivotal component of the Relating-Up module. Algorithm 1 reports the pseudo-code of the feedback training strategy. This strategy is ingeniously designed to dynamically utilize the potential inter-graph relationships. Specifically, as shown in Figure 2 (b), we utilize a feedback loop mechanism to progressively refine graph representations. Mathematically, this strategy involves minimizing the discrepancy between the original graph representation space and relation-aware representation space,ensuring effective integration of insights from the relation-aware space into the original graph representation. To achieve this, we construct the feedback loop mechanism by two classifier and after graph encoder and relation encoder, respectively. The relation-aware insights from relation encoder are then distilled and conveyed to graph encoder to established a dynamic feedback loop:

| (7) |

where is the CrossEntropy loss for the labeled graph entities from the and . The is formulated as:

| (8) |

where is the Kullback-Leibler divergence, . and are and predicted probability distribution, respectively. is the temperature of distillation with a larger makes the probability distribution softer. The relation hints is defined as the inexplicit knowledge in relation-aware representation to guide to glimpse the global landscape of during the training period. It works by decreasing the L2 distance between and :

| (9) |

| Dataset | GraphSAGE | GCN | GAT | GIN | ||||

|---|---|---|---|---|---|---|---|---|

| Original | Relating-Up | Original | Relating-Up | Original | Relating-Up | Original | Relating-Up | |

| MUTAG | 83.04±11.20 | 85.67±7.45 | 84.09±10.23 | 86.20±7.87 | 81.93±11.90 | 85.09±9.40 | 85.70±9.08 | 86.70±7.55 |

| PTC-FM | 61.89±4.24 | 64.48±5.83 | 60.73±3.05 | 64.18±4.63 | 60.18±7.02 | 63.91±5.51 | 57.89±4.13 | 64.47±4.06 |

| PTC-MM | 61.61±6.96 | 66.40±6.30 | 65.20±5.21 | 67.30±4.90 | 64.59±5.16 | 65.77±5.63 | 62.53±7.91 | 66.38±4.10 |

| PTC-FR | 66.11±2.77 | 67.24±4.45 | 65.81±2.57 | 66.94±4.13 | 65.25±2.41 | 67.81±1.84 | 66.07±4.28 | 68.93±5.56 |

| PTC-MR | 59.85±7.45 | 61.04±8.26 | 56.65±6.08 | 61.55±9.24 | 57.07±8.31 | 60.99±6.94 | 57.56±6.29 | 62.46±5.80 |

| COX2 | 81.60±4.24 | 83.70±5.36 | 81.37±4.48 | 83.07±4.66 | 77.94±1.02 | 79.23±2.03 | 83.50±3.63 | 83.72±4.10 |

| COX2-MD | 60.43±10.44 | 66.40±8.61 | 57.48±7.54 | 66.73±7.83 | 65.08±9.01 | 67.04±9.03 | 60.46±9.03 | 67.63±8.40 |

| PROTEINS | 72.68±2.65 | 74.66±3.25 | 73.49±3.71 | 74.84±3.78 | 73.22±2.09 | 74.62±3.46 | 73.67±3.57 | 75.91±4.41 |

| DD | 77.93±3.08 | 78.27±3.06 | 76.66±3.50 | 77.84±3.30 | 76.18±3.70 | 78.27±2.61 | 76.57±2.55 | 79.12±3.84 |

| NCI1 | 76.41±2.13 | 76.73±2.16 | 76.33±2.53 | 77.19±1.65 | 67.01±2.30 | 67.88±1.56 | 79.39±1.42 | 80.66±2.65 |

| NCI109 | 75.41±2.98 | 75.70±2.22 | 75.02±2.35 | 76.75±2.17 | 66.97±2.89 | 66.42±2.41 | 78.41±2.45 | 80.63±2.10 |

| ENZYMES | 36.83±6.21 | 40.83±4.23 | 38.33±6.15 | 40.83±3.59 | 28.67±4.70 | 30.33±4.33 | 40.00±5.77 | 44.50±6.06 |

| IMDB-B | 72.00±2.57 | 73.10±2.47 | 73.00±3.41 | 74.50±3.35 | 71.70±2.37 | 73.50±2.55 | 73.70±2.69 | 75.80±3.49 |

| IMDB-M | 50.93±3.53 | 51.53±4.30 | 50.87±3.64 | 52.13±3.51 | 50.80±3.62 | 51.53±2.88 | 49.40±3.39 | 52.00±3.35 |

| REDDIT-B | 74.80±1.91 | 75.01±1.83 | 74.75±3.30 | 78.05±3.12 | 74.75±1.57 | 75.49±1.78 | 73.70±3.71 | 78.90±2.77 |

| REDDIT-M5 | 34.65±1.70 | 35.23±0.80 | 36.89±1.88 | 39.65±1.11 | 33.83±1.11 | 33.67±1.66 | 51.23±2.71 | 54.11±2.10 |

By embedding a feedback strategy that iteratively refines graph representations with the relation-aware insights, this strategy significantly enhances the capability of GNNs to understand and utilize the rich and complex tapestry of inter-graph relationships.

4.4 Inference

During training phase, the relation encoder and relation-aware classifier actively enhance the graph encoder with deep insights into inter-graph relationships. This process embeds a rich understanding of these relationships directly into the graph representations, ensuring that the encoder captures not only intra-graph features but also the nuanced dynamics between different graphs. As a result, during inference phase, the reliance shifts solely to this enriched graph encoder and original graph representation classifier for direct classification. This shift is underpinned by the premise that the relational insights, once integrated into the graph representations during training, remain inherently effective for classification tasks. The elimination of the relation encoder and relation-aware classifier during inference significantly streamlines the process, optimizing for speed and computational efficiency while maintaining the robustness and accuracy imparted during training.

5 Experiments

| Backbone | Method | Validation | Test |

|---|---|---|---|

| GCN | Original | 81.23±1.14 | 76.09±0.45 |

| Relating-Up | 85.53±0.44 | 77.84±0.81 | |

| GCN-Virtual | Original | 83.98±1.00 | 74.90±0.86 |

| Relating-Up | 85.87±0.90 | 76.96±0.76 | |

| GIN | Original | 81.16±0.77 | 74.58±1.13 |

| Relating-Up | 82.87±0.42 | 75.48±1.09 | |

| GIN-Virtual | Original | 84.70±0.54 | 76.65±2.11 |

| Relating-Up | 82.56±1.21 | 78.87±1.16 |

In this section, we conduct comprehensive experiments to assess the effectiveness of the proposed method, which includes comparison experiments, empirical exploration of the proposed method, and ablation experiments. More exploratory experimental results are presented in Appendix. Our code is available at https://anonymous.4open.science/r/RelatingUp-Q417.

Dataset. In our experiments, we use a diverse collection of 17 graph classification benchmark datasets, covering datasets from the bioinformatics domains to the social domains. Specifically, this collection includes 16 datasets collected from the TUDataset [28] and the OGBG-MOLHIV dataset collected from the Open Graph Benchmark (OGB) [29]. Detailed statistics and properties of these datasets are presented in Table S1.

Model. The proposed Relating-Up module is a plug-and-play designed for seamless integration with existing graph representation learning architectures. We evaluate the effectiveness of Relating-Up with (1) different GNN backbones, including GCN [1], GraphSAGE [17], and GIN [8]; (2) various graph pooling methods include global pooling, DiffPool, global attention pooling, GAPool, and self-attention graph pooling, SAPool [21, 30]; (3) other graph representation learning paradigms based on graph augmentation and contrastive learning that required explicit definition of graph relationships, e.g., graph augmentation, mixup for graph classification [25], GraphCL [12], MVGRL[26].

Implement Details. To ensure fair comparisons, we standardized hyperparameters across various GNN based architectures. Specifically, each model was configured with five GNN layers with each comprising 128 hidden units. We employed a mini-batch size of 128 and a dropout ratio of 0.5. For optimization, the Adam optimizer was employed, starting with a 0.01 learning rate, halved every 50 epochs.

| Dataset | DiffPool | GAPool | SAGPool | |||

| Original | Relating-Up | Original | Relating-Up | Original | Relating-Up | |

| MUTAG | 76.54±7.00 | 80.61±4.45 | 76.61±8.97 | 78.22±8.25 | 84.04±6.29 | 84.53±8.73 |

| PTC-FM | 55.01±5.17 | 59.09±3.54 | 63.05±5.09 | 65.04±6.10 | 61.63±8.00 | 64.76±4.37 |

| PTC-MM | 58.03±3.93 | 61.24±1.96 | 65.20±5.18 | 68.16±5.24 | 64.03±6.97 | 68.46±6.19 |

| PTC-FR | 61.75±6.11 | 63.93±2.77 | 65.23±3.88 | 67.80±2.61 | 65.50±6.67 | 68.37±3.73 |

| PTC-MR | 54.70±2.80 | 55.03±2.71 | 58.12±3.95 | 61.05±6.61 | 56.38±3.82 | 60.16±3.82 |

| COX2 | 77.20±2.71 | 78.52±1.88 | 82.86±3.06 | 83.26±2.38 | 80.30±4.05 | 83.29±4.25 |

| COX2-MD | 53.21±3.44 | 57.91±2.87 | 58.10±6.01 | 65.70±6.57 | 62.10±9.21 | 66.37±8.49 |

| PROTEINS | 66.86±2.98 | 71.34±2.19 | 70.43±3.19 | 73.03±4.11 | 73.84±4.10 | 75.91±3.70 |

| DD | 72.32±1.93 | 74.62±1.32 | 73.51±4.09 | 74.15±2.97 | 75.46±2.55 | 78.02±2.02 |

| NCI1 | 69.77±1.17 | 71.02±0.77 | 74.53±2.10 | 74.86±3.42 | 73.65±3.44 | 75.75±2.70 |

| NCI109 | 67.29±2.02 | 69.43±1.34 | 73.42±2.11 | 73.75±2.70 | 74.10±2.08 | 75.83±2.12 |

| ENZYMES | 30.80±4.34 | 32.63±2.73 | 36.83±6.21 | 37.33±5.28 | 34.50±6.01 | 38.83±5.78 |

| IMDB-BINARY | 64.80±3.95 | 66.65±1.54 | 71.10±3.11 | 74.70±2.65 | 72.60±3.72 | 74.30±2.79 |

| IMDB-MULTI | 42.53±2.53 | 45.73±2.05 | 50.53±3.05 | 52.87±3.17 | 49.07±3.13 | 52.00±3.40 |

| REDDIT-BINARY | 75.16±1.20 | 76.88±2.61 | 74.80±1.91 | 75.44±1.96 | 89.02±2.49 | 89.40±2.54 |

| REDDIT-MULTI-5K | 30.71±1.31 | 32.75±1.95 | 34.65±1.70 | 34.83±1.73 | 50.73±1.85 | 52.09±3.03 |

Evaluation Protocol. To ensure our evaluation protocol adheres to high standards of reproducibility and comparability, we used a 10-fold cross-validation strategy, dividing each dataset into three parts: 80% for training, 10% for validation, and 10% for testing. During training, we kept track of the validation accuracy after each epoch. Our early stopping criterion halted training when there was no improvement in validation accuracy for 100 epochs, within a total training span of 300 epochs. The epoch with the highest validation accuracy was considered optimal. Finally, we assessed this optimal performance on the test data, ensuring our results were consistent and reliable.

| Dataset | Aug | Mixup | GraphCL | MVGRL | Relating-Up |

| MUTAG | 83.40±11.18 | 84.04±9.65 | 81.32±5.99 | 82.12±9.21 | 86.70±7.55 |

| PTC-FM | 60.45±5.33 | 61.61±4.63 | 58.13±4.31 | 59.29±6.70 | 64.47±4.06 |

| PTC-MM | 63.39±5.83 | 64.90±6.10 | 65.57±8.42 | 63.93±11.2 | 66.38±4.10 |

| PTC-FR | 66.79±7.43 | 66.64±3.32 | 65.42±8.86 | 66.69±7.64 | 68.93±5.56 |

| PTC-MR | 57.64±6.50 | 59.58±5.66 | 59.29±7.51 | 58.40±8.28 | 62.46±5.80 |

| COX2 | 81.09±3.55 | 81.80±4.71 | 82.97±4.43 | 82.85±4.63 | 83.72±4.10 |

| COX2-MD | 60.81±8.08 | 61.10±8.15 | 60.43±6.91 | 64.37±9.30 | 67.63±8.40 |

| PROTEINS | 73.60±4.32 | 73.82±4.73 | 74.02±6.16 | 75.11±4.83 | 75.91±4.41 |

| DD | 78.18±2.96 | 77.76±4.13 | 78.32±8.36 | OOM | 79.12±3.84 |

| NCI1 | 75.65±2.49 | 78.39±1.63 | 77.18±2.23 | 73.65±3.48 | 80.66±2.65 |

| NCI109 | 78.84±3.02 | 78.83±2.19 | 78.04±1.26 | 73.27±2.31 | 80.63±2.10 |

| ENZYMES | 40.33±3.09 | 40.33±3.09 | 41.83±4.81 | 41.33±5.78 | 42.33±5.59 |

| IMDB-BINARY | 71.90±2.47 | 73.80±3.12 | 72.90±3.39 | 71.80±4.21 | 75.80±3.49 |

| IMDB-MULTI | 51.47±3.21 | 50.80±3.08 | 50.33±2.24 | 49.20±3.99 | 52.00±3.35 |

| REDDIT-BINARY | 71.60±3.48 | 71.45±2.16 | 73.30±2.62 | 77.40±3.62 | 78.90±2.77 |

| REDDIT-MULTI-5K | 46.73±1.84 | 45.91±4.00 | 51.63±3.15 | OOM | 54.11±2.10 |

5.1 Performance comparison with GNN backbones

GNN Backbones. In keeping with the focus of our research on enhancing GNN architectures to leverage inter-graph relationships, we primarily compare our module against widely used GNN backbones. Specifically, we assess the performance improvements achieved by integrating the Relating-Up module with various GNN backbones. The comparative results, as depicted in Table 1, illustrate both the original performance of the GNN backbones and the enhancements gained through Relating-Up integration. The most outstanding results within each group are highlighted in bold. Our experiments reveal that Relating-Up consistently boosts the performance of GNN backbones across diverse datasets. Additionally, we have also evaluated Relating-Up using the OGBG-MOLHIV dataset. As shown in Table 2, the results demonstrate significant improvements in ROC-AUC scores with the integration of Relating-Up. These improvements underscore the efficacy of the Relating-Up module in enhancing the predictive performance of GNN backbones on a challenging dataset."

Empirical Exploration. As shown in Figure 3 and 4, the embedding distribution learned by the Relating-Up is more compact in the same class and more separated in different classes. This enhancement results from the ability to link and integrate relationships across graphs, an aspect often neglect in standard GNN architectures. Overall, these results reinforce the effectiveness of Relating-Up module and highlight the versatility and robustness of Relating-Up as a valuable enhancement to graph representation learning.

Advanced Pooling Methods. Additionally, as presented in Table 3, Relating-Up demonstrates consistent improvements across various datasets with advanced pooling methods. In the bioinformatics domain, the average performance boost is 2.53±1.57% across all pooling methods, with individual improvements of 2.66±1.42% for DiffPool, 2.04±2.05% for GAPool, and 0.90±1.20% for SAGPool. This highlights its potential to significantly impact graph pooling scenarios, with a slightly more pronounced effect on pooling methods compared to the underlying GNN backbones. In social domain datasets, the average performance increase across pooling methods is 1.83±1.04%, with individual improvements of 2.2±0.68% for DiffPool, 1.69±1.58% for GAPool, and 1.59±1.05% for SAGPool.

Other Competitive Methods. The experimental results detailed in Table 5 demonstrate that Relating-Up exhibits competitive performance compared to other methods that claim to incorporate inter-graph relationships.

Hyperparameter Sensitivity. To balance the feedback training strategy, three hyper-parameters , , and are introduced. Through the experiments detailed in Figure S1, we find out that these hyperparameters have impacts on the performance.

Batch Size. We examines the Relating-Up response to varying batch size in GNN architectures, as detailed in Table S3. The results indicate Relating-Up are not significantly hindered by the variations in batch size.

| Backbone | Method | MUTAG | PROTEINS | DD | NCI1 |

|---|---|---|---|---|---|

| GCN | CARE | 79.80±12.89 | 71.97±5.65 | 75.97±4.54 | 79.39±2.03 |

| Relating-Up | 86.20±7.87 | 74.84±3.78 | 77.84±3.30 | 77.19±1.65 | |

| GIN | CARE | 85.18±8.36 | 72.51±6.89 | 74.79±4.10 | 81.30±1.14 |

| Relating-Up | 86.70±7.55 | 75.91±4.40 | 79.12±3.84 | 80.66±2.65 |

| MUTAG | PTC-FM | COX2 | COX2-MD | PROTEINS | DD | NCI1 | |||

| A1 | ✘ | ✘ | 82.06±9.39 | 58.45±7.46 | 77.95±0.90 | 51.15±1.30 | 68.85±6.65 | 70.35±9.22 | 72.78±8.13 |

| A2 | ✘ | ✔ | 85.12±9.70 | 60.14±5.29 | 82.23±3.14 | 66.42±8.85 | 75.20±2.76 | 77.96±3.86 | 79.51±1.77 |

| A3 | ✔ | ✘ | 80.88±11.05 | 63.61±4.09 | 80.07±4.24 | 52.82±7.35 | 74.30±3.10 | 75.64±4.18 | 77.13±6.82 |

| Full Model | ✔ | ✔ | 86.70±7.55 | 64.47±4.06 | 83.72±4.10 | 67.63±8.40 | 75.91±4.41 | 79.12±3.84 | 80.66±2.65 |

Time Efficiency. Our computational efficiency study detailed in Appendix D reveal that while our module introduces additional computational steps, the overall increase in processing time is marginal compared to the significant performance gains achieved.

5.2 Performance comparison with other graph representation learning paradigms

To further evaluate the effectiveness of proposed Relating-Up, we compare it against other graph representation learning paradigms. The comparison includes GraphAug, Mixup for graph, GraphCL, and MVGRL. These methods represent a diverse set of strategies for enhancing graph representation learning. The experimental results are shown in the Table 4. It can been seen from the experiments results that Relating-Up consistently outperforms these methods that require an predefined relationships. We attributed this improvement to the dynamic understanding inter-graph relationships of Relating-Up.

5.3 Ablation Studies

The feedback training strategy entails dual-focused training: firstly on the original graph representations, and secondly on the relation-aware representations distilled from inter-graph relationships. Our experimental setup included three variations: (A1) a GNN module implementing Relating-Up without feedback training strategy; A GNN model with Relating-Up but (A2) remove , and (A3) remove . The experimental results, detailed in Table 6, showed an intriguing trend. The standalone implementation of relation-aware representation (A1) did not surpass baseline modules in performance, which emphasizes the importance of the feedback training strategy in the Relating-Up framework. The results demonstrate that effective integration and utilization of insights from inter-graph relationships hinge on a complete feedback training strategy.

6 Conclusion

In this work, we introduced Relating-Up, an innovative module designed to enhance GNNs by enabling them to dynamically explore and leverage inter-graph relationships. Integrating a relation-aware encoder with a feedback training strategy, Relating-Up transforms GNNs beyond their standard intra-graph focus, allowing them to capture complex dynamics across various graphs. This module, adaptable to a wide range of existing GNN architectures, significantly boosts their performance across diverse benchmark datasets. By providing a deeper, more nuanced understanding of graph data, Relating-Up marks a significant advancement in graph representation learning.

Limitation. While Relating-Up presents significant benefits, it may require some adaptation to seamlessly integrate with unsupervised graph representation learning paradigms, especially those based on contrastive learning. However, we believe that with further research and development, this limitation can be addressed, making it more readily extendable to unsupervised learning paradigms.

References

- [1] Thomas N. Kipf and Max Welling. Semi-supervised classification with graph convolutional networks. In 5th International Conference on Learning Representations, ICLR 2017, Toulon, France, April 24-26, 2017, Conference Track Proceedings, 2017.

- [2] Muhan Zhang and Yixin Chen. Link prediction based on graph neural networks. In Advances in Neural Information Processing Systems 31: Annual Conference on Neural Information Processing Systems 2018, NeurIPS 2018, December 3-8, 2018, Montréal, Canada, pages 5171–5181, 2018.

- [3] Muhan Zhang, Zhicheng Cui, Marion Neumann, and Yixin Chen. An end-to-end deep learning architecture for graph classification. In Proceedings of the Thirty-Second AAAI Conference on Artificial Intelligence, (AAAI-18), the 30th innovative Applications of Artificial Intelligence (IAAI-18), and the 8th AAAI Symposium on Educational Advances in Artificial Intelligence (EAAI-18), New Orleans, Louisiana, USA, February 2-7, 2018, pages 4438–4445, 2018.

- [4] Federico Errica, Marco Podda, Davide Bacciu, and Alessio Micheli. A fair comparison of graph neural networks for graph classification. In 8th International Conference on Learning Representations, ICLR 2020, Addis Ababa, Ethiopia, April 26-30, 2020, 2020.

- [5] Xiaotong Zhang, Han Liu, Qimai Li, and Xiao-Ming Wu. Attributed graph clustering via adaptive graph convolution. In Proceedings of the Twenty-Eighth International Joint Conference on Artificial Intelligence, IJCAI 2019, Macao, China, August 10-16, 2019, pages 4327–4333, 2019.

- [6] Kiarash Zahirnia, Oliver Schulte, Parmis Naddaf, and Ke Li. Micro and macro level graph modeling for graph variational auto-encoders. In Advances in Neural Information Processing Systems 35: Annual Conference on Neural Information Processing Systems 2022, NeurIPS 2022, New Orleans, LA, USA, November 28 - December 9, 2022, 2022.

- [7] Xiaotong Zhang, Han Liu, Qimai Li, and Xiao-Ming Wu. Attributed graph clustering via adaptive graph convolution. In Proceedings of the Twenty-Eighth International Joint Conference on Artificial Intelligence, IJCAI 2019, Macao, China, August 10-16, 2019, pages 4327–4333, 2019.

- [8] Keyulu Xu, Weihua Hu, Jure Leskovec, and Stefanie Jegelka. How powerful are graph neural networks? In 7th International Conference on Learning Representations, ICLR 2019, New Orleans, LA, USA, May 6-9, 2019, 2019.

- [9] Asiri Wijesinghe and Qing Wang. A new perspective on "how graph neural networks go beyond weisfeiler-lehman?". In The Tenth International Conference on Learning Representations, ICLR 2022, Virtual Event, April 25-29, 2022, 2022.

- [10] Tong Zhao, Wei Jin, Yozen Liu, Yingheng Wang, Gang Liu, Stephan Günneman, Neil Shah, and Meng Jiang. Graph data augmentation for graph machine learning: A survey. IEEE Data Engineering Bulletin, 2023.

- [11] Johannes Klicpera, Stefan Weißenberger, and Stephan Günnemann. Diffusion improves graph learning. In Advances in Neural Information Processing Systems 32: Annual Conference on Neural Information Processing Systems 2019, NeurIPS 2019, December 8-14, 2019, Vancouver, BC, Canada, pages 13333–13345, 2019.

- [12] Yuning You, Tianlong Chen, Yongduo Sui, Ting Chen, Zhangyang Wang, and Yang Shen. Graph contrastive learning with augmentations. In Advances in Neural Information Processing Systems 33: Annual Conference on Neural Information Processing Systems 2020, NeurIPS 2020, December 6-12, 2020, virtual, 2020.

- [13] Jun Xia, Yanqiao Zhu, Yuanqi Du, and Stan Z. Li. A systematic survey of chemical pre-trained models. In Proceedings of the Thirty-Second International Joint Conference on Artificial Intelligence, IJCAI 2023, 19th-25th August 2023, Macao, SAR, China, pages 6787–6795, 2023.

- [14] Shiwen Wu, Fei Sun, Wentao Zhang, Xu Xie, and Bin Cui. Graph neural networks in recommender systems: A survey. ACM Comput. Surv., 55(5):97:1–97:37, 2023.

- [15] Jia Li, Yongfeng Huang, Heng Chang, and Yu Rong. Semi-supervised hierarchical graph classification. IEEE Trans. Pattern Anal. Mach. Intell., 45(5):6265–6276, 2023.

- [16] Yongji Wu, Defu Lian, Yiheng Xu, Le Wu, and Enhong Chen. Graph convolutional networks with markov random field reasoning for social spammer detection. In The Thirty-Fourth AAAI Conference on Artificial Intelligence, AAAI 2020, The Thirty-Second Innovative Applications of Artificial Intelligence Conference, IAAI 2020, The Tenth AAAI Symposium on Educational Advances in Artificial Intelligence, EAAI 2020, New York, NY, USA, February 7-12, 2020, pages 1054–1061, 2020.

- [17] William L. Hamilton, Zhitao Ying, and Jure Leskovec. Inductive representation learning on large graphs. In Advances in Neural Information Processing Systems 30: Annual Conference on Neural Information Processing Systems 2017, December 4-9, 2017, Long Beach, CA, USA, pages 1024–1034, 2017.

- [18] Petar Velickovic, Guillem Cucurull, Arantxa Casanova, Adriana Romero, Pietro Liò, and Yoshua Bengio. Graph attention networks. In 6th International Conference on Learning Representations, ICLR 2018, Vancouver, BC, Canada, April 30 - May 3, 2018, Conference Track Proceedings, 2018.

- [19] Keyulu Xu, Chengtao Li, Yonglong Tian, Tomohiro Sonobe, Ken-ichi Kawarabayashi, and Stefanie Jegelka. Representation learning on graphs with jumping knowledge networks. In Proceedings of the 35th International Conference on Machine Learning, ICML 2018, Stockholmsmässan, Stockholm, Sweden, July 10-15, 2018, volume 80 of Proceedings of Machine Learning Research, pages 5449–5458, 2018.

- [20] David Duvenaud, Dougal Maclaurin, Jorge Aguilera-Iparraguirre, Rafael Gómez-Bombarelli, Timothy Hirzel, Alán Aspuru-Guzik, and Ryan P. Adams. Convolutional networks on graphs for learning molecular fingerprints. In Advances in Neural Information Processing Systems 28: Annual Conference on Neural Information Processing Systems 2015, December 7-12, 2015, Montreal, Quebec, Canada, pages 2224–2232, 2015.

- [21] Junhyun Lee, Inyeop Lee, and Jaewoo Kang. Self-attention graph pooling. In Proceedings of the 36th International Conference on Machine Learning, ICML 2019, 9-15 June 2019, Long Beach, California, USA, volume 97 of Proceedings of Machine Learning Research, pages 3734–3743, 2019.

- [22] Muhammet Balcilar, Pierre Héroux, Benoit Gaüzère, Pascal Vasseur, Sébastien Adam, and Paul Honeine. Breaking the limits of message passing graph neural networks. In Proceedings of the 38th International Conference on Machine Learning, ICML 2021, 18-24 July 2021, Virtual Event, volume 139 of Proceedings of Machine Learning Research, pages 599–608, 2021.

- [23] Kaize Ding, Zhe Xu, Hanghang Tong, and Huan Liu. Data augmentation for deep graph learning: A survey. SIGKDD Explor., 24(2):61–77, 2022.

- [24] Pál András Papp, Karolis Martinkus, Lukas Faber, and Roger Wattenhofer. Dropgnn: Random dropouts increase the expressiveness of graph neural networks. In Advances in Neural Information Processing Systems 34: Annual Conference on Neural Information Processing Systems 2021, NeurIPS 2021, December 6-14, 2021, virtual, pages 21997–22009, 2021.

- [25] Yiwei Wang, Wei Wang, Yuxuan Liang, Yujun Cai, and Bryan Hooi. Mixup for node and graph classification. In WWW ’21: The Web Conference 2021, Virtual Event / Ljubljana, Slovenia, April 19-23, 2021, pages 3663–3674, 2021.

- [26] Kaveh Hassani and Amir Hosein Khas Ahmadi. Contrastive multi-view representation learning on graphs. In Proceedings of the 37th International Conference on Machine Learning, ICML 2020, 13-18 July 2020, Virtual Event, volume 119 of Proceedings of Machine Learning Research, pages 4116–4126, 2020.

- [27] Jiaxing Xu, Jinjie Ni, Sophi Shilpa Gururajapathy, and Yiping Ke. A class-aware representation refinement framework for graph classification, 2022.

- [28] Kristian Kersting, Nils M. Kriege, Christopher Morris, Petra Mutzel, and Marion Neumann. Benchmark data sets for graph kernels, 2016.

- [29] Weihua Hu, Matthias Fey, Marinka Zitnik, Yuxiao Dong, Hongyu Ren, Bowen Liu, Michele Catasta, and Jure Leskovec. Open graph benchmark: Datasets for machine learning on graphs, 2021.

- [30] Boris Knyazev, Graham W. Taylor, and Mohamed R. Amer. Understanding attention and generalization in graph neural networks. In Advances in Neural Information Processing Systems 32: Annual Conference on Neural Information Processing Systems 2019, NeurIPS 2019, December 8-14, 2019, Vancouver, BC, Canada, pages 4204–4214, 2019.

Appendix A Algorithm of feedback training strategy

Our proposed feedback training strategy is summarized in Algorithm 1.

Appendix B Detailed Experiment Settings

B.1 Datasets

| Dataset | #Graph | #Classes | #Nodes | #Edges | #Features | |

| Bio. | MUTAG | 188 | 2 | 17.93 | 19.97 | 7 |

| PTC-FM | 349 | 2 | 14.11 | 14.48 | 18 | |

| PTC-MM | 351 | 2 | 14.56 | 15.00 | 19 | |

| PTC-FR | 336 | 2 | 13.97 | 14.32 | 20 | |

| PTC-MR | 344 | 2 | 14.29 | 14.69 | 18 | |

| COX2-MD | 303 | 2 | 26.28 | 335.12 | 7 | |

| COX2 | 467 | 2 | 41.22 | 43.45 | 35 | |

| PROTEINS | 1113 | 2 | 39.06 | 72.82 | 3 | |

| DD | 1178 | 2 | 284.32 | 715.66 | 89 | |

| NCI1 | 4110 | 2 | 29.87 | 32.30 | 37 | |

| NCI109 | 4127 | 2 | 29.68 | 32.13 | 38 | |

| ENZYMES | 600 | 6 | 32.63 | 64.14 | 3 | |

| Social. | IMDB-BINARY | 1000 | 2 | 19.77 | 96.53 | - |

| IMDB-MULTI | 1500 | 3 | 13.00 | 69.54 | - | |

| REDDIT-BINARY | 2000 | 2 | 429.63 | 497.75 | - | |

| REDDIT-MULTI-5K | 4999 | 5 | 508.52 | 594.87 | - | |

All dataset are publicly available and represent a relevant subset of those most frequently used in literature for GNNs comparison. Specifically, this collection includes 16 datasets collected from the TUDataset [28] and the OGBG-MOLHIV dataset collected from the Open Graph Benchmark (OGB) [29] . The bioinformatics domain including MUTAG, PTC-FM, PTC-MM, PTC-FR, PTC-FM, COX2, COX2-MD, PROTEINS, DD, NCI1, NCI109, and ENZYMES. The social networks datasets including IMDB-BINARY, IMDB-MULTI and REDDIT-BINARY, REDDIT-MULTI-5K. The addition of OGBG-MOLHIV further enriches our experiments by incorporating a more challenge modern dataset. Notably, since the node features are not present for social domain datasets, we assign a uniform uninformative feature to all nodes for REDDIT datasets, and we we utilize one-hot encoding of node degrees for IMDB datasets [4]. Detailed statistics and properties of these datasets are presented in Table S1.

For all datasets, we employed a 10-fold cross-validation strategy for all datasets, dividing each dataset into training, validation, and test subsets in a 8:1:1 ratio, respectively. All experiments were conducted using the exact same fold index to ensure fair comparisons.

B.2 Model Architectures Hyperparameters

The hyperparameters of GNN backbone is configured with five GNN layers, each comprising 128 hidden units. Unless otherwise stated, we opted for a global sum pooling after GNNs to obtain graph representations. For graph augmentation, we random choice 2 compose from subgraphs induced by random walks (RWS), node dropping, feature masking, and edge removing. For graph contrastive learning model, i.e., GraphCL and MVGRL, the hyperparameters of these models are taken from the original official examples. For Relating-Up, we do a hyperparameter search of from , from , and from . All the codes were implemented using PyTorch and PyTorch Geometric packages. The experiments were conducted in a Linux server with Intel(R) Core(TM) i9-13900KF CPU (3.0GHz), GeForce GTX 4090 GPU, and 64GB RAM.

Appendix C Hyperparameter Analysis

In this section, we present a sensitivity analysis of hyperparameters for the proposed Relating-Up across four distinct dataset: MUTAG, PROTEINS, DD, and IMDB-BINARY. In our experimental setup, Relating-Up integrated with a 5 layers GIN backbone with each layer comprising 128 hidden units. We focus on three hyperparameters introduced by the feedback training strategy: (balancing cross-entropy loss and KL divergence, varied within the range of ), (L2 hint loss, tested across values in the specturm of ), and (the temperature parameter for KL divergence, experimented with in the range of ). The experimental results are shown in Figure S1.

C.1 Analysis of

serves as the balance between the cross-entropy loss for labeled graph entities and the KL divergence. It was observed that the optimal value of is highly dataset-specific. Lower values of tend to give more weight to the KL divergence, thus encouraging the model to leverage relation-aware representations more effectively. In contrast, higher values of emphasize the cross-entropy loss, focusing the model on learning from the original graph representations. The optimal value of depends on the specific characteristics of the dataset and the complexity of the graph structures involved.

C.2 Analysis of

The role of is to manage the representation hints loss, aiming to reduce the gap between the relation-aware and the original graph representations. Altering the value of has a significant impact on how the model aligns these two representation spaces. Higher values of enforce a stronger congruence, potentially leading to a more harmonious integration of knowledge from inter-graph relationships. However, too high a can result in overfitting to the relation-aware representations, compromising the generalization abilities.

C.3 Analysis of

The temperature parameter controls the softening of the probability distribution across classes. A higher leads to a softer distribution, which can be beneficial in scenarios where the inter-graph relationships are not entirely reliable or when a more generalized learning approach is desired.

C.4 Dataset-Specific Optimal Settings

Across all datasets, the results underscore the need for dataset-specific hyperparameter tuning in the Relating-Up module. The optimal settings for , , and varied notably across different datasets, reflecting the diverse nature of graph structures, complexity, and label distributions in graph-based learning tasks. This highlights the importance of a tailored approach in hyperparameter optimization for enhancing the performance of graph neural networks in various applications.

Appendix D Time Efficiency

The Relating-Up module incorporation of a relation encoder to process relationships across graph and employs a dynamic feedback training strategy to refine inter-graph relationships. Although incorporating these components introduce computational overhead during training, the exclusion of these components during the inference phase ensures that the time efficiency is comparable to conventional GNN models. To investigate the time efficiency of the Relating-Up module, we compared the runtime against standard GNN backbone. We conducted experiments on several representative datasets, measuring the time taken for training and inference per epoch.

D.1 Training Time

The training time of Relating-Up is expected to be longer than that of the baseline GNN models due to the additional components. Specifically, the relation encoder processes the set of all graph representations in a batch. This operation adds complexity and increases training time. Moreover, the feed learning strategy, also contributes to the longer training times as it requires additional forward and backward passes during the optimization process. Table S2 provides a comprehensive overview of the training times for various methods, including Relating-Up, on different datasets. The relative training time (compared to the GNN backbone) is boldfaced, while the actual time in seconds is italicized. As observed, Relating-Up exhibits a slight increase in training time compared to the original GNN backbones but remains significantly more efficient than other complex methods like MVGRL and GraphCL.

| Methods | MUTAG | PROTEINS | DD | IMDB-BINARY | |

|---|---|---|---|---|---|

| GCN | Original | 1 | 1 | 1 | 1 |

| 0.036 | 0.128 | 0.815 | 0.134 | ||

| MVGRL | 6.08 | 15.01 | 49.58 | 5.85 | |

| 0.216 | 1.924 | 40.402 | 0.782 | ||

| GraphCL | 2.0 | 2.0 | 2.2 | 1.61 | |

| 0.071 | 0.256 | 1.791 | 0.215 | ||

| Relating-Up | 1.22 | 1.15 | 1.02 | 1.12 | |

| 0.043 | 0.148 | 0.83 | 0.15 | ||

| GIN | Original | 1 | 1 | 1 | 1 |

| 0.036 | 0.128 | 0.815 | 0.134 | ||

| MVGRL | 6.57 | 14.73 | 55.48 | 9.0 | |

| 0.233 | 1.888 | 45.216 | 1.203 | ||

| GraphCL | 2.07 | 1.99 | 2.11 | 2.47 | |

| 0.074 | 0.256 | 1.719 | 0.331 | ||

| Relating-Up | 1.22 | 1.15 | 1.02 | 1.12 | |

| 0.043 | 0.148 | 0.83 | 0.15 |

D.2 Inference Time

As shown in Figure S2, during inference, the Relating-Up module operates more efficiently compared to the training phase. Since the relation encoder and the additional components for feedback training strategy are not utilized during inference, the time efficiency is comparable to the standard GNN models. This optimization ensures that while Relating-Up adds computational overhead during training, it does not significantly impact the performance during the inference phase, which is crucial for practical applications.

In summary, while Relating-Up introduces additional computational costs during training, it maintains efficient inference times. The extra time taken during training is a trade-off for the enhanced performance and the ability to leverage inter-graph relationships, which can be crucial for complex graph analysis tasks. The efficiency during inference makes Relating-Up a practical solution for real-world applications where quick response times are essential.

Appendix E Experimentation on Batch Size

| Dataset | Methods | Batch size = 16 | Batch size = 32 | Batch size = 128 |

|---|---|---|---|---|

| MUTAG | GCN | 82.49±11.31 | 86.20±10.31 | 84.09±10.23 |

| Relating-Up | 86.14±8.75 | 86.75±7.11 | 86.20±7.87 | |

| GIN | 84.04±6.29 | 81.37±11.17 | 85.70±9.08 | |

| Relating-Up | 86.20±7.14 | 86.20±8.51 | 86.70±7.55 | |

| PROTEINS | GCN | 73.66±3.41 | 74.56±3.25 | 73.49±3.71 |

| Relating-Up | 74.84±2.66 | 75.11±3.50 | 74.84±3.78 | |

| GIN | 74.39±3.33 | 73.49±3.56 | 73.67±3.57 | |

| Relating-Up | 74.84±2.76 | 75.11±2.17 | 75.91±3.41 | |

| DD | GCN | 77.25±2.07 | 76.07±3.56 | 76.66±3.50 |

| Relating-Up | 78.52±3.13 | 78.27±3.80 | 78.02±2.02 | |

| GIN | 76.31±2.44 | 76.32±3.31 | 76.57±2.55 | |

| Relating-Up | 78.44±2.96 | 78.45±2.77 | 79.12±3.84 | |

| IMDB-B | GCN | 74.20±2.89 | 72.80±3.68 | 73.00±3.41 |

| Relating-Up | 75.38±2.44 | 75.91±2.57 | 74.50±3.35 | |

| GIN | 72.40±3.98 | 73.70±3.26 | 73.70±2.69 | |

| Relating-Up | 74.70±2.28 | 74.40±2.80 | 75.80±3.49 |

The experimentation on batch size reveals how the Relating-Up module responds to different batch sizes when integrated with GNN architectures. The study covers batch sizes of 16, 32, and 128, providing a broad spectrum of sizes from small to large. This range allows for an in-depth analysis of the impact of batch size on the learning dynamics and performance of the Relating-Up-enhanced models. The results, presented in the table S3, illustrate the performance on various datasets, comparing the baseline GCN with the Relating-Up enhanced version across different batch sizes.

Notably, the Relating-Up module consistently outperforms the baseline GCN model across all batch sizes for each dataset. This indicates that the effectiveness of Relating-Up is not heavily dependent on the batch size, showcasing its robustness and adaptability. The ability of Relating-Up to enhance graph representation learning is evident across different batch configurations, suggesting that it can be effectively integrated into various GNN architectures without the need for extensive hyperparameter tuning related to batch size.

Furthermore, the relatively stable performance of Relating-Up across different batch sizes also indicates that the mechanisms for leveraging inter-graph relationships and incorporating feedback training strategies are not significantly hindered by the variations in batch size. This aspect is particularly important for practical applications where batch size may vary based on the available computational resources or specific requirements of the task.