Reinforcement Learning with Supervision from Noisy Demonstrations

Abstract.

Reinforcement learning has achieved great success in various applications. To learn an effective policy for the agent, it usually requires a huge amount of data by interacting with the environment, which could be computational costly and time consuming. To overcome this challenge, the framework called Reinforcement Learning with Expert Demonstrations (RLED) was proposed to exploit the supervision from expert demonstrations. Although the RLED methods can reduce the number of learning iterations, they usually assume the demonstrations are perfect, and thus may be seriously misled by the noisy demonstrations in real applications. In this paper, we propose a novel framework to adaptively learn the policy by jointly interacting with the environment and exploiting the expert demonstrations. Specifically, for each step of the demonstration trajectory, we form an instance, and define a joint loss function to simultaneously maximize the expected reward and minimize the difference between agent behaviors and demonstrations. Most importantly, by calculating the expected gain of the value function, we assign each instance with a weight to estimate its potential utility, and thus can emphasize the more helpful demonstrations while filter out noisy ones. Experimental results in various environments with multiple popular reinforcement learning algorithms show that the proposed approach can learn robustly with noisy demonstrations, and achieve higher performance in fewer iterations.

1. Introduction

In recent years, reinforcement learning (RL) has achieved significant progress as a method of building intelligent agents for decision making. The target of reinforcement learning is to find a policy to decide the optimal action given a state, such that the future reward received from the environment is maximized (Szepesvári, 2010; Kaelbling et al., 1996). However, RL algorithms usually require a huge number of interactions with the environment to learn an effective policy. For example, in Atari Games, 44 million frames are used to train the agent with model-free deep Q-learning (Hessel et al., 2018); in Go game, strategic policies that combined with search cost 40 days to learn the model (Silver et al., 2016, 2017); in Autonomous Driving, the agent attempts more than 310 thousand times to learn to park (Schulman et al., 2017). Obviously, such large scale interactions can be applied only in simulated environment, while remain impractical in most real world applications.

In fact, in many tasks, we may have some demonstration trajectories from experts as the supervised information, which can significantly reduce the number of interactions required for learning the policy. Reinforcement learning with expert demonstrations (RLED) is a framework to exploit such supervised information. The key idea of RLED is that RL algorithms can save many experiences by incorporating prior knowledge of various forms into the learning process. These methods usually work in a two-step manner, i.e., firstly pre-train through supervised learning from demonstrations and then learn policy by exploring the environment. For example, some methods combine an imitation hinge loss with the Q-learning loss to minimize the optimal Bellman residual (Hester et al., 2018; Piot et al., 2014), the Approximate Policy Iteration method use expert demonstrations to define linear constraints of the optimization problem (Kim et al., 2013), and in (Chemali and Lazaric, 2015), a classification-based policy iteration algorithm is proposed to imitate the expert policy. These methods typically assume the demonstrations are perfect, and their ultimate goal is to derive suitable behaviors from the demonstrations. Unfortunately, in real cases, demonstrations usually contain serious noises or even misleading information. As a result, the policy learned from the noisy demonstrations is less likely to be consistent with the ground-truth target, and thus may fail when applied in real tasks.

In this paper, instead of assuming that the demonstrations are perfect, we consider a more practical setting, where the demonstrations could be noisy or imperfect. We propose to learn the policy with the supervision from noisy demonstration (LfND for short). The basic idea is that, different demonstrations may have different effects to the policy learning, and even the same demonstration may contributes differently at different time. If we can accurately estimate the potential utility of each demonstration at each iteration, the supervised information can be exploited more adequately.

To implement this idea, we firstly form an instance for each step of the demonstration trajectory, which is a triplet of state, action and reward . Then, we define a joint loss function on the instance to let the agent simultaneously interact with the environment and exploit the expert demonstrations. On one hand, policy gradient is performed to maximize the expected reward; on the other hand, a cross entropy loss is defined to minimize the distance between the actions of the agent and the demonstration. Finally, we assign each instance with a weight based on the difference between the state-action value calculated from expert demonstration and the state value estimated by the state value function. This difference can be regarded as the expected gain of the value function for a specific instance, and thus measures its potential contribution to the policy learning. In other words, the noise or misleading demonstrations will be filtered out with small weights, while the useful demonstrations will be emphasized with large weights. By minimizing the weighted loss function over all instances, the demonstrations are fully exploited as a supervision to the environment exploration.

We implemented our approach with multiple popular reinforcement learning algorithms, and perform experiments in different environments. The results show that the policy learned by our method can lead to better performance in most cases. Especially the proposed method can robustly exploit the noisy demonstrations to significantly reduce the number of training iterations.

2. Related Work

In reinforcement learning, expert demonstrations have been shown to contribute effectively in challenging environments (Subramanian et al., 2016). Learning from demonstrations (LfD) (Schaal, 1997), which tries to clone the behavior from the demonstrations, is a typical framework to exploit the experts’ knowledge. Imitation learning is one of such approaches that directly trains an action predictor from demonstration data (Schaal, 1997; Atkeson and Schaal, 1997). Recently, some studies propose to explore an adversarial paradigm for the behavior cloning method (Ho and Ermon, 2016; Wu et al., 2019). Another popular paradigm is inverse reinforcement learning (IRL) (Ng et al., 2000; Abbeel and Ng, 2004; Ziebart et al., 2008), which expected to find a proper reward function that can explain the demonstrations as the optimal behavior. Some methods tried to shape reward using demonstrations or expert advice, which expected that the learned reward is more effective for agents to explore the environment (Brys et al., 2015; Suay et al., 2016).

Recently, more approaches try to directly learn with demonstration trajectories (Piot et al., 2014). Most of these methods focus on optimizing TD loss based on Q-learning models (Hester et al., 2018; Piot et al., 2014; Kim et al., 2013; Chemali and Lazaric, 2015), and thus are less suitable for problems with continuous action space. For example, DQfD (Hester et al., 2018) and OBR (Piot et al., 2014) are two similar methods, which perform imitation and exploration by combining TD loss and the classification loss. The process of AlphaGo (Silver et al., 2016) works in a different way to combine exploration and demonstration based on policy gradient algorithm. Specifically, AlphaGo firstly pre-trains a policy network from a dataset of 30 million expert state-action pairs, and then uses this network as a initialization to train policy net by applying policy gradient methods. Another approach to combine RL and demonstration is POfD (Kang et al., 2018), which also focuses on the policy-based method. Specifically, the POfD approach defines a Jensen-Shannon (JS) divergence between the learned policy and expert’s policy, and optimizes it through adversarial training on demonstrations.

A common assumption of the above methods is that the expert demonstrations are noise-free, which can hardly hold in real environments. Recently, there is one work called NAC (Gao et al., 2018) noticed the potential risk of imperfect demonstrations. The NAC approach defined an extra regular term to normalize the Q-function, which can reduce the Q-values of actions unseen in the demonstration data. However, this method focuses on improving the robustness of the model by regularization, and does not explicitly estimate the different contributions of demonstrations with different qualities.

3. The LfND Approach

In this section, we first formalize the framework for reinforcement learning with noisy demonstrations, and then introduce the proposed LfND approach in detail.

3.1. Problem Setting

We consider an agent interacting with the environment over a sequence of steps, which can be formalized as a Markov Decision Process . Here is a set of states, is a set of actions, is the disount factor, is the state transition probabilities, and is the reward function. At each step , with the state , the agent takes an action according to the policy , and receives a reward from the environment. The target is to maximize the discounted sum of rewards over all steps.

RL algorithms typically learn an effective control policy after many millions of steps, which is unacceptable in most real tasks. To overcome this problem, we can provide the agent with expert demonstrations to more effectively learn the policy . Formally, a trajectory is a sequence of observations, actions and rewards, , where is the -th action from experts, and is the terminal state. For convenience, we denote as an instance corresponding to the -th step of the trajectory .

As discussed in the Introduction, the trajectory collected from expert demonstration is usually imperfect, because it may contain serious noise or even some misleading information. For example, to train an agent to play Go game with human demonstrations, we should be aware that a trajectory win the game does not mean that it is optimal. The optimal demonstrations are hard to obtain caused by data collection noise or produced by the immaturity of the expert. It is more common that some parts of the demonstrations are optimal while the others are not. A naive approach to handle noisy demonstrations is to simply filter out the trajectories with low reward. However, a low reward does not necessarily imply that all the demonstration steps in the trajectory are useless. Instead, even for a noisy trajectory, some steps of it may still provide important information for the policy optimization. Moreover, even for the same step of a trajectory, it may contribute differently at different stages of the learning process, as the model changes.

Based on the above observations, we propose to adaptively estimate the potential utility of each instance at each iteration during the policy learning. Formally, we introduce a weight variable for each demonstration instance to estimate the potential value. Obviously, more useful instances should receive higher values of , while noisy instance should receive lower values of . After that, we try to learn the policy by allowing the agent to interact with the environment and exploit the supervised information from weighted demonstration instances.

3.2. Algorithm Detail

First of all, we compare the proposed framework with existing reinforcement learning with expert demonstrations (RLED) methods in Figure 1. The framework of previous approaches (Gao et al., 2018; Piot et al., 2014; Kim et al., 2013; Chemali and Lazaric, 2015; Silver et al., 2016) are demonstrated in Figure 1(a). They typically work in a two-steps way. In the first step, a supervised learning algorithm is employed to pre train the policy network based on the demonstrations. Then in the second step, the policy is optimized by allowing the agent to explore the environment. Obviously, the demonstration learning and environment exploring are performed separately, making it less possible to adaptively exploit the supervised information from the demonstration trajectories. More importantly, all the instances of the trajectories are uniformly used, without considering their potential utility. When the demonstration trajectories are imperfect, some noisy instances will mislead the policy optimization, and subsequently hurt the learning performance.

In contrast, the proposed LfND framework is demonstrated in Figure 1(b). First of all, it simultaneously learns from the demonstrations and the environment in a joint framework, which allows the agent to adaptively utilize the information from two sources. Unlike previous methods that assume the demonstrations are perfect, the proposed LfND framework introduces a weight variables for each instance to estimate its potential utility. Noticing that because the utility of a specific instance varies as the model changes, the weights are adaptively updated in different learning iterations. By optimizing the weighted loss over all instances, it is expected to emphasize the positive effects of good demonstrations while eliminate the negative effects of noisy demonstrations.

In the following part of this section, we will firstly introduce the joint training of the policy based on the noisy demonstrations and environment exploring, and then discuss on how to calculate the weights to accurately estimate the potential utility of demonstration instances.

To allow joint training of the agent by simultaneously learning from the demonstrations and exploring the environment, we define a joint objective function as in Eq. 1.

| (1) |

where and denote the loss for demonstration learning and environment exploring, respectively, and is a trade-off parameter.

For demonstration learning, as previously discussed, given a set of noisy trajectories, if we can estimate the potential utility of each instance accurately at each time, it is more effective to utilize these supervised information for policy joint optimization. In other words, our target is to utilize the right demonstration instances at the right time to learn an effective policy. Specifically, in the set of demonstration trajectories , each trajectory consists of (state, action, reward) instances as follows:

Given a state , based on the currently learned parameters of the policy network, the agent will return an action:

Note that we assume both and is a distribution over all actions, where each element describes the probability of taken the corresponding action. Then, to learn the policy from demonstration, we try to minimize the distance between and , forcing the agent to behave similarly to the expert. Formally, we define a weighted cross-entropy loss function over all trajectories as Eq. 2.

| (2) |

Obviously, by minimizing the loss function, the policy will be optimized to produce consistent actions with the experts, while the negative effect of noisy demonstrations will be eliminated by the weight . Note that we only introduce this straightforward implementation to validate our idea of learning from demonstrations. Other more advanced strategies may be employed to further improve the performance.

For environment exploring, we focus on policy gradient methods, which are popular and can handle tasks with continuous actions compared to Q-learning methods. As an example, we can employ the trust region policy optimization (TRPO) method (Schulman et al., 2015) to define the loss function as follows:

| (3) |

where is the policy parameters before the update, is an estimator of the advantage function, and the is the parameters of max trust region between the new policy and the old policy.

Alternatively, one can also use proximal policy optimization (PPO) algorithm (Schulman et al., 2017) to define as follows:

| (4) |

where denote the probability ratio . The second term of Eq 4, , modifies the surrogate objective by clipping the probability ratio, which prevents a big gap between the new and old policies (Schulman et al., 2017).

Finally, by substituting Eqs. 2 and 3 or 4 into Eq. 1, we get the final objective function of the proposed LfND approach. It can be observed that both the two terms of objective function are consistently trying to learn the policy distribution, and can be effectively solved with policy gradient methods.

Next, we discuss the estimation of the potential utility of each instance, i.e., calculating the weights for each instance . Intuitively, given a state , if the action given by the expert will lead to a higher expected reward than the currently learned policy, it is likely that the expert policy is superior to the agent policy, and thus such demonstration could be utilized to improve the policy network. We thus define the weight as follows:

| (5) |

where

Here, is the indicator function that the value is 1 when the condition is met, otherwise it is 0. is the state-action value function calculated from expert, which estimates the long-term reward of performing action in state . Note that this term is an accurate value because the whole trajectory is given. is the state value function from policy , which estimates the value of the state . It can be observed Eq. 5 has a similar form with the advantage function (Baird III, 1993; Wang et al., 2015). The difference is that advantage function aims at describing how good it is to select action in state under the current policy , and its function is approximated by policy .

Remarks: As we discussed before, the utility of a specific demonstration may vary over different learning stages as the model updates. For example, at the begining, the parameter of policy network is usually initialized to a small number that makes the is also a small number. Thus nearly all instances will be used to optimize the policy because the will be greater than zero for most instances. As the learning process goes on, the will increase, and the weights of some less useful instances will be zero so that these instances will not participate in the policy optimization. Note that, it can not work if we use advantage function instead of , because and are both approximated by policy , which can not estimate weights of instances.

Another case is that even the demonstration is noise, it is still possible to contribute to the policy optimizatioin. Intuitively, this instance may have a negative effect on optimizing a policy with high performance, but it still has a positive effect on the randomly initialized policy or a policy with poor performance. This also demonstrate that the proposed approach can dynamically adjust the weights through the current policy , and adaptively utilize the right instances at right time to jointly optimize the policy.

Although the weight defined in Eq. 5 can eliminate the negative effects of noisy demonstrations, it is unable to distinguish demonstrations with different degrees of positive effects. Therefore, we provide some other optional solutions to the weight estimation. Specifically, we define a linear form of weight as shown in Eq 6.

| (6) |

where is a hyperparameter, say, .

Similarly, we also define a logarithmic form of the weight as shown in Eq 7.

| (7) |

Obviously, Eq 6 and Eq 7 can better describe the importance of different demonstration instances. The comparison among these different implementations will be studied in the experiment section.

The process of the approach is summarized in Algorithm 1. Firstly, environment , observation space , action space and set of trajectory are given. Then the parameters represents the policy network is randomly initialized. We also introduce the memory to store tuples of agent interactions, which is initialized as an empty set. At each iteration, some trajectories generated by interaction between agent and environment are stored in memory , and then for each demonstration instance will be caculated by Eq. 6 or Eq. 7, finally will be optimized until convergence or desirable performance. When updating by minimizing the loss function in Eq. 1, can be solved with existing policy gradient algorithms.

4. Experiments

4.1. Settings

To validate the effectiveness of the proposed approach, we perform experiments in six commonly used MuJoCo (Todorov et al., 2012) environments, which are implemented in OpenAI Gym (Brockman et al., 2016). HalfCheetah: a simulated cheetah robot system with 17-dimensional state and 3-dimensional action, whose goal is to make the robot run as far as possible. Hopper: a one-legged robot, which is expected to hop forward as fast as possible in this environment. There is a 11-dimensional state space and a 3-dimensional action space. Humanoid: there is a bipedal robot in the Humanoid system with 376-dimensional state and 17-dimensional action. Its goal is to make it walk forward as fast as possible without falling over. HumanoidStandup: the HumanoidStandup system with 376-dimensional state and 17-dimensional action is trying to make the robot standing up as long as possible. InvertedPendulum: the goal of this environment is to prevent an agent which moves along a frictionless track from falling over. In this environment, a 4-dimensional state and a 1-dimensional continuous action is provided. Walker2d: the goal of Walker2d system is to make the robot run as fast as possible. The state is in 17-dimensional and the action is in 6-dimensional. Screen shots of the six environments are shown in Figure 2. For each environment, 10 demonstration trajectories are provided by a well trained agent, among which 5 trajectories contain noisy demonstrations. Specifically, all trajectories are generated by the trained agent, and each trajectory is from the initial state to the terminal state, where the noisy demonstrations are produced by the immature agent.

We respectively employ two state-of-the-art reinforcement learning algorithms, i.e., TRPO (Schulman et al., 2015) and PPO (Schulman et al., 2017), as the base model to implement our approach as well as other compared methods. The following methods are compared in the experiments:

-

•

Trust Region Policy Optimization (TRPO): A RL method performs strategy search in the trust region, and tries to ensure that the strategy of the next iteration will be better than the current strategy (Schulman et al., 2015).

-

•

Proximal Policy Optimization (PPO): A RL method that tries to optimize the lower bound of the clipped and unclipped objectives (Schulman et al., 2017).

-

•

Imitation Learning only (IL): Supervised imitation from expert demonstrations without any environment interaction.

-

•

Learning policy by AlphaGo (LbA): This method combines exploration and demonstration based on policy gradient method as in AlphaGo. Specifically, it firstly pre trains the policy network with expert demonstrations by supervised learning, and then trains the network by exploring the environment (Silver et al., 2016).

-

•

Policy Optimization from Demonstration (POfD): A latest RLED method that optimizes the JensenShannon (JS) divergence between the current policy and expert’s policy by adversarial training on demonstrations (Kang et al., 2018).

-

•

LfND: The approach proposed in this paper.

-

•

LfND without weight (LfND-noW): A degenerated version of the proposed method, which uses all the instances of the trajectories uniformly in the learning process.

The parameters of the two base models are set as recommended in the corresponding literatures. Specifically, for TRPO, the penalty coefficient is set to 0.01. For PPO, the parameter is set to 0.2. We use the same parameter setting for both our approach and the compared methods. The trade-off parameter of LfND is set to 1 as default. We use linear form of weight in experiments and set as default.

| Model | Methods | Environment | |||||

|---|---|---|---|---|---|---|---|

| HalfCheetah | Hopper | Humanoid | HumanoidStandup | InvertedPendulum | Walker2d | ||

| TRPO | TRPO | ||||||

| LfND-noW | |||||||

| IL | |||||||

| LbA | |||||||

| POfD | |||||||

| LfND | |||||||

| PPO | PPO | ||||||

| LfND-noW | |||||||

| IL | |||||||

| LbA | |||||||

| POfD | |||||||

| LfND | |||||||

| Model | Methods | Environment | |||||

|---|---|---|---|---|---|---|---|

| HalfCheetah | Hopper | Humanoid | HumanoidStandup | InvertedPendulum | Walker2d | ||

| TRPO | TRPO | ||||||

| LfND-noW | |||||||

| IL | |||||||

| LbA | |||||||

| POfD | |||||||

| LfND | |||||||

| PPO | PPO | ||||||

| LfND-noW | |||||||

| IL | |||||||

| LbA | |||||||

| POfD | |||||||

| LfND | |||||||

4.2. Performance comparison

We evaluate the performance of compared methods by plotting the reward curve with the number of training iterations increases. The results with TRPO as the base model are presented in Figure 3, while the results with PPO as the base model are shown in Figure 4. It is worthy to note that when comparing with a specific RL base algorithm, we use the same algorithm to implement LfND and all other methods for fair comparison. In addition, we also show the average reward from noisy demonstrations in the figures, denoted by Expert.

From Figures 3 and 4, we can observe that no matter which base model is used, the proposed LfND approach outperforms the other methods in most cases. LfND can achieve higher reward with fewer training iterations in general. The IL method which imitates the demonstration without exploring environment is not effective in all environments, and typically cannot reach the average reward of demonstrations. This phenomenon implies that it is important to learn the policy by both exploiting the demonstration and exploring the environment, especially when the demonstrations are noisy. When comparing with the methods that only exploring the environment, i.e., TRPO or PPO, our method is always superior by utilizing the supervised information from demonstration. In contrast, the other two methods POfD and LbA, which also combine environment exploring and demonstration exploiting, are less robust. They outperforms TRPO or PPO in some environments but loss in the others, probably misled by the noisy demonstrations since they utilize all trajectories without distinction. The LfND-noW approach, which is a variant of the proposed approach without weighting scheme, can improve the performance quickly at the early stage but fail to achieve higher performance persistently. One possible reason is that at the early stage, the learned policy is quite poor, and thus most demonstrations are superior than it and can provide useful information to guide the training. After some iterations, when the policy getting more effective, the noisy demonstrations will hurt the performance. This also validates that a specific demonstration may contribute differently at different learning stages, and thus it is important to adaptively adjust the weights of demonstrations as in LfND. The results in Figures 3 and 4 are consistent in general, validating that the proposed strategy can be effectively incorporated with different reinforcement learning base models.

To further validate the superiority of our method, we also show the average and maximum reward achieved by different algorithms within 500 learning iterations. The mean results over 5 times repeated experiments with different random seeds are recorded. Results on average reward and maximum reward are reported in Tables 1 and 2, respectively. It can be observed that the proposed approach LfND outperforms the other methods in most cases with regard to both average reward and maximum reward. In other words, the LfND method can consistently keep a higher performance in the learning process, and also obtain a better final policy.

4.3. Study on different noise ratios

In this subsection, to further examine the robustness of the proposed method, we perform the experiments with different noise ratios of demonstrations. Specifically, we vary the noise ratio from 0 to 1 with interval of 0.1, where 0 ratio means there is no noise demonstration. For each ratio, we perform the experiments for five methods and record their average reward over 500 learning iterations. Then we calculate the improvement ratio of the proposed method over the other methods. Figure 5 shows the overall improvements of our method over other methods, one color for a method. Due to space limitation, we report the results with TRPO as the base model in three environments, i.e., HalfCheetah, Humanoid and Walker2d. It can be observed that the LfND approach always outperforms other compared methods with all ratios of noise demonstrations. With the increase of noise ratio, the superiority of LfND over the other methods becomes more significant. These results indicate that the proposed LfND method can effectively and robustly learn the policy from noisy demonstrations.

4.4. Examination on noise identification

In this subsection, we further perform experiments to examine whether potential utility of demonstrations are accurately estimated by our algorithm. Specifically, we firstly calculate the average weight of each demonstration instance during the learning process. Then we sort all the instances in increasing order of their average weights, and separate them into 10 groups with equal size. A smaller group ID implies smaller weights for the demonstrations in the group. After that, we fed the demonstrations from each group into the imitation learning (IL) algorithm to learn a policy, and record its performance on average reward, maximum reward and final reward during 500 iterations, respectively.

In Figure 6, the reward curves with different groups are plotted in HalfCheetah, Humanoid and Walker2d. The IL_Mean, the IL_Final and the IL_Max denote the mean, final, maximum rewards respectively in 10 groups. It can be observed that the higher weight of the demonstrations are used for imitation learning, the higher the performance.

Intuitively, high quality demonstration can improve the performance of imitation learning, which implies that the LfND approach can accurately distinguish the quality of different demonstrations by estimating its potential utility. In other words, these results also validate that the LfND approach can effectively emphasize more helpful demonstration while filter out noisy ones.

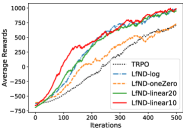

4.5. Study on different weighting strategies

As discussed previously, we may weight the instances in different forms. In this subsection, we further compare the results of our method with four different weighting strategies: oneZero denotes the one-zero form of weight as in Eq 1; linear10 and linear20 denote the linear forms of weight with hyperparameter of 10 and 20 respectively as in Eq 6; log denotes the logarithmic form of weight as in Eq 7.

In Figure 7, we plot the reward curves in HalfCheetah, Humanoid and Walker2d environments for six methods: PPO, TRPO, LfND-oneZero, LfND-linear10, LfND-linear20, LfND-log. Firstly it can be observed that LfND with all weighting strategies can achieve better performance than the base RL algorithms. LfND-log, LfND-linear10 and LfND-linear20 outperforms LfND-oneZero in all cases. One intuitive reason is that both linear weight form and log weight form can emphasize the more helpful demonstrations while filter out noisy ones. These results also validate that the superiority of LfND shown in previous experiments is from the proposed strategy of adaptively exploiting noisy demonstrations.

5. Conclusion

In this paper, we propose a new learning framework called LfND to learn policy from noisy demonstrations. With a adaptive weighting strategy to estimate the potential utility of each demonstration instance, the LfND method can learn the policy in a more effective and robust way by incorporating environment exploration and demonstration exploiting. Experiments results with multiple state-of-the-art reinforcement learning algorithms and different environments consistently demonstrate the superiority of the proposed approach. In the future, in addition to the expected gain of value functions, we plan to study other approaches for estimating the potential utility of demonstrations.

References

- Abbeel and Ng [2004] Pieter Abbeel and Andrew Y Ng. Apprenticeship learning via inverse reinforcement learning. In Proceedings of the twenty-first international conference on Machine learning, page 1. ACM, 2004.

- Atkeson and Schaal [1997] Christopher G Atkeson and Stefan Schaal. Robot learning from demonstration. In ICML, volume 97, pages 12–20. Citeseer, 1997.

- Baird III [1993] Leemon C Baird III. Advantage updating. Technical report, WRIGHT LAB WRIGHT-PATTERSON AFB OH, 1993.

- Brockman et al. [2016] Greg Brockman, Vicki Cheung, Ludwig Pettersson, Jonas Schneider, John Schulman, Jie Tang, and Wojciech Zaremba. Openai gym. arXiv preprint arXiv:1606.01540, 2016.

- Brys et al. [2015] Tim Brys, Anna Harutyunyan, Halit Bener Suay, Sonia Chernova, Matthew E Taylor, and Ann Nowé. Reinforcement learning from demonstration through shaping. In Twenty-Fourth International Joint Conference on Artificial Intelligence, 2015.

- Chemali and Lazaric [2015] Jessica Chemali and Alessandro Lazaric. Direct policy iteration with demonstrations. In Twenty-Fourth International Joint Conference on Artificial Intelligence, 2015.

- Gao et al. [2018] Yang Gao, Ji Lin, Fisher Yu, Sergey Levine, Trevor Darrell, et al. Reinforcement learning from imperfect demonstrations. arXiv preprint arXiv:1802.05313, 2018.

- Hessel et al. [2018] Matteo Hessel, Joseph Modayil, Hado Van Hasselt, Tom Schaul, Georg Ostrovski, Will Dabney, Dan Horgan, Bilal Piot, Mohammad Azar, and David Silver. Rainbow: Combining improvements in deep reinforcement learning. In Thirty-Second AAAI Conference on Artificial Intelligence, 2018.

- Hester et al. [2018] Todd Hester, Matej Vecerik, Olivier Pietquin, Marc Lanctot, Tom Schaul, Bilal Piot, Dan Horgan, John Quan, Andrew Sendonaris, Ian Osband, et al. Deep q-learning from demonstrations. In Thirty-Second AAAI Conference on Artificial Intelligence, 2018.

- Ho and Ermon [2016] Jonathan Ho and Stefano Ermon. Generative adversarial imitation learning. In Advances in neural information processing systems, pages 4565–4573, 2016.

- Kaelbling et al. [1996] Leslie Pack Kaelbling, Michael L Littman, and Andrew W Moore. Reinforcement learning: A survey. Journal of artificial intelligence research, 4:237–285, 1996.

- Kang et al. [2018] Bingyi Kang, Zequn Jie, and Jiashi Feng. Policy optimization with demonstrations. In International Conference on Machine Learning, pages 2474–2483, 2018.

- Kim et al. [2013] Beomjoon Kim, Amir-massoud Farahmand, Joelle Pineau, and Doina Precup. Learning from limited demonstrations. In Advances in Neural Information Processing Systems, pages 2859–2867, 2013.

- Ng et al. [2000] Andrew Y Ng, Stuart J Russell, et al. Algorithms for inverse reinforcement learning. In Icml, volume 1, page 2, 2000.

- Piot et al. [2014] Bilal Piot, Matthieu Geist, and Olivier Pietquin. Boosted bellman residual minimization handling expert demonstrations. In Joint European Conference on Machine Learning and Knowledge Discovery in Databases, pages 549–564. Springer, 2014.

- Schaal [1997] Stefan Schaal. Learning from demonstration. In Advances in neural information processing systems, pages 1040–1046, 1997.

- Schulman et al. [2015] John Schulman, Sergey Levine, Pieter Abbeel, Michael Jordan, and Philipp Moritz. Trust region policy optimization. In International conference on machine learning, pages 1889–1897, 2015.

- Schulman et al. [2017] John Schulman, Filip Wolski, Prafulla Dhariwal, Alec Radford, and Oleg Klimov. Proximal policy optimization algorithms. arXiv preprint arXiv:1707.06347, 2017.

- Silver et al. [2016] David Silver, Aja Huang, Chris J Maddison, Arthur Guez, Laurent Sifre, George Van Den Driessche, Julian Schrittwieser, Ioannis Antonoglou, Veda Panneershelvam, Marc Lanctot, et al. Mastering the game of go with deep neural networks and tree search. nature, 529(7587):484, 2016.

- Silver et al. [2017] David Silver, Julian Schrittwieser, Karen Simonyan, Ioannis Antonoglou, Aja Huang, Arthur Guez, Thomas Hubert, Lucas Baker, Matthew Lai, Adrian Bolton, et al. Mastering the game of go without human knowledge. Nature, 550(7676):354, 2017.

- Suay et al. [2016] Halit Bener Suay, Tim Brys, Matthew E Taylor, and Sonia Chernova. Learning from demonstration for shaping through inverse reinforcement learning. In Proceedings of the 2016 International Conference on Autonomous Agents & Multiagent Systems, pages 429–437. International Foundation for Autonomous Agents and Multiagent Systems, 2016.

- Subramanian et al. [2016] Kaushik Subramanian, Charles L Isbell Jr, and Andrea L Thomaz. Exploration from demonstration for interactive reinforcement learning. In Proceedings of the 2016 International Conference on Autonomous Agents & Multiagent Systems, pages 447–456. International Foundation for Autonomous Agents and Multiagent Systems, 2016.

- Szepesvári [2010] Csaba Szepesvári. Algorithms for reinforcement learning. Synthesis lectures on artificial intelligence and machine learning, 4(1):1–103, 2010.

- Todorov et al. [2012] Emanuel Todorov, Tom Erez, and Yuval Tassa. Mujoco: A physics engine for model-based control. In 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems, pages 5026–5033. IEEE, 2012.

- Wang et al. [2015] Ziyu Wang, Tom Schaul, Matteo Hessel, Hado Van Hasselt, Marc Lanctot, and Nando De Freitas. Dueling network architectures for deep reinforcement learning. arXiv preprint arXiv:1511.06581, 2015.

- Wu et al. [2019] Yueh-Hua Wu, Nontawat Charoenphakdee, Han Bao, Voot Tangkaratt, and Masashi Sugiyama. Imitation learning from imperfect demonstration. arXiv preprint arXiv:1901.09387, 2019.

- Ziebart et al. [2008] Brian D Ziebart, Andrew Maas, J Andrew Bagnell, and Anind K Dey. Maximum entropy inverse reinforcement learning. 2008.