Regret Guarantees for Model-Based Reinforcement Learning with Long-Term Average Constraints

Abstract

We consider the problem of constrained Markov Decision Process (CMDP) where an agent interacts with an ergodic Markov Decision Process. At every interaction, the agent obtains a reward and incurs costs. The agent aims to maximize the long-term average reward while simultaneously keeping the long-term average costs lower than a certain threshold. In this paper, we propose CMDP-PSRL, a posterior sampling based algorithm using which the agent can learn optimal policies to interact with the CMDP. We show that with the assumption of slackness, characterized by , the optimization problem is feasible for the sampled MDPs. Further, for MDP with states, actions, and mixing time , we prove that following CMDP-PSRL algorithm, the agent can bound the regret of not accumulating rewards from an optimal policy by . Further, we show that the violations for any of the constraints is also bounded by . To the best of our knowledge, this is the first work that obtains a regret bounds for ergodic MDPs with long-term average constraints using a posterior sampling method.

1 Introduction

Consider a wireless sensor network where the devices aim to update a server with sensor values. At time , the device can choose to send a packet to obtain a reward of unit or to queue the packet and obtain reward. However, communicating a packet results in power consumption. At time , if the wireless channel condition, , is weak and the device chooses to send a packet, the resulting instantaneous power consumption, , is high. The goal is to send as many packets as possible while keep the average power consumption, , within some limit, say . This environment has state as the channel condition and queue length at time . To limit the power consumption, the agent may choose to send packets when the channel condition is good or when the queue length grows beyond a certain threshold. The agent aims to learn the policies in an online manner which requires efficiently balancing exploration of state-space and exploitation of the estimated system dynamics [Singh et al., 2020].

Similar to the example above, many applications require to keep some costs low while simultaneously maximizing the rewards [Altman, 1999]. Owing to the importance of this problem, in this paper, we consider the problem of constrained Markov Decision Processes (CMDP). We aim to develop a reinforcement learning algorithm following which an agent can bound the constraint violations and the regret in obtaining the optimal reward to .

The problem setup, where the system dynamics are known, is extensively studied [Altman, 1999]. For a constrained setup, the optimal policy is possibly stochastic [Altman, 1999, Puterman, 2014]. In the domain where the agent learns the system dynamics and aims to learn good policies online, there has been work where to show asymptotic convergence to optimal policies [Gattami et al., 2021], or even provide regret guarantees when the MDP is episodic [Zheng and Ratliff, 2020, Ding et al., 2021]. Recently, [Singh et al., 2020] considered the problem of online optimization of infinite-horizon communicating Markov Decision Processes with long-term average constraints. They provide an optimism based algorithm where confidence bounds on each transition probabilities is constructed. Using this, they obtain a regret bound of 111 hides the logarithmic terms. Additionally, finding the optimistic policy is a computationally intensive task as the number of optimization variables become for MDP with states and actions.

In this paper, we consider the reinforcement learning an infinite-horizon ergodic MDP [Tarbouriech and Lazaric, 2019, Gattami et al., 2021] with long-term average constraints. We use deviation bounds [Jaksch et al., 2010] and use a Bellman error analysis to bound the reward regret of the MDP as . Additionally, we also bound the constraint violations as . We propose a posterior sampling based algorithm where we sample the transition dynamics using a Dirichlet distribution [Osband et al., 2013], which achieves this regret bound.

Unlike optimistic algorithms, the sampled MDP may not be infeasible for the constrained optimization. Hence, we consider slackness characterized by Slater’s parameter Ding et al. [2020], which allows us to prove that the optimization problem is feasible even with the sampled MDPs. Posterior sampling also helps to reduces the optimization variables, to find only the optimal policy for the sampled MDP, to only variables. Finally, we provide numerical examples where the algorithm converges to the calculated optimal policies. To the best of our knowledge, this is the first work to obtain regret guarantees for the infinite horizon long-term average constraint setup with posterior sampling.

2 Related Work

Stochastic Optimization using Markov Decision Processes has very rich roots [Howard, 1960]. There have been work in understanding convergence of the algorithm to find optimal policies for known MDPs [Bertsekas and Tsitsiklis, 1996, Altman, 1999]. Also, when the MDP is not known, there are algorithms with asymptotic guarantees for learning the optimal policies [Watkins and Dayan, 1992] which maximize an objective without any constraints. Recent algorithms even achieve finite time near-optimal regret bounds with respect to the number of interactions with the environment [Jaksch et al., 2010, Osband et al., 2013, Agrawal and Jia, 2017, Jin et al., 2018]. Jaksch et al. [2010] uses the optimism principle for minimizing regret for weakly communicating infinite horizon MDPs with bounded diameter. Osband et al. [2013] extended the analysis of Jaksch et al. [2010] to posterior sampling for episodic MDPs and bounded the Bayesian regret and further improved the regret bounds Osband and Van Roy [2017]. Agrawal and Jia [2017] uses a posterior sampling based approach and obtains a frequentist regret for the infinite horizon MDPs with bounded diameter.

In many reinforcement learning settings, the agent not only wants to maximize the rewards but also satisfy certain cost constraints [Altman, 1999]. Early works in this area were pioneered by [Altman and Schwartz, 1991]. They provided an algorithm which combined forced explorations and following policies optimized on empirical estimates to obtain an asymptotic convergence. [Borkar, 2005] studied the constrained RL problem using actor-critic and a two time-scale framework [Borkar, 1997] to obtain asymptotic performance guarantees. Very recently, [Gattami et al., 2021] analyzed the asymptotic performance for Lagrangian based algorithms for infinite-horizon long-term average constraints.

Inspired by the finite-time performance analysis of reinforcement learning algorithm for unconstrained problems, there has been a significant thrust in understanding the finite-time performances of constrained MDP algorithms. [Zheng and Ratliff, 2020] considered an episodic CMDP and use an optimism based algorithm to bound the constraint violation as with high probability. [Kalagarla et al., 2020] also considered the episodic setup to obtain PAC-style bound for an optimism based algorithm. [Ding et al., 2021] considered the setup of -episode length episodic CMDPs with -dimensional linear function approximation to bound the constraint violations as by mixing the optimal policy with an exploration policy. [Efroni et al., 2020] proposes a linear-programming and primal-dual policy optimization algorithm to bound the regret as . [Qiu et al., 2020] proposed an algorithm which obtains a regret bound of for the problem of adversarial stochastic shortest path. Compared to these works, we focus on setting with infinite horizon long-term average constraints.

After developing a better understanding of the policy gradient algorithms [Agarwal et al., 2020], there has been theoretical work in the area of model-free policy gradient algorithms for constrained MDP and safe reinforcement learning as well. [Xu et al., 2020] consider an infinite horizon discounted setup with constraints and obtain global convergence using policy gradient algorithms. [Ding et al., 2020] also considers an infinite horizon discounted setup. They use a natural policy gradient to update the primal variable and sub-gradient descent to update the dual variable.

Recently [Singh et al., 2020] considered the setup of infinite-horizon CMDPs with long-term average constraints and obtain a regret bound of using an optimism based algorithm and forced explorations. We consider a similar setting with ergodic CMDP and propose a posterior sampling based algorithm to bound the regret as using explorations assisted by the ergodicity of the MDP.

3 Problem Formulation

We consider an infinite horizon discounted Markov decision process (MDP) , defined by the tuple . denotes a finite set of state space with , and denotes a finite set of actions with . denotes the probability of transitioning to state from state after taking action . denotes the average reward in state after taking action . denotes average cost incurred by the agent for constraint after taking action in state . We use a stochastic policy , such that given state , is the probability of selecting action .

Note that the a policy induces a Markov chain over the state space of the MDP. Pertaining to the Markov chains generated by the policies for , we now define the mixing time of MDP.

Definition 1 (Mixing Time).

Consider the Markov Chain induced by the policy on the MDP . Let be a random variable that denotes the first time step when this Markov Chain enters state starting from state . Then, the mixing time of the MDP is defined as:

| (1) |

Similar to Singh et al. [2020], let be the step state distribution starting from state following policy and be the steady-state state distribution generated by policy .

Our first assumption on the MDP allows any policy to reach any state starting from any state , and to converge to a steady state. We formalize the result in the following assumption:

Assumption 1.

The MDP is ergodic, or with being the long-term steady state distribution induced by policy , and and are problem specific constants. And, the mixing time of the MDP is finite or .

After discussing the transition dynamics of the system, we move to the rewards and costs of the MDP .

Assumption 2.

The reward function and the costs are known to the agent.

We note that in most of the problems, rewards are engineered. Hence, Assumption 2 is justified in many setups. However, the system dynamics are stochastic and typically not known.

Following a policy , the expected long-term average cost are given by . Also, we denote the average long-term reward using . Formally, we have:

| (2) | ||||

| (3) | ||||

For brevity, in the rest of the paper, will be denoted as , where . Both, and satisfy the following form of Bellman equation:

| (4) |

| (5) | ||||

| (6) |

where is the bias for reward and is the bias for cost .

The objective is find a policy which is the solution of the following optimization problem.

| (7) | ||||

| (8) |

where are the bounds on the average costs which the agent needs to satisfy.

After formulating the optimization problem, we now state our next assumption characterizing the slackness.

Assumption 3.

There exists a policy , and one constant such that

| (9) |

The slackness assumption is mild because, in various applications some a priori knowledge about a strictly feasible policy is available. Hence, this assumption is again a standard assumption in the constrained RL literature Efroni et al. [2020], Ding et al. [2021, 2020]. is referred as Slater’s constant. Ding et al. [2021] assumes that the Slater’s constant is known.

Any online algorithm starting with no prior knowledge will require to obtain estimates of transition probabilities and obtain reward and costs for each state action pair. Initially, when algorithm does not have good estimates of the model, it accumulates a regret as well as violates constraints as it does not know the optimal policy. We define reward regret as the difference between the cumulative reward obtained vs the expected rewards from running the optimal policy for steps, or

| (10) |

Additionally, we define constraint regret for each constraint as the gap between the cumulative cost incurred and constraint bounds, or

| (11) |

where .

In the following section, we present a model-based algorithm to obtain this policy , and reward regret and the constraint regret accumulated by the algorithm.

4 The CMDP-PSRL Algorithm

For infinite horizon optimization problems (or ), we can use steady state distribution of the state to obtain expected long-term rewards or costs [Puterman, 2014]. We use

| (12) | ||||

| (13) |

where is the steady state joint distribution of the state and actions under policy .

Based on the above formulation, we can solve the joint optimization problem of following form

| (14) |

with the following set of constraints,

| (15) | ||||

| (16) | ||||

| (17) |

for all and . Equation (15) denotes the constraint on the transition structure for the underlying Markov Process. Equation (16) ensures that the solution is a valid probability distribution. Finally, Equation (17) are the constraints for the constrained MDP setup which the policy must satisfy.

Note that arguments in Equation (14) are linear, and the constraints in Equation (15) and Equation (16) are linear, this is a linear programming problem. Since convex optimization problems can be solved in polynomial time [Potra and Wright, 2000], we can use standard approaches to solve Equation (14). After solving the optimization problem, we obtain the optimal policy from the obtained steady state distribution as,

| (18) |

Since we assumed that the CMDP is ergodic, the Markov Chain induced from policy is ergodic. Hence, every state is reachable following the policy , we have and Equation (18) is defined for all states .

Further, since we assumed that the induced Markov Chain is irreducible for all stationary policies, we assume Dirichlet distribution as prior for the state transition probability . Dirichlet distribution is also used as a standard prior in literature [Agrawal and Jia, 2017, Osband et al., 2013]. Further, there exists a steady state distribution when the transition probability is sampled from a Dirichlet distribution [Agarwal et al., 2022, Proposition 1].

The complete constrained posterior sampling based algorithm, which we name CMDP-PSRL, is described in Algorithm 1. The algorithm proceeds in epochs, and a new epoch is started whenever the visitation count in epoch , , is at least the total visitations before episode , , for any state action pair (Line 8). In Line 9, we sample transition probabilities using the updated posterior and in Line 10, we update the policy using the optimization problem specified in Equation (14)-(17) for . Further, if the sampled MDP does not satisfy the cost constraint in Equation (17), we ignore that constraint 222We will show in the analysis that cumulative constraint violations are still bounded. for that epoch.

5 Analysis

We first obtain the feasibility of the optimization problem Equation (14)-(17) for the sampled MDP. We note that we assumed slackness in the true MDP with transition probabilities . Hence, if the the sampled MDP is close to the true MDP, the deviation in the cost will be less and there will be a policy which satisfies the constraint in Equation (17). We formalize this intuition in the following result.

Lemma 1.

Proof Outline.

We consider the policy which satisfies the Slater’s condition in Equation (9). We then consider the Bellman error of taking one step in MDP with transition probabilities and then following policy on the MDP with transition probabilities . Now, using [Agarwal et al., 2022, Lemma 1] relating the average costs following policy with and (, and for all respectively) with the Bellman error gives the required result. The complete proof is provided in the supplementary material. ∎

After obtaining a feasible policy maximizing rewards for the sampled MDP, we now quantify is regret. We note that when optimizing for long-term average rewards and long-term average constraints, we want to simultaneously minimize the reward regret and the constraint regrets. Further, if we know the optimal policy before hand, the deviations resulting from the stochasticity of the process can still result in some constraint violations. Also, since we sample a MDP, the policy which is feasible for the MDP may violate constraints on the true MDP. We want to bound this gap between costs for the two MDPs as well.

We aim to quantify the regret from (R.1) deviation of long-term average rewards of the optimal policy because of incorrect knowledge of the MDP (), (R.2) deviation of the long-term average rewards generated by the optimal policy for the sampled MDP on the sampled MDP and the long-term average rewards generated by the optimal policy for the sampled MDP on the true MDP (), and (R.3) deviation of the expected rewards from following the optimal policy of the sampled MDP ().

Similarly, the constraint violations for each are incurred from (C.1) deviation of long-term average rewards of the optimal policy because of incorrect knowledge of the MDP (), (C.2) deviation of the long-term average costs generated by the optimal policy for the sampled MDP on the sampled MDP and the long-term average costs generated by the optimal policy for the sampled MDP on the true MDP (), and (C.3) deviation of the expected costs from following the optimal policy of the sampled MDP ().

We now prove the regret bounds for Algorithm 1. We first give the high level ideas used in obtaining the bounds on regret. We divide the regret into regret incurred in each epoch . Then, we use the posterior sampling lemma [Osband et al., 2013, Lemma 1] to obtain the equivalence between the long-term average rewards of the true MDP and the long-term average rewards for the optimal value of the sampled MDP . This step allows us to deal with the regret from (R.1). Then we use the Bellman error formulation to relate average rewards for the policy on and [Agarwal et al., 2022]. Combining this with Azuma’s concentration inequality for Martingales allows us to bound the regret from (R.2) and (R.3).

Bounding constraint violations requires similar considerations for (C.2) and (C.3). Further, (C.1) becomes zero if Equation (17) is feasible for the sampled MDP. However, if Equation (17) is not feasible, the cost may be as high as (). We bound the violations by bounding the time-steps for which the optimal policy for unconstrained optimization runs.

To obtain bounds on the regret, we first note that the total number of epochs, , for which the Algorithm 1 runs is bounded by from [Jaksch et al., 2010, Proposition 1].

We formally state the regret bounds and constraint violation bounds in Theorem 1 which we prove rigorously in the supplementary material.

Theorem 1.

The expected reward regret , and the expected constraint regret of Algorithm 1 are bounded as

Proof Outline.

We break the cumulative regret into the regret incurred in each epoch . This gives us:

| (20) | ||||

| (21) | ||||

| (22) | ||||

| (23) |

The Equation (22) follows from [Osband et al., 2013, Lemma 1] for regret each each epoch of Equation (21). Proceeding from Equation (23) requires additional consideration. Typical proof techniques to bound regret requires a bounded bias-span () which may be large for the sampled MDP. For this, we consider an MDP for the transition probability satisfies

| (24) | ||||

where is the estimated transition probability given at time . We now have,

| (25) |

The first term of Equation (25) is bounded by bounding the expected Bellman error. The second term is converted to a Martingale sequence by conditioning it on the state and is bounded using the ergodicity of the MDP and Azuma’s concentration inequality. The complete proof on bounding the regret is provided in the supplementary material.

Regarding the constraint violations, for each , we want to bound,

| (26) |

We divide the constraint violation regret into regret over epochs as well. Now, for each epoch, we know that the constraint is satisfied by the policy for the sampled MDP. This allows us to obtain:

| (27) | ||||

| (28) | ||||

| (29) | |||

| (30) |

The first term in Equation (28) denotes the difference between the incurred costs and the expected costs from following policy . The second term denotes the difference between the expected costs from policy on the true MDP and on the sampled MDP. The third terms denotes the violations of the policy which would be zero if the policy satisfies constraint Eqution (17) for the sampled MDP. Equation (29) is obtained from the fact and Equation (28) is obtained from the fact .

The first and second term in Equation (28) are bounded similar to Equation (23), and we focus our attention to the third term. If the optimization problem in Equation (14)-(17) is feasible, the term and if the optimization equation is infeasible, the term is upper bounded by as and . Hence, we get:

| (31) | |||

| (32) | |||

| (33) | |||

| (34) | |||

| (35) |

where Equation (31) follows from the fact that total violations are less than the cumulative violations are considered per epoch. Equation (33) follows from Lemma 1 as when and Equation (35) comes from [Jaksch et al., 2010, Proposition 1]. ∎

We note that the fundamental setup of unconstrained optimization (), the bound is loose compared to that of UCRL2 algorithm Jaksch et al. [2010]. This is because we use a stochastic policy instead of a deterministic policy. Recall that the optimal policy for CMDP setup is possibly stochastic Altman [1999].

6 Evaluation of the Proposed Algorithm

To validate the performance of the proposed CDMP-PSRL algorithm and the understanding of our analysis, we run the simulation on the flow and service control in a single-serve queue, which is introduced in [Altman and Schwartz, 1991]. A discrete-time single-server queue with a buffer of finite size is considered in this case. The number of the customer waiting in the queue is considered as the state in this problem and thus . Two kinds of the actions, service and flow, are considered in the problem and control the number of customers together. The action space for service is a finite subset in , where . Given a specific service action , the service a customer is successfully finished with the probability . If the service is successful, the length of the queue will reduce by 1. Similarly, the space for flow is also a finite subsection in . In contrast to the service action, flow action will increase the queue by with probability if the specific flow action is given. Also, we assume that there is no customer arriving when the queue is full. The overall action space is the Cartesian product of the and . According to the service and flow probability, the transition probability can be computed and is given in the Table 1.

| Current State | |||

|---|---|---|---|

For the reward function as and the constraints for service and flow as and , respectively, and stationary policies for service and flow as and , respectively, the problem can be defined as

| (36) |

According to the discussion in [Altman and Schwartz, 1991], we define the reward function as , which is an decreasing function only dependent on the state. It is reasonable to give higher reward when the number of customer waiting in the queue is small. For the constraint function, we define and , which are dependent only on service and flow action, respectively. Higher constraint value is given if the probability for the service and flow are low and high, respectively.

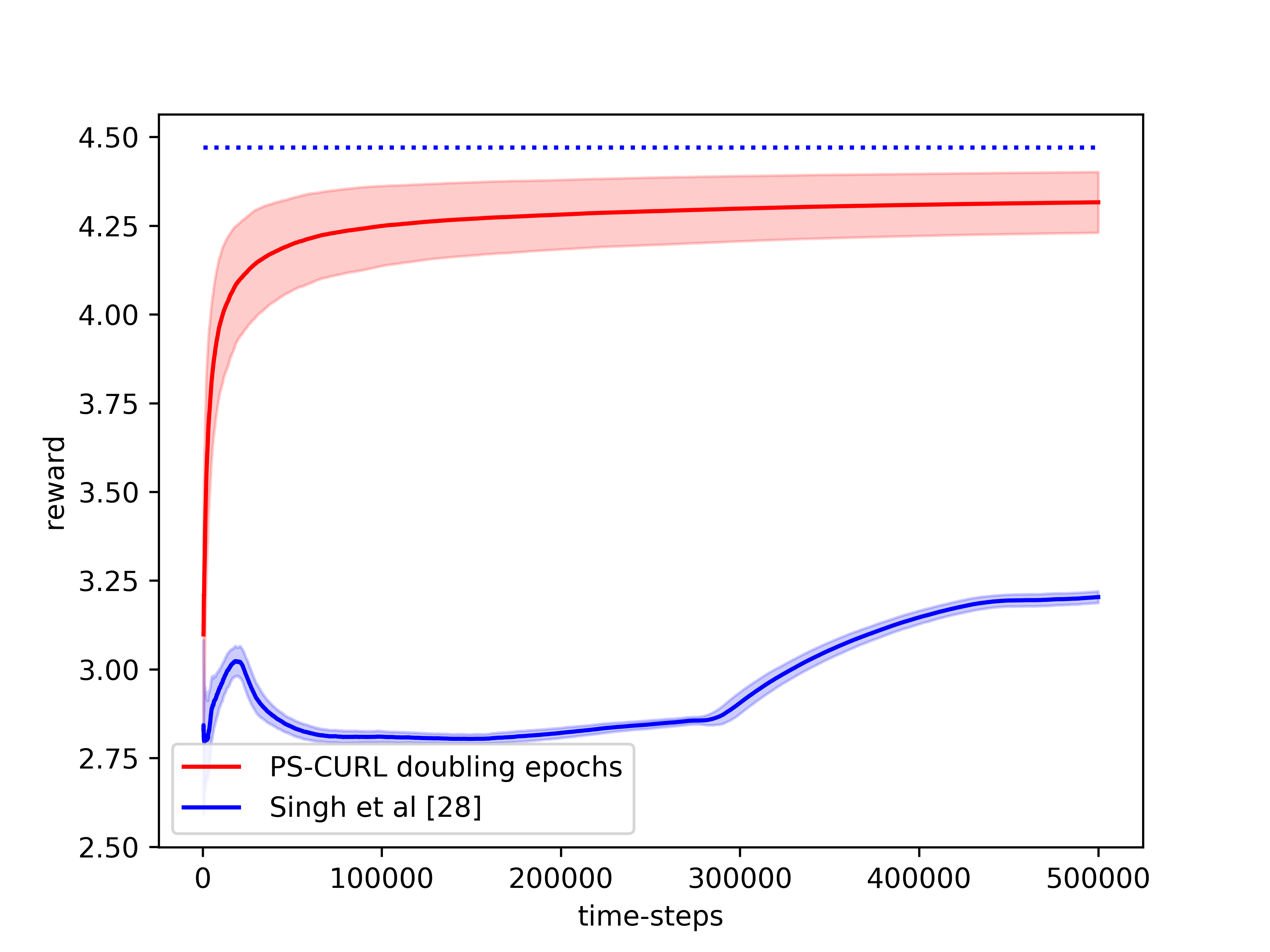

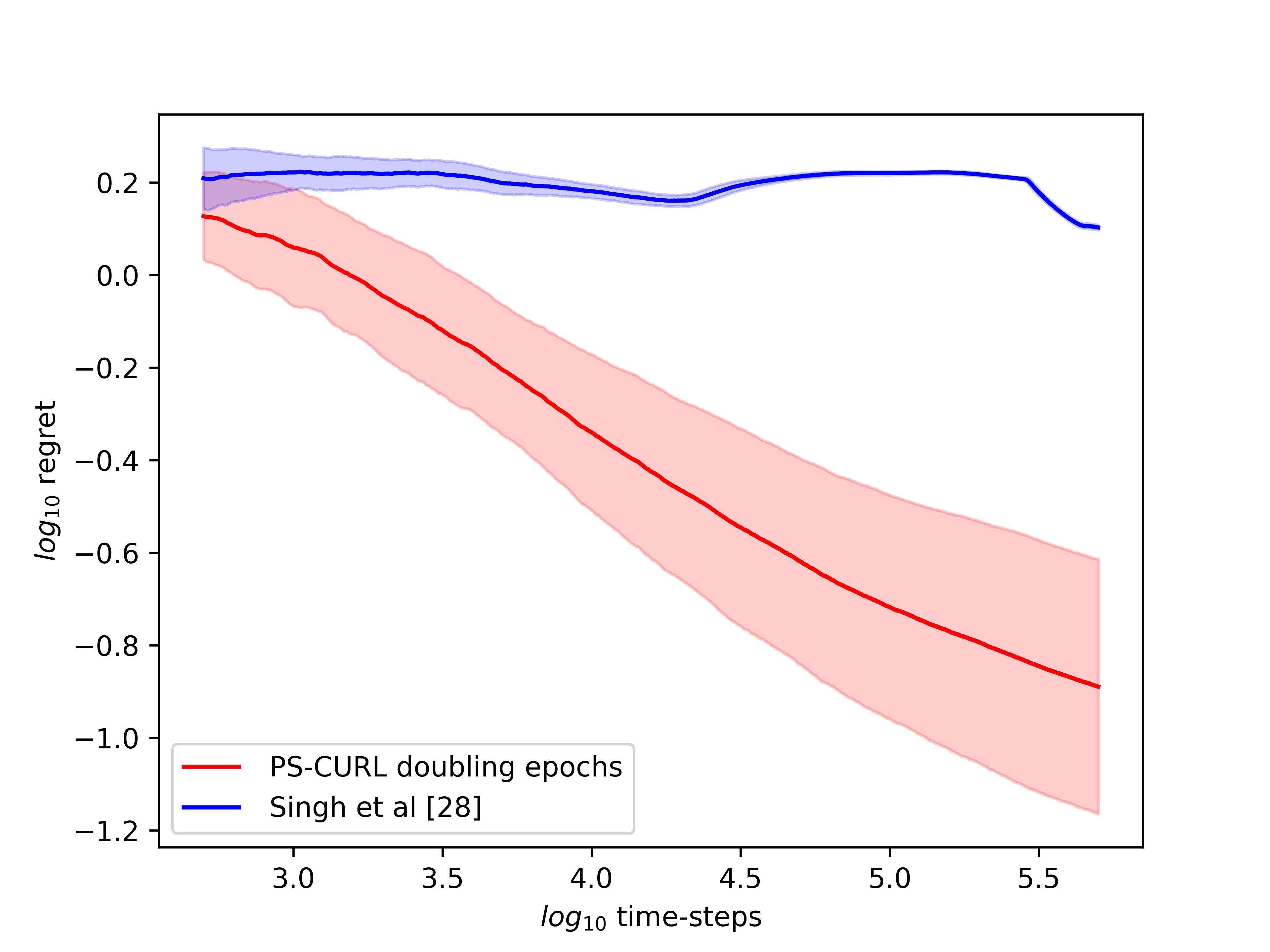

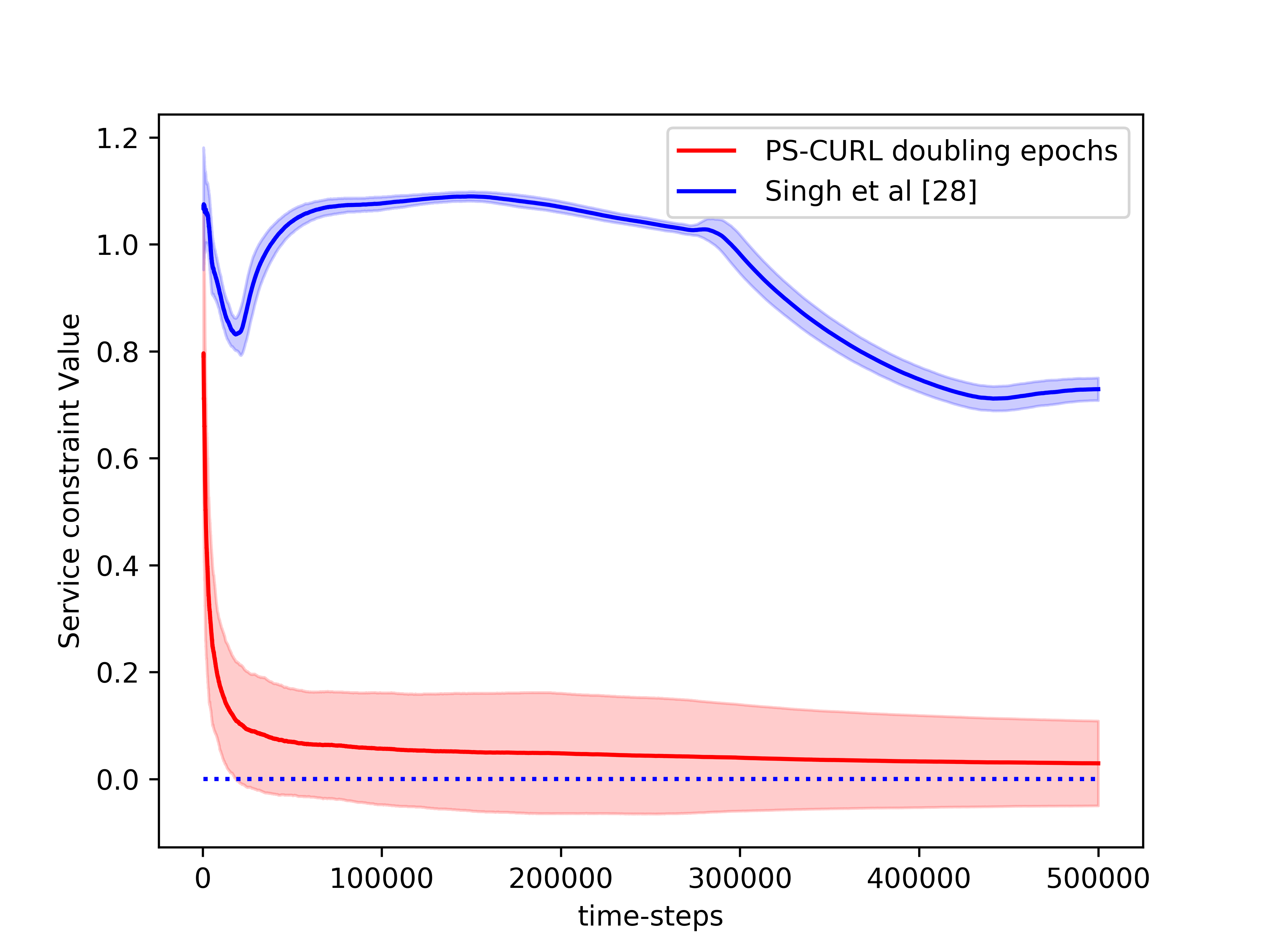

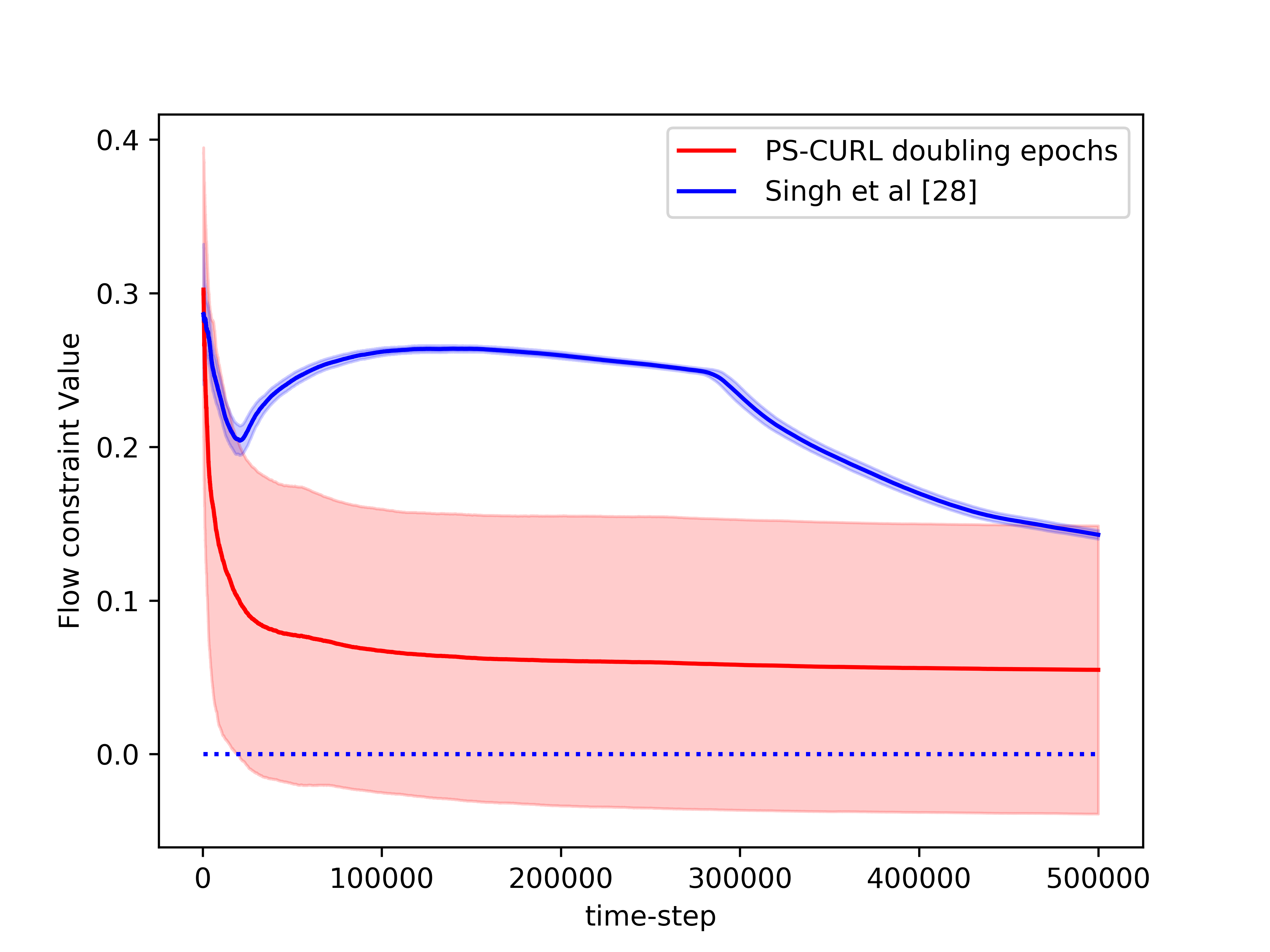

In the simulation, the length of the buffer is set as . The service action space is set as and the flow action space is set as . We use the length of horizon and run independent simulations of the proposed CMDP-PSRL algorithm. We also plot the standard deviation around the mean value in the shadow to show the random error. In order to compare this result to the optimal, we assume that the full information of the transition dynamics is known and then use Linear Programming to solve the problem. The optimal cumulative reward from LP is shown to be . The reward performance of the CMDP-PSRL algorithm is shown in the Figure 1 where we observe that the reward converges towards the optimal value. We also plot the constraint violations in Figure 2. The service and flow constraints converge to 0 as expected. We note that the reward of the proposed CMDP-PSRL algorithm becomes closer the optimal reward as the algorithm proceeds, and to further increase the reward, it does not violates the constraint.

We also compared our algorithm against the optimistic algorithm of Singh et al. [2020]. We note that their algorithm performs significantly worse compared to our algorithm. We account this poor performance on two accounts. An optimistic algorithm does not find a policy for transition probabilities close to for significantly large time. The other issue is because they consider confidence interval for each . This also shows in their analysis and hence they obtain a regret bound. Further, the optimization problem takes a significantly more time to solve for optimistic setup. However, the variance of their optimistic algorithm is significantly lower compared to the variance of our CMDP-PSRL algorithm.

7 Conclusion

This paper, considers the setup of reinforcement learning in ergodic infinite-horizon constrained Markov Decision Processes with long-term average constraint. We propose a posterior sampling based algorithm, CMDP-PSRL, which proceeds in epochs. At every epoch, we sample a new CMDP and generate a solution for the constraint optimization problem. A major advantage of the posterior sampling based algorithm over an optimistic approach is, that it reduces the complexity of solving for the optimal solution of the constraint problem. We also study the proposed CMDP-PSRL algorithm from regret perspective. We bound the regret of the reward collected by the CMDP-PSRL algorithm as . Further, we bound the gap between the long-term average costs of the sampled MDP and the true MDP to bound the constraint violations as . Finally, we evaluate the proposed CMDP-PSRL algorithm on a flow control problem for single queue and show that the proposed algorithm performs empirically well. This paper is the first work which obtains a regret bounds for ergodic MDPs with long-term average constraints using a posterior sampling algorithm. A model-free algorithm that obtains similar regret bounds for infinite horizon long-term average constraints remains an open problem.

References

- Agarwal et al. [2020] Alekh Agarwal, Sham M Kakade, Jason D Lee, and Gaurav Mahajan. Optimality and approximation with policy gradient methods in markov decision processes. In Jacob Abernethy and Shivani Agarwal, editors, Proceedings of Thirty Third Conference on Learning Theory, volume 125 of Proceedings of Machine Learning Research, pages 64–66. PMLR, 09–12 Jul 2020. URL http://proceedings.mlr.press/v125/agarwal20a.html.

- Agarwal et al. [2022] Mridul Agarwal, Vaneet Aggarwal, and Tian Lan. Multi-objective reinforcement learning with non-linear scalarization. In Proceedings of the 21st International Conference on Autonomous Agents and Multiagent Systems, pages 9–17, 2022.

- Agrawal and Jia [2017] Shipra Agrawal and Randy Jia. Optimistic posterior sampling for reinforcement learning: worst-case regret bounds. In Advances in Neural Information Processing Systems, pages 1184–1194, 2017.

- Altman and Schwartz [1991] E. Altman and A. Schwartz. Adaptive control of constrained markov chains. IEEE Transactions on Automatic Control, 36(4):454–462, 1991. 10.1109/9.75103.

- Altman [1999] Eitan Altman. Constrained Markov decision processes, volume 7. CRC Press, 1999.

- Bertsekas and Tsitsiklis [1996] D. P. Bertsekas and J. N. Tsitsiklis. Neuro-dynamic programming. Athena Scientific, Belmont, MA, 1996.

- Borkar [1997] Vivek S. Borkar. Stochastic approximation with two time scales. Systems & Control Letters, 29(5):291–294, 1997. ISSN 0167-6911. https://doi.org/10.1016/S0167-6911(97)90015-3. URL https://www.sciencedirect.com/science/article/pii/S0167691197900153.

- Borkar [2005] V.S. Borkar. An actor-critic algorithm for constrained markov decision processes. Systems & Control Letters, 54(3):207–213, 2005. ISSN 0167-6911. https://doi.org/10.1016/j.sysconle.2004.08.007. URL https://www.sciencedirect.com/science/article/pii/S0167691104001276.

- Ding et al. [2020] Dongsheng Ding, Kaiqing Zhang, Tamer Basar, and Mihailo Jovanovic. Natural policy gradient primal-dual method for constrained markov decision processes. Advances in Neural Information Processing Systems, 33, 2020.

- Ding et al. [2021] Dongsheng Ding, Xiaohan Wei, Zhuoran Yang, Zhaoran Wang, and Mihailo Jovanovic. Provably efficient safe exploration via primal-dual policy optimization. In International Conference on Artificial Intelligence and Statistics, pages 3304–3312. PMLR, 2021.

- Efroni et al. [2020] Yonathan Efroni, Shie Mannor, and Matteo Pirotta. Exploration-exploitation in constrained mdps. arXiv preprint arXiv:2003.02189, 2020.

- Gattami et al. [2021] Ather Gattami, Qinbo Bai, and Vaneet Aggarwal. Reinforcement learning for constrained markov decision processes. In International Conference on Artificial Intelligence and Statistics, pages 2656–2664. PMLR, 2021.

- Howard [1960] R. A. Howard. Dynamic Programming and Markov Processes. MIT Press, Cambridge, MA, 1960.

- Jaksch et al. [2010] Thomas Jaksch, Ronald Ortner, and Peter Auer. Near-optimal regret bounds for reinforcement learning. Journal of Machine Learning Research, 11(Apr):1563–1600, 2010.

- Jin et al. [2018] Chi Jin, Zeyuan Allen-Zhu, Sebastien Bubeck, and Michael I Jordan. Is q-learning provably efficient? In S. Bengio, H. Wallach, H. Larochelle, K. Grauman, N. Cesa-Bianchi, and R. Garnett, editors, Advances in Neural Information Processing Systems, volume 31. Curran Associates, Inc., 2018. URL https://proceedings.neurips.cc/paper/2018/file/d3b1fb02964aa64e257f9f26a31f72cf-Paper.pdf.

- Kalagarla et al. [2020] Krishna C Kalagarla, Rahul Jain, and Pierluigi Nuzzo. A sample-efficient algorithm for episodic finite-horizon mdp with constraints. arXiv preprint arXiv:2009.11348, 2020.

- Osband and Van Roy [2017] Ian Osband and Benjamin Van Roy. Why is posterior sampling better than optimism for reinforcement learning? In International conference on machine learning, pages 2701–2710. PMLR, 2017.

- Osband et al. [2013] Ian Osband, Daniel Russo, and Benjamin Van Roy. (more) efficient reinforcement learning via posterior sampling. In Advances in Neural Information Processing Systems, pages 3003–3011, 2013.

- Potra and Wright [2000] Florian A Potra and Stephen J Wright. Interior-point methods. Journal of Computational and Applied Mathematics, 124(1-2):281–302, 2000.

- Puterman [2014] Martin L Puterman. Markov decision processes: discrete stochastic dynamic programming. John Wiley & Sons, 2014.

- Qiu et al. [2020] Shuang Qiu, Xiaohan Wei, Zhuoran Yang, Jieping Ye, and Zhaoran Wang. Upper confidence primal-dual reinforcement learning for cmdp with adversarial loss. In H. Larochelle, M. Ranzato, R. Hadsell, M. F. Balcan, and H. Lin, editors, Advances in Neural Information Processing Systems, volume 33, pages 15277–15287. Curran Associates, Inc., 2020. URL https://proceedings.neurips.cc/paper/2020/file/ae95296e27d7f695f891cd26b4f37078-Paper.pdf.

- Singh et al. [2020] Rahul Singh, Abhishek Gupta, and Ness B Shroff. Learning in markov decision processes under constraints. arXiv preprint arXiv:2002.12435, 2020.

- Tarbouriech and Lazaric [2019] Jean Tarbouriech and Alessandro Lazaric. Active exploration in markov decision processes. In The 22nd International Conference on Artificial Intelligence and Statistics, pages 974–982. PMLR, 2019.

- Watkins and Dayan [1992] Christopher J. C. H. Watkins and Peter Dayan. Q-learning. Machine Learning, 8(3):279–292, May 1992. ISSN 1573-0565. 10.1007/BF00992698. URL https://doi.org/10.1007/BF00992698.

- Weissman et al. [2003] Tsachy Weissman, Erik Ordentlich, Gadiel Seroussi, Sergio Verdu, and Marcelo J Weinberger. Inequalities for the l1 deviation of the empirical distribution. 2003.

- Xu et al. [2020] Tengyu Xu, Yingbin Liang, and Guanghui Lan. A primal approach to constrained policy optimization: Global optimality and finite-time analysis. arXiv preprint arXiv:2011.05869, 2020.

- Zheng and Ratliff [2020] Liyuan Zheng and Lillian Ratliff. Constrained upper confidence reinforcement learning. In Learning for Dynamics and Control, pages 620–629. PMLR, 2020.

Appendix A Proof for Regret Bounds

We now complete the proof of Theorem 1 here.

A.1 Variable Definitions

We first define some important variables required for the proof.

We define value function for rewards and cost as:

| (37) | ||||

| (38) |

We also define Q-value function for rewards and cost as:

| (39) | ||||

| (40) |

Based on this, we define Bellman error function for rewards and cost as:

| (41) | ||||

| (42) |

A.2 Auxiliary Lemmas

We now state and prove various lemmas required to complete the proof of Theorem 1.

The first lemma obtains concentration bounds for the sampled MDP. We have:

Lemma 2.

The probability that the event

| (43) |

fails to occur for any is bounded by .

Proof Outline.

From the result of Weissman et al. [2003], the distance of a probability distribution over events with samples is bounded as:

| (44) |

Thus, for , we have

| (45) | ||||

| (46) | ||||

| (47) |

We sum over the all the possible values of till time-step to bound the probability that the event does not occur as:

| (48) |

Finally, summing over all the , we get

| (49) |

Further, using union bounds and summing over all the , we get

| (50) | ||||

| (51) |

∎

The next lemma relates the difference between average per step reward (or cost ) for following policy on true MDP with transition probabilities and average per step reward for following policy on MDP with transition probabilities with the Bellman error as:

Lemma 3.

The difference of long-term average rewards for running the policy on the MDP, , and the average long-term average rewards for running the policy on the true MDP, , is the long-term average Bellman error as

| (52) |

Proof.

Note that for all , we have:

| (53) | ||||

| (54) |

where Equation (54) follows from the definition of the Bellman error for state action pair .

Similarly, for the true MDP, we have,

| (55) | ||||

| (56) |

Subtracting Equation (56) from Equation (54), we get:

| (57) | ||||

| (58) |

Using the vector format for the value functions, we have,

| (59) |

Now, converting the value function to average per-step reward we have,

| (60) | ||||

| (61) | ||||

| (62) |

where the last equation follows from the definition of occupancy measures by Puterman [2014], and the existence of the limit in Equation (72). ∎

After relating the gap between the long-term average rewards of policy on the two MDPs, we now want to bound the sum of Bellman error over an epoch. For this, we first bound the Bellman error for a particular state action pair in the form of following lemma. We have,

Lemma 4.

For an MDP with rewards and transition probability such that , the Bellman error for state-action pair is upper bounded as

| (63) |

where is the bias-span of the MDP with transition probability .

Proof.

Starting with the definition of Bellman error in Equation (41), we get

| (64) | ||||

| (65) | ||||

| (66) | ||||

| (67) | ||||

| (68) | ||||

| (69) | ||||

| (70) | ||||

| (71) | ||||

| (72) | ||||

| (73) | ||||

| (74) |

where Equation (67) comes from the assumption that the rewards are known to the agent. Equation (71) follows from the fact that the difference between value function at two states is bounded. Equation (72) comes from the definition of bias term Puterman [2014] where is the bias of the policy when run on the sampled MDP. Equation (73) follows from Hölder’s inequality. In Equation (74), the norm of probability vector difference is bounded from the definition.

Additionally, note that the norm in Equation (73) is bounded by . Thus the Bellman error is loose upper bounded by for all state-action pairs. ∎

Lemma 5 (Bounded Span of optimal MDP in confidence interval).

For a MDP with rewards and transition probabilities , for policy , the difference of bias of any two states , and , is upper bounded by the mixing time of the true MDP as:

| (75) |

Proof.

Note that for all . Now, consider the following Bellman equation:

| (76) |

where and .

Consider two states . Also, let be a random variable defined as:

| (77) |

We also define another operator,

| (78) |

where .

Now, note that

| (79) | ||||

| (80) | ||||

| (81) | ||||

| (82) | ||||

| (83) |

Further, for any two vectors , where all the elements of are not smaller than we have . Hence, we have for all . Unrolling the recurrence, we have

| (84) |

For , we have , completing the proof. ∎

A.3 Proof of results from main text

Proof of Theorem 1.

We first consider term. We start by defining filtration as the set of of observed states and played actions. Further, we have as

| (89) |

We have,

| (90) | ||||

| (91) | ||||

| (92) | ||||

| (93) |

Hence, we have,

| (94) | ||||

| (95) | ||||

| (96) | ||||

| (97) |

Using Azuma-Hoeffding’s inequality, we get,

| (98) |

with probability at least . Summing over all the epochs and using Cauchy-Schwarz inequality, we get:

| (99) | ||||

| (100) | ||||

| (101) | ||||

| (102) |

with probability at least . Further, the maximum value of the sum is bounded by and that event occurs with probability less than which gives,

| (103) | ||||

| (104) | ||||

| (105) |

We can now focus on the term. We have:

| (106) | ||||

| (107) |

Similar to Equations (90)-(93), we have:

| (108) |

Again, using Azuma-Hoeffding’s inequality, with probability at least we have:

| (109) |

Summing over all the epochs, we get, with probability at least :

| (110) | ||||

| (111) | ||||

| (112) | ||||

| (113) | ||||

| (114) | ||||

| (115) | ||||

| (116) | ||||

| (117) |

where Equation (113) follows from Lemma 4. Equation (114) follows from Lemma 2 with probability . Equation (115) comes from Lemma 5. Equation (116) follows from [Jaksch et al., 2010, Lemma 19] and Equation (117) follows from Cauchy-Schwarz inequality.

Together with Equation (108), we get:

| (118) |

Combining and we get the required bound on regret. The bound on constraint violations follows similarly. ∎

Proof of Lemma 1.

We begin with considering the policy in Assumption 3. We now prove the result for one and the result follows for all . We consider an MDP with transition dynamics which maximizes for all for all . Consider the difference between the average cost incurred from following policy on the MDP with true transition probabilities and the average cost incurred from following policy on the MDP with transition probabilities and using Lemma 3. We have:

| (119) | ||||

| (120) | ||||

| (121) |

where the of Bellman error is of the following form,

and the value function, and -value, , function become:

We bound the expectation using Azuma-Hoeffdings inequality as follows:

| (122) | ||||

| (123) | ||||

| (124) | ||||

| (125) | ||||

| (126) | ||||

| (127) | ||||

| (128) | ||||

| (129) |

where Equation (123) is obtained by summing both sides from to . Equation (124) is obtained by summing over the geometric series with ratio . Equation (125) comes from analysis used in the proof of Theorem 1. Equation (126) comes from the fact that for all , and then replacing the lower bound of . Equation (127) follows from the Cauchy Schwarz inequality. Equation (128) follows from the fact that the epoch length is same as the number of visitations to all state action pairs in an epoch.

Combining Equation (129) with Equation (121), we obtain the required result as follows:

| (130) | ||||

| (131) | ||||

| (132) | ||||

| (133) | ||||

| (134) |

where Equation (132) comes from the fact that we consider epoch length and Equation (133) comes from Assumption 3 and Equation (134) comes from the value of Slater’s constant in Assumption 3. Replicating the analysis for all , for the policy , satisfy the constraint for all and hence, the optimization problem in Equation (14)-(17) is feasible. ∎