Regional Style and Color Transfer

Abstract

This paper presents a new approach to regional style transfer. Existing methods typically apply style uniformly across the entire image, which causes stylistic inconsistencies or distorts foreground objects such as human figures. To address this limitation, we use a segmentation network to isolate the foreground objects in the input image and apply style transfer only to the background region. Before the isolated foreground objects are reintegrated into the style-transferred background, a color transfer step adapts their colors to improve visual coherence between foreground and background. Finally, feathering blends foreground and background seamlessly, producing a visually unified and aesthetically pleasing composition. Experiments on diverse content and style images show that our approach produces more natural stylizations than conventional methods.

Index Terms:

Object Segmentation, Style Transfer, Color Transfer

I Introduction

In computer vision, recent advances in image segmentation [1] have greatly improved the precise delineation of objects and regions within images. Traditional segmentation methods relied primarily on handcrafted features and heuristic algorithms. The emergence of deep learning, particularly convolutional neural networks (CNNs) [2, 3], has since transformed the field: CNN-based models such as U-Net and Mask R-CNN [4] perform exceptionally well on objects with intricate shapes and diverse appearances, enabling more accurate and efficient image understanding.

In parallel, style transfer has progressed rapidly in recent years, driven by growing interest in neural style transfer. These algorithms apply the artistic style of a reference image to a target image while faithfully preserving the target's content. Early methods iteratively optimized each output image, which is slow. Later approaches train networks whose losses are computed with pre-trained CNNs such as VGG and ResNet [5, 6], achieving impressive results in real-time style transfer applications. These advances have opened new avenues for creative expression and artistic rendering in digital media.

Color transfer techniques have likewise advanced considerably, particularly for image enhancement and color harmonization. They transfer the color distribution of a source image to a target image while preserving the target's content and structural integrity. Traditional color transfer algorithms rely on histogram matching and statistical methods, such as matching per-channel means and standard deviations in a decorrelated color space [7]. More recent approaches use deep learning architectures to learn intricate mappings between color distributions, offering greater flexibility and more natural, aesthetically pleasing color adjustments.

To address the unnatural results of global style transfer, this paper presents a regional artistic style and color transfer approach. We first apply object segmentation to the image to generate a mask that separates the foreground object (e.g., a person) from the background (e.g., a scenic view, road, or room). Color transfer is then applied to the foreground and style transfer to the background. Finally, the two altered images are blended seamlessly.

In summary, our paper makes the following key contributions:

- We propose a regional style and color transfer approach designed to overcome the unnatural effects of applying global style or color transfer to the entire image.
- Through experiments, we demonstrate that applying style and color transfer within distinct image regions produces a more natural aesthetic outcome.
- Our method can be extended to establish correspondences between painted images and video frames, enabling the creation of stylized films.
- By building on effective segmentation techniques, our approach offers a concise framework in which different style transfer algorithms can be applied and swapped easily.

II Methods

This section details our approach for applying stylistic and chromatic changes to separate image regions. The proposed framework (Fig. 1) takes two input images: a content image and a style image. It partitions the transfer process, applying the artistic style to the background region and color transfer to the foreground region. To delineate the person foreground, we use semantic segmentation [8]. The style-transferred background and the color-transferred foreground are then combined by alpha blending, which suppresses artifacts at the boundary.

II-A Segmentation and Boundary Optimization

Semantic Segmentation. Our segmentation approach uses DeepLabv3+ [8]. This model combines Atrous Spatial Pyramid Pooling (ASPP) [9] with an encoder-decoder structure to capture rich contextual information. ASPP applies parallel atrous convolutions with different dilation rates, enabling the model to learn features at multiple scales. The encoder extracts these multi-scale features, and the decoder refines them, particularly along object boundaries, to produce high-resolution segmentation masks. We trained the model on the PASCAL VOC 2012 dataset [10], which covers 20 object classes, including "person". The dataset comprises 11,530 images with 27,450 annotated objects and 6,929 segmentation masks. We used a ResNet-101 backbone with an output stride of 16 to speed up training. This configuration achieved 82.1% mean Intersection-over-Union (mIoU) on the PASCAL VOC 2012 validation set; with 64 channels, the model attained 78.21% mIoU.
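To make the segmentation output and the reported metric concrete, the sketch below shows two helpers written under our own assumptions: extracting a binary person mask from per-class scores in the standard 21-label PASCAL VOC ordering (index 15 is "person", 0 is background), and computing mIoU from a confusion matrix, treating label 255 as "ignore" as in the VOC annotations. The function names are hypothetical, and the scores are assumed to come from any VOC-trained segmentation network; this is not the paper's training or evaluation code.

```python
import numpy as np

PERSON = 15  # "person" in the 21-label PASCAL VOC ordering (0 = background)

def person_mask_from_logits(logits: np.ndarray) -> np.ndarray:
    """logits: (21, H, W) per-class scores -> (H, W) boolean person mask."""
    return logits.argmax(axis=0) == PERSON

def mean_iou(pred: np.ndarray, gt: np.ndarray, num_classes: int = 21,
             ignore_index: int = 255) -> float:
    """Mean Intersection-over-Union over the classes present in pred or gt."""
    valid = gt != ignore_index  # VOC marks object borders / difficult pixels with 255
    p = pred[valid].astype(np.int64)
    g = gt[valid].astype(np.int64)
    # Confusion matrix: rows = ground-truth class, columns = predicted class.
    cm = np.bincount(g * num_classes + p, minlength=num_classes ** 2)
    cm = cm.reshape(num_classes, num_classes)
    tp = np.diag(cm)
    union = cm.sum(axis=0) + cm.sum(axis=1) - tp
    present = union > 0  # skip classes absent from both prediction and ground truth
    return float(np.mean(tp[present] / union[present]))
```

Benchmark mIoU accumulates a single confusion matrix over the whole validation set before averaging, which corresponds to calling `mean_iou` on the concatenated labels of all images rather than averaging per-image scores.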

Boundary Optimization. Person boundaries extracted from segmentation masks are often noisy, yet applying style and color independently to distinct regions requires precise boundaries. We therefore refine the mask in two steps. First, Canny edge detection is applied to the original image. Second, a Breadth-First Search (BFS) traverses the points of the binary person mask, starting from its periphery and moving inward, using a First-In-First-Out (FIFO) queue. For each point: 1) if the closest edge point lies within the mask, the point is removed and its unvisited neighbors are added to the queue; 2) if the closest edge point lies outside the mask, the point is kept and the traversal continues with the next queued point. Finally, Euclidean distance-based smoothing is applied to the refined boundary.
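The two-step refinement above can be sketched as follows. This is our reading of the description, not the authors' code: the edge map is taken as a boolean input (for example `cv2.Canny(gray, low, high) > 0`, with thresholds left open), the nearest edge point of every pixel is precomputed with SciPy's Euclidean distance transform, "periphery" is taken to mean mask pixels that touch the background, and a pixel lying on an edge is kept. The Euclidean distance-based smoothing is interpreted as a feathered matte that ramps from 0 to 1 over a hypothetical `width` of pixels inside the boundary.

```python
from collections import deque

import numpy as np
from scipy import ndimage

def refine_mask(mask: np.ndarray, edges: np.ndarray) -> np.ndarray:
    """Shrink a boolean person mask toward image edges with a BFS from its periphery.

    mask:  (H, W) bool segmentation mask
    edges: (H, W) bool edge map, e.g. Canny output > 0
    """
    mask = mask.copy()
    if not edges.any():
        return mask
    # For every pixel, the coordinates of its nearest edge pixel (Euclidean distance).
    _, (near_r, near_c) = ndimage.distance_transform_edt(~edges, return_indices=True)
    h, w = mask.shape
    # Seed the FIFO queue with the mask periphery (mask pixels that touch the background).
    periphery = mask & ~ndimage.binary_erosion(mask)
    visited = periphery.copy()
    queue = deque(zip(*np.nonzero(periphery)))
    while queue:
        r, c = queue.popleft()
        er, ec = near_r[r, c], near_c[r, c]
        if (er, ec) == (r, c) or not mask[er, ec]:
            continue  # pixel is on an edge, or its nearest edge is outside the mask: keep it
        mask[r, c] = False  # nearest edge is inside the mask: pixel lies outside the object
        for nr, nc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
            if 0 <= nr < h and 0 <= nc < w and mask[nr, nc] and not visited[nr, nc]:
                visited[nr, nc] = True
                queue.append((nr, nc))
    return mask

def feather(mask: np.ndarray, width: float = 5.0) -> np.ndarray:
    """Alpha matte in [0, 1] that ramps up over `width` pixels inside the mask boundary."""
    inside = ndimage.distance_transform_edt(mask)  # distance to the nearest background pixel
    return np.clip(inside / width, 0.0, 1.0)
```

A typical call would be `alpha = feather(refine_mask(mask, edges), width=5.0)`. Feathering only inward keeps the matte at zero outside the refined contour, so no background pixels leak into the foreground.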

II-B Artistic Style Transfer

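We followed the fast style transfer approach [11] to train our style transfer model. Rather than optimizing each output image iteratively, this method trains a feed-forward network for a given style, so that a new image can be stylized in a single forward pass. A layer $l$ of the network has $N_l$ feature maps, each of size $M_l$ (height times width). The output of layer $l$ is stored in a matrix $F^l \in \mathbb{R}^{N_l \times M_l}$, where $F^l_{ij}$ is the activation of the $i$-th filter at position $j$ in layer $l$. The style representation is the Gram matrix $G^l \in \mathbb{R}^{N_l \times N_l}$, with $G^l_{ij} = \sum_k F^l_{ik} F^l_{jk}$.

The content loss is the squared error between the feature representations of the original and generated images, and the style loss compares their Gram matrices:

$$\mathcal{L}_{\text{content}} = \frac{1}{2} \sum_{i,j} \left( F^l_{ij} - P^l_{ij} \right)^2 \tag{1}$$

$$\mathcal{L}_{\text{style}} = \sum_{l} w_l E_l, \qquad E_l = \frac{1}{4 N_l^2 M_l^2} \sum_{i,j} \left( G^l_{ij} - A^l_{ij} \right)^2 \tag{2}$$

where $P^l$ is the feature representation of the original image in layer $l$, $A^l$ is the style representation (Gram matrix) of the style image in layer $l$, and $w_l$ are weighting factors for each layer's contribution to the total style loss. The total loss is $\mathcal{L}_{\text{total}} = \alpha \mathcal{L}_{\text{content}} + \beta \mathcal{L}_{\text{style}}$, where $\alpha$ and $\beta$ weight content and style reconstruction, respectively. We tuned the ratio $\alpha/\beta$ over repeated training runs and adopt the value that gave the best results.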
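The loss terms in Eqs. (1) and (2) can be written out in a few lines of NumPy. This is a minimal sketch assuming the feature maps have already been extracted (for example from VGG layers) and flattened to matrices with one row per filter and one column per spatial position; the function names are ours, and the fast-style-transfer code base [11] may use different normalization constants, so its absolute loss values can differ.

```python
import numpy as np

def gram(F: np.ndarray) -> np.ndarray:
    """F: (N_l, M_l) feature matrix -> (N_l, N_l) Gram matrix G = F F^T."""
    return F @ F.T

def content_loss(F: np.ndarray, P: np.ndarray) -> float:
    """Eq. (1): half the squared error between generated (F) and original (P) features."""
    return 0.5 * float(np.sum((F - P) ** 2))

def style_layer_loss(F: np.ndarray, S: np.ndarray) -> float:
    """E_l in Eq. (2): normalized squared error between Gram matrices of F and style features S."""
    n, m = F.shape
    return float(np.sum((gram(F) - gram(S)) ** 2)) / (4.0 * n ** 2 * m ** 2)

def total_loss(F_content, P, F_style_layers, S_layers, weights, alpha, beta):
    """alpha * content loss + beta * weighted sum of per-layer style losses."""
    style = sum(w * style_layer_loss(F, S)
                for w, F, S in zip(weights, F_style_layers, S_layers))
    return alpha * content_loss(F_content, P) + beta * style
```

Only the ratio of `alpha` to `beta` matters for the balance between content and style, which is why the text reports a ratio rather than two absolute weights.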

II-C Color Transfer

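We apply color transfer specifically to the foreground region of the image. The goal is color harmony between the foreground (e.g., a person) and the style-transferred background while preserving the integrity of the portrait. For color representation we use the $l\alpha\beta$ color space, which aligns well with the statistics of natural scenes and with human visual perception. The conversion from RGB to $l\alpha\beta$ follows Reinhard et al. [7]: RGB is first mapped to LMS cone space, and the base-10 logarithm of LMS is then rotated into $l\alpha\beta$:

$$\begin{bmatrix} L \\ M \\ S \end{bmatrix} = \begin{bmatrix} 0.3811 & 0.5783 & 0.0402 \\ 0.1967 & 0.7244 & 0.0782 \\ 0.0241 & 0.1288 & 0.8444 \end{bmatrix} \begin{bmatrix} R \\ G \\ B \end{bmatrix} \tag{3}$$

$$\begin{bmatrix} l \\ \alpha \\ \beta \end{bmatrix} = \begin{bmatrix} \frac{1}{\sqrt{3}} & 0 & 0 \\ 0 & \frac{1}{\sqrt{6}} & 0 \\ 0 & 0 & \frac{1}{\sqrt{2}} \end{bmatrix} \begin{bmatrix} 1 & 1 & 1 \\ 1 & 1 & -2 \\ 1 & -1 & 0 \end{bmatrix} \begin{bmatrix} \log L \\ \log M \\ \log S \end{bmatrix} \tag{4}$$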
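The RGB to $l\alpha\beta$ conversion of Eqs. (3) and (4) translates directly into two matrix products around a base-10 logarithm. The sketch below assumes linear RGB values in [0, 1] stored in an `(..., 3)` array; the small `eps` clamp that avoids taking the logarithm of zero is our addition, and the inverse uses `np.linalg.inv` of the same matrices instead of the rounded inverse constants printed in [7].

```python
import numpy as np

# Eq. (3): RGB -> LMS cone space (constants from Reinhard et al.).
RGB2LMS = np.array([[0.3811, 0.5783, 0.0402],
                    [0.1967, 0.7244, 0.0782],
                    [0.0241, 0.1288, 0.8444]])
# Eq. (4): log-LMS -> l-alpha-beta (decorrelating rotation plus scaling).
LMS2LAB = np.diag([1 / np.sqrt(3), 1 / np.sqrt(6), 1 / np.sqrt(2)]) @ np.array(
    [[1.0, 1.0, 1.0], [1.0, 1.0, -2.0], [1.0, -1.0, 0.0]])

def rgb_to_lab(rgb: np.ndarray, eps: float = 1e-6) -> np.ndarray:
    """(..., 3) linear RGB -> (..., 3) l-alpha-beta."""
    lms = rgb @ RGB2LMS.T                      # apply Eq. (3) to every pixel
    log_lms = np.log10(np.maximum(lms, eps))   # eps guards against log10(0) on black pixels
    return log_lms @ LMS2LAB.T                 # apply Eq. (4)

def lab_to_rgb(lab: np.ndarray) -> np.ndarray:
    """Inverse of rgb_to_lab (exact for pixels that were not clamped by eps)."""
    log_lms = lab @ np.linalg.inv(LMS2LAB).T
    return (10.0 ** log_lms) @ np.linalg.inv(RGB2LMS).T
```

For a neutral gray pixel the $\alpha$ and $\beta$ channels come out close to zero, which reflects the opponent-color design of the space: chromatic information lives in $\alpha$ and $\beta$, and lightness in $l$.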

In $l\alpha\beta$ space, each pixel is represented as a three-dimensional vector. To reduce dimensionality and capture the dominant color variation, we apply Principal Component Analysis (PCA) in this 3D space to both the content image and the style image. This involves the eigenvalue decomposition of the covariance matrix $C$ of $X$, where $X$ is an $n \times 3$ matrix containing all $n$ pixel vectors of an image in $l\alpha\beta$ space. The first principal component $\mathbf{q}$, the eigenvector corresponding to the largest eigenvalue of $C$, captures the direction of greatest color variation. Taking the dot product of every pixel vector with $\mathbf{q}$ projects all pixels of the image onto a one-dimensional line, $X\mathbf{q}$, which captures the main color distribution.
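A minimal sketch of the PCA step, assuming the pixels are already in $l\alpha\beta$ space as an `(..., 3)` array. One detail the text leaves open is the sign of $\mathbf{q}$: eigenvectors are only defined up to sign, so the two images could end up with opposite orientations. Here we assume, as our own convention, that $\mathbf{q}$ is flipped so its $l$ (lightness) component is non-negative, so both images' axes point the same way in lightness.

```python
import numpy as np

def first_principal_component(pixels: np.ndarray) -> np.ndarray:
    """Unit eigenvector of the pixel covariance matrix with the largest eigenvalue.

    pixels: (..., 3) array of l-alpha-beta vectors (e.g. an H x W x 3 image)
    """
    X = pixels.reshape(-1, 3).astype(float)
    C = np.cov(X, rowvar=False)           # 3 x 3 covariance matrix of the pixel vectors
    eigvals, eigvecs = np.linalg.eigh(C)  # eigh returns eigenvalues in ascending order
    q = eigvecs[:, -1]                    # column for the largest eigenvalue
    # Assumed convention: orient q so its l (lightness) component is non-negative.
    return -q if q[0] < 0 else q

def project(pixels: np.ndarray, q: np.ndarray) -> np.ndarray:
    """Dot product of every pixel vector with q, giving one value per pixel."""
    return pixels.reshape(-1, 3) @ q
```

`np.linalg.eigh` is used instead of `np.linalg.eig` because the covariance matrix is symmetric, which guarantees real, sorted eigenvalues and orthonormal eigenvectors.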

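For each pixel $i$ of the original image, with $l\alpha\beta$ vector $\mathbf{x}_i$, our task is to find an equivalent pixel $j$ of the style image, with $l\alpha\beta$ vector $\mathbf{y}_j$, and transfer its RGB color:

$$\mathrm{RGB}_{\text{out}}(i) = \mathrm{RGB}_{\text{style}}(j) \tag{5}$$

We choose the pixel $j$ such that

$$F_{o}\!\left(\mathbf{q}_{o}^{\top}\mathbf{x}_i\right) = F_{s}\!\left(\mathbf{q}_{s}^{\top}\mathbf{y}_j\right) \tag{6}$$

in which $F_o$ and $F_s$ denote the cumulative distribution functions of the projected values, and $\mathbf{q}_o$ and $\mathbf{q}_s$ denote the first principal components of the original image and the style image, respectively.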
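The CDF matching can be approximated with empirical CDFs computed from ranks. The sketch below is our interpretation, not the authors' implementation: each original pixel's CDF level is taken as `(rank + 0.5) / n`, the style pixel at the same quantile of the sorted style projections is selected, and its RGB value is copied. The half-rank offset and the stable tie-breaking are our choices.

```python
import numpy as np

def match_colors_by_cdf(src_proj, style_proj, style_rgb):
    """Give each source pixel the RGB of the style pixel at the same CDF level.

    src_proj:   (n,) projections of the original image's pixels onto its first component
    style_proj: (m,) projections of the style image's pixels onto its first component
    style_rgb:  (m, 3) RGB values of the style pixels, in the same order as style_proj
    returns:    (n, 3) RGB values for the source pixels
    """
    src_proj = np.asarray(src_proj, dtype=float).ravel()
    style_proj = np.asarray(style_proj, dtype=float).ravel()
    n, m = src_proj.size, style_proj.size
    # Empirical CDF level of each source value; (rank + 0.5) / n lies strictly in (0, 1).
    ranks = np.argsort(np.argsort(src_proj, kind="stable"), kind="stable")
    cdf = (ranks + 0.5) / n
    # Style pixel at the same quantile, found in the style pixels sorted by projection.
    style_order = np.argsort(style_proj, kind="stable")
    idx = np.minimum((cdf * m).astype(int), m - 1)
    return np.asarray(style_rgb)[style_order[idx]]
```

For an image, the result is reshaped back to `(H, W, 3)`; the two images need not have the same number of pixels, because matching happens on quantiles rather than on pixel indices.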

II-D Blending Style and Color

In the preceding stages, a background style-transferred image, a foreground color-transferred image, and an optimized person segmentation mask were generated. To create the final result, these elements are seamlessly combined leveraging the optimized segmentation mask.

We use alpha blending, a well-established compositing technique in computer graphics, to combine these images. As described in Section II-A, Euclidean distance-based smoothing produced a feathered boundary in the segmentation mask, so the mask takes values between 0 and 1, which makes it directly usable as an alpha matte. The pixel-wise blending is:

$$I_{\text{out}}(p) = m(p)\, I_{\text{color}}(p) + \bigl(1 - m(p)\bigr)\, I_{\text{style}}(p) \tag{7}$$

where $m(p) \in [0, 1]$ is the value of the feathered mask at pixel $p$, $I_{\text{style}}(p)$ is the pixel value of the globally style-transferred image, and $I_{\text{color}}(p)$ is the pixel value of the globally color-transferred image.
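Eq. (7) is a per-pixel linear interpolation, sketched below with NumPy broadcasting. The sketch assumes an `(H, W)` matte in which 1 marks the foreground and two `(H, W, 3)` images of the same size; the function name is ours.

```python
import numpy as np

def alpha_blend(mask: np.ndarray, color_img: np.ndarray, style_img: np.ndarray) -> np.ndarray:
    """Eq. (7): mask * color-transferred + (1 - mask) * style-transferred, per pixel.

    mask:      (H, W) feathered matte in [0, 1], 1 = foreground
    color_img: (H, W, 3) globally color-transferred image
    style_img: (H, W, 3) globally style-transferred image
    """
    m = np.clip(mask, 0.0, 1.0)[..., None]  # add a channel axis so the matte broadcasts over RGB
    return m * color_img + (1.0 - m) * style_img
```

Pixels deep inside the person take the color-transferred value, pixels outside take the stylized value, and the feathered band in between mixes the two, which hides the seam.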

III Results

III-A Segmentation and Boundary Optimization

We evaluated the impact of boundary optimization on regional style transfer. To isolate its effect, color transfer to the foreground region was omitted in this experiment. As Fig. 2 shows, the proposed boundary optimization visibly improves boundary preservation.

III-B Background Style Transfer

For neural style transfer, our loss function balances the contributions of the content image and the style reference image, and the ratio of the content and style weights controls the relative influence of each on the output. Using the segmentation mask from the preceding step, we apply the artistic style only to the background region while preserving the foreground content of the original image. As Fig. 3(d) shows, the result combines the content of the original image with the style of the reference image, applied exclusively to the background.

III-C Foreground Color Transfer

To achieve visual harmony between the style-transferred background and the foreground object (e.g., a person), we apply color transfer to the foreground region only. This adapts the color palette of the foreground to complement the stylized background while maintaining the integrity of the portrait. Fig. 3(e) shows the original image content combined with the color characteristics of the style image, applied selectively to the foreground.

III-D Image Blending

By combining style transfer and color transfer, our approach treats the foreground and background regions separately: the artistic style of the reference image is integrated into the background, while the foreground portraits keep their original content and structure and take on colors adapted from the style image. Fig. 3(f) shows the resulting image, in which the foreground elements coexist harmoniously with the artistically rendered background, giving a visually compelling and perceptually convincing result.

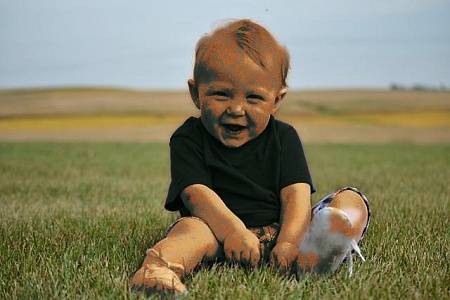

To evaluate our method more broadly, we conducted experiments with diverse input and style images. Fig. 4 compares several image manipulation techniques. Global style transfer has a significant limitation: the style is applied indiscriminately across the entire image, distorting both the foreground and the background and making them look unnatural. Background-only style transfer preserves the integrity of the foreground portraits but introduces a visual inconsistency: the portraits look incongruent with the artistically transformed background. In contrast, our regional style and color transfer method integrates the artistic style of the reference image into the background while preserving the structure of the foreground portraits and adapting their colors to the style image. The result is a visually harmonious image in which the foreground elements blend naturally with the artistically rendered background, creating an aesthetically pleasing and perceptually convincing outcome.

IV Conclusions

This paper presents a new approach to regional style and color transfer that addresses the tendency of global style transfer methods to produce unnatural results. By applying style transfer and color transfer to separate image regions, our technique improves visual quality and realism.

Our experiments show that controlling style and color transfer across image regions produces a noticeably more natural aesthetic. The approach is simple and efficient because it builds directly on existing segmentation techniques to restrict style and color transfer to designated image areas.

Furthermore, our framework could be extended to establish stylistic correspondences between painted images and video frames, enabling the creation of stylized films. Although the current implementation adds little computational overhead, future work includes extending the framework beyond human segmentation to other object categories (e.g., vehicles, animals) and improving inference speed and efficiency, since the computational cost grows with input image size. These directions are the focus of our ongoing research.

References

- [1] L. Li, “CPSeg: Finer-grained image semantic segmentation via chain-of-thought language prompting,” in Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, 2024, pp. 513–522.

- [2] H. Peng, S. Huang, T. Zhou, Y. Luo, C. Wang, Z. Wang, J. Zhao, X. Xie, A. Li, T. Geng et al., “AutoReP: Automatic ReLU replacement for fast private network inference,” in Proceedings of the IEEE/CVF International Conference on Computer Vision, 2023, pp. 5178–5188.

- [3] C.-H. Chang, X. Wang, and C. C. Yang, “Explainable AI for fair sepsis mortality predictive model,” arXiv preprint arXiv:2404.13139, 2024.

- [4] Y. Xin, S. Luo, H. Zhou, J. Du, X. Liu, Y. Fan, Q. Li, and Y. Du, “Parameter-efficient fine-tuning for pre-trained vision models: A survey,” arXiv preprint arXiv:2402.02242, 2024.

- [5] J. Yao, C. Li, K. Sun, Y. Cai, H. Li, W. Ouyang, and H. Li, “NDC-Scene: Boost monocular 3D semantic scene completion in normalized device coordinates space,” in 2023 IEEE/CVF International Conference on Computer Vision (ICCV). IEEE Computer Society, 2023, pp. 9421–9431.

- [6] T. Deng, Y. Zhou, W. Wu, M. Li, J. Huang, S. Liu, Y. Song, H. Zuo, Y. Wang, Y. Yue et al., “Multi-modal UAV detection, classification and tracking algorithm–technical report for CVPR 2024 UG2 challenge,” arXiv preprint arXiv:2405.16464, 2024.

- [7] E. Reinhard, M. Ashikhmin, B. Gooch, and P. Shirley, “Color transfer between images,” IEEE Computer Graphics and Applications, vol. 21, no. 5, pp. 34–41, 2001.

- [8] L.-C. Chen, Y. Zhu, G. Papandreou, F. Schroff, and H. Adam, “Encoder-decoder with atrous separable convolution for semantic image segmentation,” in ECCV, 2018.

- [9] H. Zhao, J. Shi, X. Qi, X. Wang, and J. Jia, “Pyramid scene parsing network,” in IEEE Conf. on Computer Vision and Pattern Recognition (CVPR), 2017, pp. 2881–2890.

- [10] M. Everingham, L. Van Gool, C. K. I. Williams, J. Winn, and A. Zisserman, “The PASCAL Visual Object Classes Challenge 2012 (VOC2012) Results,” http://www.pascal-network.org/challenges/VOC/voc2012/workshop/index.html.

- [11] L. Engstrom, “Fast style transfer,” https://github.com/lengstrom/fast-style-transfer/, 2016.