Region Rebalance for Long-Tailed Semantic Segmentation

Abstract

In this paper, we study the problem of class imbalance in semantic segmentation. We first investigate and identify the main challenges of addressing this issue through pixel rebalance. Then a simple and yet effective region rebalance scheme is derived based on our analysis. In our solution, pixel features belonging to the same class are grouped into region features, and a rebalanced region classifier is applied via an auxiliary region rebalance branch during training. To verify the flexibility and effectiveness of our method, we apply the region rebalance module into various semantic segmentation methods, such as Deeplabv+, OCRNet, and Swin. Our strategy achieves consistent improvement on the challenging ADEK and COCO-Stuff benchmark. In particular, with the proposed region rebalance scheme, state-of-the-art BEiT receives + gain in terms of on the ADEK val set.

1 Introduction

Deep neural networks (DNNs) like convolutional neural networks [33, 25, 49, 50] and vision transformers [21, 54, 38] have achieved great success in various computer vision tasks, including image classification [33, 25, 49, 50], object detection [45, 36, 37] and semantic segmentation [62, 15, 59, 61]. Most previous studies focus on curated data with annotations, such as ImageNet, that are balanced over different classes. In contrast, real-world data often exhibit a long-tailed distribution where a small number of head classes contain many instances while most other classes have relatively few instances. As shown in [22], addressing the long-tailed distribution problem is the key to large vocabulary vision recognition tasks.

With the increasing attention on the long-tailed distribution problem, various advanced methods have been developed for long-tailed image classification [5, 26, 19, 23, 11, 43, 32, 57, 17, 52, 64, 18] and instance segmentation [51, 34, 56]. For example, Cao et al. [9] and Ren et al. [43] provide theoretical foundation on the optimal margins for long-tailed image classification. For long-tailed instance segmentation, most effort tackles the problem based on the Mask-RCNN framework [24] and conducts class rebalance in the proposal classification. Without much prior long-tailed study for semantic segmentation yet, we focus our work in this direction.

To address the long-tailed semantic segmentation problem, we first apply the well-known long-tailed image classification method to the semantic segmentation task. With the balanced softmax strategy [43] to rebalance the pixel classification, we observe that it does not work well for the major evaluation metric of .

From an investigation of this, we identify two major challenges in conducting pixel rebalance: (i) Pixel rebalance mainly improves pixel accuracy but not . We empirically observe that applying the pixel rebalance strategy even harms performance and argue that inconsistency between the training objective, i.e., pixel cross-entropy, and our inference target, i.e., , leads to this phenomenon. (ii) Neighboring pixels are highly correlated. In long-tailed image classification, rebalance can be effectively guided by class frequency because image samples are independent and identically distributed (i.i.d.). In contrast, pixel samples are not i.i.d. due to correlation among neighboring pixels in an image. As a result, class frequency in the pixel domain is an unsuitable guide for rebalancing.

In this work, we propose to overcome the non-i.i.d. issue of pixel rebalance by gathering correlated pixels into regions and performing rebalance based on regions. We refer to this method for dealing with the class imbalance in semantic segmentation as Region Rebalance. In this approach, we introduce an auxiliary region classification branch where pixel features of the same class/region are averaged and fed into a rebalanced region classifier, as shown in Figure 2. With this additional training target, the pixel features are encouraged to lie in a more balanced classification space with regard to uncorrelated pixel samples. We note that the region rebalance branch is used only for training and is removed for inference.

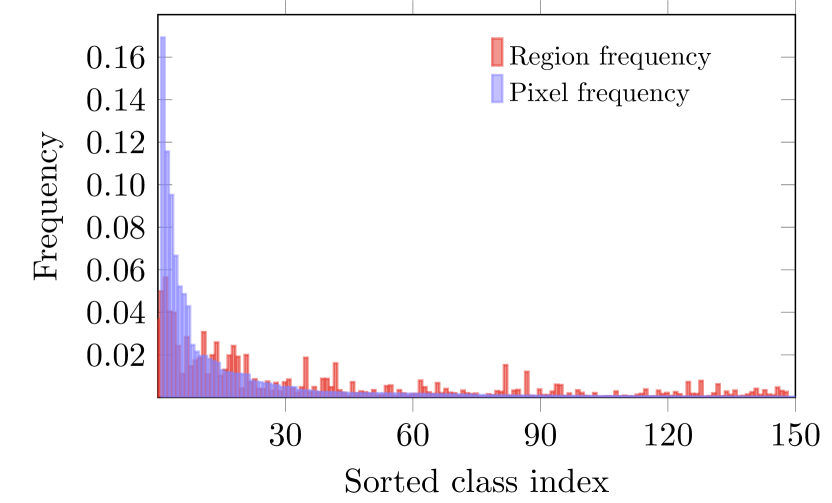

An incidental benefit of this approach is that popular benchmark datasets, including ADE20K and COCO-Stuff164K, exhibit less class imbalance in the region domain than in the pixel domain, as later analyzed in Sec. 3.3.2. Because of this property, rebalancing in the region domain can be accomplished relatively more effectively.

We apply our region rebalance strategy to various semantic segmentation methods e.g., PSPNet [62], OCRNet [61], DeepLabv+ [15], Swin [38], and BEiT [3], and conduct experiments on two challenging semantic segmentation benchmarks, namely ADEK[66] and COCO-StuffK[8], to verify the effectiveness of our method. The results are reported in Figure 1. Our code will be made publicly available. The key contributions are summarized as follows:

-

•

We investigate the class imbalance problem that exists in semantic segmentation and identify the main challenges of pixel rebalance.

-

•

We propose a simple yet effective region rebalance scheme and validate the effectiveness of our method across various semantic segmentation methods. Notably, our method yields a + improvement for BEiT [3], setting a new performance record on ADEK val at the time of submission.

2 Related Work

2.1 Long-tailed Image Classification

In the area of long-tailed image classification, the most popular methods for dealing with imbalanced data can be categorized into re-weighting/re-sampling methods [47, 63, 5, 7, 23, 30, 6, 28, 27, 58, 44, 48, 29] or one-stage/two-stage methods [10, 31, 64, 65, 18, 17].

Re-weighting/Re-sampling. Re-sampling approaches are based on either over-sampling low-frequency classes or under-sampling high-frequency classes. Over-sampling [47, 63, 5, 7] usually suffers from heavy over-fitting to low-frequency classes. For under-sampling [23, 30, 6], it inevitably leads to degradation of CNN generalization ability because a large portion of the high-frequency class data is discarded. Re-weighting [28, 27, 58, 44, 48, 29] loss functions is an alternative to rebalancing and is accomplished by enlarging weights on more challenging or sparse classes. However, re-weighting makes CNNs difficult to optimize when training on large-scale data.

One-stage/Two-stage. Since the deferred re-weighting and re-sampling strategies proposed by Cao et al. [10], it was further observed by Kang et al. [31] and Zhou et al. [65] that re-weighting and re-sampling could benefit classifier learning but hurt representation learning. Based on this, many two-stage methods [10, 31, 64] were developed.

The two-stage design contradicts the push towards end-to-end learning that has been prevalent in the deep learning era. Through a causal inference framework, Tang et al. [53] revealed the harmful causal effects of SGD momentum on long-tailed classification. Ren et al. [43] extended LDAM [10] and theoretically obtained the optimal margins for multi-class image classification. Cui et al. [19] proposed a residual learning mechanism to address this issue. Recently, contrastive learning has also been introduced for long-tailed image classification [18].

2.2 Long-tailed Instance Segmentation

With the great progress in long-tailed image classification [5, 26, 19, 23, 11, 43, 32, 57, 17, 52, 64, 18], many researchers have started to explore the long-tailed phenomenon in instance segmentation. Many algorithms [51, 34, 56] have been developed to address this issue.

Tan et al. [51] observed that each positive sample of one category could be seen as a negative sample for other categories, causing the tail categories to receive more discouraging gradients. Based on this, they proposed to simply ignore those gradients for rare categories. Li et al. [34] proposed balanced group softmax (BAGS) for balancing the classifier within a detection framework through group-wise training. BAGS implicitly modulates the training process for the head and tail classes and ensures that all classes are trained sufficiently. Wang et al. [56] proposed the Seesaw loss to dynamically rebalance gradients of positive and negative samples for each category, further improving performance.

2.3 Semantic Segmentation

Semantic segmentation has long been studied as a fundamental task in computer vision. Substantial progress was made with the introduction of Fully Convolutional Networks (FCN) [40], which formulate the semantic segmentation task as per-pixel classification. Subsequently, many advanced methods have been introduced.

Many approaches [1, 2, 35, 42, 46] combine upsampled high-level feature maps and low-level feature maps to capture global information and recover sharp object boundaries. A large receptive field also plays an important role in semantic segmentation. Several methods [13, 12, 60] have proposed dilated or atrous convolutions to enlarge the field of filters and incorporate larger context. For better global information, recent work [14, 15, 62] adopted spatial pyramid pooling to capture multi-scale contextual information. Along with atrous spatial pyramid pooling and an effective decoder module, Deeplabv+ [15] features a simple and effective encoder-decoder architecture.

3 Our Method

First, we analyze the challenges of conducting pixel rebalance for semantic segmentation. Second, we present the details of our proposed region rebalance scheme and explain how region rebalance helps.

3.1 Challenges of Pixel Rebalance

3.1.1 Higher Pixel Accuracy Higher ?

In the image classification task, minimizing the cross-entropy loss essentially maximizes the probability of the ground truth label during the training phase, which is consistent with the top- accuracy evaluation metric during inference. Therefore, we can expect more balanced class accuracy via incorporating rebalance strategies.

For semantic segmentation, we typically use pixel cross-entropy as a proxy to indirectly optimize Intersection-over-Union (). However, pixel cross-entropy inherently maximizes pixel accuracy (). This misalignment between the training objective and target evaluation metric inspires us to explore what would happen when rebalancing with pixel cross-entropy in semantic segmentation.

Theoretical analysis. In order to study how pixel rebalance affects , we examine the connections between and . We use , and to denote true positives, false negatives, and false positives, respectively. Eq. (1) presents the formula for , while Eq. (2) is for Acc. By combining these two equations in Eq. (3), we can see that is a linear function of , with as a constant. In this sense, it is reasonable to optimize with pixel cross-entropy.

| (1) | |||||

| (2) | |||||

| (3) |

When rebalancing with pixel cross-entropy, of low-frequency classes is expected to improve. However, according to Remark 1, a higher does not always mean a higher . Even worse, we empirically observe that Remark 1 is usually not satisfied for pixel rebalancing as shown in the following case study.

Remark 1

(Condition for improvement) With pixel rebalance, suppose the of class is improved from to , then it must satisfy - to guarantee improvements, where denotes false positives after the rebalance while is false positives beforehand. is the pixel frequency for class . . Proof See the Supplementary Material.

| Method | (s.s.) |

|---|---|

| Baseline | |

| Re-weighting | |

| balancedsoftmax | |

| balancedsoftmax* | |

| RR (Ours) |

A Case Study. To rebalance with pixel cross-entropy, we conduct experiments on ADEK with balanced softmax [43], a state-of-the-art method for long-tailed image classification. As shown in Table 1, the overall becomes worse after rebalance, dropping from to , despite significant improvements in overall .

To understand whether the overall degradation is caused by over-rebalance, i.e., of high-frequency classes drops a lot though of low-frequency classes improves, we plot and for each class . The and are calculated by

| (4) | |||||

| (5) |

As shown in Figure 3 (b) and (c), after pixel rebalance with balanced softmax, decreases while increases for low-frequency classes.

This case study shows that pixel rebalance can improve while failing to meet the improvement condition of Remark 1, which implies that pixel rebalance improves low-frequency largely through an increase of , which is harmful to .

3.1.2 Accuracy vs. Frequency

An important prior for rebalancing in long-tailed image classification is that images are nearly i.i.d., which makes class accuracy positively related to the number of images in the corresponding classes as shown in Figure 4 (a).

Under this case, to encourage the optimization process to be more friendly to low-frequency classes in training, it would be sensible to re-weight the cross-entropy loss with a factor that is inversely proportional to class frequency, like in [20]. Moreover, Cao et al. [10] and Ren et al. [43] theoretically reveal the close relationship between the optimal margin and class frequency, further indicating the importance of class frequency statistics for conducting rebalance.

However, the situation is different for the semantic segmentation task. Pixels within the same region of an image usually belong to the same object or stuff and are thus highly correlated. Since pixels are not i.i.d., the class pixel frequency is an unsuitable factor for rebalancing. This conclusion is supported empirically in Figure 4 (b), where class accuracy is shown to have much weaker correlation with class pixel frequency compared to class image frequency in image classification, shown in Figure 4 (a).

| Dataset | |

|---|---|

| CIFAR- | |

| ADEK-pixel | |

| ADEK-region |

To quantify the correlation between class accuracy and frequency, we calculate the Pearson correlation coefficient [4]:

| (6) |

where , , and are functions for mean, standard deviation, covariance and expectation. Table 2 lists the Pearson coefficients between class accuracy and image frequency on CIFAR-100, pixel frequency on ADE20K, and region frequency on ADE20K, respectively. The correlations between class accuracy and image frequency, region frequency, pixel frequency decrease step by step. Region frequency has a stronger correlation with class accuracy than pixel frequency, as it eliminates the effects of correlations among neighboring pixels. The weak relationship between class accuracy and pixel frequency causes pixel frequency to be less informative for rebalancing, which adds to the challenge of rebalancing in semantic segmentation.

3.2 Region Rebalance Framework

Due to the issues discussed in Sec. 3.1, we propose to rebalance at the region level instead of the pixel level, through a Region Rebalance (RR) training strategy. The region rebalance method relieves the class imbalance issue with an auxiliary region classification branch by encouraging features to lie in a more balanced region classification space. Moreover, rebalance methods from long-tailed image classification can be readily employed to solve the region imbalance problem. This involves no extra cost for testing because the region rebalance branch is removed during inference.

The region rebalance framework shown in Figure 2 contains two components: (i) An auxiliary region classification branch to ease the class imbalance problem and (ii) Enhanced rebalance with techniques in long-tailed image classification for solving region imbalance. The overall loss functions in the following are deployed:

| (7) | |||||

| (8) | |||||

| (9) |

where is the dataset, contains all the extracted regions, is the ground truth label of region , represents the region logit corresponding to class , denotes the region frequency of class , is the label space, is the region loss, while represents pixel cross-entropy loss.

In the region classification branch, we group pixels belonging to the same class into region features by averaging the pixel features according to the ground-truth region map. Then the extracted region features are fed into a region classifier. Further, we enhance the rebalance using a technique [43] borrowed from the long-tailed image classification community to tackle the region imbalance problem. The deployed region loss is shown in Eq. (8), and we use a hyper-parameter to control the strength of rebalance, shown in Eq. (9).

3.3 Analysis of Our Method

The misalignment between the training objective, i.e., pixel cross-entropy, and evaluation metric at inference is problematic for direct pixel rebalance as analyzed in Sec. 3.1. The proposed method instead relieves class imbalance via region rebalance. To clarify the mechanism of our region rebalance method, we analyze it in comparison to pixel rebalance. In addition, we discuss differences in imbalance reflected by dataset statistics.

| Dataset | PIF | RIF |

|---|---|---|

| ADEK | ||

| COCO-StuffK |

3.3.1 Region Rebalance vs. Pixel Rebalance

On one hand, region rebalance gathers pixel features of the same class into region features, and then region classification follows. The “gather” operation essentially removes the effect of correlation among pixels in a region. As demonstrated in Table 2, region frequency has a stronger correlation with class accuracy than pixel frequency, further indicating its greater suitability for rebalancing. Besides, the “gather” operation reduces data class imbalance and thus is good for rebalancing as analyzed in Sec. 3.3.2.

On the other hand, we empirically observe that region rebalance is more aligned with the metric. As studied in Sec. 3.1.1, pixel rebalance promotes higher while failing to improve because of an increase in .

Such behavior can be understood by considering the situation that two very different objects/stuff can still have similar local pixel features. With pixel rebalance, we attach more importance to pixels of low-frequency classes, and in consequence, corresponding similar pixels in other classes are more likely to become . In contrast, objects/stuff are more distinguishable at the region level due to features that are more global. Though more importance is also attached to regions of low-frequency classes with region rebalance, it will be much harder to predict other class objects/stuff as . More visual evidence is discussed in our supplementary file. We leave more theoretical analysis to future work.

3.3.2 Datasets Statistics

Semantic segmentation datasets suffer from both pixel level and region level data imbalance. However, we observe that the degree of pixel imbalance is more serious than that of region imbalance as shown in Figure 5. We collect statistics from the most popular semantic segmentation benchmarks in Figure 5. Following convention [20, 39], we calculate the imbalance factor (IF) as , where and are the numbers of training samples for the most frequent class and the least frequent class.

As shown in Figure 5, for ADEK, the pixel imbalance factor (PIF) is nearly times the region imbalance factor (RIF). For COCO-StuffK, PIF is nearly times RIF. As our method relieves the class imbalance issue via region rebalancing, it conveniently benefits from less imbalance than pixel rebalance would, and this in turn promotes more effective rebalancing.

4 Experiments

To evaluate the effectiveness of our method, we conduct experiments on the most popular benchmarks in semantic segmentation, i.e., ADEK [66] and COCO-StuffK [8]. By inserting the proposed region rebalance method into UperNet, PSPNet, Deeplabv+, and OCRNet, clear improvements are obtained. We also test the region rebalance method on popular transformer neural networks, specifically Swin transformer [38] and BEiT [3].

4.1 Datasets

ADEK. ADEK [66] is a challenging dataset often used to validate transformer-based neural networks on downstream tasks such as semantic segmentation. It contains K densely annotated images with 150 fine-grained semantic concepts. The training and validation sets consist of K and K images, respectively.

COCO-StuffK. COCO-StuffK [8] is a large-scale scene understanding benchmark that can be used for evaluating semantic segmentation, object detection, and image captioning. It includes all K images from COCO . The training and validation sets contain K and K images, respectively. It covers classes: thing classes and stuff classes.

4.2 Implementation Details

We implement the proposed region rebalance method in the mmsegmentation codebase [16] and follow the commonly used training settings for each dataset. More details are described in the following.

For backbones, we use CNN-based ResNet-c and ResNet-c, which replace the first convolution layer in the original ResNet- and ResNet- with three consecutive convolutions. Both are popular in the semantic segmentation community [62]. For OCRNet, we adopt HRNet-W and HRNet-W [55]. For transformer-based neural networks, we adopt the popular Swin transformer [38] and BEiT [3]. BEiT achieves the most recent state-of-the-art performance on the ADEK validation set. ”” in Tables 3 and 4 indicates models that are pretrained on ImageNet-K.

With CNN-based models, we use SGD and the poly learning rate schedule [62] with an initial learning rate of and a weight decay of . If not stated otherwise, we use a crop size of , a batch size of , and train all models for K iterations on ADEK and K iterations on COCO-Stuff164K. For Swin transformer and BEiT, we use their default optimizer, learning rate setup and training schedule.

In the training phase, the standard random scale jittering between and , random horizontal flipping, random cropping, as well as random color jittering are used as data augmentations [16]. For inference, we report the performance of both single scale (s.s.) inference and multi-scale (m.s.) inference with horizontal flips and scales of , , , , , .

| Method | Backbone | (s.s.) | (m.s.) |

|---|---|---|---|

| OCRNet | HRNet-W | ||

| OCRNet-RR | HRNet-W | () | |

| UPerNet | ResNet- | ||

| UPerNet-RR | ResNet- | () | |

| PSPNet | ResNet- | ||

| PSPNet-RR | ResNet- | () | |

| DeepLabv+ | ResNet- | ||

| DeepLabv+-RR | ResNet- | () | |

| OCRNet | HRNet-W | ||

| OCRNet-RR | HRNet-W | () | |

| UperNet | ResNet- | ||

| UperNet-RR | ResNet- | () | |

| PSPNet | ResNet- | ||

| PSPNet-RR | ResNet- | () | |

| DeepLabv+ | ResNet- | ||

| DeepLabv+-RR | ResNet- | () | |

| UperNet | Swin-T | ||

| UperNet-RR | Swin-T | () | |

| UperNet | Swin-B† | ||

| UperNet-RR | Swin-B† | () | |

| UperNet | BEiT-L | ||

| UperNet-RR | BEiT-L | () |

4.3 Main Results

Comparison on ADEK. To show the flexibility of our region rebalance module, we experiment on ADEK with various semantic segmentation methods, e.g., PSPNet, UperNet, OCRNet, and Deeplabv3+. As shown in Table 3, plugging the region rebalance module into those methods leads to significant improvements. Specifically, for ResNet- or HRNet-W, after training with Region Rebalance, there are %, %, % and % gains for UperNet, PSPNet, OCRNet, and Deeplabv3+ respectively.

To further show the effectiveness of the proposed region rebalance method, we experiment with Swin transformer and BEiT. For CNN-based models, we achieve with ResNet-, surpassing the baseline by . With BEiT, the state-of-the-art is improved from to .

Comparison on COCO-StuffK. On the large-scale COCO-StuffK dataset, we again demonstrate the flexibility of our region rebalance module. The experimental results are summarized in Table 4. Equipped with the region rebalance module in training, CNN-based models, i.e., ResNet-, HRNet-W, ResNet- and HRNet-W, surpass their baselines by a large margin. Specifically, with HRNet- and OCRNet, our trained model outperforms the baseline by . With the large CNN-based ResNet- and Deeplabv3+, our model achieves , surpassing the baseline by .

We also verify the effectiveness of the region rebalance module with Swin transformer on COCO-StuffK. Experimental results with Swin-T and Swin-B show clear improvements after rebalancing with our region rebalance method in the training phase.

| Method | Backbone | (s.s.) | (m.s.) |

|---|---|---|---|

| OCRNet | HRNet-W | ||

| OCRNet-RR | HRNet-W | () | |

| UperNet | ResNet- | ||

| UperNet-RR | ResNet- | () | |

| PSPNet | ResNet- | ||

| PSPNet-RR | ResNet- | () | |

| DeepLabv+ | ResNet- | ||

| DeepLabv+-RR | ResNet- | () | |

| OCRNet | HRNet-W | ||

| OCRNet-RR | HRNet-W | () | |

| UperNet | ResNet- | ||

| UperNet-RR | ResNet- | () | |

| PSPNet | ResNet- | ||

| PSPNet-RR | ResNet- | () | |

| DeepLabv+ | ResNet- | ||

| DeepLabv+-RR | ResNet- | () | |

| UperNet | Swin-T | ||

| UperNet-RR | Swin-T | () | |

| UperNet | Swin-B† | ||

| UperNet-RR | Swin-B† | () |

4.4 Ablation Experiments

Ablation of region rebalance components. To verify the usefulness of both components in our region rebalance method, we conduct the following ablations on top of the baseline: I. only with an auxiliary region classification branch; II. further inserting a rebalance mechanism, i.e., balanced softmax [43]. We plot the experimental results in Figure 7.

With the two components applied in training, we observe consistent improvements on ADEK and the large-scale COCO-StuffK. Additionally, an interesting phenomenon is observed: with only component I, deep models already enjoy significant gains on COCO-StuffK. The reason behind this may be that there is a much larger ratio between the pixel imbalance factor (PIF) and region imbalance factor (RIF) on COCO-StuffK than on ADEK, as shown in Table 5. From this point of view, when adopting I, models are given stronger regularization and the output features are encouraged to be more balanced.

Improvements on low-frequency classes. To further examine the rebalancing effects of our proposed region rebalance method, we plot the class between the baseline model and the model trained with the region rebalance method. Figure 3 (a) shows the results on ADEK with ResNet- and Deeplabv+. We also validate the rebalance effects on COCO-StuffK with different semantic segmentation methods, i.e., Deeplabv+, OCRNet, and UperNet, as shown in Figure 6. We observe that the of most low-frequency classes is enhanced. For example, the of class “shower” is improved from to in ADEK.

| Hyper-parameter | (s.s.) |

|---|---|

| (baseline) | 43.95 |

| 44.61 | |

| 44.85 | |

| 45.02 | |

| 44.64 |

Ablation of hyper-parameter . In Eq. (9), we introduce the hyper-parameter for weighting between pixel loss and region loss. All experimental results in Tables 3 and 4 are reported for the same .

To show the sensitivity of our region rebalance method to different values, we conduct an ablation with ResNet-50 and Deeplabv3+ on ADE20K. Table 5 lists the experimental results, showing that the performance is not affected substantially by the value of within the range [0.1,0.3].

Ablation on Dice loss. Though some -based loss functions, e.g., Dice Loss [41], have been developed, we empirically observe that pixel cross-entropy is still necessary in training for high performance. An ablation on the Dice loss is conducted on ADEK with ResNet-50 and Deeplabv+, and the results are reported in Table 6. With just the Dice Loss itself, the model achieves only 1.14 mIoU. This huge performance degradation implies that the pixel cross-entropy is still a necessary part and the challenges in Sec. 3.1 will still exist when we conduct pixel rebalance.

| Method | (s.s.) |

|---|---|

| Baseline (cross-entropy) | 43.95 |

| Dice Loss | 1.14 |

| Dice Loss + cross-entropy | 44.12 |

| RR (Ours) | 45.02 |

5 Conclusion

In this paper, we investigate the imbalance phenomenon in semantic segmentation and identified two major challenges in conducting pixel rebalance, i.e., (i) misalignment between the training objective and the evaluation metric at inference, and (ii) correlations among pixels within the same object/stuff cause class frequency to be less effective for rebalancing. With our analysis, we propose to gather correlated pixels into regions and rebalance within the context of region classification. The proposed region rebalance method leads to strong improvements across different semantic segmentation methods, e.g., OCRNet, DeepLabv+, UperNet, and PSPNet. Experimental results on ADEK and the large-scale COCO-StuffK verify the effectiveness of our method.

References

- [1] Md Amirul Islam, Mrigank Rochan, Neil DB Bruce, and Yang Wang. Gated feedback refinement network for dense image labeling. In CVPR, 2017.

- [2] Vijay Badrinarayanan, Alex Kendall, and Roberto Cipolla. Segnet: A deep convolutional encoder-decoder architecture for image segmentation. IEEE TPAMI, 2017.

- [3] Hangbo Bao, Li Dong, and Furu Wei. Beit: Bert pre-training of image transformers. arXiv preprint arXiv:2106.08254, 2021.

- [4] Jacob Benesty, Jingdong Chen, Yiteng Huang, and Israel Cohen. Pearson correlation coefficient. In Noise reduction in speech processing. 2009.

- [5] Mateusz Buda, Atsuto Maki, and Maciej A Mazurowski. A systematic study of the class imbalance problem in convolutional neural networks. Neural Networks, 2018.

- [6] Mateusz Buda, Atsuto Maki, and Maciej A. Mazurowski. A systematic study of the class imbalance problem in convolutional neural networks. Neural Networks, 2018.

- [7] Jonathon Byrd and Zachary Lipton. What is the effect of importance weighting in deep learning? In ICML, 2019.

- [8] Holger Caesar, Jasper Uijlings, and Vittorio Ferrari. Coco-stuff: Thing and stuff classes in context. In CVPR, 2018.

- [9] Kaidi Cao, Colin Wei, Adrien Gaidon, Nikos Arechiga, and Tengyu Ma. Learning imbalanced datasets with label-distribution-aware margin loss. In NeurIPS, 2019.

- [10] Kaidi Cao, Colin Wei, Adrien Gaidon, Nikos Arechiga, and Tengyu Ma. Learning imbalanced datasets with label-distribution-aware margin loss. In NeurIPS, 2019.

- [11] Nitesh V. Chawla, Kevin W. Bowyer, Lawrence O. Hall, and W. Philip Kegelmeyer. SMOTE: Synthetic minority over-sampling technique. Journal of Artificial Intelligence Research, 2002.

- [12] Liang-Chieh Chen, George Papandreou, Iasonas Kokkinos, Kevin Murphy, and Alan L Yuille. Semantic image segmentation with deep convolutional nets and fully connected crfs. In ICLR, 2015.

- [13] Liang-Chieh Chen, George Papandreou, Iasonas Kokkinos, Kevin Murphy, and Alan L Yuille. Deeplab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected crfs. IEEE TPAMI, 2017.

- [14] Liang-Chieh Chen, George Papandreou, Florian Schroff, and Hartwig Adam. Rethinking atrous convolution for semantic image segmentation. arXiv preprint arXiv:1706.05587, 2017.

- [15] Liang-Chieh Chen, Yukun Zhu, George Papandreou, Florian Schroff, and Hartwig Adam. Encoder-decoder with atrous separable convolution for semantic image segmentation. In ECCV, 2018.

- [16] MMSegmentation Contributors. MMSegmentation: Openmmlab semantic segmentation toolbox and benchmark. https://github.com/open-mmlab/mmsegmentation, 2020.

- [17] Jiequan Cui, Shu Liu, Zhuotao Tian, Zhisheng Zhong, and Jiaya Jia. Reslt: Residual learning for long-tailed recognition. arXiv preprint arXiv:2101.10633, 2021.

- [18] Jiequan Cui, Zhisheng Zhong, Shu Liu, Bei Yu, and Jiaya Jia. Parametric contrastive learning. In ICCV, 2021.

- [19] Yin Cui, Menglin Jia, Tsung-Yi Lin, Yang Song, and Serge J. Belongie. Class-balanced loss based on effective number of samples. In CVPR, 2019.

- [20] Yin Cui, Menglin Jia, Tsung-Yi Lin, Yang Song, and Serge Belongie. Class-balanced loss based on effective number of samples. In CVPR, 2019.

- [21] Alexey Dosovitskiy, Lucas Beyer, Alexander Kolesnikov, Dirk Weissenborn, Xiaohua Zhai, Thomas Unterthiner, Mostafa Dehghani, Matthias Minderer, Georg Heigold, Sylvain Gelly, et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv preprint arXiv:2010.11929, 2020.

- [22] Agrim Gupta, Piotr Dollár, and Ross Girshick. LVIS: A dataset for large vocabulary instance segmentation. In CVPR, 2019.

- [23] Haibo He and Edwardo A Garcia. Learning from imbalanced data. IEEE TKDE, 2009.

- [24] Kaiming He, Georgia Gkioxari, Piotr Dollár, and Ross Girshick. Mask r-cnn. In ICCV, 2017.

- [25] Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Deep residual learning for image recognition. In CVPR, 2016.

- [26] Chen Huang, Yining Li, Chen Change Loy, and Xiaoou Tang. Learning deep representation for imbalanced classification. In CVPR, 2016.

- [27] Chen Huang, Yining Li, Change Loy Chen, and Xiaoou Tang. Deep imbalanced learning for face recognition and attribute prediction. IEEE TPAMI, 2019.

- [28] Chen Huang, Yining Li, Chen Change Loy, and Xiaoou Tang. Learning deep representation for imbalanced classification. In CVPR, 2016.

- [29] Muhammad Abdullah Jamal, Matthew Brown, Ming-Hsuan Yang, Liqiang Wang, and Boqing Gong. Rethinking class-balanced methods for long-tailed visual recognition from a domain adaptation perspective. In CVPR, 2020.

- [30] Nathalie Japkowicz and Shaju Stephen. The class imbalance problem: A systematic study. Intelligent Data Analysis, 2002.

- [31] Bingyi Kang, Saining Xie, Marcus Rohrbach, Zhicheng Yan, Albert Gordo, Jiashi Feng, and Yannis Kalantidis. Decoupling representation and classifier for long-tailed recognition. arXiv preprint arXiv:1910.09217, 2019.

- [32] Bingyi Kang, Saining Xie, Marcus Rohrbach, Zhicheng Yan, Albert Gordo, Jiashi Feng, and Yannis Kalantidis. Decoupling representation and classifier for long-tailed recognition. In ICLR, 2020.

- [33] Alex Krizhevsky, Ilya Sutskever, and Geoffrey E Hinton. ImageNet classification with deep convolutional neural networks. In NeurIPS, 2012.

- [34] Yu Li, Tao Wang, Bingyi Kang, Sheng Tang, Chunfeng Wang, Jintao Li, and Jiashi Feng. Overcoming classifier imbalance for long-tail object detection with balanced group softmax. In CVPR, 2020.

- [35] Guosheng Lin, Anton Milan, Chunhua Shen, and Ian Reid. Refinenet: Multi-path refinement networks for high-resolution semantic segmentation. In CVPR, 2017.

- [36] Tsung-Yi Lin, Piotr Dollár, Ross B. Girshick, Kaiming He, Bharath Hariharan, and Serge J. Belongie. Feature pyramid networks for object detection. In CVPR, 2017.

- [37] Shu Liu, Lu Qi, Haifang Qin, Jianping Shi, and Jiaya Jia. Path aggregation network for instance segmentation. In CVPR, 2018.

- [38] Ze Liu, Yutong Lin, Yue Cao, Han Hu, Yixuan Wei, Zheng Zhang, Stephen Lin, and Baining Guo. Swin transformer: Hierarchical vision transformer using shifted windows. In ICCV, 2021.

- [39] Ziwei Liu, Zhongqi Miao, Xiaohang Zhan, Jiayun Wang, Boqing Gong, and Stella X Yu. Large-scale long-tailed recognition in an open world. In CVPR, 2019.

- [40] Jonathan Long, Evan Shelhamer, and Trevor Darrell. Fully convolutional networks for semantic segmentation. In CVPR, 2015.

- [41] Fausto Milletari, Nassir Navab, and Seyed-Ahmad Ahmadi. V-net: Fully convolutional neural networks for volumetric medical image segmentation. In 3DV, 2016.

- [42] Tobias Pohlen, Alexander Hermans, Markus Mathias, and Bastian Leibe. Full-resolution residual networks for semantic segmentation in street scenes. In CVPR, 2017.

- [43] Jiawei Ren, Cunjun Yu, Shunan Sheng, Xiao Ma, Haiyu Zhao, Shuai Yi, and Hongsheng Li. Balanced meta-softmax for long-tailed visual recognition. In Hugo Larochelle, Marc’Aurelio Ranzato, Raia Hadsell, Maria-Florina Balcan, and Hsuan-Tien Lin, editors, NeurIPS, 2020.

- [44] Mengye Ren, Wenyuan Zeng, Bin Yang, and Raquel Urtasun. Learning to reweight examples for robust deep learning. In ICML, 2018.

- [45] Shaoqing Ren, Kaiming He, Ross Girshick, and Jian Sun. Faster r-cnn: Towards real-time object detection with region proposal networks. In NeurIPS, 2015.

- [46] Olaf Ronneberger, Philipp Fischer, and Thomas Brox. U-net: Convolutional networks for biomedical image segmentation. In MICCAI, 2015.

- [47] Li Shen, Zhouchen Lin, and Qingming Huang. Relay backpropagation for effective learning of deep convolutional neural networks. In ECCV, 2016.

- [48] Jun Shu, Qi Xie, Lixuan Yi, Qian Zhao, Sanping Zhou, Zongben Xu, and Deyu Meng. Meta-weight-net: Learning an explicit mapping for sample weighting. In NeurIPS, 2019.

- [49] Karen Simonyan and Andrew Zisserman. Very deep convolutional networks for large-scale image recognition. In ICLR, 2015.

- [50] Christian Szegedy, Wei Liu, Yangqing Jia, Pierre Sermanet, Scott Reed, Dragomir Anguelov, Dumitru Erhan, Vincent Vanhoucke, and Andrew Rabinovich. Going deeper with convolutions. In CVPR, 2015.

- [51] Jingru Tan, Changbao Wang, Buyu Li, Quanquan Li, Wanli Ouyang, Changqing Yin, and Junjie Yan. Equalization loss for long-tailed object recognition. In CVPR, 2020.

- [52] Kaihua Tang, Jianqiang Huang, and Hanwang Zhang. Long-tailed classification by keeping the good and removing the bad momentum causal effect. In Hugo Larochelle, Marc’Aurelio Ranzato, Raia Hadsell, Maria-Florina Balcan, and Hsuan-Tien Lin, editors, NeurIPS, 2020.

- [53] Kaihua Tang, Jianqiang Huang, and Hanwang Zhang. Long-tailed classification by keeping the good and removing the bad momentum causal effect. In NeurIPS, 2020.

- [54] Hugo Touvron, Matthieu Cord, Matthijs Douze, Francisco Massa, Alexandre Sablayrolles, and Hervé Jégou. Training data-efficient image transformers & distillation through attention. In ICML, 2021.

- [55] Jingdong Wang, Ke Sun, Tianheng Cheng, Borui Jiang, Chaorui Deng, Yang Zhao, Dong Liu, Yadong Mu, Mingkui Tan, Xinggang Wang, et al. Deep high-resolution representation learning for visual recognition. IEEE TPAMI, 2020.

- [56] Jiaqi Wang, Wenwei Zhang, Yuhang Zang, Yuhang Cao, Jiangmiao Pang, Tao Gong, Kai Chen, Ziwei Liu, Chen Change Loy, and Dahua Lin. Seesaw loss for long-tailed instance segmentation. In CVPR, 2021.

- [57] Xudong Wang, Long Lian, Zhongqi Miao, Ziwei Liu, and Stella X. Yu. Long-tailed recognition by routing diverse distribution-aware experts. In LCLR, 2021.

- [58] Yu-Xiong Wang, Deva Ramanan, and Martial Hebert. Learning to model the tail. In NeurIPS, 2017.

- [59] Tete Xiao, Yingcheng Liu, Bolei Zhou, Yuning Jiang, and Jian Sun. Unified perceptual parsing for scene understanding. In ECCV, 2018.

- [60] Fisher Yu and Vladlen Koltun. Multi-scale context aggregation by dilated convolutions. In ICLR, 2016.

- [61] Yuhui Yuan, Xilin Chen, and Jingdong Wang. Object-contextual representations for semantic segmentation. In ECCV, 2020.

- [62] Hengshuang Zhao, Jianping Shi, Xiaojuan Qi, Xiaogang Wang, and Jiaya Jia. Pyramid scene parsing network. In CVPR, 2017.

- [63] Q Zhong, C Li, Y Zhang, H Sun, S Yang, D Xie, and S Pu. Towards good practices for recognition & detection. In CVPR workshops, 2016.

- [64] Zhisheng Zhong, Jiequan Cui, Shu Liu, and Jiaya Jia. Improving calibration for long-tailed recognition. In CVPR, 2021.

- [65] Boyan Zhou, Quan Cui, Xiu-Shen Wei, and Zhao-Min Chen. Bbn: Bilateral-branch network with cumulative learning for long-tailed visual recognition. arXiv preprint arXiv:1912.02413, 2019.

- [66] Bolei Zhou, Hang Zhao, Xavier Puig, Sanja Fidler, Adela Barriuso, and Antonio Torralba. Scene parsing through ade20k dataset. In CVPR, 2017.

Region Rebalance for Long-Tailed Semantic Segmentation

Supplementary Material

Appendix A Experiments on COCO-StuffK for Semantic Segmentation

COCO-StuffK, which includes K images from the COCO training set, is a subset of COCO-StuffK. The training and validation sets consist of K and K images, respectively. It also covers classes. The experimental results are reported in Table 7. Employing our region rebalance module in training yields and improvements separately when compared with the baselines.

| Method | Backbone | (s.s.) | (m.s.) |

|---|---|---|---|

| DeepLabv+ | ResNet- | ||

| DeepLabv+-RR | ResNet- | () | |

| DeepLabv+ | ResNet- | ||

| DeepLabv+-RR | ResNet- | () |

Appendix B Proof of Remark 1

Before the pixel rebalance, for one low-frequency class , we suppose its and are

| (10) | |||||

| (11) |

With the pixel rebalance, we expect the is improved to . Then the class and become

| (12) | |||||

| (13) |

Taking the reciprocal of and , we obtain

| (14) | |||||

| (15) |

To guarantee , it should satisfy:

| (16) |

where is the pixel frequency of class .

Appendix C How Does Make Effects on and ?

As analyzed in Sec. 3.1, we examine the connections between and , i.e.,

| (17) |

To understand the role of , we plot the curves with respect to and with various constant value shown in Figure 8. We observe that, when is a smaller value around 0.0, e.g., , optimizing is equal to optimizing (Figure 8). However, demonstrated by Figure 8, when is a larger value, e.g., , optimizing will have little effect on , especially when has already achieved a high value, e.g., . This is just the reason that is improved while even decrease for low-frequency classes compared to the baseline in Sec. 3.1.1.

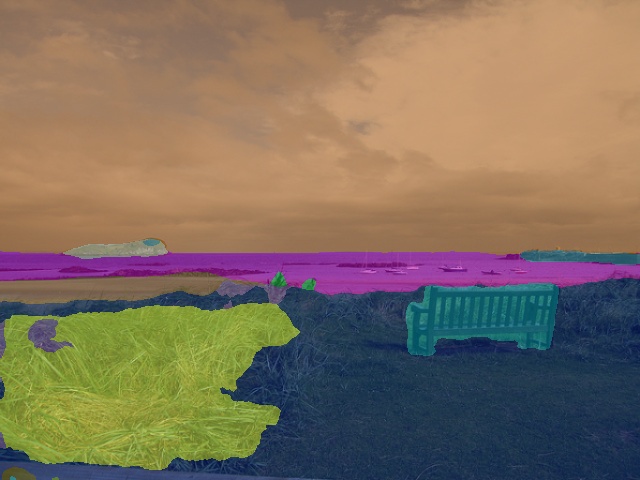

Appendix D Visual Evidence for Region Rebalance vs. Pixel Rebalance

As demonstrated in Sec. 3.1.1, pixel rebalance improves meanwhile increases significantly for low-frequency classes. As a result, can not benefit from the pixel rebalance. In contrast, the proposed region rebalance is more aligned with the metric. This phenomenon can be understood by considering the situation that two very different objects or stuff can still have similar local pixel features. Conducting pixel rebalance make it easy to let these similar pixels of other classes to be . Taking it into consideration that objects/stuff are more distinguishable at the region level because of the global features, predicting other class objects/stuff as will be much harder. We give visual evidence to our intuitive reasoning in Figure 9.

As shown in highlighted regions with rectangle, pixels having similar texture with other object/stuff or around objects/stuff boundary are more likely to be with pixel rebalance. Region rebalance relieves this issue by using global region features.

|

(a) Input

|

|

|

|

|

|

|---|---|---|---|---|---|

|

(b) GT

|

|

|

|

|

|

|

(c) Pixel Rebalance

|

|

|

|

|

|

|

(d) Region Rebalance

|

|

|

|

|

|

Appendix E Quantitative Visualization Comparisons on ADEK and COCO-StuffK

In this section, we demonstrate the advantages of region rebalance with quantitative visualizations on ADEK and COCOStuffK shwon in Figures 10, 11 and 12.

|

(a) Input

|

|

|

|

|

|

|---|---|---|---|---|---|

|

(b) GT

|

|

|

|

|

|

|

(c) Baseline

|

|

|

|

|

|

|

(d) Region Rebalance

|

|

|

|

|

|

|

(a) Input

|

|

|

|

|

|

|---|---|---|---|---|---|

|

(b) GT

|

|

|

|

|

|

|

(c) Baseline

|

|

|

|

|

|

|

(d) Region Rebalance

|

|

|

|

|

|

|

(a) Input

|

|

|

|

|

|

|---|---|---|---|---|---|

|

(b) GT

|

|

|

|

|

|

|

(c) Baseline

|

|

|

|

|

|

|

(d) Region Rebalance

|

|

|

|

|

|