Reflectance-Oriented Probabilistic Equalization for Image Enhancement

Abstract

Despite recent advances in image enhancement, it remains difficult for existing approaches to adaptively improve the brightness and contrast for both low-light and normal-light images. To solve this problem, we propose a novel 2D histogram equalization approach. It assumes intensity occurrence and co-occurrence to be dependent on each other and derives the distribution of intensity occurrence (1D histogram) by marginalizing over the distribution of intensity co-occurrence (2D histogram). This scheme improves global contrast more effectively and reduces noise amplification. The 2D histogram is defined by incorporating the local pixel value differences in image reflectance into the density estimation to alleviate the adverse effects of dark lighting conditions. Over 500 images were used for evaluation, demonstrating the superiority of our approach over existing studies. It can sufficiently improve the brightness of low-light images while avoiding over-enhancement in normal-light images.

Index Terms — 2D histogram equalization, reflectance, Retinex model, contrast enhancement, image enhancement

1 Introduction

Image enhancement aims to improve image contrast and reveal hidden image details. Digital imaging devices have developed rapidly. As a result, both the number of available images and the demand for image enhancement have increased significantly. Commercial raster graphics editors require image editing expertise or considerable manual effort to produce satisfactory results. It is therefore essential to develop an automated image enhancement technique that adapts to different input lighting conditions. Existing approaches to image enhancement can be classified into model-based and learning-based ones. We focus on model-based approaches, as they are more interpretable and do not need labeled training data.

In model-based approaches, histogram equalization (HE) has received the most attention. It derives an intensity mapping function that maximizes the entropy of the distribution of output intensities. However, HE stretches the contrast between intensities with large populations over a wider range, even when those intensities are not semantically important. This issue has been addressed by incorporating spatial information into density estimation [1, 2, 3, 4, 5, 6, 7]. For example, 2DHE [2, 3] equalizes the 2D histogram of intensity co-occurrence so that the contrast between frequently co-occurring intensities is enhanced to a greater extent. CACHE [6] incorporates image gradients into histogram construction to avoid excessive enhancement of trivial background. However, such spatial information is not discriminative enough, especially in low-light image areas. The equalization schemes of [2, 3, 6] also overemphasize the importance of frequently co-occurring intensities. This tends to cause precipitous brightness fluctuation in very dark or very bright image areas.

Another direction [8, 9, 10, 11, 12, 13, 14, 15, 16, 17] is based on the Retinex model. This model treats an image as the pixel-wise product of illumination and reflectance components, which capture global brightness and sharp image details, respectively. Some studies [8, 9, 10, 11, 12] assumed the reflectance to be the desired enhancement output and obtained it by estimating and removing the illumination. However, this strategy sometimes leads to excessively enhanced brightness. Among other studies, LIME [15] assumes that the gamma correction of the reflectance is the ideal form of low-light image enhancement. In NPE [13] and NPIE [16], the illumination is enhanced with HE and recombined with the reflectance to reconstruct the enhanced image. Ren et al. [17] found that the illumination can be leveraged as the exposure ratio of a camera response function (CRF). On this basis, they proposed a CRF-based image enhancement approach called LECARM. These approaches are effective at revealing details in dark regions. However, it is not easy for them to find a solution suited to both low-light and normal-light images: they tend to overly amplify the brightness and saturation of normal-light images.

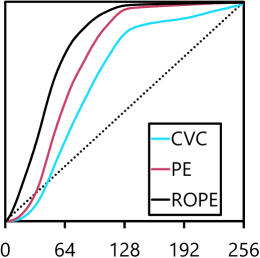

Here, we propose a novel 2DHE approach known as reflectance-oriented probabilistic equalization (ROPE), which allows for adaptive regulation of global brightness. ROPE assumes intensity occurrence and co-occurrence to be dependent and derives the distribution of intensity occurrence (1D histogram) by marginalizing over the distribution of intensity co-occurrence (2D histogram). This scheme builds a novel bridge between 1D and 2D histograms. Compared to related approaches such as CVC [2] (2DHE) and CACHE [6, 7] (1DHE), ROPE provides more adequate contrast enhancement and less noise amplification. Inspired by RG-CACHE [7], we define a novel 2D histogram by incorporating the local pixel value differences in image reflectance into the density estimation to alleviate the adverse effects of dark lighting conditions. Experiments show that ROPE outperforms state-of-the-art image enhancement approaches from both qualitative and quantitative perspectives.

2 Proposed Approach

2.1 Preliminaries

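Given a color image $\mathbf{C}$, its grayscale image $\mathbf{I}$ is defined as the maximum of its RGB components, which equals its value channel in HSV space [18]. Let $\mathbf{O}$ be the enhanced image of $\mathbf{I}$ in ROPE. Let $\circ$ and $\oslash$ denote element-wise multiplication and division, respectively. The final output $\hat{\mathbf{C}}$ is computed as

$$\hat{\mathbf{C}} = \mathbf{C} \circ \left(\mathbf{O} \oslash \mathbf{I}\right), \tag{1}$$

where the ratio $\mathbf{O} \oslash \mathbf{I}$ is applied to each of the three color channels.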
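Eq. 1 can be sketched in a few lines of NumPy. This sketch assumes 8-bit RGB input stored as an H × W × 3 array. The function name `recolor` and the constant `eps`, which guards against division by zero at black pixels, are our own illustrative choices and not part of the original method.

```python
import numpy as np


def recolor(color: np.ndarray, enhanced_gray: np.ndarray, eps: float = 1e-6) -> np.ndarray:
    """Eq. 1: scale every RGB channel of C by the per-pixel ratio O / I.

    color:         H x W x 3 array (the color image C), values in [0, 255].
    enhanced_gray: H x W array (the enhanced grayscale image O).
    eps:           hypothetical guard against division by zero at black pixels.
    """
    gray = color.max(axis=2).astype(np.float64)                        # I = max(R, G, B)
    ratio = enhanced_gray.astype(np.float64) / np.maximum(gray, eps)   # O ⊘ I
    out = color.astype(np.float64) * ratio[..., None]                  # C ∘ (O ⊘ I), per channel
    return np.clip(out, 0.0, 255.0)


# Example: raise the value channel of one orange pixel from 100 to 200.
C = np.array([[[100, 50, 20]]], dtype=np.uint8)
O = np.array([[200.0]])
result = recolor(C, O)
```

Because all three channels are scaled by the same factor, the ratios between R, G, and B are kept, so hue and HSV saturation are preserved. Only the value channel changes.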

Let $\mathbf{I} = \{I_p\}$ and $\mathbf{O} = \{O_p\}$, where $I_p$ and $O_p$ are the intensities of pixel $p$ in the input and output, respectively. Let $K$ be the total number of possible intensity values (typically 256). Our goal is to find an intensity mapping function of the form $O_p = f(I_p)$ to produce the enhanced image.

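Let $x_k$ be the event $I_p = k$ (occurrence), where $p$ ranges over the image pixels and $k \in \{0, \dots, K-1\}$ is an intensity value. Let $P(x_k)$ be its probability. The 1D histogram of $\mathbf{I}$ can then be expressed as $\{P(x_k)\}_{k=0}^{K-1}$. Let $F(k) = \sum_{k'=0}^{k} P(x_{k'})$ be the cumulative distribution function of $P(x_k)$. In HE, the intensity mapping function is given by $f(k) = (K-1)\,F(k)$. The problem is how to properly define $P(x_k)$.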
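The HE mapping described above can be sketched as follows. This sketch assumes the mapping takes the form $f(k) = (K-1)F(k)$ and that the real-valued result is rounded to build an 8-bit lookup table. The rounding step and the function names are our assumptions.

```python
import numpy as np


def he_mapping(p: np.ndarray) -> np.ndarray:
    """Return f(k) = (K - 1) F(k) for a (possibly unnormalized) 1D histogram P(x_k)."""
    p = np.asarray(p, dtype=np.float64)
    p = p / p.sum()                # normalize so the probabilities sum to one
    cdf = np.cumsum(p)             # F(k) = sum of P(x_k') for k' <= k
    return (p.size - 1) * cdf      # real-valued intensity mapping f(k)


def equalize(gray: np.ndarray, p: np.ndarray) -> np.ndarray:
    """Apply f to an 8-bit image through a rounded lookup table (assumes K = 256)."""
    lut = np.round(he_mapping(p)).astype(np.uint8)
    return lut[gray]
```

Classic HE is obtained by passing `np.bincount(gray.ravel(), minlength=256)` as `p`. In that case the plain 1D histogram of $\mathbf{I}$ is used. The remainder of the paper is about replacing this histogram with a better-defined $P(x_k)$.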

2.2 Modeling of 1D Histogram as Marginal Probability

In ROPE, we define $P(x_k)$ based on the 2D histogram of $\mathbf{I}$. Let $y_{ij}$ be the event $I_p = i$ and $I_q = j$ (co-occurrence), where $q \in \mathcal{N}_p$ and $\mathcal{N}_p$ is the set of coordinates in the local window centered on pixel $p$. Let $P(y_{ij})$ be its probability. The 2D histogram can then be written as $\{P(y_{ij})\}$ with $0 \le i \le K-1$ and $0 \le j \le K-1$. The construction of this 2D histogram is discussed in Section 2.3.

In CVC [2], the 2D histogram value $P(y_{ij})$ of two intensities $i$ and $j$ is voted into the bin of the larger intensity. In other words, it is added to the 1D histogram value $P(x_{\max(i,j)})$, as illustrated in Fig. 1a. Similarly, CACHE [6, 7] votes $P(y_{ij})$ into the bins of both intensities (Fig. 1b). These schemes overemphasize $P(x_i)$ and/or $P(x_j)$. They therefore tend to cause precipitous brightness fluctuation in very dark or very bright image areas, as shown in Figs. 2b and 2e. Instead, we aim to distribute $P(y_{ij})$ over all intensities $k$ between $i$ and $j$ for more adequate contrast enhancement (Fig. 1c as well as Figs. 2c and 2f).
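The following toy sketch shows the difference between the three voting schemes of Fig. 1 on a K × K array `h2d`. The functions are simplified, hypothetical illustrations, not the exact implementations of [2] or [6, 7]. The "spread" variant distributes $P(y_{ij})$ evenly over the interval, which corresponds to ROPE with all significance factors equal.

```python
import numpy as np


def vote_max(h2d: np.ndarray) -> np.ndarray:
    """CVC-style (simplified): credit P(y_ij) to the bin of max(i, j) only."""
    K = h2d.shape[0]
    h1d = np.zeros(K)
    for i in range(K):
        for j in range(K):
            h1d[max(i, j)] += h2d[i, j]
    return h1d / h1d.sum()


def vote_both(h2d: np.ndarray) -> np.ndarray:
    """CACHE-style (simplified): credit P(y_ij) to both bin i and bin j."""
    K = h2d.shape[0]
    h1d = np.zeros(K)
    for i in range(K):
        for j in range(K):
            h1d[i] += h2d[i, j]
            h1d[j] += h2d[i, j]
    return h1d / h1d.sum()


def vote_spread(h2d: np.ndarray) -> np.ndarray:
    """Spread P(y_ij) evenly over every bin k with min(i, j) <= k <= max(i, j)."""
    K = h2d.shape[0]
    h1d = np.zeros(K)
    for i in range(K):
        for j in range(K):
            lo, hi = min(i, j), max(i, j)
            h1d[lo:hi + 1] += h2d[i, j] / (hi - lo + 1)
    return h1d / h1d.sum()


# Toy case: intensities 0 and 3 co-occur, nothing else does (K = 4).
h = np.zeros((4, 4))
h[0, 3] = h[3, 0] = 0.5
```

Consider the toy case under HE with $f(k) = (K-1)F(k)$. With `vote_max`, intensities 0 to 2 all map to 0 and then jump to 3. With `vote_spread`, the mapping rises evenly, which mirrors the precipitous-versus-gradual behavior described above.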

Our reasoning is as follows. Wu et al. [6, 7] have shown that in HE, for any $k$, the degree of contrast enhancement (CE) between $k-1$ and $k$ is ultimately proportional to $P(x_k)$. Thus, for any $i < j$, the degree of CE between $i$ and $j$ is proportional to $\sum_{k=i+1}^{j} P(x_k)$. Meanwhile, related studies [2, 3] suggested that in 2DHE, $P(y_{ij})$ needs to be defined so that it is positively correlated with the requirement of CE between $i$ and $j$. Together, these two insights imply that $P(x_k)$ should be modeled so that $\sum_{k=i+1}^{j} P(x_k)$ increases with $P(y_{ij})$. This confirms our motivation above: $P(y_{ij})$ should not be delegated to $P(x_i)$ and/or $P(x_j)$ alone, but should be distributed over all $k$ between $i$ and $j$.
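The first insight can be checked in one line. Assume the HE mapping takes the form $f(k) = (K-1)F(k)$, with $F$ the cumulative distribution of $P(x_k)$ (our reading of Section 2.1). Then the output gap between two input intensities $i < j$ follows directly from the definition of $F$:

$$
f(j) - f(i) = (K-1)\bigl(F(j) - F(i)\bigr) = (K-1)\sum_{k=i+1}^{j} P(x_k),
\qquad\text{in particular}\qquad
f(k) - f(k-1) = (K-1)\,P(x_k).
$$

Probability mass placed on any bin $k$ with $i < k \le j$ therefore widens the output gap between $i$ and $j$. For this reason, spreading $P(y_{ij})$ over the interval, rather than voting it into one or both endpoints, enlarges the contrast between $i$ and $j$ as a whole.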

Now, we build a bridge between $P(x_k)$ and $P(y_{ij})$. We assume that $x_k$ and $y_{ij}$ are dependent on each other. If we treat $x_k$ as a marginal event, its distribution can be obtained by marginalizing over $y_{ij}$:

$$P(x_k) = \sum_{i=0}^{K-1}\sum_{j=0}^{K-1} P(x_k \mid y_{ij})\,P(y_{ij}), \tag{2}$$

where $P(x_k \mid y_{ij})$ is the conditional probability of $x_k$ given $y_{ij}$. This formula implies that the 1D histogram value $P(x_k)$ can be modeled as a weighted sum of all 2D histogram values $P(y_{ij})$, with the conditional probabilities $P(x_k \mid y_{ij})$ acting as weights.

Recall that $P(x_k)$ must be determined so that $\sum_{k=i+1}^{j} P(x_k)$ increases with $P(y_{ij})$. It is therefore reasonable to make the following assumption. The events $x_k$ and $y_{ij}$ are dependent on each other, i.e., $P(x_k \mid y_{ij}) > 0$, if and only if $\min(i,j) \le k \le \max(i,j)$. Otherwise, $x_k$ and $y_{ij}$ are mutually exclusive and $P(x_k \mid y_{ij}) = 0$. In view of this, we introduce a significance factor $s_k$ for all $k$ and define the conditional probabilities by

$$
P(x_k \mid y_{ij}) =
\begin{cases}
\dfrac{s_k}{\sum_{m=\min(i,j)}^{\max(i,j)} s_m}, & \min(i,j) \le k \le \max(i,j),\\[2ex]
0, & \text{otherwise}.
\end{cases}
\tag{3}
$$

An intensity value $k$ that requires a greater contrast between $k-1$ and $k$ should have a greater value of $s_k$, and vice versa. However, we do not know in advance which intensity values are more important than others. In this study, we therefore determine $s_k$ iteratively. Let $t$ be the index of the iteration and $T$ be the maximum number of iterations. When $t = 1$, we initialize $s_k = 1$ for all $k$ and compute $P(x_k)$ using Eq. 2. For all $t \in (1, T]$, we update $s_k$ with $P(x_k)$ and recalculate $P(x_k)$ using the updated significance factors. In this way, intensity values with a larger probability $P(x_k)$ tend to have larger significance factors. They therefore tend to receive a larger share of the 2D histogram values $P(y_{ij})$. Empirically, we found that two iterations are sufficient for ROPE to achieve satisfactory performance.
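The iterative scheme can be sketched as a direct (cubic-time) evaluation of Eqs. 2 and 3. This sketch assumes the 2D histogram is given as a K × K array `h2d` of $P(y_{ij})$. The function names are hypothetical. We read "$T$ iterations" as one initialization pass with $s_k = 1$ followed by $T-1$ updates $s_k \leftarrow P(x_k)$.

```python
import numpy as np


def marginalize(h2d: np.ndarray, s: np.ndarray) -> np.ndarray:
    """Eq. 2 with the conditional probabilities of Eq. 3.

    Each P(y_ij) is shared among the bins k in [min(i, j), max(i, j)]
    in proportion to the significance factors s_k.
    """
    K = h2d.shape[0]
    p = np.zeros(K)
    for i in range(K):
        for j in range(K):
            if h2d[i, j] == 0:
                continue
            lo, hi = min(i, j), max(i, j)
            w = s[lo:hi + 1]
            total = w.sum()
            if total > 0:                  # guard: skip intervals whose factors are all zero
                p[lo:hi + 1] += h2d[i, j] * w / total
    return p / p.sum()


def probabilistic_equalization(h2d: np.ndarray, n_iter: int = 2) -> np.ndarray:
    """Iterate Eqs. 2-3; n_iter plays the role of T (our reading of the counting)."""
    s = np.ones(h2d.shape[0])              # t = 1: uniform significance factors
    p = marginalize(h2d, s)
    for _ in range(n_iter - 1):            # t = 2, ..., T: s_k <- P(x_k)
        s = p
        p = marginalize(h2d, s)
    return p
```

Take a toy histogram in which intensity 0 co-occurs with 1 and with 3 equally often. The first pass gives $[0.375, 0.375, 0.125, 0.125]$. The second pass shifts more of the $(0, 3)$ mass toward the already-frequent bins, giving $[0.4375, 0.4375, 0.0625, 0.0625]$.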

2.3 Embedding Reflectance in 2D Histogram

Next, we describe how to construct the 2D histogram of the grayscale image $\mathbf{I}$. In CVC [2], the histogram value $P(y_{ij})$ is the co-occurrence frequency of intensity $j$ in the local window centered on a pixel of intensity $i$, further weighted by a function of the intensity difference between $i$ and $j$. RG-CACHE [7] directly constructs a 1D histogram by incorporating the gradient of image reflectance into the density estimation. In this study, we borrow the idea of RG-CACHE to mitigate the negative effect of dark lighting conditions. Unlike RG-CACHE, however, we embed the image reflectance into a 2D histogram instead of a 1D histogram.

Let $\mathbf{L}$ and $\mathbf{R}$ be the illumination and reflectance components of $\mathbf{I}$, respectively. We first use the relative total variation (RTV) approach [19, 15] as an edge-preserving filter [20, 21, 22, 23, 24, 25] to estimate $\mathbf{L}$. We then adopt a modified Retinex model for the formation of $\mathbf{I}$: $\mathbf{I} = \mathbf{L} \circ e^{\mathbf{R}}$, where $e$ is Euler's number and the exponential is taken element-wise. The reflectance is therefore defined as

$$\mathbf{R} = \ln \mathbf{I} - \ln \mathbf{L}. \tag{4}$$

Eq. 4 calculates $\mathbf{R}$ in the logarithmic domain. Because logarithmic scaling magnifies the differences between small quantities, it reveals much richer objectness information hidden in dark areas, as shown in Figs. 3a and 3f.
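The log-domain reflectance can be sketched with a hypothetical stand-in for the RTV filter [19, 15]. Here a plain box filter is used, which is not edge-preserving. The sketch also adds an offset `eps` inside the logarithms so that zero intensities stay finite. Both choices are our assumptions and not the method described in the text.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def box_illumination(gray: np.ndarray, radius: int = 7) -> np.ndarray:
    """Hypothetical stand-in for RTV: a plain box filter (NOT edge-preserving)."""
    padded = np.pad(gray.astype(np.float64), radius, mode="edge")
    size = 2 * radius + 1
    windows = sliding_window_view(padded, (size, size))   # shape H x W x size x size
    return windows.mean(axis=(-2, -1))


def log_reflectance(gray: np.ndarray, illum: np.ndarray, eps: float = 1.0) -> np.ndarray:
    """Eq. 4 computed element-wise; eps (our assumption) keeps ln() finite at zero."""
    return np.log(gray.astype(np.float64) + eps) - np.log(illum + eps)
```

The logarithm is what makes dark regions informative. A step from 2 to 4 under illumination 3 changes the reflectance by about 0.51. The same absolute step from 200 to 202 under illumination 201 changes it by only about 0.01.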

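Let $\mathbf{R} = \{R_p\}$, where $R_p$ is the reflectance value of pixel $p$. We calculate the 2D histogram values by

$$P(y_{ij}) = \frac{1}{Z}\sum_{p}\sum_{q \in \mathcal{N}_p} \left|R_p - R_q\right|\,\delta(I_p, i)\,\delta(I_q, j), \tag{5}$$

where $\mathcal{N}_p$ is a window centered on $p$ as in CVC, $\delta(\cdot,\cdot)$ is the Kronecker delta, and $Z$ is a normalizing constant such that $\sum_{i}\sum_{j} P(y_{ij}) = 1$. In this study, it is assumed that … and … for all ….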

Recall that $P(y_{ij})$ needs to be positively correlated with the requirement of CE between $i$ and $j$ (Section 2.2). Eq. 5 is designed to satisfy this condition. The 2D histogram values capture the local pixel value differences in reflectance and are therefore sensitive to the presence of meaningful objects hidden in the dark. As shown in Fig. 3f, most large differences in reflectance occur in the foreground objects, e.g., the plants in the center and on the right and the ColorChecker. These objects clearly require greater brightness and contrast (high CE requirement) than the background. Conversely, the reflectance of the background, where contrast is less important (low CE requirement), is rather smooth. Once the 2D histogram is constructed, it is substituted into Eq. 2 to iteratively calculate the 1D histogram. The input image is then enhanced by HE as described in Section 2.1.
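A vectorized sketch of Eq. 5 follows. It assumes a square window of hypothetical half-width `radius`, truncation of the window at the image border, and normalization of the result so that the bins sum to one. None of these details is specified in the text. The reflectance array could come from Eq. 4.

```python
import numpy as np


def reflectance_2d_histogram(gray: np.ndarray, refl: np.ndarray,
                             radius: int = 1, K: int = 256) -> np.ndarray:
    """Eq. 5 (as reconstructed): add |R_p - R_q| to bin (I_p, I_q) for every q in N_p.

    gray:   H x W integer image I with values in [0, K - 1].
    refl:   H x W reflectance R (e.g., from Eq. 4).
    radius: hypothetical half-width of the square window N_p (size 2 * radius + 1).
    """
    H, W = gray.shape
    g = gray.astype(np.int64)
    h2d = np.zeros((K, K))
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            if (dy == 0 and dx == 0) or abs(dy) >= H or abs(dx) >= W:
                continue  # skip p itself (weight 0 anyway) and offsets leaving the image
            # Pixels p whose neighbor q = p + (dy, dx) lies inside the image.
            py = slice(max(0, -dy), H - max(0, dy))
            px = slice(max(0, -dx), W - max(0, dx))
            qy = slice(max(0, dy), H - max(0, -dy))
            qx = slice(max(0, dx), W - max(0, -dx))
            weight = np.abs(refl[py, px] - refl[qy, qx])
            # Unbuffered accumulation: repeated (I_p, I_q) pairs are all counted.
            np.add.at(h2d, (g[py, px].ravel(), g[qy, qx].ravel()), weight.ravel())
    total = h2d.sum()
    return h2d / total if total > 0 else h2d  # normalize so the bins sum to one
```

Because every offset and its opposite are both visited, the result is symmetric in $i$ and $j$. A region with flat reflectance contributes nothing, however many pixel pairs it contains.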

Table 1. Statistics averaged over the 500 test images of BSDS500. Higher is better for DE, EME, PD, and PCQI; lower is better for LOE.

| Approach | DE | EME | PD | PCQI | LOE |
| --- | --- | --- | --- | --- | --- |
| No Enhancement | 7.17 | 15.7 | 27.9 | 1.00 | 0 |
| LIME [15] | 7.08 | 13.0 | 27.6 | 0.88 | 156.5 |
| NPIE [16] | 7.33 | 17.4 | 27.8 | 0.99 | 98.9 |
| LECARM [17] | 7.11 | 12.2 | 25.0 | 0.90 | 299.9 |
| KIND [26] | 7.02 | 11.7 | 22.5 | 0.87 | 155.4 |
| CVC [2] | 7.49 | 23.5 | 32.3 | 1.06 | 0 |
| RG-CACHE [7] | 7.64 | 26.0 | 37.6 | 1.02 | 0 |
| ROPE | 7.62 | 32.3 | 40.1 | 1.04 | 0 |

Fig. 3a shows an example input image $\mathbf{I}$. Figs. 3b–3e show the 2D/1D histograms and the intensity mapping functions obtained using CVC, PE, and ROPE. Here, PE denotes the approach proposed in Section 2.2 combined with the 2D histogram of CVC. Compared to CVC, ROPE places more emphasis on darker pixels, especially those of important objects, thanks to the incorporation of reflectance. As shown in Fig. 3g, CVC made no significant changes to the input, whereas PE improved the visibility of image details, demonstrating the effectiveness of Eq. 2. By exploiting the reflectance, ROPE further boosted brightness and contrast, yielding the most satisfying enhancement of the three.

3 Experiments

In Section 2.3, we compared ROPE to CVC [2] and RG-CACHE [7]. In this section, we mainly compare ROPE with four state-of-the-art approaches: LIME [15], NPIE [16], LECARM [17], and KIND [26]. The first three are based on the Retinex model; the last one is based on deep learning. All these approaches were evaluated using 578 images from four datasets: LIME [15], USC-SIPI [27], BSDS500 [28], and VONIKAKIS [29]. The size of the local window used in ROPE was set to , and the maximum number of iterations was set to two.

3.1 Qualitative Assessment

The enhanced images obtained with the compared approaches are shown in Fig. 4. KIND uses CNNs for image enhancement. In a broader sense, however, it is also based on the Retinex model, and it thus performs similarly to LIME, NPIE, and LECARM. The four approaches share two disadvantages: 1) a tendency to over-amplify brightness (first row) and 2) excessive saturation when color distortion was previously hidden in the dark areas of the input (second row). In comparison, ROPE does not suffer from these problems. The previous approaches excel at enhancing low-light images, but there are exceptions, as shown in the third row. In this example, ROPE provided the most pleasant brightness, especially for the flowers in the center. These examples illustrate the greater adaptability and consistency of ROPE. Our approach improves brightness sufficiently for dark images while avoiding excessive enhancement of normal-light images.

3.2 Quantitative Assessment

We objectively evaluated the image enhancement approaches using five metrics: discrete entropy (DE), EME, PD, and PCQI for contrast enhancement, and LOE for naturalness. DE measures the amount of information in an image. EME [31] measures the average local contrast in an image. PD [32] measures the average intensity difference over all pixel pairs in an image. PCQI [33] measures the distortions of contrast strength and structure between the input and output. LOE [13] measures the difference in lightness order between the input and enhanced images, where the lightness order is the relative order of the intensity values of two pixels. For DE, EME, PD, and PCQI, higher values indicate better quality; for LOE, lower values are better.
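As a concrete reference for the first metric, the following sketch assumes that DE denotes the usual Shannon entropy, in bits, of the intensity histogram of an 8-bit image. The function name is hypothetical. For $K = 256$ the maximum possible value is $\log_2 256 = 8$ bits.

```python
import numpy as np


def discrete_entropy(gray: np.ndarray, K: int = 256) -> float:
    """Shannon entropy (bits) of the intensity histogram of a non-negative integer image."""
    hist = np.bincount(gray.ravel(), minlength=K).astype(np.float64)
    p = hist / hist.sum()
    p = p[p > 0]                       # empty bins contribute 0 (0 * log 0 := 0)
    return float(-(p * np.log2(p)).sum())
```

A constant image scores 0. An image whose pixels are spread evenly over four intensities scores exactly 2 bits.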

Table 1 shows the statistics averaged over the 500 test images of BSDS500. Let us first focus on the contrast enhancement metrics. CVC, RG-CACHE, and ROPE are based on HE, so they could maximize the range of intensity values and therefore achieved the highest scores. ROPE showed excellent contrast improvement capabilities, taking first place in EME and PD and second place in DE and PCQI. In comparison, LIME, LECARM, and KIND scored below the unenhanced input on all four contrast metrics. NPIE improved only marginally, and only on DE and EME.

In terms of image naturalness, all the HE-based approaches obtained the best possible LOE score of 0. These approaches impose an inherent monotonicity constraint on the intensity mapping function $f$, so contrast enhancement does not change the order of intensity values among pixels. In comparison, the approaches based on the Retinex model have poorer scores. They alter or eliminate the illumination component of the image, which results in large variations in the lightness order.

Computational Complexity. Consider the processing of a grayscale image with $N$ pixels and $K$ possible intensity values. The complexity of the reflectance estimation based on RTV [19, 15] is $O(N \log N)$, the same as in LIME. The complexity of the 2D histogram construction (Eq. 5) is $O(N|\mathcal{N}_p|)$, where $|\mathcal{N}_p|$ is the number of pixels in the local window. The 1D histogram construction (Eq. 2) requires $O(K^3)$ per iteration. Although this appears computationally intensive, the processing time can be greatly reduced by exploiting convolutional operations. Finally, applying the intensity mapping function $f$ to $\mathbf{I}$ takes $O(N)$. For a color image, the complexities are the same as those described above, since ROPE is applied only to its intensity channel (Section 2.1).
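The cubic cost of Eq. 2 comes from visiting every pair $(i, j)$ and then every bin between them. One possible alternative is the following prefix-sum rewrite. It is our own illustration, not the convolution-based speedup mentioned above. Fold the histogram onto $a \le b$ and define $U_{ab} = \bigl(P(y_{ab}) + P(y_{ba})\bigr) / \sum_{m=a}^{b} s_m$ for $a < b$, and $U_{aa} = P(y_{aa}) / s_a$. Then Eqs. 2 and 3 give $P(x_k) = s_k \sum_{a \le k \le b} U_{ab}$, a dominance sum that 2D prefix sums evaluate in $O(K^2)$ per iteration. The function name is hypothetical.

```python
import numpy as np


def marginalize_fast(h2d: np.ndarray, s: np.ndarray) -> np.ndarray:
    """Eqs. 2-3 in O(K^2) via prefix sums (an illustrative alternative, not the paper's)."""
    K = h2d.shape[0]
    # Fold onto a <= b: off-diagonal entries collect P(y_ab) + P(y_ba); the diagonal is kept.
    folded = np.triu(h2d + h2d.T) - np.diag(np.diag(h2d))
    # S[a, b] = s_a + ... + s_b (meaningful for a <= b), from prefix sums of s.
    cs = np.concatenate(([0.0], np.cumsum(s)))
    S = cs[None, 1:] - cs[:-1, None]
    U = np.zeros((K, K))
    np.divide(folded, S, out=U, where=np.triu(S > 0))
    # M[k, k] = sum of U[a, b] over a <= k and b >= k.
    M = np.cumsum(U, axis=0)                      # rows a <= k
    M = np.cumsum(M[:, ::-1], axis=1)[:, ::-1]    # columns b >= k
    p = s * np.diag(M)
    return p / p.sum()
```

On the toy histogram in which intensity 0 co-occurs with 1 and with 3 equally often, uniform factors give $[0.375, 0.375, 0.125, 0.125]$. Feeding that result back in as $s$ gives $[0.4375, 0.4375, 0.0625, 0.0625]$, matching a direct loop over Eqs. 2 and 3.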

4 Conclusion

In this study, a novel image enhancement approach called ROPE is proposed. In this approach, an image is decomposed into illumination and reflectance components. The local pixel value differences in reflectance are embedded in a 2D histogram that captures the probability of intensity co-occurrence. ROPE derives a 1D histogram by marginalizing over the 2D histogram, assuming that intensity occurrence and co-occurrence are dependent on each other. Finally, an intensity mapping function is derived by HE for image enhancement. Evaluated on more than 500 images, ROPE surpassed state-of-the-art image enhancement approaches in both qualitative and quantitative terms. It was able to provide sufficient brightness improvement for low-light images while adaptively avoiding excessive enhancement for normal-light images.

References

- [1] Tarik Arici, Salih Dikbas, and Yucel Altunbasak, “A histogram modification framework and its application for image contrast enhancement,” IEEE Trans. Image Processing, vol. 18, no. 9, pp. 1921–1935, 2009.

- [2] Turgay Çelik and Tardi Tjahjadi, “Contextual and variational contrast enhancement,” IEEE Trans. Image Processing, vol. 20, no. 12, pp. 3431–3441, 2011.

- [3] Turgay Çelik, “Two-dimensional histogram equalization and contrast enhancement,” Pattern Recognit., vol. 45, no. 10, pp. 3810–3824, 2012.

- [4] Gabriel Eilertsen, Rafal K. Mantiuk, and Jonas Unger, “Real-time noise-aware tone mapping,” ACM Trans. Graph., vol. 34, no. 6, pp. 198:1–198:15, 2015.

- [5] Haonan Su and Cheolkon Jung, “Low light image enhancement based on two-step noise suppression,” in ICASSP, 2017, pp. 1977–1981.

- [6] Xiaomeng Wu, Xinhao Liu, Kaoru Hiramatsu, and Kunio Kashino, “Contrast accumulated histogram equalization for image enhancement,” in ICIP, 2017, pp. 3190–3194.

- [7] Xiaomeng Wu, Takahito Kawanishi, and Kunio Kashino, “Reflectance-guided, contrast-accumulated histogram equalization,” in ICASSP, 2020, pp. 2498–2502.

- [8] Daniel J. Jobson, Zia-ur Rahman, and Glenn A. Woodell, “Properties and performance of a center/surround Retinex,” IEEE Trans. Image Processing, vol. 6, no. 3, pp. 451–462, 1997.

- [9] Daniel J. Jobson, Zia-ur Rahman, and Glenn A. Woodell, “A multiscale Retinex for bridging the gap between color images and the human observation of scenes,” IEEE Trans. Image Processing, vol. 6, no. 7, pp. 965–976, 1997.

- [10] Giovanni Ramponi, “A cubic unsharp masking technique for contrast enhancement,” Signal Processing, vol. 67, no. 2, pp. 211–222, 1998.

- [11] Frédo Durand and Julie Dorsey, “Fast bilateral filtering for the display of high-dynamic-range images,” ACM Trans. Graph., vol. 21, no. 3, pp. 257–266, 2002.

- [12] Zeev Farbman, Raanan Fattal, Dani Lischinski, and Richard Szeliski, “Edge-preserving decompositions for multi-scale tone and detail manipulation,” ACM Trans. Graph., vol. 27, no. 3, 2008.

- [13] Shuhang Wang, Jin Zheng, Hai-Miao Hu, and Bo Li, “Naturalness preserved enhancement algorithm for non-uniform illumination images,” IEEE Trans. Image Processing, vol. 22, no. 9, pp. 3538–3548, 2013.

- [14] Xueyang Fu, Delu Zeng, Yue Huang, Xiao-Ping Zhang, and Xinghao Ding, “A weighted variational model for simultaneous reflectance and illumination estimation,” in CVPR, 2016, pp. 2782–2790.

- [15] Xiaojie Guo, Yu Li, and Haibin Ling, “LIME: Low-light image enhancement via illumination map estimation,” IEEE Trans. Image Processing, vol. 26, no. 2, pp. 982–993, 2017.

- [16] Shuhang Wang and Gang Luo, “Naturalness preserved image enhancement using a priori multi-layer lightness statistics,” IEEE Trans. Image Processing, vol. 27, no. 2, pp. 938–948, 2018.

- [17] Yurui Ren, Zhenqiang Ying, Thomas H. Li, and Ge Li, “LECARM: Low-light image enhancement using the camera response model,” IEEE Trans. Circuits Syst. Video Techn., vol. 29, no. 4, pp. 968–981, 2019.

- [18] Alvy Ray Smith, “Color gamut transform pairs,” in SIGGRAPH, 1978, pp. 12–19.

- [19] Li Xu, Qiong Yan, Yang Xia, and Jiaya Jia, “Structure extraction from texture via relative total variation,” ACM Trans. Graph., vol. 31, no. 6, pp. 139:1–139:10, 2012.

- [20] Li Xu, Cewu Lu, Yi Xu, and Jiaya Jia, “Image smoothing via L0 gradient minimization,” ACM Trans. Graph., vol. 30, no. 6, pp. 174, 2011.

- [21] Kaiming He, Jian Sun, and Xiaoou Tang, “Guided image filtering,” IEEE Trans. Pattern Anal. Mach. Intell., vol. 35, no. 6, pp. 1397–1409, 2013.

- [22] Qi Zhang, Xiaoyong Shen, Li Xu, and Jiaya Jia, “Rolling guidance filter,” in ECCV, 2014, pp. 815–830.

- [23] Qi Zhang, Li Xu, and Jiaya Jia, “100+ times faster weighted median filter (WMF),” in CVPR, 2014, pp. 2830–2837.

- [24] Takashi Shibata, Masayuki Tanaka, and Masatoshi Okutomi, “Gradient-domain image reconstruction framework with intensity-range and base-structure constraints,” in CVPR, 2016, pp. 2745–2753.

- [25] Xiaoyong Shen, Chao Zhou, Li Xu, and Jiaya Jia, “Mutual-structure for joint filtering,” International Journal of Computer Vision, vol. 125, no. 1-3, pp. 19–33, 2017.

- [26] Yonghua Zhang, Jiawan Zhang, and Xiaojie Guo, “Kindling the darkness: A practical low-light image enhancer,” in ACM Multimedia, 2019, pp. 1632–1640.

- [27] sipi.usc.edu/database.

- [28] Pablo Arbelaez, Michael Maire, Charless C. Fowlkes, and Jitendra Malik, “Contour detection and hierarchical image segmentation,” IEEE Trans. Pattern Anal. Mach. Intell., vol. 33, no. 5, pp. 898–916, 2011.

- [29] sites.google.com/site/vonikakis/datasets.

- [30] www.kecl.ntt.co.jp/people/wu.xiaomeng/icassp2021/appendix.pdf.

- [31] Sos S. Agaian, Blair Silver, and Karen A. Panetta, “Transform coefficient histogram-based image enhancement algorithms using contrast entropy,” IEEE Trans. Image Processing, vol. 16, no. 3, pp. 741–758, 2007.

- [32] ZhiYu Chen, Besma R. Abidi, David L. Page, and Mongi A. Abidi, “Gray-level grouping (GLG): An automatic method for optimized image contrast enhancement - Part I: The basic method,” IEEE Trans. Image Processing, vol. 15, no. 8, pp. 2290–2302, 2006.

- [33] Shiqi Wang, Kede Ma, Hojatollah Yeganeh, Zhou Wang, and Weisi Lin, “A patch-structure representation method for quality assessment of contrast changed images,” IEEE Signal Process. Lett., vol. 22, no. 12, pp. 2387–2390, 2015.