Reducing Conservativeness Oriented Offline Reinforcement Learning

Abstract

In offline reinforcement learning, a policy learns to maximize cumulative rewards with a fixed collection of data. Towards conservative strategy, current methods choose to regularize the behavior policy or learn a lower bound of the value function. However, exorbitant conservation tends to impair the policy’s generalization ability and degrade its performance, especially for the mixed datasets. In this paper, we propose the method of reducing conservativeness oriented reinforcement learning. On the one hand, the policy is trained to pay more attention to the minority samples in the static dataset to address the data imbalance problem. On the other hand, we give a tighter lower bound of value function than previous methods to discover potential optimal actions. Consequently, our proposed method is able to tackle the skewed distribution of the provided dataset and derive a value function closer to the expected value function. Experimental results demonstrate that our proposed method outperforms the state-of-the-art methods in D4RL offline reinforcement learning evaluation tasks and our own designed mixed datasets.

1 Introduction

Reinforcement learning has gained unprecedented attention in recent years, benefiting from the powerful representation and expressive capability of deep neural networks Mnih et al. (2015); Schulman et al. (2017). However, contemporary deep reinforcement learning algorithms generally require millions of samples to achieve expert-level performance. In many real-world applications, such as healthcare and self-driving, trial-and-error collecting samples is costly, time-consuming, and even unsafe. It is becoming a major bottleneck in deep reinforcement learning for the employment in these scenarios Dulac-Arnold et al. (2019).

By utilizing the logged dataset, offline reinforcement learning is one promising solution for the above issues. The policy can be learned without further interaction with the environment. The dataset could be generated by other agents, other algorithms, and even other tasks. For instance, the Atari videos on Youtube can be employed to train the policy to imitate human gameplayAytar et al. (2018). Besides, without the chance to interact with the environment, the algorithm cannot perform hazardous options throughout the training period. However, current reinforcement learning methods easily fail to find the optimal policy due to limited and narrow datasets. Fujimoto et al. (2019) showed offline reinforcement learning suffers from the extrapolation error, which is caused by the optimistic estimation of value target of unseen state-action pairs. Numerous researchers attempt to reduce the extrapolation error by learning the policy in the constrained offline action space. Kumar et al. (2019); Wu et al. (2019) minimized the divergence between the trained policy and the offline policy. Kumar et al. (2020) proposed Conservative Q-Learning (CQL) to learn a lower bound of the expected value function and update the policy according to the conservative value function.

Although these methods use conservative strategies to behave close to the offline distribution, exorbitant conservativeness might impair the policy’s generalization ability, especially in high-dimensional settings. For CQL, learning a lower bound of the value function can suppress the effect of out-of-distribution samples. However, the discrepancy between the lower bound and the real value function might pose challenges to discover optimal actions. Especially for non-expert datasets, optimal policy might deviate far from the offline policy. The conservative lower bound concerns staying in the offline distribution and easily fails to derive the optimal policy.

Prior conservative methods always penalize samples with high uncertainty. Note that the out-of-distribution samples and those samples with small occurrences in the dataset both generally have high uncertainty. However, they are not supposed to be treated equally, especially for the skewed dataset where valuable samples are relatively sparse. Training the policy conservatively in terms of a imbalanced distribution will undermine the agent’s performance. The data imbalance always appears in supervised learning, where one or more categories occupy relatively small proportions of the training data against that of the others. In offline reinforcement learning, there is always a disproportionate percentage of state-action pairs for each component in policy mixtures. The previous methods generally neglect data imbalance problem in offline datasets. Although these methods achieve remarkable results in most D4RL evaluation benchmarks Fu et al. (2020), they fail to perform well as expected in the mixed datasets. Mixed datasets are introduced by various policies, which are prevalent in many applications. For these tasks, it is crucial to reduce the conservativeness by paying more attention to the sparse samples.

In this paper, we propose reducing conservativeness oriented offline reinforcement learning to tackle the issues. On the one hand, we expect to treat the majority and minority samples equally to handle the data imbalance problem. We first provide the samples in the dataset with pseudo class labels by clustering, and then employ resampling and reweighting to train the samples according to each transition’s class frequency. In general, the samples with rare occurrences are assumed to have high uncertainty. Enhancing the weight of these samples in training might introduce more uncertainty and enlarge the extrapolation error. Third, to reduce the extrapolation error resulting from uncertainty preference, we choose to penalize the out-of-distribution samples based on their uncertainty. In this manner, the minority samples are supposed to be attached with more importance while the trust-region of these samples will be relatively cramped. On the other hand, we introduce a tighter lower bound into the value function optimization process, which is able to reduce the conservativeness further. The tighter lower bound is expected to explore the potential optimal actions for the agents, and is crucial when presented with non-expert datasets. We evaluate our proposed method on D4RL Mujoco tasks and our own designed mixed dataset. The experimental results demonstrate that our proposed method outperforms and achieves the competitive performance compared with the state-of-the-art methods on the most tasks.

2 Related Work

The offline reinforcement learning methods Lange et al. (2012) focus on training a policy given a fixed dataset. They have been applied in various applications, such as healthcare Gottesman et al. (2018); Wang et al. (2018), recommendation systems Strehl et al. (2010); Swaminathan & Joachims (2015); Covington et al. (2016); Chen et al. (2019), dialogue systemsZhou et al. (2017), and autonomous driving Sallab et al. (2017). In these settings, standard off-policy methods Mnih et al. (2015); Lillicrap et al. (2015) generally fail and exhibit undesirable performance due to their sensitiveness to the dataset distribution Fujimoto et al. (2019). The problem has been studied in approximate dynamic programming Bertsekas & Tsitsiklis (1995); Farahmand et al. (2010); Munos (2003); Scherrer et al. (2015). They ascribed it to the errors arising from distribution shift and function approximation. Recently, Van Hasselt et al. (2018) stated that the temporal difference algorithms would diverge when represented with function approximators and trained under off-policy data.

Some methods have been proposed to deal with the issue by restraining the policy distribution conservatively. Fujimoto et al. (2019) proposed Batch-Constrained Reinforcement Learning (BCQ) to select actions close to the offline actions when formulating Bellman target. Kumar et al. (2019) presented a bootstrapping error accumulation reduction (BEAR). They minimized Maximum Mean Discrepancy (MMD) between the trained and offline policies to alleviate the Bellman update’s error propagation. Jaques et al. (2019) utilized KL-divergence with a regularization weight to penalize the policy. Wu et al. (2019) introduced value penalty and policy penalty to regularize the learned policy towards the behavior policy. These methods tried to penalize the out-of-distribution actions and force the policy to behave in the dataset’s action space. However, these methods ignore the data imbalance problem in the logged dataset. In an optimistic view, we focus on treating the minority classes and the majority classes equally. Our propsoed method is closely related to CQLKumar et al. (2020), which learned a lower bound for the policy’s value function by penalizing the out-of-distribution state-action pairs and maximizing the in-distribution samples’ Q-value. Differently, we encourage the violation from the offline policy by estimating a tighter lower bound for the value function, which is helpful to non-expert tasks. Similarly, Agarwal et al. (2020) used an optimistic strategy and introduced a value function ensemble to stabilize the Q-function updating. Although our method is proposed in terms of reducing the conservativeness, it is essentially a conservative method.

Previous methods tend to penalize samples with high uncertainty. Yu et al. (2020) trained an auxiliary dynamics model to produce imaginary samples to augment the dataset. They reduced a reward for the generated samples according to their uncertainty. Kidambi et al. (2020) learned a pessimistic Markov Decision Process (MDP) using the offline dataset and as well as a near-optimal policy based on this pessimistic MDP. By employing an unknown state-action detector, they used a model-based method to train the policy. However, our proposed method encourages the policy to utilize samples with high uncertainty instead. Furthermore, we impose strict constraints on these sparse samples to reduce extrapolation error.

Current methods of tackling data imbalance can be divided into two categories, i.e., resampling and cost-sensitive learning. Resampling means over-sampling Shen et al. (2016) the minority classes and under-samplingHe & Garcia (2009) the frequent classes. Over-sampling could result in the over-fitting of minority classes, while under-sampling might ignore valuable information in the majority classes. Cost-sensitive learning Wang et al. (2017) assigns different weights for different samples. Most methods reweight samples according to the inverse of the class frequency. Cui et al. (2019) proposed to reweight using the effective number of the classes. In reinforcement learning, Monte Carlo dropout Srivastava et al. (2014) and random network distillation Burda et al. (2018) are common methods to estimate the frequency of each sample. Since offline datasets do not offer class labels for samples, we introduce pseudo labels to the samples using unsupervised learning methods. In this manner, our proposed method is able to perform resampling and reweighting efficiently for different clusters.

3 Background

In general, a reinforcement learning problem could be formulated as a Markov decision process , with state space , action space , transition probability matrix , reward function , and discount factor Sutton & Barto (2018). Each term of denotes the probability of arriving at the next state when selecting at state . At each time step, an agent is located at and chooses action . The agent would arrive at a next state and receive a reward from the environment. The objective of reinforcement learning is to train a policy to maximize accumulative rewards in one episode as:

| (1) |

When the agent performs an action at state according to a policy , the expectation of the cumulative rewards is defined as Q-value function: . And the value function is defined as . The Q-learning algorithms update the Q-value function according to Bellman operator as:

| (2) |

Compared with Q-learning methods, actor-critic methods employ a policy to sample actions. The update rule of Q-value function evaluation is , where is the expectation of Q-value with respective to transition matrix and policy . Since it is impossible to enumerate all states and actions, the Q-value function is generally updated using an empirical Bellman operator . Recently, Haarnoja et al. (2018) employed deep neural networks to approximate the actual Q-value function and the policy. On the basis of the neural network, the Q-value loss function is then defined as:

| (3) |

where is a replay buffer containing the collected samples. In Eq. 3, is the parameter of the Q-value network and is the parameter of the policy. In this manner, the policy can be updated as:

| (4) |

Offline reinforcement learning suffers from the out-of-distribution challenge. The standard reinforcement learning algorithms might derive a policy that generates out-of-distribution actions when applied in this setting. They have no chance to correct the real Q-value of these actions. Recent works focus on punishing the out-of-distribution actions. Kumar et al. (2020) proposed the CQL method by maximizing the value of in-distribution state-action pairs and minimizing the value of out-of-distribution state-action pairs. The Q-network is updated as:

| (5) | ||||

In Eq. 5, is a hyperparameter for controlling the degree of conservativeness with respect to the Bellman update, denotes the empirical Q-value function, and is used to denote the offline policy distribution.

4 Data Imbalance

In real-world applications, a large amount of data is generally produced by the skewed distributions. The samples of some classes outnumber those of other classes. In healthcare applications, severe events like hematorrhea are rare but important. In advertisement systems, the number of valuable purchases is much smaller than that of clicks. In this section, we present the discussion of the data imbalance in offline reinforcement learning.

The data imbalance phenomenon exists in the dataset generated by a single policy. Without loss of generality, we assume the transition model of the environment is stochastic in the single policy scenario. A suboptimal stochastic policy might produce a small portion of expert-level trajectories and a large portion of trajectories with poor performances. During the training process, those suboptimal samples dominate over the sparse samples of high quality. In standard reinforcement learning, the temporal difference method samples the experiences uniformly. The expert-level trajectories would be sampled inefficiently, which will impair the reward propagation and undermine the training process. Moreover, the weights of sparse samples might lead to large variances for off-policy evaluation.

Mixed policies are characterized by data imbalance and are ubiquitous in real-world applications. In autonomous driving, samples are produced by different drivers with different driving inclinations. Similarly, in reinforcement learning settings, the agents interact with the environment using the new updated policy at each time step. The collected samples produced by different policies lead to a mixed dataset. The induced skewed dataset consists of samples produced by different policies with different proportions. The large proportion of the samples dominates the training process of the policy compared with that of the small proportion of the samples. In the worst cases, the small proportion of samples from high-quality policies is hard to be utilized.

We examine the performance of the offline algorithm using D4RL benchmarks on the skewed datasets. We study the Mujoco tasks Walker2d and Halfcheetah by mixing the “random” and “expert” dataset with different ratios for evaluation. We create five datasets and all the datasets consist of samples for each task. And the proportions of random samples are set as , , , , and respectively. Accordingly, the proportions of expert-level samples decline. We train a conservative Q-learning (CQL) agent Kumar et al. (2020) for updates and run four seeds for each experiment. The results for these tasks are shown in Fig. 1. As demonstrated in Fig. 1, the performance of the trained policy decreases as the random sample proportion increases. As the above discussion, the current offline reinforcement learning methods struggle to handle the data imbalance problem.

5 Lower Bound of the Value Function

The CQL proposed to learn a lower bound of the expected value function by minimizing the Q-value of out-of-distribution state-action pairs and maximizing the Q-value of in-distribution state-action pairs. In the setting without approximation error, the gap between the value function derived by CQL and expected value function is the Pearson divergence between and . The gap decreases to when . We could obtain a baseline which is the minimum of Pearson divergence for and a threshold as:

| (6) |

where is the tolerance threshold of the violation of the trained policy against the offline policy . When decreases, the algorithm tends to behave more conservatively. When increases, the policy is allowed to deviate further from the dataset. In this manner, the policy is able to preserve the opportunity to balance stability and generalization ability. The objective of our proposed method is then defined as:

| (7) | ||||

We assume that is a constant dependent on the reward function and transition matrix, and denotes a vector of counts for all states. We show that the empirical value function is the lower-bound of the actual value function.

Theorem 1

For any policy whose Q-value function is updated as Eq. 7, the empirical value function is a lower bound of the expected value function as:

Thus, if , we have with high probability. When , then any guarantees .

6 Method

In this section, we introduce our proposed reducing conservativeness oriented offline reinforcement learning. We first perform clustering on the dataset and providing the samples with pseudo labels. We then employ resampling and reweighting to associate the minor classes with greater importance. In order to reduce extrapolation error resulting from high uncertainty, we impose regularization constraints on the minority classes. Finally, we introduce our proposed tighter lower bound to the value function update.

6.1 Clustering

In supervised learning, resampling and reweighting are two typical data preprocessing methods for imbalanced data. However, these two methods can not be directly applied to offline reinforcement learning. For the data imbalance of a single policy, the majority and minority of classes are latent and should be justified explicitly. For the data imbalance of mixed policies, the number of policies and their corresponding samples are generally inaccessible. Even if the proportions of the samples are provided, some policies are more similar in state-action space while others are relatively distant but might contain more information. As a result, treating these classes equally tends to bring additional data imbalance issues. In our proposed method, we create pseudo labels by classifying these samples with an unsupervised learning method.

Given a dataset with samples, we perform clustering to partition samples into clusters. For the Mujoco control suite, the dimension of the feature is no more than . K-means clustering shows satisfactory performance in this setting. The advanced clustering methods such as Jiang et al. (2016) can also be employed. However, during the experiment, these methods do not gain obvious improvement compared with that of the k-means clustering in this scenario. We concatenate to form a new vector and normalize the dataset to a Gaussian distribution with zero mean and unit variance. Then the rescaled dataset is clustered using Euclidean distance metric. K-means is sensitive to initialization and often converges at a local optimum. We choose the first cluster center uniformly at random from the dataset, and choose the subsequent cluster centers from remaining data samples with probability proportional to their distances from previous closest existing cluster centers.

6.2 Resampling

After clustering, we could associate each sample with a pseudo label . We use to denote the number of samples of cluster . And during the training process, we classify each transition according to its cluster, and resample samples as:

| (8) |

In Eq. 8, is a parameter to normalize the resampling distribution, and is a hyperparameter to control the degree of resampling. When , the resampling is reduced to the conventional sampling. As increases, the resampling could select sparse samples more frequently. It should be noted that resampling might result in the overfitting of minority classes. It also might discard valuable examples that are important for reward propagation in reinforcement learning. We therefore seek a complementary method to handle its shortcomings.

6.3 Reweighting

Compared with resampling, reweighting is an alternative method that samples uniformly from the static dataset against data imbalance issue. Given transition , we reweight the loss function in accordance with the inverse of its class frequency. With the slight abuse of notation, the reweighted loss function of Q-value is defined as:

| (9) |

Assigning sample weights inversely proportionally to the class frequency leads to poor performance in large-scale datasets. Since samples share the high-level mutual information, the information gain would decrease as more samples are collected. Similar to Cui et al. (2019), we take advantage of the effective number of each class. The weight for transition is defined as:

| (10) |

where is a hyperparameter controlling the degree of reweighting. When , each sample is weighted equally. When , each sample is reweighted in terms of its class frequency. To take advantage of resampling and reweighting, we first resample transitions from the dataset and then reweight these samples during training.

6.4 Uncertainty Correction

The proposed resampling and reweighting might assign more importance to sparse samples with higher uncertainty compared with that of the majority classes. When combined with function approximation, the state-action pairs near the minority samples might be assigned with excessive values, which will further amplify the extrapolation error. To overcome this issue, we restrain state-action pairs, which are out-of-distribution near the minority classes, more strictly. Our method of dealing with data imbalance can be generally integrated into prior methods. For policy constrained methodsKumar et al. (2019), the threshold of the Lagrange multiplier could be reweighted by the inverse of the class frequency. In this manner, the agent is forced to keep closer to the actions in the batch when it is located in uncertain regions. For conservative Q-learning, the minimization and maximization terms could be weighted with the inverse of the class frequency as well. The update rule of uncertainty correction is defined as:

| (11) | ||||

6.5 Implementations

Our proposed method for data imbalance issues could be employed as a general framework for any offline reinforcement method. In this paper, we implement our proposed method on the basis of CQL. We integrate the tighter lower bound into the proposed framework. In order to estimate the Pearson diverge between and , the lower bound can be obtained using function approximation as:

| (12) |

where . In Eq. 12, denotes Pearson divergence and is the parameter of deep neural network. During the experiment, we observed that setting a hyperparameter is a satisfactory alternative to stabilize the training process. Therefore, the objective of our proposed method is finally defined as:

| (13) | ||||

The whole process of our proposed method is summarized in Algorithm 1.

Input: offline dataset , update iterations for policy and Q-value networks, Pearson divergence hyperparameter , number of clusters

Parameter: policy network , Q-networks .

Output: learnt policy network

7 Experiments

7.1 Clustering Performance

The performances of resampling and reweighting are directly related to the k-means clustering. We first study the effectiveness of the clustering method. We choose Halfcheetah and Walker2d tasks for evaluation. Different policies are utilized to generate corresponding samples and form a mixed dataset. The dataset consists of “random” samples generated by rollouting a random policy, and “expert” samples generated by a well trained soft actor-critic policy.

We perform k-means clustering with the cluster number as . Since the k-means clustering is an unsupervised learning method, we associate the cluster with the majority of all samples as cluster 1 and the rest of the samples as cluster 2. As shown in Table 1, cluster 1 contains most of the “random” samples while cluster 2 contains most of the “expert” samples and a small number of “random” samples.

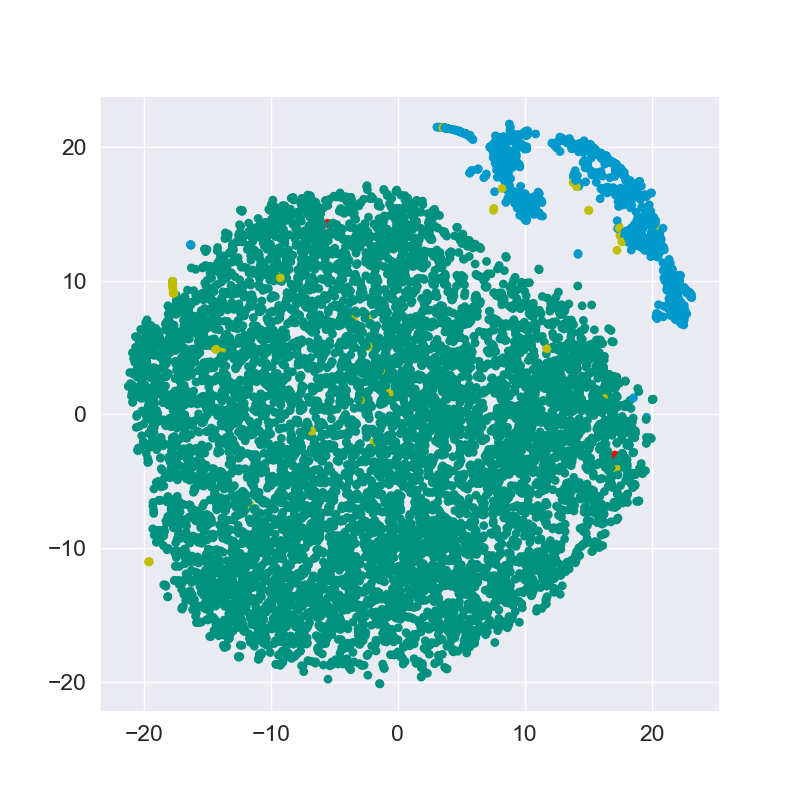

We further use t-Distributed Stochastic Neighbor Embedding (t-SNE) to visualize the data distribution. The visualization for Walker2d and Halfcheetah tasks are shown in Fig. 2. In Fig. 2, the green and red points denote the “random” and “expert” samples in cluster 1 respectively, while the yellow and blue points denote “random” and “expert” samples in cluster 2 respectively. Note that red points are so rare and hard to distinguish. The experimental results show that “random” samples and “expert” samples are able to be separated by k-means clustering.

| Task | Data | cluster 1 | cluster 2 |

| Halfcheetah | random | 899100 | 0 |

| expert | 4553 | 95346 | |

| Walker2d | random | 899889 | 110 |

| expert | 4298 | 95634 |

7.2 Offline Mujoco Control Datasets

To evaluate the effectiveness of our proposed method, we perform experiments using the Mujoco control suits in D4RL benchmarks Fu et al. (2020). D4RL benchmarks present static datasets for Hopper, Halfcheetah, and Walker2d Mujoco tasks (as shown in Fig. 3). For each type of control environment, D4RL offers kinds of datasets, “random”, “medium”, “medium-replay”, “medium-expert”, and “expert” dataset. The “expert” dataset consists of samples generated by a well trained soft actor-critic policy. The “medium” dataset is generated by a policy which is trained to achieve approximate performance as that of the “expert” dataset. The “random” dataset is generated by rollouting a randomly initialized policy for steps. The “medium-replay” dataset contains all samples stored in the replay buffer during training policy for the “medium” dataset. The “medium-expert” dataset contains the same number of samples selected from the “medium” and the “expert” dataset.

The experimental results of the state-of-the-art methods and our proposed methods are summarized in Table 2. The results for BEARKumar et al. (2019), BRACWu et al. (2019), SACHaarnoja et al. (2018), and BC are presented by Fu et al. (2020), and the result of CQL is presented by Kumar et al. (2020). As shown in Table 2, our proposed method outperforms or achieves competitive performance compared the state-of-the-art methods on most tasks. Especially, our proposed method exceeds the best previous methods on the mixed “medium’ and “medium-replay” datasets. Our proposed method achieves more than scores on the “Hopper-medium” task. As shown in Fig. 4, our method learns a higher Q-value than CQL. The experiments demonstrate that the proposed reducing conservativeness oriented offline reinforcement learning is helpful to non-expert tasks and beneficial for tackling data imbalance issues.

| Task Name | SAC | BC | BEAR | BRAC-p | BRAC-v | CQL | Our method |

| Halfcheetah-random | 30.5 | 2.1 | 25.5 | 23.5 | 28.1 | 35.4 | 35.3 |

| Walker2d-random | 4.1 | 1.6 | 6.7 | 0.8 | 0.5 | 7.0 | 7.2 |

| Hopper-random | 11.3 | 9.8 | 9.5 | 11.1 | 12.0 | 10.8 | 10.7 |

| Halfcheetah-medium | -4.3 | 36.1 | 38.6 | 44.0 | 45.4 | 44.4 | 51.4 |

| Walker2d-medium | 0.9 | 6.6 | 33.2 | 72.7 | 81.3 | 79.2 | 78.8 |

| Hopper-medium | 0.8 | 29.0 | 47.6 | 31.2 | 32.3 | 58.0 | 96.8 |

| Halfcheetah-medium-replay | -2.4 | 38.4 | 36.2 | 45.6 | 46.9 | 46.2 | 51.5 |

| Walker2d-medium-replay | 1.9 | 11.3 | 10.8 | -0.3 | 0.9 | 26.7 | 46.2 |

| Hopper-medium-replay | 3.5 | 11.8 | 25.3 | 0.7 | 0.8 | 48.6 | 65.2 |

| Halfcheetah-medium-expert | 1.8 | 35.8 | 51.7 | 43.8 | 45.3 | 62.4 | 60.3 |

| Walker2d-medium-expert | 1.9 | 11.3 | 10.8 | -0.3 | 0.9 | 98.7 | 105.3 |

| Hopper-medium-expert | 1.6 | 111.9 | 4.0 | 1.1 | 0.8 | 111.0 | 110.9 |

| Halfcheetah-expert | -1.9 | 107.0 | 108.2 | 3.8 | -1.1 | 104.8 | 102.6 |

| Walker2d-expert | -0.3 | 125.7 | 106.1 | -0.2 | -0.0 | 153.9 | 108.3 |

| Hopper-expert | 0.7 | 109.0 | 110.3 | 6.6 | 3.7 | 109.9 | 111.6 |

7.3 Mixed Datasets

We further evaluate the ability of our proposed method for tackling data imbalance issues on our own designed mixed dataset. We create datasets for each of the Halfcheetah, Walker2d, and Hopper tasks. Each dataset consists of samples with mixed “random” and “expert” samples. For each task, the proportions of random samples in the datasets are , , , , and respectively. We compare our proposed method with CQL and show the results in Fig. 5. As expected, using more “random ” samples will affects the performance of the policy.

As shown in Fig. 5, our proposed method considerably outperforms CQL on most of the tasks. For Halfcheetah and Walker2d tasks, the performance of our proposed method decreases much more slowly. For Hopper tasks, our proposed method is competitive to CQL in the tasks and outperforms CQL with a small margin when the proportion of the “random” samples is set as . The improvements obtained compared with that of CQL demonstrate that our proposed method is less sensitive to the reduced “expert” samples, and it can utilize sparse samples more effectively.

7.4 Ablation Study

In our proposed method, the number of clusters and the choice of are important hyperparameters. In this section, we present the experiments for the ablation study.

7.4.1 Number of clusters

We evaluate the sensitivity of our proposed method using different numbers of clusters. We perform the experiments on “Walker2d-medium-replay” and “Hopper-medium” tasks by using cluster number as , , , , and . The experimental results are depicted in Fig. 6.

As shown in Fig. 6, the performance of our proposed method first increases and then decreases as increases. Due to the high dimensional state-action space, the samples are difficult to be separated. One promising solution is to utilize more concrete representation to formulate state-action samples.

7.4.2 Choice of

In our proposed method, is a critical hyperparameter for controlling the degree of conservativeness. We evaluate the performance by setting as , , , , and , and perform the experiments on “Walker2d-medium-replay” and “Hopper-medium” tasks. As shown in Fig. 7, when is set with a large value, the Q-value function tends to increase to infinity and the accumulative reward tends to be zero. When is set with a small value, the proposed method becomes very conservative and yields poor performance.

8 Conclusions

In this paper, we introduce the method of reducing conservativeness oriented offline reinforcement learning. After partitioning the samples into different clusters, we reweight and resample them according to their class frequency. To reduce extrapolation error, we put more constraints on those minority classes. Besides, we introduce a tighter lower bound of the value function. Our experimental results show that our method outperforms state-of-art approaches on D4RL evaluation benchmarks and our designed mixed datasets.

References

- Agarwal et al. (2020) Agarwal, R., Schuurmans, D., and Norouzi, M. An optimistic perspective on offline reinforcement learning. International Conference on Machine Learning, 2020.

- Aytar et al. (2018) Aytar, Y., Pfaff, T., Budden, D., Paine, T. L., Wang, Z., and de Freitas, N. Playing hard exploration games by watching youtube. arXiv preprint arXiv:1805.11592, 2018.

- Bertsekas & Tsitsiklis (1995) Bertsekas, D. P. and Tsitsiklis, J. N. Neuro-dynamic programming: an overview. In Proceedings of 1995 34th IEEE conference on decision and control, volume 1, pp. 560–564. IEEE, 1995.

- Burda et al. (2018) Burda, Y., Edwards, H., Storkey, A., and Klimov, O. Exploration by random network distillation. arXiv preprint arXiv:1810.12894, 2018.

- Chen et al. (2019) Chen, M., Beutel, A., Covington, P., Jain, S., Belletti, F., and Chi, E. H. Top-k off-policy correction for a reinforce recommender system. In Proceedings of the Twelfth ACM International Conference on Web Search and Data Mining, pp. 456–464, 2019.

- Covington et al. (2016) Covington, P., Adams, J., and Sargin, E. Deep neural networks for youtube recommendations. In Proceedings of the 10th ACM conference on recommender systems, pp. 191–198, 2016.

- Cui et al. (2019) Cui, Y., Jia, M., Lin, T.-Y., Song, Y., and Belongie, S. Class-balanced loss based on effective number of samples. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 9268–9277, 2019.

- Dulac-Arnold et al. (2019) Dulac-Arnold, G., Mankowitz, D., and Hester, T. Challenges of real-world reinforcement learning. arXiv preprint arXiv:1904.12901, 2019.

- Farahmand et al. (2010) Farahmand, A. M., Munos, R., and Szepesvári, C. Error propagation for approximate policy and value iteration. In Advances in Neural Information Processing Systems, 2010.

- Fu et al. (2020) Fu, J., Kumar, A., Nachum, O., Tucker, G., and Levine, S. D4rl: Datasets for deep data-driven reinforcement learning. arXiv preprint arXiv:2004.07219, 2020.

- Fujimoto et al. (2019) Fujimoto, S., Meger, D., and Precup, D. Off-policy deep reinforcement learning without exploration. In International Conference on Machine Learning, pp. 2052–2062. PMLR, 2019.

- Gottesman et al. (2018) Gottesman, O., Johansson, F., Meier, J., Dent, J., Lee, D., Srinivasan, S., Zhang, L., Ding, Y., Wihl, D., Peng, X., et al. Evaluating reinforcement learning algorithms in observational health settings. arXiv preprint arXiv:1805.12298, 2018.

- Haarnoja et al. (2018) Haarnoja, T., Zhou, A., Hartikainen, K., Tucker, G., Ha, S., Tan, J., Kumar, V., Zhu, H., Gupta, A., Abbeel, P., et al. Soft actor-critic algorithms and applications. arXiv preprint arXiv:1812.05905, 2018.

- He & Garcia (2009) He, H. and Garcia, E. A. Learning from imbalanced data. IEEE Transactions on knowledge and data engineering, 21(9):1263–1284, 2009.

- Jaques et al. (2019) Jaques, N., Ghandeharioun, A., Shen, J. H., Ferguson, C., Lapedriza, A., Jones, N., Gu, S., and Picard, R. Way off-policy batch deep reinforcement learning of implicit human preferences in dialog. arXiv preprint arXiv:1907.00456, 2019.

- Jiang et al. (2016) Jiang, Z., Zheng, Y., Tan, H., Tang, B., and Zhou, H. Variational deep embedding: An unsupervised and generative approach to clustering. arXiv preprint arXiv:1611.05148, 2016.

- Kidambi et al. (2020) Kidambi, R., Rajeswaran, A., Netrapalli, P., and Joachims, T. Morel: Model-based offline reinforcement learning. arXiv preprint arXiv:2005.05951, 2020.

- Kumar et al. (2019) Kumar, A., Fu, J., Tucker, G., and Levine, S. Stabilizing off-policy q-learning via bootstrapping error reduction. arXiv preprint arXiv:1906.00949, 2019.

- Kumar et al. (2020) Kumar, A., Zhou, A., Tucker, G., and Levine, S. Conservative q-learning for offline reinforcement learning. arXiv preprint arXiv:2006.04779, 2020.

- Lange et al. (2012) Lange, S., Gabel, T., and Riedmiller, M. Batch reinforcement learning. In Reinforcement learning, pp. 45–73. Springer, 2012.

- Lillicrap et al. (2015) Lillicrap, T. P., Hunt, J. J., Pritzel, A., Heess, N., Erez, T., Tassa, Y., Silver, D., and Wierstra, D. Continuous control with deep reinforcement learning. arXiv preprint arXiv:1509.02971, 2015.

- Mnih et al. (2015) Mnih, V., Kavukcuoglu, K., Silver, D., Rusu, A. A., Veness, J., Bellemare, M. G., Graves, A., Riedmiller, M., Fidjeland, A. K., Ostrovski, G., et al. Human-level control through deep reinforcement learning. nature, 518(7540):529–533, 2015.

- Munos (2003) Munos, R. Error bounds for approximate policy iteration. In ICML, volume 3, pp. 560–567, 2003.

- Sallab et al. (2017) Sallab, A. E., Abdou, M., Perot, E., and Yogamani, S. Deep reinforcement learning framework for autonomous driving. Electronic Imaging, 2017(19):70–76, 2017.

- Scherrer et al. (2015) Scherrer, B., Ghavamzadeh, M., Gabillon, V., Lesner, B., and Geist, M. Approximate modified policy iteration and its application to the game of tetris. J. Mach. Learn. Res., 16:1629–1676, 2015.

- Schulman et al. (2017) Schulman, J., Wolski, F., Dhariwal, P., Radford, A., and Klimov, O. Proximal policy optimization algorithms. arXiv preprint arXiv:1707.06347, 2017.

- Shen et al. (2016) Shen, L., Lin, Z., and Huang, Q. Relay backpropagation for effective learning of deep convolutional neural networks. In European conference on computer vision, pp. 467–482. Springer, 2016.

- Srivastava et al. (2014) Srivastava, N., Hinton, G., Krizhevsky, A., Sutskever, I., and Salakhutdinov, R. Dropout: a simple way to prevent neural networks from overfitting. The journal of machine learning research, 15(1):1929–1958, 2014.

- Strehl et al. (2010) Strehl, A., Langford, J., Kakade, S., and Li, L. Learning from logged implicit exploration data. arXiv preprint arXiv:1003.0120, 2010.

- Sutton & Barto (2018) Sutton, R. S. and Barto, A. G. Reinforcement learning: An introduction. MIT press, 2018.

- Swaminathan & Joachims (2015) Swaminathan, A. and Joachims, T. Batch learning from logged bandit feedback through counterfactual risk minimization. The Journal of Machine Learning Research, 16(1):1731–1755, 2015.

- Van Hasselt et al. (2018) Van Hasselt, H., Doron, Y., Strub, F., Hessel, M., Sonnerat, N., and Modayil, J. Deep reinforcement learning and the deadly triad. arXiv preprint arXiv:1812.02648, 2018.

- Wang et al. (2018) Wang, L., Zhang, W., He, X., and Zha, H. Supervised reinforcement learning with recurrent neural network for dynamic treatment recommendation. In Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, pp. 2447–2456, 2018.

- Wang et al. (2017) Wang, Y.-X., Ramanan, D., and Hebert, M. Learning to model the tail. In Proceedings of the 31st International Conference on Neural Information Processing Systems, pp. 7032–7042, 2017.

- Wu et al. (2019) Wu, Y., Tucker, G., and Nachum, O. Behavior regularized offline reinforcement learning. arXiv preprint arXiv:1911.11361, 2019.

- Yu et al. (2020) Yu, T., Thomas, G., Yu, L., Ermon, S., Zou, J., Levine, S., Finn, C., and Ma, T. Mopo: Model-based offline policy optimization. arXiv preprint arXiv:2005.13239, 2020.

- Zhou et al. (2017) Zhou, L., Small, K., Rokhlenko, O., and Elkan, C. End-to-end offline goal-oriented dialog policy learning via policy gradient. arXiv preprint arXiv:1712.02838, 2017.