Recurrent Attention Model with Log-Polar Mapping is Robust against Adversarial Attacks

Abstract

Convolutional neural networks are vulnerable to small adversarial attacks, while the human visual system is not. Inspired by neural networks in the eye and the brain, we developed a novel artificial neural network model that recurrently collects data with a log-polar field of view that is controlled by attention. We demonstrate the effectiveness of this design as a defense against SPSA and PGD adversarial attacks. It also has beneficial properties observed in the animal visual system, such as reflex-like pathways for low-latency inference, fixed amount of computation independent of image size, and rotation and scale invariance. The code for experiments is available at https://gitlab.com/exwzd-public/kiritani_ono_2020.

Keywords Adversarial Attack Attention Convolutional Neural Network Neuroscience

1 Introduction

Convolutional neural networks (CNNs) were designed after the hierarchical animal visual system [13] discovered by Hubel and Wiesel [18]. However, CNNs represent visual inputs very differently from the animal brain. In standard, feedforward CNNs trained on ImageNet [4], a unit in a convolution layer has a small effective receptive field [29]. CNNs, as a result, have difficulty in capturing shapes of large objects, and are biased toward local texture [16, 2]. The existence of adversarial examples [41] further highlights CNNs’ weakness and difference from the human visual system. Small perturbation of images can fool the human brain when images are presented to subjects briefly for 63-71 ms; however, visual recognition of humans is not disrupted if subjects can see perturbed images long enough [11]. In this study, we aim to emulate the robustness of natural visual systems.

The animal visual system is a recurrent neural network (RNN) that actively collects data over time [17]. On the human retina, the acuity is highest in the fovea with densely packed photoreceptors, and the resolution decreases as a function of eccentricity [3]. Rapid eye movements, and the non-uniform resolution in the retina allow animals to efficiently scrutinize informative regions of the visual scene while processing other areas at lower resolution [30, 32]. In the mammalian neocortex, the signal from the eye is routed to two parallel data streams [36, 15, 45]. In the ventral, or "what" pathway, the identity of visual objects is extracted. The dorsal, or "where" pathway on the other hand, is involved with spatial awareness and localization of objects. We take inspiration from this sampling strategy of the brain and propose a novel computer vision model, Recurrent Attention Model with Log Polar Mapping (RAM-LPM). RAM-LPM has a log-polar field of view (FOV) that is mapped to a regular grid-like tensor. The tensor is then processed in "what" and "where" RNNs. The "what" pathway is trained to learn the features of patches for object classification, whereas the movement of FOV is controlled by the "where" pathway.

We show that RAM-LPM is resistant to simultaneous perturbation stochastic approximation (SPSA) [44] and projected gradient descent (PGD) [33] attacks. We also discuss other desirable properties of RAM-LPM. Similar to the animal eye, the log-polar FOV is highly invariant to scaling and rotation. RAM-LPM also responds to inputs quickly with a reflex circuit. Another advantage is the fixed amount of computation that is independent of image size; in standard CNNs, the amount of computation linearly scales to pixel number. RAM-LPM thus overcomes some of the shortcomings of CNNs, demonstrating the effectiveness of bridging neuroscience and deep learning research.

2 Background and Related Works

2.1 Log-Polar Vision

The eye does not process the entire visual field evenly. Photoreceptive cells in the human eye are abundant around the central region called the fovea, whereas the density decreases in the periphery [3, 12, 20]. The development of FOV in a log-polar coordinate system was motivated by the distribution of photoreceptors in the eye [35, 34, 42]. The advantages of this sampling method, compared to sampling with a conventional Cartesian lattice, include efficient compression of information, as well as rotation and scale equivalence [47, 8]. The brain’s ability to perceive objects is also largely unaffected by rotation and scaling [14, 22]. Anatomical and physiological studies demonstrated that the projection from the retina to the primary visual cortex can be approximated by log-polar mapping [6, 38, 43], suggesting that the log-polar FOV captures the computation in the mammalian visual system better than the Cartesian FOV [49].

2.2 Attention

The movement of the eye, together with the non-uniform spatial resolution in the eye, further enables efficient sampling in the visual field [27, 9, 40]. In computer vision, hard attention, a mechanism that selects and processes only small portions of an image was inspired by the animal visual system. Hard attention is used to boost the performance as well as to reduce the amount of computation [31, 10].

2.3 Reflex Circuit

Animals respond to sensory inputs over variable time scale. The fastest output is mediated by reflex in the peripheral nervous system [39]. Slower but more thoughtful responses involve recurrent circuits in the central nervous system [26, 21]. Recent neural networks employ short-cut connections; however, they are intended for effective backpropagation of derivatives of very deep neural networks [19], and not used for responses with short latency.

2.4 Adversarial Attacks

An adversarial attack is a technique to find a perturbation that changes the prediction of a machine learning model [41]. The perturbation can be very small and imperceptible to human eyes. In this study, we only consider perturbation of small norm added to original images. The human visual system is perturbed much less than CNNs if subjects are allowed to see images long enough [11]. Luo et al. [28] suggested foveation as a defense to adversarial attacks, but Athaye et al [1] generated examples that remain adversarial after scaling, translation and other changes in viewpoint. Furthermore, hard attention with square crops was recently shown to be an inadequate defence [10]. Rotation-equivalent networks were shown to be robust to spatially transformed adversarial attacks [48]; however, they were still vulnerable to adversarial attacks [5].

3 Model Formulation

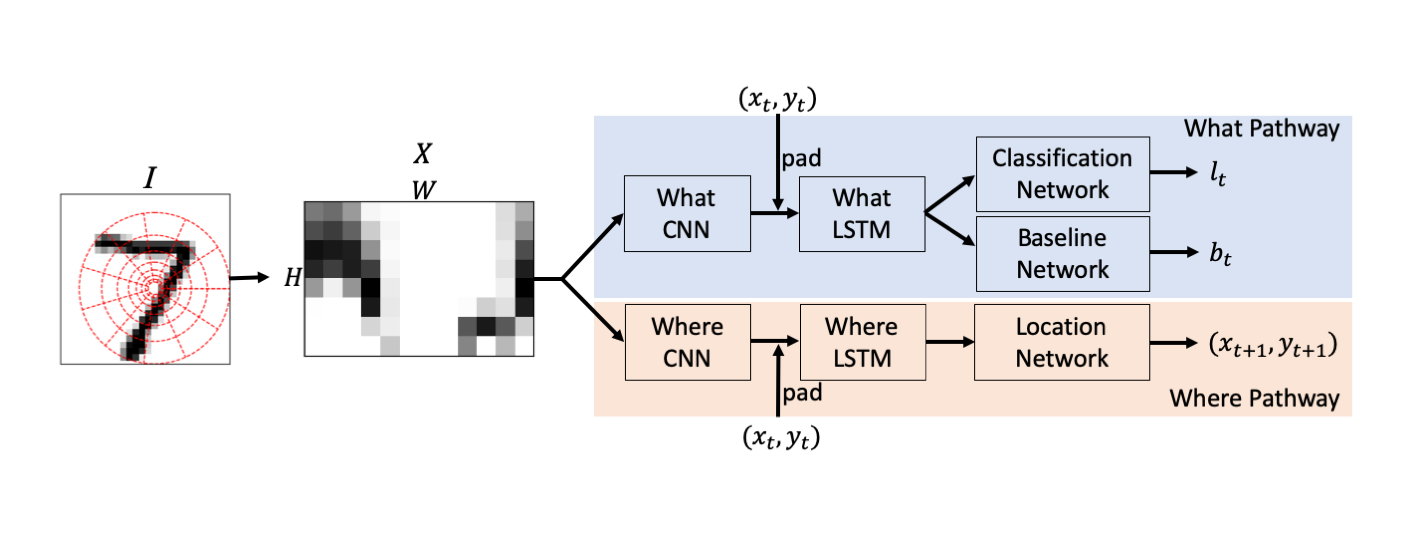

Like the recurrent attention model by [31], RAM-LPM has access to a fraction of an image at each time step. It learns both the identity of images, and the policy on where to attend in an image based on previous patches, and actions. The model consists of subnetworks that are summarized in figure 1. At each time step , a small patch around is received by the model, and this patch is routed to "what" and "where" pathways. The logits , location of the next foveation , and the prediction of reward (discussed later) are computed. The implementations of the sub-networks are discussed below.

3.1 Sampling Method

A patch of an image is extracted in a similar way described in [8]. We consider an image in a 2D Cartesian coordinate system. Let be the interpolated pixel intensity at in for channel , and be a point in that is learned by the location network. A point in is mapped to a point in a log-polar coordinate system such that,

| (1) |

and

| (2) |

Let be the pixel intensity at for channel in log-polar coordinates:

| (3) |

A region bounded by two concentric circles in is the field of view. In log-polar coordinates, the FOV is defined by

| (4) |

and

| (5) |

The FOV is evenly divided in log-polar coordinates into by sections. A tensor with shape whose elements are given by

| (6) |

is fed to "what" and "where" pathways.

3.2 What Pathway

is first processed by a feedforward CNN network. Since is contiguous about the angular axis in the original image , we use wrap-around padding [8] before convolution and max pooling layers. The output of the CNN is flattened, padded with the ouput of the location network , and passed to the following LSTM network. The classification network and baseline network are both fully connected networks that output the logits for classification, and baseline used in REINFORCE training, respectively. We minimize the cross entropy loss by backpropagating the derivatives through the classification network, the LSTM network and CNN of the what pathway. The parameters in the baseline network are trained by minimizing the MSE loss from and reward ; when the prediction is correct; and otherwise. Adam optimizer [25] is used to update the parameters.

3.3 Where Pathway

As in the "what" pathway, is first processed by a CNN. The flattened output padded with , is recurrently fed to a LSTM network. The location network consists of fully connected layers followed by parameterized Gaussian random number generators. The fully connected layers output a pair of real numbers that are used to generate the position of the FOV at the next time step:

| (7) |

and

| (8) |

could also be leaned is fixed in our experiments. The parameters in this pathway were updated using the REINFORCE algorithm with baseline subtraction [31]. The initial position of the FOV is randomized with uniform distributions:

| (9) |

and

| (10) |

where the center of the image is and the length of the larger side of the image is 2.

3.4 Training and Inference

The model samples patches times, and the final logit is used in the cross entropy loss function in the "what" pathway. During inference, the forward pass is repeated times, and the logits for each class are averaged.

4 Experiments

4.1 Training on ImageNet

We trained RAM-LPM on the Imagenet dataset [37]. To avoid information loss caused by cropping and shrinking, the images are first zero-padded either vertically and horizontally to make them a square. The images are then resized to 512 x 512 pixels. Note that the amount of computation for RAM-LPM does not depend on the number of pixels in an image. The model performance on the validation set is summarized in figure 2. The fast reflex-like output is followed by slower response which is more accurate. Larger also increases the accuracy.

4.2 Adversarial Attacks

The robustness of the trained model was tested on 300 or 1000 images randomly picked from ImageNet validation dataset, for SPSA and PGD attacks respectively. The gradient computed in "what" pathway of RAM-LPM with respect to the input was used in the PGD attack [10]. We preprocessed the images in two different ways since the difficulty of finding an adversarial example can depend on image size [44]. In the first preparation, we evaluated the robustness without cropping and resizing. Instead, zeros were padded horizontally or vertically to make the images a square. The padded regions were not changed by the adversarial attacks. In the second preparation, the short side of images was resized to 224 pixels preserving the aspect ratio, and then the central portion of 224 x 224 pixels was cropped. The images classified correctly by the trained model with and were perturbed by untargeted SPSA and PGD attacks implemented in advertorch [7]. For SPSA attack, we used , perturbation size , maximum iterations of , batch size of , and Adam learning rate of . These parameters are the same as in [44]. For PGD attack, we used , step size of , and maximum iterations of . We used the parameters specified in Appendix D of [10] except for the step size which was decreased from . This increased the success rate of the PGD attack.

The number of failures and successes of the attacks are summarized in Table 1. RAM-LPM was resistant to both attacks especially when the image sizes were not changed.

| SPSA (224 x 224) | SPSA (original size) | PGD (224 x 224) | PGD (original size) | |

| Success : Failure : Incorrect | 55 : 101 : 144 | 17 : 159 : 124 | 436 : 62 : 502 | 269 : 290 : 441 |

4.3 Rotation, Scale and Translation Invariance

We evaluated the performance RAM-LPM on SIM2MNIST [8] dataset. SIM2MNIST is a MNIST variant that is perturbed with clutters, rotation, scaling and translation. Table 2 shows our result along with the performance of other models reported in [8]. The performance of RAM-LPM is comparable to that of PTNs, suggesting RAM-LPM achieves representation invariance to various transformations.

5 Conclusion

In this work, we propose a novel approach for image classification that combines log-polar mapping and hard attention. We demonstrated that RAM-LPM achieves robustness against SPSA and PGD attacks. Robustness of brain-like models corroborates the notion that adversarial examples are human-centric phenomena, and suggests that RAM-LPM learns "robust features" [23]. Other beneficial properties of RAM-LPM include amount of computation independent of image size, reflex-like fast response, and invariance to rotation, scaling and translation. We hope our approach demonstrated the effectiveness of applying signal processing mechanisms in the brain to deep learning models. We expect our approach to be useful in safety-critical applications or analysis of high-resolution microscopy/satellite images where rotation/scale/translation invariance is exhibited.

Acknowledgement

We are grateful to Jonathan Hough of ExaWizards Inc. for helpful feedback on the manuscript.

References

- AEIK [18] Anish Athalye, Logan Engstrom, Andrew Ilyas, and Kevin Kwok. Synthesizing robust adversarial examples. In Jennifer Dy and Andreas Krause, editors, Proceedings of the 35th International Conference on Machine Learning, volume 80 of Proceedings of Machine Learning Research, pages 284–293, Stockholmsmässan, Stockholm Sweden, 10–15 Jul 2018. PMLR.

- BB [19] Wieland Brendel and Matthias Bethge. Approximating CNNs with bag-of-local-features models works surprisingly well on imagenet. In International Conference on Learning Representations, 2019.

- CSKH [90] Christine A Curcio, Kenneth R Sloan, Robert E Kalina, and Anita E Hendrickson. Human photoreceptor topography. Journal of comparative neurology, 292(4):497–523, 1990.

- DDS+ [09] J. Deng, W. Dong, R. Socher, L.-J. Li, K. Li, and L. Fei-Fei. ImageNet: A Large-Scale Hierarchical Image Database. In CVPR09, 2009.

- DMM [18] Beranger Dumont, Simona Maggio, and Pablo Montalvo. Robustness of rotation-equivariant networks to adversarial perturbations. arXiv preprint arXiv:1802.06627, 2018.

- DW [61] PM Daniel and D Whitteridge. The representation of the visual field on the cerebral cortex in monkeys. The Journal of physiology, 159(2):203–221, 1961.

- DWJ [19] Gavin Weiguang Ding, Luyu Wang, and Xiaomeng Jin. AdverTorch v0.1: An adversarial robustness toolbox based on pytorch. arXiv preprint arXiv:1902.07623, 2019.

- EABZD [17] Carlos Esteves, Christine Allen-Blanchette, Xiaowei Zhou, and Kostas Daniilidis. Polar transformer networks. arXiv preprint arXiv:1709.01889, 2017.

- EI [08] Lior Elazary and Laurent Itti. Interesting objects are visually salient. Journal of vision, 8(3):3–3, 2008.

- EKL [19] Gamaleldin Elsayed, Simon Kornblith, and Quoc V Le. Saccader: Improving accuracy of hard attention models for vision. In Advances in Neural Information Processing Systems, pages 700–712, 2019.

- ESC+ [18] Gamaleldin F. Elsayed, Shreya Shankar, Brian Cheung, Nicolas Papernot, Alex Kurakin, Ian Goodfellow, and Jascha Sohl-Dickstein. Adversarial examples that fool both computer vision and time-limited humans, 2018.

- FFY+ [08] Barbara L Finlay, Edna Cristina S Franco, Elizabeth S Yamada, Justin C Crowley, Michael Parsons, José Augusto PC Muniz, and Luiz Carlos L Silveira. Number and topography of cones, rods and optic nerve axons in new and old world primates. Visual Neuroscience, 25(3):289–299, 2008.

- Fuk [80] Kunihiko Fukushima. Neocognitron: A self-organizing neural network model for a mechanism of pattern recognition unaffected by shift in position. Biological cybernetics, 36(4):193–202, 1980.

- GKT [06] Rudy Guyonneau, Holle Kirchner, and Simon J Thorpe. Animals roll around the clock: The rotation invariance of ultrarapid visual processing. Journal of Vision, 6(10):1–1, 2006.

- GM [92] Melvyn A Goodale and A David Milner. Separate visual pathways for perception and action. Trends in neurosciences, 15(1):20–25, 1992.

- GRM+ [18] Robert Geirhos, Patricia Rubisch, Claudio Michaelis, Matthias Bethge, Felix A Wichmann, and Wieland Brendel. Imagenet-trained cnns are biased towards texture; increasing shape bias improves accuracy and robustness. arXiv preprint arXiv:1811.12231, 2018.

- HKP+ [11] Sonja B Hofer, Ho Ko, Bruno Pichler, Joshua Vogelstein, Hana Ros, Hongkui Zeng, Ed Lein, Nicholas A Lesica, and Thomas D Mrsic-Flogel. Differential connectivity and response dynamics of excitatory and inhibitory neurons in visual cortex. Nature neuroscience, 14(8):1045, 2011.

- HW [62] David H Hubel and Torsten N Wiesel. Receptive fields, binocular interaction and functional architecture in the cat’s visual cortex. The Journal of physiology, 160(1):106–154, 1962.

- HZRS [16] Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 770–778, 2016.

- IBSA [91] Oscar Inzunza, Hermes Bravo, Ricardo L Smith, and Manuel Angel. Topography and morphology of retinal ganglion cells in falconiforms: A study on predatory and carrion-eating birds. The Anatomical Record, 229(2):271–277, 1991.

- IFRS [19] Hidehiko K Inagaki, Lorenzo Fontolan, Sandro Romani, and Karel Svoboda. Discrete attractor dynamics underlies persistent activity in the frontal cortex. Nature, 566(7743):212–217, 2019.

- IMLP [13] Leyla Isik, Ethan M Meyers, Joel Z Leibo, and Tomaso A Poggio. The dynamics of invariant object recognition in the human visual system. American Journal of Physiology-Heart and Circulatory Physiology, 2013.

- IST+ [19] Andrew Ilyas, Shibani Santurkar, Dimitris Tsipras, Logan Engstrom, Brandon Tran, and Aleksander Madry. Adversarial examples are not bugs, they are features. arXiv preprint arXiv:1905.02175, 2019.

- JBKS [15] Aditya Jain, Ramta Bansal, Avnish Kumar, and KD Singh. A comparative study of visual and auditory reaction times on the basis of gender and physical activity levels of medical first year students. International Journal of Applied and Basic Medical Research, 5(2):124, 2015.

- KB [14] Diederik P. Kingma and Jimmy Ba. Adam: A method for stochastic optimization, 2014.

- KN [71] Kisou Kubota and Hiroaki Niki. Prefrontal cortical unit activity and delayed alternation performance in monkeys. Journal of neurophysiology, 34(3):337–347, 1971.

- KNL [06] Christoph Kayser, Kristina J Nielsen, and Nikos K Logothetis. Fixations in natural scenes: Interaction of image structure and image content. Vision research, 46(16):2535–2545, 2006.

- LBR+ [15] Yan Luo, Xavier Boix, Gemma Roig, Tomaso Poggio, and Qi Zhao. Foveation-based mechanisms alleviate adversarial examples. arXiv preprint arXiv:1511.06292, 2015.

- LLUZ [16] Wenjie Luo, Yujia Li, Raquel Urtasun, and Richard Zemel. Understanding the effective receptive field in deep convolutional neural networks. In Advances in neural information processing systems, pages 4898–4906, 2016.

- LMBC [90] MF Land, JN Marshall, D Brownless, and TW Cronin. The eye-movements of the mantis shrimp odontodactylus scyllarus (crustacea: Stomatopoda). Journal of Comparative Physiology A, 167(2):155–166, 1990.

- MHG+ [14] Volodymyr Mnih, Nicolas Heess, Alex Graves, et al. Recurrent models of visual attention. In Advances in neural information processing systems, pages 2204–2212, 2014.

- MLC [14] NJ Marshall, MF Land, and TW Cronin. Shrimps that pay attention: saccadic eye movements in stomatopod crustaceans. Philosophical Transactions of the Royal Society B: Biological Sciences, 369(1636):20130042, 2014.

- MMS+ [17] Aleksander Madry, Aleksandar Makelov, Ludwig Schmidt, Dimitris Tsipras, and Adrian Vladu. Towards deep learning models resistant to adversarial attacks. arXiv preprint arXiv:1706.06083, 2017.

- MS [85] Richard A Messner and Harold H Szu. An image processing architecture for real time generation of scale and rotation invariant patterns. Computer vision, graphics, and image processing, 31(1):50–66, 1985.

- MST [85] Lina Massone, Giulio Sandini, and Vincenzo Tagliasco. “form-invariant” topological mapping strategy for 2d shape recognition. Computer Vision, Graphics, and Image Processing, 30(2):169–188, 1985.

- MUM [83] Mortimer Mishkin, Leslie G Ungerleider, and Kathleen A Macko. Object vision and spatial vision: two cortical pathways. Trends in neurosciences, 6:414–417, 1983.

- RDS+ [15] Olga Russakovsky, Jia Deng, Hao Su, Jonathan Krause, Sanjeev Satheesh, Sean Ma, Zhiheng Huang, Andrej Karpathy, Aditya Khosla, Michael Bernstein, Alexander C. Berg, and Li Fei-Fei. ImageNet Large Scale Visual Recognition Challenge. International Journal of Computer Vision (IJCV), 115(3):211–252, 2015.

- Sch [77] Eric L Schwartz. Spatial mapping in the primate sensory projection: analytic structure and relevance to perception. Biological cybernetics, 25(4):181–194, 1977.

- She [10] Charles Scott Sherrington. Flexion-reflex of the limb, crossed extension-reflex, and reflex stepping and standing. The Journal of physiology, 40(1-2):28–121, 1910.

- STG [12] Alexander C. Schütz, Julia Trommershäuser, and Karl R. Gegenfurtner. Dynamic integration of information about salience and value for saccadic eye movements. Proceedings of the National Academy of Sciences, 109(19):7547–7552, 2012.

- SZS+ [13] Christian Szegedy, Wojciech Zaremba, Ilya Sutskever, Joan Bruna, Dumitru Erhan, Ian Goodfellow, and Rob Fergus. Intriguing properties of neural networks. arXiv preprint arXiv:1312.6199, 2013.

- TB [10] V Javier Traver and Alexandre Bernardino. A review of log-polar imaging for visual perception in robotics. Robotics and Autonomous Systems, 58(4):378–398, 2010.

- TSSDV [82] Roger B Tootell, Martin S Silverman, Eugene Switkes, and Russell L De Valois. Deoxyglucose analysis of retinotopic organization in primate striate cortex. Science, 218(4575):902–904, 1982.

- UOvdOK [18] Jonathan Uesato, Brendan O’Donoghue, Aaron van den Oord, and Pushmeet Kohli. Adversarial risk and the dangers of evaluating against weak attacks, 2018.

- WGB [11] Quanxin Wang, Enquan Gao, and Andreas Burkhalter. Gateways of ventral and dorsal streams in mouse visual cortex. Journal of Neuroscience, 31(5):1905–1918, 2011.

- WGTB [17] Daniel E Worrall, Stephan J Garbin, Daniyar Turmukhambetov, and Gabriel J Brostow. Harmonic networks: Deep translation and rotation equivariance. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 5028–5037, 2017.

- WH [92] JC Wilson and RM Hodgson. A pattern recognition system based on models of aspects of the human visual system. In 1992 International Conference on Image Processing and its Applications, pages 258–261. IET, 1992.

- XZL+ [18] Chaowei Xiao, Jun-Yan Zhu, Bo Li, Warren He, Mingyan Liu, and Dawn Song. Spatially transformed adversarial examples. arXiv preprint arXiv:1801.02612, 2018.

- ZKW [99] Christoph Zetzsche, Gerhard Krieger, and Bernhard Wegmann. The atoms of vision: Cartesian or polar? JOSA A, 16(7):1554–1565, 1999.

Appendix A Model Details

The hyperparameters for the experiments 4.1, and 4.3 are summarized in Table 3. The architecture of CNNs in "what" and "where" pathways are summarized in Figure 3, and 4.

| section 4.1 | section 4.3 | |

| 54 | 20 | |

| 108 | 24 | |

| 0.16 | 0.16 | |

| LSTM dim (what path) | 512 | 128 |

| LSTM dim (where path) | 512 | 128 |

| Learning rate for what path | 1e-4 | 1e-3 |

| Learning rate for where path | 1e-6 | 1e-5 |

| NumGlimpse | 10 | 20 |

| Batch size | 64 | 256 |

| Unit in FC layer (classification net.) | 512 | 128 |

| Unit in FC layer (baseline net.) | 512 | 128 |

| Unit in FC layer (location net.) | 128 | 128 |