Recognizing Exercises and Counting Repetitions in Real Time

Abstract

Artificial intelligence has become essential in a variety of industries, including the fitness industry. Human pose estimation has been one of the important research areas in computer vision over the last few years. In this project, pose estimation and deep machine learning techniques are combined to analyze performance and report feedback on the repetitions of performed exercises in real time. Applying machine learning in the fitness industry could help judges count the repetitions of any exercise during weightlifting or CrossFit competitions.

1 Introduction

The project provides a solution for counting the repetitions of a physical exercise in real time. The method uses pose estimation to track athletes, recognize the exercises they perform, count the repetitions, and analyze how the repetitions are performed. OpenPose [11] is a real-time network that detects human poses and extracts their 3D skeleton keypoints from an input video or an external camera. The proposed method uses the OpenPose model pretrained on the BODY_25 dataset, which is faster and more accurate than the one pretrained on the MSCOCO dataset [12]. The method uses these keypoints to track poses across frames and recognize the performed exercise. A set of exercise videos from the UCF101 dataset [8] is used to train the exercise recognition model. To count and analyze repetitions effectively, each exercise has its own preselected parameters: the upper and lower bounds of the range of motion, the major joint, and the type of motion. The method measures, filters, and smooths the angles of the major joint for the performed exercise. It then counts the repetitions of the exercise, determines how many are correct and incorrect, and locates their frames.

In CrossFit competitions, each athlete is paired with an individual judge who counts and evaluates the athlete's repetitions. In large competitions, judges are limited to counting repetitions without evaluating them [10]. Applying deep learning in this field could remove the need for so many judges. This might reduce the budget for judges' salaries during these competitions, as well as address the judges' limitation noted above.

The lack of publicly available datasets of CrossFit video recordings annotated with repetitions was a concern in this project. Therefore, a total of 25 videos were recorded and others were downloaded from YouTube. The performed exercises were then evaluated manually to test the proposed method.

The proposed method achieved an exercise recognition accuracy of 98.4%, which is high and close to the best result among the related works. In addition, it achieved a counting error within 1 repetition, which is similar to the best result among the related works. However, the proposed method is the only one that can recognize and report the incorrect repetitions out of the total. In addition, the model counts the total repetitions for each exercise or set separately if a person performs multiple exercises and keeps switching between them.

The main contributions of this project are:

- Offering a complete system to re-identify and track persons, recognize the performed exercises, and count each person's repetitions in real time.

- Analyzing the performed exercises without requiring the video to be recorded from a particular perspective.

- Distinguishing and reporting total, correct, and incorrect repetitions.

2 Related Works

Several existing methods offer solutions for recognizing exercises and/or counting repetitions, using either a smartwatch, a security camera system, or a webcam.

2.1 Smartwatch

In [9], a deep learning approach is developed for exercise recognition and repetition counting. The method uses raw sensor data from a smartwatch to train a neural network. It achieves a classification accuracy of 99.96% and counts correctly within an error of 1 repetition in 91% of the performed sets. To apply the method, every athlete must have a smartwatch, which requires a large budget. In addition, the method cannot indicate correct or incorrect repetitions.

2.2 GymCam

GymCam [4] is a camera-based system for detecting, recognizing, and tracking performed exercises. The system uses recorded gym video to train a neural network. It recognizes exercises with an accuracy of 93.6% and counts the number of repetitions within 1.7 on average. It can recognize and count the repetitions of multiple persons appearing in the frame. Like the previous method [9], GymCam cannot indicate correct or incorrect repetitions.

2.3 Pose Trainer

Pose Trainer [1] uses pose estimation to detect the athlete's exercise pose and provides detailed, useful feedback. The model relies on a recorded dataset of over 100 exercise videos of correct and incorrect form, based on personal training guidelines, and builds geometric-heuristic and machine learning methods for evaluation. This method cannot analyze the performance of multiple persons appearing in the frame, nor can it count the repetitions of the performed exercise. It is also limited to four exercises: bicep curl, front raise, shrug, and shoulder press. Another disadvantage is that the user is required to record the video from a particular perspective (facing the camera, side to the camera, etc.).

3 Proposed Method

The proposed method is divided into three successive phases: a pose tracker that identifies and tracks athletes so that the algorithm can be applied to multiple persons, exercise recognition that detects the name of each exercise that appears, and a counter that counts repetitions and indicates which are correct and incorrect. Figure 1 sketches a flowchart of the proposed method.

3.1 Pose Tracker

The proposed method identifies and tracks each person appearing in the frames so that each person's repetitions are analyzed and counted separately. For this task, the model computes a Euclidean distance score for each 3D body keypoint between the current and previous frames. The system then rearranges the detections and assigns each person a unique ID number. Figure 2 shows an example of the person re-identification technique applied to two persons appearing in the videos.
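The matching step described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the project's implementation: each pose is assumed to be a (K, 3) NumPy array with undetected keypoints stored as NaN, and the greedy nearest-match strategy, the function names, and the `max_dist` threshold are all hypothetical choices.

```python
import numpy as np

def mean_keypoint_distance(a, b):
    """Mean Euclidean distance over keypoints detected in both poses.

    Assumption: undetected keypoints are stored as NaN rows.
    """
    valid = ~np.isnan(a).any(axis=1) & ~np.isnan(b).any(axis=1)
    if not valid.any():
        return np.inf
    return float(np.linalg.norm(a[valid] - b[valid], axis=1).mean())

def assign_ids(prev_poses, curr_poses, next_id, max_dist=50.0):
    """Greedily match current poses to the IDs of the previous frame.

    prev_poses: dict {person_id: (K, 3) array} from the previous frame
    curr_poses: list of (K, 3) arrays detected in the current frame
    max_dist:   hypothetical cutoff above which a match is rejected
    Returns (dict {person_id: pose}, next unused id).
    """
    # All candidate pairs, closest first.
    pairs = sorted(
        (mean_keypoint_distance(p, c), pid, j)
        for pid, p in prev_poses.items()
        for j, c in enumerate(curr_poses)
    )
    assigned, used_ids, used_curr = {}, set(), set()
    for dist, pid, j in pairs:
        if dist > max_dist:
            break  # remaining pairs are even farther apart
        if pid in used_ids or j in used_curr:
            continue
        assigned[pid] = curr_poses[j]
        used_ids.add(pid)
        used_curr.add(j)
    # Any pose left unmatched is treated as a new person.
    for j, c in enumerate(curr_poses):
        if j not in used_curr:
            assigned[next_id] = c
            next_id += 1
    return assigned, next_id
```

A greedy match is simple and adequate when few people are in view; with many overlapping athletes, an optimal assignment method could be substituted.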

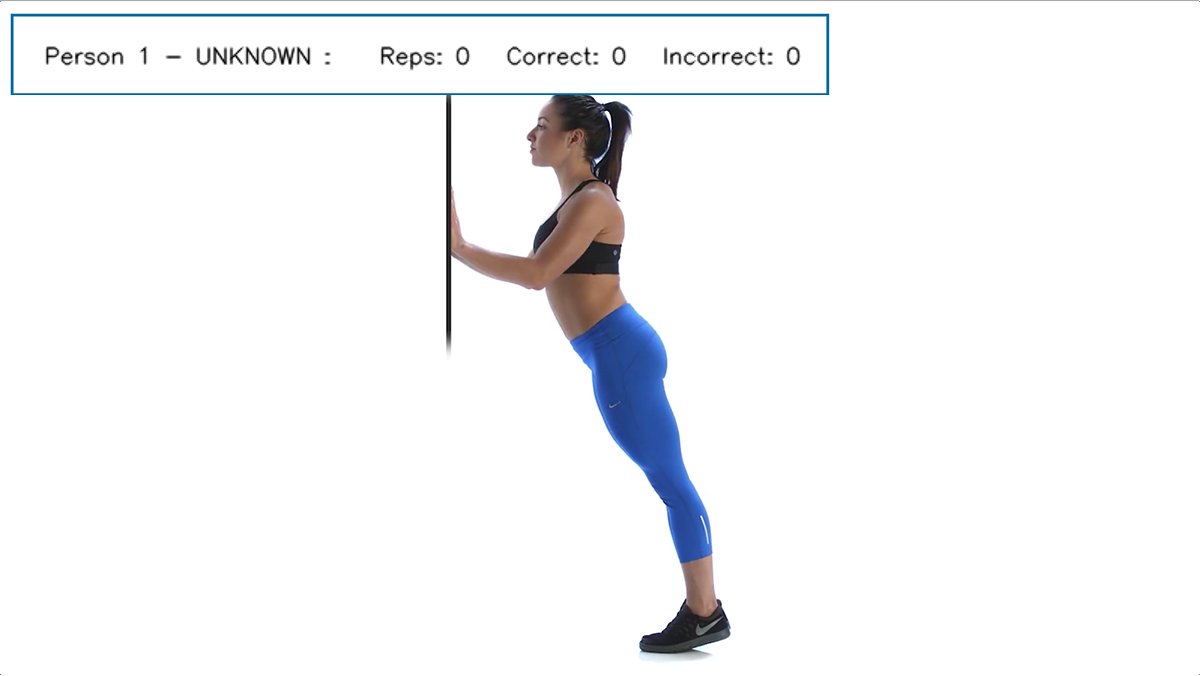

3.2 Exercise Recognition

Exercise recognition is essentially an action recognition model that uses 103 push-up, 101 pull-up, and 113 squat videos from the UCF101 dataset [8]. The model uses OpenPose [11] to extract 3D human body keypoints from each frame. The keypoints are then cleaned of undetected data and converted from a 3D to a 2D mesh, as shown in Figure 4. These keypoints are used as features to classify the performed exercises. The final dataset used to train the action recognition neural network contains 7437 push-up, 12707 pull-up, and 14357 squat sets of body keypoints. Figure 3 shows the architecture of the exercise recognition neural network. To distinguish known from unknown poses or motions, the model classifies poses with a reject option [3] using confidence interval thresholds. The mean of the softmax probabilities on the test set was collected for each class, and its 90% confidence interval was estimated to serve as a reference for the final model. The predicted probabilities for each class of the exercise recognition network must fall within these estimated confidence intervals for the predicted label to be confirmed; otherwise, the pose is labeled as unknown.
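The reject-option decision can be illustrated with a short sketch. The interval values below are hypothetical placeholders, not the thresholds estimated in this project, and checking only the winning class against its interval is a simplifying assumption.

```python
import numpy as np

LABELS = ["pull-up", "push-up", "squat"]

# Hypothetical (lower, upper) bounds per class. In the method, these would be
# the 90% confidence intervals estimated from the test-set softmax outputs.
INTERVALS = {
    "pull-up": (0.90, 1.00),
    "push-up": (0.88, 1.00),
    "squat": (0.92, 1.00),
}

def classify_with_reject(softmax, labels=LABELS, intervals=INTERVALS):
    """Return the predicted label, or "unknown" if it falls outside its interval."""
    probs = np.asarray(softmax, dtype=float)
    label = labels[int(np.argmax(probs))]
    low, high = intervals[label]
    return label if low <= probs.max() <= high else "unknown"
```

A confident prediction such as `[0.02, 0.03, 0.95]` is accepted as a squat, while an ambiguous one such as `[0.40, 0.35, 0.25]` is rejected as unknown.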

3.3 Repetitions Counter

For this task, OpenPose extracts 3D body keypoints from an input video or an external webcam. The method recognizes the exercise in each frame. Once 10 frames have passed, the system takes the most frequent prediction from the last 10 frames to determine the performed exercise. Eq. 1 shows the formula for this process.

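| $\hat{y}_f = \operatorname{mode}\left(y_{f-9}, y_{f-8}, \ldots, y_f\right)$ | (1) |

Where f is the frame index, y is the label predicted by the network, and $\hat{y}_f$ is the exercise label assigned at frame f.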
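The windowed vote over the last 10 frames can be sketched as below. The class name `LabelSmoother` and its interface are illustrative assumptions; only the window length of 10 comes from the text.

```python
from collections import Counter, deque

class LabelSmoother:
    """Keeps the most recent per-frame predictions and returns their mode."""

    def __init__(self, window=10):
        self.window = window
        self.recent = deque(maxlen=window)  # oldest prediction drops out automatically

    def update(self, label):
        """Add one per-frame prediction; return the mode once the window is full."""
        self.recent.append(label)
        if len(self.recent) < self.window:
            return None  # not enough frames yet
        return Counter(self.recent).most_common(1)[0][0]
```

Voting over a short window suppresses isolated misclassifications at the cost of a delay of a few frames when the athlete switches exercises.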

After determining the label of the performed exercise in the frame, the model uses the preselected parameters for that exercise. These parameters comprise the exercise's range of motion, the major joint, and the type of motion (push or pull). The system then calculates the angle of the major joint using the vector dot product [6], as shown in the following equations.

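| $\vec{BA} = A - B$ | (2) |

| $\vec{BC} = C - B$ | (3) |

| $\angle ABC = \arccos\left(\frac{\vec{BA} \cdot \vec{BC}}{\lVert\vec{BA}\rVert \, \lVert\vec{BC}\rVert}\right)$ | (4) |

Where A, B, and C are 3D points.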
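The dot-product angle calculation can be sketched in Python as follows, assuming B is the joint at the vertex of the angle (for example, the elbow between shoulder A and wrist C). The function name and the choice of degrees are illustrative.

```python
import numpy as np

def joint_angle(a, b, c):
    """Angle ABC in degrees, with B as the vertex (the joint).

    Note: the result is NaN if A or C coincides with B (zero-length vector).
    """
    a, b, c = (np.asarray(p, dtype=float) for p in (a, b, c))
    ba, bc = a - b, c - b
    cos_theta = np.dot(ba, bc) / (np.linalg.norm(ba) * np.linalg.norm(bc))
    # Clip to guard against rounding slightly outside [-1, 1].
    return float(np.degrees(np.arccos(np.clip(cos_theta, -1.0, 1.0))))
```

For instance, perpendicular limb segments give 90 degrees and a fully extended joint gives 180 degrees.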

3.3.1 Data Preprocessing

After measuring each joint angle, the system preprocesses the calculated angles in two steps: filling the gaps and normalizing the outliers.

To fill the gaps, increment/decrement operators are applied as shown in Eq.5.

| (5) |

Where, ∠: The angles, and f: Frame.
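As a rough illustration of gap filling, the sketch below substitutes plain linear interpolation for the increment/decrement rule. It is a stand-in technique, not the project's formula, and it assumes that frames with an undetected joint are stored as NaN.

```python
import numpy as np

def fill_gaps(angles):
    """Replace NaN entries with values interpolated linearly between neighbors.

    Gaps at the start or end are filled with the nearest known value.
    """
    angles = np.asarray(angles, dtype=float)
    frames = np.arange(len(angles))
    known = ~np.isnan(angles)
    if not known.any():
        return angles.copy()  # nothing to interpolate from
    return np.interp(frames, frames[known], angles[known])
```

With this stand-in, a sequence such as 10, gap, gap, 40 becomes 10, 20, 30, 40, which steps the angle up by a constant amount per frame across the gap.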

To normalize the outliers, the following proposed formula is applied for N iterations.

| (6) |

Where, ∠: The angles, f: Frame, and M: Middle of range of motion.

3.3.2 Counting Algorithm

Figure 5 represents two cycles (repetitions): A-C (1st cycle) and E-G (2nd cycle). Put simply, if the angle passes the middle line of the range of motion (point B), this counts as one repetition. If the following angles (the trend) pass the upper or lower bound of the range of motion (point C) and then continue past the opposite bound (point E), the repetition counts as correct. Otherwise, it counts as incorrect (point G). So, the first cycle is counted as a correct repetition, while the second one is incorrect.
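One possible reading of this counting rule is sketched below. It assumes the movement starts near the upper bound, moves toward the lower bound, and returns; a repetition is registered at each downward crossing of the middle line and is marked correct only if the angle reaches both bounds before the next repetition starts. The function name and this exact bookkeeping are assumptions, not the project's code.

```python
def count_repetitions(angles, lower, upper):
    """Return (total, correct, incorrect) for a sequence of joint angles."""
    if len(angles) == 0:
        return 0, 0, 0
    mid = (lower + upper) / 2.0
    total = correct = 0
    in_rep = False                  # True once a repetition has started
    hit_lower = hit_upper = False   # bounds reached during the current repetition
    prev = angles[0]
    for angle in angles[1:]:
        if prev >= mid > angle:     # downward midline crossing: new repetition
            if in_rep and hit_lower and hit_upper:
                correct += 1        # the previous repetition covered the full range
            total += 1
            in_rep, hit_lower, hit_upper = True, False, False
        if in_rep:
            if angle <= lower:
                hit_lower = True
            if angle >= upper:
                hit_upper = True
        prev = angle
    if in_rep and hit_lower and hit_upper:
        correct += 1                # close out the final repetition
    return total, correct, total - correct
```

For example, with `lower=90` and `upper=160`, a full repetition followed by a shallow one that never reaches 90 degrees yields two total repetitions, one correct and one incorrect.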

3.4 Real-time Implementation

One of the major considerations in this project is running the model in real time. The main challenge was achieving a higher frames-per-second (FPS) value using the available computer hardware and equipment. The proposed model was run and tested on a MacBook Pro with an NVIDIA GeForce GT 750M (2 GB) and an integrated 720p FaceTime HD webcam. This hardware is limited and inefficient for running deep learning models. Therefore, keypoint extraction with the OpenPose model was executed in Google Colab [2], a free cloud service that provides a free GPU. Hence, the proposed method was tested using prerecorded video. Table 1 shows the results of two attempts to run a real-time OpenPose model with different datasets on the CPU of the MacBook Pro.

| Dataset | FPS | Accuracy |
|---|---|---|
| BODY_25 [11] | 0.6 | High |
| MobileNet-thin [5] | 4.2 | Low |

The MobileNet-thin model extracts only 2D keypoints. So, there was an attempt to use the 3D pose baseline model [7] as an intermediate step to convert the keypoints from 2D to 3D. However, the model used a large amount of RAM, which made this attempt inefficient.

Because of these low FPS values on the CPU, the proposed method was built and evaluated using prerecorded videos at 24 to 30 FPS. However, the final system was run and tested on a GPU using a Jetson AGX Xavier, which achieved 30 FPS.

4 Results

Since the model is divided into three main tasks, each task has its own result. However, the pose tracker was not evaluated in the current model.

4.1 Exercise Recognition

Figures 6 and 7 visualize the performance of the exercise recognition neural network during training over 50 epochs. Tables 2 and 3 present the accuracy of the proposed method compared with the related works and the class-wise accuracy, respectively. Since the related works use different methods and datasets, a fair comparison between the models is not possible. However, exercise recognition is only one part of the final project.

| Method | Accuracy |
|---|---|
| Smartwatch | 99.96% |
| GymCam | 93.6% |
| Proposed Method | 98.4% |

| Class | Precision | Recall | F1-Score |
|---|---|---|---|
| Pull up | 0.975 | 0.982 | 0.979 |
| Push up | 0.975 | 0.968 | 0.972 |
| Squat | 0.992 | 0.989 | 0.990 |

4.2 Repetitions Counter

The following sections present samples of the preprocessed data (angles) and the accuracy of counting repetitions for each method.

4.2.1 Data Preprocessing

Figure 8 shows two graphs of unfiltered and filtered angles. The model filled the gaps and normalized the outliers in the signal (angles).

4.2.2 Counting Repetitions

Table 4 presents the counting error of the proposed method compared with the related works. This comparison is also not fair because of the limited dataset. In addition, no existing method counts correct and incorrect repetitions. Note that correct/incorrect counting in the proposed method depends on the preselected parameters of the exercise.

| Method | Error |
|---|---|
| Smartwatch | 1 rep |
| GymCam | 1.7 reps |
| Proposed Method | 1 rep |

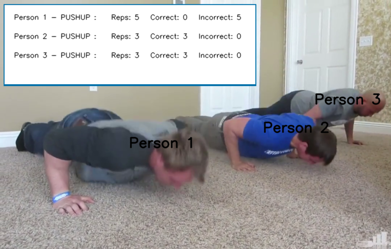

4.3 Output Format

The system generates two output formats. The first is a video labeled with the total, correct, and incorrect repetitions, as shown in Figure 9. The second is a text report of each detected repetition with its time in the original video. Below is an example of the text report for correctly and incorrectly performed push-ups:

5 Conclusion and Future Work

Applying deep learning in this field could help judges during sports competitions. However, I believe that human judgment currently still does better than the computer. In future work, the system will be developed to analyze complex exercises that use multiple joints. The current method cannot distinguish between competitors and judges; it simply ignores any unknown movement by any person appearing in the frames. In future work, the system will be developed to identify and ignore the judges and the audience. Another goal is to contribute a public dataset of CrossFit videos annotated with their repetitions.

References

- [1] Chen, Steven & Yang, Richard., Pose Trainer: Correcting Exercise Posture using Pose Estimation, 2018.

- [2] Fuat., Google Colab Free GPU Tutorial, 2018. https://medium.com/deep-learning-turkey/google-colab-free-gpu-tutorial-e113627b9f5d

- [3] Hanczar, B & Dougherty, E., Classification with reject option in gene expression data, 2008.

- [4] Khurana R., Ahuja K., Yu Z., Mankoff J., Harrison C., & Goel M., GymCam: Detecting, Recognizing and Tracking Simultaneous Exercises in Unconstrained Scenes, 2018.

- [5] Kim I., Deep Pose Estimation implemented using Tensorflow with Custom Architectures for fast inference, 2018.

- [6] Krishnan M., Using the law of cosines and vector dot product formula to find the angle between three points, 2019.

- [7] Martinez J., Hossain R., Romero J., & Little J., A simple yet effective baseline for 3d human pose estimation, 2017.

- [8] Soomro K., Roshan A. & Shah M., UCF101: A Dataset of 101 Human Action Classes From Videos in The Wild, 2012.

- [9] Soro, A., Brunner, G., Tanner, S., & Wattenhofer, R., Recognition and Repetition Counting for Complex Physical Exercises with Deep Learning, 2019.

- [10] Walkerm, E., Are You Ready To Judge?, 2016. http://www.crossfit1864.com/blog/2016/2/26/29-feb-2016-are-you-ready-to-judge

- [11] Zhe C., Tomas S., Shih-En W., & Yaser S., OpenPose: Realtime Multi-Person 2D Pose Estimation using Part Affinity Fields, 2018.

- [12] Zhe C., Tomas S., Shih-En W., & Yaser S., OpenPose - Frequently Asked Question (FAQ), 2019.