ReasonPix2Pix: Instruction Reasoning Dataset for Advanced Image Editing

Abstract

Instruction-based image editing focuses on equipping a generative model with the capacity to adhere to human-written instructions for editing images. Current approaches typically comprehend explicit and specific instructions. However, they often exhibit a deficiency in executing active reasoning capacities required to comprehend instructions that are implicit or insufficiently defined. To enhance active reasoning capabilities and impart intelligence to the editing model, we introduce ReasonPix2Pix, a comprehensive reasoning-attentive instruction editing dataset. The dataset is characterized by 1) reasoning instruction, 2) more realistic images from fine-grained categories, and 3) increased variances between input and edited images. When fine-tuned with our dataset under supervised conditions, the model demonstrates superior performance in instructional editing tasks, independent of whether the tasks require reasoning or not. The code will be available at https://github.com/Jin-Ying/ReasonPix2Pix.

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/6cd0669f-8ee8-4c1a-928c-2554232cea59/x1.png)

1 Introduction

Instruction-based image editing aims at furnishing a generative model with the capacity to adhere to human-written instructions for editing images, which is vital to facilitate the AI-Generated Content (AIGC) system’s comprehension of human intentions.

Prevailing instruction-based image editing frameworks typically comprehend explicit and specific instructions, such as “replace the fruits with cake”. Unfortunately, these models display a deficiency in active reasoning capabilities, i.e. understanding the instructions rather than extracting words from them. As shown in Figure 2, one typical instruction-based image editing framework, InstructPix2Pix, fails to realize “she prefers face mask to sunglasses”, adding sunglasses to the woman, which is unreasonable. Meanwhile, the model lacks the ability to comprehend the given image. For example, for a simple straightforward instruction “make it 50 years later”, with a variety of given images, the editing outcomes should be different. But in Figure 3, previous methods simply turn the person to an older one or even fail to edit the image, which is absolutely incorrect.

On the other hand, these methods also lack the capacity to comprehend implicit or inadequately defined instructions. This requires manual intervention to either make implicit instructions explicit or deconstruct the instructions into multiple explicit, specific instructions to align with the capabilities of these models. For example, the instruction “make the room tidy” necessitates manual partitioning into various steps such as “replace loose clothing with neatly folded clothing”, “remove garbage from the floor”, and “smooth the bed linens”, among others. Similarly, the implicit instruction “she is the star of the show” necessitates human intervention to render it explicit as “add some sparkles and a spotlight effect to the image”. So, enhancing the self-reasoning capabilities is not only user-friendly, but also key to the advancement of next-generation intelligent AIGC systems.

Input

Add a pair of sunglasses

She prefers face mask to sunglasses

The potential of generative models aided by MLLM for reasoning-aware instruction editing is considerable. Nonetheless, existing datasets designated for instruction editing fail to fully unlock and exploit the inherent reasoning capabilities of the models. Thus, we develop a comprehensive reasoning-attentive instruction editing dataset, ReasonPix2Pix, comprising both image pairs and accompanying reasoning instructions. ReasonPix2Pix is characterized by: 1) implicit instructions to further the model’s reasoning capability, 2) an abundance of real images taken from fine-grained categories, and 3) increased variances between the input and the subsequently edited images, particularly at the geometric level. We compare it with previous datasets in Table 1.

Further, we inject MLLM into the image editing model and fine-tune it on our dataset, which enhances the reasoning abilities of image editing and significantly boosts the quality of instructional editing. Our contribution can be summarized as follows:

-

•

We propose image editing with instruction reasoning, an interesting task to enhance the model’s intelligence in understanding human intent.

-

•

We develop a comprehensive reasoning-attentive instruction editing dataset, ReasonPix2Pix. It comprises both image pairs and accompanying reasoning instructions.

-

•

We fine-tune a simple framework on our dataset. Without hustle and bustle, our model demonstrates not only superior performance in instruction editing tasks that do not necessitate reasoning but also performs adequately in tasks necessitating reasoning.

2 Related Work

Image Editing

Image editing is a fundamental computer vision task, which can also be viewed as an image-to-image translation. Numerous works [13, 14, 19, 29, 35] have been invented to tackle this task, especially after the proposal of Generative Adversarial Networks (GAN) [20, 67, 19]. One line of methods [1, 2, 3, 10, 50, 41, 7] plug the raw image into latent space [21, 22], and them manipulate it. These methods are proven to be effective in converting image style, adding and moving objects in the images. Recently, with the explosion of multi-modal learning, text information can be embedded through models such as CLIP, and then serves as guidance for image editing [25, 37, 62, 12, 34, 24, 4, 8, 5]. These methods enable models to edit images according to the given text.

Diffusion Models

Diffusion model [48] is among the most popular generative models, showing strong performance in image synthesis [49, 17, 9, 18, 46, 44, 65]. It learns the probability distribution of a given dataset through a diffusion process. Recently, text-to-image diffusion models [34, 42, 40, 45], such as Stable Diffusion [42], achieve great success in converting text to high-quality images.

Diffusion Models for Image Editing

Some of the diffusion models are naturally capable of editing images [32, 23, 4, 40, 16]. However, when applied to practice, these models show weak stability (i.e., generating similar images when given similar texts). This problem is alleviated by imposing constrains on models [16] via Prompt-to-Prompt. Different from previous methods that tackle generated images, SDEdit [32] edits real images by a noising-and-denoising procedure.

Image inpainting can be viewed as a more refined image editing. It converts text inputs and user-drawn masks [40, 4, 23] to images of a specific category or style by learning from a small set of training samples [11, 43]. InstructPix2Pix [6] simplify the generation procedure, taking one input image and one instruction to edit it without any training. It proposes a large-scale dataset, with paired images and the corresponding instruction. However, it only contains straightforward instructions, which hinders it from being applied to complicated real-world scenarios. So in this paper, we construct the instruction reasoning dataset for improving image editing.

Multi-modal Large Language Model

With the rapid development of Large Language Models (LLM), they are extended to more modalities (e.g. vision), forming multi-modal large language models. BLIP-2 [27] and mPLUG-OWL [59] introduce a visual encoder to embed images, and then combine them with text embeddings. Instruct-tuning is widely adopted to transfer the ability in LLM to the visual domain [26, 28, 66]. Another line of works use prompt engineering [56, 58, 47, 31, 57], which sacrifices end-to-end training. The application of multi-modal large language models to vision tasks [53] are proven to be effective in grounding [38], and object detection [39, 61].

3 Methodology

Our objective is to perform image editing in accordance with human instructions, with a particular emphasis on reasoning instructions. Given an input image denoted as and a human instruction denoted as , our model is designed to comprehend the explicit or implicit intents of human and subsequently generate the output image that aligns with the provided instruction. To achieve this objective, we introduce ReasonPix2Pix (Section 3.2), a dataset specifically tailored for instruction-based image editing with a focus on reasoning capabilities. Taking our dataset as the foundation training data, we fine-tune a simple framework that comprises a multimodal large language model coupled with a diffusion model.

3.1 Preliminaries

InstructPix2Pix Dataset

InstructPix2Pix [6] produces an important large-scale paired dataset to enable instruction-based image editing. Concretely, As shown in Figure 4, it contains 1) Input Image and Input Caption , 2) Edited Image and Edited Caption , and 3) Text Instruction .

V3Det Dataset

V3Det [52] is a vast detection dataset with 13,204 categories, and over images. The images appear to be realistic and complex, developing a more general visual perception system.

3.2 ReasonPix2Pix

| Input Image | Edited Image | Instruction | Number | |

|---|---|---|---|---|

| Part I | InstructPix2Pix | InstructPix2Pix | Generated | 8,013 |

| Part II | InstructPix2Pix | Generated | Generated | 4,141 |

| Part III | V3Det | Generated | Generated | 28,058 |

Towards injecting reasoning ability into the image editing model, we construct a comprehensive reasoning-attentive instruction editing dataset.

According to the generation procedure, our generated dataset can be divided into three parts. As shown in Table 2, Part I takes the original image pair in InstructPix2Pix, with our generated instruction to enable instruction reasoning, in Part II we start from the input image from InstructPix2Pix, generate our own edited image and instructions, and in Part III, we take more realistic images from V3Det, and generate edited images and instructions.

Filtering

Though achieving great success in instruction-based image editing, InstructPix2Pix model suffers from various failure cases. One typical failure case is that the model is prone to output the original image, i.e. conduct no editing. Delving into the dataset, we observe that a proportion of edited images is highly similar to the input image. Therefore, we need to filter this part of data first, we distinguish them by

| (1) |

where is the threshold value of the divergence between the input image and the edited image. If is too small, the input image and edited image appear to be too similar, so this pair of data will be abandoned. can be a pre-defined value or rank value (e.g. pick out the worst data).

Part I: Reasoning Instruction generation

For this part of data, we take the input and edited image directly from the original InstructPix2Pix Dataset. To equip the model with the reasoning ability to understand instructions, we need to convert existing instructions to reasoning instructions , which is indirect, but still accurate. We take the large-scale language model, GPT-3.5 [36] (denoted as ) to generate our reasoning instruction.

As shown in Figure 5, we take input caption, edited caption, and original instruction from InstructPix2Pix dataset, inject them into GPT-3.5, and ask GPT-3.5 to generate candidate instructions.

| (2) |

where is the generation prompt we design to project the input caption, edited caption, and original instruction to a new instruction . With our prompt, the generated instruction should be 1) indirect, and 2) in a similar effect to the original instructions. For each input, we repeat this procedure for times to generate multiple candidate instructions .

Then we again ask GPT-3.5 to distinguish the best instruction among them.

| (3) |

where is the selection prompt we design to ask the GPT model to select the most suitable instruction from . The selected instruction is and it will serve as the reasoning instruction in Part I data. Therefore, the Part I data includes , , and 3) .

Part II III: Image Editing and Reasoning Instruction Generation

To further improve the model capability, we expand the dataset with the other two parts of data. These parts of data not only enhance the reasoning ability of our model, but also aim at improving our model’s capability of coping with more realistic images with fine-grained categories and more variances between the input and edited image.

We take the original input images from InstructPix2Pix and V3Det. The former is the same with Part I but the latter contains abundant real images from large amounts of categories. As shown in Figure 6, since the images from V3Det have no captions, we first generate a caption through BLIP2. For images from InstructPix2Pix, we take the original input caption directly. For simplicity, we denote the input image and caption as and .

The caption is passed to a Spacy model , an advanced Natural Language Processing (NLP) model to recognize entities in sentences. Here we utilize it to extract the candidate categories,

| (4) |

where extracts candidate categories. For instance, in Figure 6, Spacy takes categories, i.e. butterfly and flower.

With these categories, we can locate the corresponding object in the image by Grounding DINO [30].

| (5) |

where is the bounding box of object belongs to category in image .

Then we inject the caption and candidate categories into GPT-3.5. Here we design another prompt to ask GPT-3.5 to output 1) one selected category, 2) the target category we need to replace it with, and 3) the reasoning instruction.

| (6) |

where is the prompt we introduce. It takes input caption and candidate categories and produces the selected category , the target category , and the reasoning instruction. We will replace with . For Figure 6, GPT selects butterfly and produces the target category bee, therefore, the butterfly will be transformed into a bee.

Finally, GLIGEN will conduct object replacement from category to category.

| (7) |

where is the generated edited image, and is the bounding box of the object belongs to . Through this procedure, we obtain paired image ( and ) and instruction , forming Part II and Part III in our dataset.

We present some samples of our data here. From Figure 7, our dataset has complicated reasoning instructions (e.g. “A company has planned a new project on clean energy”), more changes between input and edited image, especially on geometric level, and more realistic images.

3.3 Dataset Utilization

We utilize our extensive model to enhance the active reasoning capability of the editing model. Concretely, we design a simple framework, which integrates a Multimodal Large Language Model (MLLM) into the diffusion model, as depicted in Figure 8. Diverging from previous methodologies that comprehend human intent solely through text, the MLLM enhances understanding by incorporating both the instruction and the input image. Formally, the instruction feature with human’s intent can be formulated as

| (8) |

where is the MLLM. is the output of , containing the multimodal understanding of our instruction.

Then we can inject seamlessly into the editing model. The image generative model can edit the input image under the supervision of .

| (9) |

where is the image model, and is the corresponding output.

Considering the large amounts of parameters in LLM, we fixed it when fine-tuning our model. With our ReasonPix2Pix dataset, the model is fine-tuned end-to-end.

4 Experiment

4.1 Implementation Details

We utilize GPT-3.5-turbo when generating our dataset. We adopt Stable Diffusion v1.5 [42] and LLaVA-7B-v1.5 [28] in our fine-tuning process. The images are resized to , and the base learning rate is during training [55, 54, 63, 64]. Other training configs are consistent with those in InstructPix2Pix [6].

We utilize the test data of V3Det to construct a benchmark by the data generation pipeline in Figure 6, with images. Meanwhile, we record the selected category and the target new category, so we can formulate a straightforward instruction by multiple templates, e.g. Turn A to B. Therefore, our test data consists of the input image and its caption, the ground-truth edited image and its caption, and the straightforward instruction and reasoning instruction, respectively. We evaluate our method, as well as previous methods on this test set.

4.2 Qualitative Results

Image Quality

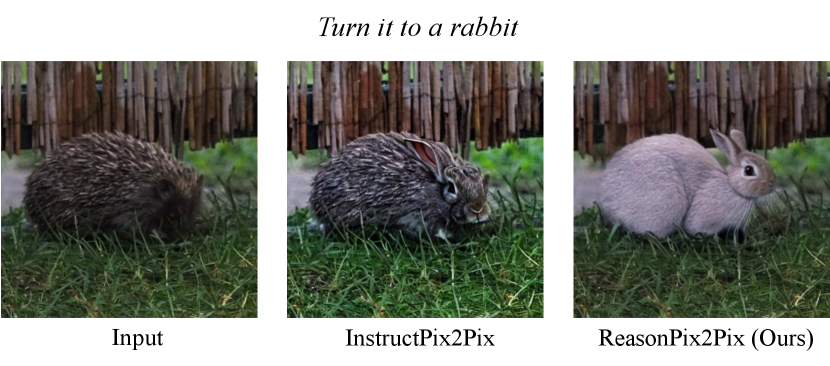

Here we compare the performance of our method with previous methods under straightforward instructions. As in Figure 9, InstructPix2Pix fails to turn the hedgehog in the image to a rabbit. Our method is able to convert these complicated categories, generating more vivid results.

Reasoning Ability

To compare the reasoning ability, first we start from relatively simple instruction. As shown in Figure 10, when the instruction is “remove the color”. Previous methods can to some extent, understand the instruction, but the generated results are not accurate. InstructPix2Pix follows the instruction to convert the image to black-and-white, but it also removes the background. On the contrary, our ReasonPix2Pix understands the instruction and gives adequate results.

| Method | Direct Instruction | Reasoning Instruction | ||||||||

|---|---|---|---|---|---|---|---|---|---|---|

| L1 | L2 | CLIP-I | DINO | CLIP-T | L1 | L2 | CLIP-I | DINO | CLIP-T | |

| Null-text [33] | 0.0931 | 0.0354 | 0.8542 | 0.8036 | 0.2479 | 0.2637 | 0.1165 | 0.6326 | 0.5249 | 0.1706 |

| InstructPix2Pix [6] | 0.1265 | 0.0423 | 0.8042 | 0.7256 | 0.2465 | 0.2984 | 0.1385 | 0.6034 | 0.5142 | 0.1629 |

| MagicBrush [60] | 0.0706 | 0.0247 | 0.9127 | 0.8745 | 0.2568 | 0.2239 | 0.0938 | 0.6755 | 0.6125 | 0.1941 |

| EDICT [51] | 0.1149 | 0.0385 | 0.8137 | 0.7485 | 0.2490 | 0.2753 | 0.1296 | 0.6282 | 0.5526 | 0.1703 |

| InstructDiffusion [15] | 0.0824 | 0.0295 | 0.8873 | 0.8461 | 0.2506 | 0.2145 | 0.0863 | 0.6904 | 0.6375 | 0.2046 |

| ReasonPix2Pix (Ours) | 0.0646 | 0.0203 | 0.9246 | 0.8920 | 0.2553 | 0.1347 | 0.0476 | 0.7824 | 0.7216 | 0.2350 |

| InstructPix2Pix [6] | MagicBrush [60] | InstructDiffusion [15] | ReasonPix2Pix (Ours) | |

|---|---|---|---|---|

| Direct Instruction | 16 | 21 | 28 | 35 |

| Reasoning Instruction | 13 | 15 | 18 | 54 |

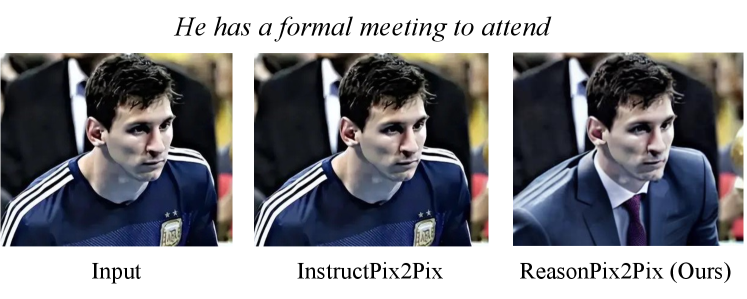

Then we move to more complicated instructions. As demonstrated in Figure 11, with an indirect instruction “he has a formal meeting to attend”, previous InstructPix2Pix cannot tackle it, outputing the original image without any editing. Our method can understand the instruction, and ask him to wear formal clothes to attend the meeting.

4.3 Quantitative Results

Besides the qualitative results above, we also compare the quantitative metrics with previous methods in Table 3, with direct instruction and reasoning instruction respectively. We report the L1 and L2 distance between the generated images and ground-truth images, and the cosine distance between their CLIP and DINO embeddings respectively. In addition, we also report CLIP-T, the cosine similarity between CLIP features of the target caption and the generated image. With traditional direct instructions, our method achieves competitive performance among previous methods, proving the quality of our generated images. When it comes to reasoning instruction that requires understanding, previous methods show degraded performance, but our method achieves remarkably higher results than other methods.

Meantime, we also establish a user study to compare our method with previous methods. We random sample samples generated by different models respectively, and ask workers to evaluate them ( per person). The workers are asked to pick out the best image among candidates. From Table 4, with direct instruction, our method is mildly superior to previous methods. When the instructions become reasoning ones, the gap between our method and previous methods becomes larger.

4.4 Analysis

Qualitative Results

We evaluate the effectiveness of the three parts of our dataset. Figure 12 demonstrates the results when training our method with merely Part I, Part I, and Part II, and the whole dataset respectively. We can observe that when confronting instructions that require reasoning, previous methods such as InstructPix2Pix are prone to edit nothing or produce unreasonable editing results. With Part I data, the model seems to understand the instructions, but it is still hard to provide an edited image. It is consistent with our proposal that with merely the images in InstructPix2Pix dataset, the editing ability of the model is still limited. On the other hand, when introducing Part II and Part III data sequentially, the editing results become increasingly better. With all the data in our dataset, the model is capable of understanding the instruction and producing the corresponding results.

On the other hand, in our simple framework, we integrate Multi-modal Large Language model into the image editing model, which naturally has reasoning ability. Here, we compare the results of InstructPix2Pix, adding MLLM without fine-tuning, and our model that is fine-tuned on ReasonPix2Pix. Figure 13 shows that without fine-tuning, it is hard for the image editing model to take the output of MLLM. When fine-tuned on our dataset, the model is capable of understanding and editing.

Quantitative Results

In Figure 15(a) we compare the quantitative results. CLIP-I rises when we add Part I, II, and III data. Therefore, the three parts of our dataset are all indispensable. Meanwhile, as shown in Figure 15(b), MLLM brings about minor improvements, and our dataset obviously advances model performance. The quantitative results are again, consistent with our qualitative results.

Comprehensive Understanding

Finally, let us return the case in Sec. 1, the instruction “make it 50 years later”. As mentioned in Sec. 1, previous methods cannot cope with some cases such as fruits. Meanwhile, understanding instruction is not a single-modal problem, a statue of a man will not become an old man after 50 years. With our framework and dataset, the model takes both image and instruction into consideration. As a result, it provides reasonable results according to different inputs. After 50 years, a young beautiful woman becomes an old woman, the apple turns into a rotted one, and the statue becomes a broken one with dust.

4.5 Limitations

Our dataset size is still limited due to API costs. We have formulated a clear data generation pipeline in this paper. If needed, researchers can expand our dataset to more than .

5 Conclusion

In this paper, we aim at enhancing the reasoning ability of editing models to make it more intelligent. Concretely, we introduce ReasonPix2Pix, a dedicated reasoning instruction editing dataset to inject reasoning ability to image editing. We fine-tune a simple framework on our proposed dataset. Extensive experiment results prove that our method achieves competitive results, no matter the instruction requires reasoning or not.

References

- Abdal et al. [2019] Rameen Abdal, Yipeng Qin, and Peter Wonka. Image2stylegan: How to embed images into the stylegan latent space? In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 4432–4441, 2019.

- Abdal et al. [2020] Rameen Abdal, Yipeng Qin, and Peter Wonka. Image2stylegan++: How to edit the embedded images? In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pages 8296–8305, 2020.

- Alaluf et al. [2022] Yuval Alaluf, Omer Tov, Ron Mokady, Rinon Gal, and Amit Bermano. Hyperstyle: Stylegan inversion with hypernetworks for real image editing. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 18511–18521, 2022.

- Avrahami et al. [2022] Omri Avrahami, Dani Lischinski, and Ohad Fried. Blended diffusion for text-driven editing of natural images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 18208–18218, 2022.

- Bar-Tal et al. [2022] Omer Bar-Tal, Dolev Ofri-Amar, Rafail Fridman, Yoni Kasten, and Tali Dekel. Text2live: Text-driven layered image and video editing. In European Conference on Computer Vision, pages 707–723. Springer, 2022.

- Brooks et al. [2023] Tim Brooks, Aleksander Holynski, and Alexei A Efros. Instructpix2pix: Learning to follow image editing instructions. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 18392–18402, 2023.

- Chai et al. [2021] Lucy Chai, Jonas Wulff, and Phillip Isola. Using latent space regression to analyze and leverage compositionality in gans. In International Conference on Learning Representations, 2021.

- Crowson et al. [2022] Katherine Crowson, Stella Biderman, Daniel Kornis, Dashiell Stander, Eric Hallahan, Louis Castricato, and Edward Raff. Vqgan-clip: Open domain image generation and editing with natural language guidance. In European Conference on Computer Vision, pages 88–105. Springer, 2022.

- Dhariwal and Nichol [2021] Prafulla Dhariwal and Alexander Nichol. Diffusion models beat gans on image synthesis. Advances in Neural Information Processing Systems, 34:8780–8794, 2021.

- Epstein et al. [2022] Dave Epstein, Taesung Park, Richard Zhang, Eli Shechtman, and Alexei A Efros. Blobgan: Spatially disentangled scene representations. In European Conference on Computer Vision, pages 616–635. Springer, 2022.

- Gal et al. [2022a] Rinon Gal, Yuval Alaluf, Yuval Atzmon, Or Patashnik, Amit H Bermano, Gal Chechik, and Daniel Cohen-Or. An image is worth one word: Personalizing text-to-image generation using textual inversion. arXiv preprint arXiv:2208.01618, 2022a.

- Gal et al. [2022b] Rinon Gal, Or Patashnik, Haggai Maron, Amit H Bermano, Gal Chechik, and Daniel Cohen-Or. Stylegan-nada: Clip-guided domain adaptation of image generators. ACM Transactions on Graphics (TOG), 41(4):1–13, 2022b.

- Gatys et al. [2015] Leon A Gatys, Alexander S Ecker, and Matthias Bethge. A neural algorithm of artistic style. arXiv preprint arXiv:1508.06576, 2015.

- Gatys et al. [2016] Leon A Gatys, Alexander S Ecker, and Matthias Bethge. Image style transfer using convolutional neural networks. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 2414–2423, 2016.

- Geng et al. [2023] Zigang Geng, Binxin Yang, Tiankai Hang, Chen Li, Shuyang Gu, Ting Zhang, Jianmin Bao, Zheng Zhang, Han Hu, Dong Chen, et al. Instructdiffusion: A generalist modeling interface for vision tasks. arXiv preprint arXiv:2309.03895, 2023.

- Hertz et al. [2022] Amir Hertz, Ron Mokady, Jay Tenenbaum, Kfir Aberman, Yael Pritch, and Daniel Cohen-Or. Prompt-to-prompt image editing with cross attention control. arXiv preprint arXiv:2208.01626, 2022.

- Ho et al. [2020] Jonathan Ho, Ajay Jain, and Pieter Abbeel. Denoising diffusion probabilistic models. Advances in Neural Information Processing Systems, 33:6840–6851, 2020.

- Ho et al. [2022] Jonathan Ho, Chitwan Saharia, William Chan, David J Fleet, Mohammad Norouzi, and Tim Salimans. Cascaded diffusion models for high fidelity image generation. J. Mach. Learn. Res., 23:47–1, 2022.

- Huang et al. [2018] Xun Huang, Ming-Yu Liu, Serge Belongie, and Jan Kautz. Multimodal unsupervised image-to-image translation. In ECCV, 2018.

- Isola et al. [2017] Phillip Isola, Jun-Yan Zhu, Tinghui Zhou, and Alexei A Efros. Image-to-image translation with conditional adversarial networks. CVPR, 2017.

- Karras et al. [2019] Tero Karras, Samuli Laine, and Timo Aila. A style-based generator architecture for generative adversarial networks. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pages 4401–4410, 2019.

- Karras et al. [2020] Tero Karras, Samuli Laine, Miika Aittala, Janne Hellsten, Jaakko Lehtinen, and Timo Aila. Analyzing and improving the image quality of stylegan. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pages 8110–8119, 2020.

- Kawar et al. [2022] Bahjat Kawar, Shiran Zada, Oran Lang, Omer Tov, Huiwen Chang, Tali Dekel, Inbar Mosseri, and Michal Irani. Imagic: Text-based real image editing with diffusion models. arXiv preprint arXiv:2210.09276, 2022.

- Kim et al. [2022] Gwanghyun Kim, Taesung Kwon, and Jong Chul Ye. Diffusionclip: Text-guided diffusion models for robust image manipulation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 2426–2435, 2022.

- Kwon and Ye [2022] Gihyun Kwon and Jong Chul Ye. Clipstyler: Image style transfer with a single text condition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 18062–18071, 2022.

- Li et al. [2023a] Bo Li, Yuanhan Zhang, Liangyu Chen, Jinghao Wang, Jingkang Yang, and Ziwei Liu. Otter: A multi-modal model with in-context instruction tuning. arXiv:2305.03726, 2023a.

- Li et al. [2023b] Junnan Li, Dongxu Li, Silvio Savarese, and Steven Hoi. Blip-2: Bootstrapping language-image pre-training with frozen image encoders and large language models. arXiv:2301.12597, 2023b.

- Liu et al. [2023a] Haotian Liu, Chunyuan Li, Qingyang Wu, and Yong Jae Lee. Visual instruction tuning. arXiv:2304.08485, 2023a.

- Liu et al. [2019] Ming-Yu Liu, Xun Huang, Arun Mallya, Tero Karras, Timo Aila, Jaakko Lehtinen, and Jan Kautz. Few-shot unsupervised image-to-image translation. In IEEE International Conference on Computer Vision (ICCV), 2019.

- Liu et al. [2023b] Shilong Liu, Zhaoyang Zeng, Tianhe Ren, Feng Li, Hao Zhang, Jie Yang, Chunyuan Li, Jianwei Yang, Hang Su, Jun Zhu, et al. Grounding dino: Marrying dino with grounded pre-training for open-set object detection. arXiv preprint arXiv:2303.05499, 2023b.

- Liu et al. [2023c] Zhaoyang Liu, Yinan He, Wenhai Wang, Weiyun Wang, Yi Wang, Shoufa Chen, Qinglong Zhang, Yang Yang, Qingyun Li, Jiashuo Yu, et al. Internchat: Solving vision-centric tasks by interacting with chatbots beyond language. arXiv:2305.05662, 2023c.

- Meng et al. [2021] Chenlin Meng, Yutong He, Yang Song, Jiaming Song, Jiajun Wu, Jun-Yan Zhu, and Stefano Ermon. Sdedit: Guided image synthesis and editing with stochastic differential equations. In International Conference on Learning Representations, 2021.

- Mokady et al. [2023] Ron Mokady, Amir Hertz, Kfir Aberman, Yael Pritch, and Daniel Cohen-Or. Null-text inversion for editing real images using guided diffusion models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 6038–6047, 2023.

- Nichol et al. [2021] Alex Nichol, Prafulla Dhariwal, Aditya Ramesh, Pranav Shyam, Pamela Mishkin, Bob McGrew, Ilya Sutskever, and Mark Chen. Glide: Towards photorealistic image generation and editing with text-guided diffusion models. arXiv preprint arXiv:2112.10741, 2021.

- Ojha et al. [2021] Utkarsh Ojha, Yijun Li, Cynthia Lu, Alexei A. Efros, Yong Jae Lee, Eli Shechtman, and Richard Zhang. Few-shot image generation via cross-domain correspondence. In CVPR, 2021.

- OpenAI [2022] OpenAI. Chatgpt, 2022.

- Patashnik et al. [2021] Or Patashnik, Zongze Wu, Eli Shechtman, Daniel Cohen-Or, and Dani Lischinski. Styleclip: Text-driven manipulation of stylegan imagery. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), pages 2085–2094, 2021.

- Peng et al. [2023] Zhiliang Peng, Wenhui Wang, Li Dong, Yaru Hao, Shaohan Huang, Shuming Ma, and Furu Wei. Kosmos-2: Grounding multimodal large language models to the world. arXiv:2306.14824, 2023.

- Pi et al. [2023] Renjie Pi, Jiahui Gao, Shizhe Diao, Rui Pan, Hanze Dong, Jipeng Zhang, Lewei Yao, Jianhua Han, Hang Xu, Lingpeng Kong, and Tong Zhang. Detgpt: Detect what you need via reasoning. arXiv:2305.14167, 2023.

- Ramesh et al. [2022] Aditya Ramesh, Prafulla Dhariwal, Alex Nichol, Casey Chu, and Mark Chen. Hierarchical text-conditional image generation with clip latents. arXiv preprint arXiv:2204.06125, 2022.

- Richardson et al. [2021] Elad Richardson, Yuval Alaluf, Or Patashnik, Yotam Nitzan, Yaniv Azar, Stav Shapiro, and Daniel Cohen-Or. Encoding in style: a stylegan encoder for image-to-image translation. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pages 2287–2296, 2021.

- Rombach et al. [2022] Robin Rombach, Andreas Blattmann, Dominik Lorenz, Patrick Esser, and Björn Ommer. High-resolution image synthesis with latent diffusion models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 10684–10695, 2022.

- Ruiz et al. [2022] Nataniel Ruiz, Yuanzhen Li, Varun Jampani, Yael Pritch, Michael Rubinstein, and Kfir Aberman. Dreambooth: Fine tuning text-to-image diffusion models for subject-driven generation. arXiv preprint arXiv:2208.12242, 2022.

- Saharia et al. [2022a] Chitwan Saharia, William Chan, Huiwen Chang, Chris Lee, Jonathan Ho, Tim Salimans, David Fleet, and Mohammad Norouzi. Palette: Image-to-image diffusion models. In ACM SIGGRAPH 2022 Conference Proceedings, pages 1–10, 2022a.

- Saharia et al. [2022b] Chitwan Saharia, William Chan, Saurabh Saxena, Lala Li, Jay Whang, Emily Denton, Seyed Kamyar Seyed Ghasemipour, Burcu Karagol Ayan, S Sara Mahdavi, Rapha Gontijo Lopes, et al. Photorealistic text-to-image diffusion models with deep language understanding. arXiv preprint arXiv:2205.11487, 2022b.

- Saharia et al. [2022c] Chitwan Saharia, Jonathan Ho, William Chan, Tim Salimans, David J Fleet, and Mohammad Norouzi. Image super-resolution via iterative refinement. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2022c.

- Shen et al. [2023] Yongliang Shen, Kaitao Song, Xu Tan, Dongsheng Li, Weiming Lu, and Yueting Zhuang. Hugginggpt: Solving ai tasks with chatgpt and its friends in huggingface. arXiv:2303.17580, 2023.

- Sohl-Dickstein et al. [2015] Jascha Sohl-Dickstein, Eric Weiss, Niru Maheswaranathan, and Surya Ganguli. Deep unsupervised learning using nonequilibrium thermodynamics. In Proceedings of the 32nd International Conference on Machine Learning, pages 2256–2265, Lille, France, 2015. PMLR.

- Song and Ermon [2019] Yang Song and Stefano Ermon. Generative modeling by estimating gradients of the data distribution. Advances in Neural Information Processing Systems, 32, 2019.

- Tov et al. [2021] Omer Tov, Yuval Alaluf, Yotam Nitzan, Or Patashnik, and Daniel Cohen-Or. Designing an encoder for stylegan image manipulation. ACM Transactions on Graphics (TOG), 40(4):1–14, 2021.

- Wallace et al. [2023] Bram Wallace, Akash Gokul, and Nikhil Naik. Edict: Exact diffusion inversion via coupled transformations. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 22532–22541, 2023.

- Wang et al. [2023a] Jiaqi Wang, Pan Zhang, Tao Chu, Yuhang Cao, Yujie Zhou, Tong Wu, Bin Wang, Conghui He, and Dahua Lin. V3det: Vast vocabulary visual detection dataset. arXiv preprint arXiv:2304.03752, 2023a.

- Wang et al. [2023b] Wenhai Wang, Zhe Chen, Xiaokang Chen, Jiannan Wu, Xizhou Zhu, Gang Zeng, Ping Luo, Tong Lu, Jie Zhou, Yu Qiao, et al. Visionllm: Large language model is also an open-ended decoder for vision-centric tasks. arXiv:2305.11175, 2023b.

- Wang et al. [2017] Xuan Wang, Jingqiu Zhou, Shaoshuai Mou, and Martin J Corless. A distributed linear equation solver for least square solutions. In 2017 IEEE 56th Annual Conference on Decision and Control (CDC), pages 5955–5960. IEEE, 2017.

- Wang et al. [2019] Xuan Wang, Jingqiu Zhou, Shaoshuai Mou, and Martin J Corless. A distributed algorithm for least squares solutions. IEEE Transactions on Automatic Control, 64(10):4217–4222, 2019.

- Wu et al. [2023] Chenfei Wu, Shengming Yin, Weizhen Qi, Xiaodong Wang, Zecheng Tang, and Nan Duan. Visual chatgpt: Talking, drawing and editing with visual foundation models. arXiv:2303.04671, 2023.

- Yang et al. [2023a] Rui Yang, Lin Song, Yanwei Li, Sijie Zhao, Yixiao Ge, Xiu Li, and Ying Shan. Gpt4tools: Teaching large language model to use tools via self-instruction. arXiv:2305.18752, 2023a.

- Yang et al. [2023b] Zhengyuan Yang, Linjie Li, Jianfeng Wang, Kevin Lin, Ehsan Azarnasab, Faisal Ahmed, Zicheng Liu, Ce Liu, Michael Zeng, and Lijuan Wang. Mm-react: Prompting chatgpt for multimodal reasoning and action. arXiv:2303.11381, 2023b.

- Ye et al. [2023] Qinghao Ye, Haiyang Xu, Guohai Xu, Jiabo Ye, Ming Yan, Yiyang Zhou, Junyang Wang, Anwen Hu, Pengcheng Shi, Yaya Shi, et al. mplug-owl: Modularization empowers large language models with multimodality. arXiv:2304.14178, 2023.

- Zhang et al. [2023a] Kai Zhang, Lingbo Mo, Wenhu Chen, Huan Sun, and Yu Su. Magicbrush: A manually annotated dataset for instruction-guided image editing. arXiv preprint arXiv:2306.10012, 2023a.

- Zhang et al. [2023b] Shilong Zhang, Peize Sun, Shoufa Chen, Min Xiao, Wenqi Shao, Wenwei Zhang, Kai Chen, and Ping Luo. Gpt4roi: Instruction tuning large language model on region-of-interest. arXiv:2307.03601, 2023b.

- Zheng et al. [2022] Wanfeng Zheng, Qiang Li, Xiaoyan Guo, Pengfei Wan, and Zhongyuan Wang. Bridging clip and stylegan through latent alignment for image editing. arXiv preprint arXiv:2210.04506, 2022.

- Zhou et al. [2018] Jingqiu Zhou, Wang Xuan, Shaoshuai Mou, and Brian DO Anderson. Distributed algorithm for achieving minimum l 1 norm solutions of linear equation. In 2018 Annual American Control Conference (ACC), pages 5857–5862. IEEE, 2018.

- Zhou et al. [2019] Jingqiu Zhou, Xuan Wang, Shaoshuai Mou, and Brian DO Anderson. Finite-time distributed linear equation solver for solutions with minimum -norm. IEEE Transactions on Automatic Control, 65(4):1691–1696, 2019.

- Zhou et al. [2024] Jingqiu Zhou, Aojun Zou, and Hongshen Li. Nodi: Out-of-distribution detection with noise from diffusion. arXiv preprint arXiv:2401.08689, 2024.

- Zhu et al. [2023] Deyao Zhu, Jun Chen, Xiaoqian Shen, Xiang Li, and Mohamed Elhoseiny. Minigpt-4: Enhancing vision-language understanding with advanced large language models. arXiv:2304.10592, 2023.

- Zhu et al. [2017] Jun-Yan Zhu, Taesung Park, Phillip Isola, and Alexei A Efros. Unpaired image-to-image translation using cycle-consistent adversarial networks. In Computer Vision (ICCV), 2017 IEEE International Conference on, 2017.