Realtime Global Attention Network for Semantic Segmentation

Abstract

In this paper, we proposed an end-to-end realtime global attention neural network (RGANet) for the challenging task of semantic segmentation. Different from the encoding strategy deployed by self-attention paradigms, the proposed global attention module encodes global attention via depth-wise convolution and affine transformations. The integration of these global attention modules into a hierarchy architecture maintains high inferential performance. In addition, an improved evaluation metric, namely MGRID, is proposed to alleviate the negative effect of non-convex, widely scattered ground-truth areas. Results from extensive experiments on state-of-the-art architectures for semantic segmentation manifest the leading performance of proposed approaches for robotic monocular visual perception.

Index Terms:

semantic segmentation, global attention, visual perception, hierarchy inference, neural network.I INTRODUCTION

Correct bin picking by suction from cluttered environment is nontrivial for a robotic hand [1]. Since robotic hands don’t have much prior knowledge of spatial shape by category, texture of material or normal vectors of surfaces, it remains to be an open topic whether a robotic hand is capable of recognizing and analyzing objectiveness as human being does. This topic becomes more intricate given the constraint that, the very limited information offered is the RGB images of cluttered scene, and plausible areas for suction annotated by various human experts. Individuals may make mistakes while annotating images in their unique styles, which diversifies annotations greatly, making it more difficult for traditional machine vision techniques to forge ahead with.

In terms of neural network based semantic segmentation, realtime visual perception with light computational liability is preferred, especially for robotic bin picking by suction. Zeng et al [1] names semantic segmentations of adsorbability as affordance maps to indicate the possibilities of objects being picked up. It is widely known that, the predicted affordance maps are not yet applicable enough for actual bin picking - post-refinement of estimating normal vectors of 3D surfaces, registering affordance maps to multiple coordinates systems, calibrations on robotic hand and cameras, and data stream synchronization etc. are necessary though time-consuming. In this case, predict more reliable affordance maps in real-time with low-cost inferential processors will greatly benefit the real world implementations.

We propose to efficiently predict affordance maps by realtime global attention network (RGANet), which can be easily adapted to other semantic segmentation tasks. As depicted in Fig. 1, RGANet is a light-weighted hierarchy architecture composed of inference blocks (IBs) that are based on attention mechanism [2, 3]. For the purpose of preserving full-size activation of feature maps, pooling and dropout layers are deprecated. To explore the potentials of proposed global attention modules (GAMs), we adopt a GAM-enhanced encoder-decoder backbone network.

One specific forward pass of the proposed framework is illustrated in Fig. 2. RGANet5 has 5 levels of inference blocks (IBs), each one applies to a 2 down-sampling of its previous feature map to capture features at each scale. The decoder network is composed of vote & upsample (VU) blocks and excite-squeeze-stack (ESS) bottleneck blocks for better decoding capabilities. Due to the enhancement of GAM at each IB, we only utilize 33 standard convolution w/o pooling layers to downsample features, and convolution for reformulating feature depth. We also tested large kernels for down-sampling, which doesn’t outperform 33 convolutions.

Vanilla convolution convolves and aggregates feature maps faster than fully-connected layers due to shared weights. Depth-wise convolution [4] further reduces parameters by convolving groups of feature maps separately then concatenates. We propose to reformulate self-attention mechanism by implementing long convolutional kernels (please refer to section III-B) and depth-wise convolutions instead of computing cosine similarities of feature vectors [3] or pure matrix multiplication [2] upon feature volumes. Our perception is that single convolutional kernel only needs to encode neighboring features other than entire feature volume, which can be mutually correlated by affine-transforming into global encoding. This is beneficial for reducing the computational complexity, and speeding up inference to meet realtime requirements.

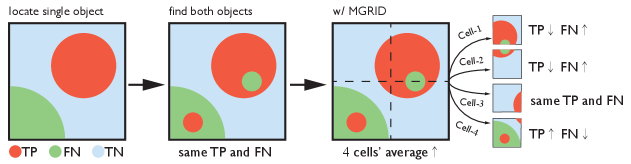

Furthermore, during evaluation phase, due to unconnected ground-truth areas that are distributed across the entire image per the trade-off circumstance illustrated in Fig. 4, generally applied segmentation metrics fail to correctly evaluate predictions. Mean-Grid Fbeta-score (MGRID), a novel metric for segmentation evaluation, alleviates the flaw by two-stage operations: partition and synthetics.

To summarize our contributions, this paper highlights the following novelties:

i. Propose a one-stage hierarchy inference architecture for semantic segmentation without any auxiliary losses.

ii. Propose the GAM for realtime inference - 54fps on a GTX 1070 laptop, and 134fps with a V100 GPU.

iii. Propose the metric MGRID for evaluating widely-distributed predictions.

II RELATED WORKS

II-A Predict Affordance Maps

Zeng et al [1] implemented two FCNs [5] with ResNet-101 [6] backbones to fuse color and depth streams of cluttered scene. This approach yields 83.4% precision at Top-1% percentile affordance proposals. Azpiri et at [7] further refined the Top-1% percentile precision to 94% by a deep Graph-Convolutional Network backbone, which outperforms FCN (ResNet-101 backbone) by 2%. Neither of two approaches takes colored images as the only input, consuming much inference time in processing depth information. Shao et at [8] proposed to predict affordance map by sharing a ResNet-50 backbone between colored image stream and depth image stream, and a U-Net [9] to fuse features at different scales. Their approach is annotation-free, but requires the robotic hand attempting to find the most possible points for suction, which is more expensive than deriving direct predictions from monocular images.

II-B Self-Attention Modules in Semantic Segmentation

Self-Attention mechanism was first proposed in the field of nature language processing [3] for temporal domain. Wang et al [10] adapted the idea to spatial domain, that a feature vector is spatially related to all other features in the feature volume, the features that are highly related will generate strong response, which facilitates network to model the non-local relations across the entire volume.

Li et al [11] designed a fully-convolutional feature pyramid attention module to replace the spatial-pyramid-pooling module [12], and a global-attention-upsample module with which high-level features perform global average pooling [13] as the guidance for low-level features. Woo et al [14] believed that simple channels-wise attention and spatial-wise attention sub-modules can boost representation power of CNNs. Recently, OCNet [15] efficiently aligns a global relation module and a local relation module, dividing and merging feature volumes in different styles. Bello et al [16] proposed to augment standard convolution by attention mechanism. Cao et at [17] proposed a global-context block that benefits from simplified non-local block [10] and squeeze-excitation module [18]. The proposed GAM in this paper is inspired by the criss-cross attention (CCA) mechanism of CCNet [2]. CCNet approximates non-local attention by two cascade CCA modules, each of which correlates all feature vectors aligned horizontally and vertically.

III METHODOLOGY

III-A Hierarchy Inference Architecture

RGANet5 is designed as a single ‘distillation tower’ (see Fig. 2) with 5 temperature levels, each level is composed of one IB. The architecture is expandable such that RGANet has cascade IBs. Each IB halves input feature size, then modulates channels to ratio by a standard convolution. ESS block (see Fig. 3(a)) is regarded as the backbone for IB, we adopt ESS-3, ESS-6, ESS-12, ESS-24 for IB-1 and IB-2, IB-3, IB-4, IB-5 respectively. Output of GAM (refer to Fig. 3(b)) is directly added to the output feature volume x of ESS block as the residual , which formulates the incremental up-sampling layers accordingly. The final output takes the form , where is the learnable weights volume that has the same size of x, and operator ‘’ signifies element-wise product. Therefore, non-negative activation of GAM artifact is preferred, and Batch-Norm (BN) layers are necessary to restrict its upper bound.

As the synthesis of 5-scales distillation artifacts, highway connections not only populate previous inferences to up-sampling layers accordingly, they also facilitate gradient back-propagation during training phase, especially for a very deep network. The ‘vote & upsample’ (VU) block (see Fig. 2) weights concatenated input feature maps by convolution without bias, these weighted features are then upsampled via nearest interpolation. If inferring without last two ESS-3 blocks, RGANet will not yield fine-grained predications. Also, we noticed that IB-1 is directly linked to the last UV4 block, which performs poorly without Decision Unit (DU) shown in Fig. 2. DU consists of feature modulating head to deduct channels from to the number of classes by convolution, and one last GAM that yields predictions.

III-B Realtime Global Attention

Fig. 3(b) illustrates the pipeline of the GAM. With a batch-size of 1, input feature volume filtered by previous ESS block has a shape of , which is rotated to query (upper branch, blue color) and key (lower branch, purple color). Query conducts channel-wise attention via depth-wise sliding kernels shaped across view, which results in a feature volume . Similarly, horizontal positions are encoded by depth-wise convolutional kernel , mapping into the horizontally positional encoding . Key is transformed accordingly except the sizes of kernels, we denote its artifacts as channel-wise encoding and vertically positional encoding . The final output are then formulated as:

| (1) |

where operator signifies rotation; operator performs convolution to resize -channels to -channels with Swish activation function [19], then maps to [0, 1] weights volume via Sigmoid or Softmax functions; ‘’ operator concatenate and features; Let ‘’ denotes the depth-wise convolution, we know:

| (2) | ||||

Consider Eqn. (1) and Eqn. (2), RGA’s total number of trainable parameters is (exclude BN layer, bias and point-wise convolution), while for vanilla convolution with the same kernel height and width, this number becomes . In terms of global attention, although matrix multiplication operation only correlates features horizontally and vertically aligned for any feature vector, , , and themselves are the artifacts of global depth-wise convolutions, which, as a result, each element in these 4 Queries and Keys bounds other elements with shared weights. We can also treat RGA as the type of ‘learnable global attention’. Furthermore, all affine operations of RGA module are differentiable, we didn’t observe vanishing, or exploding gradients issues during training.

III-C Rethink Densely-Stacked Bottlenecks

A Dense block [20] has multiple densely-connected Bottlenecks (BNK) modules, each BNK acts in a stack-squeeze manner, and the last one outputs a -channels feature volume.

The ESS- block, as illustrated in Fig. 3(a), outputs a stacked -channels feature volume by densely-connected BNKs, each contributes -channels of features, and we let . Each channel of stacked feature maps shows one degree of filtered raw input features, such that RGA modules are capable of correlating high-order features to low-level features. RGANet5 adopts ESS-3 for IB-1, ESS-3 for IB-2, ESS-6 for IB-3, ESS-12 for IB-4, and ESS-24 for IB-5. One of our future works is to let RGA module ignore noisy low-level features, and locate more reliable high-order features.

III-D MGRID metric for Evaluation

Existing evaluation metrics poorly treat the special case of a prediction map as illustrated in Fig. 4 that, when original predictions cover object-1 (red), but fail to locate object-2 (green) at the left-bottom corner of image, off-the-shelf metrics yields the same results if part of object-1 relocates to the object-2, because this relocation does not affect paranormal statistics of TP, FN, TN and FP. In reality, we want predications to be able to cover more objects, such that a robotic hand would seek-and-pick all objects even though Precision or Recall is yet not favorable enough (e.g., 15% Recall on object-2 alone). It would be more reasonable to assign higher score for the prediction map that covers 2 objects.

Partition.

2-Stages MGRID metric aims to remedy the issue mentioned above. As shown in the third image of Fig. 4, during partition stage, predication map is manually divided into four cells, each cell is treated fairly against any other cell. An ideal partition will separates objects by different cells. Next, only calculate Fbeta-scores (or any other existing metrics) for all cells that contain predictions and categorical ground-truth (non-zero TP, FP or FN samples), using the definition

| (3) |

where , weights Recall more than Precision, and weights the opposite.

Synthetics.

The second step is to synthesize all Fbeta-scores collected from all partitions, any Fbeta-score is curved by a regulator as shown in Fig. 4, which takes the form

| (4) |

where coefficients requires to be manually set. Fig. 4 shows the curve by setting , then is calculated as . Let , then intercept at can be denoted as . To make the regulator effective, should fall within the interval , which leads to valid ranges of and :

| (5) |

The final confidence score is derived by

| (6) |

IV EXPERIMENTS

IV-A Configuration

Public-available suction dataset [21] consists of camera intrinsics and pose records, RGB-D images of clutter scenes and their backgrounds, and labels. We only adopted color images and labels w/ a train-split of 1470 images and a test-split of 367 images, each image has a resolution of 480640. All colored images were normalized to tensors valued between 0 and 1, which were not resized or padded during training and testing.

IV-B Train and Test

We implemented AdamW optimizer [22] with AMSGrad [23], compared two weighted loss function during training - focal loss (FLoss) [24] and cross-entropy loss (CELoss). Assume denotes the prediction, the one-hot encoded ground-truth, the total number of classes, and are constants, then FLoss and CEloss are denoted as:

| (7) | ||||

where and , , and all . Training-set is augmented during training by random hue, flip, rotation, blur, shift etc.

Online testing comes simultaneously during training, which shows calculations of Jaccard Index, precision, and recall of running batches. The Offline testing loads checkpoint, merely evaluates the class corresponds to predicted suction areas.

All experiments were conducted using a GTX1070 laptop, and one Tesla V100 GPU. Network scaler was set to 15 constantly for a better trade-off between module size and performance. We set constant learning rate to , weights decay rate to 0; , and upon background and negative samples, upon suction areas because of unbalanced proportions; default MGRID parameters , grid intervals , and took the same values as illustrated in Fig. 4. The training of RGANet5 lasted for 2 days.

We implement multiple metrics to evaluate inferential artifacts. Note that for the suction dataset, users only care about feasible regions to adhere. Therefore, only the category that represents predicted suction areas is evaluated, the final scores are computed via averages over entire test-set.

IV-C Ablation Study

Block highways between IBs and remove DU

To evaluate the performance of RGANet5 w/o DU, as well as the effects of disconnecting different highways between IBs, we tested 5 circumstances when different highways to IBs are blocked. The results were acquired via CELoss because of its better performance than implementing FLoss. We find that, FLoss enhances weights for negative samples to weaken salient positive responses, in the meantime, it also ‘confuses’ inferential and voting blocks of RGANet by unreasonable probabilistic distributions of classes, especially when negative samples dominate. Additionally, we applied nearest interpolation instead of deconvolutional layers to VU.

Fig. 5 illustrates the testing performance of RGANet5-NB, RGANet5-B1, RGANet5-B2, RGANet5-B3, and RGANet5-B4 by comparing mean and observing standard deviation of accuracy, Jaccard Index, precision, recall, Dice (F1-score), MCC (Phi Index) and MGRID. It is obvious that IB-1 provides the most crucial lower level features, IB-2, IB-3, IB-4 and IB-5 refine those features distinctively - IB-4 and IB-5 provide more useful semantic information than IB-2 and IB-3. When all highways are disconnected, RGANet5-B4 outputs less precise proposals than RGANet5-B3 - the best performer during the test.

In conclusion, blocking highways has insignificant influence on the performance over test-set.

| Method | Prec. | Recall | Jacc. | Dice | MGRID |

|---|---|---|---|---|---|

| RGANet-B3(w/o) | 43.87 | 48.53 | 30.09 | 42.16 | 28.58 |

| RGANet-B3(w/) | 50.52 | 58.92 | 37.84 | 50.44 | 34.20 |

| RGANet-NB(w/o) | 43.45 | 46.45 | 28.88 | 40.65 | 27.65 |

| RGANet-NB(w/) | 47.16 | 63.36 | 37.92 | 50.60 | 34.58 |

Ablation study on DU. All averaged evaluation metrics are presented in ‘%’. GAM in DU tends to make better trade-off between precision and recall.

Without DU vs With DU

According to Tab. I, GAM boosts the overall performance of the backbone networks by large margins, indicating the powerful encoding capabilities of global attention mechanism. Furthermore, GAM shows a favor of correlating more feature maps, which leads to the superior performance of RGANet-NB. As for RGANet-B3(w/ DU), we also substituted the nearest interpolation layer in VU by a deconvolutional layer to evaluate its up-sampling performance.

| Group 1 - Large Models | Backbone | Parameters | Inference Time | FLOPs | Jaccard | Dice | MGRID |

|---|---|---|---|---|---|---|---|

| RGANet5-B3 (Ours) | - | 3.67M | 7.45ms / 134fps | 1.83B | 37.84 | 50.44 | 34.20 |

| RGANet5-NB (Ours) | - | 3.41M | 7.51ms / 133fps | 1.57B | 37.92 | 50.60 | 34.58 |

| CCNet (ResNet101) [2] | ResNet101 | 71.27M | 51.03ms / 19fps | 73.03B | 43.83 | 57.00 | 38.90 |

| FCN (ResNet50) [5] | ResNet50 | 32.96M | 22.85ms / 43fps | 32.48B | 42.15 | 55.32 | 37.91 |

| FCN (ResNet101) [5] | ResNet101 | 51.95M | 39.41ms / 25fps | 50.72B | 42.28 | 55.51 | 37.69 |

| DeepLabv3 (ResNet50) [25] | ResNet50 | 39.64M | 32.71ms / 30fps | 38.40B | 43.17 | 56.32 | 38.42 |

| DeepLabv3 (ResNet101) [25] | ResNet101 | 58.63M | 49.17ms / 20fps | 56.63B | 41.98 | 55.05 | 37.55 |

| BiSeNetv1 [26] | ResNet18 | 23.08M | 9.65ms / 103fp/s | 9.53B | 37.64 | 50.38 | 34.18 |

Comparison with large semantic segmentation models using a Tesla V100 GPU. All averaged evaluation metrics are presented in ‘%’. Proposed approaches achieve competitive performance with the least total parameters, indicating a better trade-off between model size and performance.

| Group 2 - Light-Weighted Models | Backbone | Parameters | Inference Time | FLOPs | Jaccard | Dice | MGRID |

|---|---|---|---|---|---|---|---|

| RGANet5-B3 (Ours) | - | 3.67M | 7.45ms / 134fps | 1.83B | 37.84 | 50.44 | 34.20 |

| RGANet5-NB (Ours) | - | 3.41M | 7.51ms / 133fps | 1.57B | 37.92 | 50.60 | 34.58 |

| DeepLabv3 [27] | MobileNetv2 | 4.12M | 2.92ms / 342fps | 1.16B | 34.61 | 47.33 | 31.76 |

| DDRNet-23-slim [28] | - | 5.69M | 1.23ms / 813fps | 1.07B | 32.30 | 43.95 | 32.30 |

| HRNet-small-v1 [29] | - | 1.54M | 1.84ms / 543fps | 0.97B | 34.31 | 46.28 | 31.97 |

| HarDNet [30] | - | 4.12M | 1.63ms / 613fps | 1.03B | 35.15 | 47.13 | 32.61 |

| ShelfNet [31] | ResNet18 | 14.57M | 2.06ms / 485fps | 2.91B | 36.17 | 48.61 | 33.32 |

| STDCv1 [32] | - | 14.23M | 2.45ms / 408fps | 5.48B | 36.10 | 48.34 | 33.19 |

Comparison with light-weighted semantic segmentation models using a Tesla V100 GPU. All averaged evaluation metrics are presented in ‘%’.

IV-D Compare to State-of-the-Arts

We conducted experiments to compare RGANet5 with several novel semantic segmentation approaches. These approaches can be divided into two groups - one group that has much more parameters/FLOPs that substantially can outperform RGANet, and the other one that has comparable model sizes. As shown in Tab. II, Deeplabv3 [25] and FCN [5] do not rely on attention mechanism, while CCNet [2] has merely two cascade CCA modules that bring in tremendous amount of trainable parameters and operations. Light-weighted RGANet with 6 GAMs, on the other hand, achieves competitive performance (4-7% less) against the best performer on the test-set. Also, RGANet5 outperforms BiSeNetv1 [26], another attention-based realtime approach, by less parameters and FLOPs.

As illustrated in Tab. III, proposed approach achieves the best Jaccard Index, Dice coefficient and MGRID score when compared with top-tier realtime approaches selected from Cityscape Leader board [33]. Although behaves better in metrics evaluation, RGANet runs relatively slow due to the fact that PyTorch is well-optimized for convolutional neural networks. It is one of our future works to further optimize RGANet for faster and more accurate inference. Readers may refer to Fig. 6 for our qualitative evaluation.

V Conclusion and Future Works

Firstly, we introduced a novel light-weighted, hierarchy inference network embedded with realtime global attention modules. Densely-connected excite-squeeze-stack blocks generate feature volume as the input to realtime global modules, and the attention module correlates features via learnable weights and affine transformations. Ablation study, as well as the comparison with the state-of-the-art approaches manifests the competitive performance of the proposed RGANet5. Secondly, we designed the MGRID metric, which effectively leverages on the weights of predictive regions via partition and synthesis stages. Our future works include but not limit to, enhance the encoding capability of inferential blocks by efficient backbone networks and optimizations.

ACKNOWLEDGMENT

References

- [1] A. Zeng, S. Song, K.-T. Yu, et al., “Robotic pick-and-place of novel objects in clutter with multi-affordance grasping and cross-domain image matching,” in Proceedings of the International Conference on Robotics and Automation (ICRA). IEEE, 2018.

- [2] Z. Huang, X. Wang, L. Huang, et al., “Ccnet: Criss-cross attention for semantic segmentation,” in Proceedings of the International Conference on Computer Vision (ICCV). IEEE/CVF, 2019, pp. 603–612.

- [3] A. Vaswani, N. Shazeer, N. Parmar, et al., “Attention is all you need,” in Proceedings of the Conference and Workshop on Neural Information Processing Systems (NIPS), 2017.

- [4] F. Chollet, “Xception: Deep learning with depthwise separable convolutions,” in Proceedings of the Conference on Computer Vision and Pattern Recognition (CVPR). IEEE, 2017, pp. 1251–1258.

- [5] J. Long, E. Shelhamer, and T. Darrell, “Fully convolutional networks for semantic segmentation,” in Proceedings of the Conference on Computer Vision and Pattern Recognition (CVPR). IEEE/CVF, 2015, pp. 3431–3440.

- [6] C. Szegedy, S. Ioffe, V. Vanhoucke, et al., “Inception-v4, inception-resnet and the impact of residual connections on learning,” Proceedings of the AAAI Conference on Artificial Intelligence, vol. 31, no. 1, 2017.

- [7] A. I. Azpiri, E. L. Ortega, and A. A. Cobo, “Affordance-based grasping point detection using graph convolutional networks for industrial bin-picking applications,” Sensors, vol. 21, no. 3, p. 816, 2021.

- [8] Q. Shao, J. Hu, W. Wang, et al., “Suction grasp region prediction using self-supervised learning for object picking in dense clutter,” in Proceedings of the International Conference on Mechatronics System and Robots (ICMSR). IEEE, 2019, pp. 7–12.

- [9] O. Ronneberger, P. Fischer, and T. Brox, “U-net: Convolutional networks for biomedical image segmentation,” in International Conference on Medical Image Computing and Computer-Assisted Intervention. Springer, 2015, pp. 234–241.

- [10] X. Wang, R. Girshick, A. Gupta, et al., “Non-local neural networks,” in Proceedings of the Conference on Computer Vision and Pattern Recognition (CVPR). IEEE/CVF, 2018, pp. 7794–7803.

- [11] H. Li, P. Xiong, J. An, and L. Wang, “Pyramid attention network for semantic segmentation,” British Machine Vision Conference (BMVC), 2018.

- [12] H. Zhao, J. Shi, X. Qi, et al., “Pyramid scene parsing network,” in Proceedings of the Conference on Computer Vision and Pattern Recognition (CVPR). IEEE/CVF, 2017, pp. 2881–2890.

- [13] B. Zhou, A. Khosla, A. Lapedriza, et al., “Learning deep features for discriminative localization,” in Proceedings of the Conference on Computer Vision and Pattern Recognition (CVPR). IEEE/CVF, 2016, pp. 2921–2929.

- [14] S. Woo, J. Park, J.-Y. Lee, and I. S. Kweon, “Cbam: Convolutional block attention module,” in Proceedings of the European Conference on Computer Vision (ECCV), 2018, pp. 3–19.

- [15] Y. Yuan, L. Huang, J. Guo, et al., “Ocnet: Object context for semantic segmentation,” International Journal of Computer Vision, pp. 1–24, 2021.

- [16] I. Bello, B. Zoph, A. Vaswani, et al., “Attention augmented convolutional networks,” in Proceedings of the International Conference on Computer Vision (ICCV), 2019, pp. 3286–3295.

- [17] Y. Cao, J. Xu, S. Lin, et al., “Gcnet: Non-local networks meet squeeze-excitation networks and beyond,” in Proceedings of the International Conference on Computer Vision Workshops, 2019.

- [18] J. Hu, L. Shen, and G. Sun, “Squeeze-and-excitation networks,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR). IEEE/CVF, 2018, pp. 7132–7141.

- [19] P. Ramachandran, B. Zoph, and Q. V. Le, “Searching for activation functions,” in International Conference on Learning Representations (ICLR), 2018.

- [20] G. Huang, Z. Liu, L. Van Der Maaten, et al., “Densely connected convolutional networks,” in Proceedings of the Conference on Computer Vision and Pattern Recognition (CVPR). IEEE, 2017, pp. 4700–4708.

- [21] A. Zeng, “Datasets,” https://vision.princeton.edu/projects/2017/arc/, Mar. 2018, accessed 5/4/2021.

- [22] I. Loshchilov and F. Hutter, “Decoupled weight decay regularization,” in International Conference on Learning Representations (ICLR), 2018.

- [23] S. Reddi, S. Kale, and S. Kumar, “On the convergence of adam and beyond,” in International Conference on Learning Representations (ICLR), 2018.

- [24] T.-Y. Lin, P. Goyal, R. Girshick, et al., “Focal loss for dense object detection,” in Proceedings of the International Conference on Computer Vision (ICCV). IEEE, 2017, pp. 2980–2988.

- [25] L.-C. C. G. P. Florian and S. H. Adam, “Rethinking atrous convolution for semantic image segmentation,” in Conference on Computer Vision and Pattern Recognition (CVPR). IEEE/CVF, 2017.

- [26] C. Yu, J. Wang, C. Peng, et al., “Bisenet: Bilateral segmentation network for real-time semantic segmentation,” in Proceedings of the European conference on computer vision (ECCV), 2018, pp. 325–341.

- [27] M. Sandler, A. Howard, M. Zhu, et al., “Mobilenetv2: Inverted residuals and linear bottlenecks,” in Proceedings of the Conference on Computer Vision and Pattern Recognition (CVPR). IEEE/CVF, 2018, pp. 4510–4520.

- [28] Y. Hong, H. Pan, W. Sun, et al., “Deep dual-resolution networks for real-time and accurate semantic segmentation of road scenes,” arXiv preprint arXiv:2101.06085, 2021.

- [29] J. Wang, K. Sun, T. Cheng, et al., “Deep high-resolution representation learning for visual recognition,” IEEE Transactions on Pattern Analysis and Machine Intelligence, 2020.

- [30] P. Chao, C.-Y. Kao, Y.-S. Ruan, et al., “Hardnet: A low memory traffic network,” in Proceedings of the International Conference on Computer Vision (ICCV). IEEE/CVF, 2019, pp. 3552–3561.

- [31] J. Zhuang, J. Yang, L. Gu, and N. Dvornek, “Shelfnet for fast semantic segmentation,” in Proceedings of the International Conference on Computer Vision Workshops (ICCVW). IEEE/CVF, 2019, pp. 0–0.

- [32] M. Fan, S. Lai, J. Huang, et al., “Rethinking bisenet for real-time semantic segmentation,” in Proceedings of the Conference on Computer Vision and Pattern Recognition (CVPR). IEEE/CVF, 2021, pp. 9716–9725.

- [33] paperswithcode.com, “real-time semantic segmentation leader board,” https://paperswithcode.com/sota/real-time-semantic-segmentation-on-cityscapes, 2021, accessed 9/2/2021.