Real-World Image Super Resolution via Unsupervised Bi-directional Cycle Domain Transfer Learning based Generative Adversarial Network

Abstract

Deep Convolutional Neural Networks (DCNNs) have exhibited impressive performance on image super-resolution tasks. However, these deep learning-based super-resolution methods perform poorly in real-world super-resolution tasks, where the paired high-resolution and low-resolution images are unavailable and the low-resolution images are degraded by complicated and unknown kernels. To break these limitations, we propose the Unsupervised Bi-directional Cycle Domain Transfer Learning-based Generative Adversarial Network (UBCDTL-GAN), which consists of an Unsupervised Bi-directional Cycle Domain Transfer Network (UBCDTN) and the Semantic Encoder guided Super Resolution Network (SESRN). First, the UBCDTN is able to produce an approximated real-like LR image through transferring the LR image from an artificially degraded domain to the real-world LR image domain. Second, the SESRN has the ability to super-resolve the approximated real-like LR image to a photo-realistic HR image. Extensive experiments on unpaired real-world image benchmark datasets demonstrate that the proposed method achieves superior performance compared to state-of-the-art methods.

Index Terms:

Deep convolutional neural networks, Image super-resolution, Unsupervised manner, Real-world scenes

I Introduction

Single Image Super-Resolution (SISR) aims to reconstruct a High-Resolution (HR) image from a single Low-Resolution (LR) image. It has been widely applied in many computer vision applications, such as surveillance [1], image enhancement [2] and medical image processing [3]. In the SISR task, the general degradation formula is expressed as:

| (1) |

where represents the HR image, is the degraded LR image, denotes a blur kernel, and is the convolution operation performed on and . denotes a downsampling operation on the image with a scaling factor , and is considered as an additive white Gaussian noise. However, under the real-world scene setting, is unknown and should take into account many possible conditions such as sensor noise, compression artifacts, and unpredicted noise caused by physical devices. Resulting from the existence of uncertain kernel and noise , SISR has become a particularly ill-posed inverse task since there are infinite HR images that can be recovered from a given LR image, in which it is required to select the most plausible solution.

Recently, a great number of deep learning-based SISR models have been proposed, such as RDN [4], EDSR [5], SRDenseNet [6], and ESRGAN [7]. Despite the successful progress achieved by the aforementioned methods, there still exist unnoticed issues. Those models were trained in a supervised manner with a large number of paired images, synthesized LR images produced by pre-determined degradation and its HR counterpart, resulting in deteriorative performance when they are applied to real-world scenarios. The reason is that paired LR-HR data is unavailable and the degradation of the input LR image is unknown in the real-world scene. Besides, it is unreasonable to simply apply the LR images downsampled by the ideally fixed kernel to the real-world SR problems [8, 9]. There exists a large domain gap between real-world LR images and artificially synthesized LR images. In addition, the synthesized LR images may eliminate diverse patterns and complicated characteristics belonging to real-world LR images such as sensor noise and natural characteristics. Thus, the existing SR methods normally encounter a serious domain consistency problem and produce poor performance in practical scenarios [10, 11].

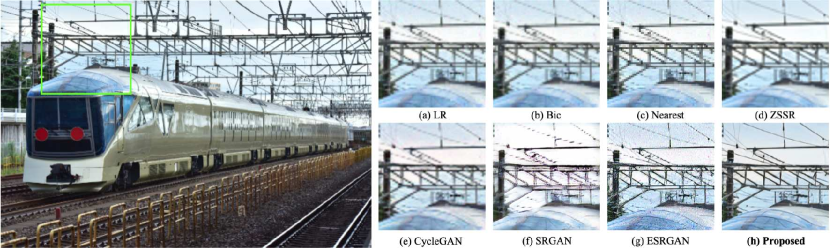

Therefore, it is imperative to explore an effective method that can apply unpaired images to satisfy the need for real-world SR scenarios. It must be different from the aforementioned SR methods which do not take into consideration the domain gap between the LR images generated from a known degradation (e.g., bicubic downscaling) and the real-world LR images. To address the above limitations, we propose an Unsupervised Bi-directional Cycle Domain Transfer Learning-based Generative Adversarial Network (UBCDTL-GAN) for real-world image super-resolution, which is composed of two networks, Unsupervised Bi-directional Cycle Domain Transfer Network (UBCDTN) and Semantic Encoder guided Super Resolution Network (SESRN). To simulate the real-world data distribution and reduce the domain gap between the generated LR image domain and the real-world LR image domain, we design UBCDTN to estimate the inherent degradation kernel from the real-world LR distribution and translate the artificially degraded LR domain image to the real-world domain. With the help of the cycle consistency mechanism [12], the proposed UBCDTN is able to learn bi-directional inverse mapping in an unsupervised manner, which can ensure the generated real-like LR image preserves desired characteristics of real-world patterns. Besides, we also enforce auxiliary constraints on the UBCDTN such as adversarial loss, identity loss, and cycle-perceptual loss. The designed domain transfer network provides an effective way to generate real-like LR images, which can construct the paired real-world LR-HR data for the following SESRN. For the second step, we design the Semantic Encoder guided Super Resolution Network (SESRN). The goal of the SESRN is to super-resolve the real-like LR images to the photo-realistic HR images. It is emphasized that we apply our previously proposed architecture and optimization strategy of GAFHH-RIDN [13] to the generator and discriminator of SESEN. We evaluate our method on the NTIRE 2020 Super Resolution Challenge Track 1 validation dataset. The quantitative and qualitative comparisons demonstrate the superiority of the proposed UBCDTL-GAN compared with other state-of-the-art methods. A comparison of visual results with various latest methods is shown in Fig. 4, and the numeric results can be seen in Table I. The main contributions of our proposed method can be summarized as follows:

1) We propose a novel bi-directional cycle domain transfer network, UBCDTN. According to the domain transfer learning scheme, the designed bi-directional cycle architecture is able to eliminate the domain gap between the artificially degraded LR images and real-world LR images in an unsupervised manner.

2) We further impose the auxiliary constraints on UBCDTN by incorporating adversarial loss, identity loss, and cycle-perceptual loss, which can guarantee that the generated real-like LR images contain the same style as real-world images.

3) We design the SESRN as a deep super-resolution network to generate visually pleasant SR results under supervised learning settings.

4) Benefiting from the collaborative training strategy, the proposed UBCDTL-GAN is able to be trained in an end-to-end fashion, which can ease the entire training procedure and strengthen the robustness of the model.

II Related Work

In this section, we first review the paired image super-resolution methods. Then, we introduce blind and unsupervised learning methods for real-world scenarios.

II-A Paired Image Super Resolution

In recent years, deep learning-based methods have exhibited exceptional ability to enhance SISR performance. However, most of these methods rely on supervised settings, where specific pre-defined and paired LR and HR images are required in the training process. The pioneer method was introduced by Dong [14], namely SRCNN. It is able to recover HR images from bicubic downsampled LR images in an end-to-end manner. Moreover, the enhanced deep SR method EDSR [5] was proposed by Lim , which greatly enlarges the network to be deeper and wider, resulting in learning richer feature representations. Later, generative adversarial learning was introduced in SISR. SRGAN [15] proposed by Ledig incorporates adversarial loss and perceptual loss to update the generator and discriminator respectively, which can obtain photo-realistic SR images. Furthermore, the ESRGAN [7] introduces the new generator architecture and adopts enhanced adversarial loss, achieving promising performance in the visual aspect.

II-B Blind Image Super Resolution

The blind SISR is defined as the task that supposes the LR image is produced by an unknown degradation kernel from its HR version. The Iterative Kernel Correction (IKC) [16] was proposed by Gu . to estimate blur kernel and eliminate artifacts caused by kernel discrepancy. Zhang . [17] proposed IRCNN which includes a set of CNN denoisers to estimate the blur model. However, the results of IRCNN indicate that it is difficult to estimate a comprehensive degradation kernel in real-world conditions. The blind SR methods have the limited capability to approximate unknown degradations. Thus, there is still room for improvement in dealing with blind image super-resolution.

II-C Unpaired Super Resolution

Recently, many unsupervised methods have been proposed to satisfy real-world conditions where the paired LR-HR image data is unavailable, and the input image is corrupted by unknown degradation kernels. By employing the internal recurrence of information inside an image, ZSSR [18] can use pseudo image pairs to recover LR images with diverse blur kernels. Inspired by CycleGAN, Yuan . [10] proposed CinCGAN, which first generates bicubic downsampled LR images from the input and then super-resolves LR images to HR images. However, CinCGAN only considers single bicubic degradation, resulting in poor generalization in complicated real-world SR tasks. By contrast, our method utilizes UBCDTN to simulate diverse real-world degradation types, making it perform proficiently in the real world.

III Overview

We divide the unsupervised super-resolution problem into two stages, but it is still in an end-to-end fashion. The proposed method comprises two stages: 1) Unsupervised image domain translation between real-world LR images and artificially degraded LR images. 2) Semantic encoder guided generative adversarial super resolution from the generated real-like LR images to final HR images in a supervised manner. To obtain the artificially degraded LR image , we perform bicubic downscaling on HR image by image degradation block.

In the first stage, the proposed UBCDTN generates real-like LR images belonging to the real-world domain from the artificially degraded LR images. It aims to transfer the domain of artificially degraded images to the real-world domain, which can ensure a similar real-world LR pattern in the generated real-like LR images. After domain translation performed by UBCDTN, we obtain the real-like LR treated as the input of the following SESRN. In the second stage, we utilize SESRN to learn the super-resolution mapping from the real-like LR space to the HR space. We further train the SESRN with adversarial loss, content loss, and pixel-wise loss to generate photo-realistic SR images.

III-A Unsupervised Bi-directional Cycle Domain Transfer Network

The designed UBCDTN is able to translate the source domain to the target domain . It provides an effective bi-directional cycle solution to reduce the domain gap between and . The forward-cycle module contains where it aims to learn a mapping . The goal of in backward-cycle module is to learn another mapping .

As shown in Fig. 1, the forward-cycle module comprises generator , discriminator and Feature Extractor . The backward-cycle module consists of , , and , where these networks have the same architecture as the forward-cycle module’s corresponding components, but for different purposes. We utilize U-Net as the basic architecture for and . and are standard convolutional neural networks, where each is composed of nine convolutional layers followed by BatchNormalization (BN) layers and Leaky ReLU layers. The pre-trained VGG19 is exploited as feature extractor and .

The UBCDTN trains two generators simultaneously, where these two generators should be translated in bi-direction and inverted to each other. We involve adversarial learning in both modules, where and are trained on discriminator , respectively. However, as mentioned before, the SISR task is an ill-posed problem in which there exist many possible SR images reconstructed from one given LR input. Thus, applying adversarial loss alone to train UBCDTN leads to the problem of model collapse, and it cannot produce the desired generated images which have the same characteristics and patterns as target domain images. It is necessary to employ regularization on UBCDTN to improve the quality of translated images. Thus, we propose a cycle-consistency constraint to guarantee the domain correlation between (, ) and (, ). In addition, in order to avoid color variation, identity loss is applied to preserve color composition between the input image and output image [12]. Moreover, we introduce the cycle-perceptual loss as an additional constraint to preserve the sharper edges and finer structures in the reconstructed images. The following sections provide more details of the forward-cycle module and backward-cycle module.

III-A1 Forward-cycle Module

The forward-cycle module contains a generator , discriminator and feature extractor . The goal of is to map the artificially degraded LR image to the target domain image, i.e., . The concern of the unpaired condition can be eliminated by adding additional constraints. We propose the cycle-consistency to guarantee the relevant content in the generated can be preserved, i.e., . In a word, the generated images of and should be cycle-consistent with each other. Thus, the forward cycle consistency is established . The forward cycle consistency loss can be defined as follows:

| (2) |

| (3) |

where is the number of training images. As Eqn. 2 shows, with the help of , can be identical to the input . Through the cycle consistency mechanism, in the source domain can be reconstructed after performing and on in turn. In addition, we apply adversarial losses to and such that the distribution of is indistinguishable from the real distribution . We apply the Relativistic average GAN (RaGAN) [19] to train and . receives the input and generates , which can fool . The discriminator aims to predict the probability that the provided real image is more realistic compared to the generated fake image . The input of contains real data and fake data respectively. It can be summarized as follows:

| (4) |

| (5) |

where denotes sigmoid non-linearity, denotes the discriminator without the final sigmoid layer, , are mathematical means of and in the training batch respectively, and , are the predicted probability of , to be real by discriminator . An adversarial loss for , can be expressed as and respectively as follows:

| (6) |

| (7) |

where and indicates the distribution of real image , and fake image respectively. We further introduce forward identity loss to maintain color composition between and . The can be generated by , as Eqn. 8 shows. The is expressed as Eqn. 9:

| (8) |

| (9) |

Moreover, in order to minimize the perceptual divergence between and , we utilize to extract VGG feature maps for cycle-perceptual loss estimation. It can be defined as follows:

| (10) |

where indicates the extracted feature maps of -th convolution layer (after activation layer) before -th maxpooling layer. The total objective loss for forward cycle module is a weighted sum of the four loss functions:

| (11) |

where the hyper-parameters , , , are trade-off factors for each loss. We empirically set , , , and to 1, 10, 1 and 1 respectively. It is noticeable that the loss with high weights indicates a significant proportion of the training process.

III-A2 Backward-cycle Module

To transfer the image from the target domain to the source domain, i.e., , we specifically construct a backward-cycle module in which the generator is able to learn the mapping . The learned mapping is capable of re-expressing the target domain image by the source domain image implicitly. The backward-cycle module consists of a generator , discriminator and feature extractor . There are several constraints required in the backward-cycle module. First, we symmetrically design the backward cycle consistency constraint, i.e., , which further forces the contents of generated images to be relevant to the input ones. Thus, the backward cycle is composed of . The backward cycle consistency loss can be defined as follows:

| (12) |

| (13) |

As Eqn. 12 shows, because of the formulated backward cycle scheme, and are capable of inverting the reconstructed images back to the . The Eqn. 13 is able to minimize the correlation discrepancy between and such that the consistent characteristics can be preserved. Similarly, as the forward cycle module, we also adopt adversarial learning in the backward cycle module, where the generator and discriminator are optimized by RaGAN. The adversarial loss and are defined as:

| (14) |

| (15) |

| (16) |

| (17) |

According to the adversarial loss, can generate desired under the supervision of . In order to avoid color variation between and , we further define backward identity loss . The is generated by , as Eqn. 18 shows and the is defined as Eqn. 19:

| (18) |

| (19) |

Moreover, the backward cycle-perceptual loss is calculated to recover visual pleasing details. We utilize to measure the Euclidean distance between feature maps of and . It can be defined as follows:

| (20) |

In the end, the total optimization loss for the backward cycle module can be formulated as:

| (21) |

where the hyper-parameters , , , are the set of corresponding weights for , , and . The , , , and are empirically set to 1, 10, 1 and 1 respectively.

III-A3 Total Unsupervised Bi-directional Cycle Domain Transfer Network Loss

The full optimization objective loss of UBCDTN , which is an addition of forward cycle module loss and backward cycle module loss , can be represented as follows:

| (22) |

III-B Semantic Encoder Guided Super Resolution Network

In this section, we present the proposed SESRN, which aims to generate the desired from the produced by UBCDTN. First, we describe four main components of SESRN: , , , . Second, we describe the optimization loss function for SESRN. The architecture of SESRN is illustrated in Fig. 2. In addition, the structure of the joint discriminator and generator proposed in GAFH-RIDN [13] are shown in Fig. 3.

III-B1 Generator

We utilize the Dense Nested Blocks (DNBs) and Residual in Internal Dense Blocks (RIDBs) that we proposed in GAFH-RIDN [13] as basic units to construct . The goal of is to super-resolve to the SR image from the real-like LR image . The overall super-resolution process is formulated as:

| (23) |

As shown at the top of Fig. 3, the generator contains three stages: Shallow Feature Module (SFM), Multi-level Dense Block Module (MDBM), and Upsampling Module (UM). Specifically, the MDBM is built up by multiple DNBs formed by several RIDBs. Each DNB includes 3 RIDBs cascaded by residual connections and one scaling layer. The designed architecture guarantees the feature maps of each layer are propagated into all succeeding layers, promoting an effective way to extract hierarchical features and alleviating the gradient vanishing problem. It is emphasized that Local Residual Learning (LRL) is introduced to take effective use of the local residual features extracted by RIDBs. In addition, in order to help the generator fully take advantage of hierarchical features, we design the Global Residual Learning (GRL) to fuse the shallow features and global features . Overall, benefiting from the proposed architecture [13], is capable of exploiting abundant hierarchical features and super-resolving from the LR space to the HR space.

III-B2 Semantic Encoder

The proposed semantic encoder is supposed to extract embedded semantics (as shown in Fig. 2), which is used to project visual information (HR, LR) back to the latent space. The motivation is that the GAN-based SR models [15, 7, 20] only exploit visual information during the discriminative procedure, ignoring the semantic information reflected by latent representation. Therefore, the proposed semantic encoder will complement the missing critical property. Previous GAN’s work [21, 22] has proved that the semantic representation is beneficial to the discriminator.

Based on this observation, the proposed semantic encoder is designed to inversely map the image to the embedded semantics. Significantly, the most important advantage of the semantic encoder is that it is able to guide the discriminative process since the embedded semantics obtained from the semantic encoder can reflect semantic attributes, such as the image features (color, texture, and shape) and the spatial relationship between various components of the images. It can be emphasized that the embedded semantics is fed into a joint discriminator along with HR and LR images, leading to optimizing the adversarial process. Thanks to this property, the semantic encoder can guide the discriminative adversarial learning of the joint discriminator, thereby enhancing its discriminative ability.

In this context, we utilize the pre-trained VGG16 [23] networks as the semantic encoder. For the purpose of satisfying the different dimensions of HR and LR images, we adopt two side-by-side semantic encoders, which have the same structure but different input dimensions, to obtain embedded semantics from different convolutional layers respectively.

III-B3 Joint Discriminator

As shown in Fig. 2, the proposed joint discriminator takes the tuple incorporating both visual information and embedded semantics as the input, where Embedded Semantics-Level Discriminative Sub-Net (ESLDSN) aims to identify the embedded semantics whether it comes from the HR images while Image-Level Discriminative Sub-Net (ILDSN) distinguishes whether the input image is from the HR image dataset or the generator. Next, through the operation of the Fully Connected Module (FCM) on a concatenated vector, the final probability is predicted.

Thanks to this property, the joint discriminator is capable of learning the joint probability distribution of image data () and embedded semantics (). In order to satisfy this structure, we design two sets of paths entering into the joint discriminator. The set of red paths in Fig. 2 represents a real tuple which consists of the real HR image from the dataset and its embedded semantics . The set of paths in blue indicates the fake tuple constructed by SR image generated from the generator and produced by semantic encoder through real-like LR image. Therefore, the joint discriminator has the ability to measure the difference between real tuple and the fake tuple .

In addition, we adopt the Relativistic average Least Squares GAN (RaLSGAN) [19] loss for the joint discriminator by applying the RaD to the least squares loss function [24]. The real tuple is denoted as , and the fake tuple is expressed as . In the training procedure, the joint discriminator receives both and as the input. It can be expressed as follows:

| (24) |

| (25) |

where denotes the probability predicted by the joint discriminator. Moreover, the least squares loss is applied to evaluate the distance between HR and SR images. Thus, we define the optimization loss and for joint discriminator and generator respectively:

| (26) |

| (27) |

By taking advantage of least squares loss and relativism in RaLS, SESRN is capable of enhancing model stability and producing visually pleasant SR images.

III-B4 Content Extractor

In SESRN, we further leverage the pre-trained VGG19 network as content extractor to obtain feature representations, where they are used to formulate the content loss . Specifically, we calculate the based on the Euclidean distance between two feature representations of SR images and HR images. We extract feature representations from the ‘Conv3_3’ layer in the content extractor, which are the low-level features before the activation layer. With this loss term, the SESRN is encouraged to reconstruct finer high-frequency details and improve perceptual quality.

III-B5 Loss Function

We introduce content loss to constrain SR images to be faithful to human visual perception. Besides, we also involve pixel-wise loss to optimize our method. Furthermore, adversarial losses and are applied to and respectively, which allows the generator to produce the SR image consistent with the distribution of the HR image.

Content Loss: is able to improve the perceptual similarity between SR and HR images. It is formulated as:

| (28) |

where represents the feature representations obtained from -th convolution layer before -th maxpooling layer in the fixed content extractor.

Pixel-wise Loss: The pixel-wise loss is widely applied to enforce the intensity similarity between the SR and HR images. It is calculated as:

| (29) |

We use to minimize the distance between and .

Total Loss: Finally, we obtain the total loss function for SESRN. It is the weighted sum of the three above-discussed losses. The formula is described as follows:

| (30) |

where , and are empirically set to 1, and 1 respectively.

III-C Full objective loss for UBCDTL-GAN

The full objective loss for the UBCDTL-GAN, which is the combination of and , can be defined as:

| (31) |

The complete objective loss encourages the proposed method UBCDTL-GAN can solve the unpaired real-world image super-resolution problem.

IV Experiments

In this section, we first present the datasets and the experimental details. Then, we compare our method with state-of-the-art SISR methods. The qualitative comparison can be seen in Fig. 4, and the quantitative comparisons are provided in Table I. It is emphasized that all the quantitative results are cited from their official published papers. Moreover, as for qualitative comparison, we directly download the released code and pre-trained models of the compared methods. Then, we carefully re-implement referenced methods on the same validation dataset to obtain effective results.

IV-A Training Data

At the training stage, we conduct experiments on the DF2K dataset [25, 5], which is a merge of the DIV2K and Flickr2K datasets, containing a total of 3450 images. Specifically, as for the LR images, we use the real-world LR images from DIV2K NTIRE 2017 unknown degradation 4× dataset, and Flickr2K LR images collected from NTIRE 2020 Real-World Track 1 training source dataset, where all the LR images are corrupted with unknown degradation, and downsampled 4× by an unpredicted operator to satisfy real-world conditions, resulting in sensor noise, compression artifacts, etc. Since the goal of our method is to solve an unsupervised super-resolution problem without LR-HR paired images, we select the first 1725 images (number: 1-1725) from the DF2K HR dataset as our HR training dataset, and the LR training dataset is formed by the other 1725 images (number: 1726-3450) obtained from DF2K real-world LR dataset. Overall, our method is trained on such an unpaired real-world LR-HR dataset.

To evaluate the proposed method on real-world data, at the testing stage, we use the validation dataset from the NTIRE 2020 Real-World SR challenge Track 1. This dataset contains 100 testing LR images (scaling factor: 4×), where all LR images are processed with unknown degradation operations to simulate the realistic artifacts and natural characteristics. In order to compare the qualitative and quantitative results fairly, we use the same validation dataset for all experiments.

IV-B Training Setups

The training procedure is divided into three steps. Instead of randomly initializing model weights, we pre-train the UBCDTN and SESRN in the first and second steps, and then we jointly train the whole method in an end-to-end manner. First, we train the UBCDTN with unpaired artificially degraded images and real-world images , which aims to transfer the LR image from the artificially degraded LR domain to the real-world LR domain. Second, we pre-train the SESRN using the approximated real-like images and their HR version to generate realistic super-resolved images . As for pre-trained UBCDTN and SESRN, we use the same optimization strategy where the Adam optimizer [26] is applied to train both networks by setting , . The learning rate is initialized as and the minibatch size is set as 8. We train the UBCDTN and SESRN 50K epochs separately. After the pre-training process, both UBCDTN and SESRN are able to acquire exceptional initialization weights, which are beneficial to training stability and fast training speed. In the third step, we jointly train two networks together. The proposed UBCDTL-GAN takes an unpaired HR image and as the input to generate the final result . The UBCDTL-GAN applies the Adam optimizer with an initial learning rate = , and to the whole training process. The model is trained for 100K epochs with the minibatch size 8.

| Methods | NTIRE_2020_T1 | |||

|---|---|---|---|---|

| PSNR | SSIM | |||

| Bicubic | 23.87 | 0.644 | ||

| Nearest Neighbor | 23.39 | 0.580 | ||

| EDSR [5] | 25.36 | 0.640 | ||

| ESRGAN [7] | 19.04 | 0.242 | ||

| SRGAN [15] | 20.78 | 0.525 | ||

| SRFBN [27] | 25.37 | 0.642 | ||

| MsDNN [28] | 25.08 | 0.708 | ||

| RCAN [29] | 25.31 | 0.640 | ||

| USISResNet [30] | 21.71 | 0.589 | ||

| CycleGAN [12] | 25.01 | 0.618 | ||

| ZSSR [18] | 24.87 | 0.600 | ||

| Proposed | 26.83 | 0.789 | ||

IV-C Quantitative Comparison

The quantitative results presented in Table I demonstrate that our method has promising superiority, achieving the highest 26.83dB/0.789 in terms of PSNR/SSIM values. The results obtained from SRFBN [27] place the second-best with 25.37dB/0.642. Our method improves the PSNR/SSIM values by 1.46dB/0.147 over their method. EDSR [5] and SRFBN [27] cannot achieve desired numerical results since they are PSNR-oriented methods and are merely trained on simple degradation images. Moreover, we found that the performances of ESRGAN [7] and SRGAN [15] are lower than most of the methods in Table I. Besides, from Fig. 5, the visual results of ESRGAN and SRGAN show over-smoothed textures and unrealistic artifacts. Interestingly, we found such a phenomenon has also been presented in [10, 18, 30]. The underlying reason is that these methods only train on the simple and clean pre-defined LR images, ignoring the difference of domain distribution between the real-world LR domain and the pre-defined LR domain. Benefiting from the proposed UBCDTN and SESRN, our method has the ability to solve the problem of domain distribution shift and boost quantitative performances when dealing with real-world SR tasks.

IV-D Qualitative Comparison

The visual comparisons are provided in Fig. 4. We compare with various SR methods such as Bicubic, Nearest Neighbor, SRGAN [15], ESRGAN [7], CycleGAN [12], and ZSSR [18]. For the Bicubic and Nearest Neighbor methods, it is obvious that the results lack high-frequency contents, producing overly smooth edges and coarse textures. Regarding the SRGAN, it fails to alleviate the blurring details on the lines and edges of the SR results. The results of ESRGAN suffer from apparently broken artifacts and dramatic degradation problems, which are unfaithful to human perception. As for the unsupervised method CycleGAN and ZSSR, the SR results are improved, but there are still far away from the ground truth. Although the SR images of CycleGAN present better shapes than the previously compared methods, the results still remain unnatural edges and distortions, leading to poor visual effects. Besides, the blind method ZSSR was also evaluated, but it fails to reduce visible corruptions in some degree since there are still over-smoothed textures and noise-like characteristics existing in the images.

Compared with the aforementioned methods, the SR results of our method superiorly outperform all other methods, super-resolving visually pleasant SR images with sharper edges and finer textures. Our SR results are more realistic than SRGAN and ESRGAN since these two methods are only trained on simple degradation data (e.g., bicubiced LR images) without introducing any complicated noise and artifacts from the real-world images while our method trains on approximated real-like LR images consisting of similar characteristics as real-world LR images. The unsupervised method CycleGAN is less effective in super-resolve unclear LR images. Although it involves the cycle translation model, it lacks a powerful super-resolution network as the one (SESRN) in our method. Besides, the other unsupervised method ZSSR also fails to achieve the expected results, since it does not take into account the domain gap between noise-free LR images and real-world images. In contrast, benefiting from the domain transfer network (UBCDTN), our method is able to successfully eliminate the domain gap and produce real-like LR images comprising real-world patterns. Overall, The SR results verify the powerful unsupervised learning strategy used in the proposed method for super-resolving photo-realistic SR images.

| Variants | Methods | SESRN | ||||||

|---|---|---|---|---|---|---|---|---|

| VariantA | SESRN | |||||||

| VariantB | SESRN++ | |||||||

| VariantC | SESRN++++ | |||||||

| VariantD | SESRN++++ | |||||||

| VariantE | SESRN++++++ |

V Ablation Study

In this section, we conduct the ablation study to further investigate the components of the proposed method and demonstrate the advantages of UBCDTL-GAN. The list of compared variants of our method is presented in Table II. We provide visual SR results of different variants in Fig. 5. The quantitative comparison of several variants is presented in Table III.

V-A Description of Different Variants of the Proposed Method

In ablation studies, we design several variants which consist of different proposed components. We adopt the SESRN as the baseline variant in the following experiments and pay more attention to investigating the elements used in the UBCDTN. To comply with the single variable principle, we gradually add one of the components to the baseline variant. We describe the details of designed variants, all of which are specified as follows:

1) VariantA: The VariantA is designed as the baseline variant, which only contains SESRN. As shown in Table II, VariantA can be considered as removing all UBCDTN components from the ultimate proposed method. In the following variants, we successively add each of the components to VariantA.

2) VariantB: In VariantB, we introduce and while , and both , are removed. Because and are essential components of the forward cycle module in UBCDTN, VariantB can be considered as composed of the forward cycle module of UBCDTN and baseline model, removing the backward cycle module.

3) VariantC: Besides the baseline model, it consists of two generators , and two feature extractors , of UBCDTN, eliminating discriminators and involved in UBCDTN.

4) VariantD: It is constructed by the four components , , and of UBCDTN, while it removes feature extractors and of UBCDTN.

5) VariantE (Proposed): The VariantE represents the ultimate proposed method which comprises a baseline model and all components of UBCDTN.

| Variants | NTIRE_2020_T1 | |

|---|---|---|

| PSNR | SSIM | |

| VariantA | 25.97 | 0.757 |

| VariantB | 23.47 | 0.698 |

| VariantC | 25.44 | 0.729 |

| VariantD | 25.81 | 0.746 |

| VariantE (Proposed) | 26.83 | 0.789 |

V-B Effect of UBCDTN

This experiment is conducted by VariantA and VariantE. Specifically, because of removing UBCDTN, VariantA is trained on bicubic downsampled LR images directly while VariantE takes real-like LR images obtained by UBCDTN as the training LR inputs. According to the analysis of the performance between VariantA and VariantE, we can demonstrate advantages originating from UBCDTN. As shown in Fig. 5, VariantA produces over-smoothed SR images missing high-frequency details while the SR results of VariantE contain naturally desired edges and textures. In addition, from Table III, the quantitative results of VariantA decrease dramatically from 26.83dB/0.789 to 25.97dB/0.757 after removing UBCDTN. The reason is that VariantA simply trains on bicubic data, ignoring the domain distribution difference between bicubic data and real-world data when solving the real-world SR task. By incorporating the UBCDTN in the variant, there is a noteworthy improvement in terms of both qualitative and quantitative performance, which is able to verify that UBCDTN plays an important role in the super-resolution procedure. Thus, we can validate the effectiveness of the proposed UBCDTN and its necessity.

V-C Effect of and

In this experiment, we compare VariantB and VariantE to verify the effect of and , which is also identical to show the effectiveness of the backward cycle module. Note that VariantB is equivalent to UBCDTN for domain transformation without the backward cycle module. In this setting, VariantB takes the artificially degraded images as the input to and generates real-like LR images through the supervision of in the forward cycle module without the backward cycle module. It can be observed from Table III that with the absence of the backward cycle module, VariantB performs worse than VariantE which contains the whole UBCDTN in terms of PSNR/SSIM values, since there is no restriction to prevent the forward cycle module and backward cycle module from contradicting each other. A huge enhancement can be observed after integrating the backward cycle module into VariantE, where it is able to greatly improve quantitative performance and further produce high quality SR images with desirable details. This is due to the presence of a backward cycle module. The variant is capable of utilizing cycle consistency constraint, which guarantees the correction between two inverse modules in an unsupervised manner. In a word, by introducing and , the whole bi-directional cycle consistency learning strategy can be established to produce real-like LR images which maintain the same characteristics as real-world LR images. These results validate the significance of involving and , which also reveals that the backward cycle module can improve quantitative results and visual quality.

V-D Effect of and

In this experiment, we aim to demonstrate the contribution of and , which can also reflect the importance of an adversarial loss in the UBCDTN. The VariantC and VariantE are compared to investigate the effect of and . In this case, VariantC is trained on cycle-consistency loss, identity loss, and cycle-perceptual loss, while the adversarial loss is not involved resulting from removing and . As shown in Table III, it is obvious that performance severely decreases by removing discriminators from VariantC. By incorporating and into VariantE, we observe that VariantE significantly outperforms VariantC by a large margin of 1.39dB/0.06 in terms of PSNR/SSIM. From Fig. 5, the visual results of VariantC are degraded, where the SR images contain over-smoothed textures and unclear artifacts, while VarantE is able to generate visually realistic SR images with more nature-looking details. The underlying reason for poor performance is that we cannot employ adversarial loss on VariantC, which leads to the lack of adequate iterative adversarial training. According to the quantitative and qualitative comparisons, it can be verified that by applying adversarial loss performed by and to the variant, the SR performance is greatly enhanced, indicating the effectiveness of , and adversarial loss.

V-E Effect of and

We compare VariantD and VariantE in this experiment to verify the effectiveness of and , where the advantages of and are also equal to the benefit of cycle-perceptual loss. By removing and , VariantD no longer employs cycle-perceptual loss on the training phase to optimize the model. As shown in Table III, VariantE achieves higher quantitative performance compared to VariantD, increasing PSNR/SSIM values from 25.81dB/0.746 to 26.83dB/0.789. In addition, Fig. 5 clearly shows the qualitative comparisons evaluated by the SR results from VariantD and VariantE with and without cycle-perceptual loss in the training process. It is apparent that without cycle-perceptual loss, the deteriorated textures are visible in the SR results of VariantD. In contrast, when integrating and into the variant, the cycle-perceptual loss can be calculated, which further motivates the variant to produce perceptually natural images with exceptional details. According to the numerical performance and visual results, we are able to verify that and have a significant impact on the super-resolution process, which also identifies that the cycle-perceptual loss is able to improve the perceptual quality of SR images.

V-F Final Effect

The VariantE can be considered as the ultimate proposed method, which includes all the proposed components. Compared with other variants, the ultimate proposed method is able to greatly improve quantitative performance and obviously enhance the quality of visual results. Thus, we can conclude the effectiveness of the proposed method as well as all the components.

VI Conclusion

We proposed an unsupervised super-resolution method UBCDTL-GAN for real-world scenarios, which does not involve any paired image data and pre-defined degradation operation. The proposed method comprises two networks, UBCDTN and SESRN. First, the UBCDTN transfers an artificially degraded image to a real-like image with real-world artifacts and characteristics. Next, SESRN reconstructs from the approximate real-like LR image to a visually pleasant super-resolved image with realistic details and textures. According to the designed framework and applied optimization constraints, the proposed method UBCDTL-GAN has the ability to improve real-world super-resolution performance. The quantitative and qualitative experiments on NTIRE 2020 T1 real-world SR dataset validate the effectiveness of our method and show superior SR performances compared to existing state-of-the-art methods.

References

- [1] P. Rasti, T. Uiboupin, S. Escalera, and G. Anbarjafari, “Convolutional neural network super resolution for face recognition in surveillance monitoring,” in International conference on articulated motion and deformable objects. Springer, 2016, pp. 175–184.

- [2] D. Capel and A. Zisserman, “Super-resolution enhancement of text image sequences,” in Proceedings 15th International Conference on Pattern Recognition. ICPR-2000, vol. 1. IEEE, 2000, pp. 600–605.

- [3] W. Shi, J. Caballero, C. Ledig, X. Zhuang, W. Bai, K. Bhatia, A. M. S. M. de Marvao, T. Dawes, D. O’Regan, and D. Rueckert, “Cardiac image super-resolution with global correspondence using multi-atlas patchmatch,” in International conference on medical image computing and computer-assisted intervention. Springer, 2013, pp. 9–16.

- [4] Y. Zhang, Y. Tian, Y. Kong, B. Zhong, and Y. Fu, “Residual dense network for image super-resolution,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2018, pp. 2472–2481.

- [5] B. Lim, S. Son, H. Kim, S. Nah, and K. Mu Lee, “Enhanced deep residual networks for single image super-resolution,” in Proceedings of the IEEE conference on computer vision and pattern recognition workshops, 2017, pp. 136–144.

- [6] T. Tong, G. Li, X. Liu, and Q. Gao, “Image super-resolution using dense skip connections,” in Proceedings of the IEEE International Conference on Computer Vision, 2017, pp. 4799–4807.

- [7] X. Wang, K. Yu, S. Wu, J. Gu, Y. Liu, C. Dong, Y. Qiao, and C. Change Loy, “Esrgan: Enhanced super-resolution generative adversarial networks,” in Proceedings of the European Conference on Computer Vision (ECCV), 2018, pp. 0–0.

- [8] G. Kim, J. Park, K. Lee, J. Lee, J. Min, B. Lee, D. K. Han, and H. Ko, “Unsupervised real-world super resolution with cycle generative adversarial network and domain discriminator,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, 2020, pp. 456–457.

- [9] S. Maeda, “Unpaired image super-resolution using pseudo-supervision,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2020, pp. 291–300.

- [10] Y. Yuan, S. Liu, J. Zhang, Y. Zhang, C. Dong, and L. Lin, “Unsupervised image super-resolution using cycle-in-cycle generative adversarial networks,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, 2018, pp. 701–710.

- [11] T. Zhao, W. Ren, C. Zhang, D. Ren, and Q. Hu, “Unsupervised degradation learning for single image super-resolution,” arXiv preprint arXiv:1812.04240, 2018.

- [12] J.-Y. Zhu, T. Park, P. Isola, and A. A. Efros, “Unpaired image-to-image translation using cycle-consistent adversarial networks,” in Proceedings of the IEEE international conference on computer vision, 2017, pp. 2223–2232.

- [13] X. Wang, Y. Yang, Q. Pang, Q. Tang, and S. Du, “End-to-end generative adversarial face hallucination through residual in internal dense network,” in 29th European Signal Processing Conference (EUSIPCO), 2021.

- [14] C. Dong, C. C. Loy, K. He, and X. Tang, “Image super-resolution using deep convolutional networks,” IEEE transactions on pattern analysis and machine intelligence, vol. 38, no. 2, pp. 295–307, 2015.

- [15] C. Ledig, L. Theis, F. Huszár, J. Caballero, A. Cunningham, A. Acosta, A. Aitken, A. Tejani, J. Totz, Z. Wang et al., “Photo-realistic single image super-resolution using a generative adversarial network,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2017, pp. 4681–4690.

- [16] J. Gu, H. Lu, W. Zuo, and C. Dong, “Blind super-resolution with iterative kernel correction,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2019, pp. 1604–1613.

- [17] K. Zhang, W. Zuo, S. Gu, and L. Zhang, “Learning deep cnn denoiser prior for image restoration,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2017, pp. 3929–3938.

- [18] A. Shocher, N. Cohen, and M. Irani, ““zero-shot” super-resolution using deep internal learning,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2018, pp. 3118–3126.

- [19] A. Jolicoeur-Martineau, “The relativistic discriminator: a key element missing from standard gan,” arXiv preprint arXiv:1807.00734, 2018.

- [20] X. Yu and F. Porikli, “Ultra-resolving face images by discriminative generative networks,” in European conference on computer vision. Springer, 2016, pp. 318–333.

- [21] J. Donahue, P. Krähenbühl, and T. Darrell, “Adversarial feature learning,” arXiv preprint arXiv:1605.09782, 2016.

- [22] V. Dumoulin, I. Belghazi, B. Poole, O. Mastropietro, A. Lamb, M. Arjovsky, and A. Courville, “Adversarially learned inference,” arXiv preprint arXiv:1606.00704, 2016.

- [23] K. Simonyan and A. Zisserman, “Very deep convolutional networks for large-scale image recognition,” arXiv preprint arXiv:1409.1556, 2014.

- [24] X. Mao, Q. Li, H. Xie, R. Y. Lau, Z. Wang, and S. Paul Smolley, “Least squares generative adversarial networks,” in Proceedings of the IEEE international conference on computer vision, 2017, pp. 2794–2802.

- [25] E. Agustsson and R. Timofte, “Ntire 2017 challenge on single image super-resolution: Dataset and study,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, 2017, pp. 126–135.

- [26] D. P. Kingma and J. Ba, “Adam: A method for stochastic optimization,” arXiv preprint arXiv:1412.6980, 2014.

- [27] Z. Li, J. Yang, Z. Liu, X. Yang, G. Jeon, and W. Wu, “Feedback network for image super-resolution,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2019, pp. 3867–3876.

- [28] S. Gao and X. Zhuang, “Multi-scale deep neural networks for real image super-resolution,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, 2019, pp. 0–0.

- [29] Y. Zhang, K. Li, K. Li, L. Wang, B. Zhong, and Y. Fu, “Image super-resolution using very deep residual channel attention networks,” in Proceedings of the European conference on computer vision (ECCV), 2018, pp. 286–301.

- [30] K. Prajapati, V. Chudasama, H. Patel, K. Upla, R. Ramachandra, K. Raja, and C. Busch, “Unsupervised single image super-resolution network (usisresnet) for real-world data using generative adversarial network,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, 2020, pp. 464–465.