Real-Time Navigation for Bipedal Robots in Dynamic Environments

Abstract

The popularity of mobile robots has been steadily growing, with these robots being increasingly utilized to execute tasks previously completed by human workers. For bipedal robots to see this same success, robust autonomous navigation systems need to be developed that can execute in real-time and respond to dynamic environments. These systems can be divided into three stages: perception, planning, and control. A holistic navigation framework for bipedal robots must successfully integrate all three components of the autonomous navigation problem to enable robust real-world navigation. In this paper, we present a real-time navigation framework for bipedal robots in dynamic environments. The proposed system addresses all components of the navigation problem: We introduce a depth-based perception system for obstacle detection, mapping, and localization. A two-stage planner is developed to generate collision-free trajectories robust to unknown and dynamic environments. And execute trajectories on the Digit bipedal robot’s walking gait controller. The navigation framework is validated through a series of simulation and hardware experiments that contain unknown environments and dynamic obstacles.

I Introduction

Bipedal robots have been a popular field of research, due to the large range of tasks they can be utilized in. The general humanoid shape of bipedal robots allows them to be better integrated into a society designed for humans. However, for bipedal robots to be fully integrated into society, robust autonomous navigation systems need to be designed. These systems can generally be divided into three stages: perception, planning, and control. It is only with the combination of these three stages into a single navigation framework in which bipedal robots can truly integrate into human society.

In this paper, we seek to implement a holistic bipedal robot navigation framework to enable the exploration of unknown, dynamic environments. The three components of autonomous navigation – perception, planning, and control – must be combined into a single navigation system. The perception system must be capable of extracting obstacle and environment structure information. This environment information must then be used to generate maps of the global and local environment for planning. A planning framework must generate global paths and local trajectories that are robust to unknown environments and dynamic obstacles. Furthermore, planning must respect the kinematic constraints of the robot while avoiding obstacles to ensure safe and feasible navigation. Finally, these trajectories must be executed with a low-level controller that maintains safe and stable walking gaits. The combination of these capabilities will enable the safe navigation of bipedal robots in complex environments.

Many works have expanded on the methods of A* [1, 2, 3, 4, 5], PRM [6], and RRT [7, 8, 9] to the unique problems of bipedal motion planning and footstep planning. However, many of these works lack several components required for autonomous navigation systems such as real-time perception, mapping, and localization processes. Furthermore, only few works expand further to adapt these bipedal motion planning methods into more holistic bipedal navigation frameworks. However, many are still unable to address components required in holistic autonomous bipedal systems such as on-robot perception systems [10], localization methods [11], robustness to dynamic obstacles [12, 13], or validation in hardware.

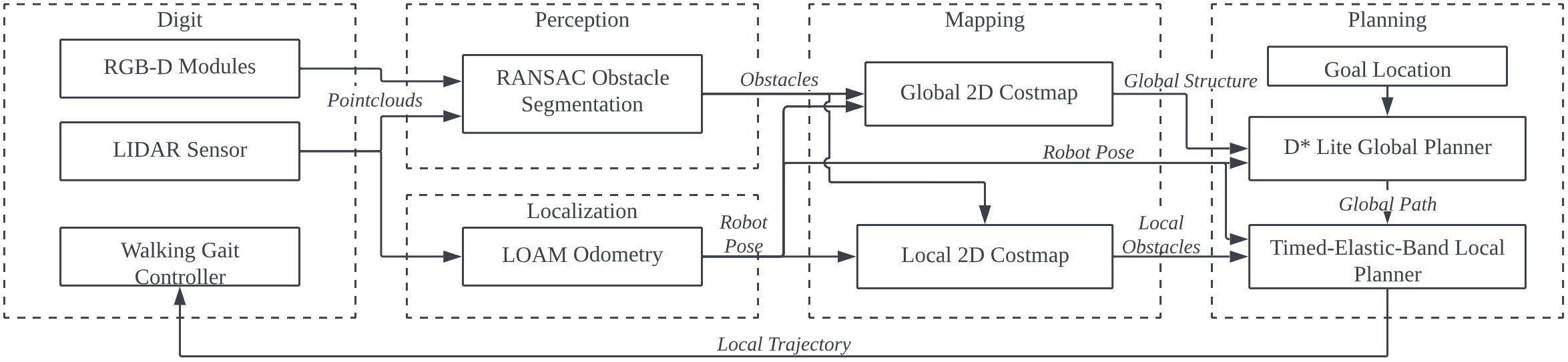

We propose a real-time navigation framework for the Digit robot based on Move Base Flex [14], as shown in Fig. 1. The framework utilizes two RGB-Depth cameras and a LiDAR sensor for perception. The environment is mapped using global and local costmaps to capture large-scale environment structure and local dynamic obstacles. Odometry and localization are calculated using LiDAR Odometry and Mapping during navigation. We developed a two-stage planner to generate collision-free paths and obstacle avoiding trajectories. In particular, a D* Lite global planner capable of fast re-planning is used to generate high-level paths in the global costmap. A Timed-Elastic-Band local planner then follows the global path through optimization-based trajectory generation that respects kinematic, velocity, acceleration, and obstacle avoidance constraints. The local trajectory is executed by generating a sequence of velocity commands sent to Digit’s walking controller.

The rest of this paper is organized as follows. Section II introduces the perception, mapping, and localization process. Next, Section III describes the motion planning methods. Section IV introduces the simulation and hardware experiments and results. Finally, Section V concludes the navigation framework and provides future work discussion.

II Digit Perception, Mapping, and Localization

In this section, we describe the process of building a real-time map of the environment using Digit’s perception sensor suite and localizing Digit within that map. The robot is equipped with two depth cameras (Intel RealSense D430, placed at the pelvis, with one facing forwards at a downward angle and one facing rearwards at a downward angle), and one LiDAR sensor (Velodyne LiDAR VLP-16, placed on top of Digit’s torso).

II-A Perception

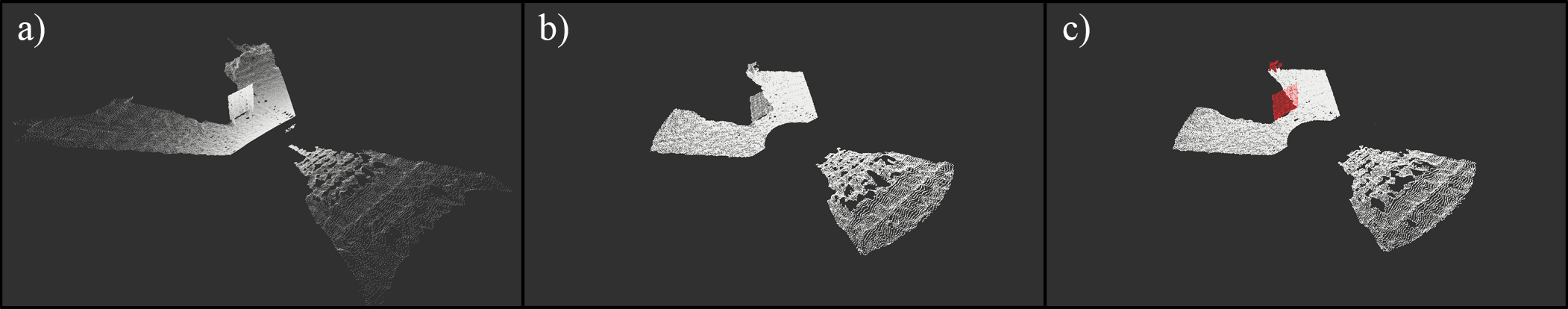

Point Cloud Pre-processing. Before these point clouds can be used for obstacle segmentation, pre-processing is applied to obtain a uniform density and remove outlier detections. First, an average point cloud size reduction of 91.07% is achieved using a Voxel Grid filter [20] for downsampling. Additionally, inaccurate detections outside of the sensors’ accurate range are removed by removing points further than 2.9 m of the depth cameras. Finally, erroneous points detected underground due to reflections are removed using a pass-through filter.

Obstacle Segmentation. After filtering the point cloud from both depth cameras, the resulting clouds are fused into a combined point cloud. The Random Sample Consensus (RANSAC) method proposed in [15] is used to segment obstacles from the fused point cloud. For a given point cloud, , RANSAC randomly samples 3 points to solve a unique plane model:

| (1) |

where are the fitted coefficients, and represents the Cartesian coordinates of a point. Then, the absolute distance, , of each point in the cloud is calculated for the fitted model:

| (2) |

where , is the total number of points in the point cloud . Points within a given threshold distance, , to the plane model are labeled as inliers of the model, denoted ,

| (3) |

This process is repeated iteratively for a specified number of iterations, , determined statistically as:

| (4) |

where is the desired minimum probability of finding at least one good plane from , usually within , and where is the probability that any selected point is an inlier. The resulting plane model is selected as the one which generates the largest number of inliers with the smallest standard deviation of distances. From the resulting RANSAC plane model, inlier points are retained as ground points, and outlier points are retained as obstacle points.

II-B Mapping

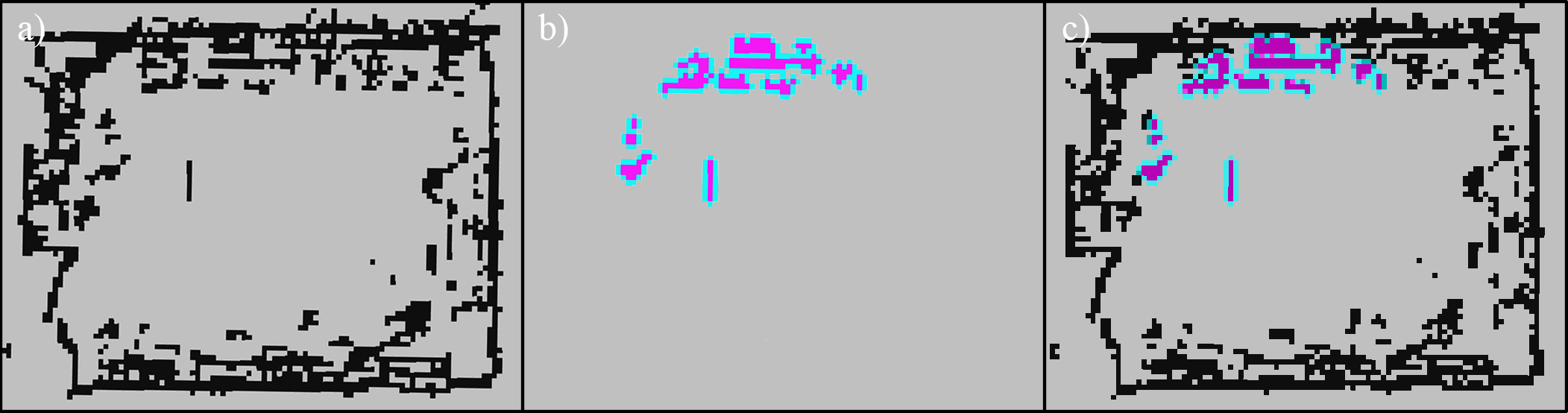

To enable real-time planning in a dynamic environment, we create two 2D layered costmaps presented in [16] to represent the global and local environments.

II-B1 Global Map

The global map is used to capture and store macro-scale information of the environment. To enable this, the global map uses a lower resolution of 0.1 m and only updates at a rate of 2 Hz. Cells in the global map have two possible states:

Occupied

Any 3D point detections from the perception sensors are projected onto the 2D plane of the global map. Any cells that contain projected points are classified as occupied and are considered untraversable.

Free

Any cell that is not occupied is considered free and traversable for the robot. Unexplored regions of the environment are by default considered to be free.

II-B2 Local Map

The local map is used for obstacle avoidance to capture a smaller region local to the robot where dynamic obstacles may be present. We use a higher resolution of 0.05 m and update the local map at 10 Hz. The local map introduces another cell state in addition to occupied and free cells:

Inflated

Cells within a certain distance of obstacles are inflated with non-zero cell costs. These non-zero costs are used to penalize trajectory planning through regions close to obstacles while not completely prohibiting trajectories from entering these regions.

II-C Localization

In this work, we use LiDAR Odometry and Mapping (LOAM), a method introduced in [17], to estimate the odometry of the robot. LOAM introduces simultaneously executed algorithms to estimate LiDAR motion: an odometry estimation algorithm and a mapping algorithm.

The LiDAR odometry algorithm runs at 10 Hz in which the correspondences of extracted features from consecutive LiDAR sensor sweeps are used to estimate the motion of the sensor. For each sensor sweep, LOAM extracts points from edge line and planar patch environment structures as features. These edge lines and planar patches in the environment are referenced as correspondences for feature points extracted from the respective environment structures.

Using the correspondences, geometric relationships can be formed between an edge point and its corresponding edge line and a planar point and its corresponding planar patch:

| (5) | ||||

| (6) |

where is the LiDAR pose transform from , and are the sets of edge points and planar points from the point cloud at , and and are the distances from the corresponding edge and planar feature points to their respective edge line and planar patch environment structures. These relationships are stacked for each edge and planar feature point to obtain a nonlinear function,

| (7) |

where each row of is a feature point and each row of is the correspondance distance. This function is solved with the Levenberg-Marquardt method [21] to obtain the motion estimation of the LiDAR sensor.

The final result from this localization method is an estimate of the LiDAR sensor odometry relative to the 3D environment. The coordinate frame origin of this odometry is initialized to the initial position of the LiDAR sensor. The mapping methods mentioned in Section II-B initialize the global origin as the 2D components of this LOAM origin. We then use this transform to localize the LiDAR sensor, and thus the robot, in the 2D costmaps during navigation.

III Real-Time Motion Planning

Using the global and local map created through the aforementioned process, we developed a real-time motion planning framework for Digit using D* Lite and Timed-Elastic-Band.

III-A D* Lite global planner

During navigation through unknown environments, collision-free paths through the global environment are required. As unknown environments are explored, a planner must be capable of quick re-planning to account for discovered obstacles. Therefore, a D* Lite global planner as presented in [18] is used to generate these global paths. Capable of fast re-planning, D* Lite is designed to solve goal-directed navigation problems in grid-represented environments. This planning method is directly applied to the 2D layered costmaps described in Section II-B.

The D* Lite planner assigns each explored cell, , in the costmap two values: an estimate of the goal distance, , where is the Euclidean distance from the start cell to the current cell ; and a one-step lookahead value based on the g-value, :

| (8) |

where is the cost to traverse to a neighboring cell, , from the current cell, .

To generate the shortest path, the planner begins from the goal position and explores towards the start position. All neighbors of the current cell are added to a priority queue with a key value with

| (9) | ||||

| (10) |

where is a scalar whose value is incremented each time the planner re-plans, and is used to maintain the priority queue ordering. The priority queue is sorted by the following sort rule: a key iff or and . The planner always expands the lowest-valued cell in the priority queue. Then, the successor cells, or neighbors, of this expanded cell have their and values updated and are then themselves inserted into the priority queue. This process ends once the start cell is expanded or no more cells can be expanded.

The main planning algorithm initially generates the shortest path based on the initial environment information. As the robot explores new environment areas and obstacles are detected, the costmap, and corresponding values, is updated to reflect the new obstacle detections and the global planner re-calculates the global path as previously described. The current path is stored as a sequence of waypoint cells within the global costmap to follow and is updated at 5 Hz.

III-B Timed-Elastic-Band local planner

The purpose of the local planner is to generate a smooth, kinematically feasible trajectory that follows the global path generated by the D* Lite global planner. The Timed-Elastic-Band (TEB) method introduced in [19] originally implements a trajectory optimization method for differential models. We adapt this method for use in bipedal robots by applying simplifying assumptions on the high-level kinematic constraints of the low-level controller to mimic those of a differential drive robot. The bipedal locomotion is modeled as a differential drive model to avoid several problems introduced from the omnidirectional movement of bipedal robots. For example, lateral velocity in bipedal locomotion is difficult to control due to oscillating behaviors in the lateral direction.

The TEB problem is defined as an open-loop optimization task to find the sequence of controls needed to move the robot from an initial pose, , to a goal pose, . A trajectory is defined as a sequence of robot poses where denotes the robot pose at time , with and denoting the 2D position of the robot and denoting the orientation of the robot. The TEB planner then augments the trajectory with positive time intervals . The sequence of robot poses and time intervals are then joined to form the parameter vector . TEB solves the non-linear optimization task defined as:

| (11) |

which is subject to several constraints defined as equality and inequality constraints.

Non-holonomic constraint

An equality constraint enforces the kinematic constraints of the robot. This non-holonomic constraint can be interpreted geometrically to assume consecutive poses and must share a common arc of constant curvature [22]. The angle between pose and direction must be equal to angle at pose , with which can be replaced with:

| (12) |

and thus the resulting equality constraint applied to the optimization task in (11) is given by:

| (13) |

Velocity and acceleration constraints

Additionally, limitations of the linear and angular velocities and accelerations are applied. First, the linear and angular velocities are approximated between two consecutive poses and :

| (14) | ||||

| (15) |

where is a sign extraction function which is approximated by a smooth sigmoidal approximation that maps to the interval :

| (16) |

Next, the accelerations are approximated in a similar manner to the velocities between consecutive poses:

| (17) |

Finally, the velocity and acceleration constraints are applied to (11) as:

| (20) | ||||

| (23) |

Obstacle avoidance

The local planner considers obstacles, , as simply-connected regions in the form of points, circles, polygons, and lines in . To ensure a minimum separation between pose and all obstacles, the inequality constraint applied to (11) is:

| (24) | ||||

where is the minimal Euclidean distance between the obstacle and the robot pose.

The nonlinear task (11) is reformulated into an approximate nonlinear least-squares optimization problem for better computational efficiency. With the constraints applied to the approximated objective function as described in [23]. The kinematic equality constraint is expressed as:

| (25) |

and the inequality constraint is approximated as:

| (26) |

where are scalar weights and the min operator is applied row-wise and with constraints and being approximated in an identical manner. The overall optimization problem is approximated with the objective function:

| (27) |

| (28) |

This optimization problem is solved using a variant of the Levenberg–Marquardt algorithm implemented in the open-source graph optimization framework g2o [24]. As described in [19], the Timed-Elastic-Band planner further improves the method by optimizing several of these trajectories from distinctive topologies in parallel to search for the global minimum of (11) respecting (27).

The final output from the Timed-Elastic-Band planner is sequences of velocity commands to achieve the globally optimal trajectory from (27). These velocity commands are sent to Digit’s default low-level controller provided by Agility Robotics.

IV Results

This section presents the simulation results of the proposed approach and the experimental validation with Digit in dynamic and unknown environments111Experiment recordings are shown in the following video: https://www.youtube.com/watch?v=WzHejHx-Kzs..

IV-A Simulation Experiments

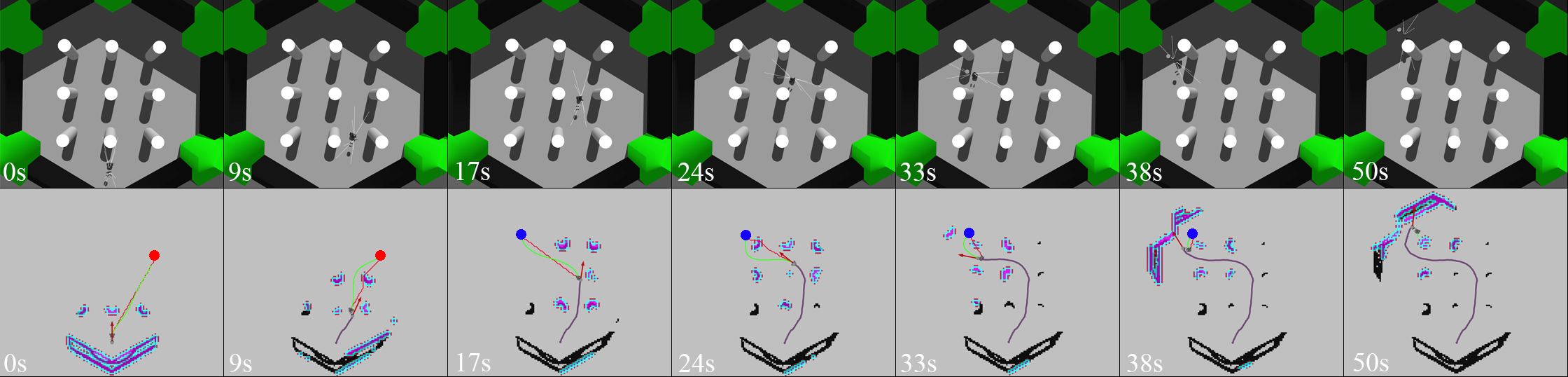

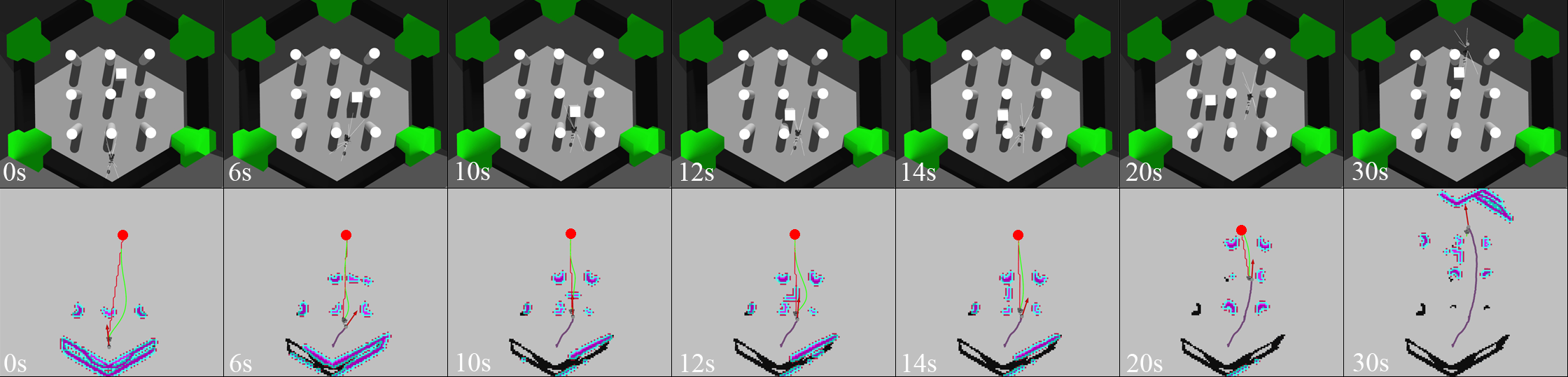

The framework is initially validated in simulation. In experiments shown in Fig. 4 and Fig. 5, the red and blue circles represent the first and second goals. Black grid cells are obstacles in the global map, purple and blue grid cells are obstacles and inflated cells in the local map, respectively. The red arrow is the current estimated odometry. The red path and green paths are the global path and local trajectory, respectively. The purple path shows the robot’s traveled path.

IV-A1 Static, unknown environment

As shown in Fig. 4, a 6.0 m 6.0 m simulated environment contains nine static, cylindrical obstacles with radius 0.15 m evenly spaced apart. At , an initial goal position is selected. From to the robot navigates along the path until an obstacle is discovered obstructing the current trajectory, at which point the global path and local trajectory are quickly re-planned to account for the new obstacle. While continuing to navigate to the first goal, a new goal position is given. The framework quickly re-plans and begins navigating to the new goal. At , a blocking obstacle is again detected and re-planning occurs. From to the robot successfully executes the trajectory and reaches the goal position.

IV-A2 Dynamic, unknown environment

The second simulated experiment occurs in the same environment as the previous experiment, except a dynamic obstacle has been added. At , an initial goal position is selected. The robot begins to navigate until when the dynamic obstacle comes into view and blocks the path. The planners attempt to re-plan around the obstacle but determine the current path is untraversable. The robot stops and begins to reverse until when a viable trajectory is discovered. At , the robot stops reversing and begins to execute the trajectory as the dynamic obstacle has passed. From to , the robot executes the trajectory and reaches the goal.

IV-B Hardware Experiments

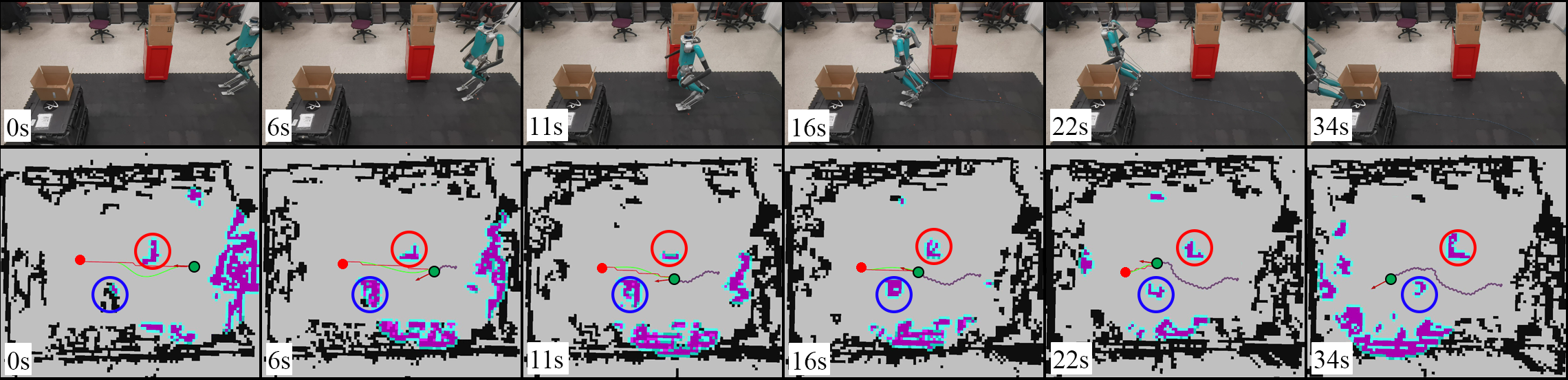

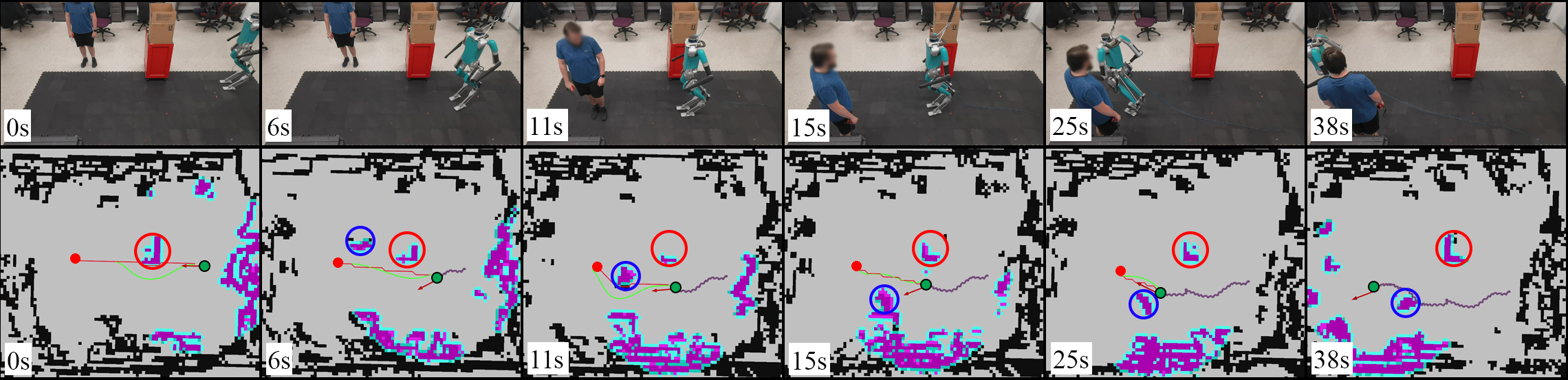

The navigation framework computation is executed on an external desktop using an AMD Ryzen 5800X CPU and connected through a TCP connection to Digit. In this work, two hardware experiments were conducted indoors: 1) Planning in a static environment and 2) Planning in a dynamic environment. Each test is deemed successful once the Digit robot is able to navigate collision-free from the start position to the goal position within a 0.2 m final goal position tolerance and 0.2 rad final goal orientation tolerance. In experiments shown in Fig. 6 and Fig. 7 the solid red circle represents the goal position. The red and blue hollow circles represent the first and second obstacles, the second being a dynamic obstacle in Fig. 7. Black grid cells are obstacles in the global map, purple and blue grid cells are obstacles and inflated cells in the local map, respectively. The red arrow is the current estimated odometry, and the green circle shows the robots current position. The red path and green paths are the global path and local trajectory, respectively. The purple path shows the robot’s traveled path.

IV-B1 Static environment

As shown in Fig. 6, a 10.0 m 8.0 m environment contains two static 0.5 m 1.0 m and 1.0 m 0.5 m obstacles. At a goal position is given to Digit and the robot begins to rotate to safely avoid the first obstacle. Digit continues to travel forward between and at which point Digit begins to rotate to avoid the second obstacle. Digit continues along the generated trajectory until the robot safely reaches the goal position at .

IV-B2 Dynamic environment

The second experiment is conducted in the same environment as the previous experiment, except the second static obstacle has been replaced with a dynamic obstacle. At a goal position is given to Digit. Initially, the dynamic obstacle is not visible to Digit due to being obscured by the first obstacle. Digit begins to execute the generated trajectory and at the dynamic obstacle is detected. At this moment, the dynamic obstacle begins to navigate across the currently planned trajectory. As the obstacle moves in the environment, the planned trajectory begins to deform to maintain a collision-free path, which can be seen at . Between and , Digit begins to slow to a stop as the dynamic obstacle continues to move across the robots path. At , the obstacle comes to a rest and Digit is able to begin executing a collision-free path to the goal. Digit is able to successfully execute the trajectory between and and safely reaches the goal position at .

V Conclusions

In this work, we have presented a real-time navigation framework for bipedal robots in dynamic environments. Successful experiments were conducted in both simulation and hardware.

While the proposed navigation framework was shown to successfully execute in complex environments, there exist several limitations. The current sensor suite configuration results in blind spots on the left and right sides of the robot. This prevents the navigation framework from quickly reacting to dynamic obstacles that move in these blind spots. In future work, a more robust sensor suite providing sensor coverage would allow for better dynamic obstacle reactions. Additionally, the use of 2D layered costmaps limits the navigable environments to only flat 2D terrains. While this is sufficient for many complex environments, it is often desirable to navigate through inclines, stairs, and uneven terrain. Using a mapping and planning framework that can utilize 3D information would allow for more robust navigation in complex 3D terrains. Finally, although the local planner is able to generate robust trajectories, the assumption of differential drive kinematic constraints limits the capabilities of bipedal robots. An extension to omnidirectional locomotion would allow for better utilization of the unique capabilities of bipedal robots.

References

- [1] J. Chestnutt, K. Nishiwaki, J. Kuffner, and S. Kagami, “An adaptive action model for legged navigation planning,” in 2007 7th IEEE-RAS International Conference on Humanoid Robots. IEEE, 2007, pp. 196–202.

- [2] W. Huang, J. Kim, and C. G. Atkeson, “Energy-based optimal step planning for humanoids,” in 2013 IEEE International Conference on Robotics and Automation. IEEE, 2013, pp. 3124–3129.

- [3] A. Hornung, A. Dornbush, M. Likhachev, and M. Bennewitz, “Anytime search-based footstep planning with suboptimality bounds,” in 2012 12th IEEE-RAS International Conference on Humanoid Robots (Humanoids 2012). IEEE, 2012, pp. 674–679.

- [4] J. Garimort, A. Hornung, and M. Bennewitz, “Humanoid navigation with dynamic footstep plans,” in 2011 IEEE International Conference on Robotics and Automation. IEEE, 2011, pp. 3982–3987.

- [5] D. Kanoulas, A. Stumpf, V. S. Raghavan, C. Zhou, A. Toumpa, O. Von Stryk, D. G. Caldwell, and N. G. Tsagarakis, “Footstep planning in rough terrain for bipedal robots using curved contact patches,” in 2018 IEEE International Conference on Robotics and Automation (ICRA), 2018, pp. 4662–4669.

- [6] N. Perrin, O. Stasse, F. Lamiraux, and E. Yoshida, “Weakly collision-free paths for continuous humanoid footstep planning,” in 2011 IEEE/RSJ International Conference on Intelligent Robots and Systems. IEEE, 2011, pp. 4408–4413.

- [7] S. Dalibard, A. El Khoury, F. Lamiraux, A. Nakhaei, M. Taïx, and J.-P. Laumond, “Dynamic walking and whole-body motion planning for humanoid robots: an integrated approach,” The International Journal of Robotics Research, vol. 32, no. 9-10, pp. 1089–1103, 2013.

- [8] L. Baudouin, N. Perrin, T. Moulard, F. Lamiraux, O. Stasse, and E. Yoshida, “Real-time replanning using 3d environment for humanoid robot,” in 2011 11th IEEE-RAS International Conference on Humanoid Robots. IEEE, 2011, pp. 584–589.

- [9] T. Nishi and T. Sugihara, “Motion planning of a humanoid robot in a complex environment using rrt and spatiotemporal post-processing techniques,” International journal of humanoid robotics, vol. 11, no. 02, p. 1441003, 2014.

- [10] P. Michel, J. Chestnutt, J. Kuffner, and T. Kanade, “Vision-guided humanoid footstep planning for dynamic environments,” in 5th IEEE-RAS International Conference on Humanoid Robots, 2005. IEEE, 2005, pp. 13–18.

- [11] A.-C. Hildebrandt, M. Klischat, D. Wahrmann, R. Wittmann, F. Sygulla, P. Seiwald, D. Rixen, and T. Buschmann, “Real-time path planning in unknown environments for bipedal robots,” IEEE Robotics and Automation Letters, vol. 2, no. 4, pp. 1856–1863, 2017.

- [12] K. Nishiwaki, J. Chestnutt, and S. Kagami, “Autonomous navigation of a humanoid robot over unknown rough terrain using a laser range sensor,” The International Journal of Robotics Research, vol. 31, no. 11, pp. 1251–1262, 2012.

- [13] Z. Li, J. Zeng, S. Chen, and K. Sreenath, “Vision-aided autonomous navigation of underactuated bipedal robots in height-constrained environments,” 2021.

- [14] S. Pütz, J. Santos Simón, and J. Hertzberg, “Move base flex a highly flexible navigation framework for mobile robots,” in 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2018, pp. 3416–3421.

- [15] M. A. Fischler and R. C. Bolles, “Random sample consensus: a paradigm for model fitting with applications to image analysis and automated cartography,” Communications of the ACM, vol. 24, no. 6, p. 381–395, Jun 1981.

- [16] D. V. Lu, D. Hershberger, and W. D. Smart, “Layered costmaps for context-sensitive navigation,” in 2014 IEEE/RSJ International Conference on Intelligent Robots and Systems, 2014, pp. 709–715.

- [17] J. Zhang and S. Singh, “Low-drift and real-time lidar odometry and mapping,” Autonomous Robots, vol. 41, no. 2, pp. 401 – 416, February 2017.

- [18] S. Koenig and M. Likhachev, “D*lite.” 01 2002, pp. 476–483.

- [19] C. Rösmann, F. Hoffmann, and T. Bertram, “Integrated online trajectory planning and optimization in distinctive topologies,” Robotics and Autonomous Systems, vol. 88, pp. 142–153, 2017.

- [20] R. B. Rusu and S. Cousins, “3d is here: Point cloud library (pcl),” in 2011 IEEE International Conference on Robotics and Automation, 2011, pp. 1–4.

- [21] R. Hartley and A. Zisserman, Multiple View Geometry in Computer Vision, 2nd ed. New York, NY, USA: Cambridge University Press, 2003.

- [22] C. Rösmann, W. Feiten, T. Wösch, F. Hoffmann, and T. Bertram, “Trajectory modification considering dynamic constraints of autonomous robots,” in ROBOTIK, 2012.

- [23] J. Nocedal and S. J. Wright, Numerical optimization: with 85 illustrations. Cham: Springer International Publishing.

- [24] R. Kümmerle, G. Grisetti, H. M. Strasdat, K. Konolige, and W. Burgard, “G2o: A general framework for graph optimization,” 2011 IEEE International Conference on Robotics and Automation, pp. 3607–3613, 2011.