Real-Time Hand Gesture Identification in Thermal Images

Abstract

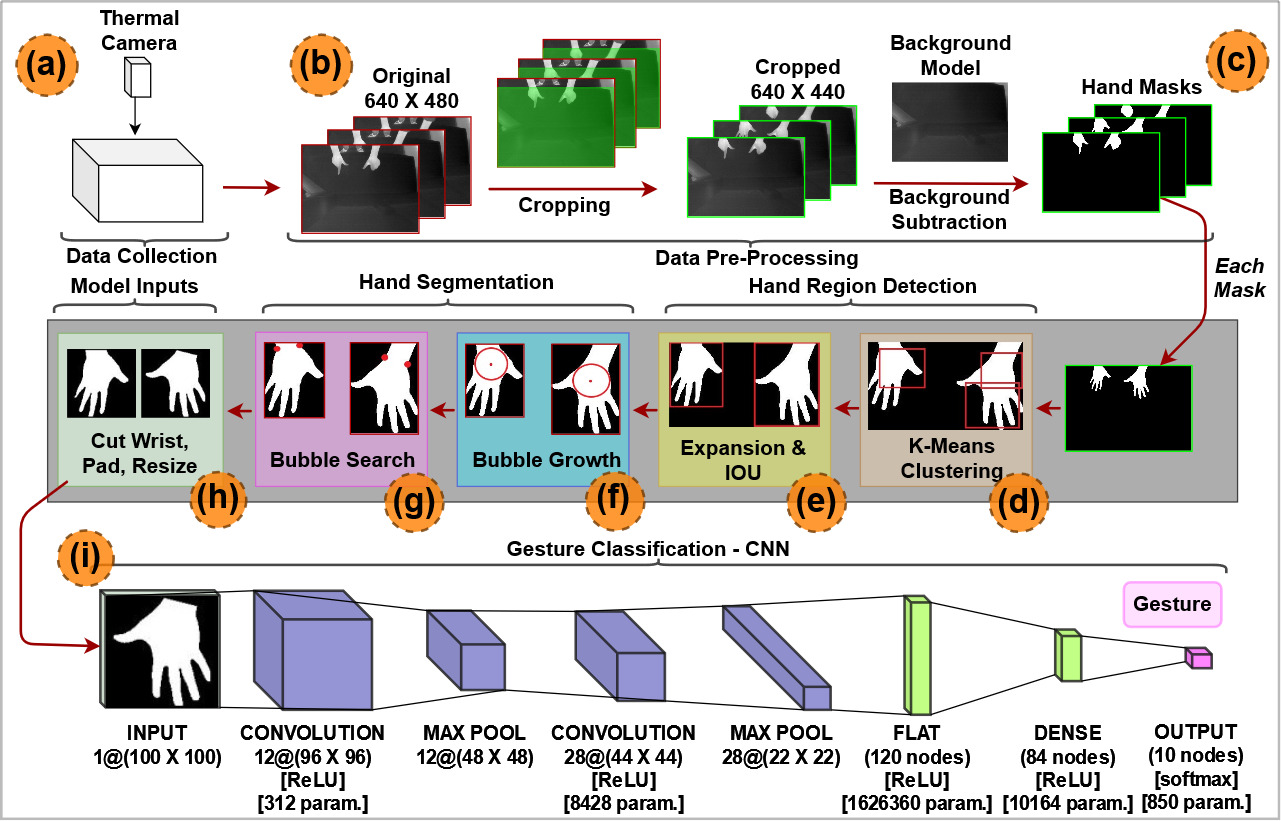

Hand gesture-based human-computer interaction is an important problem that has been well explored using color camera data. In this work we propose a hand gesture detection system using thermal images. Our system can handle multiple hand regions in a frame and processes them fast enough for real-time applications. It performs a series of steps: background subtraction-based hand mask generation, k-means-based hand region identification, hand segmentation to remove the forearm region, and Convolutional Neural Network (CNN)-based gesture classification. Our work introduces two novel algorithms, bubble growth and bubble search, for faster hand segmentation. We collected a new thermal image data set with 10 gestures and report an end-to-end hand gesture recognition accuracy of .

Keywords:

Hand Detection, Hand Gesture Classification, Human-Computer Interaction, Center of Palm, Wrist Points

1 Introduction

Communication between humans and computers via hand gestures has been studied extensively and continues to fascinate the computer vision community. This is not surprising given the proliferating use of artificial intelligence over the past decade to improve many lives through smart systems. To let humans convey intention to computers, early studies used external devices [1, 6, 17, 20] that emphasize particular regions of the hands, limiting the number of features required to interpret hand movement sequences as pre-determined messages. Over time, more advanced equipment removed the need to attach devices to a user's hands to isolate hand movements; examples include depth cameras [14, 18, 19], high-resolution RGB cameras [2, 3, 15], and (less frequently) thermal cameras [13].

Even though hand gesture detection is well explored for some data modalities, such as RGB camera images, very few works use thermal data [8, 12, 13]. These studies have typically required multiple sensors, a fixed hand location in the frame to estimate wrist points, or special clothing worn by the user to separate the hand from the forearm.

The thermal modality can complement RGB data because it is not affected by lighting conditions or skin color variance. Moreover, thermal data-based analyses can be extended to swipe detection by tracing temperature on natural surfaces [4]. Future research can therefore benefit from multi-modal (thermal and RGB) data for a robust hand gesture detection system, and to this end, thorough research on hand gesture detection using thermal data is essential.

Efficient hand segmentation is vital to the success of thermal camera-based hand detection, because thermal images lack many distinguishing features such as color and texture, and including regions from other warm objects, such as a forearm, can reduce classification accuracy. Our algorithmic pipeline (Figure 1) uses background subtraction in the pre-processing stage to generate a hand mask; k-means clustering and grouping of overlapping clusters to isolate each potential hand region; center of palm (COP) and wrist point (WP) detection for hand segmentation (removing the forearm); and a CNN-based model for gesture classification. In this process we introduce two novel algorithms, bubble growth and bubble search, for hand segmentation. The main contributions of this paper are as follows:

1) A new thermal hand gesture data set collected from 23 users performing the same 10 gestures.

2) The Bubble Growth method, which uses a distance transform and the hand-forearm contour to expand a circle (bubble) to the maximum extent possible inside the hand.

3) The Bubble Search method, inspired by the expansion of the maximum inscribed circle and the threshold distance between two consecutive contour points detailed in [2], but adding constraints, a reference point, and an evolving (rather than fixed) bubble expansion.

4) An end-to-end real-time hand gesture detection system that can process multiple hands at 8 to 10 frames per second (fps) with high accuracy (.).

Our bubble growth and bubble search methods are superior to other methods because they neither use nor require knowledge of any projection [5, 15], angle of hand rotation [15], finger location or fixed bubble radii [2, 21], or degree of palm roundness [19]. Their combined average cost is 0.019 sec/hand (bubble growth: 0.012 sec/hand; bubble search: 0.007 sec/hand).

2 Methods

This paper proposes a process (Figure 1) that performs real-time hand detection and gesture classification from a thermal camera video feed. The process is divided into 5 major parts: (1) data collection; (2) data pre-processing; (3) hand region detection; (4) hand segmentation; and (5) gesture classification.

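Table 1: Number of hand samples per gesture (G1 to G10) and per hand in the training and test data sets.

| Data Set | Users | G1 | G2 | G3 | G4 | G5 | G6 | G7 | G8 | G9 | G10 | Left | Right |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Training Data | 20 | 1536 | 1576 | 1438 | 1544 | 1183 | 1459 | 1358 | 1391 | 1524 | 1285 | 6657 | 7637 |
| Test Data | 3 | 103 | 57 | 34 | 92 | 115 | 30 | 17 | 92 | 138 | 214 | 517 | 355 |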

2.1 Data Collection

All thermal hand gesture video data is collected with a Sierra Olympic Viento-G thermal camera with a 9mm lens. The video frames are recorded in indoor conditions (temperature between to F) at 30 fps and stored as 16-bit TIFF images with a pixel resolution. The camera is fixed to a wooden stand and oriented downwards towards a tabletop.

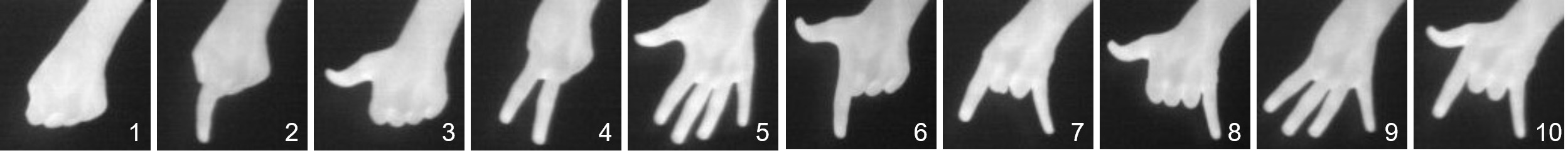

Data were collected from 23 users demonstrating 10 pre-defined gestures with their left and right hands to build the training and test data sets. Separate sets of users contributed to the training and test sets to demonstrate that our method is user-agnostic. Figure 2 illustrates all 10 gestures, and Table 1 lists the collected data, divided into training and test data sets.

For this paper, we also used an external data set, Finger Digits 0-5 [10], to assess how well our process generalizes. From this set we tested gestures 1, 2, 4, 5, and 9 (2000 images per gesture). This data set was not used in training or in any other experiment in this project.

2.2 Data Pre-processing

Without loss of generality with respect to image size, we reduced the size of our images from to to limit the camera scope to the boundaries of the tabletop, which simplifies background subtraction. Images were also converted to 8-bit JPG images for ease of viewing and for compatibility with certain Python packages (e.g., OpenCV).

A MOG2 background subtraction model from OpenCV was used to generate a hand mask from each thermal image. We initialized the model with frames from the beginning of data capture that contain no hands or heated objects and show the table at constant room temperature. The model is updated over the video sequence only when no pixels are found in the hand mask, so that only pixels associated with slight changes in room temperature are updated. Each mask is binarized (black and white pixels) using Otsu's method to select an appropriate threshold value.
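The conversion and binarization steps can be sketched in NumPy. In OpenCV the background model would come from `cv2.createBackgroundSubtractorMOG2()` and the binarization from `cv2.threshold` with the `cv2.THRESH_OTSU` flag; the dependency-free sketch below only illustrates what the 8-bit conversion and Otsu threshold compute. The min-max scaling in `to_uint8` is an assumption (the exact 16-bit to 8-bit mapping is not specified), and all function names are hypothetical.

```python
import numpy as np

def to_uint8(frame16):
    """Rescale a 16-bit thermal frame to 0..255 (min-max scaling is an assumption)."""
    f = frame16.astype(np.float64)
    lo, hi = f.min(), f.max()
    if hi == lo:
        return np.zeros(frame16.shape, dtype=np.uint8)
    return np.rint((f - lo) * 255.0 / (hi - lo)).astype(np.uint8)

def otsu_threshold(img):
    """Otsu's method on an 8-bit image: the threshold t that maximizes the
    between-class variance, with class 0 = pixels <= t."""
    p = np.bincount(img.ravel(), minlength=256).astype(np.float64)
    p /= p.sum()
    omega = np.cumsum(p)                    # class-0 probability for each t
    mu = np.cumsum(p * np.arange(256))      # class-0 first moment for each t
    with np.errstate(divide="ignore", invalid="ignore"):
        sigma_b = (mu[-1] * omega - mu) ** 2 / (omega * (1.0 - omega))
    return int(np.argmax(np.nan_to_num(sigma_b)))   # empty classes score 0

def binarize(fg_mask):
    """Binarize a background-subtraction output (e.g., from MOG2) at Otsu's threshold."""
    t = otsu_threshold(fg_mask)
    return np.where(fg_mask > t, 255, 0).astype(np.uint8)
```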

2.3 Hand Region Detection

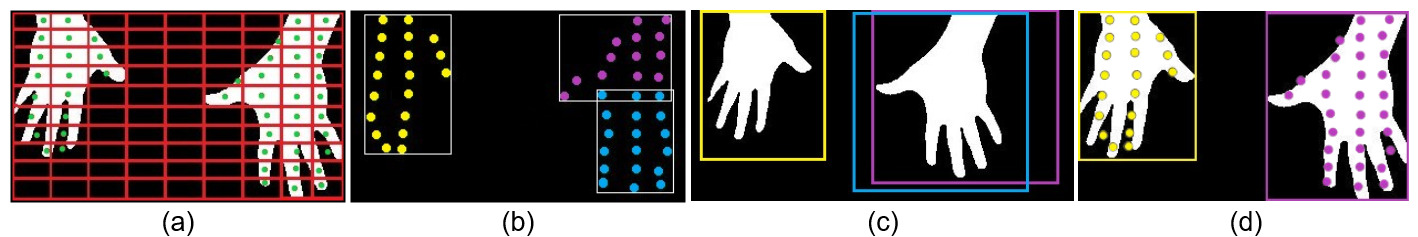

Hand regions are identified in the hand masks by placing tight bounding boxes around each hand object (which may or may not contain some length of forearm) using the two-step process detailed below.

First, a k-means clustering algorithm (Figure 3) identifies contiguous objects in the hand mask, with the number of centroids chosen by silhouette analysis [11]. To achieve real-time processing speed, we reduce the number of points to cluster: all white pixels in the hand mask are covered by a grid subdivided into equally sized cells (coupons), and each coupon containing at least one white pixel is reduced to a single point at its center of mass. The silhouette analysis then performs k-means clustering for each candidate number of clusters k (here, k from 2 to 3) and selects the k with the highest silhouette score.
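A minimal NumPy sketch of the coupon reduction and the silhouette-based choice of k follows. The cell size, the deterministic farthest-point initialization, and all function names are assumptions for illustration; a library implementation (e.g., scikit-learn's `KMeans` and `silhouette_score`) would normally be used instead.

```python
import numpy as np

def grid_points(mask, cell=8):
    """Replace the white pixels of each occupied grid cell (coupon) by their center of mass."""
    ys, xs = np.nonzero(mask)
    keys = (ys // cell) * (mask.shape[1] // cell + 1) + xs // cell   # one key per coupon
    return np.array([(ys[keys == k].mean(), xs[keys == k].mean()) for k in np.unique(keys)])

def kmeans(pts, k, iters=100):
    """Plain Lloyd's k-means with deterministic farthest-point initialization."""
    centers = [pts[0]]
    while len(centers) < k:
        d = np.min([((pts - c) ** 2).sum(1) for c in centers], axis=0)
        centers.append(pts[d.argmax()])
    centers = np.array(centers)
    for _ in range(iters):
        labels = ((pts[:, None] - centers[None]) ** 2).sum(-1).argmin(1)
        new = np.array([pts[labels == j].mean(0) if np.any(labels == j) else centers[j]
                        for j in range(k)])
        if np.allclose(new, centers):
            break
        centers = new
    labels = ((pts[:, None] - centers[None]) ** 2).sum(-1).argmin(1)
    return labels, centers

def silhouette(pts, labels):
    """Mean silhouette coefficient; -1 if there are fewer than two clusters."""
    if len(np.unique(labels)) < 2:
        return -1.0
    d = np.sqrt(((pts[:, None] - pts[None]) ** 2).sum(-1))
    s = np.zeros(len(pts))
    for i, li in enumerate(labels):
        same = labels == li
        if same.sum() == 1:
            continue                                   # singleton cluster: s(i) = 0
        a = d[i, same].sum() / (same.sum() - 1)        # mean distance within own cluster
        b = min(d[i, labels == lj].mean() for lj in np.unique(labels) if lj != li)
        s[i] = (b - a) / max(a, b)
    return s.mean()

def best_k(pts, ks=(2, 3)):
    """Number of clusters with the highest silhouette score among the candidates."""
    scores = {k: silhouette(pts, kmeans(pts, k)[0]) for k in ks if k < len(pts)}
    return max(scores, key=scores.get)
```

Because each coupon contributes a single point, the pairwise distance matrix in `silhouette` grows with the number of occupied coupons rather than the number of white pixels.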

Second, a bounding box is initially placed around each cluster and expanded equally in all directions until it entirely contains a set of contiguous white pixels in the hand mask. If more centroids are selected than there are hands in the hand mask (e.g., due to poor hand mask generation), one or more regions will be bounded by multiple boxes. These duplicate boxes are removed using intersection over union (IoU) with a threshold of 0.7; the boxes that remain are the hand regions.
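The box expansion and duplicate removal can be sketched as below. Boxes are inclusive `(y0, x0, y1, x1)` pixel coordinates; stopping once no side of the box crosses a white pixel (unless that side is already at the image border) is one possible reading of "entirely contains a set of contiguous white pixels", and the function names are hypothetical.

```python
import numpy as np

def grow_box(mask, box):
    """Grow an inclusive (y0, x0, y1, x1) box one pixel per side until no side crosses
    white pixels (or the side is at the image border), then shrink it to those pixels."""
    h, w = mask.shape
    y0, x0, y1, x1 = box
    while True:
        sides = [(mask[y0, x0:x1 + 1], y0 == 0), (mask[y1, x0:x1 + 1], y1 == h - 1),
                 (mask[y0:y1 + 1, x0], x0 == 0), (mask[y0:y1 + 1, x1], x1 == w - 1)]
        if not any(s.any() and not at_edge for s, at_edge in sides):
            break
        # expand equally in all directions, clamped to the image
        y0, x0, y1, x1 = max(y0 - 1, 0), max(x0 - 1, 0), min(y1 + 1, h - 1), min(x1 + 1, w - 1)
    ys, xs = np.nonzero(mask[y0:y1 + 1, x0:x1 + 1])
    if ys.size == 0:
        return None                                    # no white pixels inside the box
    return (int(y0 + ys.min()), int(x0 + xs.min()), int(y0 + ys.max()), int(x0 + xs.max()))

def iou(a, b):
    """Intersection over union of two inclusive (y0, x0, y1, x1) boxes."""
    ih = min(a[2], b[2]) - max(a[0], b[0]) + 1
    iw = min(a[3], b[3]) - max(a[1], b[1]) + 1
    inter = max(ih, 0) * max(iw, 0)
    area = lambda r: (r[2] - r[0] + 1) * (r[3] - r[1] + 1)
    return inter / (area(a) + area(b) - inter)

def remove_duplicates(boxes, thr=0.7):
    """Keep a box only if its IoU with every already-kept box is at most `thr`."""
    kept = []
    for b in boxes:
        if all(iou(b, k) <= thr for k in kept):
            kept.append(b)
    return kept
```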

2.4 Hand Segmentation

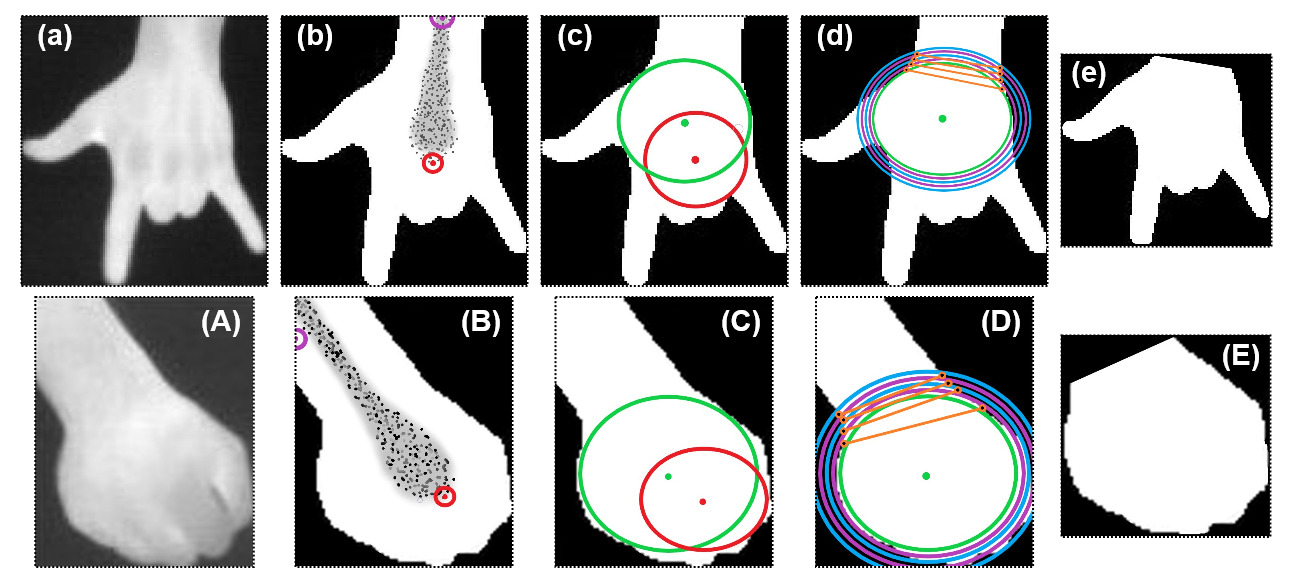

A hand region may include a variable length of forearm, which can hinder accurate gesture classification because the classifier is not forearm-agnostic. This could be addressed by including gesture samples with varying forearm lengths in the training set, but doing so would be costly. Instead, as illustrated in Figure 4, we algorithmically sever the hand from the forearm at the wrist, removing all forearm-related variation. The process uses two novel algorithms: Bubble Growth (to find the center of palm, COP) and Bubble Search (to find the wrist points, WP).

Reference Point Determination: Bubble Growth and Bubble Search require a reference point () located on the edge through which the hand enters the frame, called the hand penetration edge. To find this point, we take whichever of the four edges of the hand region contains the longest contiguous run of white pixels as the hand penetration edge. The two extreme ends of this run are set as the reference point edges (), and their midpoint yields .
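A sketch of the reference point determination on a binary hand-region array follows, assuming `(row, col)` coordinates and that ties between edges go to the first edge checked; the function names are hypothetical.

```python
import numpy as np

def longest_run(values):
    """(start, end) indices of the longest run of non-zero values, or None if all zero."""
    best, start = None, None
    for i, v in enumerate(list(values) + [0]):        # trailing 0 closes a final run
        if v and start is None:
            start = i
        elif not v and start is not None:
            if best is None or i - start > best[1] - best[0] + 1:
                best = (start, i - 1)
            start = None
    return best

def reference_point(region):
    """Return the two reference point edges and their midpoint, all as (row, col)."""
    h, w = region.shape
    edges = [
        [(0, c) for c in range(w)],                    # top
        [(h - 1, c) for c in range(w)],                # bottom
        [(r, 0) for r in range(h)],                    # left
        [(r, w - 1) for r in range(h)],                # right
    ]
    best = None                                        # (edge coordinates, longest run)
    for coords in edges:
        run = longest_run(region[r, c] for r, c in coords)
        if run and (best is None or run[1] - run[0] > best[1][1] - best[1][0]):
            best = (coords, run)
    if best is None:
        return None                                    # no white pixel touches the border
    coords, (i, j) = best
    e1, e2 = coords[i], coords[j]
    return e1, e2, ((e1[0] + e2[0]) / 2, (e1[1] + e2[1]) / 2)
```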

Bubble growth: Given a subset of contour points () from the entire set of contour points of the hand region () and an initial estimate of the COP (), Algorithm 1 moves a bubble through the palm to find the COP (). We form by taking a sparse subset of that preserves the overall shape of the hand region, which reduces the cost of Algorithm 1. We compute by first calculating the distance transform (DT) [16] of the entire hand region, obtaining the maximum DT value (), and selecting all points in the hand region whose DT value () satisfies . The point in this set furthest from is chosen as .
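The initial COP estimate can be sketched as follows. The inequality on the DT value is not recoverable from the text above, so the sketch assumes a hypothetical `ratio` of the maximum DT value; the brute-force distance transform stands in for a library routine such as `cv2.distanceTransform` and is only practical for small regions.

```python
import numpy as np

def distance_transform(mask):
    """Brute-force Euclidean distance from each foreground pixel to the nearest
    background pixel inside the array (small regions only)."""
    fg = np.argwhere(mask > 0)
    bg = np.argwhere(mask == 0)
    dt = np.zeros(mask.shape, dtype=float)
    if len(fg) and len(bg):
        d2 = ((fg[:, None, :] - bg[None, :, :]) ** 2).sum(axis=-1)
        dt[fg[:, 0], fg[:, 1]] = np.sqrt(d2.min(axis=1))
    return dt

def initial_cop(mask, ref, ratio=0.9):
    """Hypothetical COP seed: among pixels whose DT value is at least `ratio` times
    the maximum DT value, return the one furthest from the reference point `ref`."""
    dt = distance_transform(mask)
    cand = np.argwhere(dt >= ratio * dt.max())
    far = np.linalg.norm(cand - np.asarray(ref, float), axis=1).argmax()
    return tuple(int(v) for v in cand[far]), dt
```

Choosing the candidate furthest from the reference point keeps the seed away from the hand penetration edge, where any forearm would be.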

Starting with as the first , the algorithm searches for the next center candidate (). This is done by ShortAdvancement, which attempts to move by a fraction of the distance () along the vector connecting and each contour point, using the following equation: . The first h resulting in a where forces to be updated to , and the remaining are skipped so that the next iteration can begin. A growing list of visited centers () ensures that no Euclidean distance (used to calculate ) is computed unnecessarily when the resulting has already been visited and assessed in a past iteration. When the bubble no longer moves, Algorithm 1 terminates.
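The loop of Algorithm 1 can be sketched as below, assuming `dt` is the distance transform of the hand region (so `dt` at a pixel is the radius of the largest bubble centered there) and that a move is accepted when it strictly increases that radius. The step fraction `frac=0.1` and the function name are hypothetical, not the values used in the experiments.

```python
import numpy as np

def bubble_growth(dt, contour, start, frac=0.1, max_iter=10_000):
    """Sketch of bubble growth towards the center of palm (COP).

    dt      : distance transform of the hand region (bubble radius at each pixel)
    contour : sparse list of (row, col) contour points of the hand region
    start   : initial COP estimate (row, col)
    frac    : fraction of the way towards a contour point to step (hypothetical value)
    """
    center = tuple(int(v) for v in start)
    visited = {center}                           # candidates already evaluated
    for _ in range(max_iter):
        moved = False
        for p in contour:
            # ShortAdvancement: step a fraction of the way along center -> p
            step = np.asarray(center) + frac * (np.asarray(p) - np.asarray(center))
            cand = tuple(int(v) for v in np.rint(step))
            if cand in visited:
                continue
            visited.add(cand)
            if dt[cand] > dt[center]:            # a larger bubble fits here: move
                center, moved = cand, True
                break                            # skip the remaining contour points
        if not moved:                            # the bubble did not move: done
            break
    return center, float(dt[center])
```

Because the radius at the current center only ever increases, a candidate rejected once can never be accepted later, which is why skipping visited candidates is safe.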

Bubble search: Given and the , Algorithm 2 searches for the WP (). First, is expanded to (i.e., ) and then continues to expand incrementally (i.e., ). As it expands, the bubble is searched for a pair of contour points that lie inside it (i.e., where ). We say that and have been found if two contiguous meet the following criteria:

1. , where .
2. , where .
3. , where is .

and are empirically determined limits of the distance between two WP in the scale of .
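A simplified sketch of the expanding-bubble search follows. The exact three criteria are not recoverable from the text above, so the sketch substitutes two hypothetical ones: the gap between consecutive in-bubble contour points must lie within `[lo*r, hi*r]`, and the midpoint of the pair must be closer to the reference point than the COP is. All numeric defaults are placeholders (1.2 is the factor proposed in [2]); returning `None` leaves the fallback of the later termination cases to the caller.

```python
import numpy as np

def bubble_search(contour, cop, r, ref, start=1.2, step=0.05, stop=2.0, lo=1.0, hi=2.0):
    """Sketch of a wrist-point search with an expanding bubble.

    contour           : ordered (row, col) contour points of the hand region
    cop, r            : center of palm and its inscribed-bubble radius
    ref               : reference point on the hand penetration edge
    start, step, stop : bubble radius as multiples of r (hypothetical values)
    lo, hi            : allowed wrist width as multiples of r (hypothetical values)
    """
    pts = np.asarray(contour, dtype=float)
    cop, ref = np.asarray(cop, float), np.asarray(ref, float)
    d_cop = np.linalg.norm(pts - cop, axis=1)
    cop_to_ref = np.linalg.norm(ref - cop)
    n_steps = int(round((stop - start) / step))
    for k in range(n_steps + 1):
        radius = (start + k * step) * r
        idx = np.flatnonzero(d_cop <= radius)      # in-bubble points, in contour order
        for a, b in zip(idx, np.roll(idx, -1)):    # consecutive in-bubble points (cyclic)
            gap = np.linalg.norm(pts[a] - pts[b])
            mid = (pts[a] + pts[b]) / 2.0
            # hypothetical criteria: plausible wrist width, gap faces the reference point
            if lo * r <= gap <= hi * r and np.linalg.norm(mid - ref) < cop_to_ref:
                return contour[a], contour[b]
    return None                                    # caller applies its fallback
```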

Algorithm 2 terminates if: (1) are found; (2) is reached; or (3) it is determined, before searching for and , that . In cases 2 and 3, are selected as and . Once the WP are identified, all pixels on the side of the line through the two wrist points opposite the COP are erased (set to 0) to eliminate the forearm from the hand region. Finally, the region is cropped to its tightest bounding box, padded by 5 pixels, and resized to . At this point, the hand region has been standardized as a CNN model input.
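The final segmentation step can be sketched as follows: each pixel's side of the wrist line is given by the sign of a 2-D cross product, pixels on the side opposite the COP are erased, and the result is cropped, padded by 5 pixels, and resized. Nearest-neighbor resizing and the output size `size=64` are assumed placeholders; `cv2.resize` would be the usual choice in practice, and the function names are hypothetical.

```python
import numpy as np

def remove_forearm(mask, wp1, wp2, cop):
    """Zero every pixel on the opposite side of the line wp1-wp2 from the COP."""
    rows, cols = np.indices(mask.shape)
    (r1, c1), (r2, c2) = wp1, wp2
    # sign of the 2-D cross product tells which side of the wrist line a pixel is on
    side = (r2 - r1) * (cols - c1) - (c2 - c1) * (rows - r1)
    cop_side = (r2 - r1) * (cop[1] - c1) - (c2 - c1) * (cop[0] - r1)
    out = mask.copy()
    out[side * cop_side < 0] = 0                 # pixels on the line itself are kept
    return out

def standardize(mask, pad=5, size=64):
    """Crop to the tightest box, pad by `pad` pixels, and nearest-neighbor resize
    to size x size (size=64 is an assumed placeholder)."""
    ys, xs = np.nonzero(mask)
    crop = mask[ys.min():ys.max() + 1, xs.min():xs.max() + 1]
    crop = np.pad(crop, pad)
    ri = np.arange(size) * crop.shape[0] // size
    ci = np.arange(size) * crop.shape[1] // size
    return crop[np.ix_(ri, ci)]
```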

2.5 Gesture Classification

A CNN model was trained to identify 10 pre-defined hand gestures. The architecture and the training parameters are summarized at the bottom of Figure 1. The model was trained using a categorical cross-entropy loss function and the Adam optimizer with a variable learning rate.

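Table 2: Cost of finding the optimal number of centroids with silhouette analysis, for different ranges of candidate centroids (rows) and grid sizes (columns).

| Centroids | | | | |
|---|---|---|---|---|
| 2 only | 0.0128 | 0.0161 | 0.0176 | 0.0235 |
| 2 to 3 | 0.0236 | 0.0298 | 0.0365 | 0.0397 |
| 2 to 4 | 0.0357 | 0.0451 | 0.0544 | 0.0576 |
| 2 to 5 | 0.0486 | 0.0624 | 0.0749 | 0.0822 |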

3 Experiments and Results

All experiments in this paper were performed on a desktop with 32 GB of RAM and an AMD Ryzen 7 3700X 8-core processor at 3.59 GHz, running 64-bit Windows 10 (build 19043.1165). All CNN model training was performed on Google Colaboratory using Google-hosted GPUs.

For hand region detection, the number of centroids and the granularity of the grid used in the silhouette analysis affect the cost of clustering. We measured the cost of finding the optimal number of centroids with silhouette analysis for different grid sizes on a single hand gesture over 7 frames. To keep the cost low while still correctly isolating multiple hands, the grid size should be fixed to a size of and the number of centroids limited to at most 3. This limits the number of hands that can be detected in a single image to 3.

in bubble growth is selected to strike a balance between cost and accuracy, and Table 3 justifies the selection of . Experimentation has shown that affects both the convergence speed and the accuracy of the center of palm: larger values of cause Algorithm 1 to terminate prematurely, while lower values increase the computation cost. While worked best for us, selecting an optimal value is left to future work.

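Table 3: Percentage of bad bubbles and average cost of bubble growth for different numbers of points.

| Points | Bad Bubbles | Avg Cost |
|---|---|---|
| 10 | 95.9% | 0.0041 |
| 20 | 32.3% | 0.0058 |
| 30 | 6.9% | 0.0085 |
| 40 | 5.2% | 0.0112 |
| 50 | 1.7% | 0.0141 |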

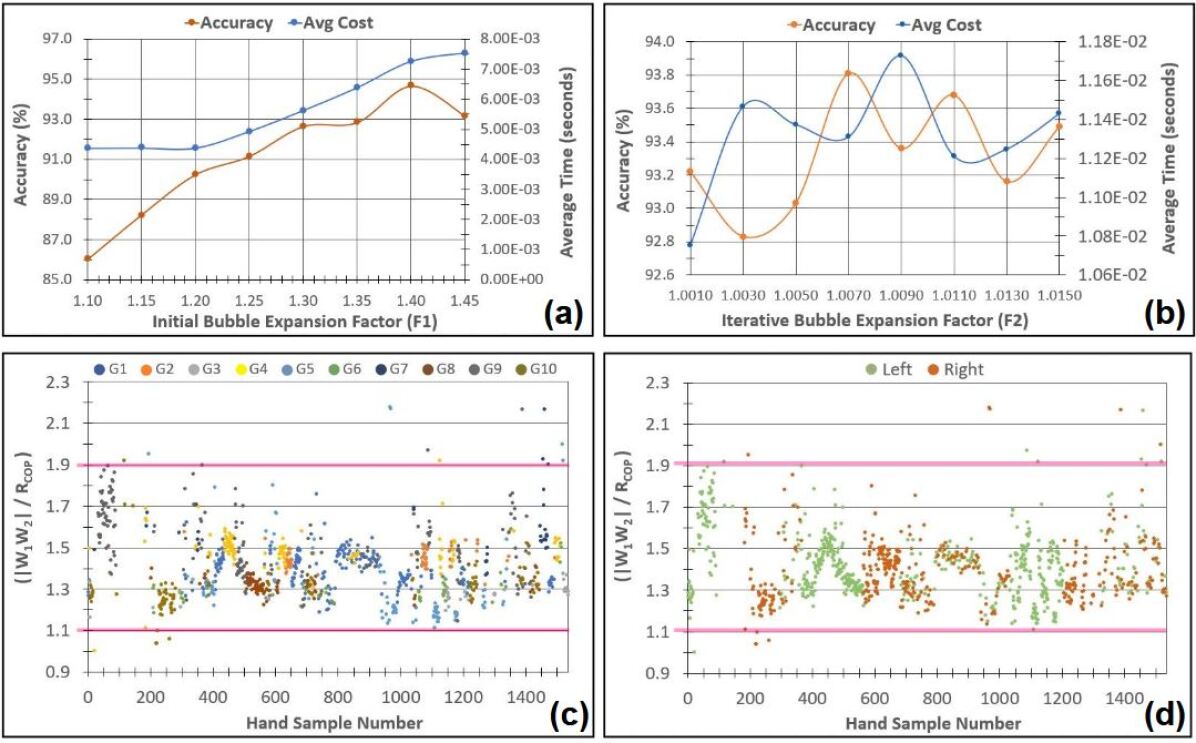

A few experiments were performed to find optimal values for used in Algorithm 2, using 1000 images from 3 different users. A value of 1.2 was proposed for the factor in [2], but we propose after a study of the average accuracy and cost of Algorithm 2 with held constant. is varied between in increments of 0.05 (Figure 5 (a)). Similarly, is varied between in increments of 0.002 with held constant (Figure 5 (b)). The values of and are determined by plotting the values of divided by and selecting factors that bound 99% of all plotted values (Figure 5 (c-d)). The value of is chosen to be small enough to minimize calculation time and large enough to allow searching a significant number of hand samples.
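Selecting factors that bound 99% of the plotted ratios can be sketched as a percentile computation. Splitting the excluded 1% evenly between the two tails is an assumption, as is the function name.

```python
import numpy as np

def ratio_bounds(wp_distances, radii, coverage=0.99):
    """Return (lo, hi) factors such that `coverage` of the distance/radius ratios
    fall inside [lo, hi]; the excluded mass is assumed to be split evenly."""
    ratios = np.asarray(wp_distances, float) / np.asarray(radii, float)
    tail = (1.0 - coverage) / 2.0 * 100.0          # percent excluded on each side
    lo, hi = np.percentile(ratios, [tail, 100.0 - tail])
    return float(lo), float(hi)
```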

Bubble Growth and Bubble Search were tested using 1532 hand samples obtained from all 1,217 thermal images. The overall hand detection success rate of is on par with the to reported in [7] and is detailed in Table 4. Success is defined for Algorithm 1 as: (a) lies in the palm region; (b) ; (c) the bubble contains black pixels. Success is defined for Algorithm 2 as: (a) and are between and ; (b) segments the hand and forearm regions as expected.

Table 4: Success rates and per-hand running times (sec/hand) of Algorithm 1 (bubble growth) and Algorithm 2 (bubble search), over all samples and per gesture.

| Gesture | Samples | Alg. 1 Success | Alg. 2 Success | Alg. 1 Avg | Alg. 1 Min | Alg. 1 Max | Alg. 2 Avg | Alg. 2 Min | Alg. 2 Max |
|---|---|---|---|---|---|---|---|---|---|
| All | 1532 | 95.64% | 96.22% | 0.012 | 0.001 | 0.120 | 0.007 | 2E-05 | 0.090 |
| G1 | 325 | 96.92% | 99.69% | 0.009 | 0.002 | 0.030 | 0.004 | 0.003 | 0.060 |
| G2 | 64 | 98.48% | 98.48% | 0.014 | 0.001 | 0.030 | 0.006 | 0.001 | 0.040 |
| G3 | 67 | 98.51% | 94.03% | 0.010 | 0.003 | 0.030 | 0.008 | 0.005 | 0.070 |
| G4 | 153 | 100.0% | 100.0% | 0.012 | 0.005 | 0.040 | 0.004 | 2E-05 | 0.050 |
| G5 | 182 | 87.36% | 92.86% | 0.012 | 0.003 | 0.030 | 0.018 | 0.005 | 0.090 |
| G6 | 71 | 97.22% | 98.61% | 0.010 | 0.004 | 0.027 | 0.005 | 0.004 | 0.065 |
| G7 | 55 | 98.18% | 94.55% | 0.014 | 0.004 | 0.030 | 0.005 | 0.003 | 0.065 |
| G8 | 127 | 96.06% | 99.21% | 0.010 | 0.003 | 0.030 | 0.004 | 0.005 | 0.013 |
| G9 | 239 | 92.86% | 99.58% | 0.016 | 0.004 | 0.115 | 0.005 | 0.001 | 0.090 |
| G10 | 249 | 97.21% | 86.85% | 0.010 | 0.004 | 0.120 | 0.011 | 0.005 | 0.080 |

The gesture classification model architecture was based on the LeNet-1 architecture. It was trained solely with the training data collected for this study and achieved a training accuracy of . As seen in Table 5, this model achieves an overall testing accuracy of 96.9% on our testing set. We also tested our model on finger-digit-05; results are limited to 5 gestures because these were the only gestures matching those our model was trained on. This is slightly better than reported in [2], which also used a model to classify 10 different gestures, and better than the recognition accuracy reported in [15].

We used data augmentation to randomly rotate hand samples between and , fitting the model to hands at any orientation. Because our process standardizes hand size when resizing the image, the model is zoom-agnostic. We experimented with batch sizes between 16 and 32 [9] and a variable learning rate between 1E-06 and 1E-04 to obtain the best training results.
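Random rotation augmentation can be sketched as a nearest-neighbor inverse mapping about the image center. The angle range used in the experiments is not given above, so `max_deg` is a hypothetical placeholder; in practice a library routine (e.g., OpenCV's `cv2.getRotationMatrix2D` with `cv2.warpAffine`) would typically be used.

```python
import numpy as np

def rotate_mask(mask, angle_deg):
    """Rotate a 2-D array about its center (nearest neighbor, same output size);
    positive angles rotate counterclockwise, matching np.rot90 for 90 degrees."""
    h, w = mask.shape
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    t = np.deg2rad(angle_deg)
    rows, cols = np.indices((h, w))
    dy, dx = rows - cy, cols - cx
    # inverse mapping: for each output pixel, find the source pixel it came from
    src_r = np.rint(cy + np.cos(t) * dy + np.sin(t) * dx).astype(int)
    src_c = np.rint(cx - np.sin(t) * dy + np.cos(t) * dx).astype(int)
    valid = (src_r >= 0) & (src_r < h) & (src_c >= 0) & (src_c < w)
    out = np.zeros_like(mask)                  # pixels rotated in from outside stay 0
    out[valid] = mask[src_r[valid], src_c[valid]]
    return out

def random_rotate(mask, rng, max_deg=30.0):
    """Rotate by an angle drawn uniformly from [-max_deg, max_deg] (hypothetical range)."""
    return rotate_mask(mask, rng.uniform(-max_deg, max_deg))
```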

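Table 5: Gesture classification accuracy (%), overall and per gesture.

| Data Set | Overall | G1 | G2 | G3 | G4 | G5 | G6 | G7 | G8 | G9 | G10 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Our Testing Set Accuracy (%) | 96.9 | 97.1 | 98.3 | 97.1 | 96.7 | 99.1 | 90.0 | 94.1 | 92.4 | 97.8 | 97.7 |
| finger-digit-05 Accuracy (%) | 97.3 | 96.8 | 90.4 | - | 99.6 | 99.9 | - | - | - | 100 | - |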

4 Discussion and Conclusion

This paper proposes a real-time end-to-end system that detects hand gestures from the video feed of a thermal camera. In the process, it introduces two novel methods, bubble growth for center of palm detection and bubble search for wrist point detection, which are fast, accurate, and invariant to hand shape, hand orientation, arm length, and hand size (distance from the camera).

To maintain real-time processing speed, our method detects hand gestures in at most 3 hand regions simultaneously. This limit could be relaxed with more processing power and distributed computing, or by using a heuristic to approximate the optimal number of centroids for clustering instead of testing a set of pre-defined centroid counts with silhouette analysis.

Finally, we experimentally validated that our algorithm is user-agnostic (i.e., it can identify the hand gestures of users not included in the training samples). Our system is highly accurate in detecting the center of palm, wrist points, and hand gestures from hand masks produced from thermal images.

Although many hand gesture detection algorithms exist for color video data, only a few techniques address the problem with thermal data. Our methods show that, despite its limited features, thermal video is a viable medium for capturing hand gestures for accurate gesture recognition. Moreover, future research can combine thermal data with other modalities (e.g., RGB, depth) for an even more robust hand gesture detection system.

References

- [1] Bellarbi, A., Benbelkacem, S., Zenati, N., Belhocine, M.: Hand gesture interaction using color-based method for tabletop interfaces. In: 2011 IEEE 7th International Symposium on Intelligent Signal Processing. pp. 1–6 (09 2011). https://doi.org/10.1109/WISP.2011.6051717

- [2] Chen, Z.h., Kim, J.t., Liang, J., Zhang, J., Yuan, Y.b.: Real-time hand gesture recognition using finger segmentation. The Scientific World Journal 2014, 267872 (May 2014). https://doi.org/10.1155/2014/267872

- [3] Dardas, N.H., Georganas, N.D.: Real-time hand gesture detection and recognition using bag-of-features and support vector machine techniques. IEEE Transactions on Instrumentation and Measurement 60(11) (2011). https://doi.org/10.1109/TIM.2011.2161140

- [4] Gately, J., Liang, Y., Wright, M.K., Banerjee, N.K., Banerjee, S., Dey, S.: Automatic material classification using thermal finger impression. In: MultiMedia Modeling. pp. 239–250. Springer International Publishing, Cham (2020)

- [5] Grzejszczak, T., Kawulok, M., Galuszka, A.: Hand landmarks detection and localization in color images. Multimedia Tools and Applications 75, 16363–16387 (12 2015). https://doi.org/10.1007/s11042-015-2934-5

- [6] Ibarguren, A., Maurtua, I., Sierra, B.: Layered architecture for real time sign recognition: Hand gesture and movement. Engineering Applications of Artificial Intelligence 23(7), 1216–1228 (2010). https://doi.org/10.1016/j.engappai.2010.06.001

- [7] Islam, M.M., Siddiqua, S., Afnan, J.: Real time hand gesture recognition using different algorithms based on American Sign Language. In: 2017 IEEE International Conference on Imaging, Vision & Pattern Recognition (icIVPR). pp. 1–6 (2017). https://doi.org/10.1109/ICIVPR.2017.7890854

- [8] Kim, S., Ban, Y., Lee, S.: Tracking and classification of in-air hand gesture based on thermal guided joint filter. Sensors 17(1), 166 (Jan 2017). https://doi.org/10.3390/s17010166

- [9] Meng, L., Li, R.: An attention-enhanced multi-scale and dual sign language recognition network based on a graph convolution network. Sensors 21(4) (2021). https://doi.org/10.3390/s21041120, https://www.mdpi.com/1424-8220/21/4/1120

- [10] O’Shea, R.: Finger digits 0-5 (11 2019), https://www.kaggle.com/roshea6/finger-digits-05

- [11] Rousseeuw, P.J.: Silhouettes: A graphical aid to the interpretation and validation of cluster analysis. Journal of Computational and Applied Mathematics 20, 53–65 (1987). https://doi.org/10.1016/0377-0427(87)90125-7, https://www.sciencedirect.com/science/article/pii/0377042787901257

- [12] Sato, Y., Kobayashi, Y., Koike, H.: Fast tracking of hands and fingertips in infrared images for augmented desk interface. In: Proceedings Fourth IEEE International Conference on Automatic Face and Gesture Recognition (Cat. No. PR00580). pp. 462–467 (2000). https://doi.org/10.1109/AFGR.2000.840675

- [13] Song, E., Lee, H., Choi, J., Lee, S.: Ahd: Thermal image-based adaptive hand detection for enhanced tracking system. IEEE Access 6, 12156–12166 (2018). https://doi.org/10.1109/ACCESS.2018.2810951

- [14] Sridhar, S., Mueller, F., Oulasvirta, A., Theobalt, C.: Fast and robust hand tracking using detection-guided optimization. In: 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). pp. 3213–3221 (2015). https://doi.org/10.1109/CVPR.2015.7298941

- [15] Stergiopoulou, E., Papamarkos, N.: Hand gesture recognition using a neural network shape fitting technique. Engineering Applications of Artificial Intelligence 22, 1141–1158 (2009). https://doi.org/10.1016/j.engappai.2009.03.008

- [16] Strutz, T.: The distance transform and its computation (2021), https://arxiv.org/abs/2106.03503

- [17] Tompson, J., Stein, M., Lecun, Y., Perlin, K.: Real-time continuous pose recovery of human hands using convolutional networks. ACM Transactions on Graphics 33 (08 2014). https://doi.org/10.1145/2629500

- [18] Wu, D., Pigou, L., Kindermans, P.J., Le, N., Shao, L., Dambre, J., Odobez, J.M.: Deep dynamic neural networks for multimodal gesture segmentation and recognition. IEEE Transactions on Pattern Analysis and Machine Intelligence 38, 1–1 (03 2016). https://doi.org/10.1109/TPAMI.2016.2537340

- [19] Yao, Z., Pan, Z., Xu, S.: Wrist recognition and the center of the palm estimation based on depth camera. In: 2013 International Conference on Virtual Reality and Visualization. pp. 100–105 (September 2013). https://doi.org/10.1109/ICVRV.2013.24

- [20] Yeo, H.S., Lee, B.G., Lim, H.: Hand tracking and gesture recognition system for human-computer interaction using low-cost hardware. Multimedia Tools and Applications 74 (04 2013). https://doi.org/10.1007/s11042-013-1501-1

- [21] Zhou, Y., Jiang, G., Lin, Y.: A novel finger and hand pose estimation technique for real-time hand gesture recognition. Pattern Recognition 49, 102–114 (2016). https://doi.org/10.1016/j.patcog.2015.07.014