RCLC: ROI-based joint conventional and learning video compression

Abstract

COVID-19 leads to the high demand for remote interactive systems ever seen. One of the key elements of these systems is video streaming, which requires a very high network bandwidth due to its specific real-time demand, especially with high-resolution video. Existing video compression methods are struggling in the trade-off between the video quality and the speed requirement. Addressed that the background information rarely changes in most remote meeting cases, we introduce a Region-Of-Interests (ROI) based video compression framework (named RCLC) that leverages the cutting-edge learning-based and conventional technologies. In RCLC, each coming frame is marked as background-updating (BU) or ROI-updating (RU) frame. By applying the conventional video codec, the BU frame is compressed with low-quality and high-compression, while the ROI from RU-frame is compressed with high-quality and low-compression. The learning-based methods are applied to detect the ROI, blend background-ROI, and enhance video quality. The experimental results show that our RCLC can reduce up to 32.55% BD-rate for the ROI region compared to H.265 video codec under a similar compression time with 1080p resolution.

1 Introduction

With the spread of COVID-19, online meetings have become a must globally. Therefore, video streaming, which is the main instrument for a remote meeting system, is facing a huge demand for the video compression method improvement. Especially for high-resolution streaming video, existing methods are standing still because of the trade-off between the reconstruction quality and the specific real-time demand. To overcome that problem, effective video compression with a specific configuration is in need.

Current conventional video codecs usually perform a uniform compression for all-region in a video frame, however, in a remote meeting system, the background is usually pointless that most existing systems provide a blurring filter to hide it. Therefore, a Region-Of-Interests-based (ROI) codec, where the non-interest area will be compressed with a coarser quality than the ROI, is suitable for this problem. R. Delhaye et al. [8] introduced a QP-selection scheme for ROI on H.265 codec [11], this system extracts ROI based on a specific Kinect skeleton detection, which cannot be available for all normal users. L. Zhonglei et al. [16] announced a faster codec for specific airport cameras by sending the specific uniform information of background instead of the whole background, it can reduce a lot of bitrate, however, it results in a very bad visual quality. Then, L. Wu et al. [15] introduced a learning-based ROI compression framework that dynamically chooses the frame to update as background or do the background interpolation instead, however, this framework cannot perform in a streaming application manner.

To overcome the drawback of existing methods, in this work, we introduce an ROI-based join Conventional and Learning Compression framework (RCLC) that can support a real-time video streaming demand with 1080p resolution on a normal PC architecture. For the coming frame, we mark it as a background-updating (BU) or ROI-updating (RU) frame. BU frame is compressed with low quality while the ROI from RU-frame is compressed with high quality by the conventional codec to satisfy the real-time demand. Then, using learning-based methods, we can reconstruct the full RU frame on the decoder-end based on the BU-frame or previous frame. The experimental results show that our RCLC can reduce up to 32.55% bitrate for the ROI region compared to H.265 video codec while supporting a real-time video streaming demand with 1080p resolution on a normal PC architecture. Our contribution is mainly three folds:

-

•

We propose an ROI-based join Conventional and Learning Compression framework (RCLC). It can be combined and extended with any existing/future learning-based methods and video codec to further improve its performance and adapt to any ROI demand.

-

•

Based on our framework, we experiment with several settings to further exploit its demand adaption ability.

-

•

Moreover, we propose two 1080p ROI-based testing sets with ROI is defined as a person. The first set is an online-meeting-related set, where the camera position is fixed indoor while the second one has some changes in camera angles over time and mostly outdoor scenes.

2 Related work

Existing conventional video codecs are well-known for their hand-crafted artifacts. For example, in the case of real-time video streaming over a narrow network bandwidth, the typical block-based video compression codecs such as H.264/AVC [14] and H.265/HEVC [11] get involve in those artifacts because of the large quantization parameter and coding unit size. Moreover, since those codecs usually perform the uniform compression, in which all regions have the same assigned parameters, the quality of Region-Of-Interests (ROI) is similar to the rest. Addressed this problem, [16] have sent the meta-data instead of pixels values for the background while compressing the ROI with high quality. However, their final reconstructed frame is much like machine-generated with many visible blending edges since that meta-data is not enough to synthesize the ROI and the background differences. In our work, different from [16], we only send the meta-data that indicates the ROI region in the frame while repeatedly update the background information and using the learning-based methods to avoid the blending edges in the reconstructed frame.

Recently, several learning-based methods have been applied to improve ROI-based compression. [8] used a specific Kinect device to extract the human skeleton information and do the ROI compression while assigning the quantization factors of H.265 according to the detected area. [7][9] performed learning-based end-to-end ROI compression that learns and sends the ROI allocated map to the decoder. Meanwhile, [15] introduced a foreground-background parallel compression for surveillance video, [15] can remove a sufficient amount of bitrate for background compression by performing the background interpolation between two assigned background templates. However, this updating approach is not suitable to apply to video streaming tasks. Also, [7][9] and [15] require a lot of computation to perform the compression. In our work, all components and network has been carefully chosen to avoid the over complexity problem.

In collaborating the conventional and learning-based methods, we propose an ROI-based joint Conventional and Learning Compression framework (RCLC) that leverages the unused GPU with learning-based methods to improve the performance of ROI-based conventional compression. By defining ROI as a person, our RCLC uses the conventional codec to compress the non-important background as fast as possible while compressing ROI with high quality. Whereas, the learning-based methods are used as the ROI detector, background-ROI blender, and enhancing methods with a specific configuration.

3 The proposed RCLC framework

3.1 Overview of RCLC framework

As shown in Figure 1, for the incoming frame, we decide whether it is background-updating (BU) or ROI-updating (RU) frame based on our Group-Of-Frame (GOF) definition (see Section 3.2). For BU, the whole frame is compressed by a codec that can satisfy the realtime-demand in collating to the hardware. Because BU’s purpose is to provide the background information, which is not much important, the codec is configured with a high quantization parameter to get very fast compression speed and low-bitrate.

For RU, we have to recalculate the compressed area since we reused the previous background (see Section 3.2 and 4.1), only the new calculated ROI area is compressed with a low quantization parameter, although ROI area is usually much smaller than the whole frame, we still need to carefully choose the parameter in considering about compression speed. On the decoder side, we receive the information of the whole frame for BU and the ROI area for RU along with the position information. While the ROI-area of BU is enhanced, based on the received position information, ROI in RU is blended into previous reconstructed BU or previous frame and an edge smoothing network will filter-out the blended edges (see Section 4.2).

3.2 GOF and RU-RU

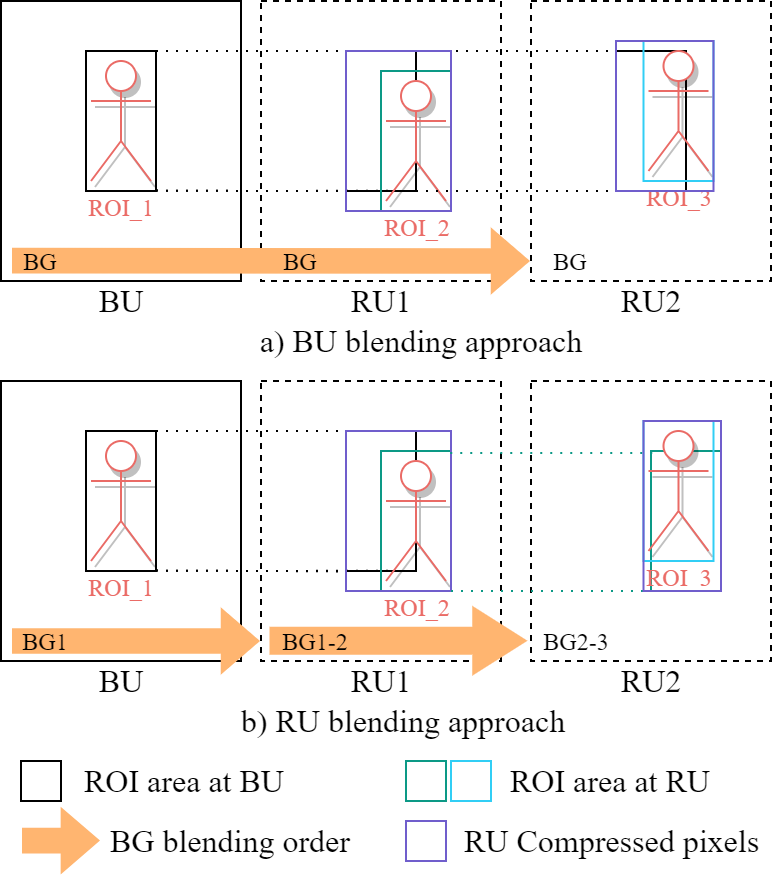

Since background information is not so important in an online meeting, we need to limit the number of bits used for background transfers. Therefore, in our framework, Group-Of-Frame is defined as the period that background is updated, which means the frame at the start of GOF is chosen as BU (see Figure 2). This definition is conducted based on our consideration of normal online meeting video, where the camera angle is rarely changed, so the background remains similar in a close range of visual similarity.

In each GOF, BU provides the background information for all the following RU. Whereas, the compressed ROI of RU is calculated not only based on the RU-frame but also based on the ROI position in BU or the previous frame (see Section 4.1). This manner is to avoid missing information when the ROI moving over frames.

| Moving camera set | |||

| Sequence | BD-rate (%) | Sequence | BD-rate (%) |

| Model_36850 | -15.33 | 180301_07_A_HongKongIsland_11 | -70.89 |

| 190312_24_ParkVillaBorghese_UHD_002 | -55.78 | Woman_23644 | -42.04 |

| 180301_15_A_KowloonPark_08 | -21.72 | 190111_16_MuayThaiTraining_UHD_01 | -16.78 |

| 180626_28_BongeunsaTemple_02 | -66.54 | Coffee_20564 | -38.71 |

| 190111_16_MuayThaiTraining_UHD_06 | -34.34 | TrainingApparatus_1087 | -34.31 |

| 200323_Coronavirus_01_4k_014 | -25.77 | Doctor_22704 | -2.60 |

| Couple_19706 | -8.96 | 190111_16_MuayThaiTraining_UHD_12 | -59.81 |

| Average | -35.26 | ||

| Fixed camera set | |||

| Sequence | BD-rate (%) | Sequence | BD-rate (%) |

| BestCameraQualityforZoom_Trim | -24.01 | LiveStreamingOrVideoConferencing_Trim | -23.64 |

| ChonMauLieu_Trim | -40.80 | NobodyKnew_Trim | -24.07 |

| DuaLeo_Trim | -34.40 | Tan1Cu_Trim | -22.77 |

| DuyLuan_Trim | -48.61 | VinhVatVo_Trim | -35.21 |

| DuyTham_Trim | -29.91 | WebcamAndMicrophone_Trim | -42.13 |

| Average | -32.55 | ||

4 Specific update interval

4.1 ROI calculation

To avoid specific hardware demand, we use the YOLO_v4 method [6] to perform the ROI detection task. YOLO_v4 can do the detection by input a normal RGB camera frame. To meet the real-time with limited hardware resources, the ‘tiny’ version [13], which has a smaller weight-load, is used. Here, we define the ROI as one person. For BU, the detected area is formed to two points of bounding boxes area, this information is used at the decoder to enhanced the ROI-area in BU. Furthermore, in the same GOF, RUs also use this information to recalculate their ROIs.

For RU, after performing normal detection as BU, since the object or ROI can move over time, a direct blending of ROI will lead to the missing pixels information. Therefore, by calculating the difference with the BU ROI bounding box in the same GOF, we can include the missing pixels into the new ROI (BU_blending in Figure 3.a). Furthermore, for large GOF size, where the number of missing pixels between the BU and RU increases due to the large movement of ROI, we introduce another updating rule. Instead of using BU as the background provider, we calculate the compressed area of current RU based on the previous frame, therefore, the distance of movement and the number of missing pixels are reduced, especially on very large GOF size (RU_blending in Figure 3.b).

4.2 BU-RU enhancement

Since there is a gap in the quantization parameter between BU and RU frames, the smoothness of visual quality over the sequences may be affected in the case of the limited bandwidth, at which we have to increase that gap. Hence, we have to perform an enhancement step for BU to catch up with the RU visual quality. However, since the background is not useful information, we only enhance the ROI area of BU on the decoder-end based on the received bounding box information.

While BU facing the quality gap with RU, the ROI inside of RU also facing that gap with the blended background. The blending approach usually leads to noticeable edges at boundary pixels. Therefore, we have to smooth those edges by inputting the decoded-ROI of RU plus the neighboring pixels of the blended background to an edge smoother.

We try to avoid using two separated model-weight sets for enhancing and edge smoothing because of the hardware on normal user PC consideration. Therefore, we use the deep recursive residual network [12] as our enhancement model. This model is suitable for small GPU consumption with its recursive approach while easy to perform fine-tuning and avoid the overfitting problem. After training this network on the edge smoothing task, we fine-tune the network by squeezing the recursive residual block and input layer while training on the quality enhancement task (see Figure 4).

| Sequence | bGOF4 | rGOF4 | bGOF8 | rGOF8 | bOne_BU | rOne_BU |

|---|---|---|---|---|---|---|

| BestCameraQualityforZoom_Trim | -31.00 | -31.43 | -34.31 | -35.33 | -39.87 | -39.20 |

| ChonMauLieu_Trim | -49.60 | -49.74 | -53.42 | -53.77 | -56.78 | -57.27 |

| DuaLeo_Trim | -36.21 | -36.71 | -42.94 | -43.72 | -45.51 | -46.25 |

| DuyLuan_Trim | -57.49 | -57.70 | -60.98 | -61.36 | -62.55 | -64.40 |

| DuyTham_Trim | -37.33 | -37.41 | -40.51 | -40.95 | -40.94 | -44.22 |

| LiveStreamingOrVideoConferencing_Trim | -21.50 | -21.87 | -23.97 | -24.57 | -24.15 | -27.43 |

| NobodyKnew_Trim | -29.58 | -29.95 | -32.66 | -33.31 | -36.47 | -36.79 |

| Tan1Cu_Trim | -23.25 | -23.56 | -23.69 | -24.16 | -25.40 | -25.07 |

| VinhVatVo_Trim | -43.40 | -44.22 | -46.70 | -48.16 | -46.93 | -51.74 |

| WebcamAndMicrophone_Trim | -52.42 | -54.77 | -56.62 | -59.59 | -51.34 | -63.68 |

| Average | -38.18 | -38.74 | -41.58 | -42.49 | -42.99 | -45.60 |

4.3 Time constraint calculation

Based on our framework, the computation time of the system will be calculated as follows:

-

•

For BU: Encoder time = max(Detection, Compression); Decoder time = Decompression + ROI Enhancement.

-

•

For RU: Encoder time = max(Detection) + Re-ROI calculation + Compression; Decoder time = Decompression + ROI Blending + Edge smoothing.

By setting the H.265 codec with ultra-fast preset and all_intra configuration, our system can get framerate higher or equal to 60fps for all components on 1080p video.

5 Results and Comparison

5.1 Experimental Setting

Our experiments were conducted on an NVIDIA RTX 2080Ti GPU while an Intel Core i7-8700K CPU was used to perform non-GPU tasks. For training our enhancement/edge smoothing network, we collected 20 videos with CIF and 720p resolution from [3], all frames were extracted and used. We used compressed frames as input for the enhancement task. For the smoothing task, the ground-truth ROI was blended to the compressed background to form a combination input. All frames were ROI-centered cropped with the size of 512x512 for a fixed training size. We implemented our proposal using the PyTorch[1] framework. We used Adam[10] optimizer and started the edge smoothing task learning with a learning rate of 1e-04, then terminated the training after 20 epochs. Next, we perform the enhancement task training with layers freezing (see Section 4.2) and learning rate of 1e-05 then terminated after 10 epochs.

For testing, since there is no available online meeting related non-compressed dataset with 1080p resolution, we conducted two new test sets. The first set is for the online meeting scheme, where the camera angle is fixed (fixed camera set/tech-reviewer set) and the second one has the viewpoint that changes over time (moving camera set). We collected 24 videos with 4K resolution from YouTube[4] and Videvo[2], then down-scaled them to 1080p using a bicubic operator to remove the compression artifacts. Especially, for the fixed camera set, because there is almost no available 4K online meeting video, we collected the sequences from tech-reviewers, which also satisfies the scheme requirement. Figure 5 shows a brief view of our proposed test sets. In our experiments, we use PSNR and Bjontegaard-delta (BD)-rate [5] metrics to evaluate our results.

5.2 Comparison

We compare our RCLC with H.265 codec[11] over two proposed test sets. Note that, we also use H.265 as our anchor in this comparison. For H.265, QPs equal to 32, 37, 42, 47 which ensure the 60fps compression. For our RCLC, QPs for ROI are 22, 27, 32, 37, while QPs for background are 32, 37 (for only ROI QPs = 22, 27), 42, 47. Except for the Blending approach selection comparison, we use the BU_blending approach (see Section 4.1) for all comparisons because it can cover the smallest GOF size, which is suitable for moving camera cases.

Bitrate reduction compare to H.265. As shown in Table 1, we first compare our RCLC using our basic GOF = 2 with H.265 codec on the ROI are of two proposed test sets. We can see that our RCLC got better results for ROI quality than H.265. In particular, our RCLC can gain up to 35.26% BD-rate reduction over H.265 on the moving camera set and 32.55% on the other. In the best case, our RCLC can reduce up to 70.89% RD-rate and 2.6% for the worst case of Doctor_22704 sequence, where the ROI area is bigger or equal to two-third of frame size. It is worth mentioning that our RCLC can achieve this result while keeping the compressing speed intact from H.265 by leveraging the unused GPU hardware.

GOF selection. Figure 6 shows several RD curves of our RCLC over different GOF selections on the fixed camera set. We can see that when the camera angle is fixed, by increasing the GOF, RCLC can get better performance. And in an ideal situation, where the camera is fixed and the person ratio does not change much during the meeting, we can set the GOF equal to full sequence (one_BU), which means we only need to send the background only one time.

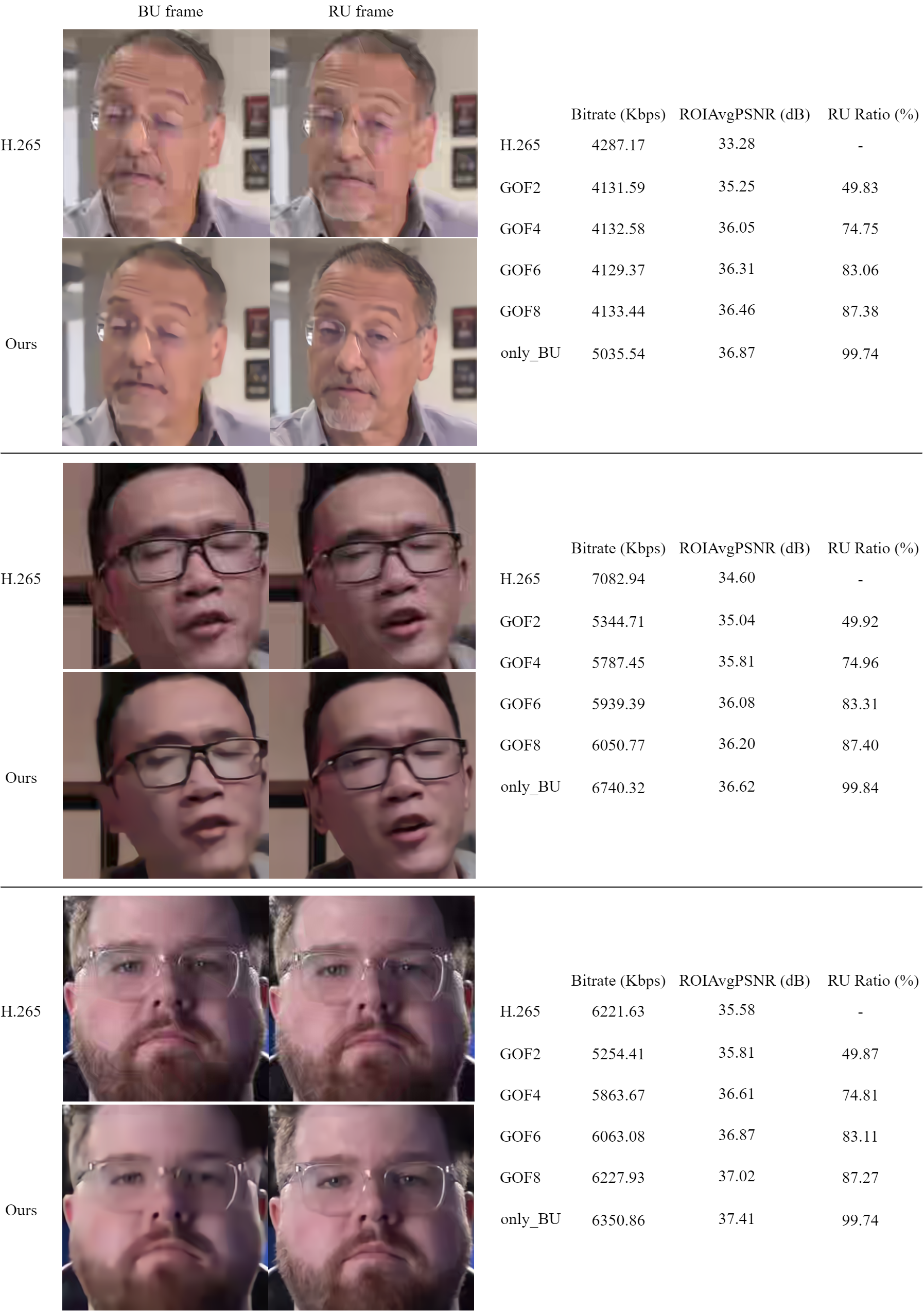

Visual results. We compare several compressed frames with H.265 in Figure 7 . When looking into the cropped ROI part, we can see a lot of noise and block artifacts from H.265 compression. While with smaller transferring bits, our RCLC can get higher-quality visual in texture and smoothness for the ROI with all GOF. In our RCLC framework, BU may have worst quality than RU, however, by increasing the GOF’s size, the RU ratio also increases which leads to the higher average PSNR value for the ROI area along with the sequence’s frames. Hence, in the ideal case, where only the first frame is BU, all remained frames will have much better visual quality compared to H.265.

Blending approach selection. We further exam two blending approaches in Section 4.1. The results of BD-rate reduction over GOFs = 4, 8, and one_BU on fixed camera set are tabulated in Table 2. Here, b_ and r_ denote BU-blending and RU-blending approaches, respectively. We can see that the RU-blending approach gets better performance than the BU-blending approach for most cases. By increasing the GOF size, the performance distance increases from 0.56% at GOF4 to 0.91% at GOF8 and 2.61% at the ideal one_BU case. The performance gain comes mainly from the smaller compressed area because of the minimum motion between two consecutive frames. This result demonstrates that the RU-blending approach is much suitable for stable sequences which have fixed camera angle over time.

6 Conclusion

This work presents a light ROI-based joint conventional and learning compression framework for video streaming. With our idea of interval background updating and reusing, we can reduce a sufficient amount of background transferring bit. Furthermore, our specific training procedure also reduces the required GPU storage twice for storing the learning-based enhancement network. Experiment results show that our RCLC outperforms the H.265 codec and gains up to 32.55% BD-rate reduction while having a competitive compressing speed. Moreover, our framework is flexible that can be applied to any ROI problem in the future.

References

- [1] Pytorch framework. https://pytorch.org/, 11 2019.

- [2] Videvo. https://www.videvo.net/, 3 2021.

- [3] Xiph. https://media.xiph.org/video/derf/, 3 2021.

- [4] Youtube. https://www.youtube.com/, 3 2021.

- [5] G. Bjontegaard. Calculation of average psnr differences between rd-curves. VCEG-M33, 2001.

- [6] A. Bochkovskiy, C.-Y. Wang, and H.-Y. M. Liao. Yolov4: Optimal speed and accuracy of object detection, 2020.

- [7] C. Cai, L. Chen, X. Zhang, and Z. Gao. End-to-end optimized roi image compression. IEEE Transactions on Image Processing, 29:3442–3457, 2020.

- [8] R. Delhaye, R. Noumeir, G. Kaddoum, and P. Jouvet. Compression of patient’s video for transmission over low bandwidth network. IEEE Access, 7:24029–24040, 2019.

- [9] Y. Dul, N. Zhaol, Y. Duan, and C. Han. Object-aware image compression with adversarial learning. In 2019 IEEE/CIC International Conference on Communications in China (ICCC), pages 804–808, 2019.

- [10] D. P. Kingma and J. Ba. Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980, 2014.

- [11] G. J. Sullivan, J. Ohm, W. Han, and T. Wiegand. Overview of the high efficiency video coding (hevc) standard. IEEE Transactions on Circuits and Systems for Video Technology, 22(12):1649–1668, 2012.

- [12] Y. Tai, J. Yang, and X. Liu. Image super-resolution via deep recursive residual network. In 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pages 2790–2798, 2017.

- [13] C.-Y. Wang, A. Bochkovskiy, and H.-Y. M. Liao. Scaled-yolov4: Scaling cross stage partial network, 2021.

- [14] T. Wiegand, G. J. Sullivan, G. Bjontegaard, and A. Luthra. Overview of the h.264/avc video coding standard. IEEE Transactions on Circuits and Systems for Video Technology, 13(7):560–576, 2003.

- [15] L. Wu, K. Huang, H. Shen, and L. Gao. Foreground-background parallel compression with residual encoding for surveillance video. IEEE Transactions on Circuits and Systems for Video Technology, pages 1–1, 2020.

- [16] L. Zonglei and X. Xianhong. Deep compression: A compression technology for apron surveillance video. IEEE Access, 7:129966–129974, 2019.