Rate-Distortion-Perception Theory for Semantic Communication

Abstract

Semantic communication has attracted significant interest recently due to its capability to meet the fast-growing demand for user-defined and human-oriented communication services such as holographic communications, eXtended reality (XR), and human-to-machine interactions. Unfortunately, a recent study suggests that traditional Shannon information theory, which focuses mainly on delivering semantic-agnostic symbols, is not sufficient to investigate the semantic-level perceptual quality of the messages recovered at the receiver. In this paper, we study the achievable data rate of semantic communication under symbol distortion and semantic perception constraints. Motivated by the fact that semantic information generally involves rich intrinsic knowledge that cannot always be directly observed by the encoder, we consider a semantic information source that can only be indirectly sensed by the encoder. Both the encoder and the decoder can access various types of side information that may be closely related to the user’s communication preference. We derive the rate region that characterizes the tradeoff among the data rate, symbol distortion, and semantic perception, and prove that it is achievable by a stochastic coding scheme. We derive a closed-form achievable rate for a binary semantic information source under any given distortion and perception constraints. We observe that there exist cases in which the receiver can directly infer the semantic information source while satisfying certain distortion and perception constraints, without requiring any data communication from the transmitter. Experimental results based on an image semantic source signal are presented to verify our theoretical observations.

Index Terms:

Semantic communication, rate-perception-distortion, side information.

I Introduction

Most existing communication systems are built mainly on Shannon information theory, which focuses on accurately delivering a set of semantic-agnostic symbols from one point to another. As mentioned in his classic work [1], Shannon believed that the “semantic aspects of messages” can be closely related to the users’ background and personal preference, and therefore, to develop a general theory of communication, it is critical to assume that the “semantic aspects of communication are irrelevant to the engineering problem”.

Recent developments in mobile technology have brought a surge in the demand for user-defined and human-oriented communication services such as holographic communications, eXtended reality (XR), and human-to-machine interactions, most of which focus on understanding and communicating the semantic meaning of messages based on users’ background and preference. This motivates a new communication paradigm, referred to as semantic communication, which focuses on transporting and delivering the meaning of the message.

The concept of semantic communication was first introduced by Weaver in [2], who argues that one of the key differences between the semantic communication problem and Shannon’s engineering problem is that, in the former, the main objective of the receiver is to recover a message that can “match the statistical semantic characteristics of the message” sent by the transmitter [2]. In other words, the symbol-level distortion measures commonly used in Shannon theory, e.g., Hamming distance, are not sufficient to evaluate the semantic-level distortion of messages recovered by the receiver. In fact, a recent study has suggested that minimizing the symbol-level distortion does not always maximize the human-oriented perceptual quality, which is commonly measured by the divergence between the probability distributions of messages.

To address the above challenge, in this paper, we extend Shannon’s rate-distortion theory by taking into consideration a constraint on the perceptual quality of semantic information. More specifically, we investigate the correlation between the symbol-level signal distortion and the semantic-level perception divergence as well as its impact on the achievable data rate in a semantic communication system. Motivated by the fact that semantic information generally involves rich intrinsic information and knowledge that cannot always be directly observed by the encoder, we consider a semantic information source that can only be partially or indirectly observed by the encoder. Also, both the encoder and the decoder can have access to various types of side information that can be closely related to the communication preference of the user as well as the indirect observation obtained by the encoder. We derive the achievable rate region that characterizes the tradeoff among the data rate, the symbol-level signal distortion, and the semantic-level information perception, which we refer to as the rate-distortion-perception tradeoff. We prove that there exists a stochastic coding scheme that can achieve the derived tradeoff. We provide a closed-form achievable rate solution for the binary semantic information source under various distortion and perception constraints. We observe that there exist cases in which the receiver can directly infer the semantic information under a certain distortion and perception limit without requiring any data communication from the transmitter. Experimental results based on an image-based semantic source signal are presented to verify our theoretical observations.

II Related Work

II-A Semantic Communications

Most existing works focus on directly extending Shannon’s classic information theory to investigate the semantic communication problem [3, 4, 5, 6]. For example, Carnap and Bar-Hillel replace the set of binary symbols of Shannon information theory with the set of possible models of worlds [3]. The authors of [7] define the semantic entropy by replacing the traditional Shannon entropy of messages with the entropy of models. These earlier works on semantic communication suffer from the Bar-Hillel-Carnap (BHC) paradox, which states that self-contradictory or semantically false messages often contain much more information than basic facts or common sense knowledge, even though they carry little or no meaningful information. Recent works attempt to investigate the rate-distortion theory of semantic communication with an indirect semantic source and multiple distortion constraints [8, 9, 10]. Unfortunately, all of these works only consider symbol-based distortion measures to evaluate the semantic distance, which is insufficient to characterize the perceptual quality of the semantic information recovered by the receiver. In this paper, we take into consideration the perception divergence between the real semantic information source and the semantic messages recovered by the receiver and investigate how various constraints on the perception divergence can influence the achievable rate of semantic communication.

II-B Rate-Distortion-Perception Tradeoff

Many efforts have been made recently to investigate the lossy source coding problem with a constraint on the perceptual quality. More specifically, Blau and Michaeli first formulate the information rate-distortion-perception function (RDPF) [11]. They further investigate the rate-distortion-perception tradeoff on a Bernoulli source and the MNIST dataset in [12]. Theis and Wagner establish a coding theorem for the RDPF and prove its achievability [13]. Some recent works characterize the RDPF with side information and give some general theorems with respect to the achievability [14, 15].

Additionally, recent works suggest that introducing common randomness at the encoder and decoder can be helpful for further improving perceptual quality. For example, Theis and Agustsson suggest that stochastic encoders/decoders with common randomness as a secondary input may be particularly useful in the regime of perfect perceptual quality by considering a 1-bit-coding toy example [16]. Wagner further quantifies the amount of common randomness as one of the inputs of the RDPF with perfect perceptual quality, and shows the four-way tradeoff among rate, distortion, perception, and rate of common randomness [17]. However, some other recent works suggest that the benefit of common randomness is not always available. The authors of [14] and [18] show that common randomness is not necessary under the empirical distribution constraints of perceptual quality. Motivated by these works, in this paper, we consider a semantic communication system in which two types of side information, namely the Wyner-Ziv side information and common randomness, can be available at the encoder and decoder. We characterize the RDPF under both the empirical and strong perception constraints. We observe that, under certain scenarios, the side information alone is sufficient for the decoder to fully recover the semantic information source.

III Problem Formulation and Main Results

We consider the semantic communication model introduced in [9]. In this system, a semantic information source involves some intrinsic knowledge or states that cannot be directly observed by the encoder. The encoder can collect a limited number of indirect observations, i.e., explicit signals that reflect some properties or attributes of the semantic information source. The encoder will then compress its indirect observations according to the channel capacity to be sent to the destination user. The main objective is to recover the complete information including all the intrinsic knowledge with minimized semantic dissimilarity, called semantic distance, at the destination user. More formally, the semantic information is formulated as an -length i.i.d. random variable generated by the semantic information source. The encoder can obtain a limited number of indirect observations about the semantic information source, i.e., the input of the encoder is given by . The indirect observation of the encoder may correspond to a limited number of random sampling of noisy observations about the semantic information source obtained by the encoder. We will give a more detailed discussion based on a specific type of semantic information source, e.g., binary source, in Section IV. Let be the conditional probability of obtaining an indirect observation at the encoder under a given semantic information source . Due to the limited channel capacity, the encoder can only send sequences of signals, denoted as , to the channel.

In addition to observing the output of the channel, the decoder can also have access to two types of side information about the encoder and the semantic information source:

Common Randomness (CR): corresponds to a source of randomness that is involved in the encoding function to generate the coded messages sent to the channel. This may correspond to stochastic encoder in which the input of encoder includes both indirect observation and a source of randomness. The source of randomness can be the result of a randomized quantization process included in the encoding process for compressing the indirect observation. It may also correspond to the randomness added to the encoded messages for improving the protection of privacy of the indirect observation, e.g., in differential privacy-enabled encoding. The randomness of the encoding process can be considered as a pseudo-random variable generated by a seed that is known by the decoder. We assume the randomness of the encoder can be represented by a real-valued random variable, denoted by .

Wyner-Ziv (WZ) Side Information: corresponds to some background information related to the indirect observation of the semantic information source. For example, in the human communication scenario [9], different human users may use different languages or preferred sequences of words to express the same idea. In this case, the WZ side information may correspond to the language background and preference of users when converting the semantic information source, i.e., ideas, to a sequence of words. Generally speaking, both the encoder and the decoder may have some side information, and the side information at the encoder and decoder does not have to be the same. Let and be the WZ side information at the encoder and decoder, respectively.

Both encoder and decoder will include the WZ side information and CR in their coding process. More formally, we define the stochastic code as follows:

Definition 1

For an arbitrary set , a (stochastic) encoder is a function

| (1) |

and a (stochastic) decoder is a function

| (2) |

In this paper, we consider two types of semantic distances:

Block-wise Semantic Distance: corresponds to a block-wise distortion measure defined as a function . In this paper, we consider the following block-wise semantic distance constraint: .

Perception-based Semantic Distance: corresponds to a non-negative divergence between any given pair of probability distributions. In this paper, we adopt the total variation (TV) distance, a commonly used divergence function, to measure the perception-based semantic distance between the real semantic information source and the estimated signal at the decoder, defined as follows:

| (3) |
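As a minimal illustration of the TV distance used above, the following Python sketch computes it for two discrete distributions using the standard definition, i.e., half the sum of absolute differences between the probability masses. The function name `total_variation` and the two example distributions are illustrative assumptions and do not come from the paper.

```python
def total_variation(p, q):
    """TV distance between two pmfs given as dicts {outcome: probability}."""
    support = set(p) | set(q)
    # Standard definition: half of the L1 distance between the two pmfs.
    return 0.5 * sum(abs(p.get(x, 0.0) - q.get(x, 0.0)) for x in support)


# Hypothetical example: a uniform binary source and a biased reconstruction.
source = {0: 0.5, 1: 0.5}
recon = {0: 0.7, 1: 0.3}
print(total_variation(source, recon))
```

The distance is zero when the two distributions coincide and one when their supports are disjoint, so a smaller perception threshold demands a reconstruction whose statistics are closer to those of the source.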

Let us formally define achievable rate region of the rate-distortion-perception tradeoff as follows:

Definition 2

The rate-distortion-perception triple is achievable with respect to strong and empirical perception constraints if for any , there exists an code with common randomness that satisfies the direct bit distortion constraint

| (4) |

and one of the indirect perception constraints

| (5) |

Note that the main difference between the strong and empirical perception constraints is that, in the latter case, the TV distance is measured by expectations between two probability distributions, while for the strong perception constraint, the TV distance is calculated based on the -length code block.
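The gap between the two constraints can be seen on a toy example. The sketch below assumes, in the spirit of [14] and [18], that the empirical constraint compares the symbol frequencies (types) of the source block and of the reconstructed block, averaged over blocks, whereas the strong constraint compares the joint distributions of the whole blocks. The block length of 2, the uniform binary source, and the "sort the block" reconstruction rule are all hypothetical choices made only for illustration.

```python
from collections import Counter
from itertools import product


def tv(p, q):
    """TV distance between two pmfs given as dicts."""
    keys = set(p) | set(q)
    return 0.5 * sum(abs(p.get(k, 0.0) - q.get(k, 0.0)) for k in keys)


def type_of(seq):
    """Empirical distribution (symbol frequencies) of a single sequence."""
    n = len(seq)
    return {s: c / n for s, c in Counter(seq).items()}


def reconstruct(block):
    """Hypothetical decoder output: the source block in sorted order."""
    return tuple(sorted(block))


n = 2
blocks = list(product([0, 1], repeat=n))  # uniform i.i.d. binary source blocks
p_block = 1.0 / len(blocks)

# Block-level ("strong") comparison: TV between the joint pmfs of the blocks.
p_src = {x: p_block for x in blocks}
p_rec = Counter()
for x in blocks:
    p_rec[reconstruct(x)] += p_block
strong_tv = tv(p_src, dict(p_rec))

# Type-level ("empirical") comparison: expected TV between per-block types.
empirical_tv = sum(
    p_block * tv(type_of(x), type_of(reconstruct(x))) for x in blocks
)

print(strong_tv, empirical_tv)
```

Sorting preserves the symbol counts of every block, so the type-level divergence vanishes, while the block-level divergence stays strictly positive because the block (1, 0) is never produced. Under these assumptions the strong constraint is the more demanding of the two.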

Definition 3

The rate-distortion-perception region under strong perception constraint is

| (6) | |||||

The region for the empirical perception constraint, , is defined by replacing the perception constraint of the above region with .

We can then prove the following result about the achievability of the rate-distortion-perception region.

Theorem 1

is achievable with respect to strong (resp. empirical) perception constraint if and only if it is contained in the closure of (resp. ).

Proof:

See Appendix A. ∎

Observation 1

Definition 3 specifies the constraint on the achievable coding rate of our proposed semantic communication system. Using the chain rule of mutual information, we can reformulate the achievable rate in the following form:

| (8) |

The first term of the right-hand-side of (1) specifies the amount of uncertainty about the indirect semantic information and direct observation that can be reduced at the receiver. The second term of the right-hand-side of (1) specifies the total amount of uncertainty induced by the existence of side information, which is not required to be transmitted in the channel. (8) quantifies the amount of information of each individual source in terms of conditional entropy. Specifically, specifies the amount of information of the indirect semantic source. quantifies the semantic ambiguity about the semantic information, induced by indirect observation. specifies the volume of information to be encoded at the receiver. quantifies the amount of uncertainty about the semantic information and the channel output which can be reduced when using side information at the decoder. Moreover, as for the informative correlation between the semantic source and the indirect observation, we have where corresponds to the semantic redundancy and corresponds to the semantic redundancy.

IV Closed-Form Achievable Rate of Binary Semantic Information Source

In this section, we focus on the binary semantic information source scenario in which the semantic source follows a Bernoulli distribution with .

The correlation between the indirect observation and the semantic information source and that between the side information and the semantic information source can be parameterized as:

We then move on to present the closed-form solution of the RDPF under the binary alphabet setting. To simplify the expression, we first parameterize the correlation between and as the following matrix: We also parameterize the conditional probabilities of the semantic source and the indirect observation given the side information as the following matrices Then define the -RDF:

| (9) |

and -RDPF:

| (10) |

where denotes the entropy of a binary variable and denotes the entropy of a ternary variable. We then have the following theorem on the closed-form expression of the rate-distortion-perception function :
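For readers who wish to evaluate expressions of this kind numerically, the binary and ternary entropy terms can be computed with a short helper. The sketch below is only an aid under stated assumptions: the function names are illustrative, entropies are measured in bits, and the closed-form expressions of the -RDF and -RDPF themselves are not reproduced.

```python
import math


def entropy(probs):
    """Shannon entropy in bits of a discrete pmf given as a list."""
    if min(probs) < 0 or abs(sum(probs) - 1.0) > 1e-9:
        raise ValueError("probs must be a valid probability vector")
    # Zero-probability outcomes contribute nothing to the sum.
    return -sum(p * math.log2(p) for p in probs if p > 0)


def binary_entropy(p):
    """Entropy of a binary variable with P(1) = p."""
    return entropy([p, 1.0 - p])


def ternary_entropy(a, b):
    """Entropy of a ternary variable with masses a, b and 1 - a - b."""
    return entropy([a, b, 1.0 - a - b])


print(binary_entropy(0.11), ternary_entropy(0.2, 0.3))
```

Both helpers share one validated routine, so an invalid parameter choice, e.g., masses that sum to more than one, is rejected rather than silently producing a meaningless rate.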

Theorem 2

Assume that , and follow the doubly symmetric binary distribution with . Then the achievable rate under distortion and equals the rate-distortion-perception function, given by

| (11) |

for and

| (12) |

for , where , , .

Proof:

See Appendix B. ∎

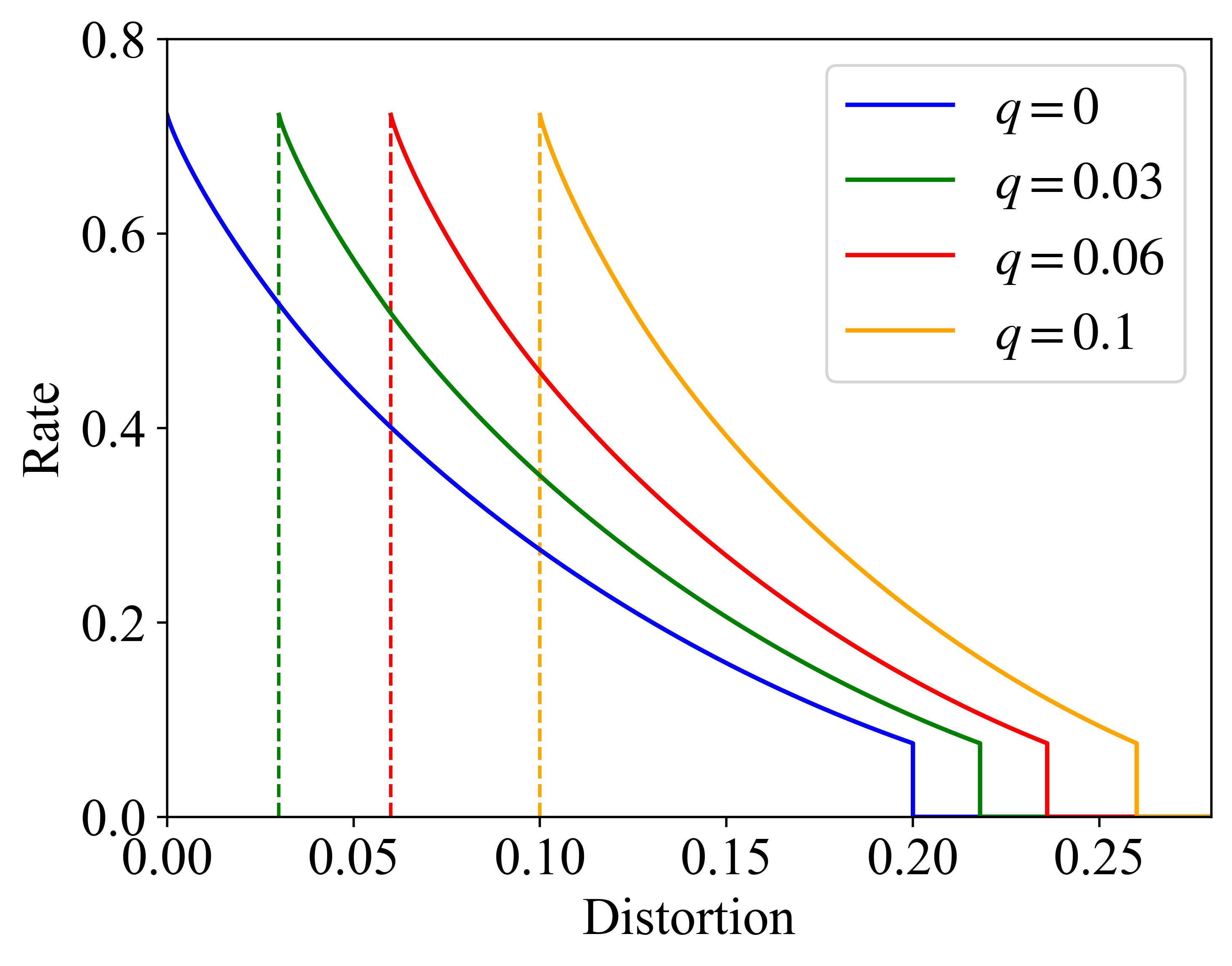

To evaluate the impact of the crossover probabilities and the perception constraints on the achievable rate of semantic communication, we present RDPF results in Fig. 1 where we set . We make several observations as follows.

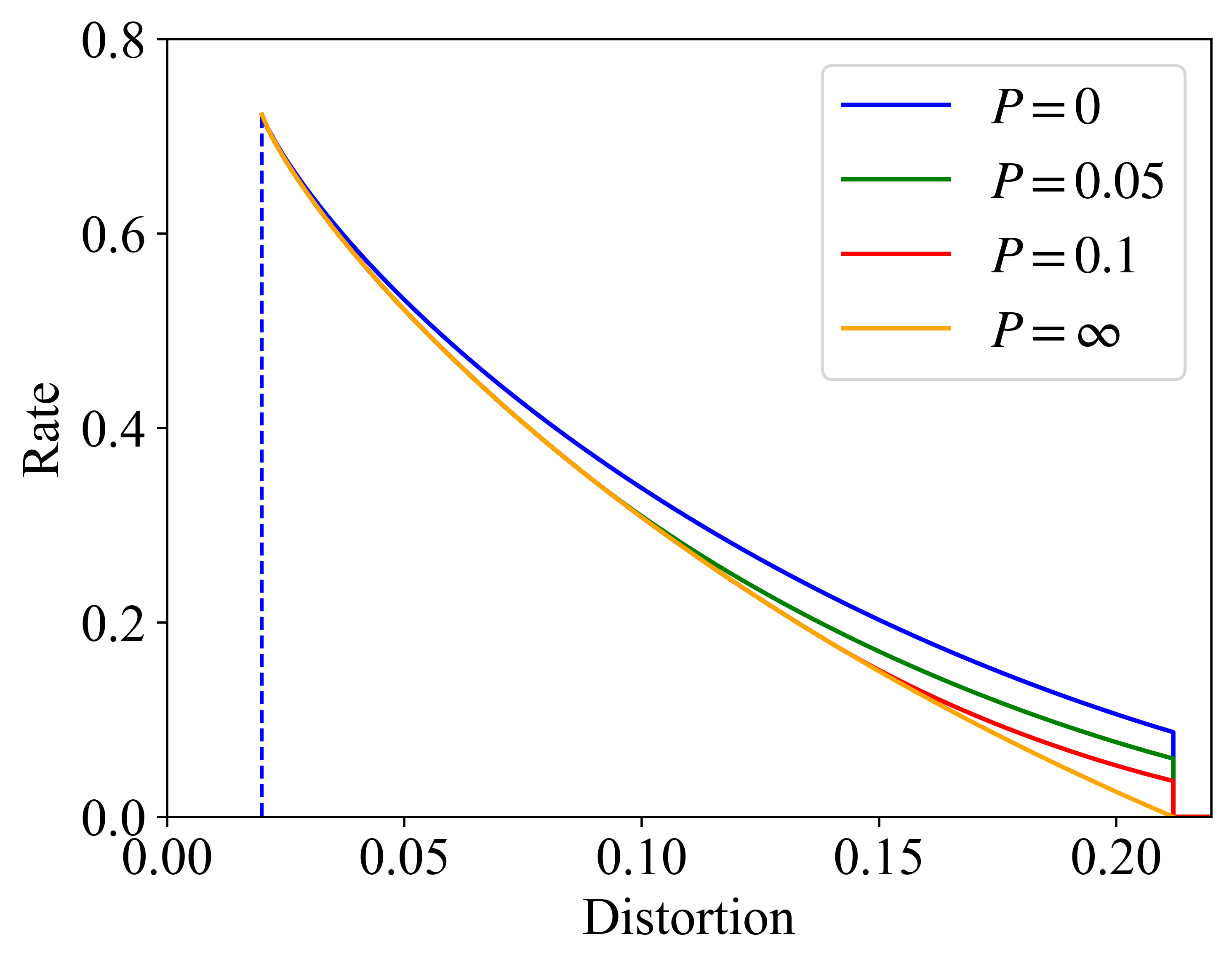

Observation 2

In Fig. 1, we can observe that a higher data rate is required when the constraints on the symbol-level distortion and the perceptual quality are more stringent. In particular, in Fig. 1b, under a given distortion level, a higher rate is required to achieve higher perceptual quality, i.e., when the value of is lower. Similarly, under a given rate, the perceptual quality decreases when a lower symbol-level distortion is required. These observations about the rate-distortion-perception tradeoff are consistent with the traditional RDPF without side information [12].

Observation 3

In the proof of Theorem 2, we can observe that the distortion between the semantic information source and the recovered information, denoted as , and that between the direct observation obtained by the encoder and the recovered information, denoted as , have the following relationship:

| (13) |

We can observe from (13) that, since both and must be positive, the symbol-level distortion cannot be lower than . Also, when , we have . This means that trying to perfectly recover the indirect observation obtained by the encoder cannot result in perfect recovery of the semantic information source at the decoder. This further confirms that the objective of semantic communication, which tries to recover the semantic information source, i.e., minimizing , is generally different from that of the traditional communication solution, which focuses on recovering the symbol obtained by the encoder, i.e., minimizing .
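The floor on the symbol-level distortion can be checked numerically under a simple stand-in model. The sketch below does not reproduce (13); it assumes that the encoder observes the semantic source through a binary symmetric channel with crossover probability `p`, and that the reconstruction differs from the observation with probability `d_z`, independently of the observation noise. Under these assumptions the two error events combine by binary convolution, which is linear in `d_z`. The names `p`, `d_z`, and `indirect_distortion` are hypothetical.

```python
def indirect_distortion(p, d_z):
    """P(source != reconstruction) when two independent bit flips are chained.

    p   : assumed crossover probability between source and observation
    d_z : assumed probability that the reconstruction differs from the observation
    """
    # The end-to-end error occurs when exactly one of the two flips happens.
    return p * (1.0 - d_z) + (1.0 - p) * d_z


p = 0.1
for d_z in (0.0, 0.1, 0.2, 0.3):
    print(d_z, indirect_distortion(p, d_z))
```

With `d_z = 0` the end-to-end distortion equals `p`, so a perfect copy of the observation still leaves a residual error with respect to the semantic source, in line with the observation above. For `p` below one half the distortion grows linearly with `d_z`.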

Observation 4

In Fig. 1a, the blue curve represents the direct observation case with in which the encoder can fully observe the semantic information source, i.e., . We can observe that the indirect observation of the semantic information source, i.e., , leads to a higher required data rate under the same distortion and perception constraints, compared to the direct observation case. Moreover, the gap between the rates required under direct and indirect observation at the encoder increases with the value of .

Observation 5

In Fig.1, we can observe that when the rate decreases to zero, the symbol-level distortion will not increase to the maximum value , but will stick to a fixed value, given by . This means that when the side information is available at both encoder and decoder, the decoder is able to recover semantic information source under certain distortion constraints even when no data has been transmitted from the transmitter. More specifically, suppose and . By substituting these two equations into (5), we have This means the perception divergence becomes zero when . The optimal decoding strategy in this case is then given by . This implies that the decoder can directly use the side information to recover when . This may correspond to the case that the end user can directly infer the semantic information source based only on the background knowledge of source user as long as the required distortion and perception constraints are above a certain tolerable threshold.
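The zero-rate behavior described above can be reproduced with an exact calculation on a toy model. The sketch assumes a binary source with prior `prior`, side information at the decoder equal to the source passed through a binary symmetric channel with crossover probability `q`, and a decoder that simply outputs the side information. The symbols `prior` and `q` and the function name are hypothetical, and the model is a simplification of the setting of Theorem 2.

```python
def zero_rate_metrics(prior, q):
    """Distortion and TV divergence when the decoder outputs its side information.

    prior : assumed P(X = 1) of the binary semantic source
    q     : assumed crossover probability between X and the side information Y
    """
    joint = {}
    for x, px in ((0, 1.0 - prior), (1, prior)):
        for flip, pf in ((0, 1.0 - q), (1, q)):
            y = x ^ flip  # side information is a noisy copy of the source
            joint[(x, y)] = joint.get((x, y), 0.0) + px * pf
    # Hamming distortion of the zero-rate estimate X_hat = Y.
    distortion = sum(pr for (x, y), pr in joint.items() if x != y)
    # For binary variables the TV distance reduces to |P(X=1) - P(Y=1)|.
    p_y1 = sum(pr for (x, y), pr in joint.items() if y == 1)
    return distortion, abs(prior - p_y1)


print(zero_rate_metrics(0.5, 0.1))
print(zero_rate_metrics(0.3, 0.1))
```

For a uniform source the side information has the same marginal distribution as the source, so the perception divergence is zero and the distortion equals `q` without any transmitted bits. For a non-uniform prior the divergence is strictly positive, which suggests that the zero-rate regime depends on the source statistics as well as on the constraints.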

V Experimental Results

V-A Experimental Setup

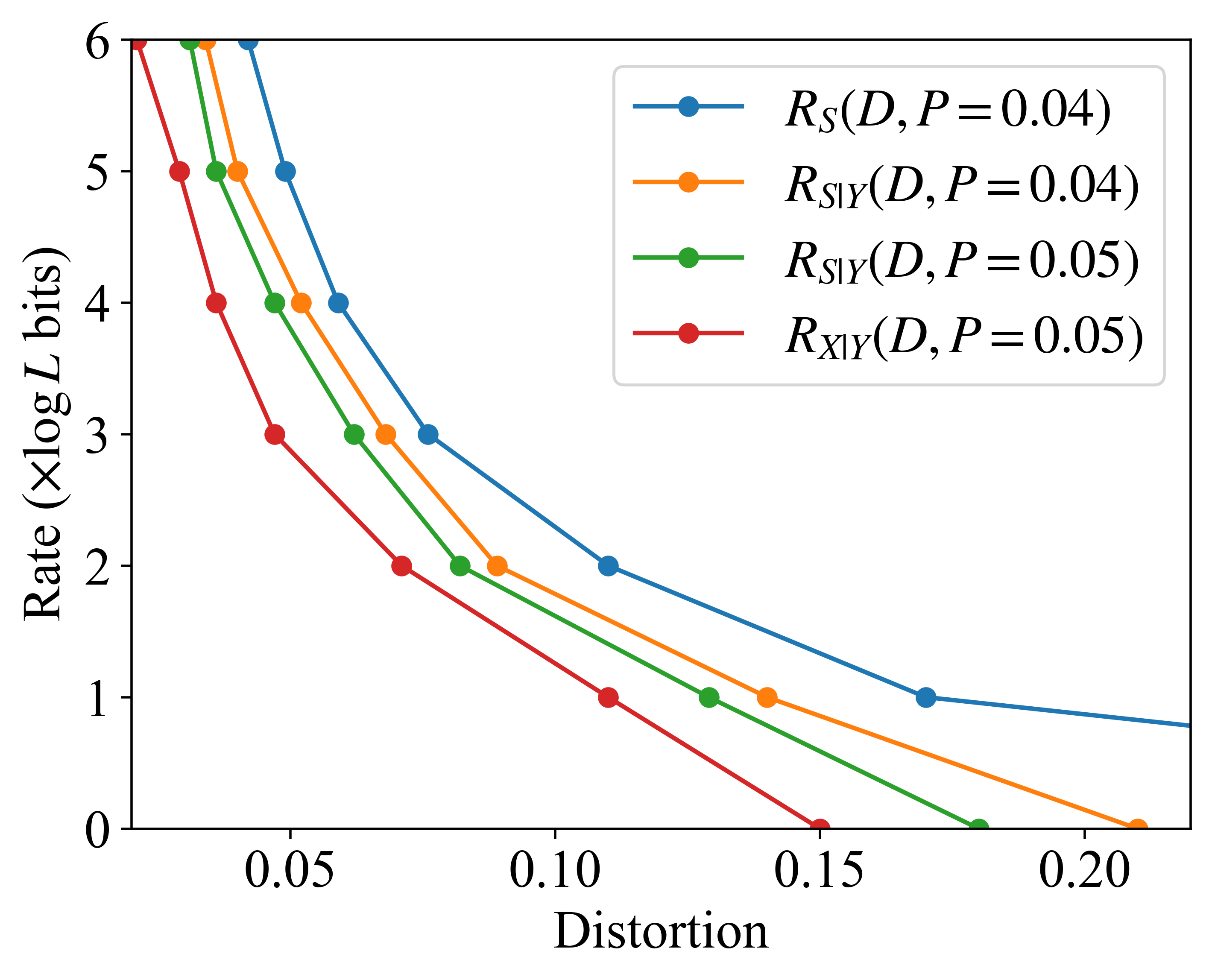

We consider a semantic communication system in which the semantic information source corresponds to image signals that are uniformly randomly sampled from a commonly used image dataset, MNIST. We simulate the indirect observation of the semantic source information by adding a noise signal to the semantic source, i.e. each indirect observation is given by where is the input image, is the noise vector, denotes the indirect observation obtained by the encoder. The side information is some intrinsic feature of semantic source, denoted as , where denotes the side information of , denotes the function that output the feature of , parameterized by deep neural nets (DNNs). The encoder maps the indirect observation into a dimensional latent feature vector, whose entries are then uniformly quantized to levels to obtain the output message. The decoder finally recovers the semantic information based on received message and the side information. Both the encoder and decoder have access to the common randomness, modeled as a Gaussian noise vector, denoted as . The entire process of semantic source coding can be formulated as where and denote the encoding and decoding function. Note that the uniform quantization of the encoder gives an upper bound of for rate . The quantization level is set to be .
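The quantization step and the resulting rate bound can be sketched as follows. This is a minimal illustration rather than the trained model: the function names, the value range [0, 1], and the example dimensions are assumptions, and the bound used is the standard one for a vector whose entries each take one of a fixed number of levels, namely the dimension times the base-2 logarithm of the number of levels.

```python
import math


def uniform_quantize(values, levels, lo=0.0, hi=1.0):
    """Map each entry to the nearest of `levels` equally spaced points in [lo, hi]."""
    if levels < 2:
        raise ValueError("at least two quantization levels are required")
    step = (hi - lo) / (levels - 1)
    quantized = []
    for v in values:
        v = min(max(v, lo), hi)        # clip to the quantizer range
        index = round((v - lo) / step)  # nearest reconstruction point
        quantized.append(lo + index * step)
    return quantized


def rate_upper_bound_bits(dim, levels):
    """Upper bound on the rate of a dim-dimensional message with `levels` levels per entry."""
    return dim * math.log2(levels)


latent = [0.1, 0.49, 0.9]  # hypothetical encoder output
print(uniform_quantize(latent, levels=3))
print(rate_upper_bound_bits(dim=4, levels=8))
```

Varying the latent dimension or the number of levels moves the bound, which is one way to sweep the rate axis when tracing an experimental rate-distortion-perception curve.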

We measure the symbol-based signal distortion using the mean-squared error (MSE), denoted as . We also use the Wasserstein distance to measure the perception divergence between distributions and . We then train our model to minimize the following loss function, where is a tuning parameter that controls the particular tradeoff point achieved by the model. Based on [12], the above perceptual quality term can be written as where is the set of all bounded 1-Lipschitz functions. This expresses the objective as a min-max problem and allows us to treat it using a generative adversarial network (GAN). We obtain the experimental RDPF curves by evaluating the distortion and perceptual quality of our model on the test set of MNIST. Different rate-distortion-perception points are obtained by training the model under different desired settings.
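The structure of this objective, a distortion term plus a weighted perception term, can be illustrated without a neural critic. The sketch below replaces the GAN-based estimate with the closed-form Wasserstein-1 distance between two equal-size one-dimensional samples, which equals the mean absolute difference of the sorted samples. This substitution, the function names, and the weight `lam` are assumptions made for illustration and are not the training procedure of the experiments.

```python
def mse(x, x_hat):
    """Mean-squared error between two equal-length sequences."""
    return sum((a - b) ** 2 for a, b in zip(x, x_hat)) / len(x)


def wasserstein1_1d(xs, ys):
    """Wasserstein-1 distance between two equal-size 1-D empirical samples."""
    if len(xs) != len(ys):
        raise ValueError("samples must have the same size")
    # In one dimension the optimal coupling matches sorted samples.
    return sum(abs(a - b) for a, b in zip(sorted(xs), sorted(ys))) / len(xs)


def rdp_loss(x, x_hat, lam):
    """Distortion plus lam times the perception term (toy stand-in for the GAN loss)."""
    return mse(x, x_hat) + lam * wasserstein1_1d(x, x_hat)


x = [0.0, 1.0, 2.0]
x_hat = [2.0, 1.0, 0.0]  # same value histogram, different positions
print(mse(x, x_hat), wasserstein1_1d(x, x_hat), rdp_loss(x, x_hat, lam=0.5))
```

In this example the reconstruction has exactly the same value distribution as the input, so the perception term is zero although the MSE is large. This shows in miniature why the two terms must be traded off against each other.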

V-B Experimental Results

In Fig. 2, we compare the RDPF curves with and without the side information at the decoder, which are denoted as and , respectively. We also present the RDPF curve when the encoder can obtain a full observation of the semantic information source, which is denoted as for comparison. We can observe that, by allowing the decoder to access the side information, the rate required under the same distortion and perception constraints can be reduced. We can also observe that, under the same rate, increasing the perceptual quality at the decoder leads to a higher symbol-level distortion of the decoded signals. Furthermore, under the same distortion and perception constraints, the rate required with an indirect observation of the semantic source information at the encoder is higher than that required when the semantic source information is fully observable by the encoder, i.e., . When the side information is available at the decoder, even when the rate becomes zero, i.e., , the symbol-level distortion, measured by MSE, as well as the perceptual quality of the recovered signal at the decoder will still be within a certain limit. This observation is consistent with that of the binary information source discussed in Section IV. In other words, even when the transmitter does not send any data to the channel, it is still possible for the decoder to recover some semantic source information within certain distortion and perception constraints based on the side information.

VI Conclusions

This paper has investigated the achievable data rate of semantic communication under distortion and perception constraints. We have considered a semantic communication model in which a semantic information source can only be indirectly observed by the encoder, and the encoder and decoder can access different types of side information. We have derived the achievable rate region that characterizes the tradeoff among the data rate, symbol distortion, and semantic perception, and have proved that there exists a stochastic coding scheme that achieves the derived region. We have also derived a closed-form achievable rate for a binary semantic information source. We have observed that there exist cases in which the receiver can directly infer the semantic information source while satisfying certain distortion and perception constraints, without requiring any data communication from the transmitter. Experimental results based on an image semantic source signal have been presented to verify our theoretical observations.

Acknowledgment

This work was supported in part by the National Natural Science Foundation of China under Grant 62071193 and the major key project of Peng Cheng Laboratory under grant PCL2023AS1-2.

Appendix A Proof of Theorem 1

To prove the sufficiency part of the theorem, we adopt the source coding scheme using the strong functional representation in [13] and generate a random codebook consisting of i.i.d. codewords where for arbitrarily small . These codewords are then distributed uniformly at random into bins where . Then we map -bit length codewords into a channel coding scheme with bits. Since and correspond to the channel capacity, are jointly typical sequences. To prove the necessity part, we follow the same line as [19] and prove that there exists a joint source-channel coding scheme that satisfies Similarly, following the same line as [19], we can prove that for any coding scheme, including the proposed joint source-channel coding scheme, the mutual information between the coded message and the channel output should not exceed the channel capacity . Then we have . This concludes the proof.

Appendix B Proof of Theorem 2

Let us first define

| (14) |

with and parameterize following conditional probabilities as Assume that and form the doubly symmetric binary distribution, then , thus we have and The distortion between indirect semantic source and reconstruction signal is then formulated as which is further written as Thus the indirect distortion is converted linearly as direct distortion. For perception constraint, as , we have . We then have where . Similarly, the perception distortion can also be written as the expectation form based on following lemma:

Lemma 1

with If and , we have

We omit the proof due to space limitations. Following (6) of [12], we then reformulate the mutual information as Thus the optimization problem of minimizing the conditional mutual information of the semantic source is written as

To solve (B), we can associate a Lagrangian function and the closed-form solution is obtained by solving the minimization problem of . The detailed proof is omitted.

References

- [1] C. E. Shannon, “A mathematical theory of communication,” The Bell System Technical Journal, vol. 27, no. 3, pp. 379–423, 1948.

- [2] W. Weaver, “Recent contributions to the mathematical theory of communication,” ETC: A Review of General Semantics, pp. 261–281, 1949.

- [3] R. Carnap, Y. Bar-Hillel et al., “An outline of a theory of semantic information,” Technical report, Massachusetts Institute of Technology. Research Laboratory of Electronics, 1952.

- [4] G. Shi, Y. Xiao, Y. Li, and X. Xie, “From semantic communication to semantic-aware networking: Model, architecture, and open problems,” IEEE Communications Magazine, vol. 59, no. 8, pp. 44–50, 2021.

- [5] Y. Xiao, Z. Sun, G. Shi, and D. Niyato, “Imitation learning-based implicit semantic-aware communication networks: Multi-layer representation and collaborative reasoning,” IEEE Journal on Selected Areas in Communications, vol. 41, no. 3, pp. 639–658, 2022.

- [6] Y. Xiao, Y. Liao, Y. Li, G. Shi, H. V. Poor, W. Saad, M. Debbah, and M. Bennis, “Reasoning over the air: A reasoning-based implicit semantic-aware communication framework,” to appear at IEEE Trans. Wireless Communications, 2023.

- [7] J. Bao, P. Basu, M. Dean, C. Partridge, A. Swami, W. Leland, and J. A. Hendler, “Towards a theory of semantic communication,” in 2011 IEEE Network Science Workshop. IEEE, 2011, pp. 110–117.

- [8] J. Liu, W. Zhang, and H. V. Poor, “A rate-distortion framework for characterizing semantic information,” in 2021 IEEE International Symposium on Information Theory (ISIT). IEEE, 2021, pp. 2894–2899.

- [9] Y. Xiao, X. Zhang, Y. Li, G. Shi, and T. Başar, “Rate-distortion theory for strategic semantic communication,” in 2022 IEEE Information Theory Workshop (ITW). IEEE, 2022, pp. 279–284.

- [10] P. A. Stavrou and M. Kountouris, “A rate distortion approach to goal-oriented communication,” in 2022 IEEE International Symposium on Information Theory (ISIT). IEEE, 2022, pp. 590–595.

- [11] Y. Blau and T. Michaeli, “The perception-distortion tradeoff,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2018, pp. 6228–6237.

- [12] ——, “Rethinking lossy compression: The rate-distortion-perception tradeoff,” in International Conference on Machine Learning. PMLR, 2019, pp. 675–685.

- [13] L. Theis and A. B. Wagner, “A coding theorem for the rate-distortion-perception function,” in Neural Compression: From Information Theory to Applications–Workshop@ ICLR 2021, 2021.

- [14] X. Niu, D. Gündüz, B. Bai, and W. Han, “Conditional rate-distortion-perception trade-off,” arXiv preprint arXiv:2305.09318, 2023.

- [15] Y. Hamdi and D. Gündüz, “The rate-distortion-perception trade-off with side information,” arXiv preprint arXiv:2305.13116, 2023.

- [16] L. Theis and E. Agustsson, “On the advantages of stochastic encoders,” in Neural Compression: From Information Theory to Applications–Workshop@ ICLR 2021, 2021.

- [17] A. B. Wagner, “The rate-distortion-perception tradeoff: The role of common randomness,” arXiv preprint arXiv:2202.04147, 2022.

- [18] J. Chen, L. Yu, J. Wang, W. Shi, Y. Ge, and W. Tong, “On the rate-distortion-perception function,” IEEE Journal on Selected Areas in Information Theory, 2022.

- [19] N. Merhav and S. Shamai, “On joint source-channel coding for the Wyner-Ziv source and the Gel’fand-Pinsker channel,” IEEE Transactions on Information Theory, vol. 49, no. 11, pp. 2844–2855, 2003.