∎

22email: [email protected] 33institutetext: Zhongjian Wang 44institutetext: School of Physical and Mathematical Sciences, Nanyang Technological University, 21 Nanyang Link, Singapore 637371

44email: [email protected] 55institutetext: Zhiwen Zhang 66institutetext: Department of Mathematics, The University of Hong Kong, Pokfulam Road, Hong Kong SAR, China

66email: [email protected]

Randomized methods for computing optimal transport without regularization and their convergence analysis

Abstract

The optimal transport (OT) problem can be reduced to a linear programming (LP) problem through discretization. In this paper, we introduced the random block coordinate descent (RBCD) methods to directly solve this LP problem. Our approach involves restricting the potentially large-scale optimization problem to small LP subproblems constructed via randomly chosen working sets. By using a random Gauss-Southwell- rule to select these working sets, we equip the vanilla version of (RBCD0) with almost sure convergence and a linear convergence rate to solve general standard LP problems. To further improve the efficiency of the (RBCD0) method, we explore the special structure of constraints in the OT problems and leverage the theory of linear systems to propose several approaches for refining the random working set selection and accelerating the vanilla method. Inexact versions of the RBCD methods are also discussed. Our preliminary numerical experiments demonstrate that the accelerated random block coordinate descent (ARBCD) method compares well with other solvers including Sinkhorn’s algorithm when seeking solutions with relatively high accuracy, and offers the advantage of saving memory.

Keywords:

Optimal transportdeep particle methodconvex optimizationrandom block coordinate descentconvergence analysis.MSC:

65C3568W2090C0890C251 Introduction

Background and motivation

The optimal transport problem was first introduced by Monge in 1781, which aims to find the most cost-efficient way to transport mass from a set of sources to a set of sinks. Later, the theory was modernized and revolutionized by Kantorovich in 1942, who found a key link between optimal transport and linear programming. In recent years, optimal transport has become a popular and powerful tool in data science, especially in image processing, machine learning, and deep learning areas, where it provides a very natural way to compare and interpolate probability distributions. For instance, in generative models arjovsky2017wasserstein ; lei2019geometric ; wang2022deepparticle , a natural penalty function is the Wasserstein distance (a concept closely related to OT) between the data and the generated distribution. In image processing, the optimal transport plan, which minimizes the transportation cost, provides solutions to image registration haker2004optimal and seamless copy perrot2016mapping . Apart from data science, in the past three decades, there has been an explosion of research interest in optimal transport because of the deep connections between the optimal transport problems with quadratic cost functions and a diverse class of partial differential equations (PDEs) arising in statistical mechanics and fluid mechanics; see e.g. brenier1991polar ; benamou2000computational ; otto2001geometry ; jordan1998variational ; villani2021topics for just a few of the most prominent results and references therein.

Inspired by this research progress, we have developed efficient numerical methods to solve multi-scale PDE problems using the optimal transport approach. Specifically, in our recent paper, we proposed a deep particle method for learning and computing invariant measures of parameterized stochastic dynamical systems wang2022deepparticle . To achieve this goal, we designed a deep neural network (DNN) to map a uniform distribution (source) to an invariant measure (target), where the Péclet number is an input parameter for the DNN. The network is trained by minimizing the 2-Wasserstein distance () between the measure of network output and target measure . We consider a discrete version of for finitely many samples of and , which involves a linear program (LP) optimized over doubly stochastic matrices sinkhorn1964relationship .

Solving the LP directly using the interior point method wright1997primal is too costly. Motivated by the domain decomposition method toselli2004domain in scientific computing, which solves partial differential equations using subroutines that solve problems on subdomains and has the advantage of saving memory (i.e., using the same computational resource, it can compute a larger problem), we devised a mini-batch interior point method. This approach involves sampling smaller sub-matrices while preserving row and column sums. It has proven to be highly efficient and integrates seamlessly with the stochastic gradient descent (SGD) method for overall network training. However, we did not obtain convergence analysis for this mini-batch interior point method in our previous work wang2022deepparticle .

The objectives of this paper are twofold. First, we aim to provide rigorous convergence analysis for the mini-batch interior point method presented in wang2022deepparticle , with minimal modifications. Second, we seek to enhance the mini-batch selection strategy, thereby achieving improved and more robust performance in computing optimal transport problems. We recognize that the mini-batch interior point method aligns with the random block coordinate descent (RBCD) method in optimization terminology. Specifically, it applies the block coordinate descent (BCD) method to the LP problem directly, selects the working set randomly, and solves subproblems using the primal-dual interior point method wright1997primal or any other efficient linear programming solver. Encouraged by the demonstrated efficiency of this approach, we will develop theoretical results for solving LP with RBCD methods and explore various strategies for selecting working sets.

Theorectical contributions

In this work, we first introduce an expected Gauss-Southwell- rule to guide the selection of the working set. It enables almost sure convergence and a linear convergence rate in expectation when solving a general standard LP. Based on this rule, we develop a vanilla RBCD method - RBCD0, which selects the working set with complete randomness. Then, we investigate the special linear system present in the LP formulation of OT. We characterize all the elementary vectors of the null space and provide a strategy for finding the conformal realization of any given vector in the null space at a low computational cost. Based on these findings, we propose various approaches to refine the working set selection and improve the performance of RBCD0. A better estimation of the constant in the linear convergence rate is shown. Moreover, we incorporate an acceleration technique inspired by the momentum concept to improve the algorithm’s efficiency. Inexact versions of the RBCD methods are also discussed.

Numerical experiments

We perform numerical experiments to evaluate the performance of the proposed methods. Synthetic data sets of various shapes/dimensions and invariant measures generated from IPM methods are utilized to create distributions. Our experiments first compare different RBCD methods proposed in this paper, demonstrating the benefits of refining working set selection and verifying the effectiveness of the acceleration technique. We also illustrate the gap between theory and practice regarding convergence rate, sparse solutions generated by the proposed RBCD methods, and discuss the choice of subproblem size. In the second set of experiments, we compare the best-performance method, ARBCD, with Sinkhorn’s algorithm. Preliminary numerical results show that ARBCD is comparable to Sinkhorn’s algorithm in computation time when seeking solutions with relatively high accuracy. ARBCD is also comparable to a recently proposed interior point inspired algorithm in memory-saving settings. We also test ARBCD on a large-scale OT problem, where Gurobi runs out of memory. This further justifies the memory-saving advantage of ARBCD.

Previous research on (R)BCD

BCD and RBCD are well-studied for essentially unconstrained smooth optimization (sometimes allow separable constraints or nonsmooth separable objective functions): beck2013convergence ; gurbuzbalaban2017cyclic ; sun2021worst investigate BCD with cyclic coordinate search; nesterov2012efficiency ; lu2015complexity ; richtarik2014iteration study RBCD to address problems with possibly nonsmooth separable objective functions; other related works include theoretical speedup of RBCD (richtarik2016parallel ; necoara2016parallel ), second-order sketching (qu2016sdna ; berahas2020investigation ). However, much less is known for their convergence properties when applied to problems with nonseparable nonsmooth functions as summands or coupled constraints. To our best knowledge, no one has ever considered using the RBCD to solve general LP before and the related theoretical guarantees are absent. In necoara2017random , the authors studied the RBCD method to tackle problems with a convex smooth objective and coupled linear equality constraints ; a similar algorithm named random sketch descent method necoara2021randomized is investigated to solve problems with a general smooth objective and general coupled linear equality constraints . However, after adding the simple bound constraints , the analysis in necoara2017random ; necoara2021randomized may not work anymore, nor can it be easily generalized. Beck beck20142 studied a greedy coordinate descent method but focused on a single linear equality constraint and bound constraints. In Paul Tseng and his collaborators’ work tseng2009block ; tseng2009coordinate ; tseng2010coordinate , a block coordinate gradient descent method is proposed to solve linearly constrained optimization problems including general LP. In these works, a Gauss-Southwell- rule is proposed to guide the selection of the working set in each iteration. Therefore, the working set selected in a deterministic fashion can only be decided after solving a quadratic program with a similar problem size as the original one. In contrast, our proposed mini-batch interior point/RBCD method approach selects the working set through a combination of randomness and low computational cost. Another research direction that addresses separable functions, linearly coupled constraints, and additional separable constraints involves using the alternating direction method of multipliers (ADMM) chen2016direct ; he20121 ; xie2019si ; xie2021tractable . This method updates blocks of primal variables in a Gauss-Seidal fashion and incorporates multiplier updates as well.

Existing algorithms for OT

Encouraged by the success in applying Sinkhorn’s algorithm to the dual of entropy regularized OT cuturi2013sinkhorn , researchers have conducted extensive studies in this area, including other types of regularization blondel2018smooth gasnikov2016efficient , acceleration guminov2021combination lin2022efficiency and numerical stability mandad2017variance . In xie2020fast , a proximal point algorithm (PPA) is considered to solve the discrete LP. The entropy inducing distance function is introduced to contruct the proximal term and each subproblem has the same formulation as the entropy regularized OT. This approach is found to stabilize the numerical computation while maintaining the efficiency of the Sinkhorn’s algorithm. In schmitzer2019stabilized , techniques such as log-domain stabilization, and epsilon scaling are discussed to further improve the performance of the Sinkhorn’s algorithm. The approach of iterative Bregman projection is discussed in benamou2015iterative . It is discovered that the Sinkhorn’s iteration is equivalent to a special case of this method. In pmlr-v84-genevay18a , a notion of Sinkhorn distance is proposed, which allows computable differentiation when serving as the loss function when training generative models. Sinkhorn’s algorithm can also be generalized to solve unbalanced OT in chizat2018scaling , and applied to solve OT over geometric domains in solomon2015convolutional . In huang2021riemannian , a Riemannian block coordinate descent method is applied to solve projection robust Wasserstein distance. The problem is to calculate Wasserstein distance after projecting the distributions onto lower-dimensional subspaces. The proposed approach employs entropy regularization, deterministic block coordinate descent, and techniques in Riemannian optimization. A domain decomposition method is considered in bonafini2021domain . Unlike our method, the decomposition is deterministic, focusing on one pair of distributions, and the authors try to tackle entropy-regularized OT.

Other works that significantly deviate from the entropy regularization framework include li2018computations , which computes the Schrödinger bridge problem (equivalent to OT with Fisher information regularization), and multiscale strategies such as gerber2017multiscale , liu2022multiscale and schmitzer2016sparse . In liu2022multiscale , the problem size is reduced using a multiscale strategy, and a semi-smooth Newton method is applied to solve smaller-scale subproblems. The RBCD method employed in this study is a regularization-free method. As a result, it avoids dealing with inaccurate solutions and numerical stability issues introduced by the regularization term. Furthermore, each subproblem in RBCD is a small-size LP, allowing for flexible resolution choices. Interior-point methods and simplex methods are also revisited and enhanced zanetti2023interior ; wijesinghe2023matrix ; natale2021computation ; gottschlich2014shortlist . In particular, the authors of zanetti2023interior propose an interior point inspired algorithm with reduced subproblems to address large-scale OT. The authors of facca2021fast consider an equivalent formulation of minimizing an energy functional to solve OT over graphs. Backward Euler (equivalent to the proximal point method) is used in the outer loop of the algorithm and Newton-Rapson is used in the inner loop. Other approaches include numerical solution of the Monge–Ampère equation benamou2014numerical ; benamou2016monotone , stochastic gradient descent to resolve OT in discrete, semi-discrete and continuous formulations genevay2016stochastic , and an alternating direction method of multipliers (ADMM) approach to solve the more general Wasserstein barycenter yang2021fast .

Organization

The rest of the paper is organized as follows. In Section 2, we review the basic idea of optimal transport and Wasserstein distance. In Section 3, we introduce the expected Gauss-Southwell- rule and a vanilla RBCD (RBCD0) method for solving general LP problems. An inexact version of RBCD0 is also discussed. In Section 4, we investigate the properties of the linear system in OT and propose several approaches to refine and accelerate the RBCD0 method. In Section 5, preliminary numerical results are presented to demonstrate the performance of our proposed methods. Finally, concluding remarks are made in Section 6.

Notation. For any matrix , let denote its element in the th column and th row, and let represent its th row vector. For a vector , we usually use superscripts to denote its copies (e.g., in th iteration of an algorithm) and use subscripts to denote its components (e.g., ); for a scalar, we usually use subscripts to denote its copies. Occasional inconsistent cases will be declared in context. means modulo . For any vector , we define . Given a matrix , we define its vectorization as follows:

For any positive integer , we denote . represents the matrix of all ones.

2 Optimal transport problems and Wasserstein distance

The Kantorovich formulation of optimal transport can be described as follows,

| (1) |

where is the set of all measures on whose marginal distribution on is and marginal distribution on is , is the transportation cost. In this article, we refer to the Kantorovich formulation when we mention optimal transport.

Wasserstein distances are metrics on probability distributions inspired by the problem of optimal mass transport. They measure the minimal effort required to reconfigure the probability mass of one distribution in order to recover the other distribution. They are ubiquitous in mathematics, especially in fluid mechanics, PDEs, optimal transport, and probability theory villani2021topics . One can define the -Wasserstein distance between probability measures and on a metric space with distance function by

| (2) |

where is the set of probability measures on satisfying and for all Borel subsets . Elements are called couplings of the measures and , i.e., joint distributions on with marginals and on each axis. -Wasserstein distance is a special case of optimal transport when and the cost function .

In the discrete case, the definition (2) has a simple intuitive interpretation: given a and any pair of locations , the value of tells us what proportion of mass at should be transferred to , in order to reconfigure into . Computing the effort of moving a unit of mass from to by yields the interpretation of as the minimal effort required to reconfigure mass distribution into that of .

In a practical setting COTFNT , referred to as a point cloud, the closed-form solution of and may be unknown, instead only independent and identically distributed (i.i.d.) samples of and i.i.d. samples of are available. In further discussion, refers to the size of the problem. We approximate the probability measures and by empirical distribution functions:

| (3) |

where is the Dirac measure. Any element in can clearly be represented by a transition matrix, denoted as satisfying:

| (4) |

Then means the mass of that is transferring to .

Remark 1

is in fact the set of doubly stochastic matrix sinkhorn1964relationship divided by .

Another practical setting, which is commonly used in fields of computer vision peleg1989unified ; ling2007efficient , is to compute the Wasserstein distance between two histograms. To compare two grey-scale figures (2D, size ), we first normalize the grey scale such that the values of cells of each picture sum to one. We denote centers of the cell as and , then we can use two probability measures to represent the two figures:

where , . The discrete Wasserstein distance (5) keeps the same form while the transition matrix follows different constraints:

| (6) |

Note that in both settings, the computation of Wasserstein distance is reduced to an LP, i.e.,

| (9) |

where and are two probability distributions, and . More generally, we can let and be two nonnegative vectors and be any appropriate transportation cost from to , so (9) also captures the discrete OT.

However, when the number of particles becomes large, the number of variables (entries of ) scales like , which leads to costly computation. Therefore, we will discuss random block coordinate descent methods to keep the computational workload in each iteration reasonable.

3 Random block coordinate descent for standard LP

In this section, we first generalize the LP problem (9) to a standard LP (see Eq.(10)). Then we propose a random block coordinate descent algorithm for resolution. Its almost sure convergence and linear convergence rate in expectation are analyzed. Last we introduce an inexact version that is implementable.

We consider the following standard LP problem:

| (10) |

where , , , hence is the number of constraint and is the total degree of freedom. Assume throughout that . Suppose that and denote as the feasible set. Assume that (10) is finite and has an optimal solution. For any and , denote

| (11) | ||||

| (12) |

Namely, is the optimal solution set of the linear program in (11) and is the optimal function value. We have that for any . Denote as the optimal solution set of (10). Then the following equations hold for any :

| (13) | ||||

| (14) |

Remark 2

It is worthy to mention that since follows the conditions in (12), , . Furthermore, .

Consider the block coordinate descent (BCD) method for (10):

| (15) |

where is the working set chosen at iteration . Next, we describe several approaches to select the working set .

Gauss-Southwell-q rule

Motivated by the Gauss-Southwell-q rule introduced in tseng2009coordinate , we desire to select such that

| (16) |

for some constant . Note that by (14), we have

| (17) |

where is an optimal solution of (10). Therefore, (12)-(17) imply that

| (18) |

(18) indicates that the gap of function value decays exponentially with rate , as long as we choose according to the Gauss-Southwell-q rule (16) at each iteration . A trivial choice of to satisfy (16) is and . However, this choice results in a potential large-scale subproblem in the BCD method (15), contradicting the purpose of using BCD. Instead, we should set an upper bound on the cardinality of , namely, a reasonable batch size to balance the computational effort in each iteration and convergence performance of BCD. Next, we discuss the existence of such an given an upper bound on , which necessitates the following concept.

Definition 1

A vector is conformal to if

The following Theorem confirms the existence of such an that satisfies (16), the proof of which follows closely to (tseng2009block, , Proposition 6.1).

Theorem 3.1

Suppose that . Given any , and . There exist a set satisfying and a vector conformal to such that

| (19) | ||||

| (20) |

Proof

If , then let and . We have . Therefore, both (19) and (20) are satisfied. If and , then let . Thus, satisfies and . If , then similar to the discussion in (tseng2009block, , Proposition 6.1), we have that

for some and some nonzero conformal to with , . Since , we have . Since and and , we have that . Therefore,

Denote and let , then and

where the last inequalities holds due to and .

However, it is not clear how to identify the set described in Theorem 3.1 with little computational effort for a general . Therefore, we introduced the following.

Expected Gauss-Southwell-q rule

We introduce randomness in the selection of to reduce the potential computation burden in identifying an that satisfies (16). Consider an expected Gauss-Southwell-q rule:

| (21) |

where is a constant, and denotes the history of the algorithm. Therefore, using the notations of LP (10) and BCD method (15):

| (22) |

where is an optimal solution of (10). According to (polyak1987introduction, , Lemma 10, page 49), almost surely. Moreover, if we take expectations on both sides of (22),

i.e., the expectation of function value gap converges to exponentially with rate .

Vanilla random block coordinate descent

Based on the expected Gauss-Southwell- rule, we formally propose a vanilla random block coordinate descent (RBCD0) algorithm (Algorithm 1) to solve the LP (10). Specifically, we choose the working set with full randomness, that is, randomly choose an index set of cardinality out of . Then with probability at least , the index set will be the same as or cover the working set suggested by Theorem 3.1. As a result, (21) will be satisfied with .

Based on the previous discussions, Algorithm 1 generates a sequence such that the value of converges to the optimal with probability 1. Moreover, the expectation of the optimality gap converges to exponentially. It is important to note that is only a loose lower bound of . This bound can become quite small when grows large due to the binomial coefficient . However, in our numerical experiments (c.f. Sec. 5), this lower bound is rarely reached. In Section 4, we will discuss how to further improve this bound given the specific structure of the OT problem. Before that, we first investigate an inexact extension of RBCD0.

Inexact extension of Algorithm 1.

For any and , still denote and as in (11) and (12). However, note that now does not have to be feasible. We consider the inexact version of Algorithm 1, where Step 2 in (1) is only approximately solved. We compute such that for any ,

| (23) |

where the inexactness sequences and should be nonnegative. The inexact algorithm is described as follows.

Next, we analyze the sequence generated by Algorithm 1(a) and summarize the results in the following theorem.

Theorem 3.2

Consider Algorithm 1(a). Given , suppose that and . Then we have that

-

1.

and a.s. for any ,

-

2.

for any ,

-

3.

,

where is a constant only depending on the matrix and vector , and is the constant in the expected Gauss-Southwell-q rule.

Proof

The proof of 1 is straightforward. Note that for any , we have in a.s. sense for any ,

a.s. is also a direct result of (23) (). Now we prove 2. First we argue that . This is true by the duality theory in linear programming and the fact that and the dual feasibility set is nonempty. Suppose that . Then we have

We want to estimate . Suppose that are all the extreme points of the dual feasible region and denote . Then by the theory of LP, we have

Note that we also have , then

| (24) |

Similarly we can show

which concludes 2 if we let . According to Theorem 3.1 and discussion about the exact algorithm, we still have that the expected Gauss-Southwell-q rule (21) holds with . Then

Remark 3

(i). Theorem 3.2 justifies the inexact algorithm. In particular, if we let be small, then we showed that the expected objective function value sequence also converges to the vicinity of the optimal one linearly. During the implementation, we could either let to be a sequence proportional to , or choose them equally as a sufficiently small number so that their accumulation is also insignificant. Choice of the sequence is less stringent if we occasionally project the iterates to the feasible region so that the accumulated error is offset.

(ii). In the proof we implicitly assume that the dual feasible region exists at least one extreme point. This can be implied if has linearly independent rows. In fact, we can eliminate redundant rows of . After this operation, the feasible region remains the same; the analysis and implementation of the algorithms are also similar.

(iii). Inexact versions of the algorithms proposed in Section 4 are of similar fashion and we will omit detailed and repetitive explanations.

4 Random block coordinate descent and optimal transport

Denote the cost matrix in (9). Then calculating the OT between two measures with finite support (problem (9)) is a special case of (10), where , and . The constraint matrix has the following structure:

| (30) |

where is an identity matrix, is an dimensional vector of all ’s (then ). Right hand side in (10) has the form , where can be two discrete probability distributions. Next, we discuss the property of matrix and .

Property of matrix

A nonzero is an elementary vector of if and there is no nonzero that is conformal to and . According to the definition in (30), we say that a nonzero matrix is an elementary matrix of if is an elementary vector of . For simplicity, a matrix being conformal to means being conformal to for the rest of this paper.

Now we define a set :

| a multiple of one of the following matrices: | ||

First, we state a Lemma about , the proof of which is trivial and thus omitted.

Lemma 1

Every matrix in is an elementary matrix of .

Then we show every element in the null space of is related to a matrix in .

Lemma 2

For any nonzero such that , there exists such that is conformal to .

Proof

We prove this by contradiction and induction. We assume a nonzero such that and no is conformal to .

Note that implies

| (31) |

Then without loss of generality, suppose that

Otherwise since is nonzero we can permute row/column to let and by (31) all entries in the first row/column after permutation cannot have the same sign.

By (31), the first column of must have one negative element. Suppose WLOG. The second row of must have one positive element, so suppose WLOG. Since no is conformal to , we must have otherwise is conformal to . Therefore, the principal matrix of has the following sign arrangement (after appropriate row/column permutations),

where we use , , , and to indicate that the corresponding entry is positive, nonnegative, negative, and nonpositive respectively.

If , then the above pattern is impossible as the first column sums to some positive number, leading to a contradiction with (31). Suppose that . For math induction, we assume that,

after appropriate row/column permutations, the principal matrix of has the following sign arrangement (),

| (32) |

namely,

th column of needs to have at least one negative element, otherwise, it contradicts with (31). WLOG, we suppose .

We now claim that the rest of the elements in the th row has to be non-positive, namely , . Otherwise, let be the largest index in such that . Then the submatrix takes the form,

| (33) |

and it is conformal to after row/column permutations. To see this, we can move the first column of (33) to the last. For the whole matrix , it is equivalent to move the th column and insert it between and th column and shift the resulting submatrix to the upper left corner through permutation operations.

While due to (31), th row of needs to have at least one positive element and we have just shown that , , so WLOG we suppose . Similar argument shows if there is no is conformal to , so , .

Therefore, the principal matrix of has exactly the same sign pattern as indicated by (32), after appropriate row/column permutations. So we have the induction .

Finally, we show that characterizes all the elementary matrices.

Theorem 4.1

Given any , if , then has a conformal realization (rockafellar1999network, , Section 10B), namely:

| (34) |

where are elementary matrices of and is conformal to , for all . In particular, , . Therefore, includes all the elementary matrices of .

Proof

By Lemma 2, we suppose that and is conformal to . Then can be scaled properly by such that and is conformal to . Denote and , both of them are conformal to .

By apply the same procedure to , we get such that . We can repeat this process and eventually, we have that the conformal realization (34) holds since and is strictly decreasing.

If is an elementary matrix, in previous construction of (34), we notice that and both of them are conformal to . If , we have while is conformal to . This contradicts the assumption of as an elementary matrix. When , is a multiple of the special matrix in the description of after a certain row/column permutation. Summing up, includes all the elementary matrices of .

Remark 4

For a given such that , a simple algorithm following the proof in Theorem 4.1 to find an elementary matrix conformal to will cost at most operations. We can select an appropriate such that is conformal to and . By repeating this process, we can find the conformal realization in steps. Therefore, the total number of operations needed to find the conformal realization is . In comparison, the approach proposed by tseng2010coordinate finds a conformal realization with support cardinality less than (usually, is much smaller than ) and requires operations.

Working set selection

By analyzing the structure of elementary matrices of , we will have a better idea of potential directions along which the transport cost is minimized by a large amount. This is supported by the following theorem, where we continue using notations introduced in Section 3.

Theorem 4.2

Proof

Now we discuss two approaches to carefully select the support set at iteration of the block coordinate descent method (15):

-

1.

Diagonal band. Given , denote

and construct matrix such that

(36) Therefore, has the following structure:

It is like a band of width across the diagonal, hence the name. Then we may construct and as follows:

(37) Note that .

-

2.

Submatrix. Given , obtain and such that

(38) In this case, the support of is a submatrix of size . Therefore, .

Next, we discuss two random block coordinate descent algorithms to solve the LP problem (10) with given in (30) whose working set selections are based on the two approaches discussed above.

The following result describes the convergence property of Algorithm 2.

Theorem 4.3

Proof

Given , Theorem 4.2 guarantees that there exists such that if , then (35) holds for and , i.e.,

| (39) |

Next, we will estimate the probability that holds.

Suppose that after row/column permutations and scaling of , we obtain , . Then after appropriate row and column swapping, can be written as

| (52) |

That is, elements and are nonzeros; elements and are nonzeros, for all ; elements and are nonzeros; all other elements are zeros. Obviously, support of this matrix is covered by the support of in (36). Moreover, by moving the whole support in matrix (52) downwards or to the bottom right corner, we can create at least more different matrices whose support are all covered by . These matrices can be obtained by permuting rows and columns of in in different ways. Therefore, the probability that will cover the support of is at least , and we have that

Therefore, the expected Gauss-Southwell- rule (21) holds with at least .

Remark 5

It can be shown that if is large enough and is chosen between and , then the lower bound for constant derived in Theorem 4.3 is better than the one estimated for Algorithm 1, i.e., . In fact, we have the following results.

Lemma 3

Suppose that and satisfies

and satisfies

Then for any , and , we have .

Proof

See Appendix.

Let , , . Then according to Lemma 3, for , the lower bound is larger. We believe that this is a fairly reasonable range of when grows large. This lower bound is improved because we have knowledge of the structure of the elementary matrix when solving OT problems.

As for the submatrix approach, we often find it quite efficient in numerical experiments. The optimal solution in the submatrix approach can also be decomposed in . More precisely, consider that is chosen according to (38) associated with a submatrix of size . Then, the decomposition of (more rigorously its matrix form) only involves multiples and permutations of the elementary matrices in . However, global convergence with a fixed-width submatrix is not guaranteed. In fact, there is a counterexample (see B). Therefore, we propose an algorithm that combines these two approaches together.

The convergence of Algorithm 3 is guaranteed by the next theorem.

Theorem 4.4

Proof

Given , Theorem 4.2 shows that there exists such that if , then (35) holds with and . We estimate the probability that .

First, consider the case that after row/column permutations and scaling of , we obtain , . If is chosen according to (37), then similar to discussion in Theorem 4.3, will cover the support of with probability at least . If is chosen according to (38), then will cover the support of with probability

Therefore, in this case, the probability that cover the support of is:

Then we consider the case that when we get , after row/column permutations and rescaling of . In this case, if is chosen according to (37), will cover the support of with probability at least ; if is chosen according to (38), this probability is . Therefore, in this case we have . In general, the probability that cover the support of is at least . Similar to discussion in Theorem 4.2, (21) will hold with .

Accelerated random block coordinate descent

Algorithm 4 is an accelerated random block coordinate descent (ARBCD) algorithm. It selects the working set in a different way from Algorithm 3 intermittently for acceleration purposes. At certain times, we construct based on the iterates generated by the algorithm in the past, i.e., . This vector reflects the progress achieved by running the RBCD-SDB for a few iterations and predicts the direction in which the algorithm could potentially make further improvements. This choice is analogous to the momentum concept often employed in acceleration techniques in optimization, such as in the heavy ball method and Nesterov acceleration. Algorithm 4 has a similar convergence rate as Algorithm 3 (note that the acceleration iteration occurs occasionally). We will verify its improved performance in the numerical experiments.

5 Numerical experiments

In this section, we conduct numerical experiments on various examples of OT problems111All experiments are conducted using Matlab R2021b on Dell OptiPlex 7090 with CPU: Intel(R) Core(TM) i9-10900 @ 2.80GHz (20 CPUs), 2.8GHz and RAM: 65536Mb. Data and codes are uploaded to https://github.com/yue-xie/RBCDforOT.. In Section 5.1, we compare various random block coordinate descent methods with different working set selections proposed in this article. Then, we compare the one with the best performance - ARBCD with Sinkhorn in Section 5.2.1 and an interior point inspired algorithm in Section 5.2.2. Finally, a large-scale OT problem is solved using ARBCD in Section 5.3.

5.1 Comparison between various random block coordinate descent methods with the different working set selection rules

In this subsection, we apply the proposed random block coordinate descent methods (Alg. 1 - Alg. 4) to calculate the Wasserstein distance between three pairs of distributions. We compare these algorithms to illustrate the difference between various working set selection rules. Additionally, we inspect the differences between the theoretical and actual convergence rates, as well as the solution sparsity.

Experiment settings

We compute the Wasserstein distance between a pair of 1-dim probability distributions (standard normal and uniform over ), a pair of 2-dim probability distributions (uniform over and an empirical invariant measure obtained from IPM simulation of reaction-diffusion particles in advection flows, detailed configurations can be found in wang2022deepparticle , Section 4.2, 2D cellular flow, ), and a pair of 3-dim distributions (uniform over and 3-dimensional multivariate normal distribution). When computing the Wasserstein distance between the pair of 1-dim probability distributions, we utilize their histograms (c.f. Section 2): Let . Centers of the cells are , ; , ; , , where is the pdf of standard normal; . When calculating the Wasserstein distance between the second and third pairs, we apply the point cloud setting (c.f. Section 2): Let . For each pair, use i.i.d. samples and , to approximate the two continuous probability measure respectively. Let and . In all cases, we normalize the cost matrix such that its maximal element is . Figure 1 captures these three pairs of distributions. For all cases, we first use the linprog in Matlab to find a solution with high precision (dual-simplex, constraint tolerance 1e-9, optimality tolerance 1e-10).

In 1-d case, we compare the histograms of two distributions; in 2-d and 3-d settings, we compare the samples/point clouds of two distributions.

Methods

We specify the settings of the four algorithms. All algorithms are started at the same feasible in each experiment. We solve the LP subproblems via linprog in Matlab with high precision (dual-simplex, constraint tolerance 1e-9, optimality tolerance 1e-7).

RBCD0. Algorithm 1: Vanilla random block coordinate descent. Let . Stop the algorithm after iterations.

RBCD-DB. Algorithm 2: Random block coordinate descent - diagonal band. Let . Stop the algorithm after iterations.

RBCD-SDB. Algorithm 3: Random block coordinate descent - submatrix and diagonal band. Let , and . Stop the algorithm after iterations.

ARBCD. Algorithm 4: Accelerated random block coordinate descent. Let , , and . Stop the algorithm after iterations. Note that the degree of freedom of the subproblem per iteration is , about the size of the original one.

X-axis is the number of operated iterations. Y-axis is the optimality gap (first row) or corresponding feasibility error (second row). First row of subplots in this figure shows the trajectory/progress of Alg. 1, Alg. 2, Alg. 3 and Alg. 4 when computing the Wasserstein distance between the three pairs of prob. in 1-d, 2-d, and 3-d respectively. Each algorithm is run 5 times and the curves showcase the average behavior. Second row of subplots shows the corresponding average feasibility error against computation time for the algorithms in this experiment.

Comments on Figure 2

We can see from Figure 2 that different approaches to choosing the working set of the same size can significantly affect the performance of random BCD types of methods. The curves of RBCD-DB are below those of RBCD0 in the long run, demonstrating that RBCD-DB has a better average performance. The reason for this is that RBCD0 generates the working set with full randomness, while RBCD-DB takes the structure of the elementary matrices into account. The latter makes an educated guess at the working set that decreases the objective function by a large amount. The submatrix approach (38) works very well in practice, as illustrated by the better performances of RBCD-SDB and ARBCD compared to RBCD-DB. In the long run, ARBCD outperforms RBCD-SDB, verifying the acceleration effect. It is important to note that the algorithm settings are set by default. We expect and have observed similar behaviors of the algorithms when changing their algorithm settings. Also note that the feasibility error of algorithms are controlled at a low level according to the figure. On the other hand, the curves in these numerical experiments suggest sublinear convergence rates. This observation does not contradict the theoretical linear convergence rate as long as is small enough. We will verify that the numerical experiments do not violate the lower bounds we derived for the constant in the linear convergence rates.

| 1-d case | 2-d case | 3-d case | ||||||

|---|---|---|---|---|---|---|---|---|

| iter. | iter. | iter. | ||||||

| 0 | 0.6237 | N/A | 0 | 9.3538 | N/A | 0 | 3.3456 | N/A |

| 1000 | 0.0083 | 4.3e-3 | 1000 | 0.6254 | 2.7e-3 | 1000 | 0.4746 | 2.0e-3 |

| 2000 | 0.0054 | 4.3e-4 | 2000 | 0.4773 | 2.7e-4 | 2000 | 0.3821 | 2.2e-4 |

| 3000 | 0.0045 | 1.8e-4 | 3000 | 0.4183 | 1.3e-4 | 3000 | 0.3438 | 1.1e-4 |

| 4000 | 0.0039 | 1.4e-4 | 4000 | 0.3846 | 8.3e-5 | 4000 | 0.3371 | 2.0e-5 |

| 5000 | 0.0036 | 8.0e-5 | 5000 | 0.3762 | 2.2e-5 | 5000 | 0.3350 | 6.2e-6 |

| 1-d case | 2-d case | 3-d case | ||||||

|---|---|---|---|---|---|---|---|---|

| iter. | iter. | iter. | ||||||

| 0 | 0.6237 | N/A | 0 | 9.3538 | N/A | 0 | 3.3456 | N/A |

| 1000 | 0.0130 | 3.9e-3 | 1000 | 0.6002 | 2.7e-3 | 1000 | 0.4601 | 2.0e-3 |

| 2000 | 0.0057 | 8.2e-4 | 2000 | 0.3920 | 4.3e-4 | 2000 | 0.3414 | 3.0e-4 |

| 3000 | 0.0036 | 4.6e-4 | 3000 | 0.3074 | 2.4e-4 | 3000 | 0.2878 | 1.7e-4 |

| 4000 | 0.0026 | 3.3e-4 | 4000 | 0.2592 | 1.7e-4 | 4000 | 0.2544 | 1.2e-4 |

| 5000 | 0.0020 | 2.6e-4 | 5000 | 0.2276 | 1.3e-4 | 5000 | 0.2316 | 9.4e-5 |

About Table 1 & 2

In these two tables we record the optimality gap every 1000 iterations for both RBCD0 and RBCD-DB. is the average function value at iteration , as we run the algorithms repeatedly for 5 times. The column denotes the estimation of the constant in the expected Gauss-Southwell- rule (21). It is calculated by the formula: . Values of in both Table 1 & 2 are far larger than the lower bounds for : and , corresponding to RBCD0 and RBCD-DB respectively, where . They also decrease as we run more iterations, indicating that the optimality gap shrinkage becomes less when the iterate is closer to the solution. We intend to study this phenomenon in our future work.

Y-axis records , i.e., the number of nonzero elements in . This figure shows the sparsity of in RBCD-SDB and ARBCD when computing the Wasserstein distance given the three pairs of probability distributions. Each curve represents the average over 5 repetitions.

Sparse solutions

We can observe from Figure 3 that the iterates in RBCD-SDB and ARBCD become sparse quickly and remain so. The reason for this is that the solutions of OT problems are usually sparse (for the point cloud setting, at least one of the optimal solutions satisfies because extreme points of the LP in this setting are permutation matrices divided by ), and these two algorithms can locate solutions with high accuracy relatively fast. As a result, the storage need for these two algorithms is considerably reduced after they have been run for a while. In the point cloud setting, storage complexity is typically expected to decrease from to (note that the degree of freedom of the subproblem per iteration is typically chosen as because of the diagonal band approach with ).

Choice of

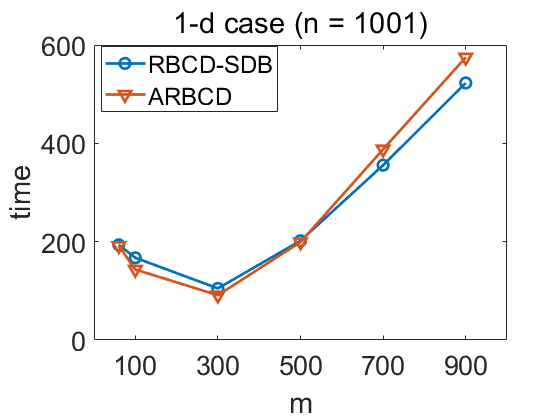

We run RBCD-SDB and ARBCD with different for a fixed to test the optimal setting of subproblem size. As is shown in Figure 4, the best choice of happens at a smaller value (10 or 30 percent of ) in 1-d case. In Figure 5, we observe similar phenomenon in 2-d case. The optimal setting of shifts to a larger value in 3-d case. We conclude that the optimal setting of may vary depending on many factors such as specific problem and subproblem solver efficiency. Based on the given results, we suggest choosing a smaller in practice, especially when there is limitation of computation resources.

Wall-clock time of algorithm RBCD-SDB and ARBCD to compute solutions of accuracy level . Algorithms are stopped if the solution accuracy is within the tolerance. Repetition is 3 and the average time is reported. .

| 50 | 150 | 250 | 350 | 450 | |||

|---|---|---|---|---|---|---|---|

| RBCD-SDB | iter. | 2.00e+04 | 3.55e+02 | 55.0 | 17.7 | 7.67 | |

| err. | 7.28e-07 | 4.95e-07 | 4.88e-07 | 4.01e-07 | 1.71e-07 | ||

| ARBCD | iter. | 1.95e+4 | 3.01e+02 | 58.0 | 22.3 | 8.33 | |

| err. | 5.68e-07 | 4.90e-07 | 4.92e-07 | 9.08e-08 | 9.49e-08 | ||

| 60 | 100 | 300 | 500 | 700 | 900 | ||

| RBCD-SDB | iter. | 2.00e+04 | 1.14e+04 | 2.21e+02 | 47.7 | 20 | 7.67 |

| err. | 3.55e-06 | 4.93e-07 | 4.93e-07 | 2.96e-07 | 3.51e-07 | 1.65e-07 | |

| ARBCD | iter. | 2.00e+04 | 9.67e+03 | 1.74e+02 | 47.3 | 22.3 | 8.33 |

| err. | 2.06e-06 | 4.94e-07 | 4.60e-07 | 4.09e-07 | 3.39e-07 | 1.17e-08 | |

| 75 | 150 | 450 | 750 | 1050 | 1350 | ||

| RBCD-SDB | iter. | 2.00e+04 | 6.80e+03 | 1.69e+02 | 50.3 | 19.3 | 7.67 |

| err. | 3.90e-06 | 4.93e-07 | 4.89e-07 | 4.18e-07 | 3.63e-07 | 1.70e-07 | |

| ARBCD | iter. | 2.00e+04 | 3.11e+03 | 1.67e+02 | 46.3 | 20 | 8 |

| err. | 1.85e-06 | 4.93e-07 | 4.86e-07 | 4.15e-07 | 3.08e-07 | 2.35e-07 | |

The average iterations and solution errors are recorded for reference. Feasibility errors are kept at a low level (below 1e-15) and omitted.

Numerical experiments from 1-d to 3-d cases. Solutions of accuracy level . Repetition is 5 and the box plot of time is shown. .

5.2 Comparison between ARBCD and algorithms in the literature

Experiment settings

We generated 8 pairs of distributions/patterns based on synthetic and real datasets. Descriptions are as follows. Note that we use histogram settings (c.f. Section 2) for datasets 1 and 2, and point cloud settings (c.f. Section 2) for other datasets. We use cost function .

Dataset 1: Uniform distribution to standard normal distribution over . Similar to the 1-d case in Section 5.1.

Dataset 2: Uniform distribution to a randomly shuffled standard normal distribution over .222Similar to the 1-d case in Section 5.1. We randomly shuffled the weights of the normal distribution histogram.

Dataset 3: Uniform distribution over to an empirical invariant measure. Similar to the 2-d case in Section 5.1.

Dataset 4: Distribution of to distribution of , where , and conform uniform distributions on and are independent.

Dataset 5: Similar to Dataset 4, with .

Dataset 6: Distribution of to distribution of , where , conforms a uniform distribution on and conforms a uniform distribution on .

Dataset 7: Distribution of to distribution of , where conforms uniform distribution over and conforms uniform distribution over .

Dataset 8: Distibution of a “cylinder” to a ”spiral”, see Figure 6.

In all cases, we normalize the cost matrix such that its maximal element is . For all cases, we use the linprog in Matlab to find a solution with high precision (dual-simplex, constraint tolerance 1e-9, optimality tolerance 1e-10).

5.2.1 Comparison with Sinkhorn

Methods

Implementation of Sinkhorn and ARBCD are specified as follows.

Sinkhorn. The algorithm proposed in cuturi2013sinkhorn to compute Wasserstein distance. Let be the coefficient of the entropy term. We let as suggested in dvurechensky2018computational . We consider the settings . Iterations of Sinkhorn are projected onto the feasible region using a rounding procedure: Algorithm 2 in altschuler2017near . Note that this projection step is added only for evaluation purposes because Sinkhorn does not provide feasible solutions if early stopped. It does not affect Sinkhorn’s main steps or Sinkhorn’s convergence at all. A similar approach is used for evaluation in jambulapati2019direct . In addition, we take all the updates to log space and use the LogSumExp function to avoid numerical instability issues. We stop Sinkhorn after 300000 iterations when and 100000 iterations if .

ARBCD. Algorithm 4: Accelerated random block coordinate descent. Let when and when . Let , and . Stop the algorithm after iterations. To be fair, we also project the solution in each iteration onto the feasible region via the rounding procedure. LP subproblems are solved via linprog in Matlab with high precision (dual-simplex, constraint tolerance 1e-9).

X-axis is the wall-clock time in seconds. Y-axis is the optimality gap . This figure shows the trajectory/progress of Algorithm 4: ARBCD and Sinkhorn with different settings when computing the Wasserstein distance between eight pairs of probability. ARBCD is run 5 times in each experiment and the curves showcase the average behavior.

This figure shows the transport plan for dataset 1. In each plot, the bottom distribution is uniform and the top is standard normal. Each line segment in between represents mass transported between a pair of points. The darker the line is, the more mass is transported. To plot the plans more clearly, we select every other mass point from to (so only include mass points). The overall transport plans from to are symmetric plots so we show them in this way due to presentation clarity.

| Dataset | method | time(s) | iter. | gap | feas.err. | subproblem size |

|---|---|---|---|---|---|---|

| ARBCD | 82.63 | 2326.7 | 4.9386e-07 | 4.1753e-17 | 22500 | |

| IPM1 | 7.539 | 198 | 3.36713-08 | 1.8924e-17 | 20890 | |

| 1 | IPM2 | 342.6 | 2000 | 4.3408-04 | 2.0889e-17 | 96550 |

| IPM3 | 5.044 | 19 | 1.2319-07 | 5.7525e-17 | 485194 | |

| IPM4 | 11.01 | 21 | 2.8982e-07 | 8.3340e-17 | 1000000 | |

| ARBCD | 240.3 | 10000 | 8.6011e-09 | 8.4518e-17 | 22500 | |

| IPM1 | 413.9 | 2000 | 1.0562-04 | 2.5704e-17 | 20890 | |

| 2 | IPM2 | 0.8815 | 16 | 1.1066-10 | 2.6584e-17 | 96550 |

| IPM3 | 5.923 | 20 | 1.7153-09 | 5.5638e-17 | 485194 | |

| IPM4 | 11.02 | 20 | 3.0871e-09 | 7.6026e-17 | 1000000 | |

| ARBCD | 30.80 | 375 | 1.2787e-04 | 2.3256e-17 | 22500 | |

| IPM1 | N/A | N/A | N/A | N/A | 21055 | |

| 3 | IPM2 | 67.43 | 2000 | 0.0146 | 2.1107e-17 | 92666 |

| IPM3 | 313.7 | 2000 | 0.0110 | 1.3510e-17 | 465075 | |

| IPM4 | 6.696 | 13 | 1.2655e-04 | 6.3473e-17 | 1000000 | |

| ARBCD | 145.2 | 4404.3 | 2.0519e-05 | 1.8927e-17 | 22500 | |

| IPM1 | N/A | N/A | N/A | N/A | 21312 | |

| 4 | IPM2 | N/A | N/A | N/A | N/A | 95639 |

| IPM3 | 345.7 | 2000 | 0.0083 | 1.5114e-17 | 474798 | |

| IPM4 | 7.716 | 15 | 2.0397e-05 | 7.2654e-17 | 1000000 | |

| ARBCD | 56.24 | 569.3 | 2.0412e-05 | 2.1927e-17 | 22500 | |

| IPM1 | N/A | N/A | N/A | N/A | 19988 | |

| 5 | IPM2 | N/A | N/A | N/A | N/A | 94468 |

| IPM3 | 320.6 | 2000 | 0.0050 | 1.4802e-17 | 492856 | |

| IPM4 | 7.616 | 15 | 8.6357e-06 | 6.6523e-17 | 1000000 | |

| ARBCD | 22.20 | 265 | 2.3475e-04 | 2.3622e-17 | 22500 | |

| IPM1 | N/A | N/A | N/A | N/A | 20143 | |

| 6 | IPM2 | 417.6 | 2000 | 0.0032 | 1.4814e-17 | 94072 |

| IPM3 | 337.9 | 2000 | 0.0065 | 1.3566e-17 | 482269 | |

| IPM4 | 5.438 | 11 | 1.4044e-04 | 6.2801e-17 | 1000000 | |

| ARBCD | 63.88 | 342.3 | 7.4215e-05 | 2.0341e-17 | 22500 | |

| IPM1 | N/A | N/A | N/A | N/A | 20139 | |

| 7 | IPM2 | N/A | N/A | N/A | N/A | 99792 |

| IPM3 | 278.4 | 2000 | 0.0221 | 1.9256e-17 | 486651 | |

| IPM4 | 5.136 | 11 | 5.7172e-05 | 5.5542e-17 | 1000000 | |

| ARBCD | 71.44 | 439.3 | 1.9715e-05 | 2.4507e-17 | 22500 | |

| IPM1 | N/A | N/A | N/A | N/A | 10702 | |

| 8 | IPM2 | N/A | N/A | N/A | N/A | 97305 |

| IPM3 | 219.1 | 2000 | 9.3439e-04 | 2.3205e-17 | 499786 | |

| IPM4 | 5.303 | 10 | 1.7165e-05 | 7.5243e-17 | 1000000 |

For ARBCD, the average iterations/time/gap/feasibility error are recorded for reference. For IPM, results of four different settings are recorded. The initial reduced problem size is reflected by the subproblem size column.

Comments on Figure 7

We can observe the following from Figure 7: although Sinkhorn with larger may converge fast, the solution accuracy is also lower. In fact, this is true for all Sinkhorn-based algorithms because the optimization problem is not exact - it has an extra entropy term. Therefore, the larger or is chosen, the less accurate the solution becomes. The discrepancy in accuracy does matter, as can be seen by inspecting the solution quality in Figure 8. On the other hand, when is set smaller, the convergence of Sinkhorn becomes slower. As can be seen from the plots, when or , Sinkhorn converges faster than ARBCD; when , Sinkhorn is comparable to ARBCD; when , Sinkhorn is slower than ARBCD. In conclusion, if relatively higher precision is desired, ARBCD is comparable with Sinkhorn. Moreover, note that here we solve the subproblems in ARBCD using Matlab built-in solver linprog. ARBCD can be faster if more efficient subproblem solvers are applied.

5.2.2 Comparison with an interior point-inspired algorithm

In this experiment we continue using the 8 datasets, except that Dataset 1 has .

Methods

Implementation of ARBCD and IPM are specified as follows.

ARBCD. Algorithm 4. Let , , and . We stop the algorithm when or when iteration reaches the maximum 10000. Other settings are similar to the experiment in Section 5.2.1. The algorithm outputs the last iterate.

IPM. A recent interior point-inspired algorithm proposed in zanetti2023interior . The Matlab code is directly from the github page in this paper (https://github.com/INFORMSJoC/2022.0184). We have made minimal modification for comparison. We stop the algorithm when or when the iteration hits 2000. Run four different settings separately, where the initial reduced problem’s sizes are approximately , , and () correspondingly. For fairness, we also project the solution in each iteration onto the feasible region via the rounding procedure. The algorithm outputs the solution with the best function value gap.

Comments on Table 4

We could see that the performance of ARBCD is better than IPM1, comparable to IPM2 and IPM3. IPM4 has the best performance but its subproblem size is also large. In fact, our observation of the memory storage is IPM4 IPM3 IPM2 IPM1 ARBCD. This is consistent with the subproblem size column. In practice, memory consumption of IPM4 is large, contradicting the goal of the authors in zanetti2023interior to save memory. In zanetti2023interior , the authors suggest the setting of IPM1. We also find that when implementing IPM1,IPM2,IPM3, the algorithms develop numerical instabilities, which is reported as N/A in the table. This is consistent with the result in zanetti2023interior (Figure 4) where ratio of the IPM algorithm finding an ideal solution is below .

We apply ARBCD to solve the large-scale 1-d problem (). y-axis of the left plot shows the optimality gap , y-axis of the right plot shows the feasibility error , and x-axis records the iteration number. ARBCD is repeated for 3 times and average results are reported.

5.3 Test on a large-scale OT problem

In this subsection, we generate a pair of 1-dim probability distributions with large discrete support sets (). For the first distribution, locations of the discrete support () are evenly aligned between , and their weights/probability are uniformly distributed (i.e., ). For the other distribution, locations of the discrete support are determined as , where is a random permutation of , and is a random variable that conforms to a uniform distribution over . Weights/probability are determined as , where is the pdf of the standard normal. The benchmark optimal solution is quickly computed via a closed-form formula for 1-d OT problem (). For ARBCD, we use the setting , , and .

Comments on Figure 9

The figure showcases the average behavior of ARBCD within iterations. It is able to locate a solution such that . The convergence is linear by observing the trajectory. We also want to point out that Gurobi 10.01 (academic license) runs out of memory on the desktop we use for numerical experiments. Indeed, saving memory is one of the merits that motivate us to consider RBCD methods.

6 Conclusion

In this paper, we investigate the RBCD method to solve LP problems, including OT problems. In particular, an expected Gauss-Southwell- rule is proposed to select the working set at iteration . It guarantees almost sure convergence and linear convergence rate and is satisfied by all algorithms proposed in this work. We first develop a vanilla RBCD, called RBCD0, to solve general LP problems. Its inexact version is also investigated. Then, by examining the structure of the matrix in the linear system of OT, characterizing elementary matrices of and identifying conformal realization of any matrix , we refine the working set selection. We use two approaches - diagonal band and submatrix - for constructing and employ an acceleration technique inspired by the momentum concept to improve the performance of RBCD0. In our numerical experiments, we compare all proposed RBCD methods and verify the acceleration effects as well as the sparsity of solutions. We also demonstrate the gap between the theoretical convergence rate and the practical one. We discuss the choice of the subproblem size. Furthermore, we run ARBCD, the best among all other methods, against others and also on large-scale OT problems. The results show the advantages of our method in finding relatively accurate solutions to OT problems and saving memory. For future work, we plan to extend our method to handle continuous measures and further improve it through parallelization and multiscale strategies, among other approaches.

Acknowledgements

The research of YX is supported by Guangdong Province Fundamental and Applied Fundamental Research Regional Joint Fund, (Project 2022B1515130009), and the start-up fund of HKU-IDS. The research of ZZ is supported by Hong Kong RGC grants (Projects 17300318 and 17307921), the National Natural Science Foundation of China (Project 12171406), Seed Fund for Basic Research (HKU), an R&D Funding Scheme from the HKU-SCF FinTech Academy, and Seed Funding for Strategic Interdisciplinary Research Scheme 2021/22 (HKU).

Declarations

The authors have no competing interests to declare that are relevant to the content of this article.

Data availability

The datasets generated during the current study are available in the GitHub repository,

https://github.com/yue-xie/RBCDforOT.

Appendix A Proof of Lemma 3

Proof

Suppose that

| (53) |

Then

where the third inequality holds because and . So we only need to prove (53). Note that

| (54) |

The last inequality holds because for and . Meanwhile, right hand side of (53) satisfies the following:

| (55) |

In order to show (53), we only need to confirm . By observation, this is equivalent to

The last inequality is assumed.

Appendix B A counterexample of interest

Example 1

Consider LP problem (9) with , . Let

where , such that . It can be easily seen that the optimal solution is . Suppose that . If we use the submatrix approach (38) with (largest number less than ) to select a working set , then the algorithm will be stuck at . It is not globally convergent. This cost matrix corresponds to the following case of transporting a three-point distribution to another one:

The dashed hexagon has an edge length of . Transport point distribution , and to that of , and .

References

- (1) Altschuler, J., Niles-Weed, J., Rigollet, P.: Near-linear time approximation algorithms for optimal transport via Sinkhorn iteration. Advances in Neural Information Processing Systems 30 (2017)

- (2) Arjovsky, M., Chintala, S., Bottou, L.: Wasserstein generative adversarial networks. In: International Conference on Machine Learning, pp. 214–223. PMLR (2017)

- (3) Beck, A.: The 2-coordinate descent method for solving double-sided simplex constrained minimization problems. Journal of Optimization Theory and Applications 162(3), 892–919 (2014)

- (4) Beck, A., Tetruashvili, L.: On the convergence of block coordinate descent type methods. SIAM Journal on Optimization 23(4), 2037–2060 (2013)

- (5) Benamou, J., Brenier, Y.: A computational fluid mechanics solution to the Monge-Kantorovich mass transfer problem. Numerische Mathematik 84(3), 375–393 (2000)

- (6) Benamou, J.D., Carlier, G., Cuturi, M., Nenna, L., Peyré, G.: Iterative bregman projections for regularized transportation problems. SIAM Journal on Scientific Computing 37(2), A1111–A1138 (2015)

- (7) Benamou, J.D., Collino, F., Mirebeau, J.M.: Monotone and consistent discretization of the Monge–Ampère operator. Mathematics of computation 85(302), 2743–2775 (2016)

- (8) Benamou, J.D., Froese, B.D., Oberman, A.M.: Numerical solution of the optimal transportation problem using the Monge–Ampère equation. Journal of Computational Physics 260, 107–126 (2014)

- (9) Berahas, A.S., Bollapragada, R., Nocedal, J.: An investigation of Newton-sketch and subsampled Newton methods. Optimization Methods and Software 35(4), 661–680 (2020)

- (10) Blondel, M., Seguy, V., Rolet, A.: Smooth and sparse optimal transport. In: International Conference on Artificial Intelligence and Statistics, pp. 880–889. PMLR (2018)

- (11) Bonafini, M., Schmitzer, B.: Domain decomposition for entropy regularized optimal transport. Numerische Mathematik 149(4), 819–870 (2021)

- (12) Brenier, Y.: Polar factorization and monotone rearrangement of vector-valued functions. Communications on Pure and Applied Mathematics 44(4), 375–417 (1991)

- (13) Chen, C., He, B., Ye, Y., Yuan, X.: The direct extension of ADMM for multi-block convex minimization problems is not necessarily convergent. Mathematical Programming 155(1), 57–79 (2016)

- (14) Chizat, L., Peyré, G., Schmitzer, B., Vialard, F.X.: Scaling algorithms for unbalanced optimal transport problems. Mathematics of Computation 87(314), 2563–2609 (2018)

- (15) Cuturi, M.: Sinkhorn distances: Lightspeed computation of optimal transport. Advances in Neural Information Processing Systems 26 (2013)

- (16) Dvurechensky, P., Gasnikov, A., Kroshnin, A.: Computational optimal transport: Complexity by accelerated gradient descent is better than by Sinkhorn’s algorithm. In: International Conference on Machine Learning, pp. 1367–1376. PMLR (2018)

- (17) Facca, E., Benzi, M.: Fast iterative solution of the optimal transport problem on graphs. SIAM Journal on Scientific Computing 43(3), A2295–A2319 (2021)

- (18) Gasnikov, A.V., Gasnikova, E., Nesterov, Y.E., Chernov, A.: Efficient numerical methods for entropy-linear programming problems. Computational Mathematics and Mathematical Physics 56(4), 514–524 (2016)

- (19) Genevay, A., Cuturi, M., Peyré, G., Bach, F.: Stochastic optimization for large-scale optimal transport. Advances in neural information processing systems 29 (2016)

- (20) Genevay, A., Peyre, G., Cuturi, M.: Learning generative models with sinkhorn divergences. In: A. Storkey, F. Perez-Cruz (eds.) Proceedings of the Twenty-First International Conference on Artificial Intelligence and Statistics, Proceedings of Machine Learning Research, vol. 84, pp. 1608–1617. PMLR (2018)

- (21) Gerber, S., Maggioni, M.: Multiscale strategies for computing optimal transport. Journal of Machine Learning Research 18 (2017)

- (22) Gottschlich, C., Schuhmacher, D.: The shortlist method for fast computation of the earth mover’s distance and finding optimal solutions to transportation problems. PloS one 9(10), e110214 (2014)

- (23) Guminov, S., Dvurechensky, P., Tupitsa, N., Gasnikov, A.: On a combination of alternating minimization and Nesterov’s momentum. In: International Conference on Machine Learning, pp. 3886–3898. PMLR (2021)

- (24) Gurbuzbalaban, M., Ozdaglar, A., Parrilo, P.A., Vanli, N.: When cyclic coordinate descent outperforms randomized coordinate descent. Advances in Neural Information Processing Systems 30 (2017)

- (25) Haker, S., Zhu, L., Tannenbaum, A., Angenent, S.: Optimal mass transport for registration and warping. International Journal of Computer Vision 60(3), 225–240 (2004)

- (26) He, B., Yuan, X.: On the convergence rate of the Douglas–Rachford alternating direction method. SIAM Journal on Numerical Analysis 50(2), 700–709 (2012)

- (27) Huang, M., Ma, S., Lai, L.: A Riemannian block coordinate descent method for computing the projection robust Wasserstein distance. In: International Conference on Machine Learning, pp. 4446–4455. PMLR (2021)

- (28) Jambulapati, A., Sidford, A., Tian, K.: A direct iteration parallel algorithm for optimal transport. Advances in Neural Information Processing Systems 32 (2019)

- (29) Jordan, R., Kinderlehrer, D., Otto, F.: The variational formulation of the Fokker–Planck equation. SIAM Journal on Mathematical Analysis 29(1), 1–17 (1998)

- (30) Lei, N., Su, K., Cui, L., Yau, S.T., Gu, X.D.: A geometric view of optimal transportation and generative model. Computer Aided Geometric Design 68, 1–21 (2019)

- (31) Li, W., Yin, P., Osher, S.: Computations of optimal transport distance with Fisher information regularization. Journal of Scientific Computing 75(3), 1581–1595 (2018)

- (32) Lin, T., Ho, N., Jordan, M.I.: On the efficiency of entropic regularized algorithms for optimal transport. Journal of Machine Learning Research 23(137), 1–42 (2022)

- (33) Ling, H., Okada, K.: An efficient earth mover’s distance algorithm for robust histogram comparison. IEEE transactions on pattern analysis and machine intelligence 29(5), 840–853 (2007)

- (34) Liu, Y., Wen, Z., Yin, W.: A multiscale semi-smooth Newton method for optimal transport. Journal of Scientific Computing 91(2), 39 (2022)

- (35) Lu, Z., Xiao, L.: On the complexity analysis of randomized block-coordinate descent methods. Mathematical Programming 152(1), 615–642 (2015)

- (36) Mandad, M., Cohen-Steiner, D., Kobbelt, L., Alliez, P., Desbrun, M.: Variance-minimizing transport plans for inter-surface mapping. ACM Transactions on Graphics (ToG) 36(4), 1–14 (2017)

- (37) Natale, A., Todeschi, G.: Computation of optimal transport with finite volumes. ESAIM: Mathematical Modelling and Numerical Analysis 55(5), 1847–1871 (2021)

- (38) Necoara, I., Clipici, D.: Parallel random coordinate descent method for composite minimization: Convergence analysis and error bounds. SIAM Journal on Optimization 26(1), 197–226 (2016)

- (39) Necoara, I., Nesterov, Y., Glineur, F.: Random block coordinate descent methods for linearly constrained optimization over networks. Journal of Optimization Theory and Applications 173(1), 227–254 (2017)

- (40) Necoara, I., Takáč, M.: Randomized sketch descent methods for non-separable linearly constrained optimization. IMA Journal of Numerical Analysis 41(2), 1056–1092 (2021)

- (41) Nesterov, Y.: Efficiency of coordinate descent methods on huge-scale optimization problems. SIAM Journal on Optimization 22(2), 341–362 (2012)

- (42) Otto, F.: The geometry of dissipative evolution equations: the porous medium equation. Taylor & Francis (2001)

- (43) Peleg, S., Werman, M., Rom, H.: A unified approach to the change of resolution: Space and gray-level. IEEE Transactions on Pattern Analysis and Machine Intelligence 11(7), 739–742 (1989)

- (44) Perrot, M., Courty, N., Flamary, R., Habrard, A.: Mapping estimation for discrete optimal transport. Advances in Neural Information Processing Systems 29 (2016)

- (45) Peyré, G., Cuturi, M.: Computational optimal transport. Foundations and Trends in Machine Learning 11(5-6), 355–607 (2019)

- (46) Polyak, B.T.: Introduction to optimization. Optimization Software, Inc., Publications Division, New York (1987)

- (47) Qu, Z., Richtárik, P., Takác, M., Fercoq, O.: SDNA: stochastic dual Newton ascent for empirical risk minimization. In: International Conference on Machine Learning, pp. 1823–1832. PMLR (2016)

- (48) Richtárik, P., Takáč, M.: Iteration complexity of randomized block-coordinate descent methods for minimizing a composite function. Mathematical Programming 144(1), 1–38 (2014)

- (49) Richtárik, P., Takáč, M.: Parallel coordinate descent methods for big data optimization. Mathematical Programming 156(1), 433–484 (2016)

- (50) Rockafellar, R.T.: Network flows and monotropic optimization, vol. 9. Athena scientific (1999)

- (51) Schmitzer, B.: A sparse multiscale algorithm for dense optimal transport. Journal of Mathematical Imaging and Vision 56, 238–259 (2016)

- (52) Schmitzer, B.: Stabilized sparse scaling algorithms for entropy regularized transport problems. SIAM Journal on Scientific Computing 41(3), A1443–A1481 (2019)

- (53) Sinkhorn, R.: A relationship between arbitrary positive matrices and doubly stochastic matrices. The annals of mathematical statistics 35(2), 876–879 (1964)

- (54) Solomon, J., De Goes, F., Peyré, G., Cuturi, M., Butscher, A., Nguyen, A., Du, T., Guibas, L.: Convolutional Wasserstein distances: Efficient optimal transportation on geometric domains. ACM Transactions on Graphics (ToG) 34(4), 1–11 (2015)

- (55) Sun, R., Ye, Y.: Worst-case complexity of cyclic coordinate descent: gap with randomized version. Mathematical Programming 185(1), 487–520 (2021)

- (56) Toselli, A., Widlund, O.: Domain decomposition methods-algorithms and theory, vol. 34. Springer Science & Business Media (2004)

- (57) Tseng, P., Yun, S.: Block-coordinate gradient descent method for linearly constrained nonsmooth separable optimization. Journal of Optimization Theory and Applications 140(3), 513–535 (2009)

- (58) Tseng, P., Yun, S.: A coordinate gradient descent method for nonsmooth separable minimization. Mathematical Programming 117(1), 387–423 (2009)

- (59) Tseng, P., Yun, S.: A coordinate gradient descent method for linearly constrained smooth optimization and support vector machines training. Computational Optimization and Applications 47(2), 179–206 (2010)

- (60) Villani, C.: Topics in optimal transportation, vol. 58. American Math. Soc. (2021)

- (61) Wang, Z., Xin, J., Zhang, Z.: DeepParticle: learning invariant measure by a deep neural network minimizing Wasserstein distance on data generated from an interacting particle method. Journal of Computational Physics p. 111309 (2022)

- (62) Wijesinghe, J., Chen, P.: Matrix balancing based interior point methods for point set matching problems. SIAM Journal on Imaging Sciences 16(3), 1068–1105 (2023)

- (63) Wright, S.: Primal-dual interior-point methods. SIAM (1997)

- (64) Xie, Y., Shanbhag, U.V.: SI-ADMM: A stochastic inexact ADMM framework for stochastic convex programs. IEEE Transactions on Automatic Control 65(6), 2355–2370 (2019)

- (65) Xie, Y., Shanbhag, U.V.: Tractable ADMM schemes for computing KKT points and local minimizers for -minimization problems. Computational Optimization and Applications 78(1), 43–85 (2021)

- (66) Xie, Y., Wang, X., Wang, R., Zha, H.: A fast proximal point method for computing exact Wasserstein distance. In: Uncertainty in artificial intelligence, pp. 433–453. PMLR (2020)

- (67) Yang, L., Li, J., Sun, D., Toh, K.C.: A fast globally linearly convergent algorithm for the computation of wasserstein barycenters. The Journal of Machine Learning Research 22(1), 984–1020 (2021)

- (68) Zanetti, F., Gondzio, J.: An interior point–inspired algorithm for linear programs arising in discrete optimal transport. INFORMS Journal on Computing (2023)