inkscapepath=i/svg-inkscape/ \svgpathsvg/

RadarCam-Depth: Radar-Camera Fusion for Depth Estimation

with Learned Metric Scale

Abstract

We present a novel approach for metric dense depth estimation based on the fusion of a single-view image and a sparse, noisy Radar point cloud. The direct fusion of heterogeneous Radar and image data, or their encodings, tends to yield dense depth maps with significant artifacts, blurred boundaries, and suboptimal accuracy. To circumvent this issue, we learn to augment versatile and robust monocular depth prediction with the dense metric scale induced from sparse and noisy Radar data. We propose a Radar-Camera framework for highly accurate and fine-detailed dense depth estimation with four stages, including monocular depth prediction, global scale alignment of monocular depth with sparse Radar points, quasi-dense scale estimation through learning the association between Radar points and image patches, and local scale refinement of dense depth using a scale map learner. Our proposed method significantly outperforms the state-of-the-art Radar-Camera depth estimation methods by reducing the mean absolute error (MAE) of depth estimation by 25.6% and 40.2% on the challenging nuScenes dataset and our self-collected ZJU-4DRadarCam dataset, respectively. Our code and dataset will be released at https://github.com/MMOCKING/RadarCam-Depth.

I Introduction

Perceiving the environment is critically important for autonomous driving, where accurate depth estimation is fundamental for dense reconstruction, 3D detection, and obstacle avoidance. Cameras and range sensors have been widely used for perceiving dense depth. Learned monocular depth (mono-depth) estimation methods based on CNN networks [1, 2, 3, 4, 5] have been prevalent in recent years due to their versatile applicability and plausible accuracy. They benefit from the solid contextual priors from extensive training on diverse datasets. While mono-depth networks excel in estimating up-to-scale depth, they fail to predict the accurate metric scale of depth. This limit arises from the inherent challenge of capturing scale with single-view cameras and the difficulty of learning the diverse scale in complex scenarios.

Range sensors like LiDAR and Radar can provide metric scale information of the scene [6, 7]. While LiDAR is renowned for its ability to generate dense and accurate point clouds, its widespread deployment faces challenges due to high costs, power consumption, and data bandwidth limits. In contrast, 3D Radar has witnessed remarkable advancements, making it attractive in autonomous driving, owing to its affordability, low power consumption, and high resilience in challenging fog and smoke scenarios. The emerging 4D Radar additionally provides an elevation dimension with extended applicability. Fusing data from a single camera and a Radar for metric dense depth estimation becomes a promising research avenue [8, 9, 10, 11, 12, 13]. It holds substantial significance in autonomous driving since its appealing characteristics, like cost-effectiveness, complementarity in sensing capabilities, and remarkable robustness and reliability.

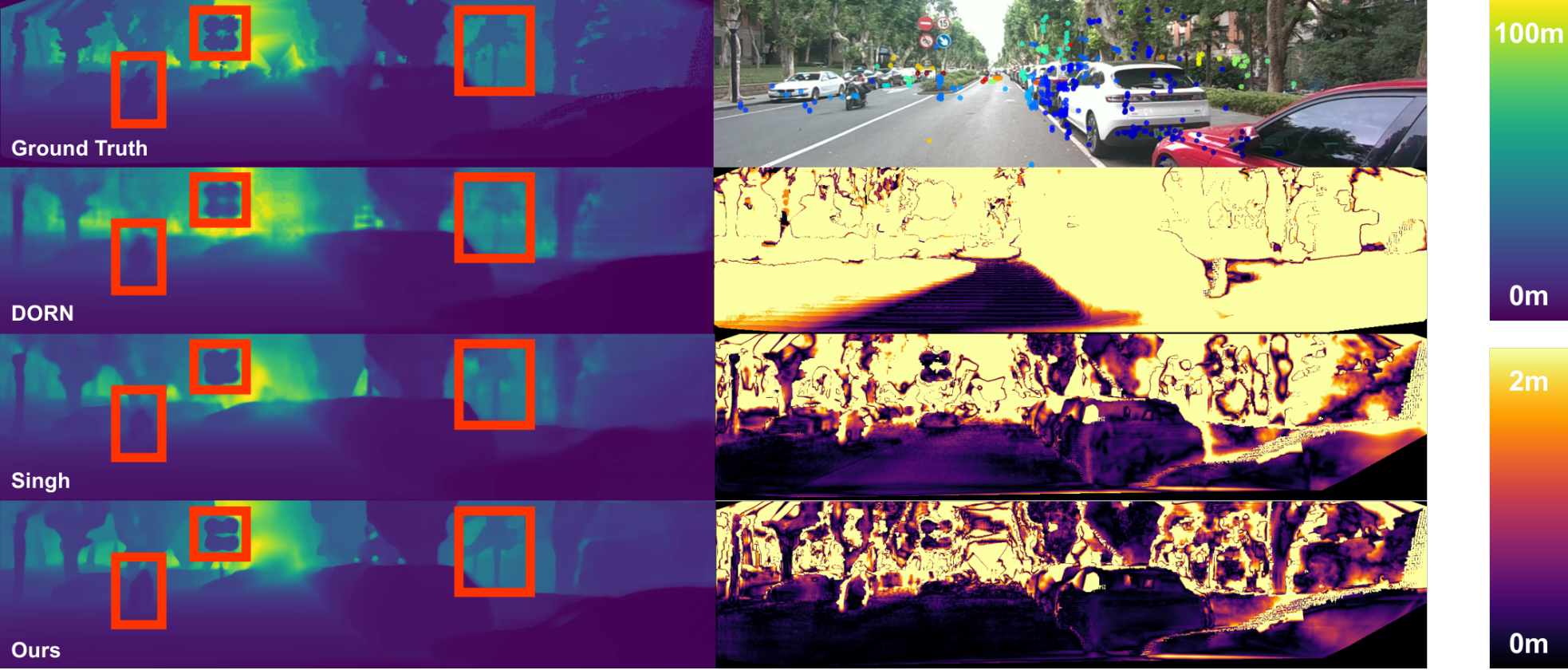

However, sparsity, substantial noise in Radar data, and the imperfect cross-modal association between Radar points and image pixels pose challenges for dense depth estimation. Previous Radar-Camera methods treat the dense depth estimation as a depth completion problem [10, 12]. In these methods, the initial step involves associating the Radar depth to the camera pixels, generating a sparse or semi-dense depth map, which is then completed by an Unet-like network with a fusion of the Radar depth and image data. In this paper, we propose a novel paradigm, RadarCam-Depth, which capitalizes on robust and versatile scaleless monocular depth prediction and learns to assign metric dense scales to the mono-depth with Radar data. Our novel paradigm offers two main benefits: (i) We circumvent the direct fusion of raw data or encodings of heterogeneous Radar and camera data, thereby preventing aliasing artifacts and preserving high-fidelity fine details in dense depth estimation (see Fig.1). (ii) Unlike learning the depth completion with a wide convergence basin, we essentially learn to complete the sparse scale obtained by aligning Radar depth with the mono-depth, which is more accessible and conducive to effective learning.

The primary contributions of this work are as follows: (i) We introduce the first approach that enhances the highly generalizable, scaleless mono-depth prediction with the dense metric scale intricately inferred from the noisy and sparse Radar data. (ii) We present a novel metric dense depth estimation framework that effectively fuses heterogeneous Radar and camera data. Our framework comprises four stages: mono-depth prediction, global scale alignment of the monocular depth, Radar-Camera quasi-dense scale estimation, and scale map learner for refining the quasi-dense scale locally. (iii) The proposed method is extensively tested on the nuScenes benchmark and our self-collected ZJU-4DRadarCam dataset. It outperforms the state-of-the-art (SOTA) techniques, substantially enhancing Radar-Camera dense depth estimation with high metric accuracy and strong generalizability. (iv) To fertilize future research in robust depth estimation, we will release our code and high-quality ZJU-4DRadarCam dataset, including raw 4D Radar data, RGB images, and meticulously generated ground truth from LiDAR measurements.

II RELATED WORK

II-A Monocular Depth Estimation

Monocular depth estimation is a challenging task due to the inherent scale ambiguity. Many researchers have tried to address this issue by integrating it with optical flow [14], uncertainty estimation [15], semantic segmentation [16], instance segmentation [17] and visual odometry [18]. Although some previous studies [19, 20, 4, 3] have achieved promising results in affine-invariant scaleless depth estimation across diverse datasets, recovering the metric scale remains a significant challenge. Some existing methods rely on inertial data to provide scale. To enhance the generalization, VI-SLAM [21] warps the input image to match the orientation prevailing in the training dataset. CodeVIO [22] proposes a tightly coupled VIO system with optimizable learned dense depth. It jointly estimates VIO poses and optimizes the predicted and encoded dense depth of specific keyframes efficiently. Xie et al. [23] utilize a flow-to-depth layer to refine camera poses and generate depth proposals. They solve a multi-frame triangulation problem to enhance the estimation accuracy. Recently, Wofk et al. [5] introduced a framework for metric dense depth estimation from the VIO sparse depth and monocular depth prediction, which inspires our work. They first globally align the scaleless mono-depth with the metric VIO sparse depth and then learn to refine the dense scale of the globally aligned mono-depth.

II-B Depth Estimation from Radar-Camera Fusion

The fusion of Radar and camera data for metric depth estimation is an active research topic. Lin et al. [8] introduce a two-stage CNN-based pipeline that combines Radar and camera inputs to denoise Radar signals and estimate dense depth. Long et al. [9] propose a Radar-2-Pixel (R2P) network that utilizes radial Doppler velocity and induced optical flow from images to associate Radar points with corresponding pixel regions, enabling the synthesis of full-velocity information. They also achieve image-guided depth completion using Radar and video data [10]. Another approach, DORN [11] proposed by Lo et al., extends Radar points in the elevation dimension and applies deep ordinal regression network-based [24] feature fusion. Unlike other methods, R4dyn [13] creatively incorporates Radar as a weakly supervised signal into a self-supervised framework and employs Radar as an additional input to enhance the robustness. However, their method primarily focuses on vehicle targets and does not fully correlate all Radar points with a larger image area, resulting in lower depth accuracy. Recently, Singh et al. [12] present a method that relies solely on a single image frame and Radar point cloud. Their first-stage network infers the confidence scores of Radar-Pixel correspondence, generating a semi-dense depth map. They further employ a gated fusion network to control the fusion of multi-modal Radar-Camera data and predict the dense depth. However, all the above methods directly encode and concatenate the ambiguous Radar depth and images, confusing the learning pipeline and resulting in suboptimal depth estimation.

III METHODOLOGY

Our goal is to recover the dense depth from a pair of RGB image and Radar point cloud in the image coordinate. and denote the height and width of the image, respectively. Either 3D or 4D Radar usually has a small field of view with ambiguous sparse data deteriorated by intensive noises. For cross-modal fusion, it is straightforward to project Radar points onto the image plane, generating Radar depth. However, direct fusion of the inherently ambiguous and sparse Radar depth with images, achieved by concatenating their encodings or raw data, can confuse the learning pipeline [25, 26, 27, 12], resulting in aliasing and other undesirable artifacts in the estimated depth. In this paper, we propose to get the scaleless dense depth with existing versatile monocular depth prediction networks, then learn to augment scaleless depth with accurate metric scales from Radar data.

The framework of our Radar-Camera depth estimation method consists of four stages: scaless monocular depth prediction, global alignment (GA) of mono-depth, quasi-dense scale estimation, and scale map learner (SML) for refining dense scale locally, as shown in Fig.2.

III-A Monocular Depth Prediction

We employ off-the-shelf networks to predict robust and accurate scaleless depth from a single-view image. The high quality of the mono-depth prediction furnishes a solid foundation for scale-oriented learning. In this research, we harnessed SOTA mono-depth networks, like MiDaS v3.1 [19, 3] and DPT-Hybrid [20] with pre-trained weights on mixed diverse datasets. Both networks are built upon transformer architecture [28] and trained with scale and offset-invariant losses, ensuring strong generalization. They infer the relative depth relationship between pixels, producing dense depth (see Fig.3). Notably, our framework is versatile and compatible with arbitrary mono-depth prediction networks that predict depth , inverse depth or others.

III-B Global Alignment

We align the scaleless mono-depth prediction with the Radar depth originating from projecting raw Radar points , by a global scaling factor and optional offset . The global aligned metric depth is calculated by . Then, it is fed into the subsequent scale map learner (SML). There are many options for performing this global alignment between the projected Radar depth and mono-depth prediction, including: (i) Var: A varying for individual frame of mono-depth, calculated via root-finding algorithms [29, 30]. (ii) Const: A constant for all frames of mono-depth prediction, deemed as the mean of scale estimates on the entire training samples. (iii) LS: and for individual frames, computed with linear least-squares optimization [19]. (iv) RANSAC: and for individual frames, computed with linear least-squares while incorporating RANSAC outlier rejection of the Radar depth. We randomly sample 5 Radar points with valid depth values, estimate and with the sampled Radar depth, and adopt the first pair of and that yields an inlier ratio over . The inlier is the one where the discrepancy between the Radar point depth and aligned mono-depth is under m or the inverse depth discrepancy is under .

III-C Quasi-Dense Scale Estimation

Due to inherent sparsity and noises in Radar data, additional enhancement of raw Radar depth is crucial before conducting the scale map learner. To densify the sparse Radar depth obtained from projection, we exploit a transformer-based Radar-Camera data association network (shorthand RC-Net), which predicts the confidence of Radar-Pixel associations. Pixels without a direct correspondence of Radar point during projection might be associated with the depth of neighboring Radar point, thereby densifying the sparse Radar depth to a quasi-dense depth map, denoted as .

III-C1 Network Architecture

Our RC-Net (see Fig.2) is adapted from existing vanilla network RC-vNet [12] by further incorporating self and cross-attention [32] in a transformer module. The image encoder is a standard ResNet18 backbone [33] with 32, 64, 128, 128, 128 channels in each layer, and the Radar encoder is a multi-layer perceptron consisting of fully connected layers with 32, 64, 128, 128, 128 channels. The Radar features are mean pooled and reshaped to the shape of image features. Subsequently, Radar and image features are flattened and passed through layers of self and cross-attention, which involves a larger receptive field for the cross-modal association. These features, combined with skip connections from intermediate layers in the encoder, are forwarded to a decoder with logit output. Finally, the logits are activated by the sigmoid function to obtain the confidence map of cross-modal associations.

III-C2 Confidence of Cross-Modal Associations

For a Radar point and a cropped image patch in its projection vicinity, we use RC-Net to obtain a confidence map , which describes probability of whether the pixels in corresponds to . With points in a Radar point cloud , the forward pass generates confidence maps for individual Radar points. Therefore, each pixel , within image has associated Radar point candidates. By selecting the maximum score above the threshold , we can find the corresponding Radar point for pixel , and assign the depth of to . Ultimately, this stage yields a quasi-dense depth map :

| (1) |

where , and returns the depth value. Finally, the quasi-dense scale map is calculated from , and its inverse is subsequently fed into the scale map learner.

III-C3 Training

For the nuScenes dataset, we first project multiple frames to the current LiDAR frame to obtain the cumulative LiDAR depth . After that, linear interpolation in log space [34] is performed on to obtain . Because of its density, is directly interpolated without accumulation for the ZJU-4DRadarCam dataset. For supervision, we use to build binary classification labels , where points with a depth difference less than 0.5m from the Radar point are labeled as positive. After constructing , we minimize the binary cross-entropy loss:

| (2) | ||||

where denotes the image region of , is a pixel coordinate, and is the confidence of correspondence.

III-D Scale Map Learner

III-D1 Network Architecture

Inspired by [5], we construct a scale map learner (SML) network based on MiDaS-small [19] architecture. SML aims to learn a pixel-level dense scaling map for , which completes the quasi-dense scale map and refine the metric accuracy of . SML requires concatenated and as input. The empty locations in are filled with ones. SML regresses a dense scale residual map , where values can be negative. We obtain the final scale map via , and the final metric depth estimation is computed by .

III-D2 Training

Ground truth depth is obtained from projecting 3D LiDAR points. LiDAR depth is further interpolated to get a densified depth . During training, we minimize the difference between the estimated metric dense depth and , with a smoothed L1 penalty:

| (3) |

| (4) |

where , is the weight of , denotes the region where ground truth has valid depth values. is set to 1 in our practice.

IV EXPERIMENTS

IV-A Datasets

IV-A1 NuScenes Dataset

We first evaluate our method on nuScenes benchmark [31]. NuScenes dataset encompasses data collection across 1000 scenes in Boston and Singapore with LiDAR, 3D Radar, camera, and IMU sensors. It comprises around 40000 synchronized Radar-Camera keyframes. We followed the same data splits as [12] with 850 scenes for training and validation, and 150 for testing. The test split is officially offered by nuScenes v1.0.

IV-A2 ZJU-4DRadarCam Dataset

For extensive evaluation, we collected our own dataset, named ZJU-4DRadarCam, using a ground robot (Fig.6) equipped with Oculii’s EAGLE 4D Radar, RealSense D455 camera, and RoboSense M1 LiDAR sensors. Our dataset consists of various driving scenarios, including urban and wilderness environments. Compared to nuScenes dataset, our ZJU-4DRadarCam offers 4D Radar data with denser measurements. Besides, we provide denser LiDAR depth for supervision and evaluation (see Fig.4). Our ZJU-4DRadarCam comprises a total of 33,409 synchronized Radar-Camera keyframes, split into 29312 frames for training and validation and 4097 frames for testing.

IV-B Training Details and Evaluation Protocol

For the nuScenes dataset, following [12], we accumulate the individual of 160 frames nearby to get , which is then interpolated to yield . Dynamic objects are masked out during the above process. For the ZJU-4DRadarCam dataset, we directly interpolate to obtain with linear interpolation [34] in the log space of depth.

For training the RC-Net on nuScenes, with an input image size of , the size of the cropped patch during confidence map formation is set to . For the training on ZJU-4DRadarCam, the input image size is , while the patch size is . We employ the Adam optimizer with =0.9 and =0.999, and a learning rate of for 50 epochs. Data augmentations, including horizontal flipping, saturation, brightness, and contrast adjustments, are applied with a 0.5 probability. We train our RC-Net for 50 epochs with an NVIDIA RTX 3090 GPU, taking approximately 14 hours with a batch size of 6.

We adopt MiDaS-Small architecture for our SML, where the encoder backbone is initialized with pre-trained ImageNet weights [35], and other layers are randomly initialized. The input data is resized and cropped to . We use an Adam optimizer with and . The initial learning rate is set to and reduced to after 20 epochs. Training SML for 40 epochs takes about 24 hours with a batch size of 24.

Some widely adopted metrics from the literature are used for evaluating the depth estimations, including mean absolute error (MAE), root mean squared error (RMSE), absolute relative error (AbsRel), squared relative error (SqRel), the errors of inverse depth (iRMSE, iMAE), and [36]. To better illustrate our experimental details, the demo video is available at https://youtu.be/JDn0Sua5d9o.

IV-C Evaluation on NuScenes

We evaluate the metric dense depth against within the range of 50, 70, and 80 meters (see Tab.I). Our proposed RadarCam-Depth outperforms all the compared Radar-Camera methods and surpasses the second best method [12] by a large margin at all ranges. Specifically, we observe 25.6%, 23.4%, and 22.5% reductions in MAE and 20.9%, 20.2%, and 19.4% drops in RMSE for 50m, 70m, and 80m, respectively. We attribute the outstanding performance of RadarCam-Depth to the reasonable monocular prediction and our scale learning strategy. Notably, RadarCam-Depth solely relies on a single-frame image and a Radar point cloud, obviating the need to aggregate multi-frame data. The “Radar” and “Image” columns in Tab.I specify the quantities of point clouds and images used as inputs for various methods. For nuScenes dataset, we adopt the sky-sensitive pre-trained model, MiDaS v3.1, as our monocular depth prediction network, which can accurately differentiate the sky from others (see Fig.3). However, since the depth estimations in sky regions are not associated with corresponding LiDAR depth, they are not counted during metric evaluations. Some snapshots of depth estimations from different methods are shown in Fig.7.

| Eval Dist | Method | Radar | Image | MAE | RMSE |

| 50m | RC-PDA [10] | 5 | 3 | 2225.0 | 4156.5 |

| RC-PDA with HG [10] | 5 | 3 | 2315.7 | 4321.6 | |

| DORN [11] | 5(x3) | 1 | 1926.6 | 4124.8 | |

| Singh [12] | 1 | 1 | 1727.7 | 3746.8 | |

| RadarCam-Depth | 1 | 1 | 1286.1 | 2964.3 | |

| 70m | RC-PDA [10] | 5 | 3 | 3326.1 | 6700.6 |

| RC-PDA with HG [10] | 5 | 3 | 3485.6 | 7002.9 | |

| DORN [11] | 5(x3) | 1 | 2380.6 | 5252.7 | |

| Singh [12] | 1 | 1 | 2073.2 | 4590.7 | |

| RadarCam-Depth | 1 | 1 | 1587.9 | 3662.5 | |

| 80m | RC-PDA [10] | 5 | 3 | 3713.6 | 7692.8 |

| RC-PDA with HG [10] | 5 | 3 | 3884.3 | 8008.6 | |

| DORN [11] | 5(x3) | 1 | 2467.7 | 5554.3 | |

| Lin [8] | 3 | 1 | 2371.0 | 5623.0 | |

| R4Dyn [13] | 4 | 1 | N/A | 6434.0 | |

| Sparse-to-dense [37] | 3 | 1 | 2374.0 | 5628.0 | |

| PnP [38] | 3 | 1 | 2496.0 | 5578.0 | |

| Singh [12] | 1 | 1 | 2179.3 | 4898.7 | |

| RadarCam-Depth | 1 | 1 | 1689.7 | 3948.0 |

IV-D Evaluation on ZJU-4DRadarCam

We follow a similar way to Sec.IV-C for the evaluations on the ZJU-4DRadarCam dataset. For the mono-depth prediction in our framework, we tried both MiDaS v3.1 [3], and DPT-Hybrid [20] models. The evaluations of the metric dense depth estimations from various methods are presented in Tab.II, where our approach at different configurations of mono-depth network and global alignment options are marked in bold. After a comprehensive evaluation, we observe that our methods with the DPT model perform better for depth metrics, while the methods with MiDaS demonstrate higher accuracy for inverse depth metrics. Overall, our proposed methodology exhibits significant improvements compared to existing Radar-Camera methods [12] and [11] (Fig.5(b)). Compared to the second best [12], the best configuration of our method shows 40.2%, 40.1%, and 40.2% reductions in MAE within ranges of 50m, 70m, and 80m, respectively.

| Dist | Method | MAE | RMSE | iMAE | iRMSE | AbsRel | SqRel | |

|---|---|---|---|---|---|---|---|---|

| 50m | DORN [11] | 2210.171 | 4129.691 | 19.790 | 31.853 | 0.157 | 939.348 | 0.783 |

| Singh [12] | 1785.391 | 3704.636 | 18.102 | 35.342 | 0.146 | 966.133 | 0.831 | |

| DPT+Var+RC-vNet [12] | 1243.339 | 3045.853 | 12.111 | 24.377 | 0.098 | 644.709 | 0.896 | |

| DPT+Const+RC-Net | 1082.927 | 2803.180 | 10.885 | 23.227 | 0.089 | 561.834 | 0.920 | |

| DPT+Var+RC-Net | 1067.531 | 2817.362 | 10.508 | 22.936 | 0.087 | 575.838 | 0.922 | |

| MiDaS+Var+RC-Net | 1177.257 | 3009.135 | 10.255 | 22.385 | 0.090 | 630.222 | 0.924 | |

| MiDaS+LS+RC-Net | 1083.691 | 2868.950 | 10.059 | 22.388 | 0.086 | 588.091 | 0.928 | |

| 70m | DORN [11] | 2402.180 | 4625.231 | 19.848 | 31.877 | 0.160 | 1021.805 | 0.777 |

| Singh [12] | 1932.690 | 4137.143 | 17.991 | 35.166 | 0.147 | 1014.454 | 0.828 | |

| DPT+Var+RC-vNet [12] | 1337.649 | 3358.212 | 12.047 | 24.294 | 0.099 | 672.084 | 0.894 | |

| DPT+Const+RC-Net | 1178.046 | 3121.317 | 10.824 | 23.149 | 0.090 | 589.377 | 0.918 | |

| DPT+Var+RC-Net | 1157.014 | 3117.721 | 10.444 | 22.853 | 0.087 | 601.052 | 0.921 | |

| MiDaS+Var+RC-Net | 1280.124 | 3323.488 | 10.189 | 22.300 | 0.091 | 658.416 | 0.922 | |

| MiDaS+LS+RC-Net | 1177.253 | 3179.615 | 9.996 | 22.305 | 0.086 | 614.801 | 0.926 | |

| 80m | DORN [11] | 2447.571 | 4760.016 | 19.856 | 31.879 | 0.161 | 1038.919 | 0.776 |

| Singh [12] | 1979.459 | 4309.314 | 17.971 | 35.133 | 0.147 | 1034.148 | 0.828 | |

| DPT+Var+RC-vNet [12] | 1365.383 | 3467.245 | 12.033 | 24.277 | 0.099 | 682.126 | 0.894 | |

| DPT+Const+RC-Net | 1206.541 | 3239.331 | 10.812 | 23.133 | 0.090 | 599.674 | 0.918 | |

| DPT+Var+RC-Net | 1183.471 | 3228.999 | 10.432 | 22.838 | 0.088 | 610.501 | 0.920 | |

| MiDaS+Var+RC-Net | 1309.859 | 3431.046 | 10.176 | 22.282 | 0.091 | 668.038 | 0.922 | |

| MiDaS+LS+RC-Net | 1205.137 | 3295.520 | 9.984 | 22.289 | 0.086 | 624.864 | 0.926 |

We report our proposed method’s runtime at the DPT-based mono-depth prediction configuration. The average processing times per frame are shown in Tab.III. Note that Mono-Pred and GA can run simultaneously with RC-Net. Regarding different scale global alignment methods, GA (Var) and GA (LS) exhibit relatively fast speeds, while GA (RANSAC) is significantly slow and not advocated.

| Mono-Pred | GA (Const) | GA (Var) | GA (LS) | GA (RANSAC) | RC-Net | SML |

| 0.0651 | - | 0.0624 | 0.0044 | 2.2903 | 0.2704 | 0.1227 |

IV-E Ablation

IV-E1 Transformer Module

We commence our analysis by focusing on the transformer mechanism incorporated within our novel quasi-dense scale estimation module, RC-Net. When the transformer component is disengaged, the architecture is identical to the pre-existing vanilla network, RC-vNet [12]. Our evaluation is conducted on the ZJU-4DRadarCam dataset, and the results are presented in Tab.IV. The comparison results are the error in quasi-dense depth estimation against ground truth within a range of 80 meters. Our RC-Net consistently outperforms RC-vNet across all evaluation metrics, as delineated in Tab.IV. Furthermore, as indicated in Tab.II, when integrated with the DPT+Var framework, DPT+Var+RC-Net exhibits notable performance superiority over its RC-vNet counterpart.

| Dataset | Method | MAE | RMSE | iMAE | iRMSE | Output Pts |

|---|---|---|---|---|---|---|

| ZJU-4D | RC-vNet [12] | 1308.742 | 3339.697 | 20.418 | 38.540 | 172567.846 |

| RC-Net | 1083.305 | 3052.870 | 16.203 | 33.414 | 178713.881 |

IV-E2 Global Alignment

Following the discussions in Sec.III-B, we systematically assess the four options for globally aligning the scale of mono-depth predictions. It is worth mentioning that we set a termination criterion of 400 iterations for the GA (RANSAC), at which point we halt the iterative process and select the values of and that yield the highest inlier ratio. The evaluation results of are presented in Tab.V within a range of 80 m. Combining the runtime performance in Tab.III, the best GA method for ZJU-4D (DPT) uses variable (Var), and the optimal method for ZJU-4D (MiDaS) is least-squares for and (LS). The experiments showcase that estimating for DPT leads to substantial inverse errors, significantly degrading the performance of the subsequent SML (conducted in inverse space). However, ZJU-4D (MiDaS) require simultaneous estimating and to achieve higher accuracy.

| Data | Method | MAE | RMSE | iMAE | iRMSE | AbsRel | SqRel | |

|---|---|---|---|---|---|---|---|---|

| ZJU-4D (DPT) | Const | 4733.158 | 6926.261 | 37.942 | 53.913 | 0.392 | 2501.383 | 0.343 |

| Var | 4726.168 | 6940.025 | 36.531 | 52.569 | 0.386 | 2569.835 | 0.374 | |

| LS | 5671.214 | 7409.278 | 111.292 | 530.436 | 0.552 | 4069.064 | 0.271 | |

| RANSAC | 5963.904 | 7662.980 | 336.568 | 1294.117 | 0.614 | 4732.633 | 0.277 | |

| ZJU-4D (MiDaS) | Const | 14008.482 | 245011.138 | 38.973 | 51.691 | 0.752 | 25697452.430 | 0.358 |

| Var | 7119.828 | 14297.549 | 32.285 | 46.340 | 0.468 | 13255.805 | 0.431 | |

| LS | 4799.150 | 7968.478 | 35.670 | 51.559 | 0.390 | 4659.651 | 0.394 | |

| RANSAC | 5113.080 | 11063.605 | 23.920 | 37.881 | 0.347 | 13322.258 | 0.631 |

V Conclusion

This paper presents a novel method for estimating dense metric depth by integrating monocular depth prediction with the scale from sparse and noisy Radar point clouds. We propose a dedicated four-stage framework that effectively combines the high-fidelity fine details of the image and the absolute scale of Radar data, surmounting the inherent challenges of detail loss and the imprecision of metrics that manifest in existing methods based on the direction fusion of Radar and image data or their encodings. Our experimental findings unequivocally demonstrate a significantly superior performance of the proposed methodology over the compared baseline, as substantiated by both quantitative and qualitative assessments. In general, we introduce a pioneering metric depth estimation solution, which is rigorously validated and suitable for application on fusing cameras with 3D or 4D Radars. In our future endeavors, we aim to enhance the applicability and effectiveness of our proposed method by leveraging vision foundation models pre-trained with abundant data.

References

- [1] Zachary Teed and Jia Deng “Deepv2d: Video to depth with differentiable structure from motion” In arXiv preprint arXiv:1812.04605, 2018

- [2] Alex Wong, Xiaohan Fei, Stephanie Tsuei and Stefano Soatto “Unsupervised depth completion from visual inertial odometry” In IEEE Robotics and Automation Letters 5.2 IEEE, 2020, pp. 1899–1906

- [3] Reiner Birkl, Diana Wofk and Matthias Müller “MiDaS v3.1 – A Model Zoo for Robust Monocular Relative Depth Estimation” In arXiv preprint arXiv:2307.14460, 2023

- [4] Vitor Guizilini, Igor Vasiljevic, Dian Chen, Rares Ambrus and Adrien Gaidon “Towards Zero-Shot Scale-Aware Monocular Depth Estimation” In arXiv preprint arXiv:2306.17253, 2023

- [5] Diana Wofk, René Ranftl, Matthias Müller and Vladlen Koltun “Monocular Visual-Inertial Depth Estimation” In arXiv preprint arXiv:2303.12134, 2023

- [6] Yukai Ma, Xiangrui Zhao, Han Li, Yaqing Gu, Xiaolei Lang and Yong Liu “RoLM: Radar on LiDAR Map Localization” In 2023 IEEE International Conference on Robotics and Automation (ICRA), 2023, pp. 3976–3982 IEEE

- [7] Yukai Ma, Han Li, Xiangrui Zhao, Yaqing Gu, Xiaolei Lang, Laijian Li and Yong Liu “FMCW Radar on LiDAR Map Localization in Structual Urban Environments”, 2023

- [8] Juan-Ting Lin, Dengxin Dai and Luc Van Gool “Depth estimation from monocular images and sparse radar data” In 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2020, pp. 10233–10240 IEEE

- [9] Yunfei Long, Daniel Morris, Xiaoming Liu, Marcos Castro, Punarjay Chakravarty and Praveen Narayanan “Full-velocity radar returns by radar-camera fusion” In Proceedings of the IEEE/CVF International Conference on Computer Vision, 2021, pp. 16198–16207

- [10] Yunfei Long, Daniel Morris, Xiaoming Liu, Marcos Castro, Punarjay Chakravarty and Praveen Narayanan “Radar-camera pixel depth association for depth completion” In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021, pp. 12507–12516

- [11] Chen-Chou Lo and Patrick Vandewalle “Depth estimation from monocular images and sparse radar using deep ordinal regression network” In 2021 IEEE International Conference on Image Processing (ICIP), 2021, pp. 3343–3347 IEEE

- [12] Akash Deep Singh, Yunhao Ba, Ankur Sarker, Howard Zhang, Achuta Kadambi, Stefano Soatto, Mani Srivastava and Alex Wong “Depth Estimation From Camera Image and mmWave Radar Point Cloud” In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2023, pp. 9275–9285

- [13] Stefano Gasperini, Patrick Koch, Vinzenz Dallabetta, Nassir Navab, Benjamin Busam and Federico Tombari “R4Dyn: Exploring radar for self-supervised monocular depth estimation of dynamic scenes” In 2021 International Conference on 3D Vision (3DV), 2021, pp. 751–760 IEEE

- [14] Wang Zhao, Shaohui Liu, Yezhi Shu and Yong-Jin Liu “Towards better generalization: Joint depth-pose learning without posenet” In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2020, pp. 9151–9161

- [15] Matteo Poggi, Filippo Aleotti, Fabio Tosi and Stefano Mattoccia “On the uncertainty of self-supervised monocular depth estimation” In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2020, pp. 3227–3237

- [16] Lukas Hoyer, Dengxin Dai, Qin Wang, Yuhua Chen and Luc Van Gool “Improving semi-supervised and domain-adaptive semantic segmentation with self-supervised depth estimation” In International Journal of Computer Vision Springer, 2023, pp. 1–27

- [17] Jiawang Bian, Zhichao Li, Naiyan Wang, Huangying Zhan, Chunhua Shen, Ming-Ming Cheng and Ian Reid “Unsupervised scale-consistent depth and ego-motion learning from monocular video” In Advances in neural information processing systems 32, 2019

- [18] Xiaogang Song, Haoyue Hu, Li Liang, Weiwei Shi, Guo Xie, Xiaofeng Lu and Xinhong Hei “Unsupervised Monocular Estimation of Depth and Visual Odometry uUsing Attention and Depth-Pose Consistency Loss” In IEEE Transactions on Multimedia IEEE, 2023

- [19] René Ranftl, Katrin Lasinger, David Hafner, Konrad Schindler and Vladlen Koltun “Towards robust monocular depth estimation: Mixing datasets for zero-shot cross-dataset transfer” In IEEE transactions on pattern analysis and machine intelligence 44.3 IEEE, 2020, pp. 1623–1637

- [20] René Ranftl, Alexey Bochkovskiy and Vladlen Koltun “Vision transformers for dense prediction” In Proceedings of the IEEE/CVF international conference on computer vision, 2021, pp. 12179–12188

- [21] Kourosh Sartipi, Tien Do, Tong Ke, Khiem Vuong and Stergios I Roumeliotis “Deep depth estimation from visual-inertial slam” In 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2020, pp. 10038–10045 IEEE

- [22] Xingxing Zuo, Nathaniel Merrill, Wei Li, Yong Liu, Marc Pollefeys and Guoquan Huang “CodeVIO: Visual-inertial odometry with learned optimizable dense depth” In 2021 ieee international conference on robotics and automation (icra), 2021, pp. 14382–14388 IEEE

- [23] Jiaxin Xie, Chenyang Lei, Zhuwen Li, Li Erran Li and Qifeng Chen “Video depth estimation by fusing flow-to-depth proposals” In 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2020, pp. 10100–10107 IEEE

- [24] Huan Fu, Mingming Gong, Chaohui Wang, Kayhan Batmanghelich and Dacheng Tao “Deep ordinal regression network for monocular depth estimation” In Proceedings of the IEEE conference on computer vision and pattern recognition, 2018, pp. 2002–2011

- [25] Yanchao Yang, Alex Wong and Stefano Soatto “Dense depth posterior (ddp) from single image and sparse range” In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2019, pp. 3353–3362

- [26] Wouter Van Gansbeke, Davy Neven, Bert De Brabandere and Luc Van Gool “Sparse and noisy lidar completion with rgb guidance and uncertainty” In 2019 16th international conference on machine vision applications (MVA), 2019, pp. 1–6 IEEE

- [27] Alex Wong and Stefano Soatto “Unsupervised depth completion with calibrated backprojection layers” In Proceedings of the IEEE/CVF International Conference on Computer Vision, 2021, pp. 12747–12756

- [28] Alexey Dosovitskiy, Lucas Beyer, Alexander Kolesnikov, Dirk Weissenborn, Xiaohua Zhai, Thomas Unterthiner, Mostafa Dehghani, Matthias Minderer, Georg Heigold and Sylvain Gelly “An image is worth 16x16 words: Transformers for image recognition at scale” In arXiv preprint arXiv:2010.11929, 2020

- [29] George E Forsythe “Computer methods for mathematical computations” Prentice-hall, 1977

- [30] Richard P Brent “Algorithms for minimization without derivatives” Courier Corporation, 2013

- [31] Holger Caesar, Varun Bankiti, Alex H Lang, Sourabh Vora, Venice Erin Liong, Qiang Xu, Anush Krishnan, Yu Pan, Giancarlo Baldan and Oscar Beijbom “nuscenes: A multimodal dataset for autonomous driving” In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2020, pp. 11621–11631

- [32] Jiaming Sun, Zehong Shen, Yuang Wang, Hujun Bao and Xiaowei Zhou “LoFTR: Detector-free local feature matching with transformers” In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2021, pp. 8922–8931

- [33] Kaiming He, Xiangyu Zhang, Shaoqing Ren and Jian Sun “Deep residual learning for image recognition” In Proceedings of the IEEE conference on computer vision and pattern recognition, 2016, pp. 770–778

- [34] C Bradford Barber, David P Dobkin and Hannu Huhdanpaa “The quickhull algorithm for convex hulls” In ACM Transactions on Mathematical Software (TOMS) 22.4 Acm New York, NY, USA, 1996, pp. 469–483

- [35] Jia Deng, Wei Dong, Richard Socher, Li-Jia Li, Kai Li and Li Fei-Fei “Imagenet: A large-scale hierarchical image database” In 2009 IEEE conference on computer vision and pattern recognition, 2009, pp. 248–255 Ieee

- [36] Jiaming Sun, Yiming Xie, Linghao Chen, Xiaowei Zhou and Hujun Bao “NeuralRecon: Real-time coherent 3D reconstruction from monocular video” In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021, pp. 15598–15607

- [37] Fangchang Ma and Sertac Karaman “Sparse-to-dense: Depth prediction from sparse depth samples and a single image” In 2018 IEEE international conference on robotics and automation (ICRA), 2018, pp. 4796–4803 IEEE

- [38] Tsun-Hsuan Wang, Fu-En Wang, Juan-Ting Lin, Yi-Hsuan Tsai, Wei-Chen Chiu and Min Sun “Plug-and-play: Improve depth estimation via sparse data propagation” In arXiv preprint arXiv:1812.08350, 2018