QWin: Enforcing Tail Latency SLO at Shared Storage Backend

Abstract

Consolidating latency-critical (LC) and best-effort (BE) tenants at storage backend helps to increase resources utilization. Even if tenants use dedicated queues and threads to achieve performance isolation, threads are still contend for CPU cores. Therefore, we argue that it is necessary to partition cores between LC and BE tenants, and meanwhile each core is dedicated to run a thread. Expect for frequently changing bursty load, fluctuated service time at storage backend also drastically changes the need of cores. In order to guarantee tail latency service level objectives (SLOs), the abrupt changing need of cores must be satisfied immediately. Otherwise, tail latency SLO violation happens. Unfortunately, partitioning-based approaches lack the ability to react the changing need of cores, resulting in extreme spikes in latency and SLO violation happens. In this paper, we present QWin, a tail latency SLO aware core allocation to enforce tail latency SLO at shared storage backend. QWin consists of an SLO-to-core calculation model that accurately calculates the number of cores combining with definitive runtime load determined by a flexible request-based window, and an autonomous core allocation that adjusts cores at adaptive frequency by dynamically changing core policies. When consolidating multiple LC and BE tenants, QWin outperforms the-state-of-the-art approaches in guaranteeing tail latency SLO for LC tenants and meanwhile increasing bandwidth of BE tenants by up to 31x.

1 Introduction

Distributed storage systems are widely deployed as the storage infrastructure in clouds. Typically, they provide virtual disks attached to virtual machines or containers in a multi-tenant way [1, 2, 3, 4, 5, 6, 7]. Different tenants have distinguished performance requirements for virtual disks. For example, latency-critical (LC) tenants (e.g. Web-server/OLTP) require low latency guarantees, and meanwhile best-effort (BE) tenants expect sustainable bandwidth. The performance requirements are usually defined as Service Level Objectives (SLOs). In order to guarantee user’s experience, tail latency SLO at 99th and 99.9th percentiles is becoming the main concern of today’s cloud providers [8, 9, 10, 11].

Storage backend (e.g. Ceph’s OSD [12], HDFS’s DateNode [13]) always plays an important role in distributed storage system. Storage backend receives IO requests from upper-level applications, and then processes them by accessing underlying storage devices (e.g. HDDs or SSDs). Storage backend is usually constructed using the stage event-driven architecture (SEDA) [14]. In SEDA architecture, each stage consists of a queue and a thread pool which is responsible for request processing. A request is always queued until a CPU core is available and an idle thread is scheduled to run. As a result, the latency of a request is affected by CPU core allocation as well as thread scheduling.

When serving LC and BE requests simultaneously, existing works try to guarantee tail latency SLO by priority-based scheduling [3, 4, 5, 1, 6]. Generally, LC and BE requests are usually partitioned in respective queues, but threads are still shared. A few consecutive BE requests (e.g. 64KB or larger) can block subsequent LC requests (e.g. 4KB or smaller). The latencies of LC requests increase, which can cause tail latency violation. Even if threads are also partitioned among LC and BE tenants, they always contend for CPU cores. When threads happen to be scheduled to process BE requests, CPU cores may be all occupied by these threads. Consequently, threads processing LC requests cannot be scheduled since no CPU cores are available. This seriously affects the latencies of LC requests, and tail latency SLO violation can also happen.

The conventional wisdom is that cores are statically partitioned between LC and BE tenants. And each core is dedicated to run a thread. However, many tenants experience bursty request patterns or phased behavior, drastically changing the amount of cores they need. Moreover, the underlying storage device is shared. The service time (i.e. I/O latency) fluctuates greatly, specially SSDs. Large fluctuated service time makes backlog of requests, and more cores are needed to process these requests quickly. Therefore, cores must be dynamically allocated to respond to these situations. In such cases, two issues must be addressed.

First, it is necessary to accurately calculate the number of cores and adjust cores to respond to the changing need. Recent works [3, 15, 16] adopt a incremental core adjustment approach based on historical information at fixed intervals, which cannot immediately derive the accurate cores when the need of cores is changed.

Second, unlike core allocation for in-memory applications [17, 18, 19, 15, 16, 20] which only considers the bursty load, core allocation on storage backend must take the fluctuated service time (which has significant impact on latency) into account.

In order to guarantee tail latency SLO for LC tenants meanwhile maximizing bandwidth of BE tenants, we present QWin, a tail latency SLO aware core allocation for shared storage backend. QWin proposes two key ideas.

First, QWin builds an SLO-to-core calculation model which can accurately calculates the number of cores for LC tenants. Except for target SLO, this model also needs definitive runtime load as a parameter. To this end, we propose a flexible request-based window to accurately quantify the runtime load. To our best knowledge, QWin is the first approach to accurately translate target SLO into the number of cores.

Second, for fast detection and targeted reactions to bursty load and fluctuated service time, QWin provides three core policies for adjusting cores. Core policy determines the frequency to check if the need of cores is changed and how many cores are needed. QWin dynamically changes core policies at different stages to adapt to the changing need of cores. Core adjustment is autonomous, and no dedicated core is required to collect information and adjust cores.

We implement QWin algorithm in the storage backend (OSD [21]) of widely-used open-source distributed storage system Ceph [12]. Using both Fio [22] and workloads from Filebench [23] to simulate LC and BE tenants, we evaluate QWin, and compare it with the-state-of-the-art approaches. The experimental results show that QWin is highly general, supporting multiple workloads. Further, QWin outperforms these approaches, with simultaneously enforcing diverse target SLOs for multiple LC tenants and enhancing bandwidth of BE tenants, e.g. the bandwidth of BE tenants is increased by up to 31x without target SLO violations.

2 Background and Motivation

2.1 Basis of Storage Backend Core Allocation

Within a storage backend of distributed storage system (e.g. Ceph’s OSD), the request processing comprises three stages: 1) a request is received from network and enters into queue waiting for being assigned a thread; 2) once a thread is available, the request is dequeued and processed by local storage engine (e.g. Ceph’s Bluestore [24]); 3) the response is sent back. At runtime, a thread runs on a core and consumes requests from queues. When serving both LC and BE tenants, resources can be shared in three ways as shown in Figure 1.

In Share-everything model (Figure 1(a)), requests of LC tenants are not distinguished from BE tenants, and threads are not dedicated to any queue nor any CPU core. In such case, requests from different tenants compete queues and threads as well as CPU cores. In Partial-sharing model (Figure 1(b)), LC and BE requests enter into dedicated queues may have dedicated threads. However, competition cannot be avoided since threads can still run on any CPU core. These two models suffer from resources (e.g. cores and threads) sharing between LC and BE tenants. This results in inter-tenant interference, making tail latency unpredictable. In Partition model (Figure 1(c)), all resources are partitioned between tenants. A CPU core is dedicated to run one thread to avoid switching. Each tenant type has its own queue and cores (threads). Note that a tenant type represents a group of tenants with same performance requirement. Although static partition can reduce interference, it sacrifices resource utilization because each tenant must be provisioned enough cores to accommodate peak load. Previous works [18, 19, 17, 20, 15, 16, 3, 4] dynamically adjust core allocation among tenants to maximize resource utilization. Unfortunately, all of these approaches do not precisely calculate core requirements according to target SLO, and/or do not target requests accessing the underlying storage devices.

2.2 Limitations of Existing Approaches

SLO-unaware core allocation. Existing works do not consider target SLO when allocating cores among multiple tenants [18, 19, 17, 20]. These systems all target to minimize tail latency for LC tenants. They use metrics that are independent of target SLO such as core utilization, queuing delay or runtime load to reallocate cores at fixed adjustment intervals. However, there are two main issues. First, tail latencies of LC tenants are suffered by different adjustment interval. The interval needs to be manually tuned for different tenants to minimize tail latency. As shown in Figure 2, for read-only LC tenant (100% read), the short the interval, the lower the tail latency; but for read-heavy LC tenant (90% read), tail latency is high when interval is short. This is because such approaches allocate cores without considering the fluctuated service time from the underlying storage devices. Thus, when consolidating multiple tenants, it is hard to set a proper adjustment interval for all LC tenants to minimize tail latencies, respectively. Second, core allocation that only target to minimize tail latency results in low utilization. Actually, if LC tenants have looser tail latency requirement, it means that requests can be queued for a certain while instead of being processed immediately. Consequently, the number of cores for satisfying a looser tail latency is less than that for minimizing tail latency. However, such SLO-unaware core allocation makes that LC tenants occupy more cores than the actual need. This results in lower bandwidth of BE tenants.

SLO-aware core allocation. Previous works dynamically allocate cores among multiple tenants according to target SLO [15, 16]. However, these solutions do not calculate the number of cores according to target SLO. They adopt trial-and-error approach to adjust cores. Meanwhile, each core adjustment is incremental, and converging to the right allocations takes dozens of seconds, which cannot satisfy millisecond timescales tail latency requirement.

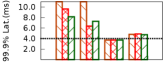

SLO-aware core sharing approaches. Some existing works adopt a sharing model where cores or threads are shared to dynamically schedule LC and BE requests according to target SLO [3, 4, 5, 1, 6]. As shown in Figure 3, Cake [3] adjusts proportional shares and reservations of cores (reservations are set only if shares are found to be insufficient) for an LC tenant based on the historical statistics (tail latency in the previous interval). There is no reserved core for the LC tenant in this experiment. Unfortunately, in most cases, as shown in the upper, tail latencies of two consecutive intervals are significantly different, leading to improper core adjustment. This results in SLO violation in subsequent interval. Although approaches such as rate limiting and priority are combined to schedule LC and BE requests, 99.9th and higher percentile tail latencies suffer since they are more sensitive to the sharing resources. As a result, target SLO is hard to be satisfied, or resource utilization is low due to over-provisioning resources for enforcing target SLOs.

2.3 Challenges

In order to enforce tail latency SLO for LC tenants meanwhile maximizing bandwidth for BE tenants, we had to overcome three key challenges:

Translate target SLOs into core requirements. Target SLO actually reflects core requirement. Meanwhile, core requirement is also affected by other factors, such as the runtime load. Previous works tried to enforce target SLO either by static over-provision [25], or dynamically adjusting cores by using historical tail latencies [3, 15, 16]. However, these works cannot decide the accurate core requirement. As a result, either SLO cannot be satisfied or resources are wasted. It is necessary to decide which factors are used to accurately calculate core requirement. Meanwhile, these factors need to be precisely quantified at runtime with low overhead.

Runtime load quantification. Request rates issued to storage backends are not fixed, and core allocation should be changed with the load. Existing systems [18, 19, 15, 16, 3] adjust cores at fixed intervals (e.g. for Cake [3] and for Shenango [19]). Nevertheless, the runtime load is always changing within an interval due to the incoming requests and the completed requests. No matter how short/long the interval is, the load cannot be precisely quantified. Therefore, it is necessary to design precise load quantification approach.

Fast and precise reactions to bursty loads and fluctuated service time. Workload Bursts are very common with bursting interval at microsecond timescales [19, 26, 17, 20]. Meanwhile, for typical NVMe Flash devices used in storage backend, service time (usually hundreds of microseconds) fluctuations are normal [27, 28, 29, 30, 4]. Both bursty load and fluctuated service time create a backlog of requests, resulting in long queuing delay. Prior works adjust cores incrementally for all tenants at fine time scales to handle bursty load without I/O accessing [19, 20]. However, incremental core adjustment cannot immediately satisfy the bursts of core usage under bursty load or with fluctuated device performance. Therefore, it is necessary to design fast and precise core adjustment approach to handle bursty loads and fluctuated service time.

3 Design

3.1 QWin Overview

The goal of QWin is to enforce tail latency SLO of multiple LC tenants while maximize bandwidth of BE tenants that share the storage backend. The key idea of QWin is to precisely calculate the number of cores according to target SLO, and dynamically adjust cores. Figure 4 shows the overall architecture of QWin. QWin supports multiple levels of target SLO, and each level is corresponded to an LC tenant type which represents a group of LC tenants with a same target SLO. Requests from an LC tenant type are put into a same LC queue. Note that an LC tenant hereafter represents for an LC tenant type. Request from BE tenants are put into their respective queues.

QWin consists of two components. Window Runtime divides each LC queue into variable-length request windows and collects runtime information (e.g. the number of requests and the waiting time) for each window. The number of cores used for each window is accurately calculated according to target SLO and runtime load. Core Allocator dynamically allocates cores to LC tenants, and the remaining cores are used by BE tenants. During processing requests in each window, cores used for LC tenants may be adjusted due to frequently changing interference and loads.

Additionally, each tenant is designated as LC or BE. BE tenants operate at a lower priority: they are only allocated cores that LC tenants do not need. LC tenants will yield extra cores voluntarily when they do not need. If LC tenants need more cores, cores used for BE tenants will be preempted. Before taking a core from BE tenants, the current request handled by the core should be completed. Whereas, cores used by an LC tenant can never be preempted by other tenants.

3.2 Window Runtime

3.2.1 Request-based window

In order to enforce target SLOs and maximize resource utilization, cores must be dynamically allocated since the load is constantly changing with time [18, 19, 17, 20, 15, 16, 3, 4]. The common way to dynamically allocate cores is based on interval [18, 19, 20, 15, 16, 3]. Although a fixed-time interval is simple, the load in each interval is not definitive because the processing of requests can be across multiple consecutive intervals. The non-constant load makes it hard to directly determine core requirements. While for a variable-time interval, it is difficult to set a proper interval due to high variable loads. Therefore, QWin adopts a flexible variable-length division based on current queuing requests, called request-based window. The size of each window (i.e. the number of requests in each window) can be different, but the runtime load in each window is definitive. Once the runtime load is determined, core requirements can be calculated by combining target SLO.

Taking an LC queue as an example, Figure 5 shows the method to divide its queue into windows. Initially, the queue is empty. Requests will enter into its queue later (Figure 5(a)). Then a window is established and all requests in the current queue belong to (Figure 5(b)). If there are requests in the current queue, the size of is . During processing requests in , subsequent incoming requests belong to the next window (Figure 5(c)). When all requests in have been handled, ends. Window is established when ends, and all requests in the current queue belong to (Figure 5(d)). If there are still no incoming requests when a window ends, the queue will be empty. A new window is established until requests enter into the queue again.

In such way, each LC queue is divided into variable-length request-based windows as the changing load. The number of requests in each window reflects the definitive runtime load.

3.2.2 SLO-to-core calculation model

If a target SLO is guaranteed in each window, the target SLO will be finally guaranteed. Therefore, QWin tries to guarantee target SLOs of LC tenants in each window by dynamically adjusting cores. For each window, QWin introduces the SLO-to-core calculation model to translate a target SLO into the number of cores.

For , is the total number of requests, and is the queuing time of its first request. reflects the runtime load, and reflects the waiting time of . Let is a target SLO and is the tail latency of service time (i.e. the I/O latency on storage device of a request), respectively. In order to guarantee the target SLO of , all requests must be dequeued within . Then the average dequeue rate () of can be calculated by the following formula:

| (1) |

As long as the real dequeue rate is no less than , the tail latency of all requests of cannot exceed target SLO. Now let’s consider the classic theory Little’s Law [31] in each window, and let is the average service time. Once is established, the number of cores () used to guarantee the target SLO can be calculated as follows:

| (2) |

Both and can be obtained in real time while the system is running. The SLO-to-core calculation model combines target SLO and runtime load to directly calculate the number of cores for LC tenants, while any spare cores can be used by BE tenants.

3.3 Core Allocator

3.3.1 Core policies

Although SLO-to-core model can be used to precisely calculate the number of cores, only adjusting cores at the begging of each window is not enough for enforcing target SLOs of LC tenants as well as maximizing bandwidth of BE tenants. Bursty loads and fluctuated service time that happen during the processing of a window can cause abrupt changes of core usage. All these cases must be promptly detected and the abrupt changes of core usage must be satisfied immediately, otherwise SLO violation happens. That is to say, in order to guarantee target SLO, it is necessary to adjust cores more than once within a processing window.

For example, if a bursty load occurs during the processing of , bursty requests belong to and make it an enormous window. If requests in are not speeded up, the waiting time of requests in will be longer. Then the tail latency of may exceed target SLO. Similarly, if the service time of a request in is fluctuated beyond the normal, the queuing time of following requests in will be longer. Even if the number of cores is adjusted at the beginning of , the adjustment is too late for and results in SLO violation.

Besides, LC tenants with different target SLOs may contend cores with each other. For an LC tenant, it is possible that the number of cores calculated at the beginning of is more than the available cores (including cores that can be preempted from BE tenants). The reason is that other LC tenants temporarily occupies more cores due to the change of load. This leads that the LC tenant cannot get enough cores at the beginning of , and it needs to adjust cores again within .

In any case, insufficient cores cause queue to grow. Thus, the change of queue length indicates the changing need of cores. To deal with the changing need of cores, QWin constantly monitors queue length and adjusts cores within each window. A temp window is used to reflect the change of queue caused by exceptions that happen within a window. As shown in Figure 6, at moment during the processing of , all requests in current queue belong to a temp window. Using SLO-to-core model, the corresponding number of cores () for the temp window is calculated. If is larger than (the current number of cores), it means that cores are insufficient to enforce target SLO. And more cores () should be added to promptly process these stacked requests.

In theory, a temp window can be used to check the changing need of cores after processing a request. But, this is not always necessary since these exceptions do not happen at all times, and frequent detection may bring extra overhead. Different SLOs reflect different requirements of cores. Tail latency always suffers due to frequently changing interference and load. It is not enough to respond to all these situations only with one policy. Therefore, three policies are proposed to adjust cores without compromising SLO within a window: 1) conservative policy, which only adjusts cores at the beginning of each window; 2) aggressive policy, which continuously adjusts cores after dequeuing a request; 3) SLO-aware policy, which adjusts cores after dequeuing budget requests. The difference among three policies is the frequency to adjust cores within a window. Obviously, the budget used in conservative policy and aggressive policy is and , respectively. For SLO-aware policy, LC tenants with different SLO should have different budget. We use target SLO and the waiting time of to calculate the budget for each window:

| (3) |

Tail latency is meaningful based on sufficient requests. QWin polls tail latency of an LC tenant every THRESH_WIN windows. Then, QWin dynamically selects a core policy by monitoring the slack, the difference between target SLO and the current measured tail latency. If the slack is more than THRESH_HIGH, conservative policy is set. If the slack is less than THRESH_LOW, aggressive policy is set. Otherwise, SLO-aware policy is set. All the tunable parameters are empirically set for our tests; these are described in § 5.

3.3.2 Autonomous core allocation

Different from core allocation approaches which need an extra core to adjust cores at fixed intervals [3, 19, 20, 15, 16, 18], no dedicated core is required for core allocation since each core is autonomous in QWin. The reason is that each core knows the whole information of tenants it served, including the current window, the current queue, the current core policy, the number of cores and the used cores, etc., making it adjust cores autonomously. Only the cores used by LC tenants can execute algorithm to adjust cores. The cores used by BE tenants depend on the core usage of LC tenants.

Take an LC tenant L as example, the core that handles the first request of a window will re-calculate the number of cores, compare it to the current number, and adjust cores when necessary. If L needs more cores, extra cores will be preempted from BE tenants. If L occupies too much cores, superfluous cores will be returned to BE tenants. For conservative policy, core adjustment only happens at the beginning of each window. For aggressive policy and SLO-aware policy, cores may be adjusted at different frequencies within a window. A core that handles the right request under aggressive policy and SLO-aware policy will execute core adjustment. Different from adjusting cores at the beginning of a window, a temp window that can reflect bursty load and fluctuated service time is used to calculate the number of cores within a window when necessary. Note that, in order to avoid unnecessary jitter, core adjustment only monotonously increases within a window. Similarly, increased cores for LC tenants will be preempted from BE tenants. To avoid SLO violation, cores used for LC tenants are never preempted in any case.

3.4 Put Everything Together

QWin’s core allocation runs on each LC core and is integrated with request processing. Algorithm 1 shows the algorithm. Note that the LC tenant in the algorithm represents a group of LC tenants with a same target SLO. When an LC tenant is allowed to register in the system, QWin allocates a core for it and sets aggressive policy as its default core policy. After that, the core begins to monitor the LC tenant and its queue, and dynamically adjust cores by itself.

For any core, the tenant it served is checked. If the tenant is LC, the algorithm is executed as follows. First, if the current window is empty, a new window will be established. calculate_cores() is call to calculate the number of cores by Formula 2, and adjust_cores() is called to adjust cores (lines 4-9). Meanwhile, the core periodically checks if core policy should be changed by a configurable threshold, and update_core_policy() is called to adjust core policy based on the method mentioned in § 3.3.1 (lines 6-7) Second, the thread running on this core will handle a request from the LC queue, and t.wcnt, the number of completed requests, is updated (lines 10-11). Third, the core checks if the number of cores need to be adjusted according to current core policy (except for conservative policy, which only adjusts core at the beginning of each window) (lines 12-16). Core policies are distinguished by different budget as mentioned in § 3.3.1. If the current window is not empty and t.wcnt is divisible by budget, a temp window is established to calculate the current need of cores, and if more cores are needs, adjust_cores() is called to add cores. Finally, if the current window is empty and the queue is also empty, the core will be yielded to BE tenants unless it is the last core used for this LC tenant (lines 17-19).

The core adjustment function adjust_cores() is shown in Algorithm 2. For an LC tenant t, if the current number of cores, t.num, is larger than the target number of cores, target, superfluous cores (t.num-target) are yielded to BE tenants (lines 1-3). Otherwise, more cores will be preempted from BE tenants and assigned to this LC tenant (lines 4-9). If it hanppens that LC tenant cannot be assigned enough cores (delta available), subsequent core allocation will adjust cores again (as mentioned in § 3.3.1).

4 Implementation

We implement QWin in the storage backend (OSD) of the widely-used open-source release of Ceph, but a completely new core allocation strategy is implemented. Moreover, QWin can be integrated into any storage backend which adopts the classical SEDA architecture. Meanwhile, QWin does not need any offline profiling, or a priori knowledge about each tenant’s characteristics except for their target SLOs, making it applicable in wider scenarios.

We modify Ceph’s OSD in three important ways. First, we add a registration module in OSD to receiving target SLOs (e.g., 99.9th percentile latency) of LC tenants. Second, we create a separate FIFO queue for each tenant to reduce interference from contention of a single queue, since that previous works [10, 32, 33] has analyzed that FIFO queuing is the lowest tail latency. QWin’s Window Runtime monitors each queue and quantifies the runtime load of each LC tenant by the flexible variable-length windows. Third, an autonomous core allocation strategy is integrated into the processing of request scheduling. The strategy allows us to completely eliminate the waste of a dedicated core to adjust cores imposed by other systems [3, 19, 20, 17]. Besides, in order to guarantee target SLOs of tenants accessing the storage backend, we modify OSD to enforce access control list (ACL) policies at the granularity of tenants. It checks if a tenant has the right to access the system during the registration. If the tenant is not permitted, it cannot open a connection to the storage backend.

5 Evaluation

In evaluating QWin, we aim to answer the following questions:

1. How can QWin enforcing target SLOs for LC tenants meanwhile maximizing bandwidth of BE tenants compare to previous systems? (§ 5.1)

2. Can QWin satisfy diverse target SLOs for multiple LC tenants? (§ 5.2)

3. How do three core policies provided by QWin enable it to perform well? (§ 5.3)

| Label | Characterization | LC/BE | |||

| A | Fio configure: bs=4KB; iodepth=16; numjobs=8; | randread; | LC | ||

| B | randrw;readratio=95%; | LC | |||

| C | randrw;readratio=90%; | LC | |||

| D | randrw;readratio=85%; | LC | |||

| E | Fio configure: bs=64KB; iodepth=16; numjobs=2; | read; | BE | ||

| F | rw;readratio=99%; | BE | |||

| G | rw;readratio=95%; | BE | |||

| H | rw;readratio=90%; | BE | |||

| J | OLTP from Filebench; | LC | |||

| K | Webserver from Filebench; | LC | |||

| P |

|

LC | |||

Experimental Setup. Our testbed consists of eleven machines, and each has dual 12 physical core Intel Xeon E5-2650v4 CPU, 64GB RAM, two 10GbE NIC, and two 1TB Intel P4510 SSDs, running CentOS 7.3. The Ceph cluster (Ceph 10.2.11 with the BlueStore backend) consists of one monitor and eight OSDs. The monitor is running on a single machine. Two OSDs are co-located on a same machine, and each OSD is configured with a 10GbE NIC, an SSD and a dual 12-core physical CPU. We use six machines as clients, three for LC tenants and three for BE tenants. A 30GB RBD [34] image is created in the Ceph cluster for each tenant. Both Fio [22] and Filebench [23] are used to simulate the workload of LC and BE tenants. The characterizations of all workloads used in the following experiments are shown in Table 1. In order to clarify the configuration of tenants in each experiment, we use T1(LC, A) for short to describe a LC tenant issuing workload A, and T2(BE, E) to describe a BE tenant issuing workload E.

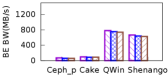

Compared Systems. We compare QWin to Ceph-priority (Ceph_p for short) which can distinguish LC and BE tenants by setting different priorities, Cake which is an SLO-aware approach but proportional sharing cores, and Shenango which is an SLO-unaware approach but using trial-and-error core adjustment. Since Ceph_p can only support two coarse-grained priorities (high and low), we configure high priority for all LC tenants and low priority for all BE tenants. And then LC requests are always processed first. We implement Cake and Shenango in Ceph’s OSD. Cake [3] works by dynamically adjusting proportional shares and reservations of threads (assuming that each thread uses a dedicated core) to meet target SLOs. Since Cake only supports a single LC tenant and a BE tenant, we extend Cake’s SLO compliance-based scheduling algorithm to support multiple LC and BE tenants. Shenango [19] dynamically adjusts cores based on queued requests of LC tenants. If a request is present in the queue for two consecutive intervals, an extra core is added. When the queue is empty, all allocated cores will be reclaimed at once.

Parameters. QWin has three tunable parameters. In our evaluation, we empirically set THRESH_WIN, THRESH_LOW, THRESH_HIGH to windows, and , respectively. The choice of an SLO is driven by LC tenant’s requirement and load, with the intuitive understanding that a more stringent SLO requires more resources. How to determine a good SLO is out of the scope of this paper. The SLO used in our evaluation is tentative. In each experiment, we vary the adjustment interval for Cake (, and ) and Shenango (intervals are shown in Figure 2), and both choose the interval that the tail latencies of LC tenants are the lowest.

5.1 Enforcing target SLO and Increasing Bandwidth

We now compare QWin with Ceph_p, Cake and Shenango by evaluating the ability to enforce target SLO for LC tenants while maximize bandwidth for BE tenants. Three groups of consolidations were designed. Each group consolidates three LC tenants along with three BE tenants. The LC tenants in three groups are different: 1) three LC tenants (T1, T2 and T3) run three Fio workloads B, C and D in Table 1, respectively; 2) three LC tenants (T1, T2 and T3) all run Webserver from Filebench (workload K in Table 1); 3) three LC tenants (T1, T2 and T3) all run OLTP from Filebench (workload J in Table 1). Three BE tenants (T4, T5 and T6) in each group run three Fio workloads F, G and H in Table 1, respectively. Both 99th tail latency SLO and 99.9th tail latency SLO are evaluated in this section for LC tenants. Meanwhile, in each group, three LC tenants are set with a same SLO, or each LC tenant is set with a respective SLO.

Results for three groups are shown in Figure 7, Figure 8 and Figure 9, respectively. For both Fio simulated LC tenants and typical LC tenants (OLTP and Webserver), QWin is the only one that meets target SLOs (99th/99.9th) for LC tenants and yields excellent BE tenants bandwidth. The bandwidth of BE tenants is about 1.2x31x higher than other compared systems without compromising target SLO of LC tenants. Cake can hardly satisfy any SLO for LC tenants, and the bandwidth of BE tenants is much lower than QWin. Shenango does not consider target SLO to adjust cores. Even if LC tenants with different SLO, the tail latency (99th/99.9th) of each LC tenant is nearly the same, and SLO violations happen. Besides, both Cake and Shenango need a dedicated core to adjust cores, which is also a waste of cores. Ceph_p currently only support two priorities: high and low, so it cannot further distinguish LC tenants with different SLO by different priorities. Although LC requests have high priority over BE requests, target SLO still cannot be guaranteed. This is because all cores are shared among LC and BE tenants, and the interference cannot be avoided.

For Cake, either 99th percentile latency or 99.9th percentile latency are not satisfied to corresponding target SLO. During the experiments, we find that Cake only reserves a few cores for each LC tenant, and other cores are shared in proportion among six tenants. For LC tenants, although the proportional share is more than 90%, tail latency (99th/99.9th) is still significantly impacted due to the interference from other tenants. The erraticism of tail latency in each interval (as shown in Figure 3) is aggravated when read requests and write requests are accessing the underlying storage devices simultaneously. The erratic historical tail latency leads to a wrong adjustment, making SLO violation happens. Moreover, the reactive feedback-control based approach adopted by Cake cannot respond to bursty load immediately, and this also results in SLO violation. Besides, the overhead of calculating 99.9th percentile latency in each interval is non-trivial.

For each LC tenant, Shenango only adds a core when congestion is detected, and aggressively acquires cores merely based on runtime load, not target SLO. We monitor the core allocation of Shenango, and find that even if the target SLO is looser, it always occupies more cores than its real need, which results in poor bandwidth of BE tenants. When the load suddenly increases or service time is more fluctuated, incremental adjustment of cores makes that LC tenants need more time to acquire enough cores, and this can have a serious impact on tail latency, especially the 99.9th percentile latency. For all experiments, 99th and 99.9th percentile latencies of three LC tenants in same test are nearly the same. The reason is that Shenango does not distinguish LC tenants that have different target SLO.

For LC tenants with any target SLO (99th/99.9th, same/different), QWin can precisely calculate cores combining with the flexible request-based window, and promptly adjust cores by proper core policy when bursty load and fluctuated service time are detected. From our statistics in the first group of experiment, up to 40,000 variable-length windows are established per minutes to quantify runtime load, and up to 85,000 core allocations are executed per minutes to adjust cores for the changing need of cores. Meanwhile, QWin adaptively changes core policies for LC tenants to adjust cores to respond to the changing needs (further experiments are shown in § 5.3). QWin’s fast and precise reactions enable all three LC tenants to maintain target SLO throughout constantly shifting load and fluctuated service time. All the above experiments show that QWin obviously superior than existing approaches in both enforcing target SLO of LC tenants and enhancing bandwidth of BE tenants.

5.2 Diverse target SLOs

To understand if QWin can maintain its benefits under diverse target SLOs, two scenarios are evaluated: 1) read-only (100% read), where three LC tenants (T1, T2 and T3) all run Fio workload A in Table 1 and three BE tenants (T4, T5 and T6) all run Fio workload E in Table 1; 2) read-heavy (more than 85% read), where three LC tenants (T1, T2 and T3) run three Fio workload B, C and D in Table 1 and three BE tenants (T4, T5 and T6) run three Fio workload F, G and H in Table 1. Note that three different 99.9th percentile tail latency (strict/general/loose) are set as target SLO. There are three tests in each scenario, and in each test, three LC tenants are set with a same target SLO. We compare QWin with Cake which adopts a different strategy to adjust cores with considering target SLO.

As shown in Figure 10, QWin is able to guarantee different target SLOs for LC tenants in two scenarios, and as expected, as target SLOs become looser, bandwidth of BE tenants becomes higher. A looser target SLO uses less cores, and then the remaining cores can be fully used by BE tenants. At any time, including facing bursty loads and fluctuated service time, QWin can precisely calculate the number of cores, and allocate these cores at a time to meet the changing need, instead of increasing them one by one. Differently, Cake only compares the historical tail latency and target SLO to adjust cores. This results in inaccurate core allocation, and it is extremely hard to quickly respond to incoming bursty load or fluctuated service time. Besides, the reactive feedback control to adjust cores adopted by Cake is always too late to handle the fluctuated service time or bursty load. When a strict target SLO (i.e. ) is set for LC tenants in both two scenarios, even if Cake allocates almost all the cores to LC tenants, target SLO is still not satisfied and the bandwidth of BE tenants is nearly zero. Meanwhile, Cake can even hardly satisfy a looser target SLO for LC tenants. That is because a tiny sharing of cores can also significantly impact the tail latency.

5.3 Effects of core policies

To evaluate the benefits of combining three core policies in QWin, two LC tenants and a BE tenant are consolidated in storage backend. Two LC tenants (T1 and T2) run two Fio workload C and P in Table 1, respectively, and the BE tenant (T3) runs Fio workload H in Table 1. We compare target SLOs (99.9th percentile tail latency) of LC tenants, the bandwidth of BE tenant and the allocation of cores under three policies mentioned in § 3.3.1: 1) conservative policy; 2) aggressive policy; 3) SLO-aware policy; and QWin which dynamically selects one of three core policies based on the actual situation. The target SLO (99.9th percentile latency) for T1(LC, C) and T2(LC, P) is set to and , respectively.

Results of this experiment is shown in Figure 11. For conservative policy, target SLO for two LC tenants cannot be guaranteed. For aggressive policy and QWin, target SLO for two LC tenants are both satisfied, but under aggressive policy, the 99.9th percentile latencies are much lower than target SLOs. For SLO-aware policy, target SLO for T1(LC, C) is not satisfied, while target SLO for T2(LC, P) is satisfied. The bandwidth of BE tenant, T3(BE, H), is constantly changing during the experiment due to the changing of its available cores. We calculate the average bandwidth for four strategies: 4200MB/s under conservative policy, 3759MB/s under aggressive policy, 3906MB/s under SLO-aware policy, and 4116MB/s for QWin. The experimental results show that for QWin, not only target SLO of LC tenants are satisfied (with the closest 99.9 percentile tail latency to target SLO), but also the bandwidth of BE tenant is highest.

We also monitor the core allocation during this experiment. Only the results of core allocation for T1(LC, C) are shown in Figure 11 (lower four graphs). Results for T2(LC, P) are similar but not shown for brevity. Compare to other policies, more cores are allocated for aggressive policy. That is because aggressive policy continuously checks and adjusts cores after processing a request. Such policy is too aggressive, and more cores are allocated to LC tenants, seriously decreasing the bandwidth of BE tenants. conservative policy only adjusts cores once per window, which can not quickly respond to bursty load and fluctuated service time. The frequency to adjust cores under SLO-aware policy is between aggressive policy and conservative policy. Such policy is related to target SLO, and may result in SLO violation (the top graph) due to late adjustment. QWin flexibly combines three core policies, and dynamically select a core policy to adjust cores. During the experiment, both T1(LC, C) and T2(LC, P) automatically adopt proper core policy and adjust cores to respond to the changing needs. And for T1(LC, C), core policies are changed about 20 times in a period of 600 seconds. Therefore, for QWin, not only target SLOs of LC tenants can be guaranteed, but also the bandwidth of BE tenants is maximized.

6 Related Work

Dynamic Core allocation. When deciding how to allocate threads or cores for applications, previous works have allocated cores by monitoring performance metrics, utilization, or statistics [15, 18, 19, 20, 17, 16]. Arachne [18] uses load factor and utilization of allocated cores to increase or decrease cores for LC tenants. Both Caladan [20] and Shenango [19] rely on queueing delay to adjust core allocation for LC tenants. PerfIso [17] ensures that there are always servals idle cores available for LC tenants to accommodate bursty load. Both Heracles [15] and Parties [16] adjust cores allocation between LC and BE tenants depending on each tenant’s slack (the difference between the target SLO and the measured tail latency). However, all these approaches allocate cores at a coarse granularity, and they do not calculate the accurate number of cores according to target SLO. And core adjustment is incremental, and latency suffers during the convergence of adjustment. Moreover, they do not consider the underlying storage device which could have long tail latency and large service time fluctuations.

Request Scheduling. Several systems [3, 4, 5, 6, 35] adopt a sharing model and combine priority-based scheduling with rate limiting control to guarantee target SLOs for LC tenants. Cake [3] adjusts proportional share and reservation of cores between an LC tenant and a BE tenant. Reflex [4] first uses target SLOs and offline profiled data of NVMe SSD to determine the upper rate limits, and schedules LC and BE requests based on priorities and rate limiting. PriorityMeister[5], SNC-Meister[6] and WorkloadCompactor[35] determine each tenant’s priority and rate limits through offline analysis of traces using mathematical methods (e.g. DNC[36] and SNC[37]), or through target SLO and offline profiled data of NVMe SSD. Some Cores are still shared between LC and BE tenants in these systems, easily leading target SLO violations. Besides, the above proactive approaches would cause SLO violations or low utilization if the real access patterns of requests deviate from the workload traces. Several scheduling optimizations have been proposed to reduce tail latency (e.g. Rein[38], Few-to-Many[39], Tail Control[40], Shinjuku[41], and Minos[42]). These works mainly focus on different size of requests within an application. They consider reducing tail latency for key-value stores[38, 41, 42], or require application that are dynamically parallelizable[39, 40]. However, guarantee tail latency SLO is beyond the scope of these works.

Replication-based approaches. Existing works focus on reducing tail latency by making use of duplicate requests (e.g. MittOS[43], and CosTLO[44]) and adaptive replica selection (e.g. C3[45] and NetRS[46]). These approaches consider capability of all servers, and select a faster server to serve requests. QWin is orthogonal to those work as it guarantees target SLO within a storage server. We are interested in exploring ways of integrating these techniques with QWin in the future.

7 Conclusion

This paper presents QWin, a tail latency SLO aware core allocation that enforces target SLO for LC tenants and enhances bandwidth of BE tenants. The effectiveness of QWin comes from its key ideas: 1) an SLO-to-core calculation model, which accurately calculates the number of cores, making the allocation of core satisfied at a time without gradually converging. 2) a flexible request-based window, which quantifies the definitive runtime load for the SLO-to-core calculation model; 3) three core policies, which determine the frequency for core adjustment; 4) an autonomous core allocation, which adjusts cores without any dedicated core. These contributions allow QWin to guarantee target SLO of LC tenants meanwhile increase bandwidth of BE tenants by up to 31x.

Acknowledgments

We thank our shepherd Zhiwei Xu and Xinwu Liu for thier useful feedback. This work is supported by the National Key Research and Development Program of China (2016YFB1000202) and Alibaba Innovative Research (No.11969786).

References

- [1] Ning Li, Hong Jiang, Dan Feng, and Zhan Shi. PSLO: enforcing the X percentile latency and throughput slos for consolidated VM storage. In Proceedings of the Eleventh European Conference on Computer Systems, EuroSys 2016, London, United Kingdom, April 18-21, 2016, pages 28:1–28:14, 2016.

- [2] Yunqi Zhang, Michael A. Laurenzano, Jason Mars, and Lingjia Tang. Smite: Precise qos prediction on real-system SMT processors to improve utilization in warehouse scale computers. In 47th Annual IEEE/ACM International Symposium on Microarchitecture, MICRO 2014, Cambridge, United Kingdom, December 13-17, 2014, pages 406–418, 2014.

- [3] Andrew Wang, Shivaram Venkataraman, Sara Alspaugh, Randy H. Katz, and Ion Stoica. Cake: enabling high-level slos on shared storage systems. In ACM Symposium on Cloud Computing, SOCC ’12, San Jose, CA, USA, October 14-17, 2012, page 14, 2012.

- [4] Ana Klimovic, Heiner Litz, and Christos Kozyrakis. Reflex: Remote flash local flash. In Proceedings of the Twenty-Second International Conference on Architectural Support for Programming Languages and Operating Systems, ASPLOS 2017, Xi’an, China, April 8-12, 2017, pages 345–359, 2017.

- [5] Timothy Zhu, Alexey Tumanov, Michael A. Kozuch, Mor Harchol-Balter, and Gregory R. Ganger. Prioritymeister: Tail latency qos for shared networked storage. In Proceedings of the ACM Symposium on Cloud Computing, Seattle, WA, USA, November 3-5, 2014, pages 29:1–29:14, 2014.

- [6] Timothy Zhu, Daniel S. Berger, and Mor Harchol-Balter. Snc-meister: Admitting more tenants with tail latency slos. In Proceedings of the Seventh ACM Symposium on Cloud Computing, Santa Clara, CA, USA, October 5-7, 2016, pages 374–387, 2016.

- [7] X. U. Zhiwei and L. I. Chundian. Low-entropy cloud computing systems. Scientia Sinica(Informationis), 2017.

- [8] Jeffrey Dean and Luiz André Barroso. The tail at scale. Commun. ACM, 56(2):74–80, February 2013.

- [9] Giuseppe DeCandia, Deniz Hastorun, Madan Jampani, Gunavardhan Kakulapati, Avinash Lakshman, Alex Pilchin, Swaminathan Sivasubramanian, Peter Vosshall, and Werner Vogels. Dynamo: Amazon’s highly available key-value store. In Proceedings of Twenty-first ACM SIGOPS Symposium on Operating Systems Principles, SOSP ’07, pages 205–220, New York, NY, USA, 2007. ACM.

- [10] Jialin Li, Naveen Kr. Sharma, Dan R. K. Ports, and Steven D. Gribble. Tales of the tail: Hardware, os, and application-level sources of tail latency. In Proceedings of the ACM Symposium on Cloud Computing, Seattle, WA, USA, November 3-5, 2014, pages 9:1–9:14, 2014.

- [11] Xiao Zhang, Eric Tune, Robert Hagmann, Rohit Jnagal, Vrigo Gokhale, and John Wilkes. Cpi: CPU performance isolation for shared compute clusters. In Eighth Eurosys Conference 2013, EuroSys ’13, Prague, Czech Republic, April 14-17, 2013, pages 379–391, 2013.

- [12] Sage A. Weil, Scott A. Brandt, Ethan L. Miller, Darrell D. E. Long, and Carlos Maltzahn. Ceph: A scalable, high-performance distributed file system. In 7th Symposium on Operating Systems Design and Implementation (OSDI ’06), November 6-8, Seattle, WA, USA, pages 307–320, 2006.

- [13] Konstantin Shvachko, Hairong Kuang, Sanjay Radia, and Robert Chansler. The hadoop distributed file system. In IEEE 26th Symposium on Mass Storage Systems and Technologies, MSST 2012, Lake Tahoe, Nevada, USA, May 3-7, 2010, pages 1–10, 2010.

- [14] Matt Welsh, David E. Culler, and Eric A. Brewer. SEDA: an architecture for well-conditioned, scalable internet services. In Proceedings of the 18th ACM Symposium on Operating System Principles, SOSP 2001, Chateau Lake Louise, Banff, Alberta, Canada, October 21-24, 2001, pages 230–243, 2001.

- [15] David Lo, Liqun Cheng, Rama Govindaraju, Parthasarathy Ranganathan, and Christos Kozyrakis. Heracles: improving resource efficiency at scale. In Proceedings of the 42nd Annual International Symposium on Computer Architecture, Portland, OR, USA, June 13-17, 2015, pages 450–462, 2015.

- [16] Shuang Chen, Christina Delimitrou, and José F. Martínez. PARTIES: qos-aware resource partitioning for multiple interactive services. In Proceedings of the Twenty-Fourth International Conference on Architectural Support for Programming Languages and Operating Systems, ASPLOS 2019, Providence, RI, USA, April 13-17, 2019, pages 107–120, 2019.

- [17] Calin Iorgulescu, Reza Azimi, Youngjin Kwon, Sameh Elnikety, Manoj Syamala, Vivek Narasayya, Herodotos Herodotou, Paulo Tomita, Alex Chen, Jack Zhang, and Junhua Wang. Perfiso: Performance isolation for commercial latency-sensitive services. In 2018 USENIX Annual Technical Conference (USENIX ATC 18), pages 519–532, Boston, MA, July 2018. USENIX Association.

- [18] Henry Qin, Qian Li, Jacqueline Speiser, Peter Kraft, and John Ousterhout. Arachne: Core-aware thread management. In 13th USENIX Symposium on Operating Systems Design and Implementation (OSDI 18), pages 145–160, Carlsbad, CA, October 2018. USENIX Association.

- [19] Amy Ousterhout, Joshua Fried, Jonathan Behrens, Adam Belay, and Hari Balakrishnan. Shenango: Achieving high CPU efficiency for latency-sensitive datacenter workloads. In 16th USENIX Symposium on Networked Systems Design and Implementation (NSDI 19), pages 361–378, Boston, MA, February 2019. USENIX Association.

- [20] Joshua Fried, Zhenyuan Ruan, Amy Ousterhout, and Adam Belay. Caladan: Mitigating interference at microsecond timescales. In 14th USENIX Symposium on Operating Systems Design and Implementation (OSDI 20), pages 281–297. USENIX Association, November 2020.

- [21] Sage A. Weil, Andrew W. Leung, Scott A. Brandt, and Carlos Maltzahn. RADOS: a scalable, reliable storage service for petabyte-scale storage clusters. In Proceedings of the 2nd International Petascale Data Storage Workshop (PDSW ’07), November 11, 2007, Reno, Nevada, USA, pages 35–44, 2007.

- [22] Jens Axboe. Flexible i/o tester. https://github.com/axboe/fio.git.

- [23] Vasily Tarasov, Erez Zadok, and Spencer Shepler. Filebench: A flexible framework for file system benchmarking. login Usenix Mag., 41(1), 2016.

- [24] Abutalib Aghayev, Sage A. Weil, Michael Kuchnik, Mark Nelson, Gregory R. Ganger, and George Amvrosiadis. File systems unfit as distributed storage backends: lessons from 10 years of ceph evolution. In Proceedings of the 27th ACM Symposium on Operating Systems Principles, SOSP 2019, Huntsville, ON, Canada, October 27-30, 2019, pages 353–369, 2019.

- [25] Jacob Leverich and Christos Kozyrakis. Reconciling high server utilization and sub-millisecond quality-of-service. In Ninth Eurosys Conference 2014, EuroSys 2014, Amsterdam, The Netherlands, April 13-16, 2014, pages 4:1–4:14, 2014.

- [26] Dan Ardelean, Amer Diwan, and Chandra Erdman. Performance analysis of cloud applications. In 15th USENIX Symposium on Networked Systems Design and Implementation, NSDI 2018, Renton, WA, USA, April 9-11, 2018, pages 405–417, 2018.

- [27] Sungjoon Koh, Changrim Lee, Miryeong Kwon, and Myoungsoo Jung. Exploring system challenges of ultra-low latency solid state drives. In 10th USENIX Workshop on Hot Topics in Storage and File Systems, HotStorage 2018, Boston, MA, USA, July 9-10, 2018, 2018.

- [28] Mingzhe Hao, Gokul Soundararajan, Deepak R. Kenchammana-Hosekote, Andrew A. Chien, and Haryadi S. Gunawi. The tail at store: A revelation from millions of hours of disk and SSD deployments. In 14th USENIX Conference on File and Storage Technologies, FAST 2016, Santa Clara, CA, USA, February 22-25, 2016, pages 263–276, 2016.

- [29] Shiqin Yan, Huaicheng Li, Mingzhe Hao, Michael Hao Tong, Swaminathan Sundararaman, Andrew A. Chien, and Haryadi S. Gunawi. Tiny-tail flash: Near-perfect elimination of garbage collection tail latencies in NAND ssds. In 15th USENIX Conference on File and Storage Technologies, FAST 2017, Santa Clara, CA, USA, February 27 - March 2, 2017, pages 15–28, 2017.

- [30] Shine Kim, Jonghyun Bae, Hakbeom Jang, Wenjing Jin, Jeonghun Gong, SeungYeon Lee, Tae Jun Ham, and Jae W. Lee. Practical erase suspension for modern low-latency ssds. In 2019 USENIX Annual Technical Conference, USENIX ATC 2019, Renton, WA, USA, July 10-12, 2019, pages 813–820, 2019.

- [31] John D. C. Little. OR FORUM - little’s law as viewed on its 50th anniversary. Oper. Res., 59(3):536–549, 2011.

- [32] George Prekas, Marios Kogias, and Edouard Bugnion. Zygos: Achieving low tail latency for microsecond-scale networked tasks. In Proceedings of the 26th Symposium on Operating Systems Principles, Shanghai, China, October 28-31, 2017, pages 325–341, 2017.

- [33] Adam Wierman and Bert Zwart. Is tail-optimal scheduling possible? Operations Research, 60(5):1249–1257, 2012.

- [34] Abutalib Aghayev, Sage A. Weil, Michael Kuchnik, Mark Nelson, Gregory R. Ganger, and George Amvrosiadis. File systems unfit as distributed storage backends: lessons from 10 years of ceph evolution. In Proceedings of the 27th ACM Symposium on Operating Systems Principles, SOSP 2019, Huntsville, ON, Canada, October 27-30, 2019, pages 353–369, 2019.

- [35] Timothy Zhu, Michael A. Kozuch, and Mor Harchol-Balter. Workloadcompactor: reducing datacenter cost while providing tail latency SLO guarantees. In Proceedings of the 2017 Symposium on Cloud Computing, SoCC 2017, Santa Clara, CA, USA, September 24-27, 2017, pages 598–610, 2017.

- [36] Jean-Yves Le Boudec and Patrick Thiran. Network Calculus: A Theory of Deterministic Queuing Systems for the Internet, volume 2050 of Lecture Notes in Computer Science. Springer, 2001.

- [37] Markus Fidler and Amr Rizk. A guide to the stochastic network calculus. IEEE Communications Surveys and Tutorials, 17(1):92–105, 2015.

- [38] Waleed Reda, Marco Canini, P. Lalith Suresh, Dejan Kostic, and Sean Braithwaite. Rein: Taming tail latency in key-value stores via multiget scheduling. In Proceedings of the Twelfth European Conference on Computer Systems, EuroSys 2017, Belgrade, Serbia, April 23-26, 2017, pages 95–110, 2017.

- [39] Md. E. Haque, Yong Hun Eom, Yuxiong He, Sameh Elnikety, Ricardo Bianchini, and Kathryn S. McKinley. Few-to-many: Incremental parallelism for reducing tail latency in interactive services. In Proceedings of the Twentieth International Conference on Architectural Support for Programming Languages and Operating Systems, ASPLOS ’15, Istanbul, Turkey, March 14-18, 2015, pages 161–175, 2015.

- [40] Jing Li, Kunal Agrawal, Sameh Elnikety, Yuxiong He, I-Ting Angelina Lee, Chenyang Lu, and Kathryn S. McKinley. Work stealing for interactive services to meet target latency. In Proceedings of the 21st ACM SIGPLAN Symposium on Principles and Practice of Parallel Programming, PPoPP 2016, Barcelona, Spain, March 12-16, 2016, pages 14:1–14:13, 2016.

- [41] Kostis Kaffes, Timothy Chong, Jack Tigar Humphries, Adam Belay, David Mazières, and Christos Kozyrakis. Shinjuku: Preemptive scheduling for second-scale tail latency. In 16th USENIX Symposium on Networked Systems Design and Implementation, NSDI 2019, Boston, MA, February 26-28, 2019, pages 345–360, 2019.

- [42] Diego Didona and Willy Zwaenepoel. Size-aware sharding for improving tail latencies in in-memory key-value stores. In 16th USENIX Symposium on Networked Systems Design and Implementation, NSDI 2019, Boston, MA, February 26-28, 2019, pages 79–94, 2019.

- [43] Mingzhe Hao, Huaicheng Li, Michael Hao Tong, Chrisma Pakha, Riza O. Suminto, Cesar A. Stuardo, Andrew A. Chien, and Haryadi S. Gunawi. MittOS: Supporting Millisecond Tail Tolerance with Fast Rejecting SLO-Aware OS Interface. In Proceedings of the 26th Symposium on Operating Systems Principles, SOSP ’17, pages 168–183, New York, NY, USA, 2017. ACM.

- [44] Zhe Wu, Curtis Yu, and Harsha V. Madhyastha. Costlo: Cost-effective redundancy for lower latency variance on cloud storage services. In 12th USENIX Symposium on Networked Systems Design and Implementation (NSDI 15), pages 543–557, Oakland, CA, May 2015. USENIX Association.

- [45] P. Lalith Suresh, Marco Canini, Stefan Schmid, and Anja Feldmann. C3: cutting tail latency in cloud data stores via adaptive replica selection. In 12th USENIX Symposium on Networked Systems Design and Implementation, NSDI 15, Oakland, CA, USA, May 4-6, 2015, pages 513–527, 2015.

- [46] Y. Su, D. Feng, Y. Hua, Z. Shi, and T. Zhu. Netrs: Cutting response latency in distributed key-value stores with in-network replica selection. In 2018 IEEE 38th International Conference on Distributed Computing Systems (ICDCS), pages 143–153, July 2018.