Quaternion-based machine learning on topological quantum systems

Abstract

Topological phase classifications have been intensively studied via machine-learning techniques where different forms of the training data are proposed in order to maximize the information extracted from the systems of interests. Due to the complexity in quantum physics, advanced mathematical architecture should be considered in designing machines. In this work, we incorporate quaternion algebras into data analysis either in the frame of supervised and unsupervised learning to classify two-dimensional Chern insulators. For the unsupervised-learning aspect, we apply the principal component analysis (PCA) on the quaternion-transformed eigenstates to distinguish topological phases. For the supervised-learning aspect, we construct our machine by adding one quaternion convolutional layer on top of a conventional convolutional neural network. The machine takes quaternion-transformed configurations as inputs and successfully classify all distinct topological phases, even for those states that have different distributuions from those states seen by the machine during the training process. Our work demonstrates the power of quaternion algebras on extracting crucial features from the targeted data and the advantages of quaternion-based neural networks than conventional ones in the tasks of topological phase classifications.

I Introduction

The phase classification using machine-learning (ML) based techniques has been attracting intense attentions since the pioneering work in 2017 [1]. In addition to the classical phase detections [2, 3] where each phase is well defined by the corresponding order parameters, detecting topological phase transitions [2] is interesting and challenging [4] due to the lack of local order parameters. Recently, the phase detections and classifications have been performed via different ML techniques for classifying various topological invariants [5, 6, 7, 8, 9, 9, 10, 11, 12, 13, 14, 15, 16, 17, 6, 18, 19, 10, 20, 21, 22, 23, 24, 16, 25, 21, 18, 26, 18, 17, 27, 28, 29, 30, 6, 10, 4, 31, 32, 33, 34, 35, 26, 36], including the Chern number [14, 15, 16, 17, 6, 18, 19, 10, 20, 21, 22, 23], winding number [24, 16, 25, 21, 18, 26, 18], index [17, 27, 28, 29, 30, 6, 10, 4, 31, 32, 33, 34, 35, 26, 36], to name a few. In addition to the applied ML architectures, the forms of the inputs for training the machine also play a crucial role in determining the resulting performance of the topological phase detections [4].

For the topological systems with the Chern numbers or the winding numbers as the topological invariants, various types of inputs are used to perform the phase classifications. For instance, the quantum loop topography (QLT) is introduced to construct multi-dimensional images from raw Hamiltonians or wave functions as inputs [14, 17]. The Bloch Hamiltonians are arranged into an arrays to feed the neural networks [24, 16]. In addition, the real-space particle densities and local density of states [15] and the local projections of the density matrix [6] are also used as inputs. From cold-atom experiments, momentum-space density images were generated as inputs for classifications [20]. The time-of-flight images [10, 19], spatial correlation function [10], density–density correlation function [10] and the density profiles formed in quantum walks were also proposed as appropriate inputs [23]. Furthermore, the spin configurations [18] and the Bloch Hamiltonians over the Brillouin zone (BZ) have also been treated as inputs for the neural networks [21, 18]. For these forms of inputs mentioned above, various ML techniques with distinct real-valued neural networks have been applied to discriminate different topological phases.

As the development of artificial neural networks becomes mature, a raise of representation capability of machines is anticipated by generalizing real-valued neural networks to complex-valued ones [37, 38]. Specifically, a quaternion number, containing one real part and three imaginary parts, and the corresponding quaternion-based neural networks [39, 40, 41, 42] are expected to enhance the performance on processing of data with more degrees of freedom than the conventional real-number and complex-number systems. There have been various proposals about quaternion-based neural networks in ML techniques and applications in computer science, such as the quaternion convolutional neural network (qCNN) [43, 44, 38], quaternion recurrent neural network [45], quaternion generative adversarial networks [46], quaternion-valued variational autoencoder [47], quaternion graph neural networks [48], quaternion capsule networks [49] and quaternion neural networks for the speech recognitions [50]. However, the ML-related applications of the quaternion-based neural networks on solving problems in physics are still limited, especially in the topological phase detections, even though the quaternion-related concepts have been applied in some fields in physics [51, 52, 53].

In this work, we perform the Chern-insulator classifications from both supervised- and unsupervised-learning aspects based on the inputs transformed via the quaternion algebra. For the unsupervised learning, we encode the quaternion-transformed eigenstates of Chern insulators via a convolution function as inputs and study them using the principal component analysis (PCA). We found that using only the first two principal elements is not enough to fully classify the Chern insulators, consistent with Ming’s work [23]. Further studies show that the performance can be improved by including more principal components. For the supervised learning, we construct a quaternion-based neural network in which the first layer is a quaternion convolutional layer. We then show that this quaternion-based machine has better performance than a conventional CNN machine. Our machine is good not only for testing datasets but also for identifying data points that have different distributions from those seen by our machine in the training processes. The good performance can be attributed to the similarities between the formula of the Berry curvatures and our quaternion-based setup. Therefore, our work demonstrates the power of the quaternion algebra on extracting relevant information from data, paving the way to applications of quaternion-based ML techniques in topological phase classifications.

The outline of the remaining part of this work is as follows. In Sec. II, we introduce the model Hamiltonian, generating the data for our classification tasks, and the quaternion convolution layer used in this work. PCA analysis of the quaternion-transformed eigenstates is discussed in Sec. III. The data preparations, the network structures and the performance of the quaternion-based supervised learning task are given in Sec. IV. Discussions and Conclusions are presented in Sec. V and Sec. VI, respectively. We have three appendixes. Appendix A shows the details of data preparation. Appendix B provides a brief introduction to the quaternion algebra. Some properties of functions in Sec. III are included in Appendix C.

II Model and quaternion convolutional layer

II.1 Model

A generic two-band Bloch Hamiltonian with the aid of the identity matrix and Pauli matrices is written as

| (1) |

where is the crystal momentum in the 2D BZ (). can change energy of the system but has nothing to do with topology, so it will be ignored in the remaining part of this paper. The vector acts as an -dependent external magnetic field to the spin , so that the eigenstate of the upper (lower) band at each will be the spin pointing antiparallel (parallel) to . It will be reasonable that the unit vector embeds the topology in this system. Indeed, the topological invariant is the Chern number C,

| (2) |

where the integrand is the Berry curvature and the integration is over the first BZ. For brevity, sometimes we will omit the argument in functions. The Chern number is analogous to the skyrmion number in real space [54]. The integral is the total solid angle subtended in the BZ, so the Chern number counts how many times wraps a sphere.

We construct the normalized spin configurations based on the following models. For topological systems, we choose the Hamiltonian with , where

| (3) |

with positive integer and real parameter to control the Chern number. c is the vorticity for the number of times the inplane component ( and ) swirls around the origin. The sign of the c indicates a counter-clockwise or clockwise swirl. For a nontrivial topology, has to change sign somewhere in the BZ for to wrap a complete sphere. Therefore, is required. Some examples of spin texture based on Eq. (3) are shown in Fig. 1. For , the model is the Qi-Wu-Zhang (QWZ) model [55]. For a given , the Chern number can be either depending on the value of :

| (4) |

The topological phase diagram is shown in Fig. 2. denotes a topologically trivial phase and a nontrivial phase.

II.2 Quaternion convolutional layer

A quaternion number has four components, the first of which stands for the real part and the other three of which stand for the imaginary parts. Given two quaternions and , their product is given by

| (5) |

which can be written as the matrix product form

| (6) |

To implement a quaternion convolutional (q-Conv) layer in numerical programming, we will regard the two quaternions as a matrix and a column matrix, respectively:

| (7) |

More details of quaternion algebra are described in Appendix B.

A conventional CNN contains a real-valued convolutional layer to execute the convolution of the input and the kernel. Let the input have the shape: (Height Width Channel) and the shape of the kernel be . The convolution will produce an output , , whose elements are

| (8) |

Here the stride is assumed to be both in the width and the height directions. The indices and are spatial indicators, is the index of channel in the input feature map and is the kernel index. The shape of the output will be .

Assume that the input has four components. To uncover the entanglement among components through CNN, we will utilize the quaternion product. Now, we introduce another dimension–depth–which is four, as a quaternion number of four components. Both of the input and the kernel have depth of four as two quaternion numbers. The product of and will have depth of four as a quaternion in Eq. (5). Referring to Eq. (7) where we show a matrix representation to implement quaternion algebra and thinking of as and as in Eq. (7), we transform the depth-four input into a matrix, , and keep the kernel still of depth 4, , where . The product of and , say , will have depth four as shown in Eq. (9). Further considering the shapes of and , the convolution is given by

| (9) |

where the summations over ,, are equivalent to those in Eq. (8) and the summation over is for the quaternion product.

More specifically, we consider an input data as (four color squares on the left of Fig. 3) and four kernels encoded in , given in the following

| (10) |

The output feature maps is then calculated based on Eq. (5). As the first step, we permute the order of to obtain

| (11) | |||

(see the four sets of sqaures in the middle of Fig. 3). We then convolute those four quaternions ( with and 4) with four kernels ( with and 4) in the following way:

as shown in the middle of Fig. 3. Finally, we sum over the above four quaternions to get the output feature maps , as shown on the right of Fig. 3.

III principal component analysis

Principal component analysis (PCA) is a linear manifold learning that is to find the relevant basis set among data [56, 57].

We prepare eigenstates of Eq. (1), where stands for the upper (lower) band. For a topologically nontrivial state, the phase cannot be continuous over the whole BZ. Therefore, we can divide the whole BZ into two parts, in each part of them the topological wave function has continuously well-defined phase. We then pick up a gauge by choosing two regions according to the sign of in Eq. (3):

| (12) | ||||

and

| (13) | ||||

where . In this choice of gauge, the first (second) component of is real-valued when , and the second (first) component of is real-valued when .

By translating with , into a quaternion number of four components, we have

| (14) |

To see the correlation of states over , we define the quantity to be the quaternion-based convolutions:

| (15) |

where is the conjugate of . It can be proved that is real-valued. Therefore, of all in the BZ based on a given Hamiltonian can be analysed by using PCA.

We collected various of all BZ within seven topological phases as the dataset for PCA. For each topological phase, 30 ’s were prepared, so the total amount of data was 210. The data for six non-trivial phases were generated based on Eq. (3) with (the sign of determines the sign of ). For the trivial phase, we prepared five data points from each of six combinations of , where and , and then there are totally 30 data. To augment the number of data, we add Gaussian noises at every of the model [Eq. (3)] such that without closing the band gap.

It is notable that for are featureless, for have a dipole moment, and for have a quadruple moment, and for seemingly have a primary dipole and a secondary quadruple moment. The remarkable features imply that the convolution function is a good choice for topological classifications.

We examine data with the standard deviation (SD) equal to 0, 0.1, 0.2 and 0.3 respectively, and show the first two PCs of 210 pieces of data for each SD in Fig. 5. In Fig. 5, data are clustered into four groups and their variances increase with SD. It is successful to separate different topological phases into different clusters via PCA. However, some clusters contain two topological phases of Chern numbers: , , and . This modulo 4 resemblance has also be observed in a previous study [23].

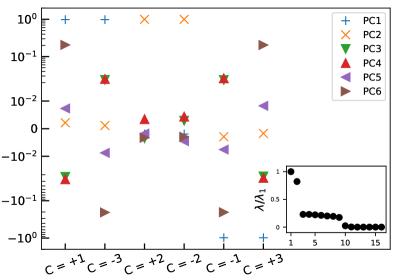

We find that including more PCs helps separate different classes in each cluster. Figure 6 shows the first six PCs of data in topologically non-trivial phases, where PC denotes the -th PC component. One can find that PC1 and PC2 in each pair of , , and are nearly identical, as also shown in Fig. 5. By incorporating more PCs up to PC6, all topological classes are completely classified. Via the proposed convolution, topological states can be successfully classified by using PCA, a linear classification machine.

Compared to the eigenstates, the spin configurations are gauge-invariant. Therefore, it is desired to classify the topology of the spin configurations via PCA. Unfortunately, the performance was not good, which will be discussed later. In order to directly classify the spin configurations, in the following, we train a qCNN machine via the supervised learning algorithm to discriminate spin configurations with different topological phases.

IV Supervised learning of CNN and the qCNN

IV.1 Datasets

The input data are normalized spin configurations , laying on a square lattice with periodic boundary conditions, and their corresponding topological phases are labels with one-hot encoding. We prepared four datasets: training, validation, testing and prediction dataset (more details are described in Appendix A).

The first three datasets are well known in conventional deep learning procedure [58]. The data in the training, validation and testing datasets will be constructed by the same models so that they have the same data distributions even though they are all different data points. Therefore, we denote these three datasets as in-distribution datasets. The data in the prediction dataset, however, are constructed by similar but different models from those for the in-distribution datasets. Therefore, the data in the prediction dataset are not only unseen by the machine during the training process, but also of different distributions. We denote the prediction dataset as a out-of-distribution dataset, which is used to understand whether our machine can also classify spin configurations constructed by other similar but different topological models.

The data pool containing training and validation datasets is constructed as follows. Based on the Eq. (3), we firstly prepared 5760 data points of of nine topological phases with Chern number ranging from -4 to 4 and each phase contains 640 data points. Besides of 5760 spin configurations, the dataset contains 360 two-dimensional spin vortices. A spin-vortex has an in-plane spin texture that winds around a center, which is generated by setting one of three components in Eq. (3) to be zero. By including spin vortices, the machine can tell the difference between 3D winding (non-trivial) and 2D winding (trivial) spin configurations . After the training process, the trained machine is scored by a testing dataset with the same composition of nine phases as that in the training (and validation) dataset. Importantly, without changing the topologies, the Gaussian distributed random transition and random rotation imposed on these three datasets can increase the diversity of dataset and enhance the ability of generalization of the trained machine.

The prediction dataset contains six categories of spin configurations. The first category is generated with uniformly distributed from to . In the second and the third categories, we change the sign of (the second category) and swapping and of (the third category). Finally, we consider three categories for trivial states, which are ferromagnetic (FM), conical, and helical states. FM can be viewed as 1D uncompleted winding configuration while conical and helical can be viewed as 2D uncompleted ones. In total, we prepared six categories for the prediction dataset. More details about data preparations will be described in Appendix A.

For the conventional CNN, we use as the input data. For the qCNN, in order to feed the input data into the qCNN classifier, we transform the 3D spin vector into an unit pure quaternion,

| (16) |

where the scalar part (the first component) is zero and the vector part is . Therefore, the inputs of qCNN are effectively equivalent to those of CNN.

IV.2 network structure and performance

The schematic architectures of these two classifiers are shown in Fig. 7, where the last black arrows point to nine neurons for nine topological phases. In the qCNN classifier, we implement a quaternion convolution (q-Conv) layer as the first layer [red dotted cuboid in Fig. 7(b)], and the operations in a q-Conv layer are based on the quaternion algebra to hybridize spin components. Then the next three layers are typical 3D convolutions (Conv3Ds). Our Conv3Ds do not mix depths by choosing proper sizes of kernels. Followed the Conv3D layers is a 2D convolution (Conv2D) layer to mix data in depth: nine kernels of kernel size 4×1 will transform data from to . On the contrary, the CNN classifer has only Conv2D layers. Although the qCNN is more complex than the CNN, the total network parameters of the qCNN is however less than the CNN. This is one advantage of the qCNN over the conventional CNN.

In order for classifiers to satisfy some physically reasonable conditions, two special designs are implemented. Firstly, we extend the points out of the BZ by padding the input data according to the periodic boundary conditions [59].

Secondly, the first layer takes “overlapping” strides with an arctan activation function, and the latter layers take “non-overlapping” strides with the tanh activation function for both qCNN and CNN machines. Figure 8 illustrates how the “overlapping” and “non-overlapping” feature mapping can be manipulated by varying the size of stride.

Then, both qCNN and CNN machines are trained. The learning curves of both machines are shown in Fig. 9. The CNN machine (orange and light orange lines) jumps over a big learning barrier at around the epoch. After that, the training and the validation accuracy (orange and light orange line respectively) are separated and do not converge up to end of this training process. Even though the same training (and validation) dataset is used in the training process, the learning curves of the qCNN machine (blue and light blue lines) are qualitatively different. The training and the validation accuracy are separated around epoch, but the difference between these two accuracies decreases with increasing epochs. After the training procedure finished, the qCNN (CNN) machine gets 99.67% (94.12%) testing accuracy. This difference in accuracy results from the spin-vortex dataset, where the qCNN works well but CNN dose not.

The trained machines are ready to do prediction, and the result is shown in Fig. 10.

In Fig. 10, since the first category contains of uniformly distributed , where a few data points are very close to the phase boundaries , the accurate rate of the the qCNN is slightly low at . For the second and third categories, we choose , away from the phase transition points, and the performance is nearly perfect. For the uncompleted winding configurations, the qCNN, different from the conventional CNN, can accurately classifies FM, helical and conical states after learning the spin-vortex states. This is the main advantage of the qCNN over the conventional CNN, which is expected to result from the quaternion algebra.

The processing times of two classifiers are summarized in Table 1. Since the q-Conv layer has massive matrix multiplication, the time of one epoch for qCNN is longer than that of convention CNN in our task, especially utilized by CPU.

| Processing time (sec) | ||

|---|---|---|

| Architecture | CPU | GPU |

| CNN | 6.115 | 1.011 |

| qCNN | 72.2 | 3.108 |

V discussions

In this work, we apply the quaternion multiplication laws to both PCA (unsupervised learning) and qCNN (supervised learning). The two methods take different inputs; the former one takes scalar function , which is something to do with a convolution of the wave function, and the second one takes the pure quaternion function , where real part is zero and the imaginary part is the spin vector. We will explain physical intuitions and comment the mechanisms in this section.

On PCA, we did not take simply as the input because the representation of the vector depends on coordinates but the topology is not. We believed that the topology as a global geometry should be embedded in the correlations. The correlation of dot products of turned out to fail since relative angles of two spins were not informative to understand the swirling of on . If one tries the quaternion in the convolution Eq. (15) by , the result is still inappropriate for the convolution is independent of the sign of to discriminate topological states (see Appendix C). Eventually, we found that the defined in Eq. (15) was a proper quantity to characterize topology after PCA. The has the property that it is featured (featureless) when the wave function is unable (able) to be globally continuous that happens in the nontrivial (trivial) phases. Unfortunately, the is not gauge invariant. The results were based on the choice of gauge in Eqs. (12) and (13) that made the wave function continuous locally and discontinuous at where . We had examined other choices of gauge and found that the present gauge exhibited the PCA features most clearly (results not shown). We remark that our PCA results looked good because the inputs were ingeniously designed and the PCA method might not be more practical than the qCNN method.

For qCNN, it is interesting to understand the mechanism behind. There are several possible factors promoting the performance of our supervised learning machine. The first one is that the size of kernel in the first convolutional layer is 2 × 2 with stride = 1, which means the machine can collect spin information among four nearest neighbors [see Fig. 11(b)]. We know that the Chern number is the integral of the Berry curvature in the BZ, and the Berry curvature is twice of the solid angle. A solid angle subtended by three unit vectors , , and is obtained by

| (17) |

Our choice of the size of the kernel in the first hidden layer is the minimal of that mixes only the nearest-neighboring spins. In this way, it is very possible to enforce the machine to notice the solid angle extended in this plaquette. The second factor is the quaternion product. Recall that the conventional CNN might correlate spins in neighboring due to the feature map through the kernel. However, the map does not mix the components of spins. In comparison, the qCNN is more efficient for it directly entangle spins via the quaternion product. It is this entanglement of spin components by the quaternion product that makes the scalar and vector products in calculating the solid angle (see Eq. (17)) become possible to be realized by the machine. As a solid angle involves at least three spins and the feature map by the kernel is just linear, a nonlinear transformation is crucial to create high-order (three spins) terms in the expansion. This is possible and proved in Ref. [60] that multiplication of variables can be accurately realized by simple neural nets with some smooth nonlinear activation function. Therefore, the third factor is the non-linear activation function, arctan in this work. We expect that using arctan as the activation function can further help the machine to learn correct representations because the calculation of a solid angle involves the arctan operation in Eq. (17). This belief is indeed supported by the results shown in Fig. 12, where the arctan activation function outperforms the ReLU and tanh activation functions over nine different datasets. In summary, several factors are combined to enhance the performance of our machine as follows. The quaternion-based operations in the q-Conv layer mix not only spins with their neighbors but also components of spins. When these linear combinations are fed into the non-linear activation functions in our qCNN, the output can be viewed as an expansion of a non-linear function, which may contain a term having both the scalar- and vector-product of neighboring spins, similar to that in Eq. (17). Therefore, after the optimization process, the machine may keep increasing the weight of a solid-angle-related term and eventually learn to classify the topological phases.

Also, adding some noises to the training dataset helped our supervised-learning machine to learn the generic feature of our data. We found that when the training data was generated directly from Eq. (3) without adding any noise, the machine worked well for training and testing datasets but had poor performance on all the prediction dataset. This could be understood by noting that the topological invariant is determined by the sign of , which appears in the component in Eq. (3). By using the dataset without noise, the machine might naively regard the component as the judgment of topology when the training data does not contain wide distribution. We note that the topology is invariant when the spin texture is uniformly translated or rotated. So we trained our machine with randomly translated and rotated data to avoid incorrect learning. (See data preparation in Appendix A.) From our observations, the performance on the prediction dataset was remarkably enhanced when the noise was included, which supports our idea.

VI Conclusions

In summary, we classify topological phases with distinct Chern numbers via two types of machine-learning techniques. For the unsupervised part, we propose a quaternion-based convolution to transform the topological states into the input data. With this convolution, distinct topological states are successfully classified by PCA, a linear machine for classification.

We then go to the supervised learning part where, in contrast to the conventional CNN, we successfully use the qCNN to classify different topological phases. This work demonstrates the power of quaternion-based algorithm, especially for the topological systems with the Chern number as the topological invariants.

Acknowledgements.

This study is supported by the Ministry of Science and Technology (MoST) in Taiwan under grant No. 108-2112-M-110-013-MY3. M.R.L. and W.J.L. contributed equally to this work.Appendix A Data preparation

Training dataset— The normalized spin configurations are based on the formula [refer to Eq. (3)]

in a square lattice with periodic boundary conditions. For each and , we generated four sets , and . The former two sets are topologically nontrivial and each has 640 configurations for different values of :

where are random numbers in the corresponding ranges. The latter two sets are topologically trivial and each has 80 (identical) configurations:

So, for each there were 1280 nontrivial spin configurations and 160 trivial ones. Then the primitive data passed through some manipulations as the effect of data augmentation without changing the topologies. Each spin configuration was translated (), rotated (), and then polluted with noise ():

| (18) |

where is a random displacement in , stands for a random 3D rotation of the spin, and is Gaussian noise () with standard deviation in each component. (The spin should be normalized lastly.) and are homogeneous transformations in , but , inhomogeneous, picks only 30 out of 1600 sites.

In addition to the 5760 sets of data in nine topological phases ( to ), we also include 360 spin vortex states, which are states, based on the formulas:

| (19) |

| (20) |

| (21) |

with their normalized configurations. For each , 30 spin configurations were generated with random ranging from -3 to 3. The data also went through translation and rotation but no noise .

Therefore, we generated 6120 spin configurations totally as the training dataset.

Among the training dataset, 25% of the data are assigned as the validation dataset (light color lines in Fig. 9).

Testing dataset—

In addition to the training and validation dataset, we prepare extra 1224 spin configurations as the testing dataset, with the same composition as the training and validation datasets. This dataset is prepared for scoring the trained classifiers.

Prediction dataset— The prediction dataset is an extra dataset, different from the aforementioned three datasets. It consists of six categories, each of which was not seen by the machine during the training process. This dataset was processed by and but not . The six categories were constructed as following. The First category, the “chern” category, is a set which was generated from Eq. (3) with 30 ’s uniformly ranging from -3 to 3 for each :

As a reminder, this category is different from the training dataset. The training data includes the specific at trivial phase, and two intervals and in nontrivial phases. Therefore, 20% of this category is close to the phase transition .

The next two categories were generated based on Eq. (3) with . The first one was constructed by changing the sign of the -component:

The second one was constructed by swapping the and the components:

The next two categories, called helical and conical spin configurations, were generated based on the following equation

Here is for the helical state and is for a conical state. The last category contains the ferromagnetic spin configurations (FM) whose -component are a constant and - and -component are zero. Some spin configurations in the prediction dataset are illustrated in Fig. 13.

Appendix B Quaternion

The quaternion number system were introduced by Irish mathematician William Rowan Hamilton in 1843 as an extension of the complex numbers. A quaternion number is composed of four real numbers and to be

| (22) |

where is the basis. Sometimes it is written as or in short. Here is called the scalar (or real) part of the quaternion and the vector (or imaginary) part. A quaternion without scalar part is called pure quaternion. Similar to the imaginary number,

| (23) |

Importantly, the algebra of quaternions is noncommutative, based on

| (24) | |||

The conjugate of the quaternion is defined to be

| (25) |

and the norm is given by

| (26) |

Therefore the inverse of is defined as

| (27) |

If is unit quaternion, then their inverse is exactly their conjugate. The multiplication (so-called quaternion product or Hamilton product) of two quaternions and is given by

| (28) |

To realize the algebra in Eqs. (23) and (24), one can choose the representation for the quaternion numbers with

so that

Reversely,

It is evident that in terms of matrices the commutativity of multiplication of quaternions dose not hold. Furthermore, in the matrix representation , conjugation of a quaternion being equal to its transposition. More specifically, an unit quaternion have a property in the representations.

Appendix C Details of definition in Section III

In this section, we provide some properties about the function we defined in PCA section and the convolution of normalized spin vector . Recall that the convolution we defined in the PCA section is as follows

| (29) |

From now on, because of lack of notations, we denoted upper-bar (e.g.:) being conduction band, and lower-bar (e.g. ) being valence band. A vertical line with a variable stand for its corresponding position of BZ.

Property C.1.

is a purely real-valued function.

Proof.

Since and are one-to-one correspondence in BZ, and summing over BZ or BZ are equivalent. Once we take conjugate on , then

The first line come from the property of conjugate on quaternions, the second line come from the equivalence of summing whole BZ, and the third line come from . We see that conjugation of is itself. Therefore, is a purely real-valued function. ∎

Recall that in our model Eq. (3), are both even of and is odd of . That is, given , there is such that

| (30) |

In addition, those two points are one-to-one correspondence in BZ, and identical at the point. Notice that once we normalized by , then each component of is function of .

Property C.2.

Encoding into quaternion by , the convolution is independent on the sign of .

Proof.

We consider two convolutions with but over , and opposite sign , respectively:

| (31) | ||||

and

| (32) | ||||

where are vector parts of the above quaternion product at , respectively. In Property (C.1), we have shown the convolution over entire BZ is a purely real-valued function. That is, we only need to consider dot product of vector part as the quaternion product of two quaternion when both doesn’t have real part. Now, the Eq. (30) and the assumption gives us the fact

Since , we can conclude that

| (33) |

Substituting Eq. (33) into Eq, (32), we identify Eqs. (31) and (32). Therefore, applying opposite , the convolutions over BZ gets exactly the same value. That is, the convolution is independent on the sign of once we encoded quaternion by . ∎

Property (C.2) is based on how we encoded quaterion number. The following two properties are based on the way we transformed quaternion in main text.

Property C.3.

If we encode spinor , , into quaternion number by following

Then,

Proof.

According to Property (C.1), it’s suffice to show real part. By the assumption, given and let with , we have

| (34) | ||||

where is the vector part of the quaternion product. Notice that the second line above is a quaternion product, but the third line above is dot product between two vectors. On the other hand

| (35) | ||||

where is the imaginary part. It’s obvious that Eq. (34) and Eq. (35) have exactly the same value in real part. ∎

Property C.4.

Proof.

One can observe eigenvalues to conclude that

| (36) |

From now on, we consider for all , and therefore the spinor has the following form

After transforming the above two eigenstates into quaternions, we calculate the value of at :

| (37) | ||||

where is the vector part of the above quaternion product at fixed point. Recalling Property (C.1) that shows the vector part has no contribution for real-valued function , it is suffice to calculate the real part of the quaternion product at fixed point.

From Eq. (30), there are two points , and such that

| (38) |

Therefore, the terms at and at in Eq. (37) will cancel with each other. Note that values at and at are zero in Eq. (37) since at these points in our model Eq. (3). Thus, if for all BZ.

Similarly, we assume for all BZ. After calculation, the value of is the same as Eq. (37). Therefore, we can conclude that if has the same sign in the entire BZ, then . ∎

References

- Carrasquilla and Melko [2017] J. Carrasquilla and R. G. Melko, Machine learning phases of matter, Nature Physics 13, 431 (2017).

- Bedolla et al. [2020] E. Bedolla, L. C. Padierna, and R. Castañeda-Priego, Machine learning for condensed matter physics, Journal of Physics: Condensed Matter 33, 053001 (2020).

- Mehta et al. [2019] P. Mehta, M. Bukov, C.-H. Wang, A. G. Day, C. Richardson, C. K. Fisher, and D. J. Schwab, A high-bias, low-variance introduction to machine learning for physicists, Physics reports 810, 1 (2019).

- Beach et al. [2018] M. J. Beach, A. Golubeva, and R. G. Melko, Machine learning vortices at the kosterlitz-thouless transition, Physical Review B 97, 045207 (2018).

- Yoshioka et al. [2018] N. Yoshioka, Y. Akagi, and H. Katsura, Learning disordered topological phases by statistical recovery of symmetry, Physical Review B 97, 205110 (2018).

- Carvalho et al. [2018] D. Carvalho, N. A. García-Martínez, J. L. Lado, and J. Fernández-Rossier, Real-space mapping of topological invariants using artificial neural networks, Physical Review B 97, 115453 (2018).

- Balabanov and Granath [2020a] O. Balabanov and M. Granath, Unsupervised learning using topological data augmentation, Physical Review Research 2, 013354 (2020a).

- Balabanov and Granath [2020b] O. Balabanov and M. Granath, Unsupervised interpretable learning of topological indices invariant under permutations of atomic bands, Machine Learning: Science and Technology 2, 025008 (2020b).

- Greplova et al. [2020] E. Greplova, A. Valenti, G. Boschung, F. Schäfer, N. Lörch, and S. D. Huber, Unsupervised identification of topological phase transitions using predictive models, New Journal of Physics 22, 045003 (2020).

- Ho and Wang [2021] C.-T. Ho and D.-W. Wang, Robust identification of topological phase transition by self-supervised machine learning approach, New Journal of Physics 23, 083021 (2021).

- Zhang et al. [2021] L.-F. Zhang, L.-Z. Tang, Z.-H. Huang, G.-Q. Zhang, W. Huang, and D.-W. Zhang, Machine learning topological invariants of non-hermitian systems, Physical Review A 103, 012419 (2021).

- Narayan and Narayan [2021] B. Narayan and A. Narayan, Machine learning non-hermitian topological phases, Physical Review B 103, 035413 (2021).

- Yu and Deng [2021] L.-W. Yu and D.-L. Deng, Unsupervised learning of non-hermitian topological phases, Physical Review Letters 126, 240402 (2021).

- Zhang and Kim [2017] Y. Zhang and E.-A. Kim, Quantum loop topography for machine learning, Physical review letters 118, 216401 (2017).

- Cheng et al. [2018] Q.-Q. Cheng, W.-W. Luo, A.-L. He, and Y.-F. Wang, Topological quantum phase transitions of chern insulators in disk geometry, Journal of Physics: Condensed Matter 30, 355502 (2018).

- Sun et al. [2018] N. Sun, J. Yi, P. Zhang, H. Shen, and H. Zhai, Deep learning topological invariants of band insulators, Physical Review B 98, 085402 (2018).

- Zhang et al. [2020] Y. Zhang, P. Ginsparg, and E.-A. Kim, Interpreting machine learning of topological quantum phase transitions, Physical Review Research 2, 023283 (2020).

- Kerr et al. [2021] A. Kerr, G. Jose, C. Riggert, and K. Mullen, Automatic learning of topological phase boundaries, Physical Review E 103, 023310 (2021).

- Käming et al. [2021] N. Käming, A. Dawid, K. Kottmann, M. Lewenstein, K. Sengstock, A. Dauphin, and C. Weitenberg, Unsupervised machine learning of topological phase transitions from experimental data, Machine Learning: Science and Technology 2, 035037 (2021).

- Rem et al. [2019] B. S. Rem, N. Käming, M. Tarnowski, L. Asteria, N. Fläschner, C. Becker, K. Sengstock, and C. Weitenberg, Identifying quantum phase transitions using artificial neural networks on experimental data, Nature Physics 15, 917 (2019).

- Che et al. [2020] Y. Che, C. Gneiting, T. Liu, and F. Nori, Topological quantum phase transitions retrieved through unsupervised machine learning, Physical Review B 102, 134213 (2020).

- Chung et al. [2021] M.-C. Chung, T.-P. Cheng, G.-Y. Huang, and Y.-H. Tsai, Deep learning of topological phase transitions from the point of view of entanglement for two-dimensional chiral p-wave superconductors, Physical Review B 104, 024506 (2021).

- Ming et al. [2019] Y. Ming, C.-T. Lin, S. D. Bartlett, and W.-W. Zhang, Quantum topology identification with deep neural networks and quantum walks, npj Computational Materials 5, 1 (2019).

- Zhang et al. [2018] P. Zhang, H. Shen, and H. Zhai, Machine learning topological invariants with neural networks, Physical review letters 120, 066401 (2018).

- Holanda and Griffith [2020] N. Holanda and M. Griffith, Machine learning topological phases in real space, Physical Review B 102, 054107 (2020).

- Tsai et al. [2021] Y.-H. Tsai, K.-F. Chiu, Y.-C. Lai, K.-J. Su, T.-P. Yang, T.-P. Cheng, G.-Y. Huang, and M.-C. Chung, Deep learning of topological phase transitions from entanglement aspects: An unsupervised way, Physical Review B 104, 165108 (2021).

- Zhang et al. [2017] Y. Zhang, R. G. Melko, and E.-A. Kim, Machine learning z 2 quantum spin liquids with quasiparticle statistics, Physical Review B 96, 245119 (2017).

- Mano and Ohtsuki [2019] T. Mano and T. Ohtsuki, Application of convolutional neural network to quantum percolation in topological insulators, Journal of the Physical Society of Japan 88, 123704 (2019).

- Su et al. [2019] Z. Su, Y. Kang, B. Zhang, Z. Zhang, and H. Jiang, Disorder induced phase transition in magnetic higher-order topological insulator: A machine learning study, Chinese Physics B 28, 117301 (2019).

- Lian et al. [2019] W. Lian, S.-T. Wang, S. Lu, Y. Huang, F. Wang, X. Yuan, W. Zhang, X. Ouyang, X. Wang, X. Huang, et al., Machine learning topological phases with a solid-state quantum simulator, Physical review letters 122, 210503 (2019).

- Richter-Laskowska et al. [2018] M. Richter-Laskowska, H. Khan, N. Trivedi, and M. M. Maśka, A machine learning approach to the berezinskii-kosterlitz-thouless transition in classical and quantum models, arXiv preprint arXiv:1809.09927 (2018).

- Zhang et al. [2019] W. Zhang, J. Liu, and T.-C. Wei, Machine learning of phase transitions in the percolation and x y models, Physical Review E 99, 032142 (2019).

- Rodriguez-Nieva and Scheurer [2019] J. F. Rodriguez-Nieva and M. S. Scheurer, Identifying topological order through unsupervised machine learning, Nature Physics 15, 790 (2019).

- Tsai et al. [2020] Y.-H. Tsai, M.-Z. Yu, Y.-H. Hsu, and M.-C. Chung, Deep learning of topological phase transitions from entanglement aspects, Physical Review B 102, 054512 (2020).

- Scheurer and Slager [2020] M. S. Scheurer and R.-J. Slager, Unsupervised machine learning and band topology, Physical review letters 124, 226401 (2020).

- Caio et al. [2019] M. D. Caio, M. Caccin, P. Baireuther, T. Hyart, and M. Fruchart, Machine learning assisted measurement of local topological invariants, arXiv preprint arXiv:1901.03346 (2019).

- Trabelsi et al. [2018] M. Trabelsi, P. Kakosimos, and H. Komurcugil, Mitigation of grid voltage disturbances using quasi-z-source based dynamic voltage restorer, in 2018 IEEE 12th International Conference on Compatibility, Power Electronics and Power Engineering (CPE-POWERENG 2018) (IEEE, 2018) pp. 1–6.

- Gaudet and Maida [2018] C. J. Gaudet and A. S. Maida, Deep quaternion networks, in 2018 International Joint Conference on Neural Networks (IJCNN) (IEEE, 2018) pp. 1–8.

- García-Retuerta et al. [2020] D. García-Retuerta, R. Casado-Vara, A. Martin-del Rey, F. De la Prieta, J. Prieto, and J. M. Corchado, Quaternion neural networks: state-of-the-art and research challenges, in International Conference on Intelligent Data Engineering and Automated Learning (Springer, 2020) pp. 456–467.

- Isokawa et al. [2009] T. Isokawa, N. Matsui, and H. Nishimura, Quaternionic neural networks: Fundamental properties and applications, in Complex-valued neural networks: utilizing high-dimensional parameters (IGI global, 2009) pp. 411–439.

- Parcollet et al. [2020] T. Parcollet, M. Morchid, and G. Linarès, A survey of quaternion neural networks, Artificial Intelligence Review 53, 2957 (2020).

- Matsui et al. [2004] N. Matsui, T. Isokawa, H. Kusamichi, F. Peper, and H. Nishimura, Quaternion neural network with geometrical operators, Journal of Intelligent & Fuzzy Systems 15, 149 (2004).

- Zhu et al. [2018] X. Zhu, Y. Xu, H. Xu, and C. Chen, Quaternion convolutional neural networks, in Proceedings of the European Conference on Computer Vision (ECCV) (2018) pp. 631–647.

- Hongo et al. [2020] S. Hongo, T. Isokawa, N. Matsui, H. Nishimura, and N. Kamiura, Constructing convolutional neural networks based on quaternion, in 2020 International Joint Conference on Neural Networks (IJCNN) (IEEE, 2020) pp. 1–6.

- Parcollet et al. [2018a] T. Parcollet, Y. Zhang, M. Morchid, C. Trabelsi, G. Linarès, R. De Mori, and Y. Bengio, Quaternion convolutional neural networks for end-to-end automatic speech recognition, arXiv preprint arXiv:1806.07789 (2018a).

- Grassucci et al. [2022] E. Grassucci, E. Cicero, and D. Comminiello, Quaternion generative adversarial networks, in Generative Adversarial Learning: Architectures and Applications (Springer, 2022) pp. 57–86.

- Grassucci et al. [2021] E. Grassucci, D. Comminiello, and A. Uncini, A quaternion-valued variational autoencoder, in ICASSP 2021-2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) (IEEE, 2021) pp. 3310–3314.

- Nguyen et al. [2021] T. D. Nguyen, D. Phung, et al., Quaternion graph neural networks, in Asian Conference on Machine Learning (PMLR, 2021) pp. 236–251.

- Özcan et al. [2021] B. Özcan, F. Kinli, and F. Kiraç, Quaternion capsule networks, in 2020 25th International Conference on Pattern Recognition (ICPR) (IEEE, 2021) pp. 6858–6865.

- Parcollet et al. [2018b] T. Parcollet, M. Ravanelli, M. Morchid, G. Linarès, and R. De Mori, Speech recognition with quaternion neural networks, arXiv preprint arXiv:1811.09678 (2018b).

- Girard [1984] P. R. Girard, The quaternion group and modern physics, European Journal of Physics 5, 25 (1984).

- Girard and Baylis [2008] P. R. Girard and W. E. Baylis, Quaternions, clifford algebras and relativistic physics, SIAM review 50, 382 (2008).

- Girard et al. [2018] P. R. Girard, P. Clarysse, R. Pujol, R. Goutte, and P. Delachartre, Hyperquaternions: a new tool for physics, Advances in Applied Clifford Algebras 28, 1 (2018).

- Nagaosa and Tokura [2013] N. Nagaosa and Y. Tokura, Topological properties and dynamics of magnetic skyrmions, Nature Nanotechnology 8, 899 (2013).

- Qi et al. [2006] X.-L. Qi, Y.-S. Wu, and S.-C. Zhang, Topological quantization of the spin hall effect in two-dimensional paramagnetic semiconductors, Phys. Rev. B 74, 085308 (2006).

- Jolliffe and Cadima [2016] I. T. Jolliffe and J. Cadima, Principal component analysis: a review and recent developments, Philosophical Transactions of the Royal Society A: Mathematical, Physical and Engineering Sciences 374, 20150202 (2016).

- Ma and Fu [2012] Y. Ma and Y. Fu, Manifold learning theory and applications, Vol. 434 (CRC press Boca Raton, FL, 2012).

- Chollet [2021] F. Chollet, Deep learning with Python (Simon and Schuster, 2021).

- Efthymiou et al. [2019] S. Efthymiou, M. J. S. Beach, and R. G. Melko, Super-resolving the ising model with convolutional neural networks, Phys. Rev. B 99, 075113 (2019).

- Lin et al. [2017] H. R. Lin, M. Tegmark, and D. Rolnick, Why does deep and cheap learning work so well?, Journal of Statistical Physics 168, 1223 (2017).