Quasi-calibration method for structured light system with auxiliary camera

Abstract

The structured light projection technique is a representative active method for 3-D reconstruction, but many researchers face challenges with the intricate projector calibration process. To address this complexity, we employ an additional camera, referred to as the auxiliary camera, to eliminate the need for projector calibration. The auxiliary camera aids in constructing rational model equations, enabling the generation of world coordinates based on absolute phase information. Once calibration is complete, the auxiliary camera can be removed, mitigating occlusion issues and allowing the system to maintain its compact single-camera, single-projector design. Our approach not only resolves the common problem of calibrating projectors in digital fringe projection systems but also enhances the feasibility of diverse-shaped 3-D imaging systems that use fringe projection.

Keywords: Structured Light System, Calibration, Fringe Projection Profilometry

[inst1] Department of Mechanical Engineering, Yonsei University, Seoul 03722, South Korea

[inst2] Meta Reality Labs, Redmond, Washington 98052, USA

The proposed method introduces a novel approach to structured light system calibration, significantly enhancing efficiency without compromising on accuracy.

The proposed method incorporates an auxiliary camera to facilitate calibration, accommodating both digital and non-digital illumination sources effectively.

The proposed method offers a reduction in the time and resources required for structured light system calibration, making advanced applications more accessible and cost-efficient.

The proposed method has been validated through extensive experimental analyses.

1 Introduction

A fringe projection profilometry (FPP) system typically includes a digital projector as an active illuminator to generate fringe signals and a digital camera to receive the reflected signals[1, 2, 3, 4, 5, 6, 7]. Since the digital projector allows per-pixel control of the projected image and the 3-D point cloud can be retrieved through a deterministic one-to-one mapping, the digital fringe projection (DFP) system is widely used for product inspection in manufacturing and for scanning highly complex objects in medicine and forensic science, where high-quality 3-D point clouds are required[8, 9, 10, 11, 12].

Many commonly used hand-held 3-D scanners and highly accurate 3-D scanners with static setups adopt a two-camera, one-projector system[13, 14, 15, 16, 17]. The main principle of this system is the same as that of standard stereo vision, a representative passive 3-D reconstruction method: the processor finds the correspondence between two images and calculates 3-D points by triangulation. The only difference is that the fringe images from the projector provide the correspondence information, making it possible to calculate the 3-D point cloud pixel by pixel. However, when the system captures objects with large depth gaps, reconstruction becomes difficult and occlusion occurs, since all three optical devices (two cameras and one projector) must see the point to be reconstructed.

To overcome this limitation, Zhang developed a projector calibration method that treats the projector as an inverse camera, which simplified the configuration of the DFP system[18]. Compared to camera calibration, which has been deeply researched in the computer vision field, projector calibration requires elaborate procedures since the projector can only see through the camera’s field of view. Once the projector is calibrated, the pinhole models for the projector and camera can be uniquely solved, which means that the system can generate the 3-D geometry pixel by pixel with only two optical devices. Even when the 3-D geometry is successfully retrieved, distortion appears at the corners of the 3-D geometry when the projector imaging plane is reprojected onto the camera’s imaging plane. To resolve the issue, many researchers capture a flat plane as the reference plane and build a look-up table to compensate for the error caused by that distortion[19, 20, 21]. However, the accuracy of the DFP system then depends on the fabrication accuracy of the flat plane, and it is difficult to guarantee which part of the measured 3-D geometry of the flat plane is correct.

Recently, Zhang developed a method to minimize the error by defining the relationship between the world coordinates and the phase value extracted from the fringe images[22]. Using the fact that the 3-D geometry of the object can be calculated from the phase information of the captured fringe images, the method models the relationship between the phase value and the world coordinates with a third-order polynomial equation. The measurement error of the system converges, and the distortion effect at the corners becomes much smaller than before. Nevertheless, the tedious projector calibration procedure must be carried out before applying this method, meaning that a special projector that does not follow the pinhole model cannot be used, such as the mechanical projector developed by Hyun for high-speed and sub-pixel accuracy[23].

To address the aforementioned limitations, we propose a novel calibration method for fringe projection systems. Initially, one auxiliary camera is temporarily added to the system. With the auxiliary camera, the system can reconstruct 3-D geometry using the conventional stereo vision method with the projector[24]. Based on the calculated 3-D geometry, we define the relationship between the fringe information and the world coordinates with respect to the main camera by using the approximate rational model equation[25]. After that, there is no need to keep the auxiliary camera, and the system can reconstruct 3-D geometry pixel by pixel without projector calibration. This approach effectively alleviates the problems of camera distortion and skew. Furthermore, our calibration method is not limited to DFP systems; it can calibrate any type of FPP system that generates an absolute phase map. Experimental outcomes confirm that our method delivers a 3-D reconstruction resolution almost identical to traditional techniques while simplifying the calibration process.

The remainder of this paper is organized as follows. Section 2 introduces the theoretical background of our research. Section 3 describes the detailed procedure of our experiment and includes a rigorous evaluation to verify our results. Section 4 summarizes this work.

2 Principle

2.1 N-step phase shifting method

The FPP adopts the concept of phase to find corresponding pixels between the camera and the projector. Using phase instead of intensity has several advantages in reconstructing 3-D geometry: the reconstructed 3-D result is more noise tolerant because the system designer controls the light source, and it is less affected by surface reflectivity and texture color. To find the phase value, a set of fringe patterns is projected onto the target and captured by the camera. The projected set should include at least three phase-shifted fringe patterns. This methodology, which uses multiple frames to recover phase values, is called the N-step phase shifting method. It finds the phase value by solving the following equation,

$$ I_k(x, y) = I'(x, y) + I''(x, y)\cos\left[\phi(x, y) + \frac{2\pi k}{N}\right] \tag{1} $$

where $I'(x, y)$ is the average intensity, $I''(x, y)$ is the intensity modulation, and $I_k(x, y)$ is the intensity of the corresponding pixel at the $k$th frame. For example, if there are $N$ frames of phase-shifted images, the phase $\phi(x, y)$ can be found by solving Eq. (1) for $\phi$ with the least squares method. Then the solution is represented as follows,

$$ \phi(x, y) = -\tan^{-1}\left[\frac{\sum_{k=1}^{N} I_k \sin(2\pi k/N)}{\sum_{k=1}^{N} I_k \cos(2\pi k/N)}\right] \tag{2} $$

However, due to the characteristic of the inverse tangent function, the retrieved phase ranges from $-\pi$ to $\pi$ with $2\pi$ discontinuities. The discontinuities result in multiple points having the same phase value, meaning that a one-to-one correspondence between camera and projector pixels cannot be established. A procedure called phase unwrapping is required to resolve the issue, making the retrieved phase continuous and absolute. The phase values are unwrapped with the following equation,

$$ \Phi(x, y) = \phi(x, y) + 2\pi \times K(x, y) \tag{3} $$

where $\Phi(x, y)$ represents the unwrapped phase, the phase without discontinuities, and $K(x, y)$ represents the proper integer added to the phase value to connect the discontinuities. Here, we have denoted the unwrapped phase as $\Phi$ to distinguish it from the phase $\phi$, which has discontinuities in each period. Temporal phase unwrapping, used in the following experiments, connects the discontinuities by projecting additional binary patterns containing the order of the fringe period.
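As an illustration, the wrapped-phase computation and the fringe-order unwrapping described above can be sketched in Python with NumPy. This is a minimal sketch, not the authors' implementation: it assumes the common convention $I_k = I' + I''\cos(\phi + 2\pi k/N)$ with $k = 0, \dots, N-1$, and the function names `wrapped_phase` and `unwrap_with_order` are hypothetical. In a real system the fringe order would come from decoding the gray code patterns; here it is derived from the synthetic ground truth purely to exercise the code.

```python
import numpy as np


def wrapped_phase(frames):
    """Least-squares wrapped phase from N >= 3 equally shifted fringe images.

    Assumes frame k follows I_k = I' + I'' * cos(phi + 2*pi*k/N), k = 0..N-1.
    frames has shape (N, H, W); the result lies in [-pi, pi].
    """
    frames = np.asarray(frames, dtype=float)
    n = frames.shape[0]
    delta = 2.0 * np.pi * np.arange(n) / n
    # Weighted sums over the frame axis (axis 0).
    num = np.tensordot(np.sin(delta), frames, axes=(0, 0))
    den = np.tensordot(np.cos(delta), frames, axes=(0, 0))
    return -np.arctan2(num, den)


def unwrap_with_order(phi, order):
    """Absolute phase: wrapped phase plus 2*pi times an integer fringe order."""
    return phi + 2.0 * np.pi * np.asarray(order)


# Synthetic single-row example: 18 steps and a fringe pitch of 18 pixels.
steps, pitch, width = 18, 18, 90
columns = np.arange(width)
true_abs = 2.0 * np.pi * columns / pitch + 0.1  # ground-truth absolute phase
delta = 2.0 * np.pi * np.arange(steps) / steps
frames = 120.0 + 100.0 * np.cos(true_abs[None, None, :] + delta[:, None, None])

phi = wrapped_phase(frames)  # shape (1, width)
# Stand-in for gray-code decoding (assumption): order taken from ground truth.
order = np.round((true_abs - phi) / (2.0 * np.pi))
abs_phase = unwrap_with_order(phi, order)
```

Using `arctan2` instead of a plain arctangent keeps the quadrant information, so the wrapped phase covers the full $2\pi$ interval before unwrapping.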

2.2 Fringe projection profilometry system

Every optical device is represented as a pinhole model. The pinhole model is a mathematical expression of the relationship between the 3-D world coordinates and the image pixel . The linear pinhole model is represented mathematically as follows,

| (4) |

where is a scaling factor and A,R and t are

| (5) |

respectively. A represents the intrinsic parameters of the imaging device, which includes the effective focal length and , principal point , and and skew factor . For the research level camera, . If we define projection matrix P as

| (6) |

linear pinhole model can be turned into,

| (7) |

Then, the linear pinhole model of the camera and projector can be expressed as follows,

| (8) |

| (9) |

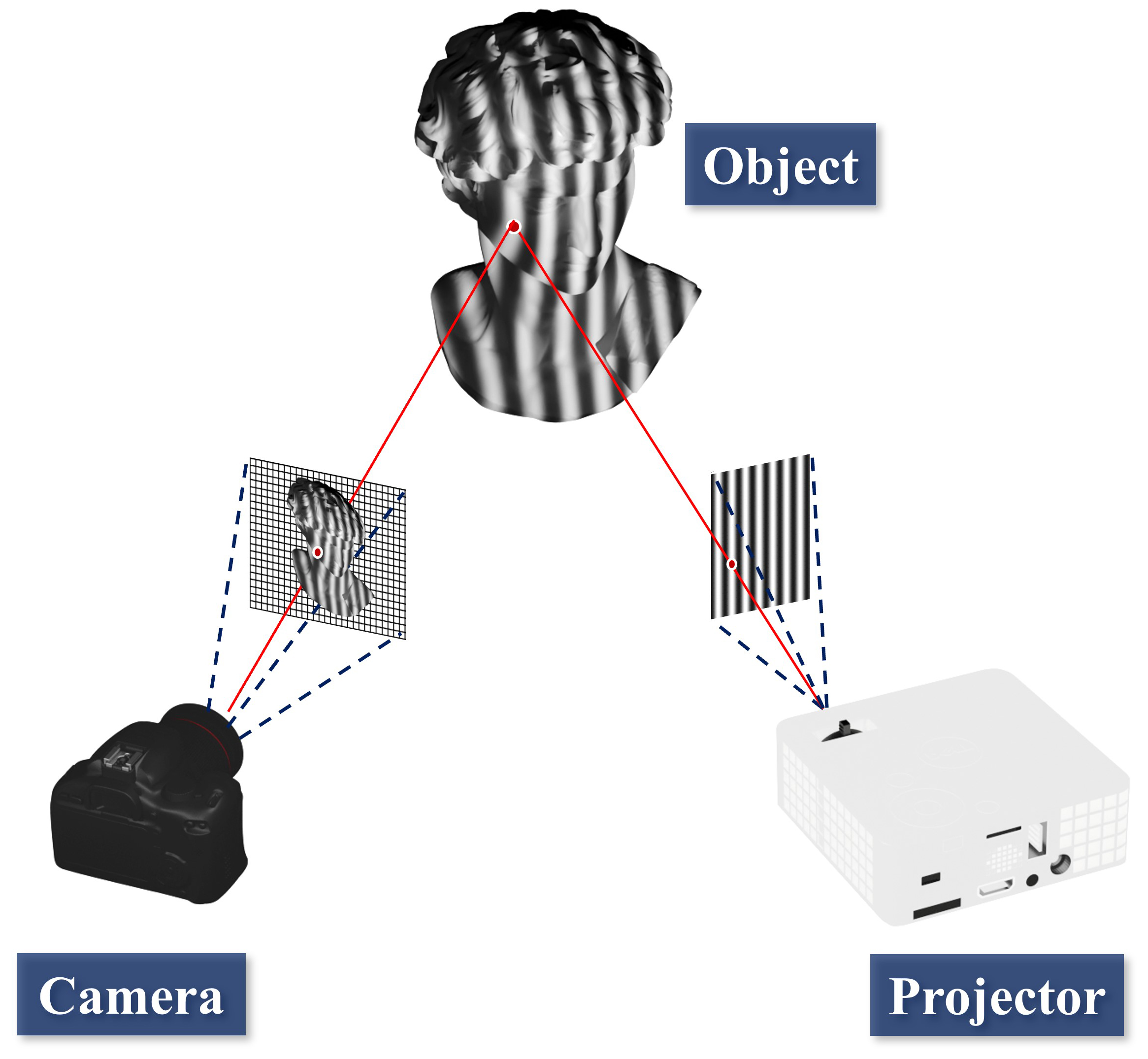

where the superscripts c and p represent the camera and projector respectively, and the superscript T represents the transpose of a matrix. Fig. 1 shows the common configuration of the FPP system. If the FPP system is calibrated, the projection matrix and is determined. Then, the FPP system finds the world coordinates by solving Eq. (8) and Eq. (9). If the camera pixel and is taken into account when solving the equation, the corresponding world coordinates are described as follows,

| (10) |

where and represent elements of the projection matrix of the camera and projector. If the fringes are projected, phase values will be generated with the N-step phase-shifting method. When the vertical fringes are projected, the phase values on the camera pixel can define the corresponding projector pixel as follows,
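The linear solve behind Eq. (10) can be illustrated with a short NumPy sketch. It assumes 3×4 projection matrices for the camera and projector and a vertical-fringe mapping from absolute phase to projector column, `u_p = abs_phase * pitch / (2 * pi)`. The function names and the synthetic matrices below are hypothetical and are not taken from the experiments.

```python
import numpy as np


def phase_to_projector_column(abs_phase, pitch):
    """Map an absolute phase to a projector column for vertical fringes."""
    return abs_phase * pitch / (2.0 * np.pi)


def triangulate(P_c, P_p, u_c, v_c, u_p):
    """World point from a camera pixel (u_c, v_c) and a projector column u_p.

    Each row comes from eliminating the scale factor in s*[u, v, 1]^T = P*X.
    """
    A = np.array([
        P_c[2, :3] * u_c - P_c[0, :3],
        P_c[2, :3] * v_c - P_c[1, :3],
        P_p[2, :3] * u_p - P_p[0, :3],
    ])
    b = np.array([
        P_c[0, 3] - P_c[2, 3] * u_c,
        P_c[1, 3] - P_c[2, 3] * v_c,
        P_p[0, 3] - P_p[2, 3] * u_p,
    ])
    return np.linalg.solve(A, b)


# Synthetic devices (assumed values): shared intrinsics, projector rotated and shifted.
K = np.array([[1000.0, 0.0, 320.0], [0.0, 1000.0, 240.0], [0.0, 0.0, 1.0]])
P_c = K @ np.hstack([np.eye(3), np.zeros((3, 1))])
angle = 0.2
R = np.array([[np.cos(angle), 0.0, np.sin(angle)],
              [0.0, 1.0, 0.0],
              [-np.sin(angle), 0.0, np.cos(angle)]])
t = np.array([[-100.0], [0.0], [20.0]])
P_p = K @ np.hstack([R, t])

# Project a known point, convert the projector column to phase and back.
X_true = np.array([10.0, -20.0, 500.0])
proj_c = P_c @ np.append(X_true, 1.0)
proj_p = P_p @ np.append(X_true, 1.0)
u_c, v_c = proj_c[:2] / proj_c[2]
pitch = 18.0
abs_phase = 2.0 * np.pi * (proj_p[0] / proj_p[2]) / pitch
u_p = phase_to_projector_column(abs_phase, pitch)

X_rec = triangulate(P_c, P_p, u_c, v_c, u_p)
```

Only one projector coordinate is needed because the two camera rows already supply two constraints, which is why single-direction fringes suffice for reconstruction.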

2.3 Pixel-wise calibration method

Previous studies in fringe projection profilometry have shown that the phase value and the world coordinates have a certain relationship. According to Zhang[22], the relationship can be approximated by the third-order polynomials for each pixel. The polynomial model defines the relationship between the phase value and world coordinates in each camera pixel as follows

$$ x = a_0 + a_1\Phi + a_2\Phi^2 + a_3\Phi^3 \tag{13} $$

$$ y = b_0 + b_1\Phi + b_2\Phi^2 + b_3\Phi^3 \tag{14} $$

$$ z = c_0 + c_1\Phi + c_2\Phi^2 + c_3\Phi^3 \tag{15} $$

where $a_i$, $b_i$, and $c_i$ are the coefficients of the polynomial model, which take unique values at each pixel. A fringe projection profilometry system calibrated with a pixel-wise polynomial model has been shown to reconstruct the 3-D geometry with higher accuracy, especially at the edges of the image. However, the polynomial model performs well only over a short depth range. To overcome that limitation, Vargas et al. calibrated the fringe projection profilometry system using another pixel-wise calibration model called the rational model[25]. The relationship between $\Phi$ and $(x, y, z)$ in the rational model is as follows,

$$ x = \frac{a_0 + a_1\Phi}{1 + a_2\Phi} \tag{16} $$

$$ y = \frac{b_0 + b_1\Phi}{1 + b_2\Phi} \tag{17} $$

$$ z = \frac{c_0 + c_1\Phi}{1 + c_2\Phi} \tag{18} $$

where $a_i$, $b_i$, and $c_i$ are the calibration coefficients for each pixel. Whereas the polynomial model was assumed empirically from experimental data without an underlying physical model, the rational model is derived from the pinhole model equation. Compared with the polynomial model, the rational model achieves a larger depth range of the reconstruction area while maintaining the high accuracy of the pixel-wise calibration method.
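To make the per-pixel fitting concrete, the sketch below fits a rational relation between absolute phase and one world coordinate for a single pixel. It is a hedged illustration rather than the procedure of [25]: it assumes the form $w = (c_0 + c_1\Phi)/(1 + c_2\Phi)$, linearizes it as $w = c_0 + c_1\Phi - c_2\Phi w$, and solves by ordinary least squares. The names `fit_rational` and `eval_rational` and all numeric values are hypothetical.

```python
import numpy as np


def fit_rational(phase, coord):
    """Fit coord = (c0 + c1*phase) / (1 + c2*phase) for one pixel.

    Multiplying through by the denominator gives a problem that is linear
    in (c0, c1, c2): coord = c0 + c1*phase - c2*phase*coord.
    """
    phase = np.asarray(phase, dtype=float)
    coord = np.asarray(coord, dtype=float)
    design = np.column_stack([np.ones_like(phase), phase, -phase * coord])
    coeffs, *_ = np.linalg.lstsq(design, coord, rcond=None)
    return coeffs


def eval_rational(coeffs, phase):
    """Evaluate the fitted rational model at new phase values."""
    c0, c1, c2 = coeffs
    return (c0 + c1 * phase) / (1.0 + c2 * phase)


# Synthetic samples for one pixel: 20 board poses (assumed values, noise-free).
true_coeffs = np.array([400.0, 3.0, 0.004])
phase_samples = np.linspace(40.0, 160.0, 20)
z_samples = eval_rational(true_coeffs, phase_samples)

coeffs = fit_rational(phase_samples, z_samples)

# Predict depth at phases that were not used for fitting.
phase_test = np.linspace(45.0, 155.0, 7)
z_pred = eval_rational(coeffs, phase_test)
z_expected = eval_rational(true_coeffs, phase_test)
```

In a full calibration this fit would be repeated for every main-camera pixel and for each of the three coordinates, with one sample per calibration board pose. With noisy data the linearized fit weights samples unevenly, so a nonlinear refinement may be worth considering.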

2.4 Proposed method

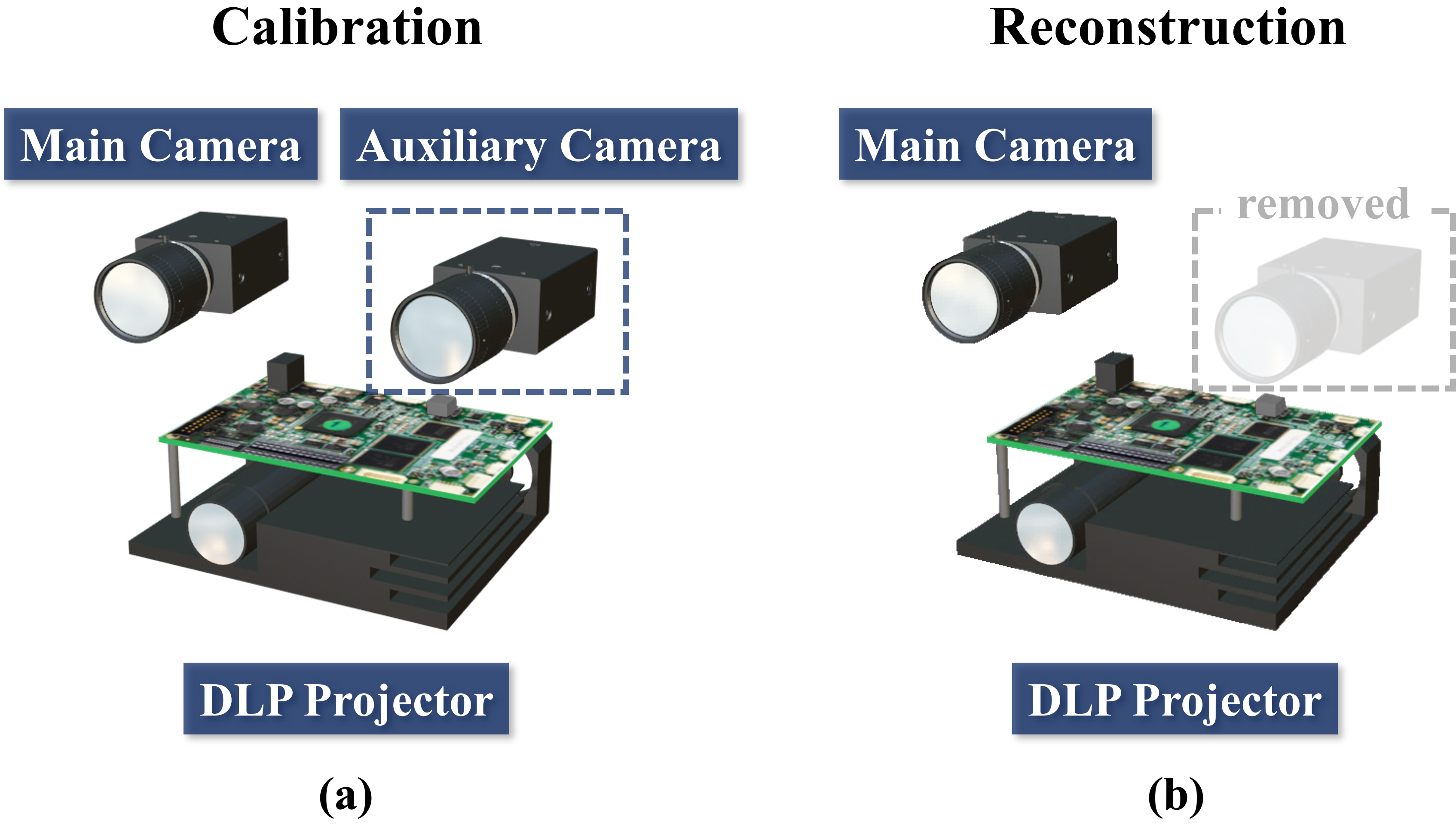

Calibration of a projector in an FPP system is more complicated than calibration of a camera because the projector is an output device. Despite previous efforts, such as Zhang’s projector calibration method utilizing camera-captured images[18], calibrating the projector remains a sophisticated process[26, 27, 28, 29, 30]. In this context, we have developed a calibration method for FPP systems that does not require projector calibration at any stage. Moreover, preceding FPP systems were constrained to expensive Digital Light Processing (DLP) projectors as illumination devices, because calibration requires two-directional fringe projection even though reconstruction requires only a one-directional fringe pattern. The proposed method overcomes this limitation and lets the system adapt to the available equipment: the projector-camera system can be calibrated as long as the projector can repeatably generate a one-directional phase map.

This method uses one projector and two cameras. Since the second camera can be removed after the calibration process, we call it the auxiliary camera and the first one the main camera. Fig. 2 compares the system configuration during calibration and reconstruction. The proposed method calibrates the system by finding the relationship between the phase value and the world coordinates at each pixel of the main camera. To obtain world coordinates at each pixel, the auxiliary camera is temporarily used during calibration. Once the system is calibrated, 3-D geometry can be measured without it: the main camera and projector acquire depth information using the previously derived phase-coordinate relations. We named this novel method the quasi-calibration method. The proposed calibration process consists of the following steps.

- Step 1: Calibrate the main and auxiliary cameras with the standard stereo vision calibration method to obtain the intrinsic and extrinsic parameters.

- Step 2: Find the 3-D geometry of the calibration board with the stereo cameras based on a conventional stereo-matching algorithm, using the phase information calculated by the N-step phase shifting algorithm.

- Step 3: Determine the ideal planar model that fits the 3-D geometry of the calibration board by using the plane fitting equation.

- Step 4: Find the relationship between the 3-D geometry $(x, y, z)$, expressed in the main camera's coordinate frame, and $\Phi$ for each pixel with the pixel-wise calibration model.

- Step 5: Using only the main camera and digital projector, capture the fringe images of the object(s) to be measured and reconstruct the 3-D geometry based on the previously established relationship.

While finding the 3-D geometry of the calibration board (Step 2), single-directional fringe patterns are projected, and an absolute phase map is generated to guide the search for corresponding pixels between the two cameras. However, the generated absolute phase map contains phase errors along the boundaries of the calibration circles, resulting from the high contrast between the white circles and the black background. To remove these artifacts, the phase map was fitted to a smooth polynomial surface[22]. Additionally, before fitting the observation data to the pixel-wise calibration model, the closest flat plane was generated via the equation of the plane as follows,

$$ Ax + By + Cz + D = 0 \tag{19} $$

where the variables A, B, C, and D are determined to minimize the offset error between the observation and the ideal plane (Step 3). The coefficients of the pixel-wise calibration model are retrieved from the point cloud values of the ideal plane and the absolute phase values (Step 4). Using an ideal plane instead of the initial observation removes the inherent distortions of the optical devices. Once the coefficients of the pixel-wise calibration model are found, the system is calibrated, and the auxiliary camera can be removed if necessary. The 3-D shape of the target can then be measured with only the projector and the main camera. The following experiment presents 3-D results of the FPP system calibrated with the proposed method. The results show that this method calibrates the FPP system without projector calibration and successfully reconstructs a complex object. Furthermore, the experiments conducted with a plastic slit illuminator show that the proposed method allows users to use a wider range of fringe illumination devices.
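The plane fitting of Step 3 can be sketched as follows. This is one possible realization under stated assumptions, not necessarily the authors' procedure: it minimizes orthogonal distances with a singular value decomposition, whereas the text only states that the offset error is minimized. The function names and the synthetic board data are hypothetical.

```python
import numpy as np


def fit_plane(points):
    """Fit A*x + B*y + C*z + D = 0 to an (M, 3) point cloud.

    Returns the unit normal (A, B, C) and the offset D of the plane that
    minimizes the sum of squared orthogonal distances.
    """
    points = np.asarray(points, dtype=float)
    centroid = points.mean(axis=0)
    # The normal is the right singular vector with the smallest singular value.
    _, _, vt = np.linalg.svd(points - centroid, full_matrices=False)
    normal = vt[-1]
    d = -normal @ centroid
    return normal, d


def project_onto_plane(points, normal, d):
    """Return the ideal-plane points and the signed offsets of the input."""
    offsets = points @ normal + d
    return points - np.outer(offsets, normal), offsets


# Synthetic tilted board with small depth noise (assumed values, in mm).
rng = np.random.default_rng(0)
gx, gy = np.meshgrid(np.linspace(-50.0, 50.0, 20), np.linspace(-50.0, 50.0, 20))
gz = 0.1 * gx - 0.2 * gy + 500.0 + rng.normal(0.0, 0.02, gx.shape)
cloud = np.column_stack([gx.ravel(), gy.ravel(), gz.ravel()])

normal, d = fit_plane(cloud)
ideal, offsets = project_onto_plane(cloud, normal, d)
rms_offset = np.sqrt(np.mean(offsets**2))
```

The projected points `ideal` play the role of the ideal plane whose coordinates are paired with the absolute phase values in Step 4.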

3 Experiment

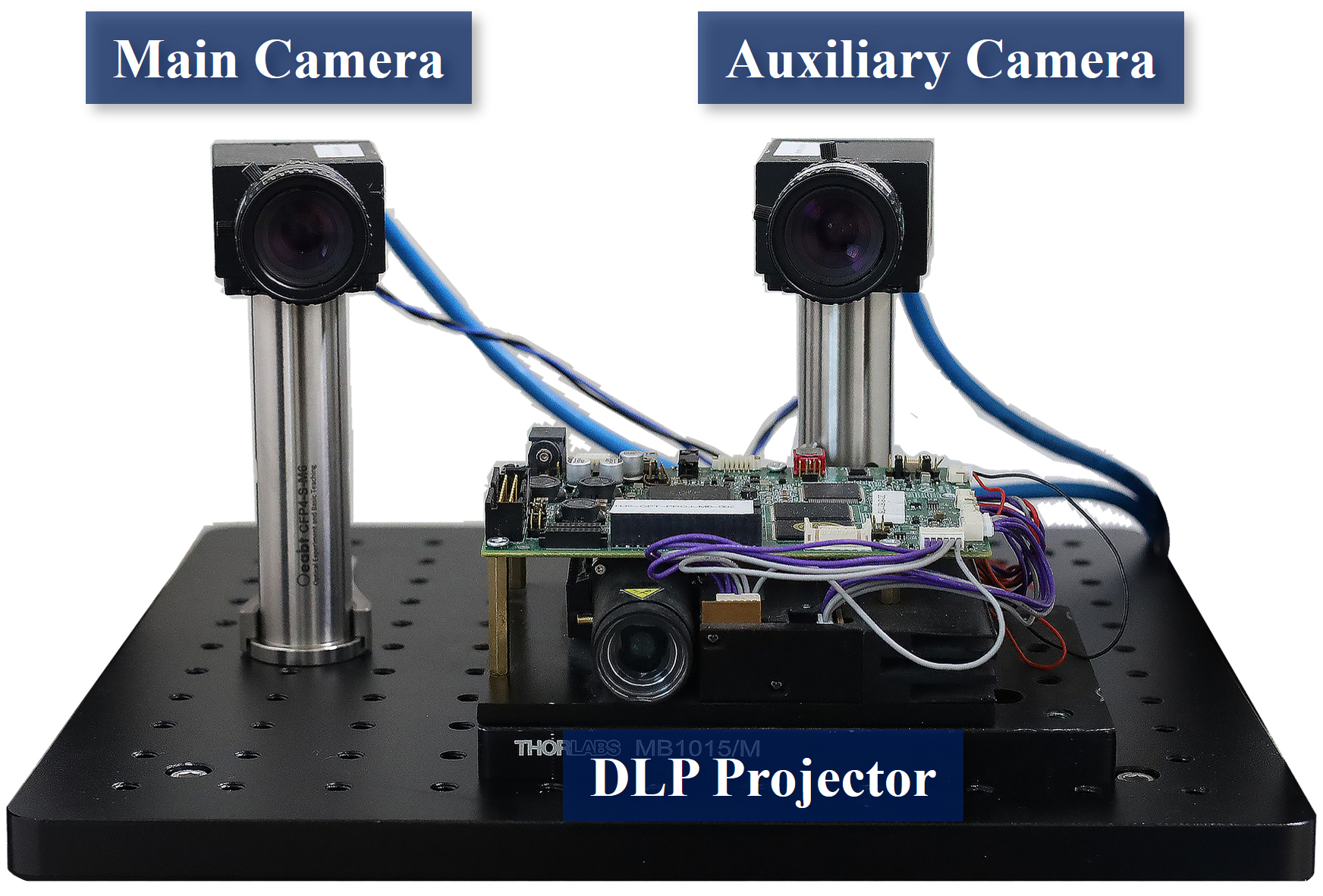

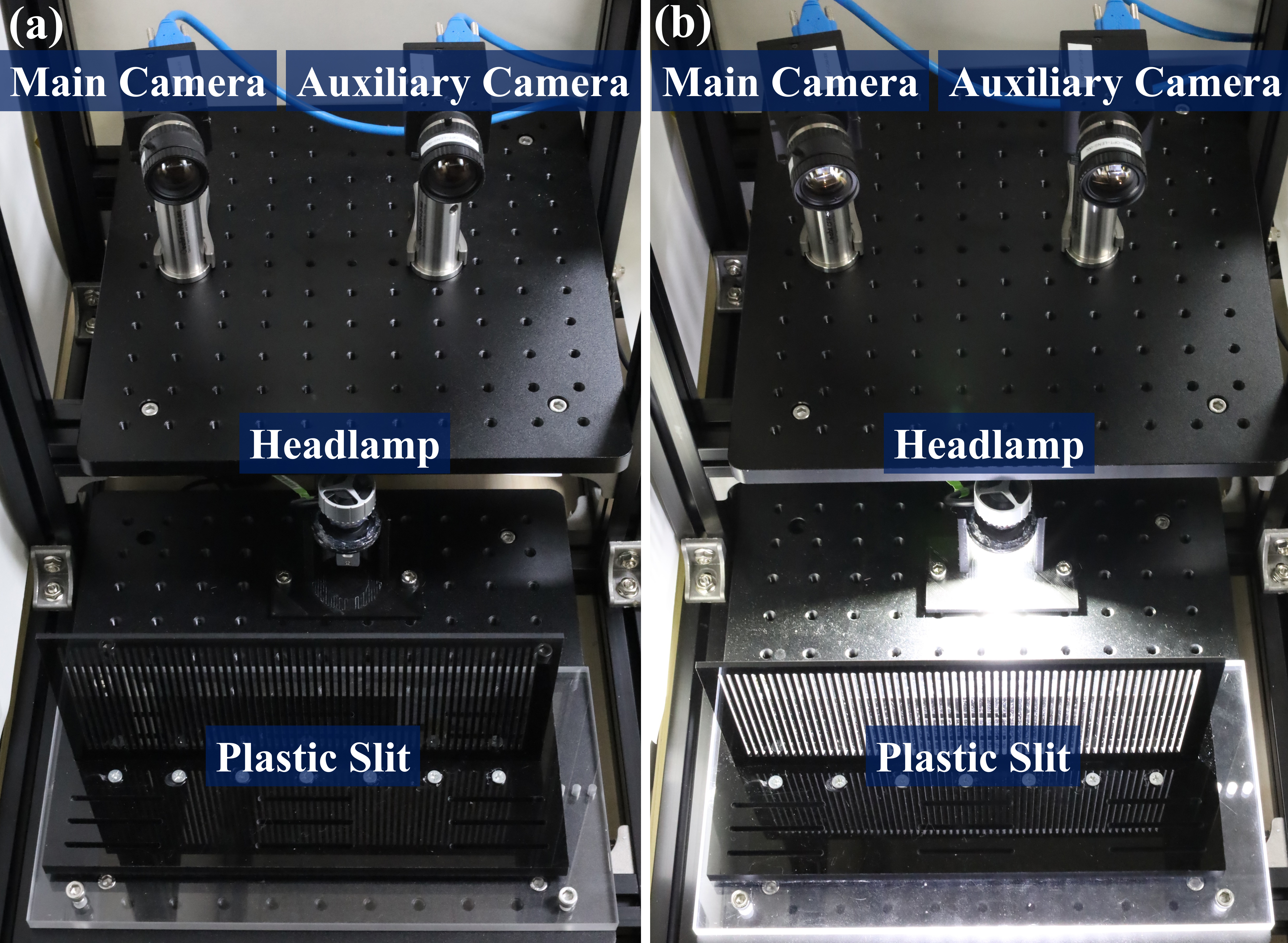

To validate the proposed method, we set up the system as follows: two CCD cameras (Model: FLIR GS3-U3-50S5M-C) with 12 mm focal length lenses (Model: Computar M1214-MP2) and one digital light processing (DLP) projector (Model: Texas Instruments DLP LightCrafter 4500), as shown in Fig. 3. The resolution of the camera was set to , and the projector’s resolution was .

The first step of the proposed method is to calibrate the main camera and the auxiliary camera with the standard stereo vision method. The two cameras captured 60 poses of the calibration board for this stereo calibration. Next, the 3-D geometry of the calibration board was reconstructed by employing the stereo cameras and the projector.

The projector projected vertical fringe patterns on the calibration board. Both cameras captured the projected fringe patterns, and a phase map was generated for each camera with the phase-shifting algorithm. The disparity between the two cameras was found based on the phase value. The projected patterns consisted of 18 phase-shifted images with a fringe pitch of 18 pixels to generate phase values and 7 gray code images to unwrap them. Next, 20 poses of the calibration board were captured. The generated phase map was fitted with a polynomial surface equation to remove artifacts resulting from large intensity deviations between adjacent pixels around the edges of the calibration circles. Here, we used a fifth-order polynomial surface equation. Assuming that the calibration board is completely flat, the 3-D geometry of the board was fitted to the equation of the plane. The relationship between the $\Phi$ values and the $(x, y, z)$ values of each pixel was then found with the rational model using the fitted data. Once the relationship had been found, the geometry was estimated with the main camera and projector alone; in other words, the auxiliary camera was no longer required. During geometry estimation, as in calibration, 25 patterns (18 phase-shifted images and 7 gray code images) were projected onto the object.
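The phase-based disparity search mentioned above can be sketched for one image row. This is a simplified illustration under assumptions that the text does not state: the two images are rectified so that matches lie on the same row, and the absolute phase increases monotonically along the auxiliary row. The function name `match_row_by_phase` and the synthetic phase ramps are hypothetical.

```python
import numpy as np


def match_row_by_phase(phase_main, phase_aux):
    """Sub-pixel auxiliary-camera column for each main-camera pixel in a row.

    Finds, by linear interpolation, the column where the auxiliary phase
    equals the main-camera phase. Assumes phase_aux is strictly increasing.
    Pixels whose phase is not seen by the auxiliary camera get NaN.
    """
    phase_main = np.asarray(phase_main, dtype=float)
    phase_aux = np.asarray(phase_aux, dtype=float)
    aux_columns = np.arange(phase_aux.size, dtype=float)
    matched = np.interp(phase_main, phase_aux, aux_columns)
    outside = (phase_main < phase_aux[0]) | (phase_main > phase_aux[-1])
    matched[outside] = np.nan
    return matched


# Synthetic rectified row: the same phase ramp seen with a 12.5-pixel shift.
width = 100
columns = np.arange(width, dtype=float)
phase_aux = 0.35 * columns + 2.0
phase_main = 0.35 * (columns + 12.5) + 2.0

matched = match_row_by_phase(phase_main, phase_aux)
disparity = matched - columns  # sign convention chosen for this sketch
valid = ~np.isnan(disparity)
```

The resulting sub-pixel disparities would then be triangulated with the stereo calibration from Step 1 to obtain the 3-D geometry of the calibration board.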

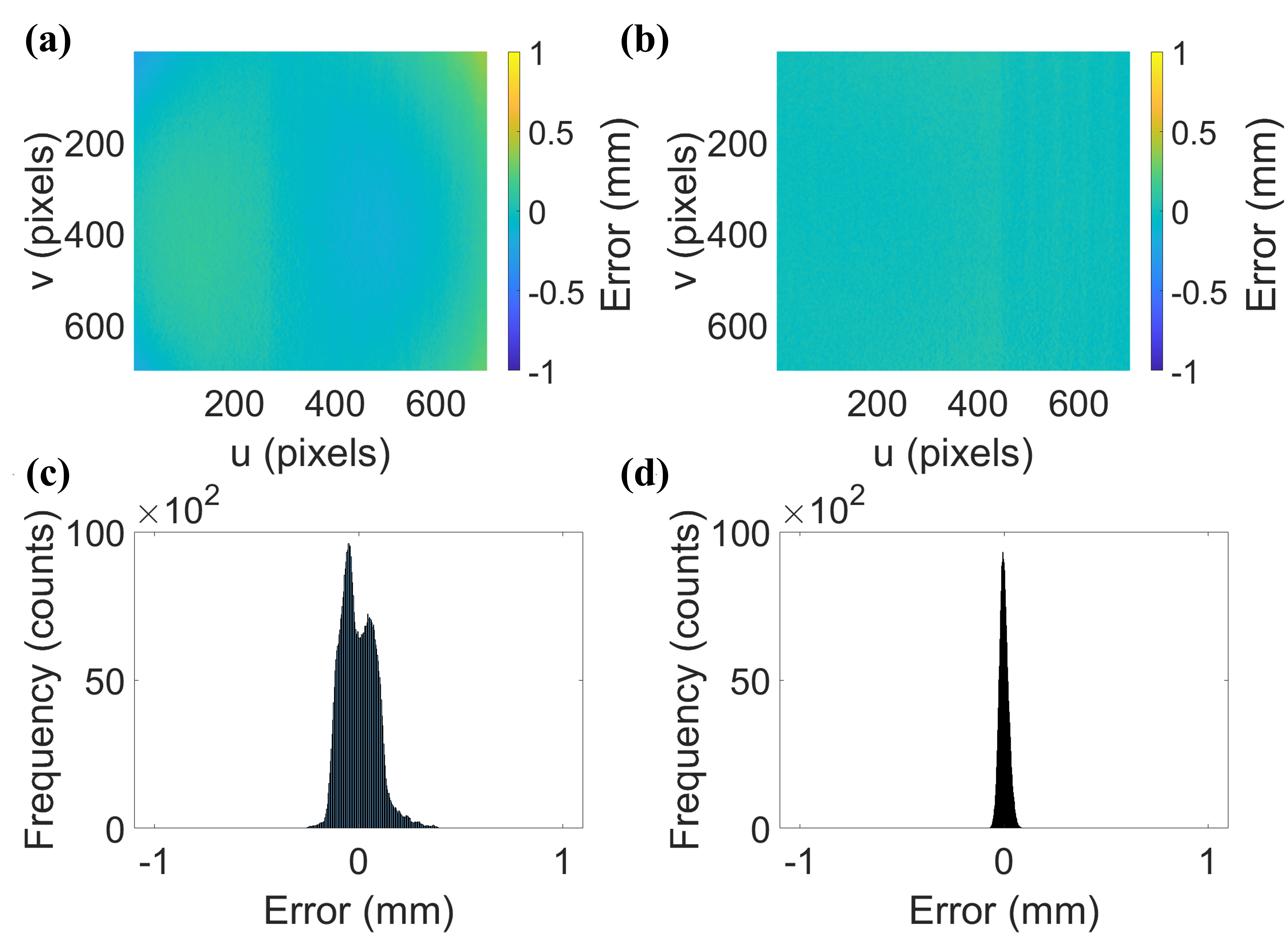

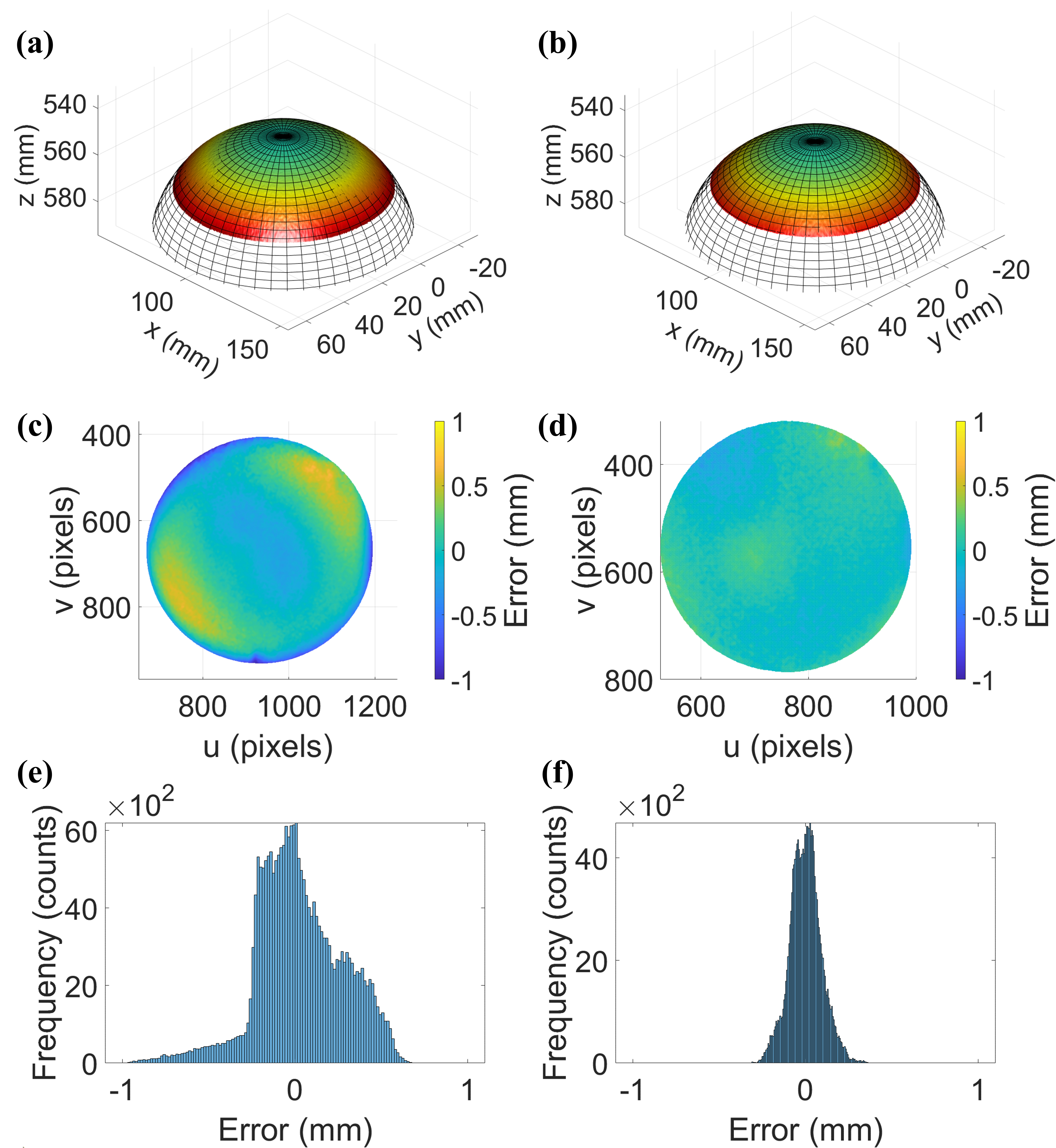

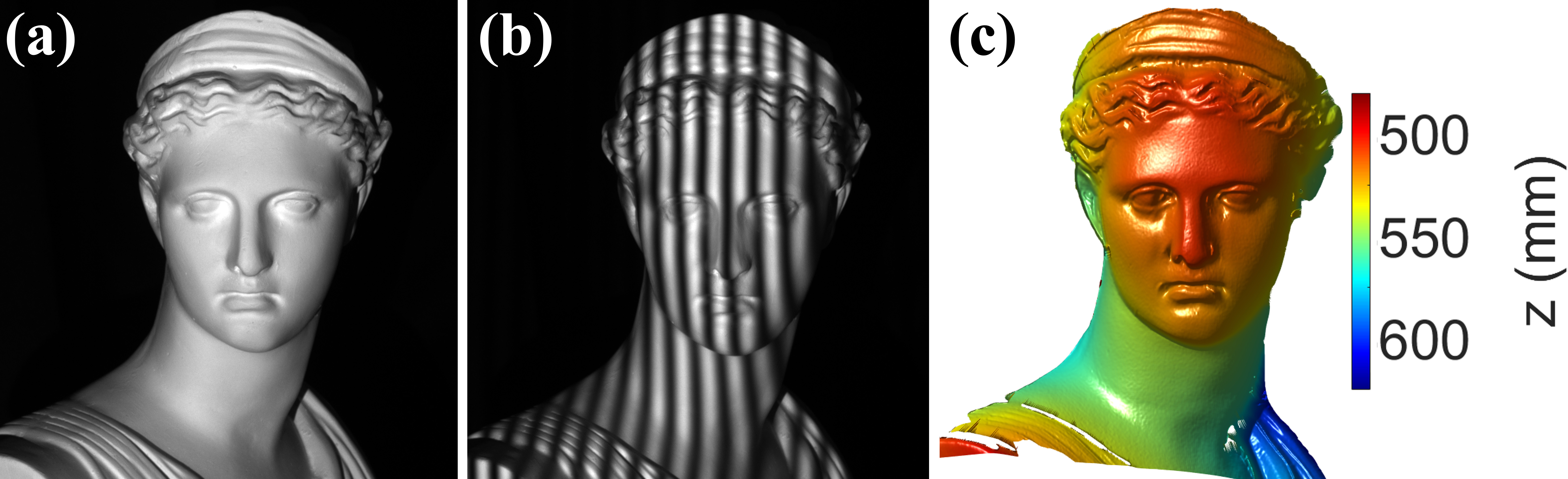

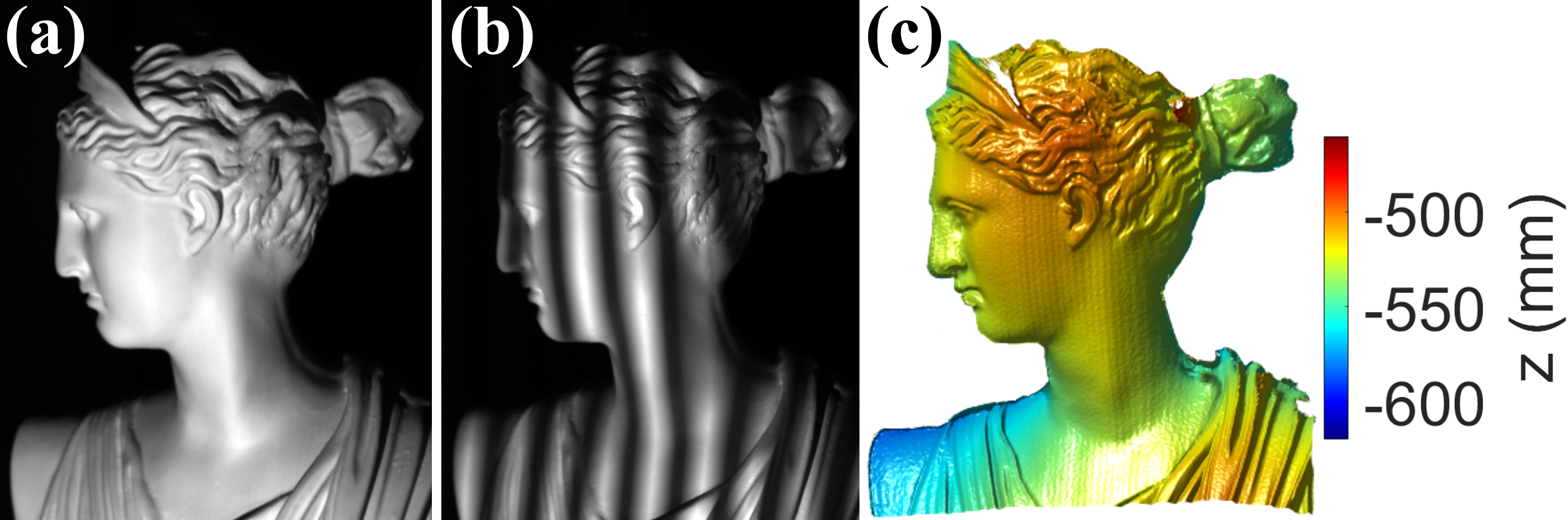

To verify the reconstruction accuracy of the proposed method, three objects (a flat plane, a sphere, and a statue) were reconstructed with the main camera and projector. For comparison, the same objects were reconstructed using the system calibrated with the conventional FPP calibration method. Calibration images were acquired with the same calibration board at exactly the same positions for both calibration methods. Both methods were calibrated using the 20 poses of the calibration board over a range of . However, the conventional calibration method required both horizontal and vertical patterns, whereas the proposed method required fringe patterns in only one direction. Therefore, additional horizontal patterns were projected after the vertical ones onto each pose to calibrate the system by the conventional method. A total of 52 frames of the calibration board were captured for each pose: 25 vertical and 25 horizontal fringe patterns, plus additional black and white patterns. Note that the following experimental data were filtered with a 5 × 5 Gaussian filter in the phase map domain to remove significant noise from the measurement.

Fig. 4 shows the flat plane reconstruction by each calibration method. Fig. 4(a) and Fig. 4(b) show the corresponding error maps, which present the offset from the measurement to the ideal plane. Fig. 4(c) and Fig. 4(d) show the histograms of the corresponding offset error maps. The root-mean-square (RMS) error was estimated to be 0.0860 mm for the conventional method and 0.0207 mm for the proposed method. The RMS error of the proposed method is at the same level of accuracy as the latest calibration methods [31, 32].

Fig. 5 shows the sphere reconstruction by each calibration method. Fig. 5(a) and Fig. 5(b) show the ideal sphere and the estimated result, and Fig. 5(c) and Fig. 5(d) show the corresponding error maps, which present the offset from the measurement to the ideal sphere. Fig. 5(e) and Fig. 5(f) show the histograms of the corresponding offset error maps. The estimated radius of the sphere was 50.05 mm with the proposed method, an error of 0.1% from the ground truth radius of 50 mm. The estimated RMS error of the sphere was 0.1877 mm for the conventional method and 0.0901 mm for the proposed method. Fig. 6 shows the result of reconstructing complex geometry, in this case a statue of Artemis, using the proposed calibration method.
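For reference, the sphere metrics reported above (fitted radius, radius error in percent, and RMS offset from the ideal sphere) can be computed as in the following sketch. It uses an algebraic least-squares sphere fit, which is an assumption, since the text does not say how the ideal sphere was obtained. The function names and the noise-free synthetic cap are hypothetical. As a check on the arithmetic, a fitted radius of 50.05 mm against a 50 mm ground truth gives (50.05 − 50) / 50 = 0.1%.

```python
import numpy as np


def fit_sphere(points):
    """Algebraic least-squares sphere fit to an (M, 3) point cloud.

    Uses |q|^2 = 2*q.c + (r^2 - |c|^2), which is linear in the center c and
    the constant term. Points are centered first for better conditioning.
    """
    points = np.asarray(points, dtype=float)
    centroid = points.mean(axis=0)
    q = points - centroid
    design = np.column_stack([2.0 * q, np.ones(len(q))])
    rhs = np.sum(q**2, axis=1)
    sol, *_ = np.linalg.lstsq(design, rhs, rcond=None)
    center_local = sol[:3]
    radius = np.sqrt(sol[3] + center_local @ center_local)
    return center_local + centroid, radius


def rms_radial_error(points, center, radius):
    """RMS of the radial offsets between the points and the fitted sphere."""
    residual = np.linalg.norm(points - center, axis=1) - radius
    return np.sqrt(np.mean(residual**2))


# Synthetic cap of a 50 mm sphere facing the camera (assumed values, in mm).
theta, azim = np.meshgrid(np.linspace(0.05, 1.0, 15),
                          np.linspace(0.0, 2.0 * np.pi, 24, endpoint=False))
true_center = np.array([5.0, -3.0, 600.0])
true_radius = 50.0
directions = np.stack([np.sin(theta) * np.cos(azim),
                       np.sin(theta) * np.sin(azim),
                       -np.cos(theta)], axis=-1).reshape(-1, 3)
points = true_center + true_radius * directions

center, radius = fit_sphere(points)
rms = rms_radial_error(points, center, radius)
radius_error_percent = 100.0 * abs(radius - true_radius) / true_radius
```

Only the visible cap of the sphere is sampled here, mirroring the fact that a single camera and projector see one side of the object.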

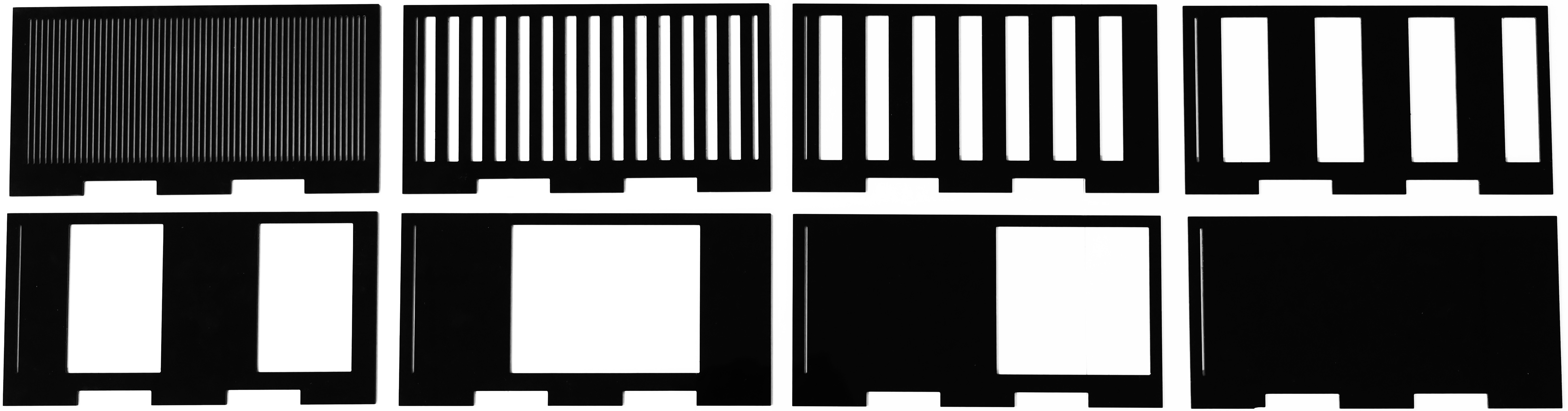

3.1 FPP system with plastic slit illuminator

To validate whether the proposed calibration method can also calibrate a system with a rough-type illuminator, the digital light processing (DLP) projector in the previous experimental setup was replaced by an LED with a plastic slit. Fig. 7 shows the experimental setup of the system, and Fig. 8 shows the plastic slits that were used in the experiment. When calibrating the system using the proposed method, the patterns were projected by placing the plastic slit in front of the headlamp. To project phase-shifted patterns, the slits were shifted one step at a time and captured with the cameras. The gray code patterns used to unwrap the phase map were also projected with plastic slits, which had been cut according to the shape of each gray code pattern. Fig. 9 describes how the slits were shifted for pattern generation. To remove the phase discontinuities caused by machining errors of the slits, the phase values of misaligned pixels were replaced with the median-filtered phase values of the nearby pixels. In this experiment, 10 poses of the calibration board were captured with the plastic slit illumination system to calibrate the system. Fig. 10 shows the pattern-projected images of the calibration board and the corresponding phase map.

After the plastic slit illumination system is calibrated with the proposed method, the system can reconstruct geometry with a simple configuration, as shown in Fig. 11. To evaluate the performance of the plastic slit illumination system calibrated with the proposed method, a mirror, a sphere, and a complex statue were captured with the system. Fig. 12(a) and Fig. 12(b) show the reconstruction results of a flat plane and a sphere, respectively. Fig. 12(c) and Fig. 12(d) show the corresponding error maps, which present the offset from the measurement to the ideal geometry. The RMS error of the flat plane reconstruction was 0.1647 mm. The measured radius of the sphere was 50.06 mm, an error of 0.12% from the ground truth radius of 50 mm. The RMS error of the sphere reconstruction was 0.2521 mm. Fig. 12(e) and Fig. 12(f) show the corresponding histogram of each error map. Fig. 13 presents the result of reconstructing the complex statue with the plastic slit illumination system.

4 Conclusion

In this paper, we introduced a novel calibration method for the FPP system that skips projector calibration by using an auxiliary camera. The auxiliary camera is later removed so that the system keeps a simple one-camera, one-projector configuration for reconstructing the geometry. The conventional FPP calibration method requires both horizontal and vertical fringes to be projected. The new method requires only one-directional fringe patterns, which halves the time for acquiring calibration data at each pose. In experiments, we calibrated the FPP system with only vertical patterns while ensuring the same level of accuracy in reconstructing the geometry. Moreover, unlike the conventional FPP calibration method, which is limited to DLP projectors, the proposed method can calibrate any fringe projection system even if it does not follow a pinhole model. In the second experiment, we showed that the new method could calibrate an FPP system employing a rough-type illuminator composed of an LED and plastic slits.

Acknowledgments

The authors are thankful for the support received from Meta Reality Labs and Yonsei University (2023-22-0434).

References

- [1] W. Schreiber, G. Notni, Theory and arrangements of self-calibrating whole-body 3-d-measurement systems using fringe projection technique, Optical Engineering 39 (1) (2000) 159–169.

- [2] D. Scharstein, R. Szeliski, High-accuracy stereo depth maps using structured light, in: 2003 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, 2003. Proceedings., Vol. 1, IEEE, 2003, pp. I–I.

- [3] H. Du, Z. Wang, Three-dimensional shape measurement with an arbitrarily arranged fringe projection profilometry system, Optics Letters 32 (16) (2007) 2438–2440.

- [4] R. Ishiyama, S. Sakamoto, J. Tajima, T. Okatani, K. Deguchi, Absolute phase measurements using geometric constraints between multiple cameras and projectors, Applied Optics 46 (17) (2007) 3528–3538.

- [5] S. S. Gorthi, P. Rastogi, Fringe projection techniques: whither we are?, Optics and Lasers in Engineering 48 (2) (2010) 133–140.

- [6] C. Bräuer-Burchardt, C. Munkelt, M. Heinze, P. Kühmstedt, G. Notni, Using geometric constraints to solve the point correspondence problem in fringe projection based 3d measuring systems, in: Image Analysis and Processing–ICIAP 2011: 16th International Conference, Ravenna, Italy, September 14-16, 2011, Proceedings, Part II 16, Springer, 2011, pp. 265–274.

- [7] Z. Li, K. Zhong, Y. F. Li, X. Zhou, Y. Shi, Multiview phase shifting: a full-resolution and high-speed 3d measurement framework for arbitrary shape dynamic objects, Optics Letters 38 (9) (2013) 1389–1391.

- [8] B. Pan, Q. Kemao, L. Huang, A. Asundi, Phase error analysis and compensation for nonsinusoidal waveforms in phase-shifting digital fringe projection profilometry, Optics Letters 34 (4) (2009) 416–418.

- [9] S. Lei, S. Zhang, Flexible 3-d shape measurement using projector defocusing, Optics Letters 34 (20) (2009) 3080–3082.

- [10] S. Zhang, Recent progresses on real-time 3d shape measurement using digital fringe projection techniques, Optics and Lasers in Engineering 48 (2) (2010) 149–158.

- [11] Z. Zhang, Review of single-shot 3d shape measurement by phase calculation-based fringe projection techniques, Optics and Lasers in Engineering 50 (8) (2012) 1097–1106.

- [12] V. Suresh, Y. Wang, B. Li, High-dynamic-range 3d shape measurement utilizing the transitioning state of digital micromirror device, Optics and Lasers in Engineering 107 (2018) 176–181.

- [13] T. Weise, B. Leibe, L. Van Gool, Fast 3d scanning with automatic motion compensation, in: 2007 IEEE Conference on Computer Vision and Pattern Recognition, IEEE, 2007, pp. 1–8.

- [14] Y. Wang, K. Liu, Q. Hao, X. Wang, D. L. Lau, L. G. Hassebrook, Robust active stereo vision using kullback-leibler divergence, IEEE Transactions on Pattern Analysis and Machine Intelligence 34 (3) (2012) 548–563.

- [15] W. Jang, C. Je, Y. Seo, S. W. Lee, Structured-light stereo: Comparative analysis and integration of structured-light and active stereo for measuring dynamic shape, Optics and Lasers in Engineering 51 (11) (2013) 1255–1264.

- [16] T. Tao, Q. Chen, J. Da, S. Feng, Y. Hu, C. Zuo, Real-time 3-d shape measurement with composite phase-shifting fringes and multi-view system, Optics Express 24 (18) (2016) 20253–20269.

- [17] E. R. Eiríksson, J. Wilm, D. B. Pedersen, H. Aanæs, Precision and accuracy parameters in structured light 3-d scanning, The International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences 40 (2016) 7–15.

- [18] S. Zhang, P. S. Huang, Novel method for structured light system calibration, Optical Engineering 45 (8) (2006) 083601–083601.

- [19] Z. Li, Y. Shi, C. Wang, Y. Wang, Accurate calibration method for a structured light system, Optical Engineering 47 (5) (2008) 053604–053604.

- [20] H. Luo, J. Xu, N. H. Binh, S. Liu, C. Zhang, K. Chen, A simple calibration procedure for structured light system, Optics and Lasers in Engineering 57 (2014) 6–12.

- [21] S. Feng, Q. Chen, C. Zuo, J. Sun, S. L. Yu, High-speed real-time 3-d coordinates measurement based on fringe projection profilometry considering camera lens distortion, Optics Communications 329 (2014) 44–56.

- [22] S. Zhang, Flexible and high-accuracy method for uni-directional structured light system calibration, Optics and Lasers in Engineering 143 (2021) 106637.

- [23] J.-S. Hyun, G. T.-C. Chiu, S. Zhang, High-speed and high-accuracy 3d surface measurement using a mechanical projector, Optics Express 26 (2) (2018) 1474–1487.

- [24] Z. Zhang, A flexible new technique for camera calibration, IEEE Transactions on Pattern Analysis and Machine Intelligence 22 (11) (2000) 1330–1334.

- [25] R. Vargas, L. A. Romero, S. Zhang, A. G. Marrugo, Pixel-wise rational model for a structured light system, Optics Letters 48 (10) (2023) 2712–2715.

- [26] Y. Wang, S. Zhang, Optimal fringe angle selection for digital fringe projection technique, Applied Optics 52 (29) (2013) 7094–7098.

- [27] D. Li, J. Kofman, Adaptive fringe-pattern projection for image saturation avoidance in 3d surface-shape measurement, Optics Express 22 (8) (2014) 9887–9901.

- [28] D. Zheng, F. Da, Q. Kemao, H. S. Seah, Phase-shifting profilometry combined with gray-code patterns projection: unwrapping error removal by an adaptive median filter, Optics Express 25 (5) (2017) 4700–4713.

- [29] B. Li, Y. An, D. Cappelleri, J. Xu, S. Zhang, High-accuracy, high-speed 3d structured light imaging techniques and potential applications to intelligent robotics, International Journal of Intelligent Robotics and Applications 1 (1) (2017) 86–103.

- [30] S. Feng, C. Zuo, L. Zhang, T. Tao, Y. Hu, W. Yin, J. Qian, Q. Chen, Calibration of fringe projection profilometry: A comparative review, Optics and Lasers in Engineering 143 (2021) 106622.

- [31] J. Wang, Z. Zhang, W. Lu, X. J. Jiang, High-accuracy calibration of high-speed fringe projection profilometry using a checkerboard, IEEE/ASME Transactions on Mechatronics 27 (5) (2022) 4199–4204.

- [32] S. Zhang, Flexible structured light system calibration method with all digital features, Optics Express 31 (10) (2023) 17076–17086.