Quantum Language Model with Entanglement Embedding for Question Answering

Abstract

Quantum Language Models (QLMs) in which words are modelled as a quantum superposition of sememes have demonstrated a high level of model transparency and good post-hoc interpretability. Nevertheless, in the current literature word sequences are basically modelled as a classical mixture of word states, which cannot fully exploit the potential of a quantum probabilistic description. A quantum-inspired neural network module is yet to be developed to explicitly capture the non-classical correlations within the word sequences. We propose a neural network model with a novel Entanglement Embedding (EE) module, whose function is to transform the word sequence into an entangled pure state representation. Strong quantum entanglement, which is the central concept of quantum information and an indication of parallelized correlations among the words, is observed within the word sequences. The proposed QLM with EE (QLM-EE) is proposed to implement on classical computing devices with a quantum-inspired neural network structure, and numerical experiments show that QLM-EE achieves superior performance compared with the classical deep neural network models and other QLMs on Question Answering (QA) datasets. In addition, the post-hoc interpretability of the model can be improved by quantifying the degree of entanglement among the word states.

Index Terms:

quantum language model, complex-valued neural network, interpretability, entanglement embedding.I Introduction

Neural Network Language Model (NNLM) [1] is widely used in Natural Language Processing (NLP) and information retrieval [2]. With the rapid development of deep learning models, NNLMs have achieved unparalleled success on a wide range of tasks [3, 4, 5, 6, 7, 8, 9, 10]. It becomes a common practice for the NNLMs to use word embedding [11, 4] to obtain the representations of words in a feature space. While NNLM has been very successful at knowledge representation and reasoning [7], its interpretability is often in question, making it inapplicable to critical areas such as the credit scoring system [12]. Two important factors have been summarized in [13] for evaluating the interpretability of a machine learning model, namely, Transparency and Post-hoc Interpretability. The model transparency relates to the forward modelling process, while the post-hoc interpretability is the ability to unearth useful and explainable knowledge from a learned model.

Another emerging area is quantum information and quantum computation where quantum theory can be utilized to develop more powerful computers and more secure quantum communication systems than their classical counterparts [14]. The interaction between quantum theory and machine learning has also been extensively explored in recent years. On one hand, many advanced machine learning algorithms have been applied to quantum control, quantum error correction and quantum experiment design [15, 16, 17, 18]. On the other hand, many novel quantum machine learning algorithms such as quantum neural networks and quantum reinforcement learning have been developed by taking advantage of the unique characteristics of quantum theory [19, 20, 21, 22, 23, 24, 25, 26]. Recently, Quantum Language Models (QLMs) inspired by quantum theory (especially quantum probability theory) have been proposed [27, 28, 29, 30, 31, 32, 33, 34] and demonstrated considerable performance improvement in model accuracy and interpretability on information retrieval and NLP tasks. QLM is a quantum heuristic Neural Network (NN) defined on a Hilbert space which models language units, e.g., words and phrases, as quantum states. By embedding the words as quantum states, QLM tries to provide a quantum probabilistic interpretation of the multiple meanings of words within the context of a sentence. Compared with the classical NNLM, the word states in QLM are defined on a Hilbert space which is different from the classical probability space. In addition, modelling the process of feature extraction as quantum measurement which collapses the superposed state to a definite meaning within the context of a sentence could increase the transparency of the model.

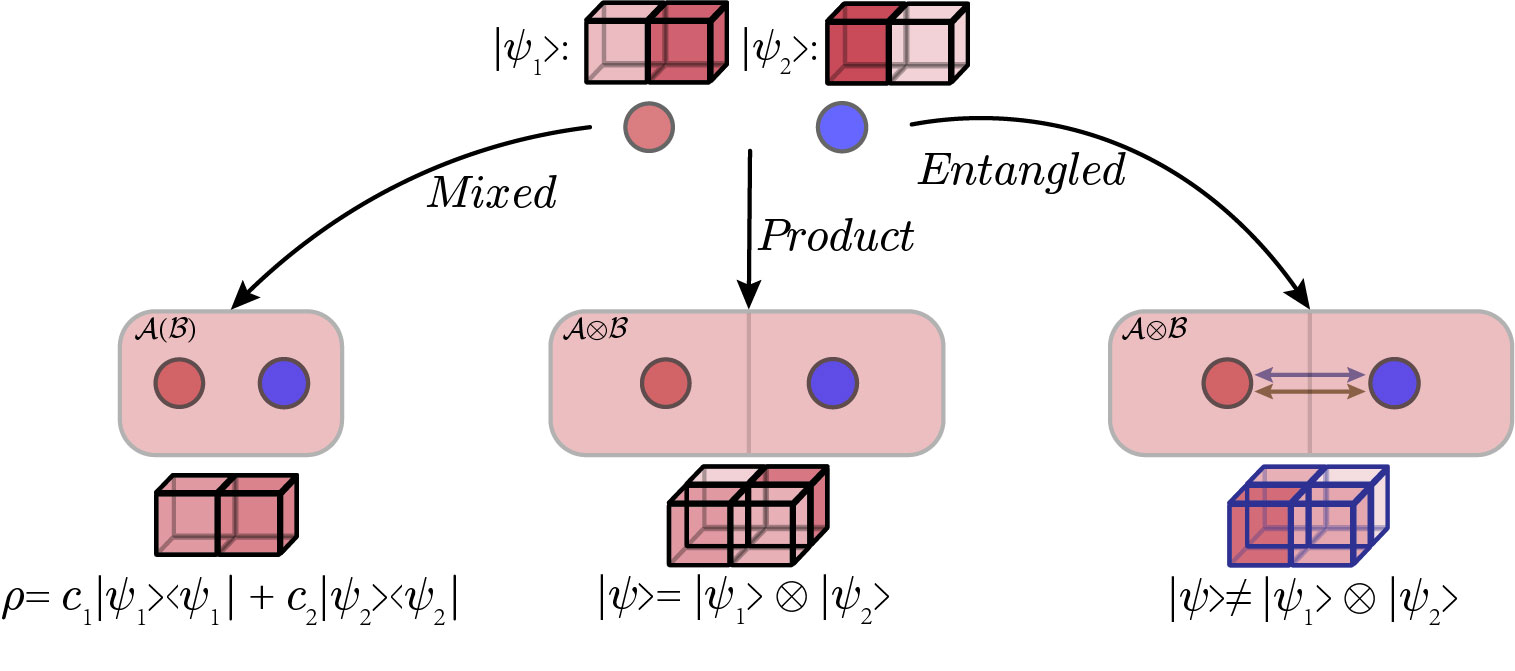

Despite the fact that previous QLMs have achieved good performance and transparency, the state-of-the-art designs still have limitations. For example, the mixed-state representation of the word sequence in [32, 34] is just a classical ensemble of the word states. As shown in the left of Fig. 1, a classical probabilistic mixture of quantum states is not able to fully capture the complex interaction among subsystems. Although Quantum Many-body Wave Function (QMWF) [33] method has been applied to model the entire word sequence as the combination of subsystems, it is still based on a strong premise that the states of the word sequences are separable, as shown in the middle of Fig. 1. A general quantum state has the ability to describe distributions that cannot be split into independent states of subsystems like in the right of Fig. 1. In quantum physics, the state for a group of particles can be generated as an inseparable whole, leading to a non-classical phenomenon called quantum entanglement [35]. Quantum entanglement can be understood as correlations (between subsystems) in superposition, and this type of parallelized correlations can be observed in human language system as well. A word can have different meanings when combined with other words. For example, the verb turn has four meanings {move, change, start doing, shape on a lathe}. If we combine it with on to get the phrase turn on, the meaning of turn will be in the superposition of change and start doing. However, this kind of correlation has not been explicitly generated in the present NN-based QLMs. Besides, a statistical method has been proposed in [36] to characterize the entanglement within the text in a post-measurement configuration, which still lacks the transparency in the forward modelling process.

In this paper, we propose a novel quantum-inspired Entanglement Embedding (EE) module which can be conveniently incorporated into the present NN-based QLMs to learn the inseparable association among the word states. To be more specific, each word is firstly embedded as a quantum pure state and described by a unit complex-valued vector corresponding to the superposition of sememes. The Word Embedding neural network module is adopted from [34]. Word sequences (phrases, -grams, etc.) are initially given as the tensor product of the individual word states, and then transformed to a general entangled state as the output of the EE module. The EE module is realized by a complex-valued neural network, which is essentially approximating the unitary operation that converts the initial product state to an entangled state. After the entanglement embedding, high-level features of the word sequences are extracted by inner products between the entangled state vector and virtual quantum measurement vectors [14]. All the parameters of the complex-valued neural network are trainable with respect to a cost function defined on the extracted features. Entanglement measures for quantifying and visualizing the entanglement among the word states can be directly applied on the output of the learned model. We conduct experiments on two benchmark Question Answering (QA) datasets to show the superior performance and post-hoc interpretability of the proposed QLM with EE (QLM-EE). In addition, the word embedding dimension can be greatly reduced when compared with previous QLMs, due to the composition of word embedding and EE modules in the hierarchical structure of the neural network. Note that the current QLM-EE is proposed to implement on classical computing devices with a quantum-inspired neural network structure.

The main contributions of this paper are summarized as follows.

-

•

A novel EE neural network module is proposed. The output of the EE module represents the correlations among the word states with a quantum probabilistic model which explores the entire Hilbert space of quantum pure states. The embedded states can reveal the possible entanglement between the words, which is an indication of parallelized correlations. The entanglement can be quantified to promote the transparency and post-hoc interpretability of QLMs to an unprecedented level.

-

•

A QLM-EE framework is presented by cascading the word embedding and EE modules. The word embedding module captures the superposed meanings of individual words, while the EE modules encode the correlations between the words at a higher level. The resulting cascaded deep neural network is more expressive and efficient than the shallow networks used by previous QLMs.

-

•

The superior performance of QLM-EE is demonstrated over the state-of-the-art classical neural network models and other QLMs on QA datasets. In addition, the word embedding dimension in QLM-EE is greatly reduced and the semantic similarity of the embedded states of word sequences can be studied using the tools from quantum information theory. The entanglement between the words can be quantified and visualized using analytical methods under the QLM-EE framework.

This paper is organized as follows. Section II provides a brief introduction to preliminaries and related work. Entanglement embedding is presented in Section III. Section IV proposes the QLM-EE model. Experimental results are presented in Section V and the results show that QLM-EE achieves superior performance over five classical models and five quantum-inspired models on two datasets. Post-hoc interpretability is discussed in Section VI and concluding remarks are given in Section VII.

II Preliminaries and related work

II-A Quantum State

Mathematically, an -level quantum system can be described by an -dimensional Hilbert space . A pure state of the quantum system is described by Dirac notation where a ket represents a state vector, written as (equivalent to a complex-valued column vector). The conjugate transpose, denoted by , of a state vector is called bra, denoted as , i.e., . Denote a chosen orthonormal basis of as . Any quantum pure state can be described by a unit vector in , which may be expanded on the basis states as

| (1) |

with complex-valued probability amplitudes satisfying

| (2) |

Note that the set defines a classical discrete probability distribution. A quantum system can be in the superposition of distinct states at the same time, with the probability of being given by . For example, we consider a quantum bit (qubit) that is the basic information unit in quantum computation and quantum information, which can be physically realized using e.g., a photon, an electron spin or a two-level atom [14]. The state of a qubit can be described as

| (3) |

where and

| (4) |

The state of a composite system consisting of two subsystems and can be described by the tensor product () of the states of these two subsystems and as

| (5) |

For example, if two qubits are in and , respectively, the state of the two-qubit system can be described by

| (6) |

where we have denoted

For an open quantum system or a quantum ensemble, its state needs to be described by a density matrix satisfying , and . In this paper, we mainly focus on quantum pure states, and thus the inputs and outputs of the neural network modules are complex-valued vectors that stand for the pure states. If a quantum system is in the state in (1), then the system is physically in the superposition state of . Similar superposition, although not physically, may also exist in the human language systems, which is expressed as the superposition of multiple meanings of a semantic unit.

II-B Quantum Entanglement

Quantum entanglement is one of the most fundamental concepts in quantum theory. Entanglement describes the non-classical correlation between quantum systems. To be more specific, a many-body quantum system is in an entangled state if the state of one subsystem is determined by the measurement result of the other subsystem. Mathematically speaking, the joint state of an entangled quantum system cannot be decomposed into the states of subsystems by tensor product. For example, we consider two quantum systems and defined in Hilbert spaces and , respectively. Assume the basis state vectors of the two subsystems are and . The joint state is then defined on the tensor product space , whose basis state vectors are given by the set . A general pure state of the composite quantum system can be written as follows

| (7) |

where are complex-valued probability amplitudes. The pure state is separable if it can be decomposed as

| (8) |

where and are pure states of the subsystems. Otherwise, the pure state is entangled. According to (7) and (8), separable pure states only constitute a small potion of the quantum states that can be defined on , which means that a significant amount of correlations between the subsystems cannot be characterized by the separable states. For example, one of the entangled Bell States or EPR pairs [14] is defined by

| (9) |

which can not be written as a tensor product of two pure states of the subsystems. The composite system is in the superposition of two basis states and . If we measure the state of the first system and the measurement result is , then the state of the second system is . However, since the first system is a superposition of two states and , the measurement result can be with equal probability. In that case, the state of the second system is . This kind of non-classical correlation cannot be modelled by classical probability. Quantum entanglement can be used to model the superposition of correlations between the subsystems, or in our case, the superposition of multiple meanings between the word states.

II-C Quantum Measurement

Quantum measurement is used to extract information from a quantum system. A widely-used measurement is the projective measurement (von Neumann measurement). For example, when measuring a pure state by projecting onto the measurement basis states , the quantum state will collapse to one of the basis states with the probability of

| (10) |

and the inner product of and is calculated as

| (11) |

In a more general setting, projective measurements can be performed using any state vector (i.e., not just the computational basis), with the probability of obtaining given by

| (12) |

II-D Quantum Fidelity

In quantum information theory, fidelity is a real-valued measure of the similarity between two quantum pure states, which is defined as

| (13) |

According to (10), fidelity is just the probability of collapsing to if is measured by , or the probability of collapsing to if is measured by . In other words, fidelity is the probability that one quantum state will pass the test to be identified as the other.

II-E Complex-valued Word Embedding

Complex-valued word embedding module aims to model words as quantum pure states in the semantic Hilbert space. In this paper, the complex-valued word embedding module is adopted from [34]. As shown in Fig. 2, each word is firstly encoded to a one-hot vector with a fixed length. Then, the amplitude embedding and phase embedding modules map the one-hot vector into a pair of real-valued amplitude and phase vectors . After that, the amplitude vector is normalized to a unit vector and the polar form representation of the word state is given by

| (14) |

where is the imaginary number with . Note that are the basis sememes of the semantic Hilbert space, which represent the minimum semantic units of the word meaning. Finally, the pair of vectors is transformed into a complex-valued vector as , and the word state can be written as

| (15) |

with . The complex-valued word embedding in [37] defines the real-valued amplitude as the semantic meaning of the word, and the complex phases as the positional information of word in the sequence. In contrast, the complex-valued word embedding in this paper aims to model words using quantum state representation, and no specific meaning is given to the phase or amplitude. Instead, the semantic meanings and their quantum-like superposition are jointly determined by the amplitude and phase.

II-F Related Work

In [38], van Rijsbergen argued that quantum theory can provide a unified framework for the geometrical, logical and probabilistic models for information retrieval. Coecke et al. [27] introduced DisCo formalism based on tensor product composition and Zeng et al. [28] presented a quantum algorithm to categorize sentences in DisCo model. Kartsaklis [39] used the traditional machine learning method to quantify entanglement between verbs in DisCo model. Sordoni et al. [29] proposed a quantum language modelling approach for information retrieval and the density matrix formulation was used as a general representation for texts. The single and compound terms were mapped into the same quantum space, and term dependencies are modelled as projectors. In [30], Quantum entropy minimization method has been proposed in learning concept embeddings for query expansion, where concepts from the vocabulary were embedded in rank-one matrices, and documents and queries were described by the mixtures of rank-one matrices. In [31], a quantum language model was presented where a “proof-of-concept” study was implemented to demonstrate its potential.

Two NN-based Quantum-like Language Models (NNQLMs) have been proposed, namely NNQLM-I and NNQLM-II [32]. Words were modelled as quantum pure states in the semantic Hilbert space and a word sequence was also modelled in the same space by mixing the word states in a classical way as

| (16) |

where was the word state representing the -th word in the sentence and is the weight of -th word state satisfying . By (16), the semantic meaning of the word sequence is mainly determined by the word states with larger weights. In NNQLM-I, the representation of a single sentence was obtained by the first three layers as a density matrix corresponding to a mixed state, and then the joint representation of a question/answer pair was generated in the fourth layer by matrix multiplication. The last softmax layer was invoked to match the question/answer pair. NNQLM-II adopted the same first 4-layer network structure to obtain the joint representation of a question/answer pair as NNQLM-I, and employed a 2-dimensional convolutional layer instead to extract the features of the joint representation for comparing the question/answer pairs. In [33], a Quantum Many-body Wave Function (QMWF) method was presented in which the representation of a single sentence was given by the tensor product of word vectors. Operation that mimics the quantum measurement was applied on the product state to extract the correlation patterns between the word vectors. To be more specific, a three-layer Convolutional Neural Network (CNN) was used, in which the first layer generated the product state representation of a word sequence and the projective measurement on the product state was simulated by a 1D convolutional layer with product pooling. A complex-valued network called CNM was presented in [34]. Similar to NNQLMs, CNM embedded the word sequence as a mixed state but with a complex-valued neural network. Then a number of trainable measurement operations were applied on the complex-valued density matrix representations to obtain the feature vectors of question/answer for comparison. CNM achieved comparable performance over the state-of-the-art models based on CNN and recurrent NN. More importantly, CNM has shown its advantage in interpretability, since the model has simulated the generation of a quantum probabilistic description for the individual words with complex-valued word states, and the projection of the superposed sememes onto a fixed meaning by quantum-like measurement within a particular context. The work [40] presented a survey of the quantum-inspired information retrieval and drew a road-map of future directions. Ref. [41] proposed a decision-level fusion strategy for predicting sentiment judgments inspired by quantum theory.

In the existing works, some potential of quantum-inspired NN modules have been explored for language modelling. However, most of the existing works assume that the word states are placed in the same Hilbert space, while in QLM-EE the word states are placed in the tensor product of Hilbert spaces. This enables an explicit entanglement analysis between the word states, which is not possible if the word states are mixed in the same space. Although [33] has modelled the words in independent Hilbert spaces, it was assumed that the interaction among the words are described by product states, which limits the expressive power of quantum state space. This paper continues these efforts and proposes a novel entanglement embedding module for question answering task.

III Entanglement Embedding

Quantum probabilistic superposition of states models the polysemy of words and sequences. Quantum states span the entire complex Hilbert space, while the amplitudes and complex phases of the states give the probability distribution of the multiple meanings of words. An -gram is the composition of words whose joint state is defined on the tensor product of Hilbert spaces. However, the joint state itself is not necessarily the tensor product of states of the Hilbert spaces. The set of entangled state is significantly larger than that of the tensor-product states, and compound semantic meaning lying within the -gram is mainly captured by the entanglement representation. Then the parameters of the entangled states is trained by the EE module, which characterizes the features of entanglement between the word states within the sequence.

The EE module is trained to capture high-level features between the words in the -gram, while the word embedding module can focus on encoding the basic semantic meanings of individual words with reduced dimension. The clear separation of duties in the hierarchical structure of the neural network increases the transparency of the model to an unprecedented level, which allows an accurate interpretation of the intermediate states using the tools borrowed from quantum information theory.

In line with the previous works, the complex-valued word embedding module is used to transform the one-hot representation of the word into a quantum pure state in the Hilbert space of word states , expressed as a state vector , with . The general quantum pure state for describing a word sequence is defined on the tensor-product Hilbert space , and can be formulated as

| (17) |

where . is used to characterize the probability distribution of the sememes. If the word embedding dimension is , then a general pure state vector defined on the tensor product of Hilbert spaces contains elements.

The EE module starts with composing the contiguous sequences of words as -grams [42]; see Fig. 3 for an example. For each -gram, a separable pure state is generated as the tensor product of its word states taking the following form

| (18) |

The word states are defined on Hilbert spaces which are isomorphic to each other, with the same semantic basis states. For this reason, -grams are defined on the same tensor-product space before entering the EE module. The complex-valued EE module is then connected to transform the initial separable state to an unnormalized vector which will then be normalized to determine a general pure state in the form of (17). The transformation induced by the NN can be formally written as

| (19) |

where is the weight matrix. Then the output vector must be normalized by

| (20) |

to be consistent with quantum theory. Note that the operations (19)-(20) induce an endomorphism of this Hilbert space and the EE module transfers one pure state to another. The pure states are unit vectors in the tensor-product Hilbert space. Any unit vectors in the same Hilbert space can be connected by a unitary matrix which induces a rotation. It is known that for any two quantum pure states and of an -qubit register, there exists a gate sequence to physically realize the unitary matrix such that [43]. Since the model proposed in this paper is only quantum-inspired, we are using a linear matrix multiplication and a normalization layer to approximate the effect of the transformation matrix by the classical way of training. In principle, if one layer of linear matrix multiplication is not sufficient for learning the transformation, it is always possible to stack several layers of linear operations to accurately approximate any transformation, which is consistent with the traditional neural network theory.

We take Fig. 3 as an example to illustrate the working mechanism of the EE module. We denote the state vectors for the first two words as and . The two word states form the separable state as the input to the NN layer by the following tensor product

| (21) |

The output of the NN layer is an unnormalized vector . After normalization, we obtain

| (22) |

which is in the most general form of the state vector on the joint Hilbert space. If cannot be written in a decomposable form just like the RHS of (21), then the output vector is a representation for an entangled state which captures the non-classical correlations between the word states.

IV Model

The structure of QLM-EE for QA is shown in Fig. 4. It is a complex-valued, end-to-end neural network optimized by back-propagation on classical computing devices. The QLM-EE can be divided into three major steps.

-

•

Word embedding and entanglement embedding. The word embedding module generates the word states, and the entanglement embedding module generates the complete quantum probabilistic description of the sequence of word states. The complete probabilistic description is given by a generic quantum pure state vector, which may be entangled to encode the information on the non-classical correlations between the word states. Entanglement embedding and word embedding modules encode the states as feature vectors at different levels, which improves the transparency of the quantum probabilistic modelling process. In particular, the distance between the feature vectors in the embedding space can be calculated based on the well-established measures from the quantum information theory for comparing quantum states, which could be used to reveal the relations between the words and phrases on a deeper semantic level. For example, in the classical Word2Vec, the cosine similarity is defined on real-valued embedded vectors as

(23) In our case, the cosine similarity is defined on the complex-valued unit vectors as

(24) In general, is a complex number, which means that the state representations could differ by a complex phase factor. Since complex phases are hard to visualize, we employ the quantum fidelity measure to compare the words and word sequences.

In Fig. 5, we visualize the process how the state of the phrase solar system evolves in our model using the quantum fidelity measure. After the word embedding layer, the state vector that represents the word solar is close to sun, crazy, dominated, which reflects how the model comprehends the meaning of the input words. The word state vector of system is embedded close to party, committee, ordered. After the EE module, the phrase solar system can be linked to other high-level phrases, e.g., united nations, while its state vector is still very close to the phrase the sun and the comet which share a similar meaning.

-

•

Measurement operation. Projective measurement operation is equivalent to the calculation of fidelity between quantum states. That is, the output of the measurement can be seen as a distance between the measured state and a measuring state, corresponding to the distance between the semantic entangled state and a measuring sememe which is used for comparison. Therefore, performing the same set of measurement operations provides a way to compare the semantic similarity of the question and answering word sequences. After entanglement embedding, a series of parameterized measurements are performed on the state via the formula

(25) Here is defined as the measurement output, which indicates the probability of the state possessing the semantic meaning represented by the measurement vector . Note that the unit vectors are optimized in a data-driven way. By using pure states as measurement basis, the computation cost for measuring a quantum state virtually is reduced from to compared to CNM [34], in which density matrices were used as the measurement basis. For a sentence described by the concatenation of word sequences, the feature matrix which stores all the measurement results has entries if measurement vectors are used.

-

•

Similarity Measure. A max-pooling layer is applied on each row of the feature matrix for down-sampling. To be more specific, the down-sampling takes the maximum value of each row of the matrix to form a reduced feature vector. Then a vector-based similarity metric can be employed in the matching layer for evaluating the distance between the pair of feature vectors for question and answer. The answer with the highest matching score is chosen as the predicted result among all candidate answers.

V Experiment

V-A Experiment Details

| Dataset | Train(Q/A) | Dev(Q/A) | Test(Q/A) |

|---|---|---|---|

| TREC-QA | 1,229/53,417 | 65/1,134 | 68/1,478 |

| QA | 873/8,627 | 126/1,130 | 243/2,351 |

V-A1 Dataset

V-A2 Evaluation metrics

The metrics called Mean Average Precision (MAP) and Mean Reciprocal Rank (MRR) [46] are utilized to evaluate the performance of the models. MAP for a set of queries is the mean of the Average Precision scores for each query, formulated as

MRR is the average of the Reciprocal Ranks of results for a sample of queries , calculated as

where refers to the rank position of the first relevant document for the -th query.

V-A3 Baselines

-

a)

Classical models for TREC-QA including

-

•

Unigram-CNN [47]: Unigram-CNN is a CNN-based model that utilizes 1-gram as the input to obtain the representations of questions and answers for comparison. It is composed of one convolutional layer and one average pooling layer.

-

•

Bigram-CNN [47]: Bigram-CNN has the same network structure as Unigram-CNN but it extracts the representations from bi-gram inputs.

-

•

ConvNets [48]: ConvNets is built upon two distributional sentence models based on CNN. These underlying sentence models work in parallel to map questions and answers to their distributional vectors, which are then used to learn the semantic similarity between them.

-

•

QA-LSTM-avg [49]: QA-LSTM-avg generates distributed representations for both the question and answer independently by bidirectional LSTM outputs with average pooling, and then utilizes cosine similarity to measure their distance.

-

•

aNMM-1 [50]: aNMM-1 employs a deep neural network with value-shared weighting scheme in the first layer, which is followed by fully-connected layers to learn the sentence representation. A question attention network is used to learn question term importance and produce the final ranking score.

-

•

-

b)

Classical models for QA including

-

•

Bigram-CNN [47];

-

•

PV-Cnt [45]: PV-Cnt is the Paragraph Vector (PV) model combined with Word Count. The model score of PV is the cosine similarity score between the question vector and the sentence vector.

-

•

CNN-Cnt [45]: CNN-Cnt employs a Bigram-CNN model with average pooling and combines it with Word Count.

-

•

QA-BILSTM [51]: QA-BILSTM uses a bidirectional LSTM and a max pooling layer to obtain the representation of questions and answers, and then computes the cosine similarity between the two representations.

-

•

LSTM-attn[52]: LSTM-attn firstly obtains the representations for the question and answer from independent LSTM models, and then adds an attention model to learn the pair-specific representation for prediction on the basis of the vanilla LSTM.

-

•

- c)

| TREC-QA | QA | ||||

|---|---|---|---|---|---|

| Model | MAP | MRR | Model | MAP | MRR |

| Unigram-CNN | 0.5470 | 0.6329 | Bigram-CNN | 0.6190 | 0.6281 |

| Bigram-CNN | 0.5693 | 0.6613 | PV-Cnt | 0.5976 | 0.6058 |

| ConvNets | 0.6709 | 0.7280 | CNN-Cnt | 0.6520 | 0.6652 |

| QA-LSTM-avg | 0.6819 | 0.7652 | QA-BILSTM | 0.6557 | 0.6695 |

| aNMM-1 | 0.7385 | 0.7995 | LSTM-attn | 0.6639 | 0.6828 |

| QLM-MLE | 0.6780 | 0.7260 | QLM-MLE | 0.5120 | 0.5150 |

| NNQLM-I | 0.6791 | 0.7529 | NNQLM-I | 0.5462 | 0.5574 |

| NNQLM-II | 0.7589 | 0.8254 | NNQLM-II | 0.6496 | 0.6594 |

| QMWF-LM | 0.7520 | 0.8140 | QMWF-LM | 0.6950 | 0.7100 |

| CNM | 0.7701 | 0.8591 | CNM | 0.6748 | 0.6864 |

| QLM-EE | 0.7713 | 0.8542 | QLM-EE | 0.6956 | 0.7003 |

V-A4 Hyper-parameters

Cosine similarity defined by (23) is used as the distance metric between the real-valued feature vectors after pooling. The hinge loss [53] for training the model is given by

| (26) |

where is the cosine similarity of a ground truth answer, is the cosine similarity of an incorrect answer randomly chosen from the entire answer space.

| Model | MAP | MRR | Params. | FLOPs | ||

|---|---|---|---|---|---|---|

| QLM-EE | 8 | 2 | 0.73020.0101 | 0.80710.0156 | 1.45M | 4.20M |

| QLM-ME | 8 | 2 | 0.69050.0711 | 0.75840.0059 | 0.99M | 4.81M |

| QLM-ME | 64 | 2 | 0.72670.0194 | 0.81710.0151 | 7.88M | 124.56M |

| QLM-EE-Real | 16 | 2 | 0.67780.0139 | 0.75050.0187 | 1.97M | 6.01M |

| QLM-SE | 8 | 2 | 0.69340.0149 | 0.78040.0156 | 1.32M | 3.28M |

The parameters in the QLM-EE are determined by the set of hyper-parameters , where is the number of words in a sequence, is the word embedding dimension and is the number of measurement vectors. means embedding the words without composition, and in this case no entanglement can be generated. We test single-layer and two-layer fully connected neural networks with neurons for entanglement embedding. A grid search is conducted using , , , batch size in and learning rate in . In line with CNM, the concatenation of the feature vectors for has also been tested. Larger has been tried, but shows better performance than . All the parameters are initialized from standard normal distributions except the measurement vectors, which are initialized by orthogonal vectors.

V-B Performance

The experimental results on TREC-QA and QA are shown in TABLE II. We compare the performances of the classical models, including CNNs, Recurrent NNs and attention models with the performances of QLMs. QLM-EE is consistently better than all the QLMs and classical models on both datasets if MAP is used as the metric. If we use MRR as the metric, QLM-EE performs slightly worse than CNM on TREC-QA while better than the other models, and slightly worse than QMWF-LM while better than all the other models on QA.

Our word embedding dimension is selected from , which is much smaller than the word embedding dimension of previous QLMs selected from . As a consequence, the amount of parameters for the word embedding layer has seen dramatic reduction while the performance of model has been improved. Fig. 6 illustrates how the word embedding dimension affects the performances of different models in terms of MAP and MRR on TREC-QA. It is clear that the model with the concatenation of the feature vectors for performs best and the best word embedding dimension is , which is significantly smaller than for the previous QLMs.

As increases, the dimension of the state vector after the entanglement embedding is increased according to the formula . As pointed out in the literature [54], learning word embedding has the risk of underfitting or overfitting. Embedding dimension that is too small (less than 50) or too large (more than 300) will degrade the performance. In QLM-EE, the -gram lies in the tensor-product space, which tends to result in a high-dimensional representation and poor performance. For example, if the word embedding dimension is 8, then -grams are described by complex-valued -dimensional vectors, or real-valued -dimensional vectors. In addition, the dimension of EE module and measurement vectors will increase accordingly, leading to a model that easily overfits the data. In our case, the performance of -gram model is worse than that of the -gram model, which is a sign of overfitting in this relatively small-sized dataset. However, it is possible that high-dimensional representation will improve the performance if a much larger dataset is considered.

V-C Ablation Test

Ablation test studies the contribution of certain components to the overall performance by removing these components from the model. We conduct ablation tests to evaluate the effectiveness of entanglement embedding on 2-gram QLM. QLM-SE has removed thxe entanglement embedding module and measurements are directly performed on the separable joint states. QLM-ME generates the mixed-state embedding by (16), and measurements are performed on the density matrix . In the setting of complex-valued QLM-EE, we let and . For the complex-valued QLM-ME whose structure is the same as CNM, we set and with for comparison. Note that when , the complex-valued QLM-ME has the same dimension for -grams as QLM-EE. However, since the word embedding dimension has been increased, the number of parameters for the word embedding module has been increased accordingly. We have doubled the word embedding dimension to in the real-valued QLM-EE (QLM-EE-Real), and thus the dimension of -grams is which is four times larger than the dimension of -grams in the complex-valued QLM-EE. Besides, the dimension of the measurement vectors has been increased four times accordingly. To make the number of parameters compatible, we set for this case.

| Model | ||||

|---|---|---|---|---|

| QLM-ME () | 1 | 1 | ||

| QLM-ME () | 1 | 1 | ||

| QLM-SE () | 1 | 1 | ||

| QLM-EE-Real () | 1 | 1 |

We have run the experiment 10 times. The mean and standard deviation of metrics, the number of parameters and Floating Point Operations (FLOPs) are reported in TABLE III. We can see that QLM-EE achieves the best performance for 2-gram model when the word embedding dimension is . The performances QLM-ME with and QLM-EE with are close, but the number of parameters and FLOPs used by the former is significantly greater than the latter. The result of QLM-EE-Real confirms that the complex-valued neural network indeed promotes the performance of QLMs. The two-sample Kolmogorov-Smirnov (K-S) test result on the performance metrics is shown in TABLE IV, which verifies the performance differences of these models.

VI Post-hoc Interpretability

von Neumann entanglement entropy [55] is an accurate measure of the degree of quantum entanglement for a bipartite quantum pure state. The entanglement entropy is calculated as follows

| (27) |

where is the Schmidt coefficient of the composite pure state and is the minimal dimension of the subsystems, i.e., . Apart from the analytical entanglement measure (27) for bipartite states, it is also worth mentioning that efficient numerical methods are available for quantifying quantum entanglement for multipartite states [56].

| Type | Word combinations |

|---|---|

| Most entangled in questions | annual revenue; as a; is cataracts; how long; tale of; first movie; ethnic background |

| Least entangled in questions | introduced Jar; the name; what year; the main; who is; whom were ; is a; in what |

| Most entangled in answers | ends up; never met; plane assigned; in kindergarten; academy of; going to; agricultural farming; secure defendants |

| Least entangled in answers | skinks LRB; while some; grounded in; of Quarry; of seven; he said; in China; responsibility and |

| Type | Word combinations |

|---|---|

| Most entangled in questions | the company Rohm; Hale Bopp comet; how often does; Insane Clown Posse; Capriati ’s coach |

| Least entangled in questions | there worldwide ?; What is Crips; Criminal Court try; When did James |

| Most entangled in answers | after a song; comet ’s nucleus; Out of obligation; at times .; Black Panther Party |

| Least entangled in answers | Queen , Tirado; came from Britain; Nobel Prize last; appears in the; Americans over 50 |

TABLE V shows the selected most and least 2-grams of words, ranked by the von Neumann entanglement entropy. The most entangled pairs are mostly set phrase or some well-known combinations of words, e.g., how long. However, is cataracts is clearly not a set phrase or well-known combination of words. We found that is cataracts appears many times, and cataracts is always next to is in this particular training dataset. This may be the reason why the learned model takes is cataracts as a fixed combination of words. The least entangled pairs consist of words with fixed semantic meaning such as names Quarry, China and interrogatives what, who, some of which appear only once in the dataset. In other words, there is not so much semantic ambiguity or superposition with these combinations that demands a quantum probabilistic interpretation.

TABLE VI shows the selected most and least entangled 3-grams, whose von Neumann entanglement entropies are calculated to indicate the entanglement between the first two words, and the remaining word (the third word). Similar to 2-grams, the most entangled 3-grams are fixed collocations and combinations that often appear together in the training set, such as a set phrase. Interestingly, punctuation marks also appear in the most entangled 3-grams. For example, entanglement in at times . is large, which implies that the phrase at times is often at the end of the answers. However, there worldwide ? is among the least entangled combinations, since there worldwide is not in any of the question sentences for training. The third word in the least entangled 3-grams are mainly names and numbers, which cannot combine with the first two words to form a fixed phrase.

In Fig. 7, we also visualize the entanglement entropy in some selected sentences, where the degree of darkness indicates the level of entanglement. It can be seen that words with multiple meanings in different contexts, e.g., is, does, the, film, have greater capabilities to entangle with their neighboring words.

VII Conclusion

In this paper, we proposed an interpretable quantum-inspired language model with a novel EE module in the neural network architecture. The EE enables the modelling of the word sequence by a general quantum pure state, which is capable of capturing all the classical and non-classical correlations between the word states. The expressivity of the neural network is greatly enhanced by cascading the word embedding and EE modules. The complex-valued model has demonstrated superior performance on the QA datasets, with much smaller word embedding dimensions compared to previous QLMs. In addition, the non-classical correlations between the word states can be quantified and visualized by appropriate entanglement measures, which improves the post-hoc interpretability of the learned model.

The future plan is to apply the adaptive methods [57, 58] for optimizing the virtual measurement operations to increase the efficiency in feature extraction. The QLM-EE model is expected to be more powerful on huge datasets in which the semantic meanings of words and their correlations are far more complex. With a larger dataset and richer semantic superpositions between the words, several entanglement embedding modules can be cascaded to form a deeper neural network, which could encode the multipartite correlations within the text at different scales.

References

- [1] Y. Bengio, R. Ducharme, P. Vincent, and C. Jauvin, “A neural probabilistic language model,” J. Mach. Learn. Res., vol. 3, pp. 1137–1155, 2003.

- [2] L. Wang, X. Qian, Y. Zhang, J. Shen, and X. Cao, “Enhancing sketch-based image retrieval by cnn semantic re-ranking,” IEEE Trans. Cybern., vol. 50, no. 7, pp. 3330–3342, 2020.

- [3] T. Mikolov, S. Kombrink, L. Burget, J. Černockỳ, and S. Khudanpur, “Extensions of recurrent neural network language model,” in Proc. IEEE ICASSP, May 2011, pp. 5528–5531.

- [4] T. Mikolov, G. Corrado, K. Chen, and J. Dean, “Efficient estimation of word representations in vector space,” in Proc. ICLR, 2013.

- [5] I. Sutskever, O. Vinyals, and Q. V. Le, “Sequence to sequence learning with neural networks,” in Proc. NIPS, Dec. 2014, pp. 3104–3112.

- [6] H. He and J. Lin, “Pairwise word interaction modeling with deep neural networks for semantic similarity measurement,” in Proc. NAACL HLT, Jun. 2016, pp. 937–948.

- [7] D. W. Otter, J. R. Medina, and J. K. Kalita, “A survey of the usages of deep learning for natural language processing,” IEEE Trans. Neural Netw. Learn. Syst., vol. 32, no. 2, pp. 604–624, 2021.

- [8] X. Wang, L. Kou, V. Sugumaran, X. Luo, and H. Zhang, “Emotion correlation mining through deep learning models on natural language text,” IEEE Trans. Cybern., pp. 1–14, 2020.

- [9] J. Du, C. M. Vong, and C. L. P. Chen, “Novel efficient rnn and lstm-like architectures: Recurrent and gated broad learning systems and their applications for text classification,” IEEE Trans. Cybern., pp. 1–12, 2020.

- [10] X. Wang, L. Kou, V. Sugumaran, X. Luo, and H. Zhang, “Emotion correlation mining through deep learning models on natural language text,” IEEE Trans. Cybern., vol. 51, no. 9, pp. 4400–4413, 2021.

- [11] T. Mikolov, I. Sutskever, K. Chen, G. S. Corrado, and J. Dean, “Distributed representations of words and phrases and their compositionality,” in Proc. NIPS, Dec. 2013, pp. 3111–3119.

- [12] F. Doshi-Velez and B. Kim. (2017) Towards A Rigorous Science of Interpretable Machine Learning. [Online]. Available: https://arxiv.org/abs/1702.08608

- [13] Z. C. Lipton, “The mythos of model interpretability,” Commun. ACM, vol. 61, pp. 36–43, 2018.

- [14] M. A. Nielsen and I. L. Chuang, Quantum Computation and Quantum Information. Cambridge University Press, 2010.

- [15] D. Dong, X. Xing, H. Ma, C. Chen, Z. Liu, and H. Rabitz, “Learning-based quantum robust control: Algorithm, applications, and experiments,” IEEE Trans. Cybern., vol. 50, pp. 3581–3593, 2020.

- [16] V. Dunjko and H. J. Briegel, “Machine learning & artificial intelligence in the quantum domain: a review of recent progress,” Rep. Prog. Phys., vol. 81, no. 7, p. 074001, 2018.

- [17] C. Chen, D. Dong, B. Qi, I. R. Petersen, and H. Rabitz, “Quantum ensemble classification: A sampling-based learning control approach,” IEEE Trans. Neural Netw. Learn. Syst., vol. 28, no. 6, pp. 1345–1359, 2016.

- [18] C. Chen, D. Dong, H.-X. Li, J. Chu, and T.-J. Tarn, “Fidelity-based probabilistic Q-learning for control of quantum systems,” IEEE Trans. Neural Netw. Learn. Syst., vol. 25, no. 5, pp. 920–933, 2013.

- [19] J. Biamonte, P. Wittek, N. Pancotti, P. Rebentrost, N. Wiebe, and S. Lloyd, “Quantum machine learning,” Nature, vol. 549, no. 7671, pp. 195–202, 2017.

- [20] Y. Du, M.-H. Hsieh, T. Liu, S. You, and D. Tao. (2020) On the learnability of quantum neural networks. [Online]. Available: https://arxiv.org/abs/2007.12369

- [21] V. Havlíček, A. D. Córcoles, K. Temme, A. W. Harrow, A. Kandala, J. M. Chow, and J. M. Gambetta, “Supervised learning with quantum-enhanced feature spaces,” Nature, vol. 567, no. 7747, pp. 209–212, 2019.

- [22] J. R. McClean, S. Boixo, V. N. Smelyanskiy, R. Babbush, and H. Neven, “Barren plateaus in quantum neural network training landscapes,” Nat. Commun., vol. 9, no. 1, pp. 1–6, 2018.

- [23] N. H. Nguyen, E. Behrman, M. A. Moustafa, and J. Steck, “Benchmarking neural networks for quantum computations,” IEEE Trans. Neural Netw. Learn. Syst., vol. 31, pp. 2522–2531, 2020.

- [24] J.-A. Li, D. Dong, Z. Wei, Y. Liu, Y. Pan, F. Nori, and X. Zhang, “Quantum reinforcement learning during human decision-making,” Nat. Hum. Behav., vol. 4, no. 3, pp. 294–307, 2020.

- [25] V. Dunjko, J. M. Taylor, and H. J. Briegel, “Quantum-enhanced machine learning,” Phys. Rev. Lett., vol. 117, no. 13, p. 130501, 2016.

- [26] Q. Wei, H. Ma, C. Chen, and D. Dong, “Deep reinforcement learning with quantum-inspired experience replay,” IEEE Trans. Cybern., pp. 1–13, 2021.

- [27] B. Coecke, M. Sadrzadeh, and S. Clark. (2010) Mathematical foundations for a compositional distributional model of meaning. [Online]. Available: https://arxiv.org/abs/1003.4394

- [28] W. Zeng and B. Coecke. (2016) Quantum algorithms for compositional natural language processing. [Online]. Available: https://arxiv.org/abs/1608.01406

- [29] A. Sordoni, J.-Y. Nie, and Y. Bengio, “Modeling term dependencies with quantum language models for ir,” in Proc. 36th Int. ACM SIGIR Conf. Res. Develop. Inf. Retr., Jul. 2013, pp. 653–662.

- [30] A. Sordoni, Y. Bengio, and J.-Y. Nie, “Learning concept embeddings for query expansion by quantum entropy minimization,” in Proc. 28th AAAI Conf. Artif. Intell., Jul. 2014, pp. 1586–1592.

- [31] I. Basile and F. Tamburini, “Towards quantum language models,” in Proc. EMNLP, Sep. 2017, pp. 1840–1849.

- [32] P. Zhang, J. Niu, Z. Su, B. Wang, L. Ma, and D. Song, “End-to-end quantum-like language models with application to question answering,” in Proc. 32nd AAAI Conf. Artif. Intell., Feb. 2018, p. 5666–5673.

- [33] P. Zhang, Z. Su, L. Zhang, B. Wang, and D. Song, “A quantum many-body wave function inspired language modeling approach,” in Proc. 27th ACM CIKM, Oct. 2018, pp. 1303–1312.

- [34] Q. Li, B. Wang, and M. Melucci, “Cnm: An interpretable complex-valued network for matching,” in Proc. NAACL-HLT, Jun. 2019, pp. 4139–4148.

- [35] R. Horodecki, P. Horodecki, M. Horodecki, and K. Horodecki, “Quantum entanglement,” Rev. Mod. Phys., vol. 81, no. 2, p. 865, 2009.

- [36] M. Xie, Y. Hou, P. Zhang, J. Li, W. Li, and D. Song, “Modeling quantum entanglements in quantum language models,” in Proc. IJCAI, Jul. 2015, pp. 1362–1368.

- [37] B. Wang, D. Zhao, C. Lioma, Q. Li, P. Zhang, and J. G. Simonsen, “Encoding word order in complex embeddings,” in Proc. ICLR, Dec. 2019.

- [38] C. J. van Rijsbergen, The geometry of information retrieval. Cambridge University Press, 2004.

- [39] D. Kartsaklis and M. Sadrzadeh. (2014) A study of entanglement in a categorical framework of natural language. [Online]. Available: https://arxiv.org/abs/1405.2874

- [40] S. Uprety, D. Gkoumas, and D. Song, “A survey of quantum theory inspired approaches to information retrieval,” ACM Computing Surveys (CSUR), vol. 53, no. 5, pp. 1–39, 2020.

- [41] D. Gkoumas, Q. Li, S. Dehdashti, M. Melucci, Y. Yu, and D. Song, “Quantum cognitively motivated decision fusion for video sentiment analysis,” in Proc. of 35th AAAI Conf. Artif. Intell., Feb. 2021, pp. 827–835.

- [42] B. C. William and M. T. John, “N-gram-based text categorization,” Ann Arbor MI, vol. 48113, no. 2, pp. 161–175, 1994.

- [43] V. J. J. B. V. Mottonen, M. and M. M. Salomaa, “Transformation of quantum states using uniformly controlled rotations,” Quantum Information and Computation, vol. 5, no. 6, pp. 467–473, 2005.

- [44] E. M. Voorhees and D. M. Tice, “Building a question answering test collection,” in Proc. 23rd Int. ACM SIGIR Conf. Res. Develop. Inf. Retr., Jul. 2000, pp. 200–207.

- [45] Y. Yang, W.-t. Yih, and C. Meek, “Wikiqa: A challenge dataset for open-domain question answering,” in Proc. EMNLP, Sep. 2015, pp. 2013–2018.

- [46] C. D. Manning, P. Raghavan, and H. Schütze, Introduction to Information Retrieval. Cambridge University Press, 2008.

- [47] L. Yu, K. M. Hermann, P. Blunsom, and S. Pulman, “Deep learning for answer sentence selection,” in Proc. NIPS deep learning workshop, 2014.

- [48] A. Severyn and A. Moschitti, “Learning to rank short text pairs with convolutional deep neural networks,” in Proc. 38th Int. ACM SIGIR Conf. Res. Develop. Inf. Retr., Aug. 2015, pp. 373–382.

- [49] M. Tan, C. d. Santos, B. Xiang, and B. Zhou, “LSTM-based deep learning models for non-factoid answer selection,” in Proc. ICLR, 2016.

- [50] L. Yang, Q. Ai, J. Guo, and W. B. Croft, “aNMM: Ranking short answer texts with attention-based neural matching model,” in Proc. ACM CIKM, Oct. 2016, pp. 287–296.

- [51] C. d. Santos, M. Tan, B. Xiang, and B. Zhou. (2016) Attentive Pooling Networks. [Online]. Available: https://arxiv.org/abs/1602.03609

- [52] Y. Miao, L. Yu, and P. Blunsom, “Neural variational inference for text processing,” in Proc. ICML, Jun. 2016, pp. 1727–1736.

- [53] B. Hu, Z. Lu, H. Li, and Q. Chen, “Convolutional neural network architectures for matching natural language sentences,” in Proc. NIPS, Dec. 2014, pp. 2042–2050.

- [54] Z. Yin and Y. Shen, “On the dimensionality of word embedding,” in Proc. NIPS, Dec. 2018, pp. 895–906.

- [55] R. Horodecki and P. Horodecki, “Quantum redundancies and local realism,” Phys. Lett. A, vol. 194, no. 3, pp. 147–152, 1994.

- [56] M. Zhang, G. Ni, and G. Zhang, “Iterative methods for computing u-eigenvalues of non-symmetric complex tensors with application in quantum entanglement,” Computational Optimization and Applications, vol. 75, no. 3, pp. 779–798, 2020.

- [57] F. Huszár and N. M. Houlsby, “Adaptive bayesian quantum tomography,” Phys. Rev. A, vol. 85, no. 5, p. 052120, 2012.

- [58] B. Qi, Z. Hou, Y. Wang, D. Dong, H.-S. Zhong, L. Li, G.-Y. Xiang, H. M. Wiseman, C.-F. Li, and G.-C. Guo, “Adaptive quantum state tomography via linear regression estimation: Theory and two-qubit experiment,” NPJ Quantum Inf., vol. 3, no. 1, pp. 1–7, 2017.