Quantum Kernel-Based Long Short-term Memory

for Climate Time-Series Forecasting

††thanks: 1 Corresponding Author: [email protected] (NCHC, Narlabs),

[email protected] (QuEST, Imperial College London)

Abstract

We present the Quantum Kernel-Based Long Short-Term Memory (QK-LSTM) network, which integrates quantum kernel methods into classical LSTM architectures to enhance predictive accuracy and computational efficiency in climate time-series forecasting tasks, such as Air Quality Index (AQI) prediction. By embedding classical inputs into high-dimensional quantum feature spaces, QK-LSTM captures intricate nonlinear dependencies and temporal dynamics with fewer trainable parameters. Leveraging quantum kernel methods allows for efficient computation of inner products in quantum spaces, addressing the computational challenges faced by classical models and variational quantum circuit-based models. Designed for the Noisy Intermediate-Scale Quantum (NISQ) era, QK-LSTM supports scalable hybrid quantum-classical implementations. Experimental results demonstrate that QK-LSTM outperforms classical LSTM networks in AQI forecasting, showcasing its potential for environmental monitoring and resource-constrained scenarios, while highlighting the broader applicability of quantum-enhanced machine learning frameworks in tackling large-scale, high-dimensional climate datasets.

Index Terms:

Quantum Machine Learning, Quantum Kernel Methods, LSTM, Time Series Forecasting, Model CompressionI Introduction

Climate time-series forecasting is essential for understanding and predicting environmental phenomena, which has significant implications for public health[1], resource management[2], and policy-making [3]. Accurate forecasting of climatic variables such as temperature, precipitation, and pollutant concentrations enables proactive measures to mitigate adverse effects associated with climate variability and change. Time series forecasting, a fundamental aspect of sequence modeling tasks, is crucial for capturing the temporal dynamics inherent in climate data. Recurrent Neural Networks (RNNs) [4] and Long Short-Term Memory (LSTM) networks [5] have been instrumental in addressing these tasks due to their ability to model temporal dependencies within sequential data. However, as the complexity and dimensionality of climate datasets increase—owing to factors such as diverse environmental variables, varying meteorological conditions, and spatial heterogeneity—classical RNNs and LSTMs often require substantial computational resources and extensive parameterization to effectively model intricate patterns and long-range dependencies [6].

Quantum computing has emerged as a promising paradigm that leverages quantum mechanical principles such as superposition and entanglement to enhance machine learning models, offering significant computational advantages over traditional methods [7]. Specifically, quantum machine learning (QML) aims to exploit the computational strengths of quantum systems to process information in high-dimensional Hilbert spaces more efficiently than classical counterparts [8, 9, 10, 11]. This capability positions quantum computing favorably for large-scale and high-dimensional applications, including environmental monitoring, climate modeling, and other climate-related time-series forecasting tasks [12, 13].

In the context of time series prediction, prior efforts to integrate quantum computing into sequence modeling have led to the development of Quantum-Enhanced Long Short-Term Memory (QLSTM) [14] and Quantum-Trained LSTM [15] architectures based on Variational Quantum Circuits (VQCs) [16]. While VQC-based QLSTMs incorporate quantum circuits into neural network structures, they often entail complex circuit designs and necessitate significant quantum resources. This complexity presents substantial challenges for implementation on current quantum hardware, which is constrained by limitations in qubit coherence times and gate fidelities [17].

Quantum kernel methods offer an alternative approach by embedding classical data into quantum feature spaces using quantum circuits [18], enabling efficient computation of inner products (kernels) in these high-dimensional spaces [19, 20]. Quantum kernels can capture complex data structures with potentially fewer trainable parameters and reduced computational overhead compared to both classical models and VQC-based quantum models [21]. This approach leverages the efficiency of quantum systems in representing and manipulating high-dimensional data, providing a means to enhance model expressiveness without proportionally increasing computational demands [22].

This paper introduces the Quantum Kernel Long Short-Term Memory (QK-LSTM) network [23], which integrates quantum kernel methods within the LSTM architecture to improve the modeling of complex sequential patterns in time-series data. By replacing classical linear transformations in the LSTM cells with quantum kernel evaluations, the QK-LSTM leverages quantum feature spaces to more effectively encode intricate dependencies. This integration enables the model to capture non-linear relationships and temporal dynamics that are challenging for classical models to represent, particularly in applications such as Air Quality Index (AQI) prediction, where environmental variables are influenced by a multitude of interdependent factors.

The proposed approach utilizes quantum gates and circuits to perform transformations that are computationally intensive in classical settings, thereby enhancing the efficiency and predictive accuracy of the network for climate forecasting tasks. Furthermore, this integration simplifies the quantum circuit requirements compared to VQC-based QLSTMs, making the QK-LSTM more feasible for implementation on near-term quantum devices and suitable for deployment in quantum edge computing [24] and resource-constrained environments[25]. Additionally, the quantum kernel can serve as an effective ansatz for distributed quantum computing, suggesting that this method can be extended towards quantum high-performance computing (HPC) and distributed quantum computing architectures [26, 27, 28].

By applying the QK-LSTM to AQI prediction as a case study, we demonstrate its superior performance in capturing the complex temporal dependencies and non-linear patterns inherent in climate time-series data. Experimental results indicate that the QK-LSTM outperforms classical LSTM models in predictive accuracy while requiring fewer parameters. This performance gain highlights the potential of quantum-enhanced models in environmental applications and underscores the viability of integrating quantum computing techniques into existing machine learning frameworks to address computational challenges in processing large-scale, high-dimensional climate data.

II Data Pre-processing

Effective data preprocessing is crucial for improving the performance of time-series models, like those used for predicting AQI[29]. This involves ensuring data consistency, addressing missing values, and appropriately scaling features. By following these steps, the model can better learn underlying patterns and generate more reliable predictions.

II-A Feature Selection

The AQI is calculated based on various pollutant concentrations [30], including carbon monoxide (CO), ammonia (NH3), nitric oxide (NO), nitrogen dioxide (NO2), nitrogen oxides (NOx), sulfur dioxide (SO2), particulate matter with diameters less than or equal to 2.5 micrometers (PM2.5) and 10 micrometers (PM10), and ozone (O3). These pollutants are critical as they collectively determine the overall air quality, providing a comprehensive representation of environmental health status. Accurate monitoring and analysis of these pollutants are essential for predicting air quality trends and understanding their effects on public health and the environment.

In this study, we focus on Bengaluru, a major city in India. The dataset[31], obtained from the Central Pollution Control Board (CPCB) of India, contains several missing values and noisy information. The Xylene feature was excluded from the dataset due to its high rate of missing data and potential to introduce bias. Consequently, a total of 11 features were selected for analysis.

The AQI is computed based on the maximum of the individual pollutant sub-indices, which are normalized concentration values. The AQI calculation is expressed as:

| (1) |

where represents the sub-index for pollutant , obtained by mapping the pollutant’s actual concentration to a standardized scale using established AQI breakpoints. This approach ensures that the AQI reflects the most critical pollutant concentration among the selected features, providing a comprehensive measure of air quality in Bengaluru.

II-B Outlier Detection and Removal

Outlier detection and removal are crucial steps in improving data quality by eliminating anomalous data points that can adversely affect model training. In this study, we employed the Z-score method to detect outliers, which measures how many standard deviations a data point is from the mean . A data point is considered an outlier if its absolute Z-score exceeds a predefined threshold :

| (2) |

where is the standard deviation of the dataset. We set the threshold , which corresponds to data points beyond three standard deviations from the mean, a common practice for outlier detection. By removing these outliers, we ensure a more consistent and reliable dataset for modeling.

II-C Handling Missing Values

Due to the extensive number of missing values in the dataset for Bengaluru city, appropriate imputation methods are necessary to maintain data integrity. In this research, we utilized linear interpolation to estimate missing values. Linear interpolation [32] estimates missing data points based on linear relationships between adjacent known data points, which is particularly effective for time-series data. This method minimizes abrupt changes introduced by interpolation, preserving the smoothness and continuity of the dataset.

The linear interpolation formula is expressed as:

| (3) |

where and are the time points of the known data preceding and succeeding the missing value at time , and and are the corresponding pollutant concentration values. The missing value is estimated based on the linear relationship between these points, as shown in (3).

We chose linear interpolation over other imputation methods, such as mean substitution or advanced techniques like spline interpolation, due to its simplicity and effectiveness in handling missing data in time-series without introducing significant bias or complexity.

III Methodology

III-A Long Short-Term Memory Networks

LSTM networks [5] are a specialized form of RNNs [4], designed to address the vanishing and exploding gradient problems commonly encountered in standard RNNs. The unique memory cell structure of LSTMs, comprising input, forget, and output gates, enables the effective retention and management of long-term dependencies in sequential data. LSTMs have been widely adopted in various domains, including natural language processing [33, 34, 35] and time series forecasting [36, 37, 38], due to their ability to capture both short- and long-term relationships within sequences. This capability significantly enhances model performance in sequence-related tasks, particularly in handling long-range dependencies.

In this study, we utilize LSTM networks for predicting the AQI, leveraging their proficiency in capturing complex temporal dependencies inherent in climate time-series data. The dynamic and nonlinear characteristics of air quality data, influenced by a multitude of atmospheric and anthropogenic factors, present significant challenges for traditional forecasting methods, which often struggle to provide accurate predictions. The gating mechanisms within LSTM networks offer an effective approach to modeling these complex temporal patterns, enabling the network to capture both seasonal trends and abrupt changes in air quality. This leads to substantial improvements in predictive performance. A schematic representation of a standard classical LSTM network architecture is illustrated in Fig. 1.

III-B Quantum Kernel-Based Long Short-Term Memory

To enhance the modeling capability of LSTM networks in capturing intricate nonlinear patterns within sequential climate data, we propose the QK-LSTM model. This model integrates quantum kernel operations within the classical LSTM framework, effectively embedding input data into high-dimensional quantum feature spaces.

As illustrated in Fig. 2, the fundamental unit of the proposed QK-LSTM architecture is the QK-LSTM cell. Each QK-LSTM cell modifies the standard LSTM cell by replacing the traditional linear transformations with quantum kernel evaluations. This integration leverages the expressive power of quantum feature spaces to model complex, non-linear relationships in the data, potentially leading to improved predictive performance in climate time-series forecasting tasks such as AQI prediction.

III-B1 Classical LSTM Equations

The standard LSTM cell comprises three gates—the forget gate , the input gate , and the output gate —and a cell state . The classical LSTM equations governing these components are:

| (4a) | ||||

| (4b) | ||||

| (4c) | ||||

| (4d) | ||||

| (4e) | ||||

| (4f) | ||||

where:

-

•

is the input vector at time ,

-

•

is the hidden state from the previous time step,

-

•

are weight matrices,

-

•

are bias vectors,

-

•

denotes the sigmoid activation function,

-

•

denotes the hyperbolic tangent activation function,

-

•

represents element-wise multiplication.

III-B2 Integration of Quantum Kernels into LSTM

In the QK-LSTM architecture[23], we replace the linear transformations in the gate computations with quantum kernel evaluations. This approach aims to exploit the high-dimensional representation capabilities of quantum feature spaces to capture complex relationships within the data.

We define the concatenated input vector:

| (5) |

where denotes vector concatenation.

We introduce a set of reference vectors , which can be a subset of training data or learned during training. The gate activations are computed using weighted sums of quantum kernel functions as follows:

| (6a) | ||||

| (6b) | ||||

| (6c) | ||||

| (6d) | ||||

| (6e) | ||||

| (6f) | ||||

where:

-

•

, , , and are trainable weights associated with the quantum kernels for each gate,

-

•

, , , and are quantum kernel functions specific to each gate,

-

•

, , , and are bias terms.

III-B3 Quantum Kernel Function

The quantum kernel function measures the similarity between two data points and in a quantum feature space induced by a quantum feature map . It is defined as:

| (7) |

where represents the quantum state corresponding to the classical input .

The quantum feature map is implemented via a parameterized quantum circuit that encodes the classical data into a quantum state:

| (8) |

with being the number of qubits.

Quantum Circuit Design

The quantum circuit comprises the following components:

-

1.

Initialization: All qubits are initialized to the state.

-

2.

Hadamard Transformation: Apply Hadamard gates to create a superposition:

(9) -

3.

Data Encoding: Encode classical data using parameterized rotation gates:

(10) where and are functions of the components of .

-

4.

Entanglement: Introduce entanglement using controlled-NOT (CNOT) gates:

(11) -

5.

Final State Preparation: The quantum state after encoding is:

(12)

Quantum Kernel Evaluation

The quantum kernel between and is evaluated as:

| (13) |

This computation involves preparing the quantum states corresponding to and , applying the inverse circuit followed by , and measuring the probability of the system being in the state. This procedure effectively computes the inner product between the quantum states and , capturing their similarity in the quantum feature space.

III-B4 Training and Optimization

The parameters of the QK-LSTM model include the weights , , , , biases , , , , and any trainable parameters within the quantum circuits used for the kernel computations.

Loss Function

For the regression task of AQI prediction, we define a suitable loss function, such as the Mean Squared Error (MSE):

| (14) |

where is the true AQI value at time , is the predicted AQI value, and is the total number of time steps.

Gradient Computation

The gradients of the loss with respect to the classical parameters and biases are computed using standard backpropagation through time (BPTT). For the quantum circuit parameters, we employ the parameter-shift rule [39], which allows for efficient computation of gradients in quantum circuits.

Parameter-Shift Rule

The gradient of the quantum kernel with respect to a circuit parameter is given by:

| (15) |

where represents the kernel evaluated with the parameter shifted by :

| (16) |

Optimization Algorithm

An optimization algorithm, such as stochastic gradient descent (SGD) or Adam, is employed to update the parameters:

| (17) | ||||

| (18) | ||||

| (19) |

where is the learning rate.

IV Result

IV-A Evaluation Method

To rigorously assess the performance of the proposed QK-LSTM model, we employ a suite of evaluation metrics that provide a comprehensive and quantitative analysis of the regression model’s accuracy and error characteristics. These metrics facilitate an objective comparison between the QK-LSTM and traditional LSTM models.

IV-A1 Root Mean Square Error (RMSE)

The Root Mean Square Error (RMSE) is a widely used metric that measures the average magnitude of the prediction errors. It is defined as:

| (20) |

where denotes the total number of observations, represents the actual AQI values, and denotes the predicted AQI values by the model. RMSE provides a measure of the model’s prediction accuracy, with lower values indicating superior performance.

IV-A2 Mean Absolute Error (MAE)

The Mean Absolute Error (MAE) quantifies the average absolute differences between the predicted and actual values. It is defined as:

| (21) |

MAE offers a straightforward interpretation of the average prediction error, with lower values indicating higher accuracy. Unlike RMSE, MAE is less sensitive to large deviations, providing a robust measure of model performance.

IV-A3 Mean Absolute Percentage Error (MAPE)

The Mean Absolute Percentage Error (MAPE) expresses the prediction error as a percentage of the actual values, enhancing interpretability. It is calculated as:

| (22) |

MAPE facilitates the understanding of the model’s error relative to the magnitude of the actual values, making it particularly useful for comparing performance across different scales. Lower MAPE values indicate better predictive performance.

IV-A4 Coefficient of Determination

The coefficient of determination () measures the proportion of variance in the dependent variable that is predictable from the independent variables. It is defined as:

| (23) |

where represents the mean of the actual AQI values. The value ranges from 0 to 1, with values closer to 1 indicating that a greater proportion of variance is explained by the model, thereby reflecting better performance.

IV-A5 Performance Benchmarking

| Metric | QK-LSTM | LSTM | Improvement |

|---|---|---|---|

| RMSE | 9.20 | 15.94 | -42.8% |

| MAE | 7.15 | 11.07 | -35.4% |

| MAPE | 9.14% | 13.32% | -31.9% |

| 0.84 | 0.78 | +7.1% |

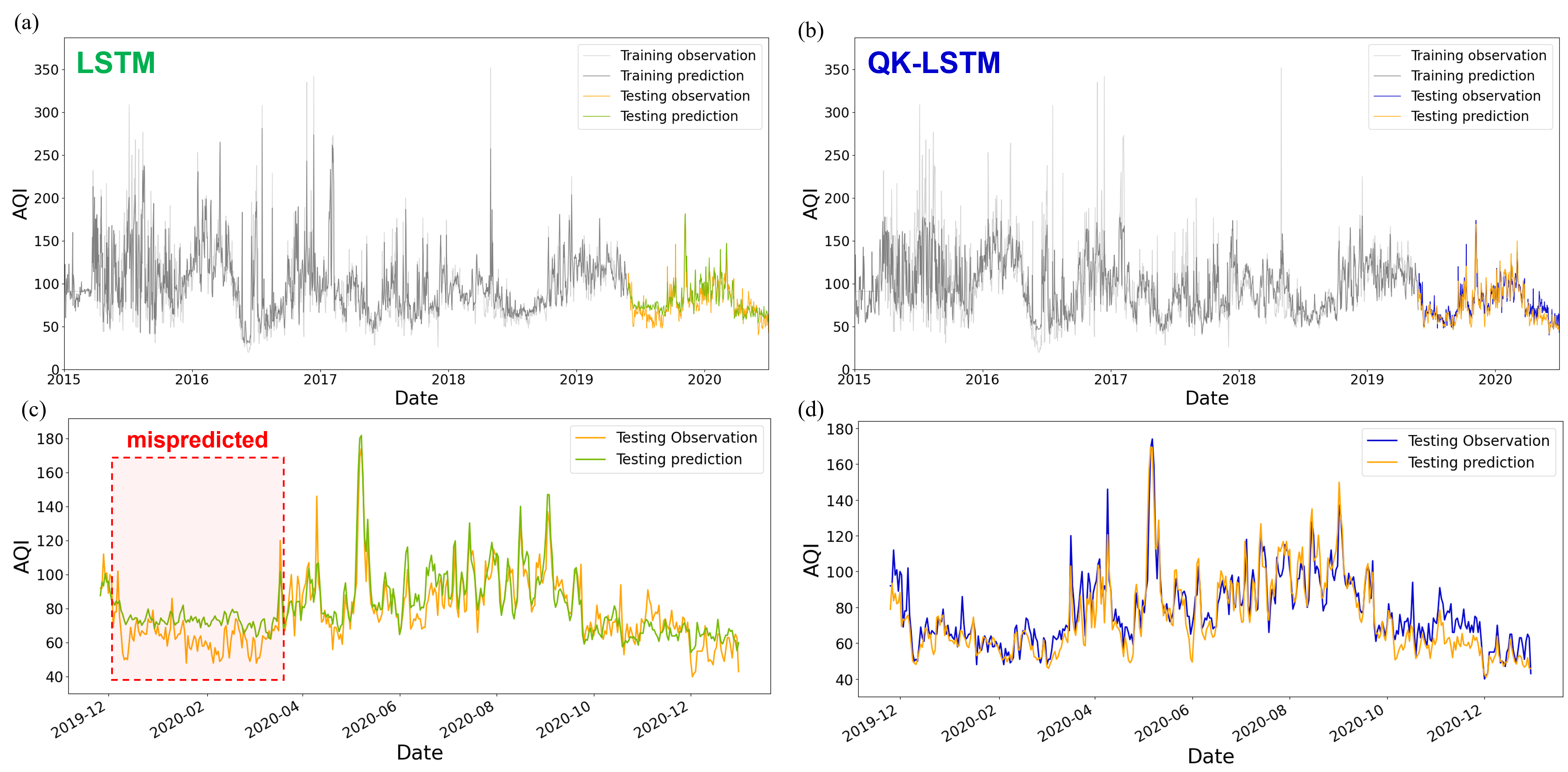

To provide a clear comparison between the QK-LSTM and the conventional LSTM models, we present the evaluation metrics in Table I. The results demonstrate the effectiveness of the QK-LSTM in improving prediction accuracy while maintaining computational efficiency.

In Table I, the QK-LSTM model demonstrates substantial reductions in RMSE, MAE, and MAPE compared to the traditional LSTM model, highlighting its enhanced predictive accuracy. Additionally, the value is slightly higher for the QK-LSTM, further reinforcing the model’s ability to capture intricate temporal dependencies and non-linear patterns within air quality data.

IV-B Model Compression

| Hyperparameter | QK-LSTM | LSTM |

|---|---|---|

| Epochs | 20 | 20 |

| Learning Rate | 0.001 | 0.001 |

| Number of hidden units | 16 | 16 |

| Batch size | 1 | 1 |

| Sequence Length | 3 | 3 |

| Number of Qubits in Quantum Kernel Circuit | 4 | – |

| Total Trainable Parameters | 209 | 1873 |

Table II presents a comparative analysis of the hyperparameters and total trainable parameters between the proposed QK-LSTM network and the traditional LSTM network. Both models were configured with identical settings, including 20 epochs, a learning rate of 0.001, 16 hidden units, a batch size of 1, and a sequence length of 3. The primary distinction lies in the integration of a quantum kernel circuit within the QK-LSTM, utilizing 4 qubits, whereas the conventional LSTM does not incorporate any quantum components. Notably, the QK-LSTM model requires only 209 trainable parameters compared to 1,873 parameters in the standard LSTM. This substantial reduction in the number of parameters highlights the efficiency of the QK-LSTM in capturing complex data representations through quantum-enhanced feature spaces. Fewer trainable parameters not only decrease the computational resource requirements and training time but also mitigate the risk of overfitting, thereby enhancing the model’s generalization capabilities. The incorporation of quantum circuits enables the QK-LSTM to model high-dimensional dependencies and non-linear patterns more effectively with a streamlined architecture. This efficiency makes the QK-LSTM particularly advantageous for deployment in resource-constrained environments and supports scalability for large-scale applications. Overall, the performance benchmarking underscores the potential of quantum-enhanced neural networks to achieve comparable or superior predictive performance with significantly reduced model complexity.

V Scalability and Practicality

The scalability and practicality of the QK-LSTM model are critical considerations for its application to large-scale, high-dimensional climate time-series forecasting tasks. The theoretical foundations of QK-LSTM lie in the integration of quantum kernel methods with classical LSTM architectures, combining the strengths of quantum computing in handling complex data structures with the temporal modeling capabilities of LSTMs.

The QK-LSTM model has been successfully applied to tasks such as Part-of-Speech (POS) tagging task [23], demonstrating that the model achieved competitive accuracy while significantly reducing the number of trainable parameters compared to classical LSTM models. The reduction in parameters is theoretically advantageous, as it decreases the computational complexity and mitigates the risk of overfitting. This efficiency stems from the quantum kernel’s ability to implicitly map input data into high-dimensional Hilbert spaces, allowing the model to capture intricate patterns without the need for extensive parameterization [40].

To further assess the scalability and applicability of QK-LSTM across domains, we extended its use to Air Quality Index (AQI) prediction—a task characterized by complex temporal dynamics and nonlinear dependencies. The experimental results indicated that QK-LSTM outperforms classical LSTM models in predictive accuracy while requiring significantly fewer trainable parameters. Theoretically, this performance gain is attributed to the quantum kernel’s capacity to efficiently compute inner products in high-dimensional feature spaces, effectively enhancing the model’s expressiveness [41]. This property is particularly beneficial for modeling climate time-series data, which often exhibit nonlinearity and high dimensionality due to the multitude of influencing factors.

The QK-LSTM model leverages a specially designed quantum circuit employing the block-encoding technique [42, 43], which facilitates efficient quantum kernel computations. Block-encoding allows for the representation of complex operators within a larger unitary matrix, enabling efficient implementation of quantum kernels that can handle large-scale data. The theoretical advantage of block-encoding lies in its ability to approximate functions of large matrices, such as kernel matrices, without explicitly constructing them, thus reducing computational overhead [44].

Moreover, the QK-LSTM is designed with the Noisy Intermediate-Scale Quantum (NISQ) era in mind. Recognizing the current limitations of quantum hardware, such as qubit coherence times and gate fidelities [17], the model allows for parts of the quantum kernel computations to be simulated on classical hardware, particularly GPUs [27]. This approach is theoretically supported by the correspondence between certain quantum computations and tensor network contractions, which can be efficiently executed on GPUs due to their parallel processing capabilities [45]. By simulating quantum kernels on classical hardware, the QK-LSTM effectively balances resource demands and mitigates the limitations of current quantum devices.

VI Conclusion

This study has demonstrated that integrating quantum kernel methods into LSTM networks significantly enhances climate time-series forecasting, as exemplified by Air Quality Index (AQI) prediction. The proposed QK-LSTM model leverages high-dimensional quantum feature spaces to capture complex nonlinear temporal dependencies inherent in climate data, resulting in improved predictive accuracy and reduced numbers of trainable parameters, thereby increasing computational efficiency. This approach aligns with advancements in quantum high-performance computing (HPC) and hybrid quantum algorithm frameworks, facilitating scalable implementations on emerging quantum hardware and classical co-processors. The successful application of QK-LSTM to AQI prediction suggests its broader applicability to other climate change and time-series analysis problems, such as temperature forecasting, precipitation prediction, flood modeling, and greenhouse gas emission analysis. By extending the QK-LSTM framework to these domains, researchers can effectively address the challenges posed by the intricate and nonlinear nature of climate data, ultimately contributing to more accurate and reliable climate modeling. This advancement supports enhanced decision-making processes related to environmental management and policy formulation, highlighting the potential of quantum-enhanced machine learning models within the context of quantum HPC and hybrid quantum computing paradigms for tackling large-scale, high-dimensional datasets.

Acknowledgment

This work was financially supported by the National Science and Technology Council (NSTC), Taiwan, under Grant NSTC 112-2119-M-007-008- and 113-2119-M-007-013- and EPSRC Distributed Quantum Computing and Applications project (grant number EP/W032643/1). The authors would like to thank the National Center for High-performance Computing of Taiwan for providing computational and storage resources.The authors also thank Dr. An-Cheng Yang and Dr. Chun-Yu Lin for their support with the hardware environment and for the valuable discussions.

References

- [1] P. M. Forster, H. I. Forster, M. J. Evans, M. J. Gidden, C. D. Jones, C. A. Keller, R. D. Lamboll, C. L. Quéré, J. Rogelj, D. Rosen, et al., “Current and future global climate impacts resulting from covid-19,” Nature Climate Change, vol. 10, no. 10, pp. 913–919, 2020.

- [2] I. Haddeland, J. Heinke, H. Biemans, S. Eisner, M. Flörke, N. Hanasaki, M. Konzmann, F. Ludwig, Y. Masaki, J. Schewe, et al., “Global water resources affected by human interventions and climate change,” Proceedings of the National Academy of Sciences, vol. 111, no. 9, pp. 3251–3256, 2014.

- [3] X. Ren, J. Li, F. He, and B. Lucey, “Impact of climate policy uncertainty on traditional energy and green markets: Evidence from time-varying granger tests,” Renewable and Sustainable Energy Reviews, vol. 173, p. 113058, 2023.

- [4] L. R. Medsker, L. Jain, et al., “Recurrent neural networks,” Design and Applications, vol. 5, no. 64-67, p. 2, 2001.

- [5] S. Hochreiter and J. Schmidhuber, “Long Short-Term Memory,” Neural Computation, vol. 9, pp. 1735–1780, 11 1997.

- [6] Y. Yu, X. Si, C. Hu, and J. Zhang, “A review of recurrent neural networks: Lstm cells and network architectures,” Neural computation, vol. 31, no. 7, pp. 1235–1270, 2019.

- [7] H.-Y. Huang, M. Broughton, M. Mohseni, R. Babbush, S. Boixo, H. Neven, and J. R. McClean, “Power of data in quantum machine learning,” Nature communications, vol. 12, no. 1, p. 2631, 2021.

- [8] E. Peters, J. Caldeira, A. Ho, S. Leichenauer, M. Mohseni, H. Neven, P. Spentzouris, D. Strain, and G. N. Perdue, “Machine learning of high dimensional data on a noisy quantum processor,” npj Quantum Information, vol. 7, no. 1, p. 161, 2021.

- [9] J. Biamonte, P. Wittek, N. Pancotti, P. Rebentrost, N. Wiebe, and S. Lloyd, “Quantum machine learning,” Nature, vol. 549, no. 7671, pp. 195–202, 2017.

- [10] S. Yu, Z. Jia, A. Zhang, E. Mer, Z. Li, V. Crescimanna, K.-C. Chen, R. B. Patel, I. A. Walmsley, and D. Kaszlikowski, “Shedding light on the future: Exploring quantum neural networks through optics,” Advanced Quantum Technologies, p. 2400074, 2024.

- [11] K.-C. Chen, Y.-T. Li, T.-Y. Li, and C.-Y. Liu, “Compressedmediq: Hybrid quantum machine learning pipeline for high-dimentional neuroimaging data,” arXiv preprint arXiv:2409.08584, 2024.

- [12] K. T. M. Ho, K.-C. Chen, L. Lee, F. Burt, S. Yu, et al., “Quantum computing for climate resilience and sustainability challenges,” arXiv preprint arXiv:2407.16296, 2024.

- [13] A. Nammouchi, A. Kassler, and A. Theocharis, “Quantum machine learning in climate change and sustainability: A short review,” Quantum, vol. 1, p. 1, 2023.

- [14] S. Y.-C. Chen, S. Yoo, and Y.-L. L. Fang, “Quantum long short-term memory,” in ICASSP 2022-2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 8622–8626, IEEE, 2022.

- [15] C.-H. A. Lin, C.-Y. Liu, and K.-C. Chen, “Quantum-train long short-term memory: Application on flood prediction problem,” arXiv preprint arXiv:2407.08617, 2024.

- [16] S. Y.-C. Chen, C.-H. H. Yang, J. Qi, P.-Y. Chen, X. Ma, and H.-S. Goan, “Variational quantum circuits for deep reinforcement learning,” IEEE access, vol. 8, pp. 141007–141024, 2020.

- [17] J. Preskill, “Quantum computing in the nisq era and beyond,” Quantum, vol. 2, p. 79, 2018.

- [18] C. Blank, D. K. Park, J.-K. K. Rhee, and F. Petruccione, “Quantum classifier with tailored quantum kernel,” npj Quantum Information, vol. 6, no. 1, p. 41, 2020.

- [19] P. Rebentrost, M. Mohseni, and S. Lloyd, “Quantum support vector machine for big data classification,” Physical review letters, vol. 113, no. 13, p. 130503, 2014.

- [20] Z. Li, X. Liu, N. Xu, and J. Du, “Experimental realization of a quantum support vector machine,” Physical review letters, vol. 114, no. 14, p. 140504, 2015.

- [21] D. Maheshwari, D. Sierra-Sosa, and B. Garcia-Zapirain, “Variational quantum classifier for binary classification: Real vs synthetic dataset,” IEEE access, vol. 10, pp. 3705–3715, 2021.

- [22] G. Gentinetta, A. Thomsen, D. Sutter, and S. Woerner, “The complexity of quantum support vector machines,” Quantum, vol. 8, p. 1225, 2024.

- [23] Y.-C. Hsu, T.-Y. Li, and K.-C. Chen, “Quantum kernel-based long short-term memory,” arXiv preprint arXiv:2411.13225, 2024.

- [24] L. Ma and L. Ding, “Hybrid quantum edge computing network,” in Quantum Communications and Quantum Imaging XX, vol. 12238, pp. 83–93, SPIE, 2022.

- [25] M. Fellous-Asiani, J. H. Chai, R. S. Whitney, A. Auffèves, and H. K. Ng, “Limitations in quantum computing from resource constraints,” PRX Quantum, vol. 2, no. 4, p. 040335, 2021.

- [26] K.-C. Chen, W. Ma, and X. Xu, “Consensus-based distributed quantum kernel learning for speech recognition,” arXiv preprint arXiv:2409.05770, 2024.

- [27] K.-C. Chen, T.-Y. Li, Y.-Y. Wang, S. See, C.-C. Wang, R. Willie, N.-Y. Chen, A.-C. Yang, and C.-Y. Lin, “cutn-qsvm: cutensornet-accelerated quantum support vector machine with cuquantum sdk,” arXiv preprint arXiv:2405.02630, 2024.

- [28] F. Burt, K.-C. Chen, and K. Leung, “Generalised circuit partitioning for distributed quantum computing,” arXiv preprint arXiv:2408.01424, 2024.

- [29] G. K. Kang, J. Z. Gao, S. Chiao, S. Lu, and G. Xie, “Air quality prediction: Big data and machine learning approaches,” Int. J. Environ. Sci. Dev, vol. 9, no. 1, pp. 8–16, 2018.

- [30] W.-L. Cheng, Y.-S. Chen, J. Zhang, T. Lyons, J.-L. Pai, and S.-H. Chang, “Comparison of the revised air quality index with the psi and aqi indices,” Science of the Total Environment, vol. 382, no. 2-3, pp. 191–198, 2007.

- [31] Central Pollution Control Board, “Air quality index (aqi) data.” https://cpcb.nic.in/, 2020. Accessed: 2020-11-04.

- [32] D. W. Wong, L. Yuan, and S. A. Perlin, “Comparison of spatial interpolation methods for the estimation of air quality data,” Journal of Exposure Science & Environmental Epidemiology, vol. 14, no. 5, pp. 404–415, 2004.

- [33] Q. Chen, X. Zhu, Z. Ling, S. Wei, H. Jiang, and D. Inkpen, “Enhanced lstm for natural language inference,” arXiv preprint arXiv:1609.06038, 2016.

- [34] L. Yao and Y. Guan, “An improved lstm structure for natural language processing,” in 2018 IEEE international conference of safety produce informatization (IICSPI), pp. 565–569, IEEE, 2018.

- [35] B. Alkin, M. Beck, K. Pöppel, S. Hochreiter, and J. Brandstetter, “Vision-lstm: xlstm as generic vision backbone,” arXiv preprint arXiv:2406.04303, 2024.

- [36] H. C. Kilinc, S. Apak, F. Ozkan, M. E. Ergin, and A. Yurtsever, “Multimodal fusion of optimized gru–lstm with self-attention layer for hydrological time series forecasting,” Water Resources Management, pp. 1–18, 2024.

- [37] K. Zhou, S.-K. Oh, W. Pedrycz, J. Qiu, and K. Seo, “A self-organizing deep network architecture designed based on lstm network via elitism-driven roulette-wheel selection for time-series forecasting,” Knowledge-Based Systems, vol. 289, p. 111481, 2024.

- [38] B. Gülmez, “Stock price prediction with optimized deep lstm network with artificial rabbits optimization algorithm,” Expert Systems with Applications, vol. 227, p. 120346, 2023.

- [39] V. Bergholm, J. Izaac, M. Schuld, C. Gogolin, S. Ahmed, V. Ajith, M. Alam, G. Alonso-Linaje, B. AkashNarayanan, A. Asadi, et al., “Pennylane: Automatic differentiation of hybrid quantum-classical computations. arxiv 2018,” arXiv preprint arXiv:1811.04968, 2018.

- [40] M. Schuld and N. Killoran, “Quantum machine learning in feature hilbert spaces,” Physical review letters, vol. 122, no. 4, p. 040504, 2019.

- [41] V. Havlíček, A. D. Córcoles, K. Temme, A. W. Harrow, A. Kandala, J. M. Chow, and J. M. Gambetta, “Supervised learning with quantum-enhanced feature spaces,” Nature, vol. 567, no. 7747, pp. 209–212, 2019.

- [42] J. Martyn, G. Vidal, C. Roberts, and S. Leichenauer, “Entanglement and tensor networks for supervised image classification,” arXiv preprint arXiv:2007.06082, 2020.

- [43] T. Suzuki, T. Hasebe, and T. Miyazaki, “Quantum support vector machines for classification and regression on a trapped-ion quantum computer,” Quantum Machine Intelligence, vol. 6, no. 1, p. 31, 2024.

- [44] A. Gilyén, Y. Su, G. H. Low, and N. Wiebe, “Quantum singular value transformation and beyond: exponential improvements for quantum matrix arithmetics,” in Proceedings of the 51st Annual ACM SIGACT Symposium on Theory of Computing, pp. 193–204, 2019.

- [45] I. L. Markov and Y. Shi, “Simulating quantum computation by contracting tensor networks,” SIAM Journal on Computing, vol. 38, no. 3, pp. 963–981, 2008.