Quantum Federated Learning with Entanglement Controlled Circuits and Superposition Coding

Abstract

While witnessing the noisy intermediate-scale quantum (NISQ) era and beyond, quantum federated learning (QFL) has recently become an emerging field of study. In QFL, each quantum computer or device locally trains its quantum neural network (QNN) with trainable gates, and communicates only these gate parameters over classical channels, without costly quantum communications. Towards enabling QFL under various channel conditions, in this article we develop a depth-controllable architecture of entangled slimmable quantum neural networks (eSQNNs), and propose an entangled slimmable QFL (eSQFL) that communicates the superposition-coded parameters of eSQNNs. Compared to the existing depth-fixed QNNs, training the depth-controllable eSQNN architecture is more challenging due to high entanglement entropy and inter-depth interference, which are mitigated by introducing entanglement controlled universal (CU) gates and an inplace fidelity distillation (IPFD) regularizer penalizing inter-depth quantum state differences, respectively. Furthermore, we optimize the superposition coding power allocation by deriving and minimizing the convergence bound of eSQFL. In an image classification task, extensive simulations corroborate the effectiveness of eSQFL in terms of prediction accuracy, fidelity, and entropy compared to Vanilla QFL as well as under different channel conditions and various data distributions.

Index Terms:

Quantum Machine Learning, Quantum Entanglement, Quantum Federated Learning, Superposition CodingI Introduction

I-A Background and Motivation

Recent advances in quantum computing hardware and algorithms have recently lead to the emergence of quantum machine learning (ML) [2, 3, 4]. As opposed to classical computation at a linear scale in bits, quantum computing can perform calculations at an exponential scale in qubits [5]. The main enablers are the stochastic nature and the entanglement phenomenon of qubits, allowing one to make each qubit represent superimposed multiple states and to simultaneously control multiple qubits, respectively. Consequently, even in the current era of noisy intermediate scale quantum (NISQ) [6], i.e., with 50 to a few hundred qubits, quantum ML has achieved linear or sublinear complexity in various applications, as compared with the polynomial complexity of classical ML [7].

Quantum ML has recently established its standard framework. As analogous to the neural network (NN) of classical ML, the parameterized quantum circuit (PQC), also known as the quantum NN (QNN), has become a de facto standard quantum ML architecture [7, 8]. In a PQC, qubits flow through the gates associated with trainable classical parameters, during which the states of the qubits can be adjusted. For various applications ranging from image classification [9] to reinforcement learning [10], with a much smaller number of trainable parameters, PQC training has achieved the prediction accuracy on par with the neural network (NN) of classical ML.

Focusing on the parameter efficiency of PQCs, by integrating federated learning (FL) [11, 12, 13, 14] into standalone quantum ML, quantum FL (QFL) has recently attracted attention [15, 16]. Without communicating qubits via costly quantum communications, QFL enables distributed quantum ML at scale by communicating the PQC’s trainable parameters via classical communications, even over wireless channels [17]. This is not in the distant future, but is an upcoming application, especially considering the ever-increasing pace of innovation in quantum computers, e.g., IBM’s development roadmap planning to implement a 1K-qubit beyond-NISQ computer in 2023 [18] and a 100K-qubit computer in 2026 [19].

I-B Algorithm Design Concept

Motivated by this trend in QFL, the overarching goal of this article is to develop a communication-efficient QFL framework that can cope with heterogeneous and time-varying channel conditions and computing resources. To this end, we first revisit slimmable FL (SFL) in classical ML [1], wherein each device has a width-controllable local model, known as a slimmable NN (SNN) [20, 21], and communicates its superposition-coded local model with different width levels, enabling multi-level local information exchanges depending on channel conditions. Inspired from this, as visualized in Fig. 1, we propose an entangled slimmable quantum FL (eSQFL) framework with entangled slimmable QNNs (eSQNNs), which is a non-trivial extension from SFL with SNNs to their quantum versions as summarized later.

Unlike multi-width SNNs, the eSQNN is a multi-depth PQC wherein more depth levels incur higher von Neumann entanglement entropy on average. Unfortunately, the PQC trainability is often challenged by the problem of vanishing all gradients, known as the barren plateaus [22], which is exacerbated under higher entanglement entropy [23]. Meanwhile, too low entanglement may negate the benefit of quantum ML. To resolve this issue for an unknown target degree of entanglement, our proposed eSQNN entangles different qubits using the controlled universal (CU) quantum gates [24] such that the degree of entanglement is trainable.

Next, simultaneous local training of the multiple eSQNN depths may induce inter-depth interference, hindering convergence. In classical ML, SFL avoids its similar inter-width interference issue by adding the inplace knowledge distillation (IPKD) regularizer that penalizes the output difference from any smaller width to the largest width level [20]. Since the IPKD uses the Kullback-Leibler (KL) divergence, it becomes less accurate (or even diverging) for the larger differences. Alternatively, leveraging the Uhlmann’s fidelity function in quantum information theory [25], we propose a novel inplace fidelity distillation (IPFD) regularizer that is bounded within 0 and 1 while accurately measuring the quantum state difference even between the smallest and the largest levels.

Finally, for communication efficiency, the eSQNN parameters in multiple depths are superposition-coded and transmitted with a different transmit power allocation to each depth. Like SFL, the transmit power is optimized by deriving and minimizing the convergence bound of the eSQFL. Nevertheless, the convergence analysis is completely different since the gradient in PQC training is measured in a quantum computing way using the parameter shift rule [26].

Not only by analysis but also by extensive simulations, we corroborate that the proposed eSQFL with eSQNNs achieves convergence while each different width level can be trained to be a separate model with reasonable accuracy and fidelity, under various channel conditions as well as independent and identically distributed (IID) or non-IID data distributions. Note that unlike eSQFL, Vanilla quantum FL having fixed local PQC architectures cannot cope with different channel conditions [15, 27]. A recent work [28] also considers a slimmable architecture in the context of QFL. However, it does not theoretically guarantee convergence, and its specific architecture (i.e., angle/pole parameters) only allows two-level superposition coding, as opposed to generalized multi-level architectures using CU gates in eSQFL.

I-C Contributions

The major contributions of the work in this paper can be summarized as follows.

-

•

A multi-depth QNN architecture with CU gates, i.e., eSQNN, is proposed to enable superposition-coded transmissions while avoiding barren plateaus. We measure von Neumann entropy between inter-quantum states of different depths. Indeed, CU gates increase the trainability when designing multi-depth QNN.

-

•

A local eSQNN training algorithm with a fidelity-inspired regularizer, i.e., IPFD, is proposed in order to mitigate inter-depth interference. In eSQNN training, the proposed IPFD in this paper shows the crucial role.

-

•

With eSQNNs and IPFD, a novel quantum FL framework, i.e., eSQFL, is proposed, and its convergence bound is theoretically derived.

-

•

Based on the derived convergence bound, transmit power allocation in superposition coding is optimized. In addition, we corroborate that the derived convergence bound helps eSQFL achieve high accuracy.

I-D Organization

The rest of this paper is organized as follows. Sec. II presents the related work to the proposed quantum federated learning. Sec. III introduces the eSQNN architecture and its local training with IPFD regularizer. Sec. IV describes superposition coding, successive decoding, and the proposed eSQFL framework. Sec. V provides the convergence analysis on eSQFL and its insight. Sec. VI presents the numerical experimental results to corroborate eSQFL empirically. Lastly, Sec. VII concludes this paper. Notice that the notations in this paper are in Tab. III.

II Related Work

II-A Quantum Machine Learning Basics

Basic Quantum Gates. A qubit is a quantum computing unit where the quantum state is represented with two bases , and in Bloch sphere [29]. Consider the qubits system, in which the quantum state defined in Hilbert space can be expressed as follows,

| (1) |

where . A classical data is encoded as a quantum state with the rotation gates , , and , where the rotation of occurs in the direction of -, -, and -axes in Bloch sphere, respectively. Moreover, qubits are entangled with controlled-NOT gates (CNOT) [30]. CNOT gates act on two qubits to entangle them by using the first qubit as the control qubit and performing XOR operation on the second qubit. These basic quantum gates configure the QNNs.

Quantum Neural Network. The structure of a QNN is tripartite: the state encoder, PQC, and the measurement layer [31, 32]. In the forward propagation, classical input data needs to be first encoded with the state encoder via basic rotation gates, which is a unitary operation and denoted as . Then, the encoded quantum state is processed through the PQC , a multi-layered set of CNOT gates and rotation gates associated with trainable parameters . The quantum state can be expressed as,

| (2) |

The output of the PQC is the entangled quantum state that can be measured after applying a projection matrix onto the reference -axis. The measured output is called an observable, where denotes the output dimension. The operation of QNN corresponding to -th observable is as follows,

| (3) |

where denotes the complex conjugate operator. Using the observable, a given loss function is calculated. Unlike classical NNs having visible activations in their hidden layers, the quantum states within QNNs are not measurable; otherwise, the quantum states collapse [29]. This does not allows quantum ML to compute the loss gradients via the chain rule, i.e., backpropagations. Alternatively, quantum ML evaluates the gradients using the zero-th order method called the parameter shift rule [26] (see Appendix -A).

II-B Classical Federated Learning

Federated learning (FL) is a machine learning (ML) architecture made up of a server, local devices, and a global model [12]. The server transmits the global model to all the local devices, and each device produces local parameters by training the received global model. Then, these parameters are sent back to the central server, where all the data is aggregated to update the global model. Finally, the updated global model is transmitted to the local devices again for another iteration. Due to this mechanism, FL allows a large number of devices to learn a global model simultaneously without transmitting any data, ensuring data privacy as well. Considering the recent increase in the number and computational power of edge devices, FL is an extremely useful tool for reducing computational overhead and protecting data security which is both emerging challenges in the field of ML [33]. Within this architecture, various techniques with differing methods of aggregating data and training the global model exist, e.g., FedAvg [34], FedBN [35].

The convergence analysis of FL algorithms is especially challenging because of the data heterogeneity in FL, which forces researchers to rely on copious numbers of assumptions. Consequently, gaps in the understanding of FL analysis occur. Over the years, many major works have attempted to better understand FL by removing assumptions and exploring new techniques [36, 37, 34, 38, 39]. Even now, research on FL convergence in various aspects is still being carried out (i.e., non-convex, convergence bounds). For example, [40] has successfully proposed an analysis of local stochastic gradient descent (SGD) using only arbitrarily heterogeneous data while also using weaker assumptions than previous works. On the other hand, convergence analysis of most QFL algorithms has not been fully developed yet. This paper aims to further discuss the convergence analysis of QFL via the analysis of eSQFL with the characteristic of quantum computing. The convergence analysis on a dynamic QFL is elaborated in Sec. IV.

II-C Classical Slimmable Federated Learning

SFL is a framework that executes FL by using slimmable neural networks (SNNs) with SC and SD [1]. The architectural properties of SNN reduce memory costs of SFL [20] while with rigorous communication and computational efficiencies. SC is a process of compressing two different data signals into one signal. As the signals are encoded, different power levels are assigned to each data signal which is used to decide the priority of signals during SD. Additionally, the SNN is composed of the left-hand (LH) and the right-hand (RH) sides. The LH side is occupied by the high priority signal, while the lower priority signal goes to the RH side. After SC is finished, the encoded message will be uploaded to the server, which then undergoes SD. Assuming that the state of the communication channel is good, the LH of the SNN will be decoded first, followed by the RH signal. However, if the communication channel is not stable enough, only LH will be decoded, resulting in a small model. Finally, if the communication channel is completely unstable, no signal will be obtained. This flexible characteristic of SNN allows SFL to be extremely adaptable to dynamic communication environments, making it suitable for practical applications.

II-D Quantum Federated Learning

In this section, the concept of QFL is elaborated in depth. QFL is implementing FL via quantum computation by replacing all the NNs with QNNs. Chen et al., [15] is the first to propose a hybrid quantum-classical QFL architecture where the local devices are replaced with quantum devices, unlike FL models. After receiving global model parameters, the quantum computers carry out QML using QNNs. Then, the output of each device is aggregated to update the global model before repeating the process. In Chehimi et al., [27], a purely QFL framework is proposed. Similar to [15], this model is composed of a server and multiple quantum devices. However, instead of converting classic data into quantum states, the local devices generate quantum data by labeling qubits as excited or not excited according to the degree of rotation on the Bloch sphere. Both [15, 27] use FedAvg to aggregate data and execute training. As seen from the two examples above, a QFL and FL share an identical system structure, but QFL leverages QML instead of ML in order to exploit the advantages of quantum computing. For this work, Vanilla QFL is referring to a purely quantum version of [15]. In addition, quantum application of SFL is studied to improve the communication opportunities [28]. SQFL utilized trainable measurement parameters to configure two messages which contain both the trainable measurement parameters and PQC parameters, respectively. However, in this work, multiple layer architectures and local training algorithms are proposed, which are not present in [28].

III Architecture and Training of eSQNNs

In this section, we describe the architecture of eSQNN and its local training algorithm. To elaborate on this, by slightly modifying the depth-fixed architecture of Vanilla QNN [7], we first prepare its depth-controllable counterpart without controlling the level of entanglement, dubbed Vanilla SQNN, followed by introducing the proposed eSQNN controlling both the depth and the level of entanglement.

Architecture of Vanilla SQNN. Suppose that Vanilla SQNN consists of layers, and produces the desired outputs at any layer . In this paper, the number of sub-models must be larger than 1 (i.e., ). When the -th sub-model is used, it means that the -th model will be configured from the encoding layer to the -th layer. For an arbitrary and , the model parameters of -th local device and the -th layer is denoted as . Note that is a binary mask which eliminates all trainable parameters except parameters of the -th layer. The operation denotes an element-wise product. However, it is difficult to make desirable results at any random layer because the vanilla SQNN is vulnerable to the barren plateau problem [22, 41]. The barren plateau is a bad local optimum which hinders convergence. It is known that more entanglement’s degree introduces worse the barren plateau problem [23]. The operations in Vanilla SQNN are as follows: 1) rotate quantum state with rotation gates, 2) entangle qubits, and 3) repeat the first and second steps. We predict that the operations mentioned above will increase the degree of entanglement.

Architecture of eSQNN. eSQNN is proposed to cope with the problem of Vanilla SQNN architecture. Fig. 2 shows the illustration of eSQNN. eSQNN is mainly composed of CU gates. The operations of the CU gate in two qubits are written as , where is expressed as . Note that is an unitary matrix, i.e., . We focus on the architectural advantage of CU gates because CU gates can adjust the direction of entanglement, disentanglement, or rotation while training. We describe the advantages of eSQNN and the barren plateau phenomenon next.

To this end, at first we consider the von Neumann entanglement entropy, a metric for measuring the degree of quantum entanglement of bipartite subsystems in an entire system [42]. For instance, consider two subsystems, e.g., and -th model configuration, where . According to a two-copy test from [43], we can compare the different quantum states and by using additional qubits. Then, we can measure the entanglement entropy by following the statement below. Suppose a quantum state that exists in and -th depth is represented as . Its pure state is obtained by . Finally, the entanglement entropy is calculated as follows,

| (4) |

where stands for partial trace over the -th layer. As discussed in many studies, avoiding the barren plateaus requires the reduction of the entanglement entropy [23].

On the basis of these studies, we assume that there exists an entropy threshold for every -th model, i.e., for all and . It starts at , because we measure the entanglement entropy from the encoding state, i.e., . If , the barren plateau becomes severe and training of -th model fails. For this, we observe the entanglement entropy between the encoding state and the layer of eSQNN. In order to ensure all model configurations are trained, we define a metric as,

| (5) |

where stands for an indicator function. In order to verify whether the metric works correctly, we provide the following two cases. If all model configurations are satisfied , then , which means it avoids the barren plateau. On the other hand, suppose that that satisfies , we have which it means it suffers from barren plateau. We conjecture that eSQNN is robust to the barren plateau than Vanilla SQNN because the event frequently occurs in eSQNN. More details are in Sec. VI-B.

eSQNN Local Training. This section presents the eSQNN local training algorithm. In general, classic SNNs use the IPKD regularizer to transfer knowledge from a large model to a small model [20], which can be expressed as,

| (6) |

where is the KL divergence. IPKD is ill-suited when the difference between the outputs of two models becomes large, where the KL divergence may even diverge. Alternatively, we propose the IPFD regularizer , inspired by the Uhlmann’s fidelity function [44] in quantum information theory, measuring the similarity between two quantum states [44]. Precisely, the fidelity of the quantum states in the -th and -th model configurations is defined as follows,

| (7) |

In (7), if , is similar to , which means the logits of -th model are almost same as the logits of -th model. On the other hand, the opposite condition means the -th model does not follow the -th model.

Consequently, in a classification task, the local training of an eSQNN with the IPFD regularizer is described as follows. The parameters are denoted as data and label, respectively. The predicted label is an one-hot encoded vector wherein the element becomes unity for a true label and otherwise , i.e., . Hereafter, we describe the local training for the parameters of local device in the -th communication round and -th local training iteration. The logits of class and its prediction of -th model are denoted as,

| (8) | ||||

| (9) |

where represents the observable hyperparameter. Additionally, the cross-entropy loss and the fidelity regularization are as,

| (10) | ||||

| (11) |

The loss function is given as,

| (12) |

where and stand for the batch size and the balanced parameter of fidelity regularization, respectively. The gradient of (12) can be calculated with parameter shift rule [26]. Algorithm 1 summarizes the local training process before one communication round. After training with Algorithm 1, the gradient to be transmitted to the server can be as,

| (13) |

where denotes the learning rate at communication round .

IV Entangled Slimmable Quantum Federated Learning

IV-A Superposition Coding & Successive Decoding

The successful reception of a wireless signal is mainly affected by the signal-to-interference-plus-noise ratio (SINR) [45]. At a receiver, SINR can be expressed as,

| (14) |

where , , , and denote the transmission interference, reception interference, a transmitter-receiver distance, and noise powers, respectively. In addition, is a path loss exponent and is small-scale fading parameter (i.e., Rayleigh fading). Following the Shannon’s capacity formula with a Gaussian codebook, the received throughput with the bandwidth is (bits/sec). When the transmitter encodes raw data with a code rate , its receiver successfully decodes the encoded data if . Finally, the decoding success probability can be given as follows,

| (15) |

where . Consider transmitting messages from a transmitter to a receiver simultaneously. Before transmission, these messages are SC-encoded [46], and the whole transmission power budget is allotted to the -th message, with transmission power for . Note that is an allocation variable such that , , and .

The SC-encoded message is meant to be sequentially decoded at the receiver by first decoding the strongest signal, then canceling out the decoded signal, and finally decoding the next strongest signal, i.e., SD, also known as successive interference cancellation [47, 48]. The small-scale fading parameter under Rayleigh fading follows an exponential distribution, i.e., . Assuming , the receiver may gradually decode the -th message while experiencing the remaining messages as interference , i.e.,

| (16) |

for . However, as there is no interference for the last message. Assume that represents the throughput of the -th message. Then, the distribution of is given as,

| (17) |

where denotes the averaged signal-to-noise ratio (SNR). By using this result, the -th message’s decoding success probability can be expressed as follows,

| (18) | ||||

| (19) |

IV-B eSQFL Operations

This section describes the operations of eSQFL. Algorithm 2 shows the eSQFL algorithm. First of all, local devices are trained with Algorithm 1. The power allocation is conducted to configure SC-encoded model parameters, i.e., for the gradient of the subdivided model configuration. After that, the local devices transmit their SC-encoded model parameters to the server. The server decodes the devices’ SC-encoded model parameters with SD. If the server receives at least one local gradient for every model configuration, the server aggregates; otherwise, no aggregation occurs. In the aggregation of sub-divided model configuration, FedAvg is utilized [34]. The updates of eSQFL will be explained later.

V Convergence Analysis

V-A Setup

In order to analyze the convergence rate of eSQFL, the following assumptions are considered. Firstly, the local-side decoding is always successful (Algorithm 2, lines 12–13) because the server-side transmission power is higher than the uplink power. Secondly, is assumed to be big enough such that , for all . During the -th communication round, the server builds the global model which can be expressed as follows,

| (20) |

The objective function of the global model and the local objective functions are denoted as and respectively. The bar notation is used for the averaged value over , and the superscript ∗ is used to indicate the optimum. For mathematical amenability, we consider the following assumptions on and , as used in [49].

Assumption 1 (-Smoothness).

If and are -smooth,

| (21) |

for all .

Assumption 2 (-Strong Convexity).

If and are -strong convex,

| (22) |

for all .

Assumption 3 (Bounded Local Gradient Variance).

For all device and its local data , the difference between the local gradient and is bounded, i.e.,

| (23) |

According to [40], the metric for the non-IIDness of is given as follows,

| (24) |

V-B Convergence Analysis

In classical ML, the convergence of FedAvg has been analyzed by assuming bounded local gradients in [49]. Without such an unrealistic assumption, the convergence bound of SFL has been derived in [1]. In quantum ML, local gradients can be shown to be inherently bounded thanks to the bounded fidelity and the parameter shift rule computing quantum gradients [26]. Hence, rather than adopting the methods in [1], we first derive the local gradient bound, and then derive the convergence bound of eSQFL by following the steps [49]. The detailed proofs are deferred to Appendix, and only the results are presented as elaborated next.

Lemma 1 (Bounded Local Gradient).

For and , it follows that

| (25) |

Lemma 2 (Bounded Global Gradient).

For , the global gradient has bound as,

| (26) |

Lemma 3 (Bounded Global Gradient Variance).

Under Assumption 3, the variance of the global gradient is bounded within , which is given as,

| (27) |

Note that Lemmas 2 and 3 are different, in the sense that Lemma 2 focuses on the actual gradient, whereas Lemma 3 is related to data distributions. The convergence analysis utilizes Lemmas 1–3 and eSQFL convergence can be proven by [49].

Theorem 1 (eSQFL Convergence).

Hence, .

Theorem 1 exhibits several insights of eSQFL as follows.

-

1.

Failure under extremely poor channels: Consider an extremely poor channel condition, where the server cannot receive -th model configurations, i.e., . In this case, the RHS of (28) diverges.

-

2.

Importance of successful reception: The optimal gap of eSQFL becomes smaller by increasing the communication opportunities. Consider a perfect channel condition, where the RHS of (28) is minimized. By optimizing the SC transmission, the optimality gap is reduced which is referred to Proposition 1 and Corollary 1.

-

3.

Other important metrics: The optimality gap is affected by the local iterations per communication round , balance factor , and the number of layers .

Proposition 1 (Optimal SC Power Allocation).

The transmission power allocation minimizing the optimality gap is given as,

| (31) |

where , for , and .

Proof.

Substituting the term into Theorem 1, the optimality gap is minimized by optimizing the power allocation. ∎

Corollary 1 (Low SNR, ).

For , , and , the optimal power allocation is as,

| (32) |

Proof.

Since for , the RHS of (31) becomes , which is piece-wise convex. Applying the first-order necessary condition (FONC) with respect to completes the proof. ∎

| Description | Value |

| Number of devices () | 10 |

| Local iterations per communication round () | 10 |

| Epoch () | 100 |

| Optimizer | SGD |

| Learning rate (), | |

| Decaying rate | |

| Observable hyperparameter () | |

| Number of qubits | |

| Number of parameters in eSQFL & Vanilla QFL | |

| Number of data per device | |

| Batch size () |

VI Experiments

VI-A Experimental Design

To corroborate the main analysis and hypothesis of this paper, the experiments are designed as follows:

- •

-

•

We investigate the advantage of CU gates that compose eSQNN by designing an experiment which measures entanglement entropy and top-1 accuracy of eSQNN and standard QNNs under the same conditions. Then, the two metrics are compared to demonstrate the advantage of CU gates.

-

•

The increased effectiveness of local training with IPFD compared to IPKD is proven. IPFD trains the local models by regularizing the fidelity of two quantum states. In contrast, IPKD trains local models by ensuring that the small model follows the large model via its prediction. The benchmark scheme comparing the fidelity and top-1 accuracy of IPFD and IPKD is designed.

-

•

According to Proposition 1 and Corollary 1, the convergence bound is minimized by optimal transmission power allocation. To corroborate this, we compare the optimal power allocation scheme to its random power allocation counterpart.

-

•

Finally, we conduct experiments by controlling various variables and assess their various impact on the performance.

|

|

|

| (a) . | (b) . | (c) . |

For the experiment, eSQFL and Vanilla QFL are evaluated. eSQFL is the proposed model which leverages eSQNN. This specific QNN consists of three sub-models named ‘L1’, ‘L2’, and ‘L3’. In contrast, Vanilla QFL uses a standard QNN which is made up of basic quantum gates [7], and does not consider SC and SD [15]. Despite the difference in structure, both eSQNN and standard QNN use equivalent number of parameters. Moreover, we conduct ablation studies on our eSQNN by comparing it with Vanilla SQNN, a depth-controllable yet entanglement-fixed QNN. Since the performance of QFL suffers under a system with a large number of qubits, many QFL works use a simple dataset [15]. In this paper, the MNIST dataset is transformed into a simpler form: the dimension of MNIST data is reduced to by inter-area interpolation, and only four classes are used (i.e., 0, 1, 2 and 3) [50]. The four classes are represented with red, blue, black, and green respectively. In addition, Dirichlet distribution is used to investigate non-IIDness of data [51]. Fig. 3 shows the data distribution with the different values of the Dirichlet concentration ratio . Data with high Dirichlet concentration ratio (i.e., ) is IID while data with low Dirichlet concentration ratio (i.e., ) is non-IID.

To compare IPFD and IPKD, we initialize the parameter of eSQNN identically. The simulation parameters used in these numerical experiments are summarized in Tab. I.

|

|

|

| (a) . | (b) . | (c) . |

|

|

|

| (a) . | (b) . | (c) . |

VI-B Numerical Results

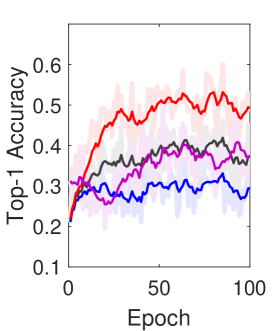

Numerical Results and Convergence Analysis. According to Theorem 1, the convergence bound decreases if the decoding success probability increases. Fig. 4 shows the performance of eSQFL under various channel conditions obtained through various . As increases from to , the decoding success probability and top-1 accuracy of the eSQFL with all layers increase. The small models, i.e., eSQFL-L2 and eSQFL-L1, also show improvement in performance along with eSQFL-L3. Especially, eSQFL-L2 shows significant improvement in top-1 accuracy from to . Fig. 5 shows the top-1 accuracy and convergence of eSQFL and comparison models. When , the sub-models in eSQFL (i.e., eSQFL-L2, eSQFL-L3) achieve higher accuracy than Vanilla QFL. The final standard deviations of eSQFL under are 0.041, 0.051, and 0.066 for eSQFL-L1, eSQFL-L2, and eSQFL-L3, respectively.

According to Theorem 1, the data distribution affects the convergence bound of eSQFL. With non-IID data, the convergence bound is widened. As shown in Fig. 5, we test various Dirichlet concentration, i.e., . The overall performance of all comparison models decreases as decreases. However, eSQFL shows robustness under non-IID data distribution. Vanilla QFL shows low top-1 accuracy under and . In contrast, eSQFL maintains the top-1 accuracy of and under and respectively. From the results in Fig. 4 and Fig. 5, eSQFL is robust under various channel conditions and non-IID data distribution.

|

|

|

| (a) Top-1 accuracy. | (b) Fidelity. | (c) Entropy. |

|

|

| (a) IPKD Regularization. | (b) IPFD Regularization. |

|

|

| (a) . | (b) . |

Structural Advantage of eSQNN. In this subsection, we investigate the general performance, fidelity, and entanglement entropy of eSQNN. We conduct ablation studies corresponding to model architecture (i.e., eSQNN and Vanilla SQNN). Fig. 6 shows the experiment results. As shown in Fig. 6(a), eSQNN shows better top-1 accuracy than Vanilla eSQNN. Especially, eSQNN-L3 achieves a performance improvement over Vanilla SQNN. When eSQNN is used, the quantum state (i.e., knowledge) is successfully distilled to the small model as shown in Fig. 6(b). In contrast, Vanilla SQNN fails to distill the knowledge to its sub-model. To understand why its model is successfully trained, we calculate the entanglement entropy (referred to Sec. III). Fig. 6(c) exhibits the von Neumann entanglement entropy of each layer of eSQNN and Vanilla SQNN. The entanglement entropy of eSQNN is less than Vanilla SQNN for all layers. It means that the event of exceeding the entropy threshold aforementioned in Sec. VI, i.e., , rarely occurs compared to Vanilla SQNN. This underscores that eSQNN is more robust to barren plateaus than Vanilla SQNN.

Effectiveness of IPFD. To investigate the effectiveness of IPFD used in eSQNN local training, we compare the results of local training using IPFD regularizer to IPKD regularizer. Fig. 7 (a)/(b) show the learning curve of and , respectively. The learning curve of IPFD starts at due to the fidelity . As eSQNN is trained, the fidelity decreases and converges to 0.955 for L1 and 0.987 for L2. In the learning curve of , the curve has a tendency to decrease and converge. However, the fluctuation of IPKD regularization is larger than IPFD, especially in eSQFL-L1. This is because the KL divergence becomes unstable when the difference between the two distributions is large, i.e., the overlapping area between the distributions is small. Then, if there is no overlapping area, it diverges. In contrast, the aforementioned phenomena does not occur in IPFD regularization because IPFD regularizer is bounded from 0 to 1. Therefore, IPFD regularization provides more stable noise to its eSQNN than IPKD regularization.

| Condition | |||||

|---|---|---|---|---|---|

| with optimization () | |||||

| w.o. optimization | |||||

Impact on Optimal Power Allocation. We verify the proofs of Proposition 1, and Corollary 1. When , we calculate the power allocation variable as by non-convex optimization. When , we obtain , where the comparison of power allocation is set to for , and for . The final accuracy is Tab. II. Compared to the eSQFL with , the eSQFL with achieves higher top-1 accuracy when and higher top-1 accuracy when . Thus, we corroborate that the optimal power allocation minimizes the convergence bound.

Impact on Balanced Parameter. The balanced parameter is an important parameter in eSQNN local training. Fig. 8 shows the top-1 accuracy according to in various data distributions (i.e., and ). With finitely adjusted IPFD parameter (), eSQNN shows the highest top-1 accuracy under non-IID data distribution (i.e., ). In addition, by not using IPFD () or only using IPFD (), eSQNN fails to classify the mini-MNIST dataset. Under IID data distribution (i.e., ), eSQNN with outperforms eSQNN with only using label training () about . From the result, we recommend utilizing eSQNN with for robust performance in both IID and non-IID data distribution.

VII Conclusions

In this paper, we developed a depth-adjustable QNN architecture, and proposed a novel QFL framework based on wireless communications, termed eQSNN and eSQFL, respectively. To control the level of entanglement and reduce its entropy, we applied CU gates to the eSQNN architecture. To mitigate the inter-depth interference inspired from the fidelity in quantum information theory, we introduced a novel IPFD regularizer. Finally, to cope with various channel conditions, we applied SC across multiple depths and optimized the SC power allocation by deriving and minimizing the convergence bound of eSQFL. In conclusion, we were able to propose a QFL model that shows stable performance despite the NISQ limitation and variable channel conditions. Additionally, the fidelity regularizer was also designed. This novel method decreases the error rate of QML in a way that is exclusively suitable with QC, instead of depending on classical optimization methods. Since the strengths of our model has been theoretically corroborated in this paper, we will go on to test the realistic efficacy of the model. In our future research directions, we will apply it to a plethora of real-life scenarios with various limitations.

| Notation | Description |

|---|---|

| Number of local devices, | |

| Number of local eSQNN blocks, | |

| Number of local iterations, | |

| Number of communication rounds, | |

| Binary mask, | |

| Quantum state | |

| Reduced density matrix | |

| Entanglement entropy of over subsystem | |

| Whole data, | |

| Power allocation for SC, | |

| Dirichlet concentration |

-A Parameter Shift Rule

Parameter shift rule [26], one of the most known quantum gradient calculators, is utilized to train the model. Subsequently, the eSQNN is trained accordingly using the zeroth stochastic gradient descent algorithm, e.g., quantum natural gradient. Consider that eSQNN consists of trainable parameters, i.e., . Then, the partial derivative of -th device’s -th observable over parameter is given as follows,

| (33) |

where denotes the -th standard basis, and . We calculate the loss gradient using (33).

-B Proof of Lemma 1

The true label is class and the predictions and its derivative is canceled out due to the definition of cross-entropy. Then, the cross-entropy loss is simplified as follows,

| (34) |

Hereafter, we denote , and . Let’s denote the partial derivative of cross-entropy loss and fidelity loss as,

| (35) | ||||

| (36) |

By the triangle inequality, the partial derivative of (12) is bounded as follows,

| (37) |

We have

| (38) |

The bound of obtained as follows,

| (39) |

The former step is due to , and the latter step is because the gradient using parameter shift rule is bounded to [26]. The term and its bound are given as,

| (40) | ||||

| (41) |

The former step is due to , and the latter step is due to parameter shift rule. Substituting the bound of and into LHS of (37), we have the bound,

| (42) |

Calculate LHS of (37) for all , the loss gradient is obtained, and its gradient is bounded as,

| (43) |

Applying these results to , we complete the proof.

-C Proof of Lemma 2

We expand the global gradient as follows,

| (44) | ||||

| (45) | ||||

| (46) |

-D Proof of Lemma 3

This step is due to Jensen’s inequality. With Assumption 3, we have . Combining these results finalizes the proof.

-E Completing Proof of Theorem 1

Using (20), the distance between to the optimal is as,

| (50) | ||||

| (51) | ||||

| (52) | ||||

| (53) |

We investigate the bound of as follows,

| (54) |

The term is bounded as,

| (55) | |||||

| (56) | |||||

| (57) |

The steps (a), (b) and (c) are due to -strong convexity, -smoothness, and , respectively. Since , . Combining Lemma 2, Lemma 3, and these results, we have the bound of LHS of (50). Summarizing (53) with taking expectation, and under Assumption 1 with a learning rate , the error between the updated global model and its optimum progress as,

| (58) |

Since , applying (58), we have

| (59) |

For diminishing the step-size, we focus on showing that , where and as elaborated next. It is trivial that due to the definition of . Assuming , we have

| (60) | |||

| (61) | |||

| (62) | |||

| (63) |

For , we obtain

| (64) |

References

- [1] H. Baek, W. J. Yun, Y. Kwak, S. Jung, M. Ji, M. Bennis, J. Park, and J. Kim, “Joint superposition coding and training for federated learning over multi-width neural networks,” in Proc. IEEE Conference on Computer Communications (INFOCOM), May 2022.

- [2] F. Arute, K. Arya, R. Babbush, D. Bacon, J. C. Bardin, R. Barends, R. Biswas, S. Boixo, F. G. Brandao, D. A. Buell et al., “Quantum supremacy using a programmable superconducting processor,” Nature, vol. 574, no. 7779, pp. 505–510, 2019.

- [3] W. J. Yun, J. Park, and J. Kim, “Quantum multi-agent meta reinforcement learning,” in Proc. AAAI Conference on Artificial Intelligence, Washington DC, USA, February 2023.

- [4] W. J. Yun, Y. Kwak, J. P. Kim, H. Cho, S. Jung, J. Park, and J. Kim, “Quantum multi-agent reinforcement learning via variational quantum circuit design,” in Proc. IEEE International Conference on Distributed Computing Systems (ICDCS), Bologna, Italy, July 2022.

- [5] P. W. Shor, “Polynomial-time algorithms for prime factorization and discrete logarithms on a quantum computer,” SIAM Journal on Computing, vol. 26, no. 5, pp. 1484–1509, October 1997.

- [6] J. Preskill, “Quantum computing in the NISQ era and beyond,” Quantum, vol. 2, p. 79, August 2018.

- [7] S. Y.-C. Chen, C.-H. H. Yang, J. Qi, P.-Y. Chen, X. Ma, and H.-S. Goan, “Variational quantum circuits for deep reinforcement learning,” IEEE Access, vol. 8, pp. 141 007–141 024, 2020.

- [8] “Quantum distributed deep learning architectures: Models, discussions, and applications,” ICT Express, 2022.

- [9] V. Havlíček, A. D. Córcoles, K. Temme, A. W. Harrow, A. Kandala, J. M. Chow, and J. M. Gambetta, “Supervised learning with quantum-enhanced feature spaces,” Nature, vol. 567, no. 7747, pp. 209–212, 2019.

- [10] O. Lockwood and M. Si, “Reinforcement learning with quantum variational circuit,” in Proc. AAAI Conference on Artificial Intelligence and Interactive Digital Entertainment, vol. 16, no. 1, 2020, pp. 245–251.

- [11] X. Wang, Y. Han, C. Wang, Q. Zhao, X. Chen, and M. Chen, “In-edge AI: Intelligentizing mobile edge computing, caching and communication by federated learning,” IEEE Network, vol. 33, no. 5, pp. 156–165, 2019.

- [12] J. Park, S. Samarakoon, A. Elgabli, J. Kim, M. Bennis, S.-L. Kim, and M. Debbah, “Communication-efficient and distributed learning over wireless networks: Principles and applications,” Proceedings of the IEEE, vol. 109, no. 5, pp. 796–819, May 2021.

- [13] S. Niknam, H. S. Dhillon, and J. H. Reed, “Federated learning for wireless communications: Motivation, opportunities, and challenges,” IEEE Communications Magazine, vol. 58, no. 6, pp. 46–51, 2020.

- [14] D. Kwon, J. Jeon, S. Park, J. Kim, and S. Cho, “Multiagent DDPG-based deep learning for smart ocean federated learning IoT networks,” IEEE Internet of Things Journal, vol. 7, no. 10, pp. 9895–9903, 2020.

- [15] S. Y.-C. Chen and S. Yoo, “Federated quantum machine learning,” Entropy, vol. 23, no. 4, p. 460, 2021.

- [16] H. Zhou, K. Lv, L. Huang, and X. Ma, “Quantum network: Security assessment and key management,” IEEE/ACM Transactions on Networking, vol. 30, no. 3, pp. 1328–1339, 2022.

- [17] R. Pujahari and A. Tanwar, “Quantum federated learning for wireless communications,” in Federated Learning for IoT Applications. Springer, 2022, pp. 215–230.

- [18] A. Cho, “Ibm promises 1000-qubit quantum computer—a milestone—by 2023,” Science, vol. 15, 2020.

- [19] J. Gambetta, “Our new 2022 development roadmap,” IBM Quantum Computing, May 2022.

- [20] J. Yu and T. S. Huang, “Universally slimmable networks and improved training techniques,” in Proc. IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Korea, October 2019, pp. 1803–1811.

- [21] D. Kim, J. Kim, J. Kwon, and T.-H. Kim, “Depth-controllable very deep super-resolution network,” in Proc. IEEE International Joint Conference on Neural Networks (IJCNN), Budapest, Hungary, July 2019, pp. 1–8.

- [22] J. R. McClean, S. Boixo, V. N. Smelyanskiy, R. Babbush, and H. Neven, “Barren plateaus in quantum neural network training landscapes,” Nature Communications, vol. 9, no. 1, pp. 1–6, 2018.

- [23] S. H. Sack, R. A. Medina, A. A. Michailidis, R. Kueng, and M. Serbyn, “Avoiding barren plateaus using classical shadows,” PRX Quantum, vol. 3, no. 2, June 2022.

- [24] T. Sleator and H. Weinfurter, “Realizable universal quantum logic gates,” Physical Review Letters, vol. 74, no. 20, p. 4087, 1995.

- [25] M. M. Wilde, Quantum information theory. Cambridge University Press, 2013.

- [26] K. Mitarai, M. Negoro, M. Kitagawa, and K. Fujii, “Quantum circuit learning,” Physical Review A, vol. 98, no. 3, p. 032309, 2018.

- [27] M. Chehimi and W. Saad, “Quantum federated learning with quantum data,” in Proc. IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Singapore, May 2022, pp. 8617–8621.

- [28] W. J. Yun, J. P. Kim, S. Jung, J. Park, M. Bennis, and J. Kim, “Slimmable quantum federated learning,” in Proc. of ICML Workshop on Dynamic Neural Networks, Baltimore, MD, USA, July 2022.

- [29] D. Bouwmeester and A. Zeilinger, “The physics of quantum information: basic concepts,” in the Physics of Quantum Information, 2000, pp. 1–14.

- [30] C. P. Williams, S. H. Clearwater et al., Explorations in quantum computing. Springer, 1998.

- [31] N. Killoran, T. R. Bromley, J. M. Arrazola, M. Schuld, N. Quesada, and S. Lloyd, “Continuous-variable quantum neural networks,” Physical Review Research, vol. 1, no. 3, p. 033063, 2019.

- [32] O. Simeone, “An introduction to quantum machine learning for engineers,” Foundations and Trends® in Signal Processing, vol. 16, no. 1-2, pp. 1–223, 2022.

- [33] N. H. Tran, W. Bao, A. Y. Zomaya, M. N. H. Nguyen, and C. S. Hong, “Federated learning over wireless networks: Optimization model design and analysis,” in Proc. IEEE Conference on Computer Communications (INFOCOM), Paris, France, 2019, pp. 1387–1395.

- [34] B. McMahan, E. Moore, D. Ramage, S. Hampson, and B. A. y Arcas, “Communication-efficient learning of deep networks from decentralized data,” in Proc. of the International Conference on Artificial Intelligence and Statistics (AISTATS), Ft. Lauderdale, FL, USA, April 2017, pp. 1273–1282.

- [35] X. Li, M. Jiang, X. Zhang, M. Kamp, and Q. Dou, “FedBN: Federated learning on non-iid features via local batch normalization,” in Proc. International Conference on Learning Representations (ICLR), 2021.

- [36] L. Mangasarian, “Parallel gradient distribution in unconstrained optimization,” SIAM Journal on Control and Optimization, vol. 33, no. 6, pp. 1916–1925, 1995.

- [37] A. Cotter, O. Shamir, N. Srebro, and K. Sridharan, “Better mini-batch algorithms via accelerated gradient methods,” Proc. Advances in Neural Information Processing Systems (NIPS), vol. 24, 2011.

- [38] N. Karakoç, A. Scaglione, M. Reisslein, and R. Wu, “Federated edge network utility maximization for a multi-server system: Algorithm and convergence,” IEEE/ACM Transactions on Networking, vol. 30, no. 5, pp. 2002–2017, 2022.

- [39] C. T. Dinh, N. H. Tran, M. N. H. Nguyen, C. S. Hong, W. Bao, A. Y. Zomaya, and V. Gramoli, “Federated learning over wireless networks: Convergence analysis and resource allocation,” IEEE/ACM Transactions on Networking, vol. 29, no. 1, pp. 398–409, February 2021.

- [40] A. Khaled, K. Mishchenko, and P. Richtarik, “Tighter theory for local sgd on identical and heterogeneous data,” in Proc. International Conference on Artificial Intelligence and Statistics (AISTATS), vol. 108, August 2020, pp. 4519–4529.

- [41] X. You and X. Wu, “Exponentially many local minima in quantum neural networks,” in Proc. of the International Conference on Machine Learning (ICML), Virtual, July 2021.

- [42] D. Greenberger, K. Hentschel, and F. Weinert, Compendium of quantum physics: concepts, experiments, history and philosophy. Springer Science & Business Media, 2009.

- [43] Y. Subaşı, L. Cincio, and P. J. Coles, “Entanglement spectroscopy with a depth-two quantum circuit,” Journal of Physics A: Mathematical and Theoretical, vol. 52, no. 4, p. 044001, January 2019.

- [44] R. Jozsa, “Fidelity for mixed quantum states,” Journal of Modern Optics, vol. 41, no. 12, pp. 2315–2323, 1994.

- [45] D. N. C. Tse and P. Viswanath, Fundamentals of Wireless Communications, 2005.

- [46] T. Cover, “Broadcast channels,” IEEE Transactions on Information Theory, vol. 18, no. 1, pp. 2–14, January 1972.

- [47] J. Choi, “Joint rate and power allocation for NOMA with statistical CSI,” IEEE Transactions on Communications, vol. 65, no. 10, pp. 4519–4528, October 2017.

- [48] M. Choi, D. Yoon, and J. Kim, “Blind signal classification for non-orthogonal multiple access in vehicular networks,” IEEE Transactions on Vehicular Technology, vol. 68, no. 10, pp. 9722–9734, 2019.

- [49] X. Li, K. Huang, W. Yang, S. Wang, and Z. Zhang, “On the convergence of fedavg on non-iid data,” in Proc. of the International Conference on Learning Representations (ICLR), Addis Ababa, Ethiopia, April 2020.

- [50] L. Deng, “The MNIST database of handwritten digit images for machine learning research,” IEEE Signal Processing Magazine, vol. 29, no. 6, pp. 141–142, 2012.

- [51] T. H. Hsu, H. Qi, and M. Brown, “Measuring the effects of non-identical data distribution for federated visual classification,” CoRR, vol. abs/1909.06335, September 2019.

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/f4cde440-da11-43da-a1e6-48ea7dc15568/yun.jpg) |

Won Joon Yun is currently a Ph.D. student in electrical and computer engineering at Korea University, Seoul, Republic of Korea, since March 2021, where he received his B.S. in electrical engineering. He was a visiting researcher at Cipherome Inc., San Jose, CA, USA, during summer 2022; and also a visiting researcher at the University of Southern California, Los Angeles, CA, USA during winter 2022 for a joint project with Prof. Andreas F. Molisch at the Ming Hsieh Department of Electrical and Computer Engineering, USC Viterbi School of Engineering. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/f4cde440-da11-43da-a1e6-48ea7dc15568/jpkim.jpg) |

Jae Pyoung Kim has been with the School of Electrical Engineering, Korea University, Seoul, Republic of Korea, since March 2017, where he is currently a B.S. student in electrical and computer engineering. He is now a research engineer at Artificial Intelligence and Mobility (AIM) Laboratory at Korea University, Seoul, Republic of Korea, since 2021. His current research interests include quantum machine learning. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/f4cde440-da11-43da-a1e6-48ea7dc15568/people_hankyulbaek.png) |

Hankyul Baek is currently a Ph.D. student in electrical and computer engineering at Korea University, Seoul, Republic of Korea, since March 2021. He received his B.S. in electrical engineering from Korea University, Seoul, Republic of Korea, in 2020. He was with LG Electronics, Seoul, Republic of Korea, from 2020 to 2021. His current research interests include quantum machine learning and its applications. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/f4cde440-da11-43da-a1e6-48ea7dc15568/people_jungsoyi.png) |

Soyi Jung has been an assistant professor at the department of electrical and computer engineering, Ajou University, Suwon, Republic of Korea, since September 2022. She also holds a visiting scholar position at Donald Bren School of Information and Computer Sciences, University of California, Irvine, CA, USA, from 2021 to 2022. She was a research professor at Korea University, Seoul, Republic of Korea, during 2021. She was also a researcher at Korea Testing and Research (KTR) Institute, Gwacheon, Republic of Korea, from 2015 to 2016. She received her B.S., M.S., and Ph.D. degrees in electrical and computer engineering from Ajou University, Suwon, Republic of Korea, in 2013, 2015, and 2021, respectively. Her current research interests include network optimization for autonomous vehicles communications, distributed system analysis, big-data processing platforms, and probabilistic access analysis. She was a recipient of Best Paper Award by KICS (2015), Young Women Researcher Award by WISET and KICS (2015), Bronze Paper Award from IEEE Seoul Section Student Paper Contest (2018), ICT Paper Contest Award by Electronic Times (2019), and IEEE ICOIN Best Paper Award (2021). |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/f4cde440-da11-43da-a1e6-48ea7dc15568/people_jihongpark.jpg) |

Jihong Park (Senior Member, IEEE) received the B.S. and Ph.D. degrees from Yonsei University, South Korea. He is currently a Lecturer (Assistant Professor) with the School of Information Theory, Deakin University, Australia. His research interests include ultra-dense/ultra-reliable/mmWave system designs, and distributed learning/control/ledger technologies and their applications for beyond-5G/6G communication systems. He served as a Conference/Workshop Program Committee Member for IEEE GLOBECOM, ICC, and WCNC, and for NeurIPS, ICML, and IJCAI. He is an Associate Editor of Frontiers in Data Science for Communications, and a Review Editor of Frontiers in Aerial and Space Networks. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/f4cde440-da11-43da-a1e6-48ea7dc15568/people_mehdibennis.jpg) |

Mehdi Bennis (Fellow, IEEE) is a tenured Full Professor with the Centre for Wireless Communications, University of Oulu, Finland, an Academy of Finland Research Fellow, and the Head of the Intelligent Connectivity and Networks/Systems Group (ICON). He has published more than 200 research papers in international conferences, journals, and book chapters. His main research interests are in radio resource management, heterogeneous networks, game theory, and distributed machine learning in 5G networks and beyond. He has been the recipient of several prestigious awards, including the 2015 Fred W. Ellersick Prize from the IEEE Communications Society, the 2016 Best Tutorial Prize from the IEEE Communications Society, the 2017 EURASIP Best Paper Award for the Journal of Wireless Communications and Networks, the All-University of Oulu Award for research, the 2019 IEEE ComSoc Radio Communications Committee Early Achievement Award, and the 2020 Clarivate Highly Cited Researcher by the Web of Science. He is an Editor of IEEE Transactions on Communications and the Specialty Chief Editor of Data Science for Communications in the Frontiers in Communications and Networks. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/f4cde440-da11-43da-a1e6-48ea7dc15568/x21.png) |

Joongheon Kim (Senior Member, IEEE) has been with Korea University, Seoul, Korea, since 2019, where he is currently an associate professor. He received the B.S. and M.S. degrees in computer science and engineering from Korea University, Seoul, Korea, in 2004 and 2006, respectively; and the Ph.D. degree in computer science from the University of Southern California (USC), Los Angeles, CA, USA, in 2014. Before joining Korea University, he was with LG Electronics (Seoul, Korea, 2006–2009), Intel Corporation (Santa Clara in Silicon Valley, CA, USA, 2013–2016), and Chung-Ang University (Seoul, Korea, 2016–2019). He serves as an editor for IEEE Transactions on Vehicular Technology, IEEE Transactions on Machine Learning in Communications and Networking, IEEE Communications Standards Magazine, Computer Networks (Elsevier), and ICT Express (Elsevier). He is also a distinguished lecturer for IEEE Communications Society (ComSoc) (2022-2023) and IEEE Systems Council (2022-2024). He was a recipient of Annenberg Graduate Fellowship with his Ph.D. admission from USC (2009), Intel Corporation Next Generation and Standards (NGS) Division Recognition Award (2015), IEEE Systems Journal Best Paper Award (2020), IEEE ComSoc Multimedia Communications Technical Committee (MMTC) Outstanding Young Researcher Award (2020), IEEE ComSoc MMTC Best Journal Paper Award (2021), and Best Special Issue Guest Editor Award by ICT Express (Elsevier) (2022). He also received several awards from IEEE conferences including IEEE ICOIN Best Paper Award (2021), IEEE Vehicular Technology Society (VTS) Seoul Chapter Awards (2019, 2021), and IEEE ICTC Best Paper Award (2022). |