Quantization Aware Attack: Enhancing Transferable Adversarial Attacks by Model Quantization

Abstract

Quantized neural networks (QNNs) have received increasing attention in resource-constrained scenarios due to their exceptional generalizability. However, their robustness against realistic black-box adversarial attacks has not been extensively studied. In this scenario, adversarial transferability is pursued across QNNs with different quantization bitwidths, which particularly involve unknown architectures and defense methods. Previous studies claim that transferability is difficult to achieve across QNNs with different bitwidths on the condition that they share the same architecture. However, we discover that under different architectures, transferability can be largely improved by using a QNN quantized with an extremely low bitwidth as the substitute model. We further improve the attack transferability by proposing quantization aware attack (QAA), which fine-tunes a QNN substitute model with a multiple-bitwidth training objective. In particular, we demonstrate that QAA addresses the two issues that are commonly known to hinder transferability: 1) quantization shifts and 2) gradient misalignments. Extensive experimental results validate the high transferability of the QAA to diverse target models. For instance, when adopting the ResNet-34 substitute model on ImageNet, QAA outperforms the current best attack in attacking standardly trained DNNs, adversarially trained DNNs, and QNNs with varied bitwidths by 4.3% 20.9%, 8.7% 15.5%, and 2.6% 31.1% (absolute), respectively. In addition, QAA is efficient since it only takes one epoch for fine-tuning. In the end, we empirically explain the effectiveness of QAA from the view of the loss landscape. Our code is available at https://github.com/yyl-github-1896/QAA/.

Index Terms:

Adversarial attack, black-box attack, adversarial transferability, model quantization, convolutional neural network.I Introduction

Recent progress in edge computing [1] has triggered a large demand for deep neural networks (DNNs) that can be deployed on edge devices. As a popular DNN compression and acceleration technique, quantization is widely adopted to reduce computational overhead by replacing 32-bit full-precision DNN parameters (a.k.a. weights) with lower numbers of bitwidths (e.g., 8, 5, 4, 3, 2, and 1-bit settings) while still maintaining high accuracy.

However, many works have shown that DNNs are vulnerable to adversarial examples [2, 3, 4, 5, 6, 7, 8], which are crafted by adding human-imperceptible perturbations and can mislead DNNs. Although the robustness of full-precision DNNs has been extensively studied, few works have focused on the robustness of QNNs, especially from the perspective of realistic black-box settings. A natural question is as follows: Can we perform realistic black-box attacks against DNNs with unknown architecture and quantization bitwidths?

One intriguing property of adversarial examples is their transferability across different DNNs [2, 9, 10, 11, 12], enabling an adversary to generate adversarial examples on a local substitute model and use them to attack the target model [13]. The adversarial transferability property motivates us to achieve black-box attacks against unknown QNNs through transfer-based attacks. The main challenge is that many previous studies [14, 15, 16] have claimed that model quantization makes QNNs more robust. Specifically, the widely-held claim [14] states that adversarial examples transfer poorly across QNNs with different quantization bitwidths because of the following two issues. 1) Quantization shift: Quantization can map two different feature values (i.e. clean feature values and adversarial feature values) into the same bucket, ruining the adversarial effect. 2) Gradient misalignment: Quantization approximately computes gradients with the straight-through estimator (STE) [17], making the gradients of QNNs greatly diverge from that of full-precision DNNs.

However, we discover that the above claim of “poor transferability” is only valid when the substitute model and the target model have the same architecture. When the architectures of the substitute model and the target model differ, QNN substitute models with “extremely low bitwidths” (less than 5 bits) may become the “panacea” for attacking an unknown target model (see Sec. III-A for more details). Please note that the “different architecture” setting is closer to the realistic black-box scenario, and our new finding indicates that an adversary can leverage model quantization to generate highly transferable adversarial examples. However, technically, the attack capability of the QNN substitute model is still unsatisfactory because of the quantization shift and the gradient misalignment issues. The following question now arises: Can we mitigate the above two issues to improve the transferability of adversarial examples across QNNs with different bitwidths?

To enhance the attack transferability, a naive approach is to directly ensembles substitute models with different quantization bitwidth (a.k.a. ensemble-based attack). However, an ensemble-based attack multiplies the computational overhead and leads to a suboptimal attack success rate (for additional details, see Sec. IV-C). To this end, we propose a quantization aware attack (QAA), which fine-tunes substitute QNNs with objective functions possessing multiple quantization bitwidths, making the substitute model “aware of” the target of attacking QNNs with unknown bitwidths. Fig. 1 presents a brief overview of the QAA substitute model, which alternatively utilizes quantized activation and full-precision values during the forward propagation process to promote adversarial transferability. The advantage of the QAA is its ability to introduce gradients of QNNs with different bitwidths during the substitute model training stage and utilize various bitwidths activation values at the inference stage, thus overcoming the quantization shift and gradient misalignment challenges and improving the transferability of the generated adversarial examples, as shown in Sec. III.

We demonstrate the effectiveness of the proposed QAA on both the CIFAR-10 and ImageNet datasets. The experimental results show that compared with the state-of-the-art (SOTA) attacks which were designed only for full-precision target models, the QAA can significantly enhance the transferability of adversarial examples to standardly trained DNNs, adversarially trained DNNs, and QNNs with varied bitwidths by 4.6% 20.9%, 8.8% 13.4% and 2.6% 11.8% (absolute), respectively. Finally, we attempt to understand the underlying causes of the enhancements made by the QAA from the loss landscape perspective [18], in which the explained numerical results are consistent with the attack performance. In summary, our main contributions are as follows.

-

•

For the first time, we examine the widely-held claim that adversarial attacks transfer poorly across QNNs with different bitwidths in the black-box setting, and enhance the attack transferability by mitigating the quantization shift and gradient misalignment issues.

-

•

We propose a quantization aware attack (QAA), which trains substitute models with multiple bitwidths objectives and endows the models with multiple bitwidths awareness capability to solve the above problems.

-

•

Comprehensive evaluations demonstrate the improvement yielded by the proposed QAA over several other SOTA attacks. Numerical evidence derived from the loss landscape view further verifies the effectiveness of QAA.

II Related Work

II-A Transfer-based Black-box Attacks

Papernot et al. [13] first proposed the concept of transfer-based black-box attacks, in which the adversary trains a local substitute model to obtain transferable adversarial examples. Various methods have been developed to enhance the transferability of adversarial examples. These attack methods can be divided into five categories [19]: momentum-based attacks [20, 21, 22, 23, 24], input-transformation-based attacks [25, 26, 27], feature-level attacks [28, 29, 30, 31], GAN-based attacks [32, 12], and model-based attacks [33, 34, 35]. Momentum-based attacks add regularization terms to the original attack objectives when attacking a substitute model; thus, the optimization gradient can be stabilized and the attack transferability can be enhanced. Input-transformation-based attacks mimic model ensembles by applying image-transformation operations when attacking the substitute model to achieve enhanced transferability. Feature-level attacks operate under the hypothesis that the features of the shallower DNN layers represent basic and invariant image information and, thus, are more significant for generating transferable adversarial examples. GAN-based attacks leverage generative models to obtain transferable adversarial examples, and they are mighty for targeted attacks [12]. Model-based attacks devise a substitute model to smooth the loss landscape and thus enhance attack transferability. This paper describes a model-based attack, in which the transferability of adversarial examples across QNNs with different architectures and bitwidths is investigated by quantizing and fine-tuning the substitute model.

II-B Attacks and Defenses for QNNs

Adversarial attacks can be divided into white-box attacks and black-box attacks. Existing works have only designed white-box attacks against QNNs [36]. However, how to improve black-box attacks against both full-precision DNNs and QNNs with different architectures and quantization bitwidths is still an open problem. The adversarial robustness of QNNs has received increased research attention from the defender’s perspective. For example, existing works have designed adversarial training [16], regularization-based defense [37], and ensemble-based defense [15] strategies for QNNs. In particular, previous works have shown adversarial examples transfer poorly across DNNs with the same architecture but different quantization bitwidths because of the quantization shift and gradient misalignment phenomena [14]. Intuitively, this characteristic of QNNs makes it difficult for the adversary to successfully attack QNNs with different bitwidths simultaneously, enabling defenders to enhance the robustness of DNNs by ensembling QNNs with different bitwidths. For instance, EMPIR [15] directly ensembles full-precision DNNs and low-bitwidth QNNs with the different bitwidths to enhance the robustness, and Double-Win Quant [16] alternatively applies the adaptive quantization technique to the adversarial training scheme to enable QNNs to exhibit different bitwidths during inference. The research gap lies in the fact that previous works only studied the transferability of adversarial examples under the “same architecture, different quantization bitwidth” setting, while the conclusion can be different under the “different architecture, different quantization bitwidth” setting, which is closer to the realistic black-box scenario. In summary, there are two dimensions along which our work differs from the others. The first is that it exhibits improved adversarial transferability across target models with unknown architectures and bitwidths. The second is that it quantizes and fine-tunes the substitute model to achieve the above goal.

II-C DNN Quantization

DNN quantization compresses and accelerates DNNs by representing network weights/activations/gradients with lower bitwidth. Model quantization has been widely applied in efficient DNN inference frameworks in industry [38]. Two main categories of quantization techniques are quantization aware training (QAT) [39, 40, 41, 42] and post training quantization (PTQ) [43, 44, 45, 46]. QAT trains DNNs from scratch or fine-tunes full-precision DNNs given a training dataset to convert these DNNs into low-bitwidth DNNs. In contrast, PTQ does not require end-to-end training and instead relies on a calibration dataset () to implement a rounding scheme. PTQ is more computationally efficient than QAT, but it has greater quantization loss. Recent PTQ-related works [43, 44, 45, 46] have modeled weight/activation quantization as perturbations and used Taylor expansion to analyze loss value changes before reconstructing the output of each layer. To implement QAT or PTQ algorithms on GPUs, one can use fake quantization to mimic QNNs, in which the weights are still stored at full-precision but are rounded into lower bitwidth during inference. QAT process with fake quantization can be formalized as follows,

| (1) |

in which denotes the loss function (e.g., cross-entropy loss), is the DNN weight, and is the quantization hyper-parameter used to convert from full-precision floating points into lower bitwidth numbers. PTQ with fake quantization can be formalized as follows.

| (2) |

The major difference between QAT and PTQ is that the PTQ only optimizes quantization hyper-parameter while keeping the model weights unchanged. Apart from the above model quantization techniques with fixed bitwidths, a line of work has studied adaptive bitwidth quantization [47, 48, 16], which can switch the quantization bitwidth after deployment. The application of such adaptive quantization techniques in realistic settings further urges us to develop effective transfer-based attacks against QNNs with different bitwidth.

III Methodology

In this section, we first show our motivating observation, that is, extremely low bitwidth quantized substitute models benefit the generation of transferable adversarial examples. Next, we formulate the black-box transfer attack problem and present our QAA fine-tuning objective function to achieve the attack goal. Finally, we provide a numerical analysis of how the proposed QAA method mitigates the quantization shift and gradient misalignment issues and further enhances the attack transferability of quantized substitute models.

| Substitute | Bitwidth | ResNet-18 | ResNet-34 | ||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 32 | 8 | 5 | 4 | 3 | 2 | Avg. | 32 | 8 | 5 | 4 | 3 | 2 | Avg. | ||

| ResNet-18 | 32 | 100.0 | 99.0 | 90.3 | 88.4 | 88.3 | 85.4 | 91.9 | 83.3 | 82.1 | 76.8 | 78.0 | 76.8 | 78.2 | 79.2 |

| 2 | 91.8 | 92.1 | 99.2 | 99.7 | 99.8 | 100.0 | 97.1 | 80.8 | 81.0 | 79.5 | 82.8 | 84.8 | 89.2 | 83.0 | |

| ResNet-34 | 32 | 89.7 | 88.3 | 86.0 | 85.6 | 83.8 | 80.6 | 85.7 | 100.0 | 99.2 | 78.8 | 79.6 | 79.6 | 76.7 | 85.7 |

| 2 | 91.9 | 92.4 | 92.8 | 94.3 | 94.1 | 95.3 | 93.5 | 87.3 | 89.0 | 98.1 | 99.0 | 100.0 | 100.0 | 95.6 | |

III-A Preliminaries: Transfer-based Attacks against QNNs

In the realistic black-box scenario, the architecture and quantization bitwidth of the target model are unknown to the adversary. Thus, it is important to study the transferability of adversarial examples across DNNs with different architectures and quantization bitwidths. However, previous works claimed that adversarial examples transfer poorly across DNNs with different quantization bitwidths [14, 15, 16]. To verify this claim, we conduct illustrative transfer-based attack experiments on the ImageNet dataset with two model architectures (ResNet-18, ResNet-34) and six quantization bitwidths (32, 8, 5, 4, 3, and 2-bit settings). The model quantization technique is APoT [42], and the transfer-based attack algorithm is MIM [20] with a -norm perturbation budget . The experimental results are shown in Tab. I. From these results, we have the following observations.

Observation 1: Model quantization harms the same-architecture transferability of adversarial examples. Given that the substitute model has the same architecture as that of the target model, the use of different quantization bitwidth lowers the transfer attack success rate. For instance, when using ResNet-18 with 32-bit as the substitute model, the attack success rate decreases as the quantization bitwidth of the target model decreases; when using ResNet-18 with 2-bit as the substitute model, the attack success rates increase as the quantization bitwith of the target model decreases. This observation is exactly the same as the previous “poor transferability” conclusion [14, 15, 16].

Observation 2: Model quantization benefits the cross-architecture transferability of adversarial examples. The conclusion can be different when the substitute model and the target model have different architectures. For instance, when taking ResNet-34 as the substitute model to attack the ResNet-18 target model, the 2-bit substitute model outperforms the 32-bit model under every target bitwidth. A similar phenomenon occurs when ResNet-18 is used as the substitute model to attack ResNet-34. Although the 2-bit substitute model does not achieve higher attack success rates on the 32-bit target model, it outperforms the 32-bit substitute model at other target bitwidths and has higher average attack success rates.

Remark on the above observations. The same-architecture observation (Observation 1) indicates that the defender may ensemble models with different quantization bitwidths to hinder the transferability of adversarial examples and enhance the adversarial robustness of their models; this strategy has been explored in previous works [15, 16]. The cross-architecture observation (Observation 2) indicates that model quantization may enhance the transfer attack ability of the adversary under black-box scenarios, which was ignored by previous works. Please note that the setting of Observation 2 is closer to the realistic black-box setting where both the target architecture and the quantization bitwidth are unknown to the adversary. Given that the adversary has no portable way to detect the architecture or the bitwidth of the target model, the best choice for the adversary is to leverage 2-bit quantized substitute models to achieve the best average attack performance. In the next subsection, we discuss how to achieve the best black-box attack capability from the perspective of the adversary and propose our QAA method.

III-B Problem Formulation

Quantization compresses and accelerates DNNs by converting the 32-bit weight and activation values ( and ) into lower bitwidths ( and ):

| (3) |

where are the bias and scaling terms for weight quantization and activation quantization, respectively; Int denotes the integer operation. The adversary’s goal is to maximize the probability of fooling target model with unknown architecture and bitwidths , that is,

| (4) |

where and are clean example and its true label, respectively, is the adversarial perturbation, denotes the probability, and denotes target model with certain bitwidths and architecture .

To generate adversarial examples, the adversary needs to train a local substitute model with certain architecture and bitwidth on the available training dataset and generate adversarial examples, that is,

| (5) |

where is the surrogate loss function.

From Eqn. 4 and Eqn. 5, we can see that the difference between the architectures and bitwidths of the substitute and target model is the prominent factor that hinders the transferability of the generated adversarial examples. A previous work [14] has proposed the following two hypotheses to explain the poor transferability of adversarial examples across target DNNs and QNNs with different bitwidths.

Quantization shift. Quantization can map two different types of feature values (i.e., clean features and adversarial features) into the same bucket, damaging the adversarial effect.

Gradient misalignment. QNNs approximately compute gradients with the straight-through estimator (STE) [17], making the gradients of QNNs diverge largely from those of full-precision DNNs.

III-C Objective Function

This paper proposes a quantization aware attack (QAA) to address the above two challenges by making the substitute model training procedure “aware of” quantization objective with different bitwidths, that is,

| (6) |

where denotes the substitute model with bitwidths , weights , and quantization parameter set (including the mentioned above). This objective function means that a single substitute model is quantized to multiple bitwidths when calculating losses and gradients, making the substitute model “aware of” different quantization bitwidths. At the attack iteration stage, the substitute model performs inference with diverse bitwidths to prevent adversarial examples from overfitting to attacking the model with a single bitwidth, thus enhancing the transferability of the approach.

The QAA addresses the poor transferability issue of adversarial examples across QNNs in two aspects. From the quantization shift perspective, the QAA performs multiple bitwidths inferences in the attack generation stage, forcing the algorithm to find adversarial examples that are effective on the target QNNs with multiple bitwidths. From the gradient misalignment perspective, the proposed QAA training objective Eqn. 6 makes the gradients of the substitute model align with target QNNs. We provide quantitative analysis in Sec. III-E to support the above claims.

III-D Attack Implementation

Training the substitute model with Eqn. 6 is non-trivial because of the following two challenges. From the view of computational overhead, training the substitute model with all possible bitwidths is expensive and unnecessary in reality. We need to form a trade-off between the substitute model training overhead and the attack performance. From the view of batch normalization (BN) layers, BN is incapable of capturing the complex and largely divergent distributions of weights with different bitwidths, making it difficult for the model training process to converge. This problem has also been observed in other recent works [49, 50]. We plot the BN parameters of 32-bit and 2-bit ResNet-34 in Fig. 2 to quantitatively illustrate this problem. In Fig. 2, we show the channel-wise BN statistics of the 20th layers in the ResNet-34 ImageNet models obtained by full-precision training and quantized training. Each dot represents the running mean and variance of a channel. The full-precision DNN and low-bitwidth QNNs have very different weight distributions. Thus, we need to circumvent the BN incompatibility issue when training substitute models with Eqn. 6. The question then becomes the following: How can we circumvent the computational overhead and the BN incompatibility problem when training substitute models with multiple bitwidth objectives?

To answer the above question, we propose training the substitute model with only two bitwidths (32-bit and 2-bit) to address the trade-off between the computational complexity and attack performance; that is,

| (7) |

We choose 32-bit and 2-bit settings because they represent two extreme cases, i.e., full-precision and low-precision cases. We do not use 1-bit QNN [39] because it is difficult to converge on ImageNet, and needs special tricks that are not compatible with other bitwidths. Regarding the BN incompatibility problem, although weight quantization is incompatible with the BN layer, applying the multiple bitwidths training objective only for activation quantization does not cause problems because activation quantization is performed after the BN layer (please see Fig. 1 for reference). Therefore, we propose applying multiple bitwidths training to the activation quantization process only and keeping the weight quantized as 2-bit.

Alg. 1 summarizes our QAA implementation. The adversary can resume from standardly pre-trained QNN to save the computational budget. In practice, fine-tuning for only one epoch is sufficient for enhancing the attack transferability to a large extent. The QAA trains the substitute model with quantized activation (line 5) and full-precision activation (line 9) alternatively while keeping the weight quantized. When generating adversarial examples, quantized activation (line 18) and full-precision activation (line 20) are also alternatively adopted, making the adversarial examples transferable across target models with different quantization bitwidths. The QAA is flexible and can be implemented using various quantization methods, including QAT [42] and PTQ [46].

The implementation of the QAA based on PTQ is summarized in Alg. 2. Compared to the QAA implementation based on QAT, the QAA implementation based on PTQ is different in two ways. First, because PTQ does not train and quantize the model from scratch, the QAA implementation based on PTQ does not need model training or fine-tuning steps (there is no substitute model training step in Alg. 2); Second, instead of keeping weights quantized all the time, the QAA implementation based on PTQ applies multiple bitwidth inferences to both the weight and activation values (see the forward propagation step in Alg. 2). The PTQ version of the QAA does not require fine-tuning but is less powerful than the QAT version of the QAA.

III-E Quantitative Analysis on QAA

This subsection quantitatively analyzes whether the proposed QAA can mitigate the quantization shift and gradient misalignment issues mentioned above. To visualize the quantization shift phenomenon, we calculate the -th layer feature divergence of the target model on an adversarial example and its corresponding clean example; that is,

| (8) |

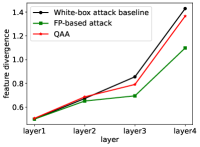

where denotes the -th layer of the target model , is the clean example, is the corresponding adversarial example, and is the -norm. We compare the feature divergence of the target model on adversarial examples generated with the QAA and the traditional attack based on the full-precision substitute model (FP-based attack). The model architecture is ResNet-34 for both the substitute models and target models, and the attack algorithm is MIM [20] with a perturbation budget of . The results are presented in Fig. 3. The feature divergence of the target QNN on the QAA attack is denoted by red lines, and the feature divergence on the FP-based attack is denoted by green lines. The black line denotes the white-box ideal case for reference. The utilized model is ResNet-34 for both the substitute and target models. The applied dataset is ImageNet. The attack is MIM with -norm budget . We can see that compared to the white-box baseline, the FP-based attack (denoted as the green line) substantially decreases the feature divergence, suggesting that the traditional FP-based attack suffers greatly from the quantization shift phenomenon. In comparison, the feature divergence of the QAA (denoted as the red line) decreases less. The above observation shows that the QAA is more robust to the quantization shift phenomenon and thus is more transferable across QNNs with different bitwidths.

To analyze the effectiveness of the QAA from the gradient misalignment perspective, we adopt the same method as that used in [14, 51], calculating the gradient similarity between the target model and substitute model, that is, the cosine similarity of the gradient between the target model and substitute model,

| (9) |

where denotes the transpose of a matrix. Intuitively, a substitute model with higher gradient similarity to the target model enables the generation of more transferable adversarial examples. Specifically, we calculate the gradient similarity of each substitute model on 16 target models with different architectures and bitwidths (which are the same as those of the target models used in Sec. IV) and take the average. The 32-bit, 4-bit, 2-bit, and QAA-based ResNet-34 substitutes have average gradient similarities of 0.1180, 0.1186, 0.1196, and 0.1250, respectively. The maximum standard deviation of this experiment is , which shows that the QAA model has a statistically significant advantage. We draw Fig. 4 to visualize the gradient alignment. We calculate the gradient similarity between each model pair and define the distance between two models as 1 - gradient similarity. We use the multi-dimensional scaling (MDS) algorithm [52] to project the distance relationship into 3D space, as illustrated in Fig. 4. Each point represents a model, and closer points indicate similar gradients. The QAA model is at the center of the target models; in other words, the QAA model has the shortest distances (in other words, highest gradient similarities) to these target models.

IV Experiments

IV-A Experimental Settings

Dataset. We adopt ImageNet [53] and CIFAR-10 [54] to evaluate the QAA. On ImageNet, we follow a previous work [29], which conducted experiments on a subset of the ImageNet 2012 validation set containing 1,000 high-resolution images (299x299). On CIFAR-10, we use the test set to generate adversarial examples, which include 10,000 images (32x32). Please note that many previously developed transfer attacks are not effective on CIFAR-10 (see Tab. III), while the QAA is effective on both CIFAR-10 and ImageNet.

Target Models. We collect target models with various architectures, bitwidths, and defense methods to validate the generalizability of the QAA. For ImageNet, we collect 21 target models, including seven standardly trained DNNs, five adversarially trained DNNs, and nine QNNs with bitwidths ranging from 2-bit to 8-bit. Here, we do not consider 1-bit target models on ImageNet because the adopted QDrop technique [46] cannot achieve substantial accuracy under the 1-bit setting. For CIFAR-10, we collect 16 target models, including five standardly trained DNNs and 11 QNNs with bitwidths ranging from 1-bit to 8-bit.

Baseline Attacks. On ImageNet, we combine the QAA with the SOTA transfer-based attacks designed for full-precision DNNs, including MIM [20], CIM (=TI+DI+SI+MIM) [22], FIA [29], RPA [55], Admix [26], and SSA [27]. On CIFAR-10, we only combine the QAA with PGD, which is strong enough to largely outperform the SOTA transfer-based attacks. We adopt the -norm to bound the adversarial perturbation and the values are and for ImageNet and CIFAR-10, respectively. In the tables in this section, we use bold numbers to denote higher transfer attack success rates than those of the baseline. The experimental results are in Tab. II, Tab. III, Tab. IV, Tab. VI, and Tab. V are repeated three times to calculate their maximum standard deviations. We list the maximum standard deviations in the caption, and they are much less than the improvement magnitude yielded by the QAA, illustrating that the QAA provides statistically significant improvements.

Implementation Details. On ImageNet, we implement the QAA on Res-34 quantized with the QAT and Vgg-16 quantized with PTQ [46]. On CIFAR-10, we implement the QAA based on Res-20 and Res-56 models quantized with QAT [42]. For both datasets, we fine-tune the 2-bit pre-trained QNNs for only one epoch. The batch size is 128, and the optimizer is SGD with a momentum of 0.9 and weight decay of 1e-4. The QAA implementation on PTQ does not need fine-tuning and can be directly used to generate adversarial examples after the QAA modification. The number of attack iterations is 10.

| Substitute | Attack | Inc-v3 | Inc-v4 | IncRes-v2 | Res-50 | Res-152 | Vgg-16 | Vgg-19 | Average |

|---|---|---|---|---|---|---|---|---|---|

| MIM | 43.8 | 42.4 | 34.2 | 76.2 | 63.1 | 76.3 | 71.6 | 58.2 | |

| MIM+QAA | 75.2 | 67.6 | 59.0 | 91.0 | 81.5 | 90.6 | 88.7 | 79.1 | |

| CIM | 88.0 | 82.2 | 77.5 | 96.0 | 92.6 | 93.0 | 93.3 | 88.9 | |

| CIM+QAA | 94.3 | 90.8 | 87.8 | 97.1 | 93.5 | 95.6 | 95.6 | 93.5 | |

| FIA | 77.5 | 76.9 | 63.5 | 95.2 | 89.1 | 91.2 | 91.6 | 83.6 | |

| FIA+QAA | 89.0 | 85.6 | 75.7 | 96.8 | 92.1 | 97.1 | 95.6 | 90.3 | |

| RPA | 76.8 | 77.3 | 63.8 | 95.0 | 89.1 | 92.5 | 91.8 | 83.8 | |

| RPA+QAA | 89.0 | 86.3 | 76.0 | 97.0 | 92.0 | 97.0 | 96.1 | 90.5 | |

| Admix | 54.7 | 51.5 | 40.8 | 85.4 | 73.9 | 83.9 | 80.9 | 67.3 | |

| Admix+QAA | 74.6 | 67.4 | 59.0 | 92.0 | 81.4 | 92.3 | 90.2 | 79.6 | |

| SSA | 51.4 | 54.1 | 42.7 | 85.7 | 70.7 | 80.2 | 76.3 | 65.9 | |

| Res-34 | SSA+QAA | 66.6 | 63.0 | 49.3 | 85.4 | 73.8 | 84.5 | 82.9 | 72.2 |

| MIM | 33.2 | 39.3 | 27.8 | 54.9 | 37.8 | 99.9* | 95.7 | 55.5 | |

| MIM+QAA | 39.5 | 46.5 | 31.6 | 65.2 | 44.0 | 100.0* | 97.6 | 60.6 | |

| CIM | 73.7 | 74.8 | 61.3 | 85.4 | 71.8 | 100.0* | 99.6 | 80.9 | |

| CIM+QAA | 79.5 | 78.4 | 66.4 | 90.4 | 76.2 | 100.0* | 99.4 | 84.3 | |

| FIA | 56.4 | 66.5 | 46.0 | 82.3 | 62.5 | 100.0* | 98.3 | 73.1 | |

| FIA+QAA | 65.3 | 72.6 | 52.5 | 86.7 | 70.3 | 99.9* | 99.2 | 78.1 | |

| RPA | 58.4 | 68.9 | 47.2 | 83.3 | 65.1 | 100.0* | 98.6 | 74.5 | |

| RPA+QAA | 66.9 | 74.6 | 55.2 | 88.2 | 71.7 | 100.0* | 99.6 | 79.5 | |

| Admix | 29.0 | 37.4 | 21.4 | 59.0 | 40.4 | 99.1* | 97.2 | 54.8 | |

| Admix+QAA | 30.3 | 38.6 | 22.9 | 60.5 | 40.5 | 100.0* | 98.7 | 55.9 | |

| SSA | 38.4 | 43.7 | 31.8 | 60.7 | 44.3 | 100.0* | 98.0 | 59.6 | |

| Vgg-16 | SSA+QAA | 39.3 | 44.3 | 32.3 | 61.4 | 44.6 | 100.0* | 98.5 | 60.1 |

| Substitute | Attack | Res-18 | Res-50 | Vgg-19 | Dense-121 | MobileNet-v2 | Average |

|---|---|---|---|---|---|---|---|

| PGD | 39.44 | 43.66 | 71.73 | 41.31 | 90.06 | 57.24 | |

| MIM | 49.54 | 54.14 | 73.19 | 51.04 | 86.69 | 62.92 | |

| CIM | 40.20 | 42.41 | 45.50 | 41.70 | 56.61 | 45.28 | |

| FIA | 40.30 | 43.44 | 68.49 | 41.89 | 86.04 | 56.03 | |

| RPA | 39.94 | 42.09 | 67.42 | 40.70 | 85.40 | 55.11 | |

| Res-20 | PGD+QAA | 61.99 | 64.77 | 87.62 | 63.09 | 96.15 | 74.72 |

| PGD | 26.20 | 28.85 | 52.80 | 27.17 | 78.96 | 42.80 | |

| MIM | 41.29 | 44.96 | 63.87 | 42.73 | 79.11 | 54.39 | |

| CIM | 37.63 | 40.44 | 42.76 | 40.74 | 53.66 | 43.05 | |

| FIA | 48.25 | 51.82 | 76.18 | 50.25 | 90.10 | 63.32 | |

| RPA | 47.29 | 50.81 | 75.92 | 49.36 | 89.72 | 62.62 | |

| Res-56 | PGD+QAA | 58.85 | 62.18 | 86.84 | 58.25 | 95.11 | 72.25 |

| Substitute | Attack | Adv-Inc-v3 | Adv-IncRes-v2 | Ens3-Inc-v3 | Ens4-Inc-v3 | Ens-IncRes-v2 | Average |

|---|---|---|---|---|---|---|---|

| MIM | 27.7 | 23.0 | 26.5 | 24.3 | 15.2 | 23.3 | |

| MIM+QAA | 41.6 | 32.1 | 40.2 | 34.4 | 24.7 | 34.6 | |

| CIM | 79.5 | 71.9 | 75.7 | 76.8 | 65.2 | 73.8 | |

| CIM+QAA | 88.9 | 81.4 | 85.0 | 83.6 | 74.1 | 82.6 | |

| FIA | 60.2 | 49.2 | 59.0 | 50.5 | 38.8 | 51.5 | |

| FIA+QAA | 75.1 | 63.0 | 71.3 | 63.6 | 50.6 | 64.7 | |

| RPA | 61.2 | 50.8 | 59.9 | 51.5 | 40.8 | 52.8 | |

| RPA+QAA | 76.6 | 65.9 | 72.1 | 64.6 | 52.2 | 66.3 | |

| Admix | 34.6 | 29.7 | 33.8 | 28.8 | 20.3 | 29.4 | |

| Admix+QAA | 54.3 | 43.5 | 50.9 | 42.7 | 33.3 | 44.9 | |

| SSA | 36.5 | 24.7 | 32.0 | 29.5 | 17.7 | 28.1 | |

| Res-34 | SSA+QAA | 46.2 | 35.1 | 40.8 | 38.0 | 23.9 | 36.8 |

| MIM | 19.1 | 13.5 | 18.3 | 15.7 | 9.8 | 15.3 | |

| MIM+QAA | 23.0 | 18.1 | 20.8 | 19.5 | 13.5 | 19.0 | |

| CIM | 55.5 | 46.0 | 53.4 | 49.0 | 38.7 | 48.5 | |

| CIM+QAA | 62.4 | 50.9 | 59.6 | 55.7 | 44.6 | 54.6 | |

| FIA | 35.5 | 24.5 | 31.3 | 26.8 | 20.0 | 27.6 | |

| FIA+QAA | 41.3 | 30.1 | 37.3 | 32.2 | 23.6 | 32.9 | |

| RPA | 36.8 | 25.9 | 33.9 | 28.5 | 21.8 | 29.4 | |

| RPA+QAA | 43.9 | 33.6 | 39.2 | 32.1 | 25.1 | 34.8 | |

| Admix | 16.1 | 11.1 | 15.4 | 13.8 | 8.8 | 13.0 | |

| Admix+QAA | 22.5 | 15.0 | 19.6 | 18.8 | 11.6 | 17.5 | |

| SSA | 21.7 | 14.8 | 20.0 | 18.4 | 11.1 | 17.2 | |

| Vgg-16 | SSA+QAA | 23.9 | 15.3 | 21.0 | 18.8 | 11.6 | 18.1 |

| Substitute | Attack | NIN | AlexNet | Dense-121 | Res-20 | MN-V2 | Average | ||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 8 | 1 | 8 | 1 | 8 | 4 | 8 | 4 | 2 | 8 | 4 | |||

| PGD | 35.98 | 47.86 | 58.78 | 47.03 | 69.91 | 46.63 | 74.75 | 72.94 | 62.48 | 61.72 | 37.73 | 55.98 | |

| MIM | 42.40 | 54.75 | 64.67 | 56.48 | 74.60 | 59.74 | 75.87 | 74.85 | 68.24 | 64.88 | 47.12 | 62.15 | |

| FIA | 46.20 | 57.96 | 60.90 | 57.12 | 74.63 | 74.76 | 76.31 | 74.76 | 69.61 | 63.13 | 46.03 | 63.76 | |

| CIM | 36.56 | 41.19 | 39.99 | 37.57 | 45.96 | 38.60 | 50.49 | 49.85 | 42.14 | 42.54 | 37.61 | 42.05 | |

| Res-20 | QAA+PGD | 53.14 | 73.96 | 81.56 | 73.87 | 89.28 | 74.21 | 92.57 | 92.14 | 90.34 | 84.62 | 63.34 | 79.00 |

| PGD | 30.24 | 36.29 | 39.84 | 34.02 | 53.64 | 32.96 | 59.81 | 56.16 | 49.55 | 46.88 | 27.81 | 42.47 | |

| MIM | 38.43 | 48.39 | 53.99 | 48.54 | 65.10 | 50.86 | 68.42 | 64.49 | 60.77 | 57.20 | 41.28 | 54.32 | |

| FIA | 48.74 | 62.09 | 66.83 | 61.29 | 79.03 | 61.40 | 79.37 | 78.40 | 75.88 | 69.90 | 52.03 | 66.81 | |

| CIM | 33.64 | 37.04 | 36.64 | 34.52 | 41.06 | 34.99 | 46.49 | 45.55 | 40.10 | 39.67 | 34.56 | 38.57 | |

| Res-56 | QAA+PGD | 53.96 | 77.37 | 82.49 | 79.79 | 91.46 | 77.24 | 93.53 | 94.70 | 91.31 | 86.20 | 66.96 | 81.36 |

| Substitute | Attack | Res-50 | Vgg-16 | MobileNet-v2 | Average | ||||||

|---|---|---|---|---|---|---|---|---|---|---|---|

| 8 | 4 | 2 | 8 | 4 | 2 | 8 | 4 | 3 | |||

| MIM | 76.6 | 77.1 | 87.6 | 76.0 | 78.0 | 86.9 | 75.5 | 85.6 | 94.0 | 81.9 | |

| MIM+QAA | 91.1 | 92.9 | 97.0 | 89.7 | 91.8 | 95.0 | 93.2 | 95.4 | 97.0 | 93.7 | |

| CIM | 91.6 | 96.8 | 96.8 | 89.1 | 94.0 | 97.5 | 90.1 | 94.4 | 96.2 | 94.1 | |

| CIM+QAA | 97.1 | 97.6 | 98.6 | 95.7 | 96.4 | 98.6 | 98.2 | 98.3 | 98.9 | 97.7 | |

| FIA | 95.1 | 95.1 | 96.3 | 91.0 | 92.2 | 97.0 | 95.0 | 94.4 | 96.9 | 94.8 | |

| FIA+QAA | 96.7 | 97.1 | 99.0 | 96.9 | 97.3 | 98.9 | 97.8 | 99.1 | 99.3 | 98.0 | |

| RPA | 94.8 | 95.0 | 96.7 | 92.5 | 93.6 | 97.1 | 93.6 | 94.7 | 97.1 | 95.0 | |

| RPA+QAA | 96.5 | 97.0 | 98.8 | 96.2 | 97.3 | 98.2 | 97.1 | 98.0 | 99.0 | 97.6 | |

| Admix | 55.7 | 55.3 | 67.5 | 55.5 | 57.4 | 71.1 | 60.3 | 67.9 | 84.6 | 63.9 | |

| Admix+QAA | 92.0 | 93.0 | 98.2 | 92.2 | 93.4 | 96.5 | 94.6 | 97.1 | 97.9 | 95.0 | |

| SSA | 86.7 | 85.5 | 89.3 | 80.3 | 81.9 | 88.2 | 86.2 | 86.7 | 93.3 | 86.5 | |

| Res-34 | SSA+QAA | 85.9 | 87.3 | 93.5 | 84.4 | 86.0 | 93.5 | 92.0 | 91.0 | 96.1 | 90.0 |

| MIM | 54.5 | 53.9 | 76.3 | 99.9* | 99.9* | 99.8* | 67.4 | 80.7 | 92.3 | 80.5 | |

| MIM+QAA | 65.6 | 68.5 | 84.2 | 100.0* | 99.9* | 99.9* | 78.1 | 86.0 | 95.4 | 86.4 | |

| CIM | 85.4 | 86.5 | 90.7 | 100.0* | 100.0* | 100.0* | 90.7 | 92.1 | 95.8 | 93.5 | |

| CIM+QAA | 89.8 | 91.0 | 94.8 | 100.0* | 100.0* | 100.0* | 94.8 | 94.9 | 97.0 | 95.8 | |

| FIA | 82.1 | 82.5 | 91.4 | 100.0* | 100.0* | 99.6* | 91.4 | 94..5 | 96.5 | 92.9 | |

| FIA+QAA | 86.6 | 87.3 | 93.8 | 99.9* | 99.9* | 99.9* | 94.2 | 96.2 | 97.4 | 95.0 | |

| RPA | 83.9 | 83.3 | 91.8 | 100.0* | 100.0* | 99.6* | 91.6 | 94.0 | 96.6 | 93.4 | |

| RPA+QAA | 88.4 | 89.2 | 95.0 | 100.0* | 100.0* | 99.9* | 95.0 | 96.7 | 97.7 | 95.8 | |

| Admix | 60.7 | 59.8 | 76.4 | 99.1* | 99.1* | 99.1* | 73.9 | 79.7 | 91.2 | 82.1 | |

| Admix+QAA | 61.0 | 61.9 | 79.3 | 100.0* | 100.0* | 100.0* | 77.1 | 82.8 | 92.4 | 83.8 | |

| SSA | 62.3 | 63.1 | 75.8 | 100.0* | 100.0* | 100.0* | 76.0 | 79.1 | 88.5 | 82.8 | |

| Vgg-16 | SSA+QAA | 61.6 | 64.6 | 77.6 | 100.0* | 100.0* | 100.0* | 78.2 | 81.9 | 91.5 | 83.9 |

IV-B Transferability of the QAA

Attacking Standardly Trained Models and Adversarially Trained Models. Adversarial training is the SOTA adversarial defense method, which is selected as the target for evaluating the effectiveness of the QAA. The results are reported in Tab. II and Tab. IV. We can see when the QAA is included, the baseline attack success rates against both standardly and adversarially trained target models are significantly enhanced. Specifically, QAA improves the attack success rates of MIM, CIM, FIA, RPA, Admix, and SSA on the Res-34 substitute model by 20.9%, 4.6%, 6.7%, 6.7%, 12,3%, 6.3% against standardly trained models; and 11.3%, 8.8%, 13.2%, 13.5%, 15.5%, 8.7% against adversarially trained models on average. Similar observations can be drawn from Tab. III concerning CIFAR-10. In Tab. III, we can see that some previously developed transfer-based attacks (CIM, FIA, and RPA) do not outperform the traditional MIM and PGD attacks on CIFAR-10. This suggests that the effectiveness of these advanced attacks that were originally claimed on ImageNet may not generalize well to other (simpler) datasets such as CIFAR-10. This finding is consistent with that in [35]. In comparison, the QAA largely outperforms the other transfer-based attacks on both CIFAR-10 and ImageNet, highlighting its good generalizability across different datasets.

Attacking QNNs with Different Bitwidths. Tab. VI and Tab. V report the attack success rates against QNNs with different architectures and bitwidths on ImageNet and CIFAR-10, respectively. The results show that the QAA achieves higher attack success rates than the baseline attacks, highlighting QAA’s capability of improving the adversarial transferability across QNNs with unknown architectures and quantization bitwidths. We can see that on the Vgg-16 ImageNet substitute model, the QAA improves less when combined with Admix and SSA. This is because, on Vgg-16, we apply the QAA implementation based on PTQ, which does not fine-tune the substitute QNN but only “self-ensemble” it, which contradicts the intuition of Admix and SSA (Admix and SSA resort to image transformation to achieve “self-ensembling”). However, for the Res-34 substitute model, even Admix and SSA improves substantially when combined with the QAA, highlighting the effectiveness of QAA fine-tuning.

Remarks on the above transfer-based attack results. Previous works [14, 15, 16] have investigated the attack transferability problem mainly from the defender’s perspective and agree that adversarial examples transfer poorly across QNNs with the same architecture but different bitwidth, and this paper draws a different conclusion from the black-box attack perspective that extremely-low bitwidth QNN substitute models can be a powerful tool in generating adversarial examples across unknown model architectures and bitwidths. The experimental results in this section verify the above claim and our findings complement those of previous works.

IV-C Ablation Study

| Substitute | Attack | Inc-v3 | Inc-v4 | IncRes-v2 | Res-50 | Res-152 | Vgg-16 | Vgg-19 | Average | Training | Storage |

|---|---|---|---|---|---|---|---|---|---|---|---|

| Res-34 | QAA | 94.3 | 90.8 | 87.8 | 97.1 | 93.5 | 95.6 | 95.6 | 93.5 | 1x | 1x |

| LGV | 93.1 | 90.4 | 86.0 | 98.4 | 94.8 | 97.9 | 97.1 | 94.0 | 10x | 40x | |

| LGV+QAA | 95.3 | 92.9 | 90.6 | 98.3 | 96.0 | 97.5 | 97.3 | 95.4 | 10x | 40x |

| Substitute | Attack | Adv-Inc-v3 | Adv-IncRes-v2 | Ens3-Inc-v3 | Ens4-Inc-v3 | Ens-IncRes-v2 | Average | Training | Storage |

|---|---|---|---|---|---|---|---|---|---|

| Res-34 | QAA | 88.9 | 81.4 | 85.0 | 83.6 | 74.1 | 82.6 | 1x | 1x |

| LGV | 86.8 | 80.4 | 86.0 | 84.6 | 74.0 | 82.4 | 10x | 40x | |

| LGV+QAA | 91.4 | 85.6 | 89.7 | 88.3 | 80.3 | 87.1 | 10x | 40x |

This section compares the QAA with other naive approaches to highlight its contribution. The dataset is ImageNet, the attack algorithm is MIM with -norm , and the substitute model architecture is ResNet-34 if not mentioned.

Comparing QAA with QNN-based Attacks. We compare the QAA with QNN-based attacks to verify that the QAA overcomes the shortcomings of substitute models with single bitwidths. For the QNN-based attacks, we use pre-trained QNNs (5, 4, 3, 2-bit) without any fine-tuning or other modifications as the substitute model. The results are presented in Fig. 5a. We can see that the QAA outperforms every compared baseline attack on every target model, verifying that the QAA overcomes the limitations of transfer-based attacks with single-bitwidth substitute models.

Comparing the QAA with ensemble attacks. To enhance the attack transferability against target QNNs, a naive and straightforward approach is to ensemble substitute QNNs with different bitwidths, which is denoted by ensemble attacks in this paper. Because QAA uses two quantization states (32-bit and 2-bit settings) in Eqn. 7, we ensemble 32-bit and 2-bit versions of ResNet-34 for a fair comparison. We have tried three commonly adopted ensemble techniques: ensembling at the logits layer (logits), ensembling at the softmax layer (softmax), and randomly sampling one model for each attack iteration (sampling). The results are presented in Fig. 5b. We can see that QAA outperforms every ensemble attack except on ResNet-50, on which the attack success rate of QAA is slightly lower than that of the logits attack. Please note that the substitute model used in the experiment is also ResNet, which means that while QAA may not be better when attacking ResNet-50, it transfers better on totally different target architectures. Another advantage of the QAA is that its training, inference, and storage overheads are the same as those of a single QNN substitute model. In contrast, ensemble attacks multiply the computational overhead because they rely on training and running multiple substitute models. These experimental results demonstrate that the QAA provides further improvements and contributions compared to naive ensemble attacks. We also compare the QAA with the ensemble attack LGV [34] against standardly trained and adversarially trained target models in Tab. VII and Tab. VIII, respectively. We can see that, compared to LGV, QAA achieves comparable transfer attack success rates with only 1/10 of the training overhead and 1/40 of the storage overhead. In addition, the QAA can also be combined with LGV to further enhance the attack transferability without increasing the computational overhead. The detailed description of LGV and the hyper-parameter settings of this experiment can be found in the Appendix.

Comparing the QAA with other Training Objectives. We compare the QAA with four other naive training objectives to highlight the contribution of the QAA. The hyper-parameters of the compared methods are the same as those of the QAA for a fair comparison. The compared methods include A. fine-tuning QNNs with only the original low bitwidth objective; B. fine-tuning 2-bit QNNs with 32-bit objective; C. fine-tuning 32-bit DNNs with Eqn. 7; and D. fine-tuning QNNs with 32-bit activation. The results in Fig. 5c show that each training method used in this experiment fails to achieve the same attack success rates as those of the QAA.

IV-D Explaining the QAA

This section explains why the QAA is effective from various perspectives. First, we take a closer look at the QAA to determine how the different states of QAA contribute to the final attack performance. Second, we explain the effectiveness of the QAA from the view of the loss landscape.

| Substitute | Bitwidth | Inc-v3 | Inc-v4 | IncRes-v2 | Res-50 | Res-152 | Vgg-16 | Vgg-19 |

|---|---|---|---|---|---|---|---|---|

| 32-bit | 40.8 | 42.3 | 30.4 | 74.4 | 56.7 | 77.6 | 75.4 | |

| 32-bit QAA | 48.7 | 46.1 | 37.9 | 72.1 | 54.3 | 79.1 | 77.0 | |

| 2-bit | 47.8 | 46.6 | 34.6 | 73.6 | 53.1 | 81.4 | 80.7 | |

| 2-bit QAA | 53.6 | 50.5 | 37.8 | 76.9 | 56.2 | 85.8 | 81.7 | |

| Res-34 | QAA | 66.0 | 59.1 | 45.4 | 85.3 | 67.5 | 91.2 | 87.6 |

| 32-bit | 43.8 | 42.4 | 34.2 | 76.2 | 63.1 | 76.3 | 71.6 | |

| 32-bit QAA | 59.0 | 54.7 | 46.2 | 80.7 | 64.9 | 80.1 | 79.0 | |

| 2-bit | 56.5 | 52.2 | 42.6 | 79.4 | 64.4 | 82.2 | 80.6 | |

| 2-bit QAA | 64.7 | 59.0 | 50.5 | 83.7 | 70.4 | 85.0 | 83.7 | |

| Res-18 | QAA | 75.2 | 67.6 | 59.0 | 91.0 | 81.5 | 90.6 | 88.7 |

A closer look at the QAA. As mentioned in Sec. III, the QAA substitute model implements multiple quantization states when generating adversarial examples, including both the 32-bit activation state and the 2-bit activation state. It is natural to ask the following two questions. First, does each quantization state of the QAA substitute model improve compared to QNNs with the same bitwidth? Second, does QAA improve upon each state it performs? To answer these two questions, we conduct transfer-based attack experiments with standardly trained models on ImageNet, and the attack algorithm is MIM [20] with a -norm budget of . The results are shown in Tab. IX. We can see that a single QAA quantization state improves upon single QNN substitute models (e.g., 2-bit QAA is better than 2-bit QNN), and the attack performance of the QAA is even greater than that of the QAA with a single state. The results are consistent across different target models and substitute models. Now we can conclude that the answer to the above two questions is yes. From this experiment, we can see that QAA not only improves the attack transferability of substitute models with single quantization states but also improves the transferability over these quantization states. Please note that these improvements come with a trivial computational cost, because the adversary only needs to fine-tune a pre-trained QNN for just one epoch to obtain a QAA substitute model, and the inference cost of the QAA model is the same as that of a single QNN model.

Explanation from the loss landscape view. As mentioned in Section III, the QAA improves its transferability by overcoming quantization shift and gradient misalignment. This section provides a different explanation inspired by [34]. The transferability between the two models can be explained by loss sharpness in both weight space and feature space. As stated in [34], substitute models with flatter loss landscape in the weight space (in other words, lower sharpness in the weight space) enable the generation of adversarial examples in the flatter region of the loss landscape in the feature space. Adversarial examples in the flatter region of the feature space have better transferability. Please see Fig. 6, which is adapted from [34, 18] for reference and illustrates the loss landscape in the feature space. The Y-axis indicates the loss values, and the X-axis denotes the input features. The transfer gap of the adversarial examples in the flat region is significantly lower than that in the sharp region. The blue line marks the loss landscape of the target model, and the red line marks the loss landscape of the substitute model, which is slightly different from that of the target model. Adversarial examples falling on the flat region are more robust to slight landscape variations caused by the different architecture and quantization bitwidths between the substitute and target models; thus, they have better transferability. We believe that the QAA can promote the convergence of adversarial examples to flat regions. The following experimental results verify the above claim.

Similar to [34], we calculate the loss sharpness in both the weight space and the feature space. The weight space sharpness of the substitute model is defined as

| (10) |

where is a constraint set with magnitude of . As has been proven by previous work [18], Eqn. 10 is a good approximation of the magnitude of the eigenvalues , and is used to characterize the sharpness of a model. The feature space sharpness of the substitute model around the adversarial example is defined as

| (11) |

where is a constraint set with magnitude of . Intuitively, Eqn. 11 calculates the loss variation within a small ball around , and a lower sharpness suggests a flatter loss landscape in the feature space. We solve the above optimization problem with a projected gradient algorithm for 20 iterations and report the results with two values of , in Tab. X. Compared to transfer attacks using full-precision substitute models, the QAA consistently achieves lower sharpness values in both the weight space and the feature space, verifying that the QAA promotes adversarial examples to fall in the flat region and thus enhances the attack transferability.

| Substitute | Bitwidth | Sharpness (weight) | Sharpness (feature) | ||

|---|---|---|---|---|---|

| =5e-4 | =1e-3 | =5e-4 | =1e-3 | ||

| 32 | 60.8154 | 125.7775 | 71.8195 | 146.1035 | |

| 2 | 24.3069 | 52.1593 | 25.6317 | 60.5207 | |

| Res-34 | QAA | 25.3686 | 51.9040 | 27.4976 | 58.6584 |

| 32 | 76.9116 | 172.1912 | 91.3237 | 195.1994 | |

| 2 | 38.7024 | 86.0094 | 42.7450 | 101.1433 | |

| Vgg-16 | QAA | 30.8196 | 69.5630 | 25.6498 | 58.3942 |

V Limitations and Future Work

Despite the above achievements, this work still exhibits some limitations that deserve to be solved by future work.

Mixed precision substitute model search. Limited by the available computational resources, the mixed precision design of the QAA substitute model is straightforward. Although we perform ablation studies, it is unknown whether an even better mixed-precision substitute model can be obtained. Answering this question requires an exhaustive search process with many computational resources, which will be our future work.

Experiments on more models. We test the effectiveness of the QAA on ResNets and Vggs with both the QAT and PTQ methods. Limited by the lack of available pre-trained QNN models (most of the previous model quantization works only implemented their methods on ResNets), we cannot provide experimental results for additional substitute models.

More explanations of the attack transferability. This paper explains the effectiveness of the QAA from three perspectives: quantization shift, gradient misalignment, and loss sharpness. Although these metrics are convincing with the support of previous works, we currently cannot provide more rigorous and insightful explanations because of the lack of explanatory works on DNNs and adversarial transferability. We leave this gap for future work.

VI Potential Social Impact

Positive: Strong transferability can benefit black-box applications of adversarial images for social goods, such as protecting user privacy [56, 57, 58, 59, 60, 61, 62]. In addition, the proposed approach can also motivate the community to design stronger defenses given our findings that even simple attacks can generate highly transferable adversarial images.

Negative: It is possible that our method may be susceptible to misuse by malicious entities aiming to compromise legitimate systems. However, we firmly believe that the substantial value our paper offers to the research community far surpasses any potential utility it might extend to malevolent actors.

VII Conclusion

In this paper, we propose a quantization aware attack (QAA) to mitigate the poor transferability of adversarial examples across target QNNs with different quantization bitwidths caused by quantization shift and gradient misalignment problems. Extensive experimental results demonstrate the effectiveness of the QAA. The QAA lowers the barriers to attacking target QNNs with unknown quantization bitwidths and exposes the security risks of deploying QNNs in realistic scenarios. In the end, we explain the effectiveness of the QAA from the view of the loss landscape. Our findings remind the adversarial learning research community to pay attention to the vulnerability of QNNs but also raise new challenges for secure QNN design.

VIII Acknowledgement

This research is supported by the National Key Research and Development Program of China (2020AAA0107702), the National Natural Science Foundation of China (62376210, 62161160337, 62132011, U21B2018, U20A20177, U20B2049, 62206217, and 62006181), the Shaanxi Province Key Industry Innovation Program (2023-ZDLGY-38 and 2021ZDLGY01-02), the China Postdoctoral Science Foundation (2022M722530 and 2023T160512), and the Fundamental Research Funds for the Central Universities under grant (xzy012022082, xtr052023004, and xtr022019002).

-A Settings for the Comparison with LGV

LGV [34] is a SOTA transfer-based attack that fine-tunes and ensembles the substitute model to enhance the attack transferability. We implement LGV, QAA, and LGV+QAA on Res-34 with the ImageNet dataset, and the attack algorithm is CIM with perturbation budget .

The LGV re-implementation follows the original paper, which fine-tunes the pre-trained Res-34 for 10 epochs with a constant learning rate of 0.05 and collects 40 checkpoints for ensembling. In the attack generation stage, the collected checkpoints are randomly and non-repeatedly sampled. The training overhead of LGV is 10 times greater than that of the QAA, and the storage overhead is 40 times greater.

For LGV+QAA, we utilize either the QAA checkpoint or the LGV checkpoints in each attack iteration. To find the optimal sampling ratio, we perform multiple trials as shown in Fig. 7 and set the sampling ratio to 1:1.

References

- [1] D. Liu, H. Kong, X. Luo, W. Liu, and R. Subramaniam, “Bringing ai to edge: From deep learning’s perspective,” Neurocomputing, vol. 485, pp. 297–320, 2022.

- [2] C. Szegedy, W. Zaremba, I. Sutskever, J. Bruna, D. Erhan, I. Goodfellow, and R. Fergus, “Intriguing properties of neural networks,” in Proc. ICLR, 2014, pp. 1–10.

- [3] H. Guo, Y. Wang, N. Ivanov, L. Xiao, and Q. Yan, “Specpatch: Human-in-the-loop adversarial audio spectrogram patch attack on speech recognition,” in Proc. ACM CCS, Nov. 2022, pp. 1353–1366.

- [4] R. Pang, H. Shen, X. Zhang, S. Ji, Y. Vorobeychik, X. Luo, A. Liu, and T. Wang, “A tale of evil twins: Adversarial inputs versus poisoned models,” in Proc. ACM CCS, Nov. 2022, pp. 85–99.

- [5] N. Papernot, P. McDaniel, S. Jha, M. Fredrikson, Z. B. Celik, and A. Swami, “The limitations of deep learning in adversarial settings,” in Proc. EuroSP, Mar. 2016, pp. 372–387.

- [6] S. Calzavara, C. Lucchese, and G. Tolomei, “Adversarial training of gradient-boosted decision trees,” in Proc. ACM CIKM, Nov. 2019, pp. 2429–2432.

- [7] H. Ma, K. Xu, X. Jiang, Z. Zhao, and T. Sun, “Transferable black-box attack against face recognition with spatial mutable adversarial patch,” IEEE Trans. Inf. Forensics Security, 2023.

- [8] Z. Zhao, Z. Liu, and M. Larson, “Towards large yet imperceptible adversarial image perturbations with perceptual color distance,” in CVPR, 2020.

- [9] J. Dong, Y. Wang, J. Lai, and X. Xie, “Restricted black-box adversarial attack against deepfake face swapping,” IEEE Trans. Inf. Forensics Security, 2023.

- [10] Q. Zhu, Y. Zhou, L. Fei, D. Zhang, and D. Zhang, “Multi-spectral palmprints joint attack and defense with adversarial examples learning,” IEEE Trans. Inf. Forensics Security, vol. 18, pp. 1789–1799, 2023.

- [11] Y. Zhong and W. Deng, “Towards transferable adversarial attack against deep face recognition,” IEEE Trans. Inf. Forensics Security, vol. 16, pp. 1452–1466, 2020.

- [12] Z. Wang, H. Yang, Y. Feng, P. Sun, H. Guo, Z. Zhang, and K. Ren, “Towards transferable targeted adversarial examples,” in Proc. CVPR, 2023, pp. 20 534–20 543.

- [13] N. Papernot, P. McDaniel, I. Goodfellow, S. Jha, Z. B. Celik, and A. Swami, “Practical black-box attacks against machine learning,” in Proc. ACM CCS, 2017, pp. 506–519.

- [14] R. Bernhard, P.-A. Moellic, and J.-M. Dutertre, “Impact of low-bitwidth quantization on the adversarial robustness for embedded neural networks,” in IEEE CW, 2019, pp. 308–315.

- [15] S. Sen, B. Ravindran, and A. Raghunathan, “Empir: Ensembles of mixed precision deep networks for increased robustness against adversarial attacks,” in Proc. ICLR, 2020, pp. 1–12.

- [16] Y. Fu, Q. Yu, M. Li, V. Chandra, and Y. Lin, “Double-win quant: Aggressively winning robustness of quantized deep neural networks via random precision training and inference,” in Proc. ICML, 2021, pp. 3492–3504.

- [17] Y. Bengio, N. Léonard, and A. Courville, “Estimating or propagating gradients through stochastic neurons for conditional computation,” arXiv preprint arXiv:1308.3432, 2013.

- [18] N. S. Keskar, D. Mudigere, J. Nocedal, M. Smelyanskiy, and P. T. P. Tang, “On large-batch training for deep learning: Generalization gap and sharp minima,” in Proc. ICLR, 2017, pp. 1–16.

- [19] Z. Zhao, H. Zhang, R. Li, R. Sicre, L. Amsaleg, M. Backes, Q. Li, and C. Shen, “Revisiting transferable adversarial image examples: Attack categorization, evaluation guidelines, and new insights,” arXiv preprint arXiv:2310.11850, 2023.

- [20] Y. Dong, F. Liao, T. Pang, H. Su, J. Zhu, X. Hu, and J. Li, “Boosting adversarial attacks with momentum,” in Proc. CVPR, 2018, pp. 9185–9193.

- [21] J. Lin, C. Song, K. He, L. Wang, and J. E. Hopcroft, “Nesterov accelerated gradient and scale invariance for adversarial attacks,” in Proc. ICLR, 2020.

- [22] X. Wang and K. He, “Enhancing the transferability of adversarial attacks through variance tuning,” in Proc. CVPR, 2021, pp. 1924–1933.

- [23] Y. Xiong, J. Lin, M. Zhang, J. E. Hopcroft, and K. He, “Stochastic variance reduced ensemble adversarial attack for boosting the adversarial transferability,” in Proc. CVPR, 2022, pp. 14 983–14 992.

- [24] J. Weng, Z. Luo, S. Li, N. Sebe, and Z. Zhong, “Logit margin matters: Improving transferable targeted adversarial attack by logit calibration,” IEEE Trans. Inf. Forensics Security, 2023.

- [25] C. Xie, Z. Zhang, Y. Zhou, S. Bai, J. Wang, Z. Ren, and A. L. Yuille, “Improving transferability of adversarial examples with input diversity,” in Proc. CVPR, 2019, pp. 2730–2739.

- [26] X. Wang, X. He, J. Wang, and K. He, “Admix: Enhancing the transferability of adversarial attacks,” in Proc. ICCV, 2021, pp. 16 158–16 167.

- [27] Y. Long, Q. Zhang, B. Zeng, L. Gao, X. Liu, J. Zhang, and J. Song, “Frequency domain model augmentation for adversarial attack,” in Proc. ECCV, 2022, pp. 549–566.

- [28] Q. Huang, I. Katsman, H. He, Z. Gu, S. Belongie, and S.-N. Lim, “Enhancing adversarial example transferability with an intermediate level attack,” in Proc. CVPR, 2019, pp. 4733–4742.

- [29] Z. Wang, H. Guo, Z. Zhang, W. Liu, Z. Qin, and K. Ren, “Feature importance-aware transferable adversarial attacks,” in Proc. ICCV, 2021, pp. 7639–7648.

- [30] Z. Zhao, Z. Liu, and M. Larson, “On success and simplicity: A second look at transferable targeted attacks,” vol. 34, pp. 6115–6128, 2021.

- [31] J. Zhang, W. Wu, J.-t. Huang, Y. Huang, W. Wang, Y. Su, and M. R. Lyu, “Improving adversarial transferability via neuron attribution-based attacks,” in Proc. CVPR, 2022, pp. 14 993–15 002.

- [32] M. Salzmann et al., “Learning transferable adversarial perturbations,” Advances in Neural Information Processing Systems, vol. 34, pp. 13 950–13 962, 2021.

- [33] D. Wu, Y. Wang, S.-T. Xia, J. Bailey, and X. Ma, “Skip connections matter: On the transferability of adversarial examples generated with resnets,” in Proc. ICLR, 2020.

- [34] M. Gubri, M. Cordy, M. Papadakis, Y. L. Traon, and K. Sen, “Lgv: Boosting adversarial example transferability from large geometric vicinity,” in Proc. ECCV, 2022, pp. 603–618.

- [35] Y. Zhu, Y. Chen, X. Li, K. Chen, Y. He, X. Tian, B. Zheng, Y. Chen, and Q. Huang, “Toward understanding and boosting adversarial transferability from a distribution perspective,” IEEE Transactions on Image Processing, vol. 31, pp. 6487–6501, 2022.

- [36] K. Gupta and T. Ajanthan, “Improved gradient-based adversarial attacks for quantized networks,” in Proc. AAAI, vol. 36, no. 6, 2022, pp. 6810–6818.

- [37] C. Song, E. Fallon, and H. Li, “Improving adversarial robustness in weight-quantized neural networks,” arXiv preprint arXiv:2012.14965, 2020.

- [38] B. Jacob, S. Kligys, B. Chen, M. Zhu, M. Tang, A. Howard, H. Adam, and D. Kalenichenko, “Quantization and training of neural networks for efficient integer-arithmetic-only inference,” in Proc. CVPR, 2018, pp. 2704–2713.

- [39] I. Hubara, M. Courbariaux, D. Soudry, R. El-Yaniv, and Y. Bengio, “Binarized neural networks,” in Proc. NeurIPS, vol. 29, 2016.

- [40] M. Rastegari, V. Ordonez, J. Redmon, and A. Farhadi, “Xnor-net: Imagenet classification using binary convolutional neural networks,” in Proc. ECCV, 2016, pp. 525–542.

- [41] S. Zhou, Y. Wu, Z. Ni, X. Zhou, H. Wen, and Y. Zou, “Dorefa-net: Training low bitwidth convolutional neural networks with low bitwidth gradients,” arXiv preprint arXiv:1606.06160, 2016.

- [42] Y. Li, X. Dong, and W. Wang, “Additive powers-of-two quantization: An efficient non-uniform discretization for neural networks,” in Proc. ICLR, 2020, pp. 1–15.

- [43] M. Nagel, R. A. Amjad, M. Van Baalen, C. Louizos, and T. Blankevoort, “Up or down? adaptive rounding for post-training quantization,” in Proc. ICML, 2020, pp. 7197–7206.

- [44] Y. Li, R. Gong, X. Tan, Y. Yang, P. Hu, Q. Zhang, F. Yu, W. Wang, and S. Gu, “Brecq: Pushing the limit of post-training quantization by block reconstruction,” in Proc. ICLR, 2021.

- [45] I. Hubara, Y. Nahshan, Y. Hanani, R. Banner, and D. Soudry, “Accurate post training quantization with small calibration sets,” in Proc. ICML, 2021, pp. 4466–4475.

- [46] X. Wei, R. Gong, Y. Li, X. Liu, and F. Yu, “Qdrop: Randomly dropping quantization for extremely low-bit post-training quantization,” in Proc. ICLR, 2022, pp. 1–19.

- [47] Q. Jin, L. Yang, and Z. Liao, “Adabits: Neural network quantization with adaptive bit-widths,” in Proc. CVPR, 2020, pp. 2146–2156.

- [48] L. Guerra, B. Zhuang, I. Reid, and T. Drummond, “Switchable precision neural networks,” arXiv preprint arXiv:2002.02815, 2020.

- [49] J. Yoon, G. Park, W. Jeong, and S. J. Hwang, “Bitwidth heterogeneous federated learning with progressive weight dequantization,” in Proc. ICML, 2022, pp. 25 552–25 565.

- [50] H. Wang, A. Zhang, S. Zheng, X. Shi, M. Li, and Z. Wang, “Removing batch normalization boosts adversarial training,” in Proc. ICML, 2022, pp. 23 433–23 445.

- [51] A. Demontis, M. Melis, M. Pintor, J. Matthew, B. Biggio, O. Alina, N.-R. Cristina, F. Roli et al., “Why do adversarial attacks transfer? explaining transferability of evasion and poisoning attacks,” in Proc. 28th USENIX Secur. Symp., 2019, pp. 321–338.

- [52] J. B. Kruskal and M. Wish, Multidimensional scaling. Sage, 1978, no. 11.

- [53] J. Deng, W. Dong, R. Socher, L.-J. Li, K. Li, and L. Fei-Fei, “Imagenet: A large-scale hierarchical image database,” in Proc. CVPR, 2009, pp. 248–255.

- [54] A. Krizhevsky, G. Hinton et al., “Learning multiple layers of features from tiny images,” Tech. Rep., 2009. [Online]. Available: https://www.cs.toronto.edu/~kriz/cifar.html.

- [55] Y. Zhang, Y. Tan, T. Chen, X. Liu, Q. Zhang, and Y. Li, “Enhancing the transferability of adversarial examples with random patch,” in Proc. IJCAI, 2022.

- [56] V. Cherepanova, M. Goldblum, H. Foley, S. Duan, J. P. Dickerson, G. Taylor, and T. Goldstein, “Lowkey: Leveraging adversarial attacks to protect social media users from facial recognition,” in Proc. ICLR, 2021.

- [57] A. Rajabi, R. B. Bobba, M. Rosulek, C. Wright, and W.-c. Feng, “On the (im) practicality of adversarial perturbation for image privacy,” in Proc. on Privacy Enhancing Technologies, 2021.

- [58] Z. Zhao, Z. Liu, and M. Larson, “Adversarial image color transformations in explicit color filter space,” IEEE Trans. Inf. Forensics Security, 2023.

- [59] Z. Liu, Z. Zhao, and M. Larson, “Who’s afraid of adversarial queries? the impact of image modifications on content-based image retrieval,” in Proc. ICMR, 2019, pp. 306–314.

- [60] S. Joon Oh, M. Fritz, and B. Schiele, “Adversarial image perturbation for privacy protection–a game theory perspective,” in Proc. ICCV, 2017, pp. 1482–1491.

- [61] M. Larson, Z. Liu, S. Brugman, and Z. Zhao, “Pixel privacy. increasing image appeal while blocking automatic inference of sensitive scene information,” 2018.

- [62] Z. Liu, Z. Zhao, and M. Larson, “Pivoting image-based profiles toward privacy: Inhibiting malicious profiling with adversarial additions,” in Proceedings of the 29th ACM Conference on User Modeling, Adaptation and Personalization, 2021, pp. 267–273.

Appendix A Biography Section

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/f27c2d89-1daa-4076-889b-b2bdfc71f9cb/Yulong_Yang.jpg) |

Yulong Yang received the B.Eng. degree in Computer Science and Engineering from Xi’an Jiaotong University in 2022, where he is currently pursuing the Ph.D. degree in Cyberspace Security with the School of Cyber Science and Engineering. His current research interests include adversarial machine learning and model compression. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/f27c2d89-1daa-4076-889b-b2bdfc71f9cb/Chenhao_Lin.jpg) |

Chenhao Lin (Member, IEEE) received the B.Eng. degree in automation from Xi’an Jiaotong University in 2011, the M.Sc. degree in electrical engineering from Columbia University, in 2013, and the Ph.D. degree from The Hong Kong Polytechnic University, in 2018. He is currently a Research Fellow at the Xi’an Jiaotong University of China. His research interests are in artificial intelligence security, identity authentication, biometrics, adversarial attack and robustness, and pattern recognition. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/f27c2d89-1daa-4076-889b-b2bdfc71f9cb/Qian_Li.jpg) |

Qian Li (Member, IEEE) received the Ph.D. degree in computer science and technology from Xi’an Jiaotong University, China, in 2021. He is currently an Assistant Professor with the School of Cyber Science and Engineering, Xi’an Jiaotong University. His research interests include adversarial deep learning, artificial intelligence security, and optimization of theory. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/f27c2d89-1daa-4076-889b-b2bdfc71f9cb/Zhengyu_Zhao.jpg) |

Zhengyu Zhao (Member, IEEE) received the Ph.D. degree from Radboud University, The Netherlands. He is currently an Associate Professor at Xi’an Jiaotong University, China. His general research interests include machine learning security and privacy. Most of his work has concentrated on security (e.g., adversarial examples and data poisoning) and privacy (e.g., membership inference) attacks against deep learning-based computer vision systems. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/f27c2d89-1daa-4076-889b-b2bdfc71f9cb/Haoran_Fan.jpg) |

Haoran Fan received the B.Eng. degree in Software Engineering from Dalian University of Technology in 2023 and is currently pursuing the M.Eng. degree in Software Engineering with the School of Software Engineering, Xi’an Jiaotong University. His current research interest is adversarial machine learning. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/f27c2d89-1daa-4076-889b-b2bdfc71f9cb/Dawei_Zhou.png) |

Dawei Zhou received the B.Eng. degree in telecommunications engineering from Xidian University, Xian, China, in 2019, where he is currently pursuing the Ph.D. degree in information and communication engineering with the School of Telecommunications Engineering. His current research interests include computer vision and adversarial machine learning. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/f27c2d89-1daa-4076-889b-b2bdfc71f9cb/Nannan_Wang.png) |

Nannan Wang (Member, IEEE) received the B.Sc. degree in information and computation science from the Xi’an University of Posts and Telecommunications in 2009 and the Ph.D. degree in information and telecommunications engineering from Xidian University in 2015. He is currently a Professor with the State Key Laboratory of Integrated Services Networks, Xidian University. His current research interests include computer vision and machine learning. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/f27c2d89-1daa-4076-889b-b2bdfc71f9cb/Tongliang_Liu.png) |

Tongliang Liu (Senior Member, IEEE) is currently a Lecturer and Director of the Trustworthy Machine Learning Lab with the School of Computer Science at the University of Sydney. He is broadly interested in the fields of trustworthy machine learning and its interdisciplinary applications, with a particular emphasis on learning with noisy labels, transfer learning, adversarial learning, unsupervised learning, and statistical deep learning theory. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/f27c2d89-1daa-4076-889b-b2bdfc71f9cb/Chao_Shen.jpg) |

Chao Shen (Senior Member, IEEE) received the B.S. degree in Automation from Xi’an Jiaotong University, China in 2007; and the Ph.D. degree in Control Theory and Control Engineering from Xi’an Jiaotong University, China in 2014. He is currently a Professor in the Faculty of Electronic and Information Engineering, Xi’an Jiaotong University of China. His current research interests include AI Security, insider/intrusion detection, behavioral biometrics, and measurement and experimental methodology. |