Quantitative Understanding of VAE as a Non-linearly Scaled Isometric Embedding

Abstract

Variational autoencoder (VAE) estimates the posterior parameters (mean and variance) of latent variables corresponding to each input data. While it is used for many tasks, the transparency of the model is still an underlying issue. This paper provides a quantitative understanding of VAE property through the differential geometric and information-theoretic interpretations of VAE. According to the Rate-distortion theory, the optimal transform coding is achieved by using an orthonormal transform with PCA basis where the transform space is isometric to the input. Considering the analogy of transform coding to VAE, we clarify theoretically and experimentally that VAE can be mapped to an implicit isometric embedding with a scale factor derived from the posterior parameter. As a result, we can estimate the data probabilities in the input space from the prior, loss metrics, and corresponding posterior parameters, and further, the quantitative importance of each latent variable can be evaluated like the eigenvalue of PCA.

1 Introduction

Variational autoencoder (VAE) (Kingma & Welling, 2014) is one of the most successful generative models, estimating posterior parameters of latent variables for each input data. In VAE, the latent representation is obtained by maximizing an evidence lower bound (ELBO). A number of studies have attempted to characterize the theoretical property of VAE. Indeed, there still are unsolved questions, e.g., what is the meaning of the latent variable VAE obtained, what represents in -VAE (Higgins et al., 2017), whether ELBO converges to an appropriate value, and so on. Alemi et al. (2018) introduced the RD trade-off based on the information-theoretic framework to analyse -VAE. However, they did not clarify what VAE captures after optimization. Dai et al. (2018) showed VAE restricted as a linear transform can be considered as a robust principal component analysis (PCA). But, their model has a limitation for the analysis on each latent variable basis because of the linearity assumption. Rolínek et al. (2019) showed the Jacobian matrix of VAE is orthogonal, which seems to make latent variables disentangled implicitly. However, they do not uncover the impact of each latent variable on the input data quantitatively because they simplify KL divergence as a constant. Locatello et al. (2019) also showed the unsupervised learning of disentangled representations fundamentally requires inductive biases on both the metric and data. Yet, they also do not uncover the quantitative property of disentangled representations which is obtained under the given inductive biases. Kumar & Poole (2020) connected the VAE objective with the Riemannian metric and proposed new deterministic regularized objectives. However, they still did not uncover the quantitative property of VAE after optimizing their objectives.

These problems are essentially due to the lack of a clear formulation of the quantitative relationship between the input data and the latent variables. To overcome this point, Kato et al. (2020) propose an isometric autoencoder as a non-VAE model. In the isometric embedding (Han & Hong, 2006), the distance between arbitrary two input points is retained in the embedding space. With isometric embedding, the quantitative relationship between the input data and the latent variables is tractable. Our intuition is that if we could also map VAE to an isometric autoencoder, the behavior of VAE latent variables will become clear. Thus, the challenge of this paper is to resolve these essential problems by utilizing the view point of isometric embedding.

1. First of all, we show that VAE obtains an implicit isometric embedding of the support of the input distribution as its latent space. That is, the input variable is embedded through the encoder in a low dimensional latent space in which the distance in the given metric between two points in the input space is preserved. Surprisingly, this characterization resolves most unsolved problems of VAE such as what we have enumerated above. This implicit isometric embedding is derived as a non-linear scaling of VAE embedding.

2. More concretely, we will show the following issues via the isometric embedding characterization theoretically:

(a) Role of in -VAE: controls each dimensional posterior variance of isometric embedding as a constant .

(b) Estimation of input distribution: the input distribution can be quantitatively estimated from the distribution of implicit isometric embedding because of the constant Jacobian determinant between the input and implicit isometric spaces.

(c) Disentanglement: If the manifold has a separate latent variable in the given metric by nature, the implicit isometric embedding captures such disentangled features as a result of minimizing the entropy.

(d) Rate-distortion (RD) optimal: VAE can be considered as a rate-distortion optimal encoder formulated by RD theory (Berger, 1971).

3. Finally, we justify our theoretical findings through several numerical experiments. We observe the estimated distribution is proportional to the input distribution in the toy dataset. By utilizing this property, the performance of the anomaly detection for real data is comparable to state-of-the-art studies. We also observe that the variance of each dimensional component in the isometric embedding shows the importance of each disentangled property like PCA.

2 Variational autoencoder

In VAE, ELBO is maximized instead of maximizing the log-likelihood directly. Let be a point in a dataset. The original VAE model consists of a latent variable with fixed prior , a parametric encoder , and a parametric decoder . In the encoder, is provided by estimating parameters and . Let be a local cost at data . Then, ELBO is described by

| (1) |

In , the second term is a Kullback–Leibler (KL) divergence. Let , , and be -th dimensional values of , , and KL divergence, respectively. Then is derived as:

| (2) |

The first term is called the reconstruction loss. Instead directly estimate in training, is derived and is evaluated as reconstruction loss, where denotes the predetermined conditional distribution. In the case Gaussian and Bernoulli distributions are used as , becomes the sum square error (SSE) and binary cross-entropy (BCE), respectively. In training -VAE (Higgins et al., 2017), the next objective is used instead of Eq. 1, where is a parameter to control the trade-off.

| (3) |

3 Isometric embedding

Isometric embedding (Han & Hong, 2006) is a smooth embedding from to () on a Riemannian manifold where the distances between arbitrary two points are equivalent in both the input and embedding spaces. Assume that and belong to a Riemannian metric space with a metric tensor and a Euclidean space, respectively. Then, the isometric embedding from to satisfies the following condition for all inputs and dimensions as shown in Kato et al. (2020), where denotes Kronecker delta:

| (4) |

The isometric embedding has several preferable properties. First of all, the probability density of input data at the given metric is preserved in the isometric embedding space. Let and be distributions in their respective metric spaces. denotes , i.e., an absolute value of the Jacobian determinant. Since is 1 from orthonormality, the following equation holds:

| (5) |

Secondly, the entropies in both spaces are also equivalent. Let and be sets of and , respectively. and denotes the entropies of and in each metric spaces. Then and are equivalent as follows:

| (6) | |||||

Thus, the isometric embedding is a powerful tool to analyse input data. Note that Eqs. 5-6 do not hold in general if the embedding is not isometric.

Recently, Kato et al. (2020) proposed an isometric autoencoder RaDOGAGA (Rate-distortion optimization guided autoencoder for generative analysis), inspired by deep image compression (Ballé et al., 2018). In the conventional image compression using orthonormal transform coding, Rate-distortion optimization (RDO) objective has been widely used (Sullivan & Wiegand, 1998). Let and be a rate and distortion after encoding, respectively. Then RDO finds the best encoding parameters that minimizes at given Lagrange multiplier . In the deep image compression (Ballé et al., 2018), the model is composed of a parametric prior and posterior with constant variance, then trained using the RDO objective. Kato et al. (2020) proved that such a model achieves an isometric embedding in Euclidean space, and they proposed an isometric autoencoder RaDOGAGA for quantitative analysis. By contrast, VAE uses a fixed prior with a variable posterior. Here, we have an intuition that VAE can be mapped to an isometric embedding such as RaDOGAGA by introducing a non-linear scaling of latent space. If our intuition is correct, the behavior of VAE will be quantitatively explained.

4 Understanding of VAE as a scaled isometric embedding

This section shows the quantitative property of VAE by introducing an implicit isometric embedding. First, we present the hypothesis of mapping VAE to an implicit isometric embedding. Second, we theoretically formulate the derivation of implicit isometric embedding as the minimum condition of the VAE objective. Lastly, we explain the quantitative properties of VAE to provide a practical data analysis.

4.1 Mapping -VAE to implicit isometric embedding

In this section, we explain our motivations for introducing an implicit isometric embedding to analyse -VAE. Rolínek et al. (2019) showed that each pair of column vectors in the Jacobian matrix is orthogonal such that for when is SSE. From this property, we can introduce the implicit isometric embedding by scaling the VAE latent space appropriately as follows: denotes . Let and be an implicit variable and its -th dimensional component which satisfies . Then forms the isometric embedding in Euclidean space:

| (7) |

If the L2 norm of is derived mathematically, we can formulate the mapping VAE to an implicit isometric embedding as in Eq. 7. Then, this mapping will strongly help to understand the quantitative behavior of VAE as explained in section 3. Thus, our motivation in this paper is to formulate the implicit isometric embedding theoretically and analyse VAE properties quantitatively.

Figure 1 shows how -VAE is mapped to an implicit isometric embedding. In VAE encoder, is calculated from an input . Then, the posterior is derived by adding a stochastic noise to . Finally, the reconstruction data is decoded from .

Our theoretical analysis in section 4.2 reveals that implicit isometric embedding can be introduced by mapping to with a scaling in each dimension. Then, the posterior is derived by adding a stochastic noise to . Note that the noise variances, i.e., the posterior variances, are a constant for all inputs and dimensions, which is analogous to RaDOGAGA. Then, the mutual information in -VAE can be estimated as:

| (8) | |||||

This implies that the posterior entropy should be smaller enough than to give the model a sufficient expressive ability. Thus, the posterior variance should be also sufficiently smaller than the variance of input data. Note that Eq. 8 is consistent with the Rate-distortion (RD) optimal condition in the RD theory as shown in section 6.

4.2 Theoretical derivation of implicit isometric embedding

In this section, we derive the implicit isometric embedding theoretically. First, we reformulate and in -VAE objective in Eq. 3 for mathematical analysis. Then we derive the implicit isometric embedding as a minimum condition of . Here, we set the prior to for easy analysis. The condition where the approximation in this section is valid is that is smaller enough than the variance of the input dataset, which is important to achieve a sufficient expressive ability. We also assume the data manifold is smooth and differentiable.

Firstly, we introduce a metric tensor to treat arbitrary kinds of metrics for the reconstruction loss in the same framework. denotes a metric between two points and . Let be . If is small, can be approximated by using the second order Taylor expansion, where is an dependent positive definite metric tensor. Appendix G.2 shows the derivations of for SSE, BCE, and structural similarity (SSIM) (Wang et al., 2001). Especially for SSE, is an identity matrix , i.e., a metric tensor in Euclidean space.

Next, we formulate the approximation of via the following three lemmas, to examine the Jacobian matrix easily.

Lemma 1. Approximation of reconstruction loss:

Let be .

denotes .

Then the reconstruction loss in can be approximated as:

| (9) |

Proof: Appendix A.1 describes the proof. The outline is as follows: Rolínek et al. (2019) show can be decomposed to . We call the first term a transform loss. Obviously, the average of transform loss over is still . We call the second term a coding loss. The average of coding loss can be further approximated as the second term of Eq. 9.

Lemma 2. Approximation of KL divergence:

Let be a prior probability density where .

Then the KL divergence in can be approximated as:

| (10) |

Proof: The detail is described in Appendix A.2. The outlines is as follows: First, will be observed in meaningful dimensions. For example, will almost hold if the dimensional component has information that exceeds only 1.2 nat. Furthermore, when , we have . Thus, by ignoring the in Eq. 2, can be approximated as:

| (11) |

As a result, the second equation of the proposition Eq. 4.2 is derived by summing the last equation of Eq. 4.2. Then, using , the last equation of Eq. 4.2 is derived. Appendix A.2 shows that the approximation of the second line in Eq. 4.2 can be also derived for arbitrary priors, which suggests that the theoretical derivations that follow in this section also hold for arbitrary priors.

Lemma 3. Estimation of transform loss:

Let be a 1-dimensional dataset.

When -VAE is trained for , the ratio between the transform loss and the coding loss is estimated as:

| (12) |

Proof: Appendix A.3 describes the proof. As explained there, this is analogous to the Wiener filter (Wiener, 1964), one of the most basic theories for signal restoration.

Lemma 4.2 is also validated experimentally in the multi-dimensional non-Gaussian toy dataset. Fig. 24 in Appendix D.2 shows that the experimental results match the theory well. Thus, we ignore the transform loss in the discussion that follows, since we assume is smaller enough than the variance of the input data. Using Lemma 4.2-4.2, we can derive the approximate expansion of as follows:

Theorem 1. Approximate expansion of VAE objective:

Assume is smaller enough than the variance of input dataset.

The objective can be approximated as:

| (13) |

Then, we can finally derive the implicit isometric embedding as a minimum condition of Eq. 4.2 via Lemma 4.2-4.2.

Lemma 4. Orthogonality of Jacobian matrix in VAE:

At the minimum condition of Eq. 4.2, each pair and of column vectors in the Jacobian matrix show the orthogonality in the Riemannian metric space, i.e., the inner product space with the metric tensor as:

| (14) |

Proof: Eq. 14 is derived by examining the derivative . The proof is described in Appendix A.4. A diagonal posterior covariance is the key for orthogonality.

Eq. 14 is consistent with Rolínek et al. (2019) who show the orthogonality for SSE metric. In addition, we quantify the Jacobian matrix for arbitrary metric spaces.

Lemma 5. L2 norm of :

the L2 norm of in the metric space of is derived as:

| (15) |

Proof: Apply to Eq. 14 and arrange it.

Theorem 2. Implicit isometric embedding:

An implicit isometric embedding is introduced by mapping -th component of VAE latent variable to with the following scaling factor:

| (16) |

denotes . Then satisfies the next equation:

| (17) |

This shows the isometric embedding from the inner product space of with metric to the Euclidean space of .

Proof: Apply to Eq. 14.

Remark 1: Isometricity in Eq. 17 is on the decoder side. Since the transform loss is close to 0, holds. As a result, the isometricity on the encoder side is also almost achieved. If is explicitly reduced by using a decomposed loss, the isometricity will be further promoted.

Theorem 3. Posterior variance in isometric embedding:

The posterior variance of implicit isometric embedding is a constant for all inputs and dimensional components.

4.3 Quantitative data analysis method using implicit isometric embedding in VAE

This section describes three quantitative data analysis methods by utilizing the property of isometric embedding.

4.3.1 Estimation of the data probability distribution:

Estimation of data distribution is one of the key targets in machine learning. We show VAE can estimate the distribution in both metric space and input space quantitatively.

Proposition 1. Probability estimation in metric space:

Let be a probability distribution in the inner product space of .

can be quantitatively estimated as:

| (19) | |||||

Proof: Appendix A.5 explains the detail. The outline is as follows: The third equation is derived by applying Eq. 16 to , showing that bridges between the distributions of input data and prior. The fourth equation is derived by applying Eq. 16 to Eq. 4.2. The last equation implies that the VAE objective converges to the log-likelihood of the input as expected. When the metric is SSE, Eq. 19 show the probability distribution in the input space since is an identity matrix.

Proposition 2. Probability estimation in the input space:

In the the case , the probability distribution in the input space can be estimated as:

| (20) |

In the case and holds where is an -dependent scalar factor, can be estimated as:

| (21) |

Proof: The absolute value of Jacobian determinant between the input and metric spaces gives the the PDF ratio. In the case , this is derived as . In the case and , the Jacobian determinant is proportional to . Appendix A.6 explains the detail.

4.3.2 Quantitative analysis of disentanglement

Assume the data manifold has a disentangled property with independent latent variable by nature. Then each will capture each disentangled latent variable like to PCA. This subsection explains how to derive the importance of each dimension in the given metrics for data analysis.

Proposition 3. Meaningful dimension:

The dimensional components with have meaningful information for representation,

where the entropy of is larger than .

In contrast, the dimension with has no information, where and will be observed.

Proof: Appendix A.7 shows the detail in view of RD theory. This appendix also explains that the entropy of becomes minimum after optimization.

Proposition 4. Importance of each dimension:

Assume that the prior is a Gaussian distribution .

Let be the variance of the -th implicit isometric component , indicating the quantitative importance of each dimension.

in the meaningful dimension () can be roughly estimated as:

| (22) |

Proof: Appendix A.8 shows the derivation from Eq. 16. The case other than Gaussian prior is also explained there.

4.3.3 Check the isometricity after training

This subsection explains how to determine if the model acquires isometric embedding by evaluating the norm of . Let be a vector where the -th dimension is and others are . Let be , where denotes a minute value for the numerical differential. Then the squared L2 norm of can be evaluated as the last equation:

| (23) | |||||

Observing a value close to 1 means a unit norm and indicates that an implicit isometric embedding is captured.

5 Experiment

This section describes three experimental results. First, the results of the toy dataset are examined to validate our theory. Next, the disentanglement analysis for the CelebA dataset is presented. Finally, an anomaly detection task is evaluated to show the usefulness of data distribution estimation.

5.1 Quantitative evaluation in the toy dataset

The toy dataset is generated as follows. First, three dimensional variables , , and are sampled in accordance with the three different shapes of distributions , , and , as shown in Fig. 2. The variances of , , and are , , and , respectively, such that the ratio of the variances is 1:4:16. Second, three -dimensional uncorrelated vectors , , and with L2 norm are provided. Finally, toy data with dimensions are generated by . The data distribution is also set to . If our hypothesis is correct, will be close to . Then, will also vary a lot with these varieties of PDFs. Because the properties in Section 4.3 are derived from , our theory can be easily validated by evaluating those properties.

|

trained with the square error loss.

trained with the downward-convex loss.

|

Then, the VAE model is trained using Eq. 3. We use two kinds of the reconstruction loss to analyze the effect of the loss metrics. The first is the square error loss equivalent to SSE. The second is the downward-convex loss which we design as Eq. 5.1, such that the shape becomes similar to the BCE loss as in Appendix G.2:

| (24) |

Here, is chosen such that the mean of for the toy dataset is 1.0 since the variance of is 1/6+2/3+8/3=7/2. The details of the networks and training conditions are written in Appendix C.1.

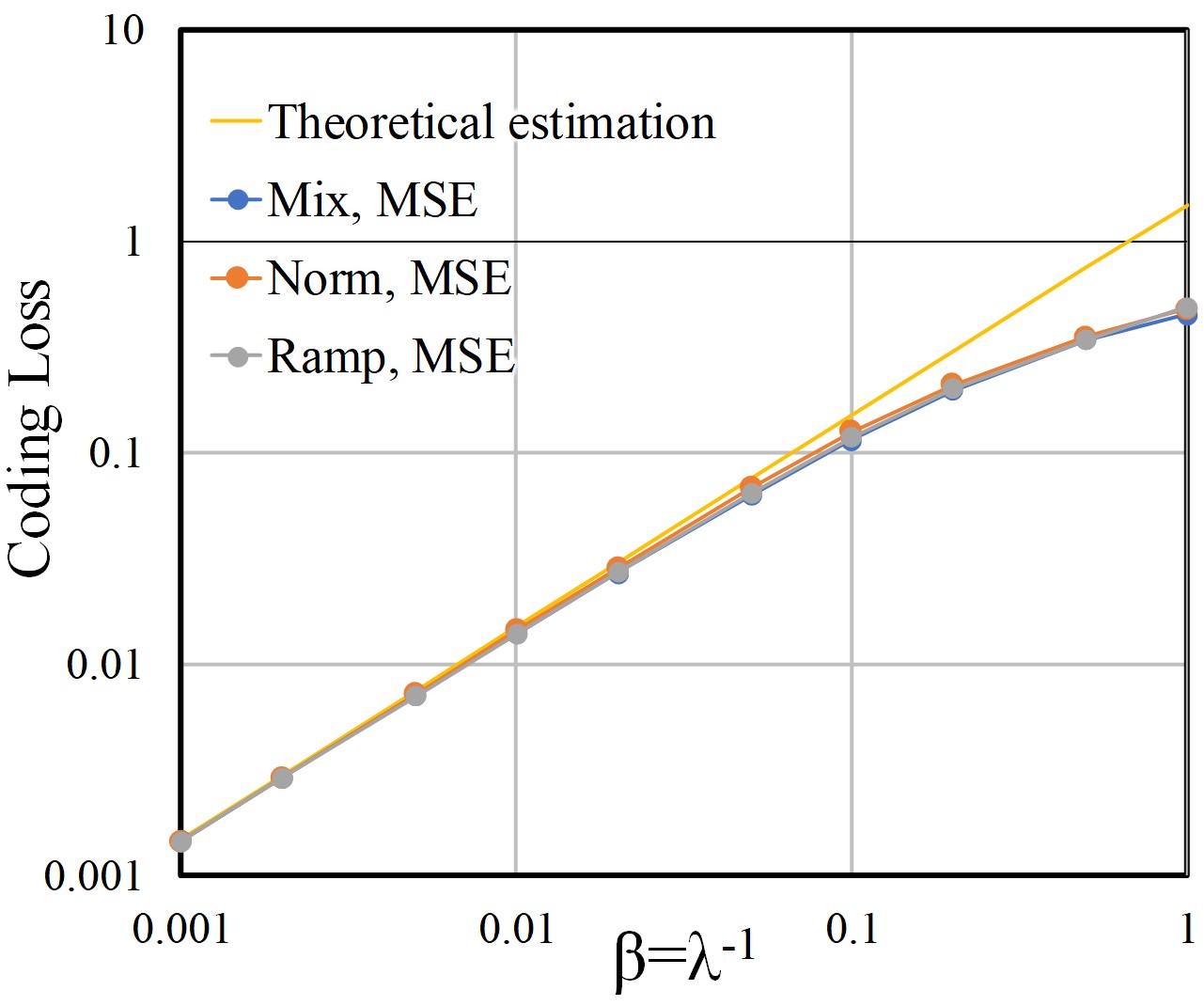

After training with two types of reconstruction losses, the loss ratio for the square error loss is 0.023, and that for the downward-convex loss is 0.024. As expected in Lemma 4.2, the transform losses are negligibly small.

First, an implicit isometric property is examined. Tables 2 and 2 show the measurements of (shown as ), , and described in Section 4.3. In these tables, , , and show acquired latent variables. ”Av.” and ”SD” are the average and standard deviation, respectively. In both tables, the values of are close to 1.0 in each dimension, showing isometricity as in Eq. 21. By contrast, the average of , which corresponds to , is different in each dimension. Thus, for the original VAE latent variable is not isometric.

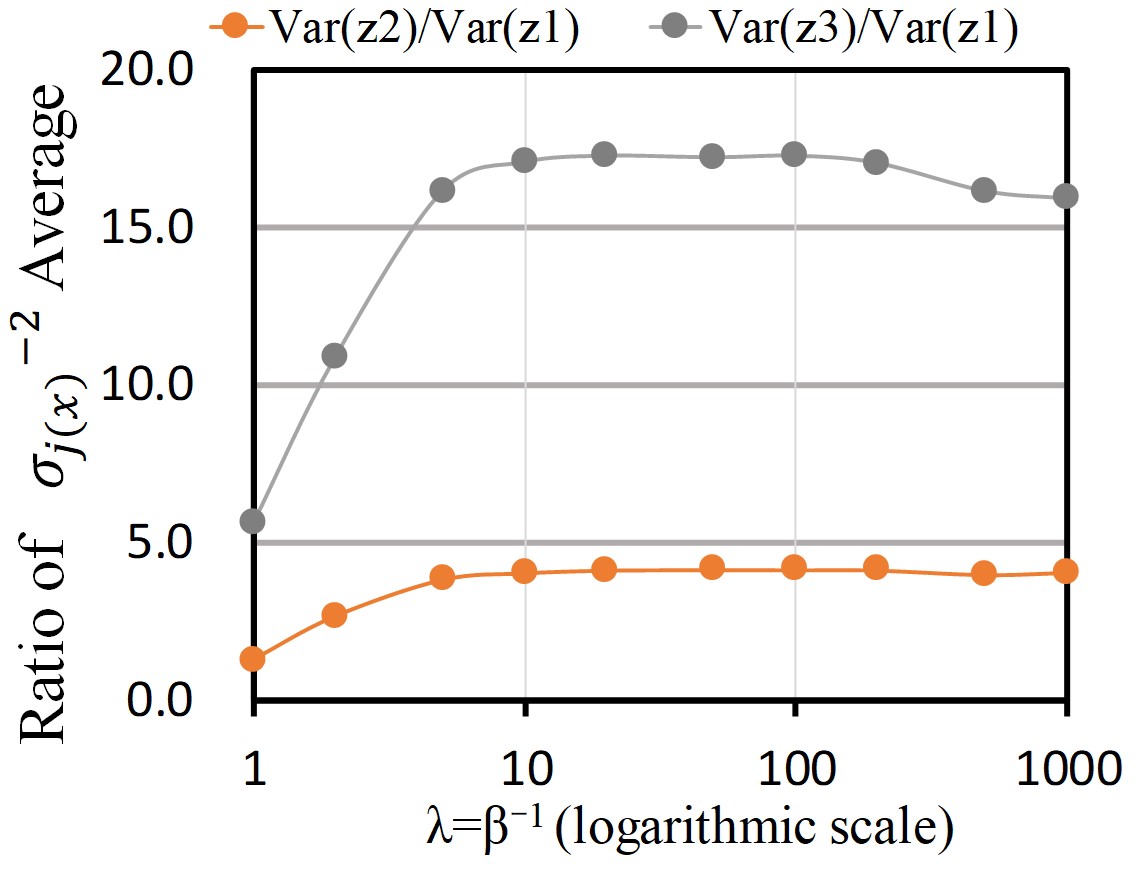

Next, the disentanglement analysis is examined. The average of in Eq.22 and its ratio are shown in Tables 2 and 2. Although the average of is a rough estimation of variance, the ratio is close to 1:4:16, i.e., the variance ratio of generation parameters , , and . When comparing both losses, the ratio of and for the downward-convex loss is somewhat smaller than that for the square error. This is explained as follows. In the downward-convex loss, tends to be from Eq. 17, i.e. . Therefore, the region in the metric space with a larger norm is shrunk, and the estimated variances corresponding to and become smaller.

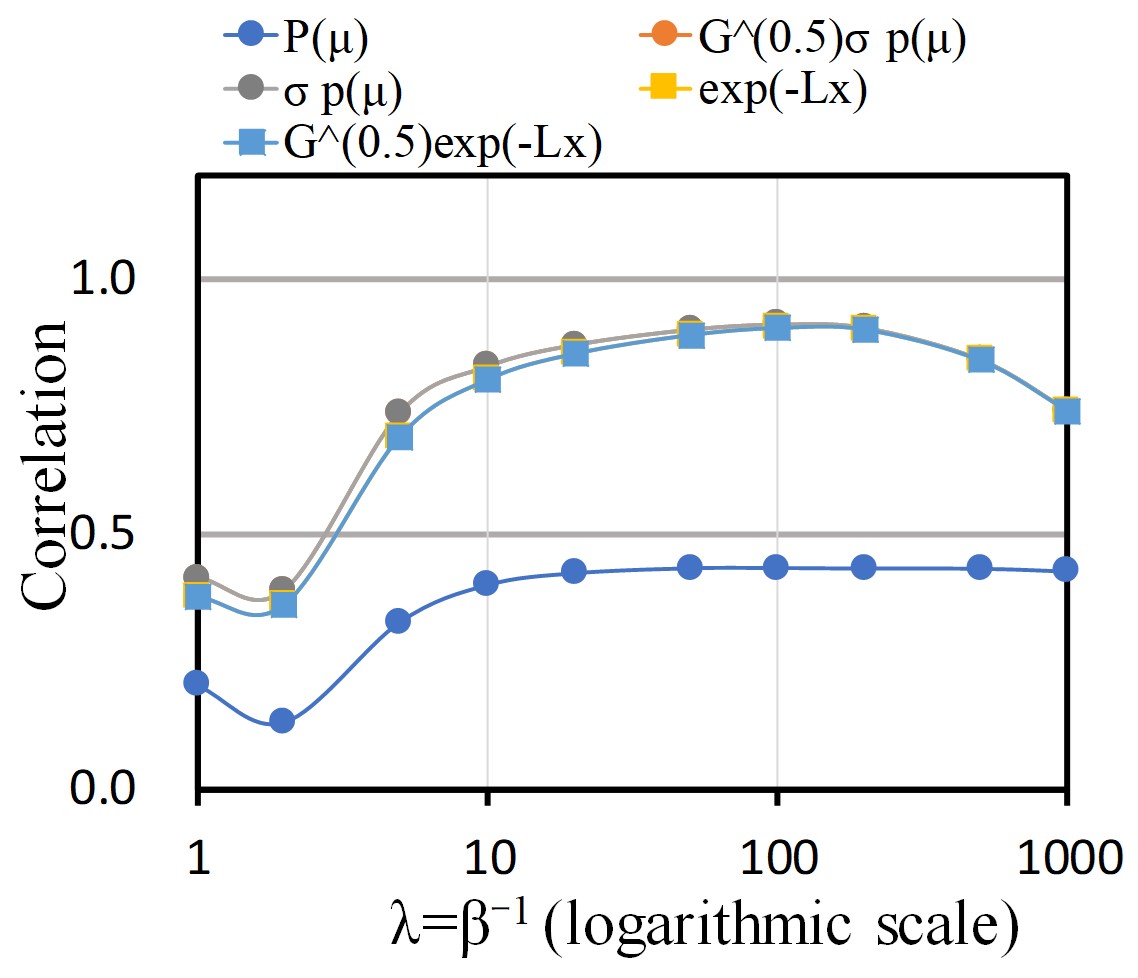

Finally, we examine the probability estimation. Figure 3 shows the scattering plots of the data distribution and estimated probabilities for the downward-convex loss. Figure 3 shows the plots of and the prior probabilities . This graph implies that it is difficult to estimate only from the prior. The correlation coefficient shown as ”R” (0.434) is also low. Figure 3 shows the plots of and in in Eq. 19. The correlation coefficient (0.771) becomes better, but is still not high. Lastry, Figures 3-3 are the plots of and in Eq. 21, showing high correlations around 0.91. This strongly supports our theoretical probability estimation which considers the metric space.

Appendix D also shows results using square error loss. The correlation coefficient for also gives a high score 0.904, since the input and metric spaces are equivalent.

Appendix D shows the exhaustive ablation study with different PDFs, losses, and , which further supports our theory.

5.2 Evaluations in CelebA dataset

This section presents the disentanglement analysis using VAE for the CelebA dataset 111(http://mmlab.ie.cuhk.edu.hk/projects/CelebA.html) (Liu et al., 2015). This dataset is composed of 202,599 celebrity facial images. In use, the images are center-cropped to form sized images. As a reconstruction loss, we use SSIM which is close to subjective quality evaluation. The details of networks and training conditions are written in Appendix C.2.

Figure 6 shows the averages of in Eq.22 as the estimated variances, as well as the average and the standard deviation of in Eq.23 as the estimated square norm of implicit transform. The latent variables are numbered in descending order by the estimated variance. In the dimensions greater than the 27th, the averages of are close to 1 and that of is close to 0, implying . Between the 1st and 26th dimensions, the mean and standard deviation of averages are 1.83 and 0.13, respectively. This also implies the variance is around . These values seem almost constant with a small standard deviation; however, the mean is somewhat larger than the expected value 1. This suggests that the implicit embedding which satisfies can be considered as almost isometric. Thus, averages still can determine the quantitative importance of each dimension.

We also train VAE using the decomposed loss explicitly, i.e., . Figure 6 shows the result. Here, the mean and standard deviation of averages are 0.92 and 0.04, respectively, which suggests almost a unit norm. This result implies that the explicit use of decomposed loss promotes isometricity and allows for better analysis, as explained in Remark 1.

Figure 6 shows decoder outputs where the selected latent variables are traversed from to while setting the rest to 0. The average of is also shown there. The components are grouped by averages, such that , , to the large, , to the medium, and to the small, respectively. In the large group, significant changes of background brightness, face direction, and hair color are observed. In the medium group, we can see minor changes such as facial expressions. However, in the small group, there are almost no changes. In addition, Appendix E.1 shows the traversed outputs of all dimensional components in descending order of averages, where the degree of image changes clearly depends on averages. Thus, it is strongly supported that the average of indicates the importance of each dimensional component like PCA.

5.3 Anomaly detection with realistic data

| Dataset | Methods | F1 |

|---|---|---|

| KDDCup | GMVAE111Scores are cited from Liao et al. (2018) (GMVAE) and Kato et al. (2020)(DAGMM, RaDOGAGA) | 0.9326 |

| DAGMM111Scores are cited from Liao et al. (2018) (GMVAE) and Kato et al. (2020)(DAGMM, RaDOGAGA) | 0.9500 (0.0052) | |

| RaDOGAGA(d)111Scores are cited from Liao et al. (2018) (GMVAE) and Kato et al. (2020)(DAGMM, RaDOGAGA) | 0.9624 (0.0038) | |

| RaDOGAGA(log(d))111Scores are cited from Liao et al. (2018) (GMVAE) and Kato et al. (2020)(DAGMM, RaDOGAGA) | 0.9638 (0.0042) | |

| vanilla VAE | 0.9642 (0.0007) | |

| Thyroid | GMVAE111Scores are cited from Liao et al. (2018) (GMVAE) and Kato et al. (2020)(DAGMM, RaDOGAGA) | 0.6353 |

| DAGMM111Scores are cited from Liao et al. (2018) (GMVAE) and Kato et al. (2020)(DAGMM, RaDOGAGA) | 0.4755 (0.0491) | |

| RaDOGAGA(d)111Scores are cited from Liao et al. (2018) (GMVAE) and Kato et al. (2020)(DAGMM, RaDOGAGA) | 0.6447 (0.0486) | |

| RaDOGAGA(log(d))111Scores are cited from Liao et al. (2018) (GMVAE) and Kato et al. (2020)(DAGMM, RaDOGAGA) | 0.6702 (0.0585) | |

| vanilla VAE | 0.6596 (0.0436) | |

| Arrythmia | GMVAE111Scores are cited from Liao et al. (2018) (GMVAE) and Kato et al. (2020)(DAGMM, RaDOGAGA) | 0.4308 |

| DAGMM111Scores are cited from Liao et al. (2018) (GMVAE) and Kato et al. (2020)(DAGMM, RaDOGAGA) | 0.5060 (0.0395) | |

| RaDOGAGA(d)111Scores are cited from Liao et al. (2018) (GMVAE) and Kato et al. (2020)(DAGMM, RaDOGAGA) | 0.5433 (0.0468) | |

| RaDOGAGA(log(d))111Scores are cited from Liao et al. (2018) (GMVAE) and Kato et al. (2020)(DAGMM, RaDOGAGA) | 0.5373 (0.0411) | |

| vanilla VAE | 0.4985 (0.0412) | |

| KDDCup-rev | DAGMM111Scores are cited from Liao et al. (2018) (GMVAE) and Kato et al. (2020)(DAGMM, RaDOGAGA) | 0.9779 (0.0018) |

| RaDOGAGA(d)111Scores are cited from Liao et al. (2018) (GMVAE) and Kato et al. (2020)(DAGMM, RaDOGAGA) | 0.9797 (0.0015) | |

| RaDOGAGA(log(d))111Scores are cited from Liao et al. (2018) (GMVAE) and Kato et al. (2020)(DAGMM, RaDOGAGA) | 0.9865 (0.0009) | |

| vanilla VAE | 0.9880 (0.0008) |

Using a vanilla VAE model with a single Gaussian prior, we finally examine the performance in anomaly detection in which PDF estimation is the key issue. We use four public datasets333Datasets can be downloaded at https://kdd.ics.uci.edu/ and http://odds.cs.stonybrook.edu.: KDDCUP99, Thyroid, Arrhythmia, and KDDCUP-Rev. The details of the datasets and network configurations are given in Appendix H. 11footnotetext: Scores are cited from Liao et al. (2018) (GMVAE) and Kato et al. (2020)(DAGMM, RaDOGAGA) For a fair comparison with previous works, we follow the setting in Zong et al. (2018). Randomly extracted 50% of the data were assigned to the training and the rest to the testing. Then the model is trained using normal data only. Here, we use the explicit decomposed loss to promote isometricity. The coding loss is set to SSE. For the test, the anomaly score for each sample is set to in Eq. 3 after training since gives a log-likelihood of the input data from Proposition 4.3.1. Then, samples with anomaly scores above the threshold are identified as anomalies. The threshold is given by the ratio of the anomaly data in each data set. For instance, in KDDCup99, data with in the top 20 % is detected as an anomaly. We run experiments 20 times for each dataset split by 20 different random seeds.

5.3.1 BASELINE METHODS

We compare previous methods such as GMVAE (Liao et al., 2018), DAGMM (Zong et al., 2018), and RaDOGAGA (Kato et al., 2020) that conducted the same experiments. All of them apply GMM as a prior because they believe GMM is more appropriate to capture the complex data distribution than VAE with a single Gaussian prior.

5.3.2 Results

Table 3 reports the average F1 scores and standard deviations (in brackets). Recall and precision are shown in Appendix H. Liao et al. (2018) insisted that the vanilla VAE is not appropriate for PDF estimation. Contrary to their claim, by considering the quantitative property as proven in this paper, even a vanilla VAE achieves state-of-the-art performance in KDDCup99 and KDDCup-rev. In other data sets, the score of VAE is comparable with RaDOGAGA, which is the previous best method. Here, RaDOGAGA attempts to adapt the parametric distribution such as GMM to the input distribution in the isometric space. However, fitting sufficiency is strongly dependent on the capability of the parametric distribution. By contrast, VAE can flexibly adapt a simple prior distribution to the input distribution via trainable posterior variance . As a result, VAE can provide a simpler tool for estimating the data distribution.

6 Relation with previous studies

First of all, we show VAE can be interpreted as a Rate-distortion (RD) optimal encoder based on RD theory (Berger, 1971), which has been successfully applied to image compression in the industry. The optimal transform coding (Goyal, 2001) for the Gaussian data with SSE metric is formulated as follows: First, the data are transformed deterministically using the orthonormal transform (orthogonal and unit norm) with a PCA basis. Note that the orthonormal transform is a part of the isometric embedding where the encoder is restricted as linear. Then, the transformed data is entropy-coded. Here, the key point for optimizing RD is to introduce equivalent stochastic distortion in all dimensions (or to use a uniform quantizer for image compression). Then the rate at the optimum condition is derived as follows: denotes transformed data from inputs. Let be the -th dimensional component of . denotes a variance of in a dataset. Note that is equivalent to the eigenvalue of PCA in each dimension. Let be a distortion equally allowed in each dimensional channel. Assume the input dimension is and is smaller than for all . Then, is derived as:

| (25) | |||||

Here, if is set to , Eq. 8 is equivalent to Eq. 25. This suggests that VAE can be considered as a rate-distortion optimal encoder where RD theory is extended from linear orthonormal transform to general isometric embedding in the given metric. More details are described in Appendix B.6.

Next, our theory can intuitively explain how the captured features in -VAE behave when varying . Higgins et al. (2017) suggests that -VAE with large can capture a global features while degrading the reconstruction quality. Our intuitive explanation is as follows: Assume the case of 2D manifold in 3D space. According to Gauss’s Theorema Egregium, the Gaussian curvature is an intrinsic invariant of a 2D surface and its value is unchanged after any isometric embeddings (Andrews, 2002). Figure 7 shows the conceptual explanation of captured features in the implicit isometric space for 2D manifold with non-zero Gaussian curvature. Our theory shows that is considered as the allowable distortion in each dimensional component of implicit isometric embedding. If is large as shown in Fig. 7, -VAE can capture global features in the implicit isometric space allowing large distortion with lower rate. If is small as shown in Fig. 7, by contrast, -VAE will capture only fragmented features allowing small distortion with higher rate. We believe similar behaviors occur in general higher-dimensional manifolds.

Finally, we correct the analysis in Alemi et al. (2018). They describe ”the ELBO objective alone cannot distinguish between models that make no use of the latent variable versus models that make large use of the latent variable and learn useful representations for reconstruction,” because the reconstruction loss and KL divergence have unstable values after training. From this reason, they introduce a new objective to fix this instability using a target rate . Correctly, the reconstruction loss and KL divergence are stably derived as a function of as shown in Appendix B.1 and B.4.

7 Conclusion

This paper provides a quantitative understanding of VAE by non-linear mapping to an isometric embedding. According to the Rate-distortion theory, the optimal transform coding is achieved by using orthonormal transform with a PCA basis, where the transform space is isometric to the input. From this analogy, we show theoretically and experimentally that VAE can be mapped to an implicit isometric embedding with a scale factor derived from the posterior parameter. Based on this property, we also clarify that VAE can provide a practical quantitative analysis of input data such as the probability estimation in the input space and the PCA-like quantitative multivariate analysis. We believe the quantitative properties thoroughly uncovered in this paper will be a milestone to further advance the information theory-based generative models such as VAE in the right direction.

Acknowledgement

We express our gratitude to Tomotake Sasaki and Takashi Katoh for improving the clarity of the manuscript. Taiji Suzuki was partially supported by JSPS KAKENHI (18H03201, and 20H00576), and JST CREST.

References

- Alemi et al. (2018) Alemi, A., Poole, B., Fischer, I., Dillon, J., Saurous, R. A., and Murphy, K. Fixing a broken ELBO. In Proceedings of the 35th International Conference on Machine Learning(ICML), pp. 159–168. PMLR, July 2018.

- Andrews (2002) Andrews, B. Notes on the isometric embedding problem and the nash-moser implicit function theorem. Proceedings of the Centre for Mathematics and its Applications, 20:157–208, January 2002.

- Ballé et al. (2016) Ballé, J., Valero, L., and Eero P., S. Density modeling of images using a generalized normalization transformation. In Proceedings of the 4t International Conference on Learning Representations (ICLR), May 2016.

- Ballé et al. (2018) Ballé, J., Minnen, D., Singh, S., Hwang, S. J., and Johnston, N. Variational image compression with a scale hyperprior. In Proceedings of the 6th International Conference on Learning Representations (ICLR), April 2018.

- Berger (1971) Berger, T. (ed.). Rate Distortion Theory: A Mathematical Basis for Data Compression. Prentice Hall, 1971. ISBN 0137531036.

- Bishop (2006) Bishop, C. M. Pattern Recognition and Machine Learning. Springer, 2006. ISBN 978-0387-31073-2.

- Dai & Wipf (2019) Dai, B. and Wipf, D. Diagnosing and enhancing vae models. In International Conference on Learning Representations (ICLR), May 2019.

- Dai et al. (2018) Dai, B., Wang, Y., Aston, J., Hua, G., and Wipf, D. Hidden talents of the variational autoencoder. The Journal of Machine Learning Research, 19:1573–1614, January 2018.

- Dua & Graff (2019) Dua, D. and Graff, C. UCI machine learning repository. http://archive.ics.uci.edu/ml, 2019.

- Goyal (2001) Goyal, V. K. Theoretical foundations of transform coding. IEEE Signal Processing Magazine, 18:9–21, September 2001.

- Han & Hong (2006) Han, Q. and Hong, J.-X. Isometric Embedding of Riemannian Manifolds in Euclidean Spaces. American Mathematical Society, 2006. ISBN 0821840711.

- Higgins et al. (2017) Higgins, I., Matthey, L., Pal, A., Burgess, C., Glorot, X., Botvinick, M., Mohamed, S., and Lerchner, A. beta-VAE: Learning basic visual concepts with a constrained variational framework. In Proceedings of the 5th International Conference on Learning Representations (ICLR), April 2017.

- Huang et al. (2020) Huang, S., Makhzani, A., Cao, Y., and Grosse, R. Evaluating lossy compression rates of deep generative models. In Proceedings of the 37th International Conference on Machine Learning (ICML), July 2020.

- Kato et al. (2020) Kato, K., Zhou, Z., Sasaki, T., and Nakagawa, A. Rate-distortion optimization guided autoencoder for generative analysis. In Proceedings of the 37th International Conference on Machine Learning (ICML), July 2020.

- Kingma & Welling (2014) Kingma, D. P. and Welling, M. Auto-encoding variational bayes. In Proceedings of the 2nd International Conference on Learning Representations (ICLR), Banff, Canada, April 2014.

- Kumar & Poole (2020) Kumar, A. and Poole, B. On implicit regularization in -VAEs. In Proceedings of the 37th International Conference on Machine Learning (ICML), July 2020.

- Liao et al. (2018) Liao, W., Guo, Y., Chen, X., and Li, P. A unified unsupervised gaussian mixture variational autoencoder for high dimensional outlier detection. In 2018 IEEE International Conference on Big Data (Big Data), pp. 1208–1217. IEEE, 2018.

- Liu et al. (2015) Liu, Z., Luo, P., Wang, X., and Tang, X. Deep learning face attributes in the wild. In Proceedings of International Conference on Computer Vision (ICCV), December 2015.

- Locatello et al. (2019) Locatello, F., Bauer, S., Lucic, M., Rätsch, G., Gelly, S., Schölkopf, B., and Bachem, O. Challenging common assumptions in the unsupervised learning of disentangled representations. In Proceedings of the 36th International Conference on Machine Learning (ICML), volume 97, pp. 4114–4124. PMLR, June 2019.

- Pearlman & Said (2011) Pearlman, W. A. and Said, A. Digital Signal Compression: Principles and Practice. Cambridge University Press, 2011. ISBN 0521899826.

- Rao & Yip (2000) Rao, K. R. and Yip, P. (eds.). The Transform and Data Compression Handbook. CRC Press, Inc., Boca Raton, FL, USA, 2000. ISBN 0849336929.

- Rolínek et al. (2019) Rolínek, M., Zietlow, D., and Martius, G. Variational autoencoders pursue pca directions (by accident). In Proceedings of Computer Vision and Pattern Recognition (CVPR), June 2019.

- Sullivan & Wiegand (1998) Sullivan, G. J. and Wiegand, T. Rate-distortion optimization for video compression. IEEE Signal Processing Magazine, 15:74–90, November 1998.

- Tishby et al. (1999) Tishby, N., Pereira, F. C., and Bialek, W. The information bottleneck method. In The 37th annual Allerton Conference on Communication, Control, and Computing, September 1999.

- Wang et al. (2001) Wang, Z., Bovik, A. C., Sheikh, H. R., and Simoncelli, E. P. Image quality assessment: from error visibility to structural similarity. IEEE Trans. on Image Processing, 13:600–612, April 2001.

- Wiener (1964) Wiener, N. Extrapolation, Interpolation, and Smoothing of Stationary Time Series. The MIT Press, 1964. ISBN 978-0-262-73005-1.

- Zong et al. (2018) Zong, B., Song, Q., Min, M. R., Cheng, W., Lumezanu, C., Cho, D., and Chen, H. Deep autoencoding gaussian mixture model for unsupervised anomaly detection. In In International Conference on Learning Representations, 2018.

Appendix A Derivations and proofs in Section 4.3

A.1 Proof of Lemma 4.2: Approximated expansion of the reconstruction loss

The approximated expansion of the reconstruction loss is mainly the same as Rolínek et al. (2019) except we consider a metric tensor which is a positive definite Hermitian matrix.

and denote and , respectively. Let be an added noise in the reparameterization trick where . Then, is approximated as:

| (26) |

Next, the reconstruction loss can be approximated as follows.

| (27) | |||||

Then, we evaluate the average of over , i.e., for all . Note that where is the Kronecker delta. First, the average of in the last line of Eq. 27 is still since this term does not depend on . Second, the average of in the last line of Eq. 27 is approximated as:

| (28) | |||||

Third, the average of the third term in the last line of Eq. 27, i.e., , is since the average of over is .

As a result, the average of over can be approximated as:

| (29) |

A.2 Proof of Lemma 4.2: More precise derivation of KL divergence approximation.

This appendix explains more precise derivation of KL divergence approximation. First, we show the approximation for the Gaussian prior. We also show at the end of this appendix that our approximation also holds for arbitrary prior.

In the case of Gaussian prior, we show that KL divergence can be interpreted as an amount of information in the transform coding (Goyal, 2001) allowing the distortion . In the transform coding, input data is transformed by an orthonormal transform. Then, the transformed data is quantized, and an entropy code is assigned to the quantized symbol, such that the length of the entropy code is equivalent to the logarithm of the estimated symbol probability. Here, we assume will be observed in meaningful dimensions as shown later.

It is generally intractable to derive the rate and distortion of individual symbols in the ideal information coding. Thus, we first discuss the case of uniform quantization. Let and be the probability and amount of information in the uniform quantization coding of . Here, and are regarded as a quantized value and a coding noise after the uniform quantization, respectively. Since we assume , will also hold. Let be a quantization step size. The coding noise after quantization is for the quantization step size , as explained in Appendix G.1. Thus, is derived as from . We also assume . As shown in Fig.8, is denoted by where is . Using Simpson’s numerical integration method and expansion, is approximated as:

| (30) | |||||

Using expansion, is derived as:

| (31) |

When and in Eq. 2 are compared, both equations are equivalent except a small constant difference for each dimension. As a result, KL divergence for -th dimension is equivalent to the rate for the uniform quantization coding, allowing a small constant difference.

To make theoretical analysis easier, we use the simpler approximation as instead of Eq.30, as shown in Fig.8. Then, is derived as:

| (32) |

Here, the first term of the right equation is equivalent to Eq. 4.2. This equation also means that the approximation of KL divergence in Eq. 4.2 is equivalent to the rate in the uniform quantization coding with approximation, allowing the same small constant difference as in Eq. 31. It is noted that the approximation in Figure 8 can be applied to any kinds of prior PDFs because there is no explicit assumption for the prior PDF. This implies that the theoretical discussion after Eq. 4.2 in the main text will hold in arbitrary prior PDFs.

The meaning of the small constant difference in Eqs. 31 and 32 can be explained as follows: Pearlman & Said (2011) show that the difference of the rate between the ideal information coding and uniform quantization is . This is caused by the entropy difference of the noise distributions. In the ideal case, the noise distribution is known as a Gaussian. In the case the noise variance is , the entropy of the Gaussian noise is . For the uniform quantization with a uniform noise distribution, the entropy is . As a result, the difference is just . Because the rate estimation in this appendix uses a uniform quantization, the small offset can be regarded as a difference between the ideal information coding and the uniform quantization. As a result, KL divergence in Eq. 2 and Eq. 4.2 can be regarded as a rate in the ideal informaton coding for the symbol with the mean and variance .

Here, we validate the assumption that will be observed in meaningful dimensions. From the discussion above, the information in each dimension can be considered as KL divergence:

| (33) |

For simple analysis, we assume that is constant in the -th dimension. We further assume . Then which shows the information of the -th dimensional component is derived as:

| (34) | |||||

From this equation, we can estimate an amount of information in each dimension from the posterior variance. From this equation, it is derived that if the amount of information is more than about nat or bit, holds. In addition, as the information is increasing, becomes exponentially decreasing. As a result, the assumption that will be observed in meaningful dimensions is reasonable.

Finally, we show that the approximation of the KL divergence in the second line of Eq. 4.2 also holds for arbitrary priors. Let , , and be an arbitrary prior, a posterior , and KL divergence, respectively. First, the shape of becomes close to a delta function when each is small. Thus will act like a delta function . Next the differential entropy of , i.e., is derived as . Using these equations, KL divergence for an arbitrary prior can be approximated by the second line of Eq. 4.2 as follows:

| (35) | |||||

In the derivation of the third line in Eq. 4.2, we assume that is close to where is the prior distribution of . The reason of this assumption is as follows: ELBO can be also derived as (Bishop, 2006). When ELBO is maximized at each , will hold to minimize KL divergence where is a constant. Finally, we have by the marginalization of . Appendix A.3 also validates this assumption in the simple 1-dimensional VAE case where holds. Thus, we can derive the approximation in Eq. 4.2.

A.3 Proof of Lemma 4.2: Estimation of the coding loss and transform loss in -dimensional linear VAE

This appendix estimates the coding loss and transform loss in -dimensional linear -VAE for the Gaussian data, and also shows that the result is consistent with the Wiener filter (Wiener, 1964). Let be a one dimensional input data with the normal distribution:

| (36) |

First, a simple VAE model in this analysis is explained. denotes a one dimensional latent variable. Let the prior distribution be . Next, two linear parametric encoder and decoder are provided with constant parameters , , and to optimize:

| (37) |

Here, the encoding parameter consists of , and the decoding parameter consists of . Then the square error is used as a reconstruction loss.

Next, the objective is derived. and denote the KL divergence and reconstruction loss at , respectively. We further assume that uses a square error. Then we define the loss objective at as . Using Eq. 4.2, can be evaluated as:

| (38) | |||||

Then, is evaluated as:

| (39) | |||||

By averaging over , the objective to minimize is derived as:

| (40) | |||||

Here, the first term and the second term in the last line are corresponding to the transform loss and coding loss , respectively.

By solving , , and , , , and are derived as follows:

| (41) |

From Eq. A.3, and are derived as:

| (42) |

As shown in section 4.1, the added noise, , should be reasonably smaller than the data variance . If , and in Eq. A.3 can be approximated as:

| (43) |

As shown in this equation, is small in the VAE where the added noise is reasonably small, and can be ignored.

Note that the distribution of , i.e., , is derived as by scaling with a factor of . Thus is equivalent to the prior of , i.e., in this simple VAE case.

Next, the relation to the Wiener filter (Wiener, 1964) is discussed. The Wiener filter is one of the most basic, but most important theories for signal restoration. We consider an simple -dimensional Gaussian process. Let be input data. Then, is scaled by , and a Gaussian noise is added. Thus, is observed. From the Wiener filter theory, the estimated value with minimum distortion, can be formulated as:

| (44) |

In this case, the estimation error is derived as:

| (45) |

In the second equation, the first term is corresponding to the transform loss, and the second term is corresponding to the coding loss. Here the ratio of the transform loss and coding loss is derived as . By appying and to and assuming , this ratio can be described as:

| (46) |

This result is consistent with Eq. 43, implying that optimized VAE and the Wiener filter show similar behaviours.

A.4 Proof of Lemma 4.2 : Derivation of the orthogonality

Lemma 4.2 is proved by examining the minimum condition of at . The proof outline is similar to Kato et al. (2020) while should be also considered as a variable in our derivation.

We first show the following mathematical formula which is used our derivation. Let be a regular matrix and be its -th column vector. denotes the -th column vector of a cofactor matrix for . Then the following equation holds mathematically.

| (47) |

Let be the -th column vector of a cofactor matrix for Jacobian matrix . Using the formula in Eq. 47, the partial derivative of by is described by

| (48) |

Note that holds by the cofactor’s property. Here, denotes the dot product, and denotes the Kronecker delta. By setting Eq. 48 to zero and multiplying from the left, we have the next orthogonal form of :

| (49) |

Next, the partial derivative of by is derived as:

| (50) |

By setting Eq. 50 to zero, we have the next equation:

| (51) |

Note that Eq. 51 is a part of Eq. 49 where . As a result, the condition to minimize is derived as Eq. 49.

A.5 Proof of Proposition 4.3.1: Estimation of input data distribution in the metric space

This equation explains the derivation of Eq. 19 in Proposition 4.3.1. Using Eq. 16, the third equation in Eq. 19 is derived as:

| (52) |

This shows that the posterior variance bridges between the distributions of data and prior. Thus the prior close to the data distribution will facilitate training, where is close to constant.

The fourth equation in Eq. 19 in Proposition 4.3.1 is derived by applying Eq. 16 to Eq. 4.2 and arranging the result. Let be a minimum of at . and denote a coding loss and KL divergence in , respectively.

First, is derived. The next equation holds from Eq. 14.

| (53) |

By applying Eq. 53 to the first term of Eq. 4.2, is derived as:

| (54) |

This implies that the reconstruction loss is constant for all inputs at the minimum condition.

Second, is derived. From Eq. 52, the next equation holds.

| (55) |

By applying Eq. 55 to the second equation of Eq. 4.2, is derived as:

| (56) |

As a result, the minimum value of the objective is derived as:

| (57) |

As a result, can be evaluated as:

| (58) |

This result implies that the VAE objective converges to the log-likelihood of the input at the optimized condition as expected.

A.6 Proof of Proposition 4.3.1: Estimation of data distribution in the input space

This appendix shows the derivation of variables in Eqs. 19 and 21. When we estimate a probability in real dataset, we use an approximation of . First, the derivation of approximation for the input is presented. Then, the PDF ratio between the input space and inner product space is explained for the cases and .

Derivation of approximation for the input of real data:

As shown in in Eq. 1, is denoted as .

We approximate as , i.e., the average of two samples, instead of the average over .

can be calculated from and using Eq. 2.

The PDF ratio in the case :

The PDF ratio for is a Jacobian determinant between two spaces.

First, holds from Eq. 17.

also holds by calculating the determinant.

Finally, is derived as using .

The PDF ratio in the case and :

Although the strict derivation needs the treatment of the Riemannian manifold, we provide a simple explanation in this appendix. Here, it is assumed that holds for all . If for some , is replaced by the number of latent variables with .

For the implicit isometric space , there exists a matrix such that both and holds. denotes a point in , i.e., . Because is assumed as in Section 4.3, holds. Then, the mapping function between and is defined, such that:

| (59) |

Let and are infinitesimal displacements around and , such that . Then the next equation holds from Eq. 59:

| (60) |

Let , , , and be two arbitrary infinitesimal displacements around and , such that and . Then the following equation holds, where denotes the dot product.

| (61) |

This equation shows the isometric mapping from the inner product space for with the metric tensor to the Euclidean space for .

Note that all of the column vectors in the Jacobian matrix also have a unit norm and are orthogonal to each other in the metric space for with the metric tensor . Therefore, the Jacobian matrix should have a property that all of the column vectors have a unit norm and are orthogonal to each other in the Euclidean space.

Then -dimensional space which is composed of the meaningful dimensions from the implicit isometric space is named as the implicit orthonormal space . Figure 9 shows the projection of the volume element from the implicit orthonormal space to the isometric space and input space. Let be an infinitesimal -dimensional volume element in . This volume element is a -dimensional rectangular solid having each edge length . Let be the -dimensional volume of a volume element . Then, holds. Next, is projected to dimensional infinitesimal element in by . Because of the orthonormality, is equivalent to the rotation / reflection of , and is the same as , i.e., . Then, is projected to -dimensional element in by . Because each dimension is scaled equally by the scale factor , holds. Here, the ratio of the volume element between and is . Note that the PDF ratio is derived by the reciprocal of . As a result, the PDF ratio is derived as .

A.7 Proof of proposition 4.3.2: Determination of the meaningful dimension for representation

This appendix explain the derivation of Proposition 4.3.2. Here, we estimate the KL divergence, i.e., a rate for the dimensions whose variance is less than . As shown later, the discussion in this appendix is closely related with Rate-distortion theory (Berger, 1971; Pearlman & Said, 2011; Goyal, 2001).

Let , , and be averages of , , and in Appendix A.5 over , respectively. Here, holds by definition. Since is a constant as in Eq. 54, is derived as:

| (62) |

As is constant, the minimum condition of is equivalent to that of . Let be a KL divergence of the -th dimensional component at the minimum condition. Here, holds by definition. Eq. 4.2 holds for for small . Thus, we can approximate for small from Eqs. 16 and 4.2 as:

| (63) | |||||

Here, denotes a entropy of the Gaussian with variance . Next, is expressed as:

| (64) | |||||

Note that the KL-divergence is always equal or greater than 0 by definition. By considering this, is further approximated as:

| (65) |

Note that the approximation of Eq. 65 is reasonable from the Rate-distortion theory and optimal transform coding theory (Berger, 1971; Pearlman & Said, 2011; Goyal, 2001). The outline of Rate-distortion theory and optimal transform coding is explained in Appendix B.6. The term is the entropy of . Thus, the optimal implicit isometric space is derived such that the entropy of data representation is minimum. When the data manifold has a disentangled property in the given metric by nature, each will capture a disentangled feature with minimum entropy such that the mutual information between implicit isometric components becomes minimized. This is analogous to PCA for Gaussian data, which gives the disentangled representation with minimum entropy in SSE. Considering the similarity to the PCA eigenvalues, the variance of will indicate the importance of each dimension.

Thus, if the entropy of is larger than , then it is reasonable that holds. By contrast, if the entropy of is less than , then will hold. In such dimensions, , , and will hold. In addition, will be close to 0 because this needs not to be balanced with .

These properties of VAE can be clearly explained by rate-distortion theory (Berger, 1971), which has been successfully applied to transform coding such as image / audio compression. Appendix B.6 explains that VAE can be interpreted as an optimal transform coding with non-linear scaling of latent space.

Thus, latent variables with variances from the largest to the n-th with are sufficient for the representation and the dimensions with can be ignored, allowing the reduction of the dimension for .

A.8 Proof of proposition 4.3.2: Derivation of the estimated variance

This appendix explains the derivation of quantitative importance for each dimension in Eq. 22 of Proposition 4.3.2.

First, we set to at to derive value from in Eq. 16. We also assume that the prior distribution is . The variance is derived by the subtraction of , i.e., the square of the mean, from , i.e., the square mean. Thus, the approximations of both and are needed.

First, the approximation of the mean is explained. Because the cumulative distribution functions (CDFs) of are the same as CDF of , the following equations hold:

| (66) |

This equation means that the median of the distribution is . Because the mean and median are close in most cases, the mean can be approximated as . As a result, the variance of can be approximated by the square mean .

Second, the approximation of the square mean is explained. Since we assume the manifold has a disentangled property by nature, the standard deviation of the posterior is assumed as a function of , regardless of . This function is denoted as . For , is approximated as follows, using Eq. 16 and replacing the average of over by :

| (67) |

The same approximation is applied to . Then the square mean of is approximated as follows, assuming that the correlation between and is low:

| (68) |

Finally, the square mean of is approximated as the following equation, using and replacing by , i.e., the posterior variance derived from the input data:

| (69) |

Although some rough approximations are used in the expansion, the estimated variance in the last equation seems still reasonable, because shows a scale factor between and while the variance of is always 1 for the prior . Considering the variance of the prior in the expansion, this estimation method can be applied to any prior distribution.

Appendix B Detailed relation to prior works

This section first describes the clear formulation of ELBO in VAE by utilizing isometric embedding. Then the detailed relationship, including correction, with previous works are explained.

B.1 Derivation of ELBO with clear and quantitative form

This section clarifies that the ELBO value after optimization becomes close to the log-likelihood of input data in the metric space (not input space), by the theoretical derivation of the reconstruction loss and KL divergence via isometric embedding.

We derive the ELBO (without ) at in Eq. 1, i.e., when the objective of -VAE (with ) in Eq. 57, i.e., is optimised.

First, the reconstruction loss can be rewritten as:

| (70) |

Let be a implicit isometric variable corresponding to . Because the posterior variance in each isometric latent variable is a constant , will hold. If is small, will hold. Then, the next equation will hold also using isometricity;

| (71) |

Thus the reconstruction loss is estimated as:

| (72) | |||||

Next, KL divergence is derived from Eq. 56 as:

| (73) |

By summing both terms, ELBO at can be estimated as

| (74) | |||||

As a result, ELBO (Eq. 1) in the original form (Kingma & Welling, 2014) is close to the log-likelihood of , regardless or not, when the objective of -VAE (Higgins et al., 2017) is optimised. Note that in Eq.73 is defined in the metric space. This also implies that the representation depends on the metrics.

Next, the predetermined conditional distribution used for training and the true conditional distribution after optimization are examined. Although and are expected to be equivalent after optimization, the theoretical relationship between both is not well discussed. Assume . In this case, the metric is derived as . Using Eq. 53, the following equations are derived:

| (75) |

Assume that for all are equivalent. Then the next equation is derived:

| (76) |

Because the variance of each dimension is , the conditional distribution after optimization is estimated as .

If , i.e., the original VAE objective, both and are equivalent. This result is consistent with what is expected.

If , however, and are different. In other words, what -VAE really does is to scale a variance of the pre-determined conditional distribution in the original VAE by a factor of as Eq. 76. The detail is explained in Appendix B.3.

If is not SSE, by introducing a variable where satisfies , the metric can be replaced by SSE in the Euclidean space of .

B.2 Relation to Tishby et al. (1999)

The theory described in Tishby et al. (1999), which first proposes the concept of information bottleneck (IB), is consistent with our analysis. Tishby et al. (1999) clarified the behaviour of the compressed representation when the rate-distortion trade-off is optimized. denotes the signal space with a fixed probability and denotes its compressed representation. Let be a loss metric. Then the rate-distortion trade-off can be described as:

| (77) |

By solving this condition, they derive the following equation:

| (78) |

As shown in our discussion above, will hold in the metric defined space from our VAE analysis. This result is equivalent to Eq. 78 in their work if is SSE and is set to , as follows:

| (79) |

If is not SSE, the use of the space transformation explained in appendix B.1 will lead to the same result.

B.3 Relation to -VAE (Higgins et al., 2017)

This section explains the clear understanding of -VAE (Higgins et al., 2017), and also corrects some of their theory.

In Higgins et al. (2017), ELBO equation is modified as:

| (80) |

However, they use the predetermined probabilities of such as the Bernoulli and Gaussian distributions in training (described in table 1 in Higgins et al. (2017)). As shown in our appendix G.2, the log-likelihoods of the Bernoulli and Gaussian distributions can be regarded as BCE and SSE metrics, respectively. As a result, the actual objective for training in Higgins et al. (2017) is not Eq. 80, but the objective in Eq. 3 using BCE and SSE metrics with varying . Thus ELBO as Eq. 1 form will become in the BCE / SSE metric defined space regardless or not, as shown in appendix B.1.

Actually, the equation 80 dose not show the log-likelihood of after optimization. When and are applied, the value of Eq. 80 is derived as , which is different from the log-likelihood of in Eq. 73 if .

Correctly, what -VAE really does is only to scale the variance of the pre-determined conditional distribution in the original VAE by a factor of . In the case the pre-determined conditional distribution is Gaussian , the objective of -VAE can be can be rewritten as a linearly scaled original VAE objective with a Gaussian where the variance is instead of :

| (81) | |||||

Here, the underlined terms in the last equation is just the ELBO with the predetermined conditional distribution . So the optimization of -VAE objective with the predetermined conditional distribution is just the same as the optimization of the original VAE objective (=1) with with the predetermined conditional distribution .

B.4 Relation to Alemi et al. (2018)

Alemi et al. (2018) discuss the rate-distortion trade-off by the theoretical entropy analysis. Their work is also presumed that the objective was not mistakenly distinguished from ELBO, which leads to the incorrect discussion. In their work, the differential entropy for the input , distortion , and rate are derived carefully. They suggest that VAE with is sensitive (unstable) because and can be arbitrary value on the line . Furthermore, they also suggest that at and at will hold as shown the figure 1 of their work.

In this appendix, we will show that determines the value of and specifically. We also show that will hold regardless or not.

In their work, these values of , , and are mathematically defined as:

| (82) | |||||

| (83) | |||||

| (84) |

Here, is a true PDF of , is a stochastic encoder, is a decoder, and is a marginal probability of .

Our work allows a rough estimation of Eqs. 82-84 with by introducing the implicit isometric variable as explained in our work.

Using isometric variable and the relation , Eq. 83 can be rewritten as:

| (85) |

Let be the implicit isometric latent variable corresponding to the mean of encoder output . As discussed in section 4.1, will hold. Because of isometricity, the value of will be also close to . Though must depend on , this important point has not been discussed well in this work. By using the implicit isometric variable, we can connect both theoretically. Thus, can be estimated as:

| (86) | |||||

Second, is examined. is a marginal probability of . Using the relation and , Eq. 84 can be rewritten as:

| (87) |

Because of isometricity, will approximately hold where denotes a decoder output. Thus can be approximated by:

| (88) |

Here, if , i.e., added noise, is small enough compared to the variance of , a normal distribution function term in this equation will act like a delta function. Thus can be approximated as:

| (89) |

In the similar way, the following approximation will also hold.

| (90) |

By using these approximation and applying Eqs. 85-86, in Eq. 84 can be approximated as:

| (91) | |||||

As discussed above, and can be specifically derived from . In addition, Shannon lower bound discussed in Alemi et al. (2018) can be roughly verified in the optimized VAE with clearer notations using .

From the discussion above, we presume Alemi et al. (2018) might wrongly treat in their work. They suggest that VAE with is sensitive (unstable) because and can be arbitrary value on the line ; however, our work as well as Tishby et al. (1999) (appendix B.2) and Dai & Wipf (2019)(appendix B.5) show that the differential entropy of the distortion and rate, i.e., and , are specifically determined by after optimization, and will hold for any regardless or not. Alemi et al. (2018) also suggest should satisfy because is a distortion; however, we suggest should be treated as a differential entropy and can be less than 0 because is once handled as a continuous signal with a stochastic process in Eqs. 82-84. Here, can be if , as also shown in Dai & Wipf (2019). Thus, upper bound of at is not , but , as shown in RD theory for a continuous signal. Huang et al. (2020) show this property experimentally in their figures 4-8 such that seems to diverge if MSE is close to 0.

B.5 Relation to Dai et al. (2018) and Dai & Wipf (2019)

Dai et al. (2018) analyses VAE by assuming a linear model. As a result, the estimated posterior is constant. If the distribution of the manifold is the Gaussian, our work and Dai et al. (2018) give a similar result with constant posterior variances. For non-Gaussian data, however, the quantitative analysis such as probability estimation is intractable using their linear model. Our work reveals that the posterior variance gives a scaling factor between in VAE and in the isometric space when VAE is ideally trained with rich parameters. This is validated by Figures 3 and 3, where the estimation of the posterior variance at each data point is a key.

Next, the relation to Dai & Wipf (2019) is discussed. They analyse a behavior of VAE when ideally trained. For example, the theorem 5 in their work shows that and hold if , where , and denote a variance of , data dimension, and latent dimension, respectively. By setting and , this theorem is consistent with and derived in Eq. 86 and Eq. 91.

B.6 Relation to Rate-distortion theory (Berger, 1971) and transform coding (Goyal, 2001; Pearlman & Said, 2011)

RD theory (Berger, 1971) formulated the optimal transform coding (Goyal, 2001; Pearlman & Said, 2011) for the Gaussian source with square error metric as follows. Let be a point in a dataset. First, the data are transformed deterministically with the orthonormal transform (orthogonal and unit norm) such as Karhunen-Loève transform (KLT) (Rao & Yip, 2000). Note that the basis of KLT is equivalent to a PCA basis. Let be a point transformed from . Then, is entropy-coded by allowing equivalent stochastic distortion (or posterior with constant variance) in each dimension. A lower bound of a rate at a distortion is denoted by . The derivation of is as follows. Let be the -th dimensional component of and be the variance of in a dataset. It is noted that is the equivalent to eigenvalues of PCA for the dataset. Let be a distortion equally allowed in each dimensional channel. At the optimal condition, the distortion and rate on the curve is calculated as a function of :

| (92) |

The simplest way to allow equivalent distortion is to use a uniform quantization (Goyal, 2001). Let be a quantization step, and be a round function. Quantized value is derived as , where . Then, is approximated by as explained in Appendix G.1.

To practically achieve the best RD trade-off in image compression, rate-distortion optimization (RDO) has also been widely used (Sullivan & Wiegand, 1998). In RDO, the best trade-off is achieved by finding a encoding parameter that minimizes the cost at given Lagrange parameter as:

| (93) |

This equation is equivalent to VAE when .

We show the optimum condition of VAE shown in Eq. 62 and 65 can be mapped to the optimum condition of transform coding (Goyal, 2001) as shown in Eq. B.6. First, the derivation of Eq. B.6 is explained by solving the optimal distortion assignment to each dimension. In the transform coding for dimensional the Gaussian data, an input data is transformed to using an orthonormal transform such as KLT/DCT. Then each dimensional component is encoded with allowing distortion . Let be a target distortion satisfying . Next, denotes a variance of each dimensional component for the input dataset. Then, a rate can be derived as . By introducing a Lagrange parameter and minimizing a rate-distortion optimization cost , the optimum condition is derived as:

| (94) |

This result is consistent with Eq. 62 and 65 by setting . This implies that is a rate-distortion optimization (RDO) cost of transform coding when is deterministically transformed to in the implicit isometric space and stochastically encoded with a distortion .

Appendix C Details of the networks and training conditions in the experiments

This appendix explains the networks and training conditions in Section 5.

C.1 Toy data set

This appendix explains the details of the networks and training conditions in the experiment of the toy data set in Section 5.1.

Network configurations:

FC(i, o, f) denotes a FC layer with input dimension i, output dimension o, and activate function f.

The encoder network is composed of FC(16, 128, tanh)-FC(128, 64, tahh)-FC(64, 3, linear) (for and ). The decoder network is composed of FC(3, 64, tanh)-FC(64, 128, tahh)-FC(128, 16, linear).

Training conditions:

The reconstruction loss is derived such that the loss per input dimension is calculated and all of the losses are averaged by the input dimension .

The KL divergence is derived as a summation of as explained in Eq. 2.

In our code, we use essentially the same, but a constant factor scaled loss objective from the original -VAE form in Eq. 1, such as:

| (95) |

Equation 95 is essentially equivalent to , multiplying a constant to the original form. The reason why we use this form is as follows. Let be the true ELBO in the sense of log-likelihood, such as . As shown in Eq. 57, the minimum of the loss objective in the original -VAE form is likely to be a . If we use Eq. 95, the minimum of the loss objective will be , which seems more natural form of ELBO. Thus, Eq. 95 allows estimating a data probability from in Eqs. 19 and 21, without scaling by .

Then the network is trained with using 500 epochs with a batch size of 128. Here, Adam optimizer is used with the learning rate of 1e-3. We use a PC with CPU Inter(R) Xeon(R) CPU [email protected], 32GB memory equipped with NVIDIA GeForce GTX 1080. The simulation time for each trial is about 20 minutes, including the statistics evaluation codes.

C.2 CelebA data set

This appendix explains the details of the networks and training conditions in the experiment of the toy data set in Section 5.2.

Network configurations:

CNN(w, h, s, c, f) denotes a CNN layer with kernel size (w, h), stride size s, dimension c, and activate function f.

GDN and IGDN †††Google provides a code in the official Tensorflow library (https://github.com/tensorflow/compression) are activation functions designed for image compression (Ballé et al., 2016).

This activation function is effective and popular in deep image compression studies.

The encoder network is composed of CNN(9, 9, 2, 64, GDN) - CNN(5, 5, 2, 64, GDN) - CNN(5, 5, 2, 64, GDN) - CNN(5, 5, 2, 64, GDN) - FC(1024, 1024, softplus) - FC(1024, 32, None) (for and ) in encoder.

The decoder network is composed of FC(32, 1024, softplus) - FC(1024, 1024, softplus) - CNN(5, 5, 2, 64, IGDN) - CNN(5, 5, 2, 64, IGDN) - CNN(5, 5, 2, 64, IGDN)-CNN(9, 9, 2, 3, IGDN).

Training conditions:

In this experiment, SSIM explained in Appendix G.2 is used as a reconstruction loss.

The reconstruction loss is derived as follows.

Let be a SSIM calculated from two input images.

As explained in Appendix G.2, SSIM is measured for a whole image, and its range is between and .

If the quality is high, SSIM value becomes close to 1.

Then is set to .

We also use the loss form as in Equation 95 in our code. In the case of the decomposed loss, the loss function is set to in our code. Then, the network is trained with using a batch size of 64 for 300,000 iterations. Here, Adam optimizer is used with the learning rate of 1e-3.

We use a PC with CPU Intel(R) Core(TM) i7-6850K CPU @ 3.60GHz, 12GB memory equipped with NVIDIA GeForce GTX 1080. The simulation time for each trial is about 180 minutes, including the statistics evaluation codes.

Appendix D Additional results in the toy datasets

D.1 Scattering plots for the square error loss in Section

Figure 10 shows the plots of and estimated probabilities for the square error coding loss in Section 5.1, where the scale factor in Eq. 21 is . Thus, both and show a high correlation, allowing easy estimation of the data probability in the input space. In contrast, still shows a low correlation. These results are consistent with our theory.

D.2 Ablation study using 3 toy datasets, 3 coding losses, and 10 parameters.

In this appendix, we explain the ablation study for the toy datasets. We introduce three toy datasets and three coding losses including those used in Section 5.1. We also change from to in training. The details of the experimental conditions are shown as follows.

Datasets: First, we call the toy dataset used in Section 5.1 the Mix dataset in order to distinguish three datasets. The second dataset is generated such that three dimensional variables , , and are sampled in accordance with the distributions , , and in Figure 12. The variances of the variables are the same as those of the Mix dataset, i.e., 1/6, 2/3, and 8/3, respectively. We call this the Ramp dataset. Because the PDF shape of this dataset is quite different from the prior , the fitting will be the most difficult among the three. The third dataset is generated such that three dimensional variables , , and are sampled in accordance with the normal distributions , , and , respectively. We call this the Norm dataset. The fitting will be the easiest, because both the prior and input have the normal distributions, and the posterior standard deviation, given by the PDF ratio at the same CDF, can be a constant.

Coding losses: Two of the three coding losses is the square error loss and the downward-convex loss described in Section 5.1. The third coding loss is a upward-convex loss which we design as Eq. 96 such that the scale factor becomes the reciprocal of the scale factor in Eq. 5.1:

| (96) |

Figure 12 shows the scale factors in Eqs. 5.1 and 96, where in moves within .

Parameters: As explained in Appendix C.1, is used as a hyper parameter. Specifically, , , , , , , , , , and are used.

to generate a Ramp dataset.

Figures 13 - 21 show the property measurements for all combinations of the datasets and coding losses, with changing . In each Figure, the estimated norms of the implicit transform are shown in the figure (a), the ratios of the estimated variances are shown in the figure (b), and the correlation coefficients between and estimated data probabilities are shown in the figure (c), respectively.