Quantifying the Effects of Recommendation Systems

Abstract

Recommendation systems today exert a strong influence on consumer behavior and individual perceptions of the world. By using collaborative filtering (CF) methods to create recommendations, it generates a continuous feedback loop in which user behavior becomes magnified in the algorithmic system. Popular items get recommended more frequently, creating the bias that affects and alters user preferences. In order to visualize and compare the different biases, we will analyze the effects of recommendation systems and quantify the inequalities resulting from them.

Index Terms:

recommendation systems, collaborative filtering, inequality, popularity biasI Introduction

With the increasing amount of data available, recommender systems are becoming pervasive in many online applications in order to help users navigate huge amounts of information and influence everyday decision making. By 2020, there will be around 40 trillion gigabytes of data, which will consist of social media data, emails, and internet searches [8]. All this data could be easily utilized to train and update recommendation systems in online platforms, such as Facebook, Instagram, and Amazon, in order to provide better recommendations for users.

However, because these algorithms are trained on real-world data, which may contain all sorts of social biases [13], there are concerns about the risk of artificial intelligence (AI) exacerbating and perpetuating the present biases. For example, there is increasing evidence that online media is causing political polarization [5]. This polarization, if not considered, could be reinforced by news recommendation systems that are dynamically trained as new data comes into the system. Indeed, this same phenomena can create a rich-get-richer effect that reinforces the popularity of already-popular products [9]. The issue comes when a recommendation system recommends choices that are universally popular, thus affecting the behavior of users in the system. These users will interact more with already popular items, creating biased data that will be used as input for retraining the algorithmic recommendations. This process is called the feedback loop. Chaney, Stewart, and Engelhardt discussed what happens during the feedback loop and how it would be problematic [7]. Because of this loop, online platforms might optimize recommendations based on what is considered popular with the majority group, which causes popularity bias and homogenizes users’ interests and perceptions. Popularity bias is when popular items are recommended frequently while less popular, niche products, are recommended rarely. Needs and preferences of the minority could be undermined if these issues are not considered.

Common recommendation systems, such as Amazon, are based on sales and ratings. It shows that people who bought product A also bought product B, which means novel items that have not been discovered by many other people might have a hard time surfacing and getting exposure. This tends to create the rich-get-richer effect mentioned earlier for popular items as it prevents consumers from finding better product matches because of this popularity bias [9, 12]. However, increasing the discovery of novel items could also create a bias that is more personalized. For example, if we consume news through personalized recommendations, our perspective on the world would also be narrow. The key challenge is finding a balance between accuracy of the recommendation and diversity of the recommendation set.

Figure 1 illustrates the concept of “long tail” items, which are items that have average popularity [1]. These items are considered to be good, but typically do not surface in recommendations. This is because these items are not exposed to many people, so they do not have as many indicators that convey its quality to a broader audience. The x axis represents the item rank and the y axis represents the number of ratings per item. Indicated by the curve of the graph, “short head” items are very popular and receive much more viewer attention.

In this paper, we will first discuss related works and their experiments (section 2). We introduce our claims that all filtering methods, besides the random method, increase inequality compared to optimal collaborative filtering (CF) recommendation systems and dynamic training has more inequality than static training (section 3). Next, we proceed to describe our methodology in conducting our own experiments to quantify the effects of inequality (section 4). To conduct our experiments we created several algorithm frameworks using CF and non-CF methods (section 5); this allows us to visualize the effects of each recommendation model and how they apply to real models used in online platforms (section 6). We will discuss how the results from our models convey inequalities in real life models (section 7) before we conclude (section 8).

II Related work

Similar to our approach, researchers have explored the effects on diversity of various collaborative recommendation methods by running simulations on synthetic data and assuming different models of choice or preference for the users [9, 7]. Chaney, Stewart, and Engelhardt [7] used simulations to demonstrate how using data from users exposed to algorithmic recommendations homogenizes user behavior. They created six different recommendation algorithms with different filtering methods for the experiments: popularity, matrix factorization, random, ideal, content filtering, and social filtering. All six of the approaches recommend from the set of items that existed in the system at the time of training. Their experiments showed that these methods did cause homogeneity through their simulated communities. A similar approach was taken in [9], except that the choices of consumers were modeled using the multinomial logit instead of a simple linear model of preference. In our experiments, we do not take any assumptions on the data nor the preferences of users as we compare five filtering methods using real-world data gathered from users in [10].

In October 2006, Netflix released a dataset containing 100 million anonymous movie ratings and challenged the data mining, machine learning and computer science communities to develop systems that could beat the accuracy of its recommendation system, Cinematch [4]. Through this challenge, Netflix became a contribution to the rise in popularity of rating prediction in recommendation algorithms. In regards to their algorithm, Cinematch automatically analyzes the accumulated movie ratings weekly using a variant of Pearson’s correlation to determine a list of “similar” movies. After a user provides ratings, their recommendation process uses multivariate regression in real-time based on the correlations computed previously to make a personalized recommendation. The challenge primarily was concerned with accuracy rather than inequality in recommendations. The main differences between our frameworks are that we are determining “similar” users rather than “similar” items and that our recommendation process happens offline rather than real-time. Although accuracy is important to our algorithm, our main focus is to predict and visualize the inequalities.

Many experiments, such as a music artists study [6] and a sales diversity experiment [9], were conducted to understand the behaviors of recommender systems and whether they affect diversity by reinforcing the popularity of already-popular items. These empirical studies along with other previous works [14, 16, 18] discuss issues of popularity bias and item inequality resulting from long-term effects of usual recommendation algorithms. As more people rely on recommendation systems as a method to discover new things, popularity bias will become more prevalent than it is currently if more online platforms use these algorithms. Research has been done to address the growing issue of popularity bias. As a result, methods were proposed to create a balance between accuracy and diversity, such as using a Novelty Score [3], partial clustering [14], and using multiple recommender systems in one framework [16]. It is also possible to sacrifice a small fraction of short-term recommendation accuracy in exchange for higher long-term diversity [18].

The experiments in previous works were done either using more popular datasets such as MovieLens111https://grouplens.org/datasets/movielens/ and Netflix or were based on simulations. Celma and Cano’s music study was based on Last.fm data, but its rating scale is “play counts” instead of a limited range of values. In our experiment, we use Jester’s dataset with Pearson correlation (Pearson), popularity (pop), matrix factorization (MF), random, and optimal as recommendation algorithms.

III Problem Statement

Real-world recommendation systems are trained using data from users who use the platforms. The problem arises when repeated recommendations could cause homogeneity because of the popularity bias and its continuous feedback loop. This is especially problematic through CF methods since one user’s decisions would heavily influence those of another user. We will be focusing on analyzing and quantifying the effects of CF models to answer our claim: repeated training increases inequality in CF recommendation systems.

IV Experimental Approach

We conducted offline experiments on an existing dataset from a joke recommendation system, Jester 5.0 222http://eigentaste.berkeley.edu/, that was developed at University of California, Berkeley [10]. They implemented their own CF algorithm, Eigentaste, to make the recommendations [11]. The dataset is a matrix that contains the following information:

-

1.

100 joke IDs

-

2.

73,421 user IDs

-

3.

number of jokes a user has rated

-

4.

over 4.1 million continuous ratings (-10.00 to +10.00)

We only use the first file of this dataset (Dataset 1), which contains 24,983 users and about 250,000 continuous ratings. Using the analysis on Dataset 1 will give an idea of the inequalities that might be present in their system, which could also suggest inequalities that might exist in other online platforms. A system has inequalities if the same few items are being recommended frequently, thus not all items are given equal exposure and consideration to be recommended.

IV-A Data

The actual data was organized in a matrix where the rows represented the users and the columns were the jokes. There was an additional column designated to record the number of jokes a user rated. Users had rated at least 36 jokes. For the jokes that were unrated, their values were 99 rather than leaving them blank. The graph in Figure 2 shows the popularity based on the real dataset. The shape of the graph in Figure 2 strengthens the concept of popularity bias and “long-tail” items mentioned in the beginning. It shows that bias is already present before conducting our experiment, which means there could have been recommendation inequality using Eigentaste.

Dataset 1 has no correlation between the number of ratings for a joke and its mean rating, as shown in Figure 3. More ratings for a joke does not necessarily mean it has a higher mean rating. This gives an idea of how Eigentaste made recommendations. Since it is a CF algorithm, it was focused more on recommending based on a higher mean rating rather than a high number of ratings (which could contain high and low value ratings) for a certain joke.

IV-B Cases

These are the 2 cases we used for experimental analysis.

Case 1: single training. For every test user, if the recommended joke does not have a rating, it is labeled as “no rating,” but the joke ID is still recorded. Unlike Case 2, any ratings and data on each test user are not incorporated into the training set.

Case 2: repeated training. For every test user, if the recommended joke does not have a rating, we move to the next joke with the second best recommendation score. If we do not find a joke with a rating within the top 3 recommended jokes, then we simply do not have a rating for the test user. The new inputs that are included in the retraining of the training set are the ratings of the random jokes during the profiling phase and the rating of the recommended joke. For example, if user Y was given 10 random jokes to rate and was given 1 recommendation afterwards, then the ratings of those 11 jokes are incorporated into the training set for retraining. We set , where new inputs are incorporated only after every th iteration of an algorithm.

IV-C Method

In this section, we will lay out the general procedure for conducting our experiments.

Reorganize data and filter users who have rated at least 50% of the jokes. Little data processing and cleaning was needed other than adding labels to categorize the data for easy manipulation. We used about 20,000 users who had rated at least 50% of the jokes to decrease the chance of not having a rating for a joke that could be recommended through our system. Since we are only using the data from Dataset 1, any rating predictions we make are used as a guide and are not treated as actual data as they will not be true to the user. The analysis we are performing is reliant on true data from the user so we can preserve their true preferences that are conveyed through the previously rated jokes. Because of this aspect, we attempt to lower the chances of making a recommendation that has no rating data by taking users who have rated at least 50% of the jokes.

Divide users into 2 groups: training set and test set. For the training set, we randomly picked 500 users for a smaller training set to serve as the starting point since for the filtering methods, we need data in the system first before we can make recommendations. For the test set, we randomly chose about 4000 users from the data to represent the “new users” being added through our system. As we run this experiment multiple times, we randomly change the training and test set with different users each time.

Create 2 different cases for filtering methods. Case 1 is single training (static) and Case 2 is repeated training (dynamic). To quantify the effect of the feedback-loop in recommendation systems, we compare dynamic training vs a one time static training of the recommendation methods. Both cases will start with 500 users from the training set. In single training, the number of users in the training set stays constant during the whole experiment. Static training will only utilize ratings of the static set to recommend jokes to the test users (“new users”). In repeated training, the starting set of users will be updated, thus the number of users in the system will not stay constant during the experiment. After every 100 recommendations, rating data from test users are incorporated into the starting set and their data will be considered in the calculation when making recommendations for the rest of the test users. Dynamic training mimics how real online platforms update data. Because of how often new users enter the system, the data that the model is making predictions on must have a similar distribution as the data on which the model was trained in order to make accurate predictions. Data distributions can be expected to drift over time, so retraining a model on newer data is common in online platforms since the data distribution could have deviated significantly from the original training data distribution [15]. Online platforms retrain their models periodically to ensure the accuracy of their recommendations. By using multiple methods, we are able to see the inequalities that are present and make comparisons about the impact of retraining on the system.

Run Case 1 and Case 2 each multiple times on 10 jokes for the filtering methods. The gauge set in the data consisted of 10 jokes that every user in Dataset 1 has rated. This serves as the common set of jokes used to profile the users before making recommendations. We run the algorithm to simulate the action of giving the test users 10 jokes from the gauge set to rate. We follow the same procedure for both cases for the above methods. Based on those ratings, we compare the test user to the current database of users and its predicted ratings to generate a recommendation.

Record which jokes were recommended, the order of recommended jokes, and their ratings. For each test user, only 1 recommendation is made. We record the joke ID and the rating of the recommended joke for each test user for both static training and dynamic training. We also record the order of the recommended jokes as it is needed to calculate the inequalities overtime. For the jokes that have no rating, we categorize them as “no rating,” but they are still part of the recommendation order and inequality calculation. For dynamic training, since we are retraining, it is ideal to obtain a recommended rating rather than no rating. More details will be discussed in the next section on how the algorithm works for the 2 cases.

Use the Gini coefficient to measure the inequalities overtime. To measure the inequalities, we calculate the frequencies of joke i (xi) and joke j (xj) being recommended out of n number of recommended jokes. These frequencies are then inputted into the equation defined below to calculate the Gini coefficient G:

| (1) |

The value output from the formula is between the range of 0 and 1— 0 being perfect equality and 1 being maximal inequality. During the experiment, we calculate the Gini every 100 recommendations to produce a visualization of the inequality overtime.

V Recommendation Algorithms

Next, we describe in detail all the recommendation methods analysed in our simulations. For Pearson correlation and matrix factorization (MF), which are CF methods, the algorithms are more complex compared to the popularity, random, and optimal methods, which are non-CF methods.

V-A Random Method

For this method, we expect the Gini coefficient to be a low value because the randomness of the recommendations should give each joke an equal chance at being recommended. This means that all 100 jokes should have been recommended during the experiment. The jokes are treated the same in quality, which means their ratings have no advantage when using the random method. Thus, there is very little recommendation inequality as the Gini coefficient is low. Although, there is little inequality, it is imbalanced in terms of joke quality. Chances of getting poor recommendations is high, so it is important to find a balance between recommendation inequality and joke quality. A recommendation system would not be effective if users get recommended items that do not match their preferences. Using random filtering methods increases the chances of getting poor recommendations since they are not personalized.

V-B Popularity Method

Our popularity method takes the most popular jokes, the ones with the highest average rating, and recommends one of them to the test users. In other words, a joke’s probability of being recommended is proportional to its rating. This means that if a joke has a high probability of being recommended, then it has a high mean rating. We expect this type of popularity method to have the highest inequality because it only recommends a select amount of jokes that have high probabilities and high mean ratings. The Gini coefficient for this method is the highest out of all the filtering methods. However, if there are many popular jokes, such that among the 100 jokes, the majority had similar ratings that exhibit their popularity, then the inequality would not be as obvious. In the real world, recommendation systems do not always recommend only a few popular items. Depending on the platform and the amount of user data it has, popularity could span to a majority of items, which could lower the overall recommendation inequality.

We will not be focusing on the popularity method with maximal inequality mentioned previously as most online platforms would not use this type of algorithm. Instead, we will be using these two popularity methods: 1) one that uses the joke’s mean rating scaled to an exponent of 1 and the other that uses the joke’s mean rating scaled to an exponent of 2. The methods are defined by the equation below:

| (2) |

is the probability of joke being recommended, is the mean rating of joke , is the exponent, i.e 1 or 2, and is the sum of the scaled mean ratings of jokes. Scaling it to exponent 2 increases and exaggerates the probability of a joke being picked based on its popularity, making it even more likely to pick a popular joke but not restricting it to only a select amount of popular jokes.

V-C Pearson Correlation

We modified a simple CF Pearson correlation algorithm written in 2012 by Wai Yip Tung [17]. The main idea is that we compute the similarity score between a user X from the training set for all users and a user Y from the test set. Using that similarity score as the weight, we calculate a value, in which we label as the similar rating score, by combining the weight with each joke rating. We repeat this with all users in the training set. In other words, each test user will have at least 500 similarity scores, one for each user in the training set, and 100 similar rating scores, one for each joke. Although 100 similar rating scores are given, we only use at most 90 ratings since we exclude the gauge set. In the original dataset from Jester 5.0, the gauge set was not part of the recommendations so we excluded it so our recommendation process would be similar to Jester. The similarity score is calculated using Pearson correlation X,Y, which is defined as:

| (3) |

Covariance cov for n total ratings is

| (4) |

where Xi are the ratings of user X, is the mean of Xi, Yj are the ratings of user Y and is the mean of Yj. The covariance is the joint variability of the 2 users. Pearson correlation gives a more accurate range (from 0 to 1) on user similarity. Close to 0 means that users have different preferences and close to 1 means users have similar preferences.

To calculate and normalize the recommendation score for each joke, we divide the sum of the similar rating scores by the sum of the similarity scores. We sort the jokes with the highest recommendation score and exclude any recommended jokes that are part of the gauge set. The joke with the highest recommendation score is recommended. There are some cases where the top recommended joke does not have a rating recorded in Dataset 1. This is because that our CF algorithm behaves differently and would not necessarily give the exact same recommendations as Eigentaste. Nevertheless, our algorithm handles that aspect differently in both cases.

V-D Matrix Factorization

For MF, we modified another simple algorithm by Nick Becker in 2016 [2]. The process of using the training set and test set is similar to the CF Pearson algorithm, but the way it creates recommendations is different. This algorithm utilizes singular value decomposition (SVD), which decomposes a matrix to a smaller approximation of the original matrix Mathematically, it decomposes into two unitary matrices and a diagonal matrix:

| (5) |

where is a user ratings matrix, is the user “features” matrix, is the diagonal matrix of singular values (weights), and is the joke “features” matrix. represents how much users “like” each feature and represents how relevant each feature is to each joke. To get the lower rank approximation, we take these matrices and keep only the top features, which we think of as the most important underlying taste and preference vectors [2]. In other words, these features are not explicitly passed when computing the recommendations. It is through matrix multiplication that it had generated some hidden “features” and picked up underlying preferences of users. After multiplying the 3 matrices, we get prediction ratings for each user. If prediction ratings for a user are high for certain jokes, then we recommend those jokes to the user.

V-E Optimal Method

We use this model to compare against the real preferences of people. It serves as the baseline for what people actually like, which encourages recommendation systems to improve their frameworks to meet people’s expectations. In the optimal method, the Gini coefficient stabilizes at a value very quickly. Optimal does not necessarily mean perfect equality, but in a sense that all users like the items being recommended to them. Note that this is very difficult in the real world. Recommendation algorithms will recommend items close to a user’s preferences, but they are not always accurate. There are times where users will reject the recommendations because they did not match their interests.

Since we defined the optimal case as having all users like the items being recommended, we assume those items have the highest ratings. Thus, the method is defined as:

| (6) |

where is a set of ratings for each user . There are a total of joke ratings for each user. To find the optimal joke for user , we find the highest or maximum rating in , which is defined below:

| (7) |

.

Unlike the cases for single training and repeated training, all recommended jokes will have ratings recorded previously because we are taking the highest rating that exists in Dataset 1 for each test user. For each user in this experiment, we find the joke that has the highest rating and record the joke ID and its rating. From here, the procedure for finding the Gini is the same as the above cases.

VI Results

In this section, we compare the experiments that resulted from our algorithms and evaluate the implications of the results.

In Figure 4, the optimal method is compared to all the other methods as well as the original data distribution. Comparing the graph to the earlier graph in Figure 2, the shape of this graph shows it is less biased by a huge degree. It is not perfect uniformity, as that would be impossible in the real world. Making it completely uniform would be difficult and unrealistic as there would have to be a constant number of people who like each joke. By having the optimal model, it creates a baseline for comparison when analyzing the various methods.

Figure 6 shows similar patterns of increasing inequalities in both static and dynamic training for Pearson, MF, and popularity methods compared to the optimal method. In single training, most methods stayed relatively with the same inequality as more recommendations were made overtime. The slopes of the lines are low, which shows that they are stabilizing in inequality with their recommendations. For the optimal, it appears to be higher in inequality compared to random, but, as mentioned earlier, recommendation inequality is not the only factor in determining an effective recommendation method. If inequality is too low, then users would frequently get recommendations that might not correspond to their preferences. In dynamic training, the graph shows a clear increase in growth for most of the methods, especially the popularity methods. The growth in Pearson is not as obvious, but its Gini and slope is greater than its counterpart in static training. Since various recommendation methods operate differently and can be more robust than others, their approaches can vary in inequality. Little changes between static and dynamic training could mean that the method is more robust than others. Nevertheless, in both cases, these recommendation methods have higher inequality than the optimal during the majority of the recommending process.

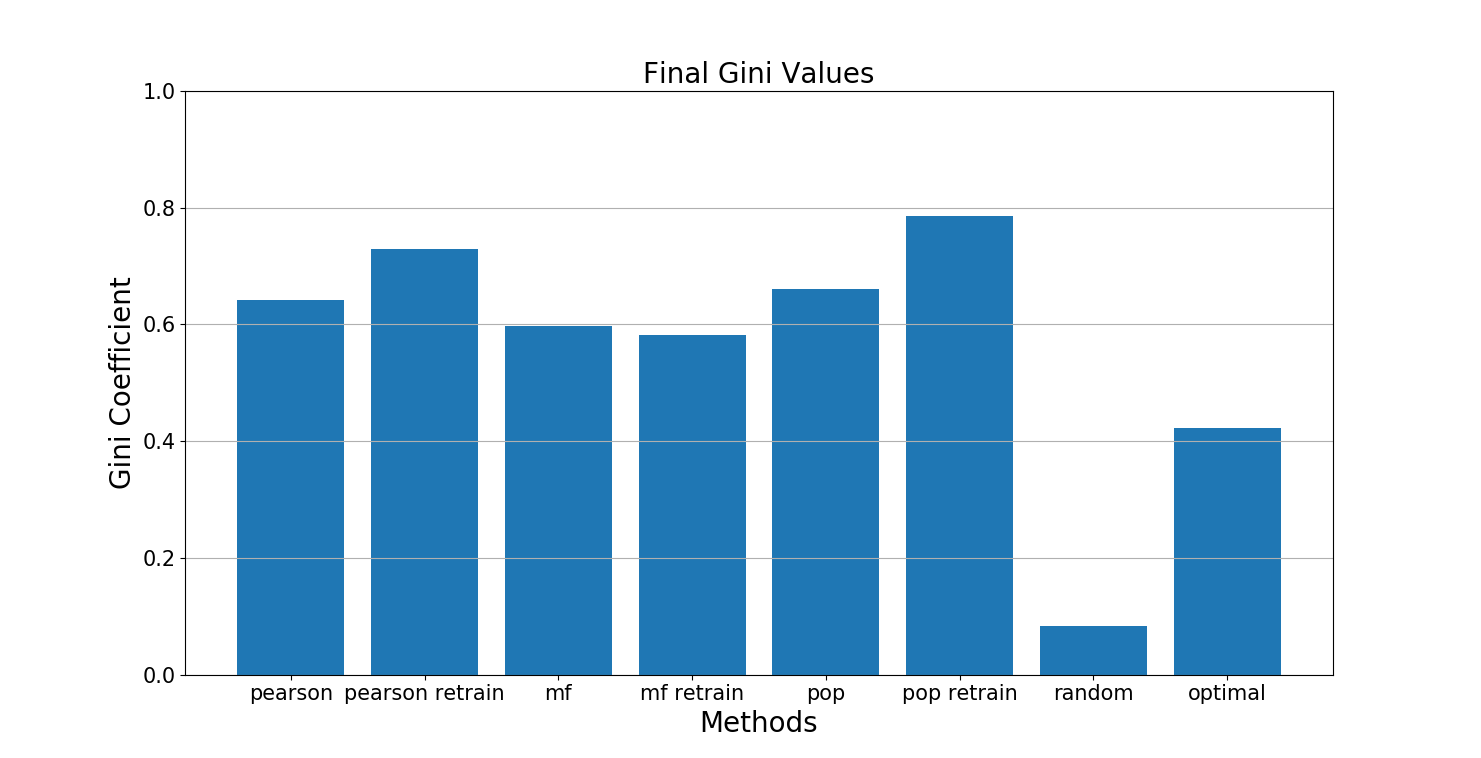

In Figure 5, we recorded the median Gini values for multiple trials of the experiment. The graph for the optimal Gini is around 0.4. From this experiment, we define the Gini of about 0.4 as the optimal case if a recommendation system recommends jokes that every user would like. In other words, giving recommendations that satisfies every user’s unique preferences shows that a diverse set of jokes are being recommended in the optimal case. Looking at Figure 5, the final Gini value of the optimal is the lowest, not including the random method, which proves that our claim that various filtering methods increase inequalities in CF recommendation systems.

VII Discussion

Based on our results, we could apply our findings to other datasets. For example, if we had a dataset of user ratings or likes from online platforms, such as Amazon, Instagram, Netflix, etc., analyzing their datasets will show if there is recommendation inequality present in their systems. This experiment works best with platforms that utilize CF models, but it could be modified for other types of filtering methods. Following our definition of the optimal case, the ideal recommendation system for live platforms might not have a Gini of exactly 0.4, but it should be lower compared to the Gini value of the actual recommendation framework. Our results suggest that real recommendation systems have plenty of room for improvement.

VIII Conclusion

An exploratory analysis of a CF dataset visualizes the impact of recommendation systems on users. We found that common recommendation methods such as Pearson, MF, and popularity, have more inequality in recommendations than the optimal and dynamic training has more inequality than static training, which reinforces the idea of feedback loop (section 6). These findings create awareness for designers and consumers in live recommendation platforms. Designers need to consider how these inequalities will affect their users. If these effects are not addressed, they will be amplified and become problematic as more users conform to interacting with only certain recommended items, creating the lack of diversity. Every system will have some bias; the best we can do is to reduce it. Every dataset is represented differently, and using certain recommendation methods might increase recommendation inequality. The first step in solving this issue is to increase awareness that using different recommendation methods affects the amount of inequality present. Once people realize that, then they can make individual choices or propose solutions for these recommendation algorithms in order to achieve the balance in quality and diversity shown in the optimal method.

Acknowledgements

This work was performed under REU Site program and funded by NSF grant #1659886.

References

- [1] Himan Abdollahpouri, Robin Burke, and Bamshad Mobasher. Managing popularity bias in recommender systems with personalized re-ranking. arXiv preprint arXiv:1901.07555, 2019.

- [2] Nick Becker. Matrix factorization for movie recommendations in python, 2016.

- [3] Punam Bedi, Anjali Gautam, Chhavi Sharma, et al. Using novelty score of unseen items to handle popularity bias in recommender systems. In 2014 International Conference on Contemporary Computing and Informatics (IC3I), pages 934–939. IEEE, 2014.

- [4] James Bennett, Stan Lanning, et al. The netflix prize. In Proceedings of KDD cup and workshop, volume 2007, page 35. New York, NY, USA., 2007.

- [5] James E Campbell. Polarized: Making sense of a divided America. Princeton University Press, 2018.

- [6] Òscar Celma and Pedro Cano. From hits to niches?: or how popular artists can bias music recommendation and discovery. In Proceedings of the 2nd KDD Workshop on Large-Scale Recommender Systems and the Netflix Prize Competition, page 5. ACM, 2008.

- [7] Allison JB Chaney, Brandon M Stewart, and Barbara E Engelhardt. How algorithmic confounding in recommendation systems increases homogeneity and decreases utility. arXiv preprint arXiv:1710.11214, 2017.

- [8] Jeff Desjardins. How much data is generated each day?, 2019.

- [9] Daniel Fleder and Kartik Hosanagar. Blockbuster culture’s next rise or fall: The impact of recommender systems on sales diversity. Management science, 55(5):697–712, 2009.

- [10] Ken Goldberg. Anonymous ratings from the jester online joke recommender system, 2019.

- [11] Ken Goldberg, Theresa Roeder, Dhruv Gupta, and Chris Perkins. Eigentaste: A constant time collaborative filtering algorithm. Information Retrieval, 4(2):133–151, 2001.

- [12] Kartik Hosanagar. Recommended for you’: How well does personalized marketing work? Report, Wharton School of University of Pennsylvania, 2015.

- [13] Ninareh Mehrabi, Fred Morstatter, Nripsuta Saxena, Kristina Lerman, and Aram Galstyan. A survey on bias and fairness in machine learning. arXiv preprint arXiv:1908.09635, 2019.

- [14] Yoon-Joo Park and Alexander Tuzhilin. The long tail of recommender systems and how to leverage it. In Proceedings of the 2008 ACM conference on Recommender systems, pages 11–18. ACM, 2008.

- [15] Amazon Web Services. Retraining models on new data, 2019.

- [16] Xiaoyu Shi, Ming-Sheng Shang, Xin Luo, Abbas Khushnood, and Jian Li. Long-term effects of user preference-oriented recommendation method on the evolution of online system. Physica A: Statistical Mechanics and its Applications, 467:490–498, 2017.

- [17] Wai Yip Tung. Collaborative filtering in 9 lines of code, 2012.

- [18] An Zeng, Chi Ho Yeung, Matúš Medo, and Yi-Cheng Zhang. Modeling mutual feedback between users and recommender systems. Journal of Statistical Mechanics: Theory and Experiment, 2015(7):P07020, 2015.