QR Decomposition of Dual Matrices and its Application to Traveling Wave Identification in the Brain

Abstract

Matrix decompositions in dual number representations have played an important role in recent years in fields such as kinematics and computer graphics. In this paper, we present a QR decomposition algorithm for dual number matrices, geared specifically toward traveling wave identification via proper orthogonal decomposition. For large-scale problems, we give explicit solutions for the QR, thin QR, and randomized QR decompositions of dual number matrices, together with algorithms for their column-pivoted variants. The QR decomposition of a dual matrix yields an accurate first-order perturbation of the QR factors, and its Q-factor satisfies rigorous perturbation bounds, which leads to enhanced orthogonality. In numerical experiments, we discuss the suitability of the different QR algorithms for various large-scale dual matrices and identify their respective domains of applicability. We then use the QR decomposition of dual matrices to compute the dual Moore-Penrose generalized inverse (DMPGI) with higher precision. Finally, we apply the QR decomposition to traveling wave identification, using proper orthogonal decomposition to perform a validation analysis of large-scale functional magnetic resonance imaging (fMRI) data for brain functional circuits. Compared with previous research, our approach significantly improves the identification of two types of wave signals, providing empirical evidence for cognitive neuroscience theories.

Key words. Dual matrices, QR decomposition, Proper orthogonal decomposition, Randomized algorithm, Traveling wave identification, Brain dynamics

Mathematics Subject Classification. 15A23, 15B33, 65F55

1 Introduction

Time series analysis is a technique for uncovering hidden information and patterns in time-ordered data. Principal Component Analysis (PCA) applies orthogonal transformations to time series and interprets the meaning of the resulting principal components [38]. Typically, only a small fraction of the components carry most of the information in the original data, and their importance decreases successively. However, although the principal components are mutually orthogonal, they are not necessarily independent.

A natural idea is to use dual numbers to augment the original data with time derivatives. This idea has been applied to traveling wave detection [34]. However, that approach has limitations in terms of orthogonality, particularly in brainwave detection. Since the target time series also represent signal waves, in this paper we adopt the notion of proper orthogonal decomposition and propose the QR decomposition of dual matrices as an effective and efficient way to improve traveling wave detection.

Clifford [5] introduced dual numbers in 1873 as a hypercomplex number system. A dual number has the form $a + b\epsilon$, where $a$ and $b$ are real numbers and the dual unit $\epsilon$ satisfies $\epsilon^2 = 0$ with $\epsilon \neq 0$. Dual numbers can be viewed as an infinitesimal extension of the real numbers, in which the infinitesimal part represents either a small perturbation of the standard part or the time derivative of time series data.

Owing to their distinctive properties, dual numbers find applications across diverse domains, including robotics [23], computer graphics [18], control theory [10], optimization [3], automatic differentiation [9], and differential equations [11]. Consequently, researchers are increasingly exploring fundamental theoretical aspects of dual matrices, including eigenvalues [21], the singular value decomposition (SVD) [15, 34], low-rank approximations [19, 36, 37], the dual Moore-Penrose pseudo-inverse [1, 6, 17, 27, 28, 29, 31], dual rank decomposition [32], and the QR decomposition [16], among others.

Properties and algorithms for dual matrix decompositions have long been a focus of research. Previous studies mainly computed these decompositions either by Gram-Schmidt orthogonalization or by solving redundant linear systems built from Kronecker products. For instance, Pennestrì et al. [17] used the Gram-Schmidt process to compute the QR decomposition of dual matrices, and Qi et al. [20] used redundant linear systems based on Kronecker products to compute the Jordan decomposition of dual complex matrices whose standard part is a Jordan block. These approaches are not well suited to large-scale problems.

Recently, Wei et al. [34] introduced a compact dual singular value decomposition (CDSVD) algorithm with explicit solutions. Examining their solution process, we observe that computing such decompositions essentially amounts to solving a generalized Sylvester equation. Building on this observation, we derive the QR decomposition of dual matrices (DQR), the thin QR decomposition of dual matrices (tDQR), and the randomized QR decomposition of dual matrices (RDQR), each together with a column-pivoting variant. The QR decomposition of dual matrices also provides an effective way to compute the dual Moore-Penrose generalized inverse (DMPGI) with higher precision. The DQR, tDQR, and RDQR proposed here are the proper orthogonal decompositions required by time series models, and our traveling wave detection experiments show that they fulfill this role well. Moreover, they exhibit stronger orthogonality on large-scale functional magnetic resonance imaging (fMRI) data of brain activity, enabling better identification of traveling wave signals in brain functional areas. For large-scale problems, we also observe that the proposed QR decompositions are faster than the CDSVD.

The rest of this paper is organized as follows. In Section 2, we present operational rules and notation for dual numbers and introduce the Sylvester equation. In Section 3, we introduce the concept of proper orthogonal decomposition for dual matrices, which leads to the QR decomposition (DQR), thin QR decomposition (tDQR), and randomized QR decomposition (RDQR) of dual matrices, each with a column-pivoting variant. We establish conditions for the existence of these decompositions and provide algorithms to compute them. In Section 4, we show from a perturbation analysis perspective that the QR decomposition of dual matrices is an accurate first-order perturbation whose Q-factor satisfies rigorous perturbation bounds, which confirms its improved orthogonality. In Section 5, we compare the computational times of the different QR decompositions for large-scale dual matrices and find that their ranges of applicability agree with theoretical expectations. We then use the QR decomposition of dual matrices to compute the DMPGI with higher precision. Moreover, we verify that the QR decomposition, based on the idea of proper orthogonal decomposition, effectively identifies traveling waves. Finally, we demonstrate its utility in characterizing brain functional regions from wave patterns in functional magnetic resonance imaging (fMRI) data.

2 Preliminaries

In this section, we briefly review some basic results for dual matrices and the Sylvester equation. Throughout, scalars, vectors, and matrices are denoted by lowercase letters (e.g., $a$), bold lowercase letters (e.g., $\mathbf{a}$), and uppercase letters (e.g., $A$), respectively. When presenting some matrix results, we use MATLAB notation.

2.1 Dual numbers

In mathematics, dual numbers extend the real numbers by introducing a second component with an infinitesimal unit $\epsilon$ satisfying $\epsilon \neq 0$ and $\epsilon^2 = 0$. This component captures infinitesimal changes and uncertainties in various mathematical operations.

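We denote by $\mathbb{D}$ the set of dual real numbers. Let $a = a_s + a_i\epsilon \in \mathbb{D}$ be a dual real number, where $a_s, a_i \in \mathbb{R}$. In this paper, $a_s$ and $a_i$ are called the standard part and the infinitesimal part of $a$, respectively. If $a_s \neq 0$, we say that $a$ is appreciable; otherwise, $a$ is infinitesimal. For two real matrices $A_s, A_i \in \mathbb{R}^{m\times n}$, $A = A_s + A_i\epsilon \in \mathbb{D}^{m\times n}$ is a dual real matrix, where $\mathbb{D}^{m\times n}$ denotes the set of $m\times n$ dual real matrices. The following proposition summarizes basic properties of dual real matrices.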
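To make the arithmetic concrete, here is a minimal Python sketch of a dual number $a_s + a_i\epsilon$. The class name `Dual` and its methods are illustrative assumptions, not part of the paper; the point is that multiplication drops the $\epsilon^2$ term and that only appreciable numbers ($a_s \neq 0$) are invertible.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Dual:
    """Dual number s + i*eps with eps != 0 and eps**2 = 0 (illustrative sketch)."""
    s: float        # standard part
    i: float = 0.0  # infinitesimal part

    def __add__(self, other: "Dual") -> "Dual":
        return Dual(self.s + other.s, self.i + other.i)

    def __mul__(self, other: "Dual") -> "Dual":
        # (a_s + a_i eps)(b_s + b_i eps) = a_s b_s + (a_s b_i + a_i b_s) eps;
        # the a_i b_i eps**2 term vanishes.
        return Dual(self.s * other.s, self.s * other.i + self.i * other.s)

    def is_appreciable(self) -> bool:
        return self.s != 0

    def inverse(self) -> "Dual":
        # 1 / (a_s + a_i eps) = 1/a_s - (a_i / a_s**2) eps, defined only if a_s != 0.
        if not self.is_appreciable():
            raise ZeroDivisionError("an infinitesimal dual number has no inverse")
        return Dual(1.0 / self.s, -self.i / self.s**2)


eps = Dual(0.0, 1.0)
a = Dual(2.0, 3.0)
print(eps * eps)        # Dual(s=0.0, i=0.0)
print(a * a.inverse())  # Dual(s=1.0, i=0.0)
print(a * a)            # Dual(s=4.0, i=12.0)
```

The last line shows the derivative interpretation: evaluating $x^2$ at $2 + 3\epsilon$ gives the infinitesimal part $2 \cdot 2 \cdot 3 = 12$, that is, the derivative at 2 scaled by the infinitesimal part 3.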

Proposition 2.1.

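[21] Suppose that $A = A_s + A_i\epsilon$ and $B = B_s + B_i\epsilon$ are dual matrices of compatible sizes. Then

1. The transpose of $A$ is $A^T = A_s^T + A_i^T\epsilon$. Moreover, $(AB)^T = B^TA^T$.

2. A square dual matrix $A$ is diagonal if the matrices $A_s$ and $A_i$ are both diagonal.

3. A dual matrix $A \in \mathbb{D}^{n\times n}$ is orthogonal if $A^TA = AA^T = I_n$, where $I_n$ is the $n\times n$ identity matrix. Furthermore, $A_s^TA_s = I_n$ and $A_s^TA_i + A_i^TA_s = O_{n\times n}$, which confirms the orthogonality of $A$.

4. A dual matrix $A \in \mathbb{D}^{n\times n}$ is invertible (nonsingular) if $AC = CA = I_n$ for some $C \in \mathbb{D}^{n\times n}$. Furthermore, such a $C$ is unique and is denoted by $A^{-1}$; when $A_s$ is nonsingular, $A^{-1} = A_s^{-1} - A_s^{-1}A_iA_s^{-1}\epsilon$.

5. When $m \ge n$, a dual matrix $A \in \mathbb{D}^{m\times n}$ is said to have orthogonal columns if $A^TA = I_n$. In particular, $A_s$ is then a real matrix with orthogonal columns, and $A_s^TA_i + A_i^TA_s = O_{n\times n}$, where $O_{n\times n}$ denotes the $n\times n$ zero matrix.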
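Items 3 and 4 can be checked numerically. The sketch below (plain Python; the helper names are hypothetical) builds $Q = Q_s + Q_i\epsilon$ from a rotation $Q_s$ and $Q_i = Q_s\Omega$ with a skew-symmetric $\Omega$, which is one way to satisfy $Q_s^TQ_i + Q_i^TQ_s = O$, and then verifies the first-order inverse $A^{-1} = A_s^{-1} - A_s^{-1}A_iA_s^{-1}\epsilon$ in exact rational arithmetic.

```python
import math
from fractions import Fraction as F


def matmul(X, Y):
    return [[sum(X[r][k] * Y[k][c] for k in range(len(Y))) for c in range(len(Y[0]))]
            for r in range(len(X))]


def transpose(X):
    return [list(row) for row in zip(*X)]


def add(X, Y):
    return [[x + y for x, y in zip(rx, ry)] for rx, ry in zip(X, Y)]


def neg(X):
    return [[-x for x in row] for row in X]


def inv2(M):
    # Inverse of a 2 x 2 matrix via the adjugate formula.
    (a, b), (c, d) = M
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]


# Item 3: orthogonal dual matrix with Q_s a rotation and Q_i = Q_s Omega.
theta, w = 0.7, 0.3
Q_s = [[math.cos(theta), -math.sin(theta)], [math.sin(theta), math.cos(theta)]]
Omega = [[0.0, -w], [w, 0.0]]  # skew-symmetric
Q_i = matmul(Q_s, Omega)
inf_part = add(matmul(transpose(Q_s), Q_i), matmul(transpose(Q_i), Q_s))

# Item 4: dual inverse, checked exactly with fractions.
A_s = [[F(2), F(1)], [F(0), F(3)]]
A_i = [[F(1), F(0)], [F(4), F(1)]]
X_s = inv2(A_s)
X_i = neg(matmul(matmul(X_s, A_i), X_s))
std_part = matmul(A_s, X_s)                          # standard part of A A^{-1}
inf_part2 = add(matmul(A_s, X_i), matmul(A_i, X_s))  # infinitesimal part of A A^{-1}

print(max(abs(x) for row in inf_part for x in row) < 1e-12)  # True
print(std_part == [[1, 0], [0, 1]], all(x == 0 for row in inf_part2 for x in row))  # True True
```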

2.2 Sylvester equation

The Sylvester equation, named after the 19th-century mathematician James Joseph Sylvester, is a prominent matrix equation in linear algebra. It is used widely in science and engineering, including control theory, signal processing, system stability analysis, and numerical analysis, and it arises naturally in the study of time-invariant linear systems, where its solutions help characterize the properties of these systems. The equation has the form

$$AX - XB = C, \qquad (2.1)$$

where $A \in \mathbb{R}^{m\times m}$, $B \in \mathbb{R}^{n\times n}$, and $C \in \mathbb{R}^{m\times n}$ are given matrices and $X \in \mathbb{R}^{m\times n}$ is the unknown matrix. Equation (2.1) has a unique solution if and only if the spectra of $A$ and $B$ are disjoint. We first recall the concept of the Moore-Penrose pseudoinverse.

Definition 2.1.

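[13] Given $A \in \mathbb{R}^{m\times n}$, its Moore-Penrose pseudoinverse, denoted $A^\dagger$, is the unique matrix $X \in \mathbb{R}^{n\times m}$ that satisfies the following four conditions:

$$AXA = A, \qquad XAX = X, \qquad (AX)^T = AX, \qquad (XA)^T = XA. \qquad (2.2)$$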
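For a matrix with full column rank, $A^\dagger = (A^TA)^{-1}A^T$, and the four Penrose conditions can be verified exactly with rational arithmetic. This is an illustrative sketch; the helper names are our own.

```python
from fractions import Fraction as F


def matmul(X, Y):
    return [[sum(X[r][k] * Y[k][c] for k in range(len(Y))) for c in range(len(Y[0]))]
            for r in range(len(X))]


def transpose(X):
    return [list(row) for row in zip(*X)]


def inv2(M):
    (a, b), (c, d) = M
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]


def penrose_conditions(A, X):
    """Truth values of the four Penrose conditions for a candidate X."""
    AX, XA = matmul(A, X), matmul(X, A)
    return (
        matmul(AX, A) == A,   # A X A = A
        matmul(XA, X) == X,   # X A X = X
        transpose(AX) == AX,  # (A X)^T = A X
        transpose(XA) == XA,  # (X A)^T = X A
    )


# A 3 x 2 matrix with full column rank, so A^+ = (A^T A)^{-1} A^T.
A = [[F(1), F(0)], [F(0), F(1)], [F(1), F(1)]]
A_pinv = matmul(inv2(matmul(transpose(A), A)), transpose(A))
print(penrose_conditions(A, A_pinv))  # (True, True, True, True)
```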

The solutions of the generalized Sylvester equation are characterized by the following theorem.

Theorem 2.1.

Theorem 2.1 is the key tool for the decompositions of dual matrices derived in the following sections.
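The standard Sylvester equation $AX - XB = C$ already shows the vectorization idea behind the "redundant linear systems" mentioned in the Introduction: with column-major $\operatorname{vec}$, $(I_n \otimes A - B^T \otimes I_m)\operatorname{vec}(X) = \operatorname{vec}(C)$. The sketch below (hypothetical helper names, exact rational arithmetic) solves a 2 by 2 instance this way. It costs $O((mn)^3)$ operations, which illustrates why this route is unattractive for large-scale problems; it is not the method proposed in this paper, and it does not reproduce the generalized form of Theorem 2.1.

```python
from fractions import Fraction as F


def matmul(X, Y):
    return [[sum(X[r][k] * Y[k][c] for k in range(len(Y))) for c in range(len(Y[0]))]
            for r in range(len(X))]


def solve_linear(M, rhs):
    """Gauss-Jordan elimination in exact arithmetic (assumes M is nonsingular)."""
    n = len(M)
    aug = [list(row) + [rhs[r]] for r, row in enumerate(M)]
    for col in range(n):
        piv = next(r for r in range(col, n) if aug[r][col] != 0)
        aug[col], aug[piv] = aug[piv], aug[col]
        for r in range(n):
            if r != col and aug[r][col] != 0:
                f = aug[r][col] / aug[col][col]
                aug[r] = [x - f * y for x, y in zip(aug[r], aug[col])]
    return [aug[r][n] / aug[r][r] for r in range(n)]


def solve_sylvester(A, B, C):
    """Solve A X - X B = C via (I_n kron A - B^T kron I_m) vec(X) = vec(C)."""
    m, n = len(A), len(B)
    K = [[F(0)] * (m * n) for _ in range(m * n)]
    for c in range(n):
        for r in range(m):
            row = c * m + r  # column-major index of X[r][c]
            for k in range(m):
                K[row][c * m + k] += A[r][k]  # (A X)[r][c] = sum_k A[r][k] X[k][c]
            for k in range(n):
                K[row][k * m + r] -= B[k][c]  # (X B)[r][c] = sum_k X[r][k] B[k][c]
    rhs = [C[r][c] for c in range(n) for r in range(m)]
    x = solve_linear(K, rhs)
    return [[x[c * m + r] for c in range(n)] for r in range(m)]


A = [[F(1), F(2)], [F(0), F(3)]]    # eigenvalues 1, 3
B = [[F(-1), F(0)], [F(1), F(-2)]]  # eigenvalues -1, -2 (disjoint from those of A)
X_true = [[F(1), F(2)], [F(3), F(4)]]
C = [[p - q for p, q in zip(rp, rq)] for rp, rq in zip(matmul(A, X_true), matmul(X_true, B))]
print(solve_sylvester(A, B, C) == X_true)  # True
```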

3 QR Decomposition of Dual Matrices

In prior research [34], the compact SVD of dual matrices was used to perform a proper orthogonal decomposition of dual matrices for traveling wave identification. This prompted us to explore whether the QR decomposition could serve the same purpose. In this section, we first establish the existence of the QR decomposition of dual matrices and propose an algorithm for it. We then present an algorithm for the QR decomposition with column pivoting in the standard part of dual matrices. Next, we extend our approach to the thin QR decomposition [13] and present algorithms for both the thin QR decomposition of dual matrices and the thin QR decomposition with column pivoting in the standard part. Finally, building on the randomized QR decomposition with column pivoting [7], we formulate an algorithm for the randomized QR decomposition with column pivoting in the standard part of dual matrices. The latter two classes of algorithms are designed for large-scale data (for example, $m \gg n$) and still provide a proper orthogonal decomposition.

3.1 QR Decomposition of Dual Matrices (DQR)

Theorem 3.1.

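(DQR Decomposition) Let $A = A_s + A_i\epsilon \in \mathbb{D}^{m\times n}$, and assume that $A_s = Q_sR_s$ is the QR decomposition of $A_s$, where $Q_s \in \mathbb{R}^{m\times m}$ is orthogonal and $R_s \in \mathbb{R}^{m\times n}$ is upper triangular. Then the QR decomposition $A = QR$ of the dual matrix $A$ exists, where $Q = Q_s + Q_i\epsilon \in \mathbb{D}^{m\times m}$ is an orthogonal dual matrix and $R = R_s + R_i\epsilon \in \mathbb{D}^{m\times n}$ is an upper triangular dual matrix. Moreover, the infinitesimal parts $Q_i$ and $R_i$ of $Q$ and $R$ are

$$Q_i = Q_s\Omega, \qquad R_i = Q_s^TA_i - \Omega R_s, \qquad (3.1)$$

where $\Omega \in \mathbb{R}^{m\times m}$ is a skew-symmetric matrix.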

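Writing $R_s = (r_{kj})$ and $Q_s^TA_i = (b_{kj})$, and assuming $m \ge n$ and $r_{jj} \neq 0$ for $j = 1, \dots, n$, the strictly lower triangular entries of $\Omega = (\omega_{kj})$ in the first $n$ columns are

$$\omega_{kj} = \frac{1}{r_{jj}}\Bigl(b_{kj} - \sum_{l=1}^{j-1}\omega_{kl}\,r_{lj}\Bigr), \qquad k > j, \quad j = 1, \dots, n, \qquad (3.2)$$

and the remaining strictly lower triangular entries are zero; the upper triangular part follows from $\Omega^T = -\Omega$.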

Proof.

Assume that the QR decomposition of $A$ exists. Comparing the standard and infinitesimal parts of $A = QR$ gives

$$A_s = Q_sR_s, \qquad A_i = Q_sR_i + Q_iR_s. \qquad (3.3)$$

Treating $R_i$ and $Q_i$ as the unknowns, the second equation in (3.3) is a generalized Sylvester equation. By Theorem 2.1, it is solvable if and only if

| (3.4) |

which holds true identically by the orthogonal nature of and the fact , one can derive the solution

| (3.5) |

where and are arbitrary.

Upon examining the first expression of in (3.5), one can deduce, based on the established fact that ,

| (3.6) | ||||

Likewise, drawing on the foundational premise of and , we can derive the second expression of in (3.5) into

| (3.7) | ||||

Next, the arbitrary matrices in (3.5) must be determined. The orthogonality of the dual matrix $Q$ and the upper triangular structure of the dual matrix $R$ serve as constraints that determine them. We first examine the orthogonality of $Q$, which leads us to consider

| (3.8) |

Substituting (3.7) into the term in (3.8) yields

| (3.9) |

Hence, (3.8) can be transformed into

| (3.10) |

Since $Q_s$ is orthogonal, and hence invertible, we may write $\Omega = Q_s^TQ_i$ (equivalently, $Q_i = Q_s\Omega$), so that determining $Q_i$ reduces to determining $\Omega$. By (3.9), $\Omega$ is skew-symmetric. In terms of $\Omega$, we obtain the following expressions for $Q_i$ and $R_i$:

| (3.11) |

Now, we must revisit the upper triangular property of the dual matrix to determine . Upon substituting the expression into (3.6), we obtain

| (3.12) |

We denote and where . By partitioning the matrices column-wise, (3.12) is transformed into the following system of linear equations,

| (3.13) |

Exploiting the upper triangular nature of matrix , along with and where , the system (3.13) can be transmuted into

| (3.14) |

Because of this upper triangular structure, the entries below the diagonal vanish. Consequently, only the strictly lower triangular entries of $\Omega$ need to be computed, and the skew-symmetry of $\Omega$ determines the remaining entries.

Given , it follows that all the elements . Hence, the system of equations has the solutions

| (3.15) |

Thus, we complete the proof.

Theorem 3.1 establishes the existence of the QR decomposition of dual matrices, and its proof is constructive. Algorithm 1 gives pseudocode for the QR decomposition of dual matrices.
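The construction in the proof of Theorem 3.1 can be sketched in a few lines. The Python below is a minimal illustration under stated assumptions, not the authors' Algorithm 1: it handles only a square, nonsingular $A_s$, computes $A_s = Q_sR_s$ by modified Gram-Schmidt instead of Householder reflections, and fills the strictly lower part of $\Omega$ column by column by forward substitution (our reading of how (3.13)-(3.15) determine $\Omega$). The helper names `mgs_qr` and `dual_qr_square` are hypothetical.

```python
import math


def matmul(X, Y):
    return [[sum(X[r][k] * Y[k][c] for k in range(len(Y))) for c in range(len(Y[0]))]
            for r in range(len(X))]


def transpose(X):
    return [list(row) for row in zip(*X)]


def sub(X, Y):
    return [[x - y for x, y in zip(rx, ry)] for rx, ry in zip(X, Y)]


def mgs_qr(A):
    """Modified Gram-Schmidt QR; A has full column rank, R has positive diagonal."""
    m, n = len(A), len(A[0])
    Q_cols, R = [], [[0.0] * n for _ in range(n)]
    for j in range(n):
        v = [A[r][j] for r in range(m)]
        for k, q in enumerate(Q_cols):
            R[k][j] = sum(a * b for a, b in zip(q, v))
            v = [a - R[k][j] * b for a, b in zip(v, q)]
        R[j][j] = math.sqrt(sum(a * a for a in v))
        Q_cols.append([a / R[j][j] for a in v])
    return transpose(Q_cols), R


def dual_qr_square(A_s, A_i):
    """Sketch for square nonsingular A_s: Q_i = Q_s Omega, R_i = Q_s^T A_i - Omega R_s."""
    n = len(A_s)
    Q_s, R_s = mgs_qr(A_s)
    B = matmul(transpose(Q_s), A_i)
    Omega = [[0.0] * n for _ in range(n)]
    for j in range(n):              # column by column (forward substitution)
        for k in range(j + 1, n):   # strictly lower entries only
            acc = B[k][j] - sum(Omega[k][l] * R_s[l][j] for l in range(j))
            Omega[k][j] = acc / R_s[j][j]
            Omega[j][k] = -Omega[k][j]  # skew-symmetry fills the upper part
    Q_i = matmul(Q_s, Omega)
    R_i = sub(B, matmul(Omega, R_s))
    return Q_s, Q_i, R_s, R_i


A_s = [[4.0, 1.0, 2.0], [2.0, 3.0, 1.0], [1.0, 0.0, 5.0]]
A_i = [[1.0, 2.0, 0.0], [0.0, 1.0, 1.0], [3.0, 1.0, 2.0]]
Q_s, Q_i, R_s, R_i = dual_qr_square(A_s, A_i)

# Infinitesimal part of A = QR: Q_s R_i + Q_i R_s - A_i should vanish.
recon_err = max(abs(x) for row in sub(matmul(Q_s, R_i), sub(A_i, matmul(Q_i, R_s))) for x in row)
# R_i must be upper triangular.
lower_err = max(abs(R_i[k][j]) for k in range(3) for j in range(k))
# Q must be orthogonal, i.e. Q_s^T Q_i must be skew-symmetric.
S = matmul(transpose(Q_s), Q_i)
skew_err = max(abs(S[k][j] + S[j][k]) for k in range(3) for j in range(3))
print(recon_err < 1e-12, lower_err < 1e-12, skew_err < 1e-12)
```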

In practical traveling wave detection [34], column pivoting must be performed on the standard part of the QR decomposition. Suppose that the QR decomposition with column pivoting in the standard part of $A$ exists, that is, $AP = QR$ for a real permutation matrix $P$. Then

$$A_sP = Q_sR_s, \qquad A_iP = Q_sR_i + Q_iR_s. \qquad (3.16)$$

Based on (3.16), the proof proceeds as in Theorem 3.1, so we omit the details. Algorithm 2 gives pseudocode for the QR decomposition with column pivoting in the standard part of the dual matrix (DQRCP).
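Because the permutation is chosen from the standard part only, it is a real matrix $P$, and the same column reordering is applied to $A_s$ and $A_i$ before the factorization of Theorem 3.1 is carried out on $(A_sP, A_iP)$. The sketch below uses a greedy largest-residual-norm rule in the spirit of classical QR with column pivoting; the function names are hypothetical, and Algorithm 2 itself may differ in details.

```python
import math


def pivot_order(A_s):
    """Greedy column pivoting on the standard part: repeatedly pick the remaining
    column with the largest residual 2-norm, then project it out of the others."""
    m, n = len(A_s), len(A_s[0])
    cols = [[A_s[r][c] for r in range(m)] for c in range(n)]
    remaining, order = list(range(n)), []
    while remaining:
        best = max(remaining, key=lambda c: sum(x * x for x in cols[c]))
        remaining.remove(best)
        order.append(best)
        norm = math.sqrt(sum(x * x for x in cols[best]))
        if norm == 0.0:  # numerically rank-deficient: keep the rest in original order
            order.extend(remaining)
            break
        q = [x / norm for x in cols[best]]
        for c in remaining:
            coef = sum(a * b for a, b in zip(q, cols[c]))
            cols[c] = [a - coef * b for a, b in zip(cols[c], q)]
    return order


def permute_columns(M, order):
    """Return M P, where P moves column order[j] of M to position j."""
    return [[row[c] for c in order] for row in M]


A_s = [[1.0, 0.0, 3.0], [0.0, 2.0, 0.0], [0.0, 0.0, 4.0]]
A_i = [[0.1, 0.2, 0.3], [0.4, 0.5, 0.6], [0.7, 0.8, 0.9]]
order = pivot_order(A_s)  # chosen from the standard part only
A_s_P, A_i_P = permute_columns(A_s, order), permute_columns(A_i, order)
print(order)  # [2, 1, 0]
```

The permuted pair `(A_s_P, A_i_P)` would then be passed to an unpivoted dual QR routine, which is exactly the structure expressed by (3.16).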

3.2 Thin QR Decomposition of Dual Matrices (tDQR)

In Algorithm 1, computing the QR decomposition of a dual matrix $A \in \mathbb{D}^{m\times n}$ requires constructing the $m\times m$ skew-symmetric matrix $\Omega$. In large-scale numerical computations, a large $m$ makes this costly. This motivates a thin QR decomposition of dual matrices. For the thin QR decomposition (see Golub and Van Loan [13]), a matrix $A_s \in \mathbb{R}^{m\times n}$ with full column rank has a unique factorization $A_s = Q_sR_s$, where $Q_s \in \mathbb{R}^{m\times n}$ has orthonormal columns and $R_s \in \mathbb{R}^{n\times n}$ is upper triangular with positive diagonal entries. Based on this notion, we now present the thin QR decomposition of dual matrices (tDQR decomposition).

Theorem 3.2.

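(tDQR Decomposition) Let $A = A_s + A_i\epsilon \in \mathbb{D}^{m\times n}$, where $A_s$ has full column rank. Assume that $A_s = Q_sR_s$ is the thin QR decomposition of $A_s$, where $Q_s \in \mathbb{R}^{m\times n}$ has orthonormal columns and $R_s \in \mathbb{R}^{n\times n}$ is upper triangular with positive diagonal entries. Then the thin QR decomposition $A = QR$ of the dual matrix $A$ exists, where $Q = Q_s + Q_i\epsilon \in \mathbb{D}^{m\times n}$ is a dual matrix with orthonormal columns and $R = R_s + R_i\epsilon \in \mathbb{D}^{n\times n}$ is an upper triangular dual matrix. Moreover, the infinitesimal parts $Q_i$ and $R_i$ of $Q$ and $R$ are

$$Q_i = Q_s\Omega + (I_m - Q_sQ_s^T)A_iR_s^{-1}, \qquad R_i = Q_s^TA_i - \Omega R_s, \qquad (3.17)$$

where $\Omega \in \mathbb{R}^{n\times n}$ is a skew-symmetric matrix.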

The strictly lower triangular part of $\Omega = (\omega_{kj})$ is

$$\omega_{kj} = \bigl(Q_s^TA_iR_s^{-1}\bigr)_{kj}, \qquad 1 \le j < k \le n. \qquad (3.18)$$

The proof can be found in the Appendix A.

Theorem 3.2 gives the thin QR decomposition of dual matrices (tDQR), an efficient alternative to the QR decomposition of dual matrices (DQR) for large-scale problems with $m \gg n$. Algorithm 3 gives pseudocode for the tDQR.
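The tDQR formulas can be sketched directly: with $M = Q_s^TA_iR_s^{-1}$, take $\Omega$ as the skew-symmetric matrix whose strictly lower part equals that of $M$; then $Q_i = Q_s\Omega + (I - Q_sQ_s^T)A_iR_s^{-1}$ and $R_i = (M - \Omega)R_s = Q_s^TA_i - \Omega R_s$. This is our illustrative reading of Theorem 3.2, not the authors' Algorithm 3; it uses modified Gram-Schmidt for the thin QR of $A_s$, and the helper names are hypothetical. Only $n\times n$ work arrays are needed for $\Omega$.

```python
import math


def matmul(X, Y):
    return [[sum(X[r][k] * Y[k][c] for k in range(len(Y))) for c in range(len(Y[0]))]
            for r in range(len(X))]


def transpose(X):
    return [list(row) for row in zip(*X)]


def add(X, Y):
    return [[x + y for x, y in zip(rx, ry)] for rx, ry in zip(X, Y)]


def sub(X, Y):
    return [[x - y for x, y in zip(rx, ry)] for rx, ry in zip(X, Y)]


def mgs_qr(A):
    """Thin QR by modified Gram-Schmidt; A has full column rank, R has positive diagonal."""
    m, n = len(A), len(A[0])
    Q_cols, R = [], [[0.0] * n for _ in range(n)]
    for j in range(n):
        v = [A[r][j] for r in range(m)]
        for k, q in enumerate(Q_cols):
            R[k][j] = sum(a * b for a, b in zip(q, v))
            v = [a - R[k][j] * b for a, b in zip(v, q)]
        R[j][j] = math.sqrt(sum(a * a for a in v))
        Q_cols.append([a / R[j][j] for a in v])
    return transpose(Q_cols), R


def solve_right_upper(a, R):
    """Solve x R = a for a row vector x, R upper triangular (forward substitution)."""
    x = []
    for j in range(len(R)):
        x.append((a[j] - sum(x[l] * R[l][j] for l in range(j))) / R[j][j])
    return x


def dual_thin_qr(A_s, A_i):
    """Illustrative tDQR sketch: returns Q_s, Q_i (m x n) and R_s, R_i (n x n)."""
    Q_s, R_s = mgs_qr(A_s)
    n = len(R_s)
    Y = [solve_right_upper(row, R_s) for row in A_i]  # Y = A_i R_s^{-1}
    M = matmul(transpose(Q_s), Y)                     # M = Q_s^T A_i R_s^{-1}
    # Skew-symmetric Omega whose strictly lower part equals that of M.
    Omega = [[M[k][j] if k > j else (-M[j][k] if k < j else 0.0) for j in range(n)]
             for k in range(n)]
    Q_i = add(matmul(Q_s, Omega), sub(Y, matmul(Q_s, M)))  # (I - Q_s Q_s^T) Y = Y - Q_s M
    R_i = matmul(sub(M, Omega), R_s)                  # equals Q_s^T A_i - Omega R_s
    return Q_s, Q_i, R_s, R_i


A_s = [[2.0, 1.0], [1.0, 3.0], [0.0, 1.0], [1.0, 0.0]]
A_i = [[0.5, 1.0], [1.0, 0.0], [2.0, 1.0], [0.0, 3.0]]
Q_s, Q_i, R_s, R_i = dual_thin_qr(A_s, A_i)
recon_err = max(abs(x) for row in sub(matmul(Q_s, R_i), sub(A_i, matmul(Q_i, R_s))) for x in row)
S = matmul(transpose(Q_s), Q_i)
skew_err = max(abs(S[k][j] + S[j][k]) for k in range(2) for j in range(2))
print(recon_err < 1e-10, abs(R_i[1][0]) < 1e-12, skew_err < 1e-10)
```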

Similar to Algorithm 2, we can derive an algorithm for the thin QR decomposition with column pivoting in the standard part of the dual matrix based on analogous reasoning. Algorithm 4 presents the pseudocode for the thin QR decomposition with column pivoting in the standard part of the dual matrix (tDQRCP).

3.3 Randomized QR Decomposition with Column Pivoting of Dual Matrices (RDQRCP)

In practical applications of QR decomposition with column pivoting, it is often sufficient to compute only the first $k$ rows of $R$. Given an $m\times n$ matrix $A$, we seek a truncated QR decomposition of the form

| (3.19) |

where $Q$ has orthonormal columns, $P$ is a permutation, and $R$ is upper triangular. To address this problem, Duersch and Gu devised a randomized algorithm for QR decomposition with column pivoting [7]. Truncated QR decompositions computed by randomized algorithms are highly effective for numerous low-rank matrix approximation techniques. By the Johnson-Lindenstrauss lemma [33], randomized sampling algorithms retain a high-probability guarantee on the approximation error while reducing communication complexity through dimensionality reduction. Concretely, let $\mathbf{a}_j$ denote the $j$-th column of $A$. We construct sample columns by applying a Gaussian independent identically distributed (GIID) compression matrix to $A$. The expected value and variance of the squared 2-norm of each sample column are

| (3.20) |

Moreover, the probability that all column norms, as well as all distances between columns, are approximated within a prescribed relative error is bounded by

| (3.21) |

We can therefore select the column with the largest approximate norm using the sample matrix. The remaining columns are processed as in Algorithm 5; detailed proofs are given by Duersch and Gu [7].
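The sampling step can be sketched as follows, assuming a GIID matrix $G$ with $l$ rows (the symbol $G$, the helper names, and the test matrix are our own). Since $\mathbb{E}\|G\mathbf{a}_j\|_2^2 = l\|\mathbf{a}_j\|_2^2$, rescaled sample-column norms estimate the true column norms, and the first pivot can be read off the small $l\times n$ sample instead of $A$. The full Duersch-Gu algorithm also updates the sample after each pivot, which is omitted here.

```python
import math
import random


def gaussian_sample(A, l, rng):
    """Return B = G A, where G is an l x m matrix with i.i.d. N(0, 1) entries."""
    m, n = len(A), len(A[0])
    G = [[rng.gauss(0.0, 1.0) for _ in range(m)] for _ in range(l)]
    return [[sum(G[p][r] * A[r][c] for r in range(m)) for c in range(n)] for p in range(l)]


def estimated_column_norms(B):
    # E ||G a||_2^2 = l ||a||_2^2, so sqrt(||b_j||^2 / l) estimates ||a_j||_2.
    l = len(B)
    return [math.sqrt(sum(B[p][c] ** 2 for p in range(l)) / l) for c in range(len(B[0]))]


m, l = 200, 40
rng = random.Random(0)
# Three columns with exact 2-norms 1, 10 and 100.
A = [[1 / math.sqrt(m), 10 * (-1) ** r / math.sqrt(m), 100.0 if r == 0 else 0.0]
     for r in range(m)]
est = estimated_column_norms(gaussian_sample(A, l, rng))
pivot = max(range(len(est)), key=est.__getitem__)
print(pivot, est)
```

With norms separated by a factor of 10, the sampled estimate selects column 2 with overwhelming probability, even though only $l = 40$ sample rows are formed from $m = 200$ original rows.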

Building upon the randomized QR decomposition with column pivoting for (3.19) as illustrated in Algorithm 5, we present a randomized QR decomposition with column pivoting in the standard part of dual matrices.

Theorem 3.3.

(RDQRCP Decomposition) Given . Assume that is the randomized QR decomposition with column pivoting of , where , is a matrix with orthogonal columns, is a truncated upper trapezoidal matrix and is a permutation matrix and . Then the randomized QR decomposition with column pivoting in the standard part of the dual matrix where is a dual matrix with orthogonal columns and is a truncated upper trapezoidal dual matrix exists if and only if

| (3.22) |

Additionally, the expressions of , , the infinitesimal parts of , are

| (3.23) |

where is a skew-symmetric matrix.

We can derive the lower triangular portion’s expression for as

| (3.24) |

where .

The proof can be found in the Appendix A.

Theorem 3.3 provides the existence of a randomized QR decomposition with column pivoting in the standard part of dual matrices. Algorithm 6 presents its pseudocode.

4 Perturbation Analysis of the Q-factor

Perturbation analysis of the thin QR decomposition of a full-column-rank matrix $A \in \mathbb{R}^{m\times n}$, $A = QR$ with $Q \in \mathbb{R}^{m\times n}$ and $R \in \mathbb{R}^{n\times n}$, and in particular of its Q-factor, has been studied extensively [24, 26]. Suppose that $\Delta A$ is a small perturbation such that $A + \Delta A$ has full column rank. Then $A + \Delta A$ has a unique QR factorization

$$A + \Delta A = (Q + \Delta Q)(R + \Delta R), \qquad (4.1)$$

where $Q + \Delta Q$ has orthonormal columns and $R + \Delta R$ is upper triangular with positive diagonal entries. The infinitesimal unit of dual numbers has the distinctive property

$$\epsilon \neq 0, \qquad \epsilon^2 = 0. \qquad (4.2)$$

Hence, if we view the decomposition of a dual matrix as the decomposition of a matrix with a perturbation term, we obtain an accurate first-order approximation. Substituting $A_s$, $Q_s$, $R_s$ for $A$, $Q$, $R$ and $A_i\epsilon$, $Q_i\epsilon$, $R_i\epsilon$ for $\Delta A$, $\Delta Q$, $\Delta R$ in (4.1), dual numbers give the explicit, accurate first-order perturbation [14] of the QR factorization,

| (4.3) |
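The claim that dual arithmetic yields the exact first-order term can be illustrated on the simplest Q-factor, that of a single column, $\mathbf{q} = \mathbf{v}/\|\mathbf{v}\|_2$. In the sketch below (a hypothetical example, not one from the paper), the infinitesimal part $\mathbf{q}_i$ is computed using $\epsilon^2 = 0$; the error $\|\mathbf{q}(t) - (\mathbf{q}_s + t\,\mathbf{q}_i)\|_\infty$ for the perturbed data $\mathbf{v}_s + t\,\mathbf{v}_i$ then decreases like $t^2$.

```python
import math


def dual_normalize(v_s, v_i):
    """Q-factor of a one-column dual matrix v = v_s + v_i eps, i.e. q = v / ||v||_2.

    Because eps**2 = 0, the infinitesimal part q_i is exactly the first-order
    (directional) derivative of v -> v / ||v|| in the direction v_i.
    """
    norm_s = math.sqrt(sum(a * a for a in v_s))
    norm_i = sum(a * b for a, b in zip(v_s, v_i)) / norm_s  # infinitesimal part of ||v||
    q_s = [a / norm_s for a in v_s]
    q_i = [(b * norm_s - a * norm_i) / norm_s**2 for a, b in zip(v_s, v_i)]  # quotient rule
    return q_s, q_i


v_s, v_i = [3.0, 4.0], [1.0, -2.0]
q_s, q_i = dual_normalize(v_s, v_i)
print(q_s, q_i)  # approximately [0.6, 0.8] [0.32, -0.24]

errors = []
for t in (1e-1, 1e-2, 1e-3):
    v_t = [a + t * b for a, b in zip(v_s, v_i)]  # perturbed data
    norm_t = math.sqrt(sum(a * a for a in v_t))
    q_t = [a / norm_t for a in v_t]                # exact Q-factor of the perturbed data
    errors.append(max(abs(x - (y + t * z)) for x, y, z in zip(q_t, q_s, q_i)))
print(errors)  # each error is roughly 100 times smaller than the previous one
```

The infinitesimal part is also orthogonal to the standard part ($\mathbf{q}_s^T\mathbf{q}_i = 0$), the one-column analogue of the skew-symmetry of $Q_s^TQ_i$.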

This allows us to view the QR decomposition of dual matrices from the perspective of perturbation analysis; under the conditions given above, the dual setting satisfies the requirements of the three types of perturbation analysis mentioned previously. Stewart [24] first provided perturbation bounds for the Q-factor of the QR decomposition under componentwise computation,

| (4.4) |

Sun [26] subsequently derived improved, rigorous perturbation bounds for the Q-factor for a given perturbation,

| (4.5) |

We now use the example in [26] to test whether our tDQR algorithm satisfies the perturbation bounds in (4.5). Let

| (4.6) |

Table 1 lists, for several small values of the perturbation parameter, the perturbation errors obtained with the MATLAB QR algorithm and with our tDQR decomposition (Algorithm 3). The perturbation errors obtained with dual matrices are smaller and satisfy the bounds in (4.5). This supports the view that the QR decomposition of dual matrices is an explicit, accurate first-order approximation with improved orthogonality.

| 1e-1 | 1e-2 | 1e-5 | 1e-8 | |

| 0.24310492 | 0.02431049 | 2.43104916e-05 | 2.43104915e-08 | |

| 0.33558049 | 0.03162668 | 3.13819444e-05 | 3.13816983e-08 | |

| 0.31381698 | 0.03138170 | 3.13816980e-05 | 3.13816980e-08 | |

| 1.04034046 | 0.10403405 | 1.04034046e-04 | 1.04034045e-07 |

5 Numerical Experiments

In this section, we compare the proposed QR algorithms for dual matrices and assess their suitability for different scenarios. Next, we use the QR decomposition of dual matrices to construct the dual Moore-Penrose generalized inverse (DMPGI) and prove its existence; numerical examples show that our approach achieves higher precision than previous methods. We then verify the effectiveness of the QR algorithms for dual matrices in traveling wave detection for composite waves. Finally, we apply the proposed QR algorithms to traveling wave detection in large-scale brain functional magnetic resonance imaging (fMRI) data, significantly improving efficiency relative to previous algorithms. The code was implemented in MATLAB R2023b, and all experiments were run on a personal computer with an Apple M2 Pro processor and 16 GB of RAM, running macOS Ventura 13.3.

5.1 Numerical Comparison

In this section, we compare the runtimes of the DQR, DQRCP, tDQR, and tDQRCP decompositions of dual matrices of various sizes $m\times n$ to assess their performance at different scales. We use GIID dual matrices as synthetic data. We compare runtimes only, because the lack of a uniform norm for dual matrices makes comparisons of computational errors difficult. To ensure reliable results, each algorithm is run 10 times.

| DQR | DQRCP | |

|---|---|---|

| 0.1267(1.1504e-4) | 0.1344(3.5918e-5) | |

| 0.1456(2.3226e-4) | 0.1748(1.9633e-4) | |

| 0.2727(0.0011) | 0.2815(6.240e-4) | |

| 5.7008(0.1042) | 5.7608(0.0054) | |

| 6.9206(0.0210) | 8.0753(0.0622) | |

| 16.6889(0.4629) | 17.4049(0.1718) | |

| 19.1934(1.4439) | 22.3248(2.2537) | |

| 21.4972(0.6180) | 28.0318(0.6010) | |

| 70.5938(0.9510) | 73.6737(0.9375) | |

| 33.3352(1.4165) | 38.3178(1.2820) | |

| 38.3174(2.1581) | 51.5523(1.2879) | |

| 123.6001(18.5784) | 133.7725(15.5367) |

| tDQR | tDQRCP | |

|---|---|---|

| 0.1319(2.9287e-4) | 0.1422(6.0144e-5) | |

| 1.9193(0.0134) | 2.9907(0.0430) | |

| 0.1849(7.980e-4) | 0.1808(3.636e-4) | |

| 5.7371(0.0094) | 6.0572(0.0162) | |

| 18.1065(4.5056) | 20.0397(1.7046) | |

| 8.3085(0.1454) | 8.6551(0.1427) | |

| 20.4515(1.1058) | 22.6906(1.5815) | |

| 62.7255(38.3143) | 67.9553(20.5681) | |

| 28.2839(1.1321) | 31.9453(0.9432) | |

| 34.1231(3.4861) | 39.2982(1.8496) | |

| 109.0411(22.0643) | 120.6198(20.0132) | |

| 48.1549(7.5332) | 55.9513(6.7111) |

Tables 2 and 3 compare the runtimes of the DQR, DQRCP, tDQR, and tDQRCP decompositions for dual matrices of various dimensions, with variances in parentheses. The column-pivoted decompositions are consistently slower than their counterparts without pivoting, although they offer better numerical stability. For dual matrices of size $m\times n$, we observe that for $m = n$ the DQR and DQRCP decompositions are faster than the tDQR and tDQRCP decompositions, whereas for $m \gg n$ the situation is reversed, confirming our expectation. When $m = n$, (3.1) lacks the term $(I_m - Q_sQ_s^T)A_iR_s^{-1}$ that appears in (3.17). If $m = n$ and $A_s$ has full column rank, then $Q_s$ is square and orthogonal, so $(I_m - Q_sQ_s^T)A_iR_s^{-1} = O$. Nevertheless, the thin QR decomposition still computes this term, which increases its runtime.

Next, we construct a low-rank dual matrix as the product of two GIID dual matrices with a small inner dimension. On this synthetic data, we compare the efficiency of low-rank decomposition by the tDQRCP and RDQRCP decompositions, with the target rank set to 10. To ensure reliable results, each algorithm is run 50 times.

| tDQRCP | RDQRCP | |

|---|---|---|

| 0.0546(2.1961e-4) | 0.0100(3.2969e-5) | |

| 0.2088(0.0017) | 0.0386(3.0649e-4) | |

| 1.4337(0.0114) | 0.1557(2.1831e-4) | |

| 7.3579(0.0973) | 0.6936(0.0058) |

Table 4 compares the runtimes of the tDQRCP and RDQRCP decompositions for dual matrices of varying dimensions, with variances in parentheses. For this low-rank problem, the RDQRCP decomposition is faster and has smaller runtime variance, and it has the potential to handle larger-scale data within memory constraints.

5.2 Dual Moore-Penrose Generalized Inverse

In previous research, many authors have studied the dual Moore-Penrose generalized inverse (DMPGI) [1, 28, 29, 31]. As an example, we use the QR decomposition of dual matrices to compute the DMPGI. Analogously to Definition 2.1, the DMPGI is defined as follows.

Definition 5.1.

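Given a dual matrix $A \in \mathbb{D}^{m\times n}$, its dual Moore-Penrose generalized inverse (DMPGI), denoted $A^\dagger$, is the dual matrix $X \in \mathbb{D}^{n\times m}$ that satisfies the following four conditions:

$$AXA = A, \qquad XAX = X, \qquad (AX)^T = AX, \qquad (XA)^T = XA. \qquad (5.1)$$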

In the following theorem, we establish the relationship between the existence of DMPGI and QR decomposition of the dual matrix.

Theorem 5.1.

Let $A = A_s + A_i\epsilon \in \mathbb{D}^{m\times n}$, where $A_s$ has full column rank. Then the DMPGI of $A$ exists, and

$$A^\dagger = R^{-1}Q^T, \qquad (5.2)$$

where $A = QR$ is the thin QR decomposition of $A$.

Proof.

By Theorem 5.1, the DMPGI can be computed from the QR decomposition of the dual matrix. Using the examples in [16], consider the dual matrices

| (5.4) |

By the computation based on Theorem 5.1, we determine that the DMPGIs of and are respectively

| (5.5) |

and

| (5.6) |

Remark 5.1.

Note that one of the DMPGIs computed here differs from the corresponding result in [16]; a simple numerical check shows that our result is more accurate. For the other matrix, our computation matches the result in [16]. Hence, computing the DMPGI with the help of the QR decomposition yields results of equal or higher precision, and our approach offers an effective, concise, and precise way to compute the DMPGI.
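Theorem 5.1 can be checked in exact arithmetic. The sketch below builds a 3 by 2 dual matrix $A = QR$ from hand-picked factors (hypothetical data, not the matrices of [16]): $Q_s$ has orthonormal columns and $Q_s^TQ_i$ is skew-symmetric, so $Q^TQ = I$ holds exactly modulo $\epsilon^2$, and $R$ is an upper triangular dual matrix with nonsingular $R_s$. It then forms $R^{-1}Q^T$ with $R^{-1} = R_s^{-1} - R_s^{-1}R_iR_s^{-1}\epsilon$ and tests the four conditions of the DMPGI. Representing a dual matrix as a pair of Python lists is our own choice.

```python
from fractions import Fraction as F


def matmul(X, Y):
    return [[sum(X[r][k] * Y[k][c] for k in range(len(Y))) for c in range(len(Y[0]))]
            for r in range(len(X))]


def transpose(X):
    return [list(row) for row in zip(*X)]


def add(X, Y):
    return [[x + y for x, y in zip(rx, ry)] for rx, ry in zip(X, Y)]


def inv2(M):
    (a, b), (c, d) = M
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]


# A dual matrix is a pair (S, I) meaning S + I*eps; products drop the eps**2 term.
def dmul(X, Y):
    return (matmul(X[0], Y[0]), add(matmul(X[0], Y[1]), matmul(X[1], Y[0])))


def dT(X):
    return (transpose(X[0]), transpose(X[1]))


def dinv2(X):
    # (S + I eps)^{-1} = S^{-1} - S^{-1} I S^{-1} eps for a nonsingular 2 x 2 S.
    S_inv = inv2(X[0])
    return (S_inv, [[-x for x in row] for row in matmul(matmul(S_inv, X[1]), S_inv)])


# Q (3 x 2) has orthonormal columns as a dual matrix: Q_s^T Q_i is skew-symmetric.
Q = ([[F(1), F(0)], [F(0), F(1)], [F(0), F(0)]],
     [[F(0), F(2)], [F(-2), F(0)], [F(1), F(3)]])
R = ([[F(2), F(1)], [F(0), F(3)]],
     [[F(1), F(4)], [F(0), F(-1)]])
A = dmul(Q, R)             # 3 x 2 dual matrix whose standard part has full column rank
X = dmul(dinv2(R), dT(Q))  # candidate DMPGI R^{-1} Q^T

AX, XA = dmul(A, X), dmul(X, A)
conditions = (
    dmul(AX, A) == A,  # A X A = A
    dmul(XA, X) == X,  # X A X = X
    dT(AX) == AX,      # (A X)^T = A X
    dT(XA) == XA,      # (X A)^T = X A
)
print(conditions)  # (True, True, True, True)
```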

5.3 Simulations of Standing and Traveling Waves

In the past, numerous theoretical explorations have paved the way for research into the application aspects of dual numbers. Eduard Study [25] introduced the concept of dual angles when determining the relative positions of two skew lines in three-dimensional space, where the angle is defined as the standard part and the distance as the infinitesimal part. Similarly, when dealing with raw time series data, we regard the standard part as the observed data and the infinitesimal part as its derivative or first-order difference, which naturally identifies standing and traveling waves in the simulations. Consider a spatiotemporal propagating pattern [8]

| (5.7) |

where the left-hand side is a real vector of particle positions at time $t$, the two real scalars are the exponential decay rate and the angular frequency, and $\mathbf{c}$, $\mathbf{d}$ are real vectors representing two modes of oscillation. Overall, (5.7) describes propagation that cycles continuously between $\mathbf{c}$ and $\mathbf{d}$. When $\mathbf{c}$ and $\mathbf{d}$ are linearly dependent, (5.7) represents a rank-1 "standing wave" oscillation; when they are linearly independent, it represents a rank-2 "traveling wave" oscillation, which behaves as a wavy motion. In previous research [8], the propagation percentage of a wave was measured using an index, without separating the exact traveling wave from the original wave. Wei, Ding, and Wei [34] introduced an effective method for traveling wave identification and extraction, including the determination of its propagation path. We build on their ideas and examine whether the QR decompositions of dual matrices proposed in this paper can be used effectively for traveling wave identification and whether they offer computational advantages. Here, we employ proper orthogonal decomposition to investigate wave behavior, so our focus lies on the $Q$ component of the QR decomposition of the dual matrix.
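The rank statements can be illustrated numerically. Because the exact form of (5.7) is not reproduced in this text, the sketch below assumes a hypothetical two-mode model $\mathbf{x}(t) = e^{\sigma t}(\mathbf{c}\cos\omega t - \mathbf{d}\sin\omega t)$ on a one-dimensional grid with Gaussian modes (the grid size, parameters, and helper names are all our own choices). With $\mathbf{d} = \mathbf{0}$ the ensemble matrix has rank 1, with independent $\mathbf{c}$ and $\mathbf{d}$ it has rank 2, and appending the time-derivative columns does not raise the rank, that is, the range of the infinitesimal part lies in the range of the standard part.

```python
import math


def gaussian_bump(n, center, width):
    """Gaussian profile on a 1-D grid 0..n-1 (a stand-in for the 2-D Gaussian modes)."""
    return [math.exp(-((x - center) ** 2) / (2 * width**2)) for x in range(n)]


def snapshots(c, d, sigma, omega, times):
    """Columns of the ensemble matrix and of its exact time derivative for the
    HYPOTHETICAL model x(t) = exp(sigma t) (c cos(omega t) - d sin(omega t))."""
    X, dX = [], []
    for t in times:
        e, co, si = math.exp(sigma * t), math.cos(omega * t), math.sin(omega * t)
        X.append([e * (ci * co - di * si) for ci, di in zip(c, d)])
        dX.append([e * ((sigma * ci - omega * di) * co - (sigma * di + omega * ci) * si)
                   for ci, di in zip(c, d)])
    return X, dX


def numerical_rank(columns, tol=1e-8):
    """Count linearly independent columns via modified Gram-Schmidt with a relative tolerance."""
    basis = []
    for v in columns:
        norm0 = math.sqrt(sum(x * x for x in v))
        for q in basis:
            coef = sum(a * b for a, b in zip(q, v))
            v = [a - coef * b for a, b in zip(v, q)]
        norm = math.sqrt(sum(x * x for x in v))
        if norm0 > 0 and norm > tol * norm0:
            basis.append([x / norm for x in v])
    return len(basis)


n, times = 100, [0.1 * k for k in range(50)]
c = gaussian_bump(n, 30, 5.0)
d = gaussian_bump(n, 70, 5.0)
stand_X, stand_dX = snapshots(c, [0.0] * n, -0.05, 2.0, times)  # d = 0: dependent modes
trav_X, trav_dX = snapshots(c, d, -0.05, 2.0, times)            # independent modes
print(numerical_rank(stand_X), numerical_rank(stand_X + stand_dX))  # 1 1
print(numerical_rank(trav_X), numerical_rank(trav_X + trav_dX))     # 2 2
```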

Now, we consider the spatiotemporal propagation patterns in (5.7) and construct a two-dimensional grid constructed as a array of pixels by Gaussian waves. We arrange particles in rows and time points in columns, allowing us to construct an ensemble matrix . Here, we set c and d as two-dimensional Gaussian curves. By taking the derivative of with respect to time, we obtain . By treating as the standard part and as the infinitesimal part, we can construct the dual matrix . When , Figure 1(a) illustrates the standard part and infinitesimal part of the dual matrix after the QR decomposition. This is clearly a standing wave, with the center point at . When , Figure 1(b) displays the standard part and infinitesimal part of the dual matrix after QR decomposition, with center points at and . It’s noteworthy that signals , and exhibit paired similarities, where one is homologous and the other is heterologous. This property can be succinctly explained under the rank-2 truncated QR decomposition. First, due to the construction of , we have the range spaces , implying the existence of a matrix such that . Consequently, we have

| (5.8) |

For a skew-symmetric matrix , it is evident that

| (5.9) |

Applying (5.8) and (5.9) to the $Q$ components of the QR decompositions proposed in this paper shows that such paired similarities necessarily occur.
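A short calculation shows where the pairing comes from. Assume that, in the rank-2 truncation, the infinitesimal part of the Q-factor reduces to $Q_i = Q_s\Omega$ with a $2\times 2$ skew-symmetric $\Omega$ (this happens in the thin decomposition when the range of $A_i$ lies in the range of $A_s$, so that $(I - Q_sQ_s^T)A_iR_s^{-1} = O$). Writing $Q_s = [\mathbf{q}_1, \mathbf{q}_2]$ and parametrizing $\Omega$ by a scalar $\theta$ (our notation):

```latex
\Omega = \begin{pmatrix} 0 & -\theta \\ \theta & 0 \end{pmatrix}
\quad\Longrightarrow\quad
Q_i = Q_s\Omega = \bigl[\, \theta\,\mathbf{q}_2,\; -\theta\,\mathbf{q}_1 \,\bigr].
```

Thus the infinitesimal part of the first column is a multiple of the standard part of the second column, while the infinitesimal part of the second column is the same multiple of the first column with the opposite sign, which is consistent with one pair being homologous and the other heterologous.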

To validate the effectiveness of traveling wave identification, we construct a combination wave and attempt to separate different signals. We combine four standing waves and two traveling waves with different frequencies and weights, each with the same standard deviation . Additionally, we introduce Gaussian noise with a peak value of . Using the same methodology as before, we treat the data matrix of the combination wave as the standard part and its first-order derivative as the infinitesimal part to generate the dual matrix . We perform a QR decomposition on and utilize to separate the six different waves. Figure 1(c) illustrates the standard and infinitesimal parts of the first four columns of , corresponding to four weighted standing waves. The centers of these four standing waves (50, 50), (100, 100), (150, 70), and (170, 180) represent the wave peaks. Similarly, in the standard and infinitesimal parts of columns 5 to 8 of , we can observe two pairs of traveling waves. In one pair, (50, 100) and (100, 50) represent the wave peaks, while in the other pair, (70, 150) and (120, 150) serve as the wave peaks.

In summary, our QR decomposition of the dual matrix provides an effective approach for traveling wave identification through proper orthogonal decomposition. Moreover, it is computationally straightforward.

5.4 Traveling Wave Identification in the Brain

Building on prior research that applied dual matrices to traveling wave identification in brain time series data [34], we hypothesize that a proper orthogonal decomposition of such dual matrices yields better results. We test this hypothesis with the QR decomposition algorithm for dual matrices proposed above.

In the following experiments, we use the Human Connectome Project (HCP) database [30], which contains high-resolution 3D magnetic resonance scans of healthy adults aged 22 to 35 years. The database includes task-state functional magnetic resonance imaging (tfMRI) data, a key imaging modality in medical analysis, acquired while the subjects performed tasks designed to engage cortical and subcortical networks. Each tfMRI task was acquired in two runs, one with right-to-left and one with left-to-right phase encoding. An in-plane field-of-view (FOV) rotation was achieved by inverting the polarity of both the readout (RO) and phase-encoding (PE) gradients (see the HCP S1200 Release Reference Manual, https://www.humanconnectome.org/storage/app/media/documentation/s1200/HCP_S1200_Release_Reference_Manual.pdf).

Building on the theoretical groundwork of Section 5.3 on traveling wave identification by proper orthogonal decomposition, we now relate this methodology to empirical responses in functional brain regions. Specifically, we assess traveling wave identification in brain regions associated with the language processing task, one of the seven HCP task types, which covers semantic and phonological processing and was originally developed by Binder et al. [4]. In this setup, Binder et al. selected four blocks of a story comprehension task and four blocks of an arithmetic task. The story comprehension blocks present short auditory narratives of 5-9 sentences, each followed by a binary-choice question about the topic of the story. The arithmetic blocks, also presented aurally, require participants to perform addition and subtraction.

For our investigation, we consider a randomly chosen participant from this experiment. The participant's tfMRI data for the language processing task are stored as a 4-dimensional voxel-based image with three spatial dimensions and 316 frames. The voxel size is 2 mm and the repetition time (TR) is 0.72 seconds. Preprocessing must retain the essential information while compressing the image matrix. We first filtered the tfMRI signals in time with a zero-phase Butterworth band-pass filter with a passband of 0.01-0.1 Hz, the conventional low-frequency range for such signals. We then applied spatial smoothing with a 3-dimensional Gaussian low-pass filter with a full width at half maximum (FWHM) of 5 mm, computing the convolution while excluding NaN values. Finally, to put all voxels on a common scale, we applied a z-score transformation to the BOLD time series of every voxel, giving each series zero mean and unit variance.
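The three preprocessing steps can be sketched in Python with SciPy. The filter type, passband, TR, FWHM and voxel size follow the text; the filter order (2), the use of second-order sections with forward-backward filtering (`sosfiltfilt`) to obtain zero phase, and NaN handling by normalized convolution are our assumptions, and `preprocess_tfmri` is a hypothetical helper rather than the authors' MATLAB pipeline.

```python
import warnings

import numpy as np
from scipy.ndimage import gaussian_filter
from scipy.signal import butter, sosfiltfilt

def preprocess_tfmri(vol, tr=0.72, band=(0.01, 0.1), fwhm_mm=5.0, voxel_mm=2.0, order=2):
    """Band-pass, smooth and z-score a 4-D tfMRI array of shape (x, y, z, t)."""
    # 1) Zero-phase Butterworth band-pass along time (forward-backward filtering).
    sos = butter(order, band, btype="bandpass", fs=1.0 / tr, output="sos")
    data = sosfiltfilt(sos, np.asarray(vol, dtype=float), axis=-1)

    # 2) Gaussian smoothing of each frame; FWHM in mm -> standard deviation in voxels.
    sigma = fwhm_mm / (2.0 * np.sqrt(2.0 * np.log(2.0))) / voxel_mm
    valid = np.isfinite(data)
    filled = np.where(valid, data, 0.0)
    smoothed = np.empty_like(data)
    for k in range(data.shape[-1]):
        num = gaussian_filter(filled[..., k], sigma)
        den = gaussian_filter(valid[..., k].astype(float), sigma)
        with np.errstate(invalid="ignore", divide="ignore"):
            # Normalized convolution: NaN voxels contribute nothing and stay NaN.
            smoothed[..., k] = np.where(valid[..., k], num / den, np.nan)

    # 3) z-score each voxel's time series (zero mean, unit variance).
    with warnings.catch_warnings():
        warnings.simplefilter("ignore", RuntimeWarning)  # silence all-NaN voxels
        mu = np.nanmean(smoothed, axis=-1, keepdims=True)
        sd = np.nanstd(smoothed, axis=-1, keepdims=True)
    sd[sd == 0] = 1.0  # constant voxels (e.g. background) map to zero
    return (smoothed - mu) / sd
```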

Next, we construct a dual matrix from the original brain sequence data and verify that the regions exhibiting prominent extracted traveling waves coincide with the corresponding functional cortical activity. To this end, for each frame the three spatial dimensions are flattened into a column vector, and the columns of successive frames are arranged in temporal order, producing a real matrix. This real matrix is the standard part of the dual matrix . The infinitesimal part is obtained by first-order temporal differentiation.
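A minimal sketch of this construction, assuming the preprocessed volume is a NumPy array of shape (x, y, z, t) and that first-order temporal differentiation means a finite-difference derivative with step TR (here `np.gradient`, central differences in the interior and one-sided at the ends). The optional brain mask and the helper name `dual_matrix_from_volume` are hypothetical.

```python
import numpy as np

def dual_matrix_from_volume(vol, tr=0.72, mask=None):
    """Return (A_s, A_i): one row per voxel, one column per frame."""
    nt = vol.shape[-1]
    # Row-major reshape: voxel (x, y, z) -> row x*ny*nz + y*nz + z, frame t -> column t.
    A_s = vol.reshape(-1, nt)
    if mask is not None:
        A_s = A_s[np.asarray(mask, dtype=bool).reshape(-1)]  # keep in-brain voxels only
    # Infinitesimal part: finite-difference time derivative with step TR.
    A_i = np.gradient(A_s, tr, axis=1)
    return A_s, A_i
```

Row-major reshaping keeps each voxel in a fixed row, so the same index map can later place reconstructed components back into the volume.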

Applying RDQRCP (Algorithm 6) to the dual matrix readily yields the desired proper orthogonal decomposition. Compared with the compact dual singular value decomposition (CDSVD) of [34], our factorization also exhibits better orthogonality, and it is faster: CDSVD requires 14.661 seconds, whereas our method needs only 5.660 seconds, about 2.6 times faster.
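One way to quantify the orthogonality of a dual factor Q = Q_s + Q_i ε is through both parts of QᵀQ − I: since QᵀQ = Q_sᵀQ_s + (Q_sᵀQ_i + Q_iᵀQ_s)ε, both Q_sᵀQ_s − I and Q_sᵀQ_i + Q_iᵀQ_s should vanish. The helper below (a hypothetical name) reports their Frobenius norms; it illustrates such a metric and is not the measurement behind the comparison above.

```python
import numpy as np

def dual_orthogonality_error(Q_s, Q_i):
    """Frobenius-norm residuals of Q^T Q = I for the dual matrix Q = Q_s + Q_i*eps.

    Q^T Q = Q_s^T Q_s + (Q_s^T Q_i + Q_i^T Q_s) eps, so the standard part must equal I
    and the infinitesimal part must vanish (Q_s^T Q_i skew-symmetric).
    """
    k = Q_s.shape[1]
    std_err = np.linalg.norm(Q_s.T @ Q_s - np.eye(k))
    inf_err = np.linalg.norm(Q_s.T @ Q_i + Q_i.T @ Q_s)
    return std_err, inf_err
```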

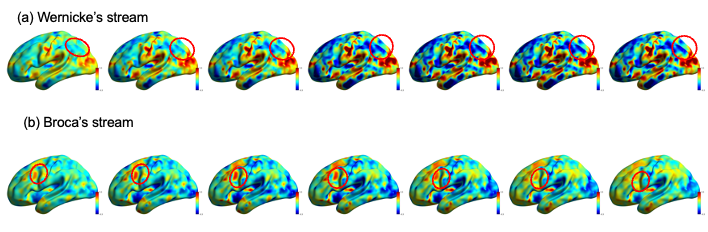

Figure 2 compares CDSVD [34] and RDQRCP as proper orthogonal decompositions by showing the inner products between the standard and infinitesimal parts. Specifically, for the QR decomposition of dual matrices, the inner product between and is 0.840, and the inner product between and is -0.723. These values reveal a prominent traveling wave whose dominant principal components reside in the standard part. We then reshape the rank-2 matrix formed by the second and third principal components to its original dimensions and project it onto the brain's anatomical structure using the BrainNet Viewer toolbox in MATLAB [35]. Concatenating the resulting brain images frame by frame produces a video in which changes in color show how brain activity evolves over time across different regions.
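The two post-processing steps can be sketched as follows, assuming NumPy arrays `Q_s`, `Q_i`, `R_s` hold the standard and infinitesimal parts of the factors. We assume the reported inner products are taken between unit-normalized columns (cosines) and that "second and third principal components" means zero-based columns 1 and 2; both helper names are hypothetical. If column pivoting was used, the permutation must be undone before the frames are reshaped back to (x, y, z, t).

```python
import numpy as np

def std_inf_cosines(Q_s, Q_i, k):
    """k x k table: cosine between column j of Q_s and column l of Q_i."""
    S = Q_s[:, :k] / np.linalg.norm(Q_s[:, :k], axis=0)
    n_i = np.linalg.norm(Q_i[:, :k], axis=0)
    T = Q_i[:, :k] / np.where(n_i > 0, n_i, 1.0)  # leave all-zero columns at zero
    return S.T @ T

def components_to_volume(Q_s, R_s, cols, vol_shape, perm=None, mask=None):
    """Rebuild Q_s[:, cols] @ R_s[cols, :] and reshape it to (x, y, z, t) frames."""
    B = Q_s[:, cols] @ R_s[cols, :]
    if perm is not None:  # undo column pivoting: A_s[:, perm] = Q R
        unpermuted = np.empty_like(B)
        unpermuted[:, perm] = B
        B = unpermuted
    if mask is None:
        return B.reshape(vol_shape)
    full = np.full((int(np.prod(vol_shape[:-1])), vol_shape[-1]), np.nan)
    full[np.asarray(mask, dtype=bool).reshape(-1)] = B  # voxels outside the mask stay NaN
    return full.reshape(vol_shape)
```

For example, `components_to_volume(Q_s, R_s, [1, 2], vol.shape)` yields the rank-2 movie that would then be handed to a surface viewer.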

In Figure 3(a), we observe a pronounced signal propagation during the language task: the wave originates in Brodmann area 39 (angular gyrus) and terminates in Brodmann area 40 (supramarginal gyrus) [22]. Both regions are closely linked with Wernicke's area, a key node in language processing that plays a critical role in the interpretation and generation of language, especially in speech comprehension; it enables us to understand spoken language and to articulate our own thoughts and emotions. Moreover, the wave is more prominent in the left hemisphere than in the right, consistent with the left hemisphere's primary role in language processing.

Simultaneously, in Figure 3(b), we observe the wave propagating from Brodmann area 44 (pars opercularis) to Brodmann area 45 (pars triangularis), both of which belong to Broca's area [12]. This region plays a pivotal role in language processing, including the formulation and organization of language expression and the processing of grammatical structure and syntax. Lesions in this area can lead to Broca's aphasia, characterized by difficulty in sentence production and reduced fluency, while language comprehension remains largely intact. Broca's area is also implicated in language memory, particularly short-term memory processes.

To summarize, we identified two distinct wave patterns that correspond to well-established functional brain circuits, in agreement with prior research in cognitive neuroscience. This agreement provides empirical support both for the established conclusions in this domain and for the validity of our approach.

Appendix A Proof of Theorem 3.2 and Theorem 3.3

The proof of Theorem 3.2.

Proof.

If the thin QR decomposition of exists, then a direct computation gives

| (A.1) |

Treating as and as , we can regard (A.1) as a Sylvester equation in and . By Theorem 2.1, this equation is solvable if and only if

| (A.2) |

which holds identically because is invertible and ; the general solution is then

| (A.3) |

where and are arbitrary.

Considering the fact and , we have

| (A.4) | ||||

and

| (A.5) | ||||

where is arbitrary.

Since has orthonormal columns, there exists a matrix with orthonormal columns such that is an orthogonal matrix. Thus, , where and are arbitrary matrices. In this case, we have . Then the expressions for and are as follows,

| (A.6) |

where is an arbitrary matrix.

As in the proof of Theorem 3.1, we use the orthonormality of the columns of and the upper triangular structure of to determine . First, since is a dual matrix with orthonormal columns, we have

| (A.7) |

By substituting the expression for from (A.6), we can obtain

| (A.8) | ||||

with the fact .

From (A.8), we deduce that is a skew-symmetric matrix. Next, we use the upper triangular structure of to determine the entries of . From the expression for in (A.6), we have

| (A.9) |

We denote and , where . Partitioning the matrices column-wise and using the fact that has full rank, we transform (A.9) into the following system of equations,

| (A.10) |

Using that is upper triangular, together with and , where , we can rewrite the system (A.10) as

| (A.11) |

Because is upper triangular, its lower entries are all zero for . Consequently, needs to be computed only for its lower entries , and skew-symmetry then fixes the remaining entries. Hence, the system of equations has the solutions

| (A.12) |

Thus, we complete the proof.
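The argument above can be checked numerically. The sketch below uses our own labels A = A_s + A_i ε, Q = Q_s + Q_i ε, R = R_s + R_i ε and the closed form forced by A_s = Q_sR_s, A_i = Q_sR_i + Q_iR_s, skew-symmetry of Q_sᵀQ_i and upper triangularity of R_i: with L the strictly lower triangular part of Q_sᵀA_iR_s⁻¹, Ω = L − Lᵀ, R_i = Q_sᵀA_i − ΩR_s and Q_i = Q_sΩ + (I − Q_sQ_sᵀ)A_iR_s⁻¹. The function name is hypothetical, and the code is an illustration rather than the paper's algorithm.

```python
import numpy as np

def dual_thin_qr(A_s, A_i):
    """Thin QR of the dual matrix A = A_s + A_i*eps (A_s: m x n, full column rank).

    Returns Q_s, Q_i (m x n) and upper triangular R_s, R_i (n x n) with
    A_s = Q_s R_s, A_i = Q_s R_i + Q_i R_s and Q_s^T Q_i skew-symmetric.
    """
    Q_s, R_s = np.linalg.qr(A_s)              # reduced QR of the standard part
    s = np.sign(np.diag(R_s))
    s[s == 0] = 1.0
    Q_s, R_s = Q_s * s, s[:, None] * R_s      # make diag(R_s) positive

    def right_solve(B):
        # B @ inv(R_s) without forming the inverse: solve R_s^T Y^T = B^T.
        return np.linalg.solve(R_s.T, B.T).T

    C = Q_s.T @ A_i
    L = np.tril(right_solve(C), -1)           # strictly lower part of Q_s^T A_i R_s^{-1}
    Omega = L - L.T                           # skew-symmetric; makes R_i upper triangular
    R_i = C - Omega @ R_s
    Q_i = Q_s @ Omega + right_solve(A_i - Q_s @ C)
    return Q_s, Q_i, R_s, R_i
```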

The proof of Theorem 3.3.

Proof.

Suppose that the randomized QR decomposition with column pivoting of the standard part of exists. Then a direct computation gives

| (A.13) |

Treating as and as , we can regard (A.13) as a Sylvester equation in and . By Theorem 2.1 and the fact , this equation is solvable if and only if

| (A.14) |

holds; in that case, the general solution is

| (A.15) |

where and are arbitrary.

Considering the fact and , we have

| (A.16) | ||||

and

| (A.17) | ||||

where is arbitrary.

Since has orthonormal columns, there exists a matrix with orthonormal columns such that is an orthogonal matrix. Thus, , where and are arbitrary matrices. In this case, we have . Then the expressions for and are as follows,

| (A.18) |

where is an arbitrary matrix.

First, since is a dual matrix with orthonormal columns, we have

| (A.19) |

By substituting the expression for from (A.18), we can obtain

| (A.20) | ||||

with the fact .

From (A.20), we deduce that is a skew-symmetric matrix. Next, we use the upper trapezoidal structure of to determine the entries of . From the expression for in (A.18), we have

| (A.21) |

We denote and , where . Partitioning the matrices column-wise and using the fact that has full rank, we transform (A.21) into the following system of equations,

| (A.22) |

Using that is upper trapezoidal, together with and , where , we can rewrite the overdetermined system (A.22) as

| (A.23) |

Because is upper trapezoidal, its lower entries are all zero for . Consequently, needs to be computed only for its lower entries , and skew-symmetry then fixes the remaining entries. Moreover, the consistency of the overdetermined system (A.22) is determined by . Hence, the overdetermined system has the solutions

| (A.24) |

Thus, we complete the proof.
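A compact sketch of the randomized variant, under stated simplifications: pivots are chosen by greedy QR with column pivoting on a Gaussian sketch of the standard part, in the spirit of Duersch and Gu [7] but as a single block without the updating strategy of their algorithm, and Ω is fixed from the leading k columns, as in the proof above. The names and the oversampling default are hypothetical; this is not the paper's Algorithm 6.

```python
import numpy as np

def greedy_pivots(B, k):
    """Column order from k steps of QR with column pivoting on B (largest residual first)."""
    B = np.array(B, dtype=float)              # work on a copy
    piv = []
    for _ in range(k):
        norms = np.linalg.norm(B, axis=0)
        if piv:
            norms[piv] = -1.0                 # never re-select a pivot
        j = int(np.argmax(norms))
        piv.append(j)
        q = B[:, j] / np.linalg.norm(B[:, j])
        B -= np.outer(q, q @ B)               # remove the new direction from every column
    rest = [j for j in range(B.shape[1]) if j not in piv]
    return np.array(piv + rest)

def randomized_dual_qrcp(A_s, A_i, k, oversample=5, seed=0):
    """Rank-k dual QR of A = A_s + A_i*eps with pivots chosen on a sketch of A_s."""
    rng = np.random.default_rng(seed)
    G = rng.standard_normal((k + oversample, A_s.shape[0]))
    perm = greedy_pivots(G @ A_s, k)
    AsP, AiP = A_s[:, perm], A_i[:, perm]

    Q_s, R11 = np.linalg.qr(AsP[:, :k])       # QR of the k pivot columns
    s = np.sign(np.diag(R11))
    s[s == 0] = 1.0
    Q_s, R11 = Q_s * s, s[:, None] * R11
    R_s = np.hstack([R11, Q_s.T @ AsP[:, k:]])  # k x n upper trapezoidal

    def right_solve(B):                       # B @ inv(R11)
        return np.linalg.solve(R11.T, B.T).T

    Bk = AiP[:, :k]
    L = np.tril(right_solve(Q_s.T @ Bk), -1)
    Omega = L - L.T                           # skew-symmetric
    Q_i = Q_s @ Omega + right_solve(Bk - Q_s @ (Q_s.T @ Bk))
    R_i = Q_s.T @ AiP - Omega @ R_s           # leading k x k block is upper triangular
    return perm, Q_s, Q_i, R_s, R_i
```

The identity A_iP = Q_sR_i + Q_iR_s holds only when the overdetermined system is consistent, for example when A_s = BC has exact rank k and A_i = B_iC + BC_i; for general data the trailing columns are not reproduced exactly.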

Data Availability

The numerical data shown in the figures, together with the code used to run the experiments and generate the figures, are available upon request.

Declarations

Conflicts of Interest. The authors declare no competing interests.

References

- [1] J. Angeles. The dual generalized inverses and their applications in kinematic synthesis. In Latest advances in robot kinematics, pages 1–10. Springer, 2012.

- [2] J. K. Baksalary and R. Kala. The matrix equation AX - YB = C. Linear Algebra and its Applications, 25:41–43, 1979.

- [3] A. G. Baydin, B. A. Pearlmutter, A. A. Radul, and J. M. Siskind. Automatic differentiation in machine learning: a survey. Journal of Machine Learning Research, 18:1–43, 2018.

- [4] J. R. Binder, W. L. Gross, J. B. Allendorfer, L. Bonilha, J. Chapin, J. C. Edwards, T. J. Grabowski, J. T. Langfitt, D. W. Loring, M. J. Lowe, et al. Mapping anterior temporal lobe language areas with fMRI: a multicenter normative study. Neuroimage, 54(2):1465–1475, 2011.

- [5] W. K. Clifford. Preliminary sketch of biquaternions. Proceedings of the London Mathematical Society, 1(1):381–395, 1871.

- [6] C. Cui, H. Wang, and Y. Wei. Perturbations of Moore–Penrose inverse and dual Moore–Penrose generalized inverse. Journal of Applied Mathematics and Computing, pages 1–24, 2023.

- [7] J. A. Duersch and M. Gu. Randomized QR with column pivoting. SIAM Journal on Scientific Computing, 39(4):C263–C291, 2017.

- [8] B. Feeny. A complex orthogonal decomposition for wave motion analysis. Journal of Sound and Vibration, 310(1-2):77–90, 2008.

- [9] J. A. Fike and J. J. Alonso. Automatic differentiation through the use of hyper-dual numbers for second derivatives. In Recent Advances in Algorithmic Differentiation, pages 163–173. Springer, 2012.

- [10] M. Fliess and C. Join. Model-free control. International Journal of Control, 86(12):2228–2252, 2013.

- [11] B. Fornberg. Generation of finite difference formulas on arbitrarily spaced grids. Mathematics of Computation, 51(184):699–706, 1988.

- [12] A. D. Friederici, N. Chomsky, R. C. Berwick, A. Moro, and J. J. Bolhuis. Language, mind and brain. Nature Human Behaviour, 1(10):713–722, 2017.

- [13] G. H. Golub and C. F. Van Loan. Matrix Computations. JHU Press, 4th edition, 2013.

- [14] A. Greenbaum, R.-C. Li, and M. L. Overton. First-order perturbation theory for eigenvalues and eigenvectors. SIAM Review, 62(2):463–482, 2020.

- [15] R. Gutin. Generalizations of singular value decomposition to dual-numbered matrices. Linear and Multilinear Algebra, 70(20):5107–5114, 2022.

- [16] E. Pennestrì and R. Stefanelli. Linear algebra and numerical algorithms using dual numbers. Multibody System Dynamics, 18:323–344, 2007.

- [17] E. Pennestrì, P. Valentini, and D. De Falco. The Moore–Penrose dual generalized inverse matrix with application to kinematic synthesis of spatial linkages. Journal of Mechanical Design, 140(10):102303, 2018.

- [18] R. Peón, O. Carvente, C. A. Cruz-Villar, M. Zambrano-Arjona, and F. Peñuñuri. Dual numbers for algorithmic differentiation. Ingeniería, 23(3):71–81, 2019.

- [19] L. Qi, D. M. Alexander, Z. Chen, C. Ling, and Z. Luo. Low rank approximation of dual complex matrices. arXiv:2201.12781, 2022.

- [20] L. Qi and C. Cui. Eigenvalues and Jordan forms of dual complex matrices. Communications on Applied Mathematics and Computation, pages 1–17, 2023.

- [21] L. Qi and Z. Luo. Eigenvalues and singular values of dual quaternion matrices. Pacific Journal of Optimization, 19(2):257–272, 2023.

- [22] Y. Sakurai. Brodmann areas 39 and 40: human parietal association area and higher cortical function. Brain and Nerve, 69(4):461–469, 2017.

- [23] J. Sola. Quaternion kinematics for the error-state Kalman filter. arXiv:1711.02508, 2017.

- [24] G. W. Stewart. On the perturbation of LU, Cholesky, and QR factorizations. SIAM Journal on Matrix Analysis and Applications, 14(4):1141–1145, 1993.

- [25] E. Study. Geometrie der Dynamen. Druck und Verlag von B. G. Teubner, 1903.

- [26] J.-G. Sun. On perturbation bounds for the QR factorization. Linear Algebra and its Applications, 215:95–111, 1995.

- [27] F. E. Udwadia. Dual generalized inverses and their use in solving systems of linear dual equations. Mechanism and Machine Theory, 156:104158, 2021.

- [28] F. E. Udwadia. When does a dual matrix have a dual generalized inverse? Symmetry, 13(8):1386, 2021.

- [29] F. E. Udwadia, E. Pennestrì, and D. de Falco. Do all dual matrices have dual Moore–Penrose generalized inverses? Mechanism and Machine Theory, 151:103878, 2020.

- [30] D. C. Van Essen, S. M. Smith, D. M. Barch, T. E. Behrens, E. Yacoub, K. Ugurbil, WU-Minn HCP Consortium, et al. The WU-Minn Human Connectome Project: an overview. Neuroimage, 80:62–79, 2013.

- [31] H. Wang. Characterizations and properties of the MPDGI and DMPGI. Mechanism and Machine Theory, 158:104212, 2021.

- [32] H. Wang, C. Cui, and X. Liu. Dual r-rank decomposition and its applications. Computational and Applied Mathematics, 42(8):349, 2023.

- [33] W. B. Johnson and J. Lindenstrauss. Extensions of Lipschitz mappings into a Hilbert space. In Conference in Modern Analysis and Probability, pages 189–206, 1984.

- [34] T. Wei, W. Ding, and Y. Wei. Singular value decomposition of dual matrices and its application to traveling wave identification in the brain. SIAM Journal on Matrix Analysis and Applications, 45(1):634–660, 2024.

- [35] M. Xia, J. Wang, and Y. He. BrainNet Viewer: a network visualization tool for human brain connectomics. PLoS ONE, 8(7):e68910, 2013.

- [36] Z. Xiong, Y. Wei, R. Xu, and Y. Xu. Low-rank traffic matrix completion with marginal information. Journal of Computational and Applied Mathematics, 410:114219, 2022.

- [37] R. Xu, T. Wei, Y. Wei, and H. Yan. UTV decomposition of dual matrices and its applications. Computational and Applied Mathematics, 43(1):41, 2024.

- [38] K. Yang and C. Shahabi. A PCA-based similarity measure for multivariate time series. In Proceedings of the 2nd ACM International Workshop on Multimedia Databases, pages 65–74, 2004.