QP Chaser: Polynomial Trajectory Generation for Autonomous Aerial Tracking

Abstract

Maintaining the visibility of the target is one of the major objectives of aerial tracking missions. This paper proposes a target-visible trajectory planning pipeline using quadratic programming (QP). Our approach can handle various tracking settings, including 1) single- and dual-target following and 2) both static and dynamic environments, unlike other works that focus on a single specific setup. In contrast to other studies that fully trust the predicted trajectory of the target and consider only the visibility of the target’s center, our pipeline considers error in target path prediction and the entire body of the target to maintain the target visibility robustly. First, a prediction module uses a sample-check strategy to quickly calculate the reachable sets of moving objects, which represent the areas their bodies can reach, considering obstacles. Subsequently, the planning module formulates a single QP problem, considering path topology, to generate a tracking trajectory that maximizes the visibility of the target’s reachable set among obstacles. The performance of the planner is validated in multiple scenarios, through high-fidelity simulations and real-world experiments.

Note to Practitioners

This paper proposes an aerial target tracking framework applicable to both single- and dual-target following missions. It predicts the reachable area of moving objects and generates a target-visible trajectory, both in real time. Because the planner considers the reachable area of moving objects, the generated drone trajectory keeps the target visible even when the prediction is inaccurate. Our system can be utilized in crowded environments with multiple moving objects and extended to multiple-target scenarios. We extensively validate our system through several real-world experiments to show practicality.

Index Terms:

Aerial tracking, visual servoing, mobile robot path-planning, vision-based multi-rotor

I Introduction

Vision-aided multi-rotors [1, 2, 3] are widely employed in applications such as surveillance [4] and cinematography [5], and autonomous target chasing is essential in such tasks. In target-chasing missions, various situations exist in which a single drone has to handle both single- and multi-target scenarios without occlusion. For example, in film shooting, there are scenes in which one or several actors should be shot in a single take, without being visually disturbed by structures in the shooting set. Moreover, the occlusion of main actors by background actors is generally prohibited. Additionally, autonomous shooting can be used in sporting events to provide aerial views of athletes’ dynamic play. Similarly, the athletes’ movement should not be obstructed by crowds. Therefore, a tracking strategy capable of handling both single and multiple targets among static and dynamic obstacles can benefit various scenarios in chasing tasks.

Despite great attention and research over the recent decade, aerial target tracking remains a demanding task for the following reasons. First, the motion generator in the chasing system needs to account for visibility obstruction caused by environmental structures and the limited camera field-of-view (FOV), in addition to typical considerations in UAV motion planning, such as collision avoidance, quality of the flight path, and dynamical limits. Since the sudden appearance of unforeseen obstacles can cause target occlusion, a trajectory that satisfies all these considerations needs to be generated quickly. Second, it is difficult to forecast accurate future paths of dynamic objects due to perceptual error from sensors, surrounding environmental structures, and unreliable estimation of intentions. Poor predictions can negatively impact trajectory planning performance and lead to tracking failures.

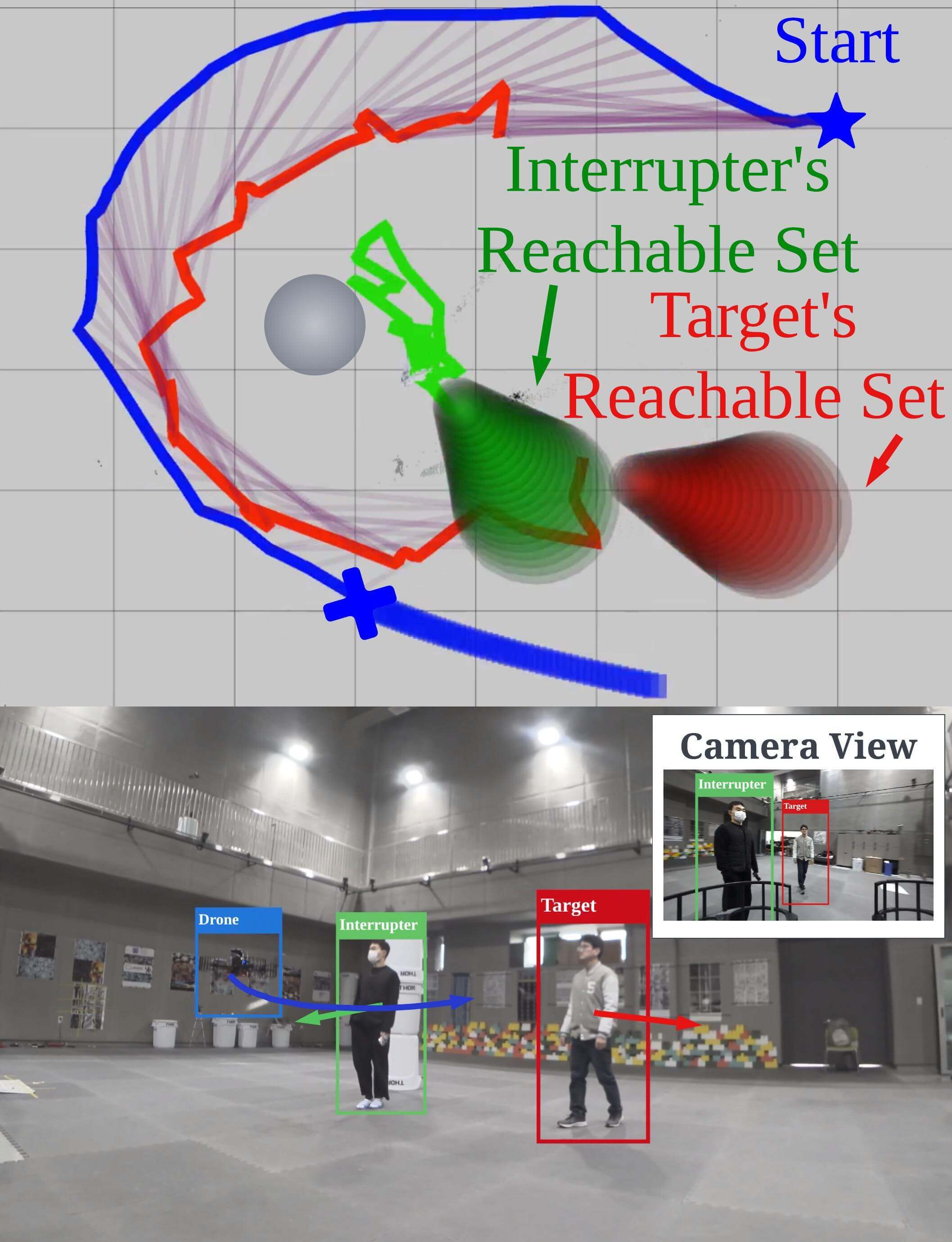

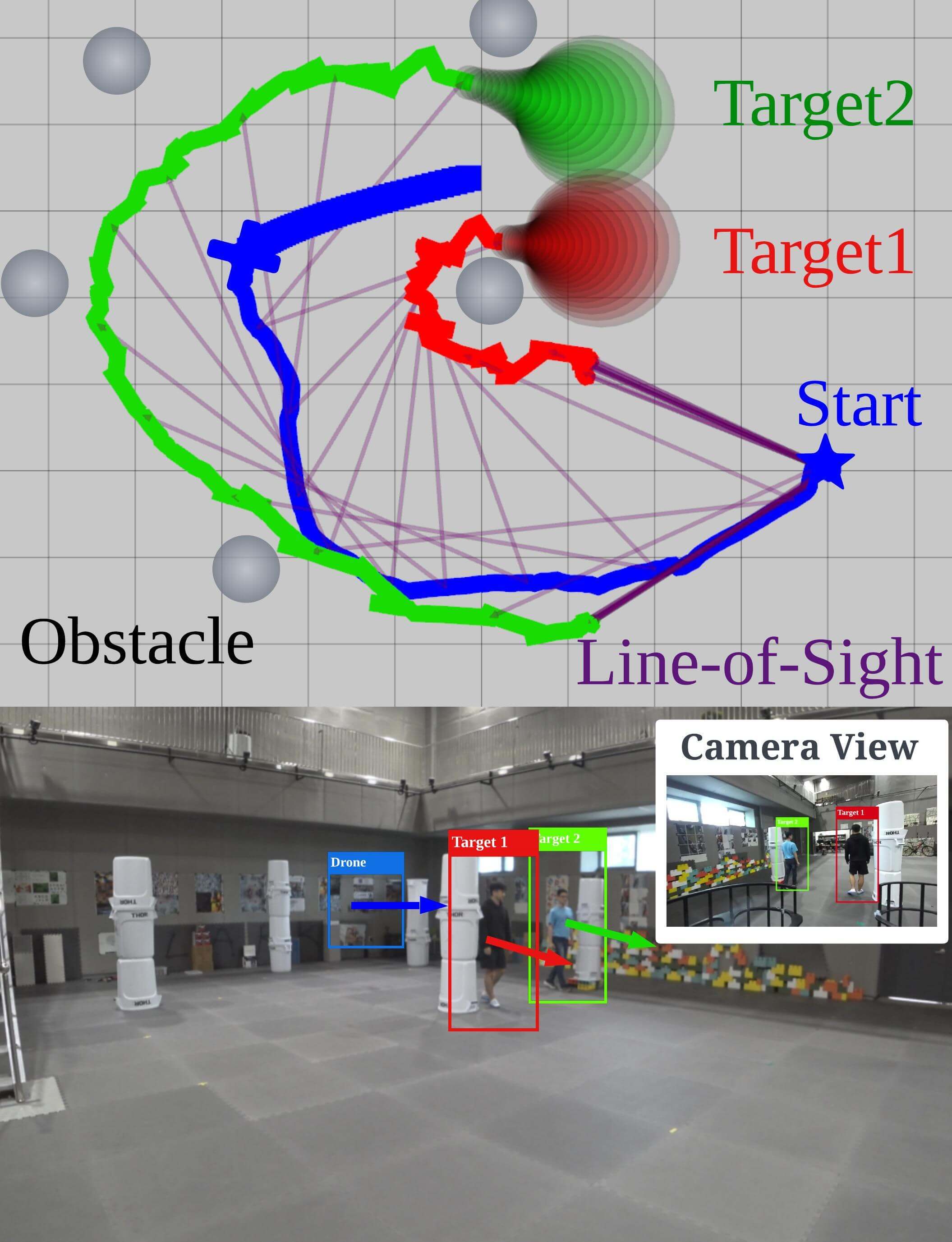

In order to address the factors above, we propose a real-time single- and dual-target tracking strategy that 1) can be adopted in both static and dynamic environments and 2) enhances the target visibility against prediction error, as illustrated in Fig. 1. The proposed method consists of two parts: prediction and chasing. In the prediction module, we calculate reachable sets of moving objects, taking into account the obstacle configuration. For efficient calculations, we use a sample-check strategy. First, we sample motion primitives representing possible trajectories, generated by quadratic programming (QP), which is solved in closed form. Then, collisions between primitives and obstacles are checked while leveraging the properties of Bernstein polynomials. We then calculate reachable sets enclosing the collision-free primitives.

In the chasing module, we design a chasing trajectory via a single QP. QP is known for being solvable in polynomial time and guaranteeing global optimality. We adopted this method because the optimization was solved within tens of milliseconds during numerous tests. The key idea of our trajectory planning is to define a target-visible region (TVR), a time-dependent spatial set that keeps the visibility of targets. Two essential factors of TVR enhance the target visibility. First, analyzing the topology of the targets’ path helps avoid situations where target occlusion inescapably occurs. Second, TVR is designed to maximize the visible area of the target’s reachable set, considering potential visual obstructions from obstacles and ensuring robust target visibility against the prediction error. Moreover, by allowing the entire target body to be viewed instead of specific points, the TVR enhances the target visibility. Additionally, to handle dual-target scenarios, we define an area where two targets can be simultaneously viewed with a limited FOV camera. Table I presents the comparison with the relevant works, and our main contributions are summarized as follows.

- We propose a QP-based trajectory planning framework capable of single and dual target-chasing missions amidst static and dynamic obstacles, in contrast with existing works that address either single-target scenarios or static environments only.
- (Prediction) We propose an efficient sample-check-based method to compute reachable sets of moving objects, leveraging Bernstein polynomials. This method reflects both static and dynamic obstacles, which contrasts with other works that do not fully consider the obstacle configurations in motion predictions.
- (Chasing) We propose a target-visible trajectory generation method by designing a target-visible region (TVR), in which the target visibility is robustly maintained. This approach considers 1) the path topology, 2) the entire body of targets, and 3) the prediction error of moving objects. This contrasts with other works that fully trust potentially erroneous path predictions.

The remainder of this paper is arranged as follows. We review the relevant references in Section II, and the relationship between the target visibility and the path topology is studied in Section III. The problem statement and the pipeline of the proposed system are presented in Section IV, and Section V describes the prediction of the reachable set of moving objects. Section VI describes TVR, designs the reference trajectory for the chasing drone, and completes QP formulation. The validation of the proposed pipeline is demonstrated with high-fidelity simulations and real-world experiments in Section VII.

II Related Works

II-A Single Target Visibility against Static Obstacles

Various studies take the target visibility into account in single-target chasing in static environments. [3] and [5] design cost functions that penalize target occlusion by obstacles, while [6] designs an environmental complexity cost function to adjust the distance between a target and a drone, implicitly reducing the probability of occlusion. These methods combine the visibility-related functions with several other objective functions related to actuation efficiency and collision avoidance. Such conflicting objectives can yield a sub-optimal solution that compromises tracking motion, so the visibility of the target is not ensured. In contrast, our approach ensures the target visibility by incorporating it as a hard constraint in the QP problem, which can be solved quickly. On the other hand, some works explicitly consider visibility as hard constraints in optimization. [7] designs a sector-shaped visible region, and [8] selects the view region among annulus sectors centered around the target. They use their target-visible regions in unconstrained optimization to generate a chasing trajectory. However, these works focus on the visibility of the center of a target, which may result in partial occlusion. In contrast, our method considers the visibility of the entire body of the target and accounts for the prediction error, thus enhancing robustness in target tracking.

Other works handle target path prediction for aerial tracking. [9] and [10] use past target observations to predict the future trajectory via polynomial regression. However, these methods can generate erroneous results because they insufficiently consider the surroundings, resulting in predicted paths that conflict with obstacles. With inaccurate target path prediction, planners may not produce effective chasing paths and may fail to chase a target without occlusion. [11] and [12] predict the target's future movements by formulating optimization problems that encourage the target to move towards free spaces. However, these approaches are limited to static environments. In contrast, our framework predicts the target's movement considering both static and dynamic obstacles.

| Method | Scenarios: Dual-target | Scenarios: Dynamic obstacles | Prediction: Environ. informed | Planning: PE-aware | Planning: WB of target |
| --- | --- | --- | --- | --- | --- |
| [11] | ✕ | ✕ | ✕ | ✕ | |
| [13] | ✕ | ✕ | ✓ | | |
| [14] | ✕ | ✕ | ✕ | ✕ | |
| [15] | ✕ | ✕ | ✕ | ✕ | |
| [16] | ✕ | ✕ | ✕ | | |
| [17] | ✕ | ✕ | ✕ | | |
| [18] | ✕ | ✕ | ✕ | | |
| Ours | ✔ | ✔ | ✔ | ✔ | ✔ |

A check mark (✔) means that the algorithm explicitly considers the corresponding item. (PE: Perception-error, and WB: Whole body)

II-B Single-Target Chasing in Dynamic Environments

Some studies address target tracking in dynamic environments. There are works that attempt to solve the occlusion problem by approximating the future motion of both dynamic obstacles and targets using a constant velocity model. [13] designs a target-visibility cost inspired by the GPU ray-casting model. [14] uses a learned occlusion model to evaluate the target visibility and tests the planner in dynamic environments. [15] aims to avoid occlusion by imposing a constraint that prevents a segment connecting a target and the drone from colliding with dynamic obstacles. Since [13] and [14] use the constant velocity model to predict the movements of dynamic objects, there are cases where the predicted target and obstacles overlap, leading to incorrect target-visibility cost evaluation, which can ruin the planning results. Furthermore, the constraint in [15] cannot be satisfied if the predicted path of the target traverses occupied space (e.g., obstacles), which makes the optimization problem infeasible. In contrast, our approach predicts the movements of objects by fully considering the environment and incorporates the prediction error into planning to enhance the target visibility.

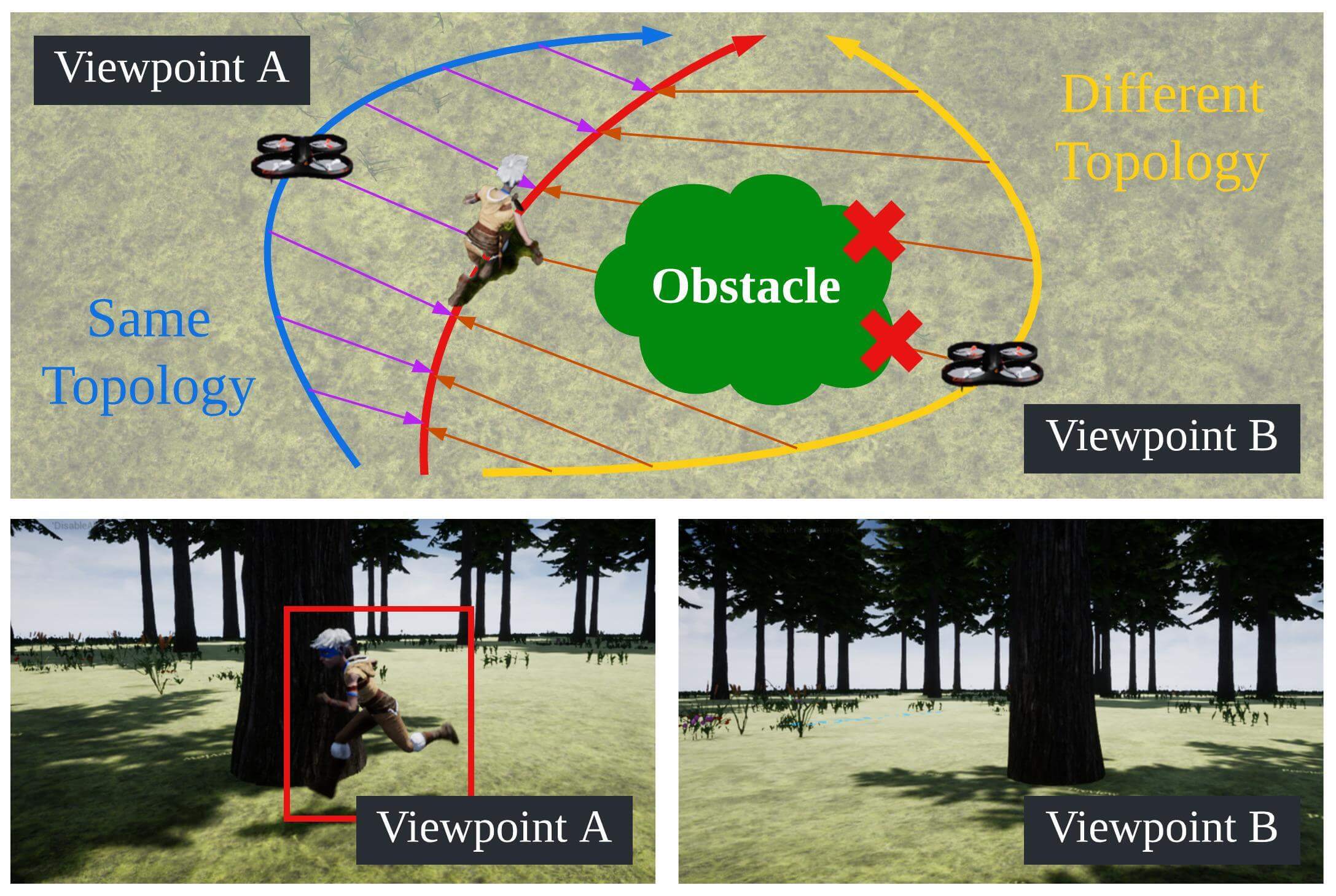

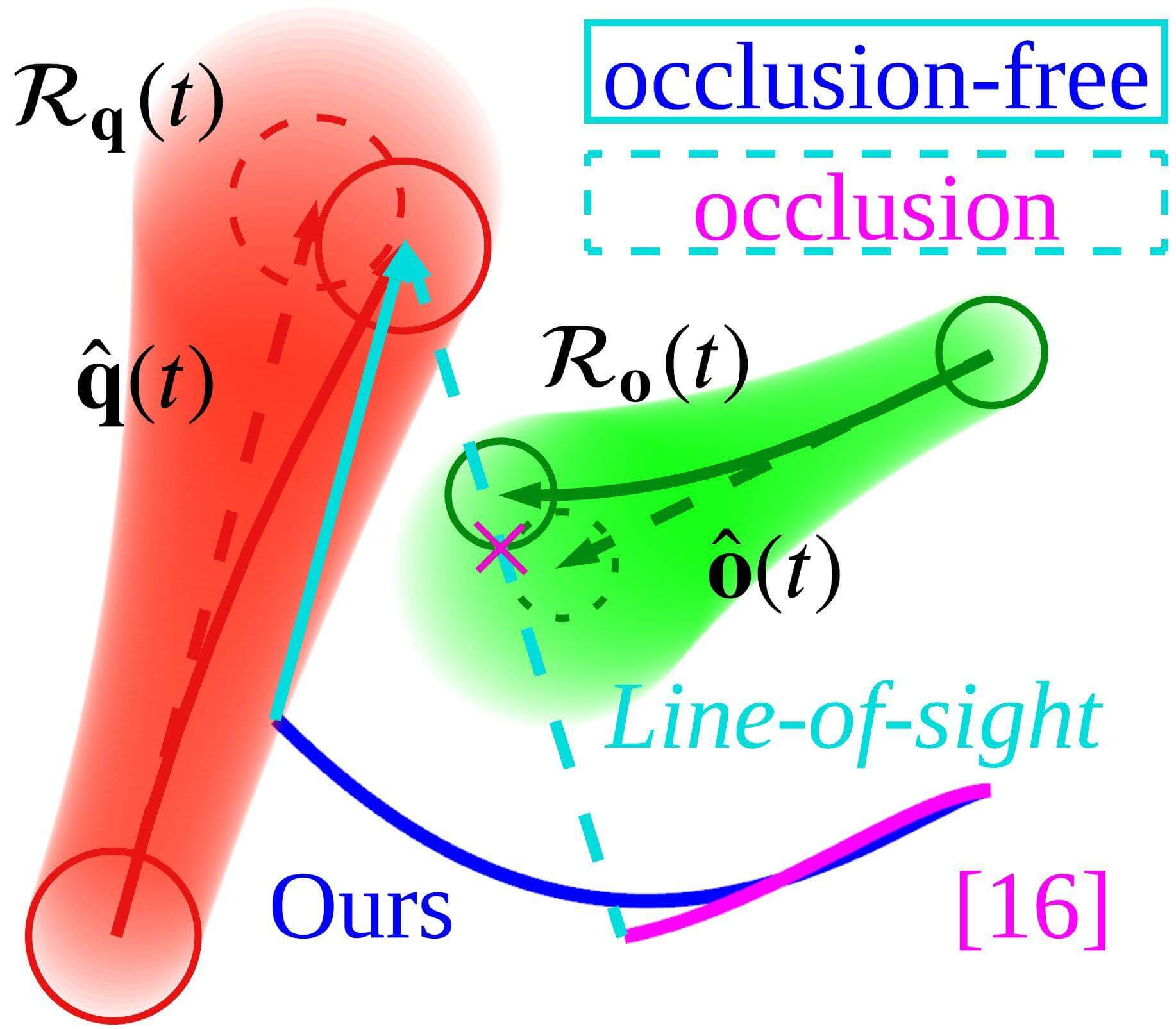

On the other hand, the approach in [16] makes a set of polynomial motion primitives and selects the best path under safety and target visibility constraints to acquire a non-colliding target path and generate a chasing trajectory. However, when the target and drone are near an obstacle, the planner [16] may select the path toward a region where occlusion is inevitable from the perspective of path topology, as will be shown in Section VII-C. In contrast, our method directly applies concepts of the path topology to prevent inescapable occlusion.

II-C Multiple Target Following

Multi-target tracking with a single drone has been discussed in a few works. [19] and [20] minimize the change in the target’s position in the camera image; however, they do not consider obstacles. [17] designs a dual visibility score field to handle the visibility of two targets among obstacles and a camera field-of-view (FOV) limit. Because the field is constructed heuristically, the success rate of tracking heavily depends on parameter settings. In contrast, our work handles these issues by applying them as hard constraints that must be satisfied. [18] tracks multiple targets by simultaneously controlling the camera’s intrinsic and extrinsic parameters. However, it is limited to systems where the intrinsic parameters can be controlled. In contrast, we consider the fixed and limited camera FOV with hard constraints to ensure the simultaneous observation of the targets.

II-D Target Following Using Quadratic Programming

Few aerial tracking works utilize QP for chasing trajectory planning: [12] and [21]. [12] calculates a series of viewpoints and uses QP optimization to interpolate them, but it does not ensure the target visibility when moving between the viewpoints. [21] generates safe flight corridors along the target’s path and pushes the drone’s trajectory toward the safe regions, but the target visibility is not considered in its QP problem. In contrast, we formulate the target visibility constraints for the full planning horizon. Also, in conventional drone trajectory generation methods using QP, the trajectory is confined to a specific part of the space over time intervals. However, by incorporating time-variant constraints, our method enables the drone to operate in a wider region, providing an advantage in target tracking.

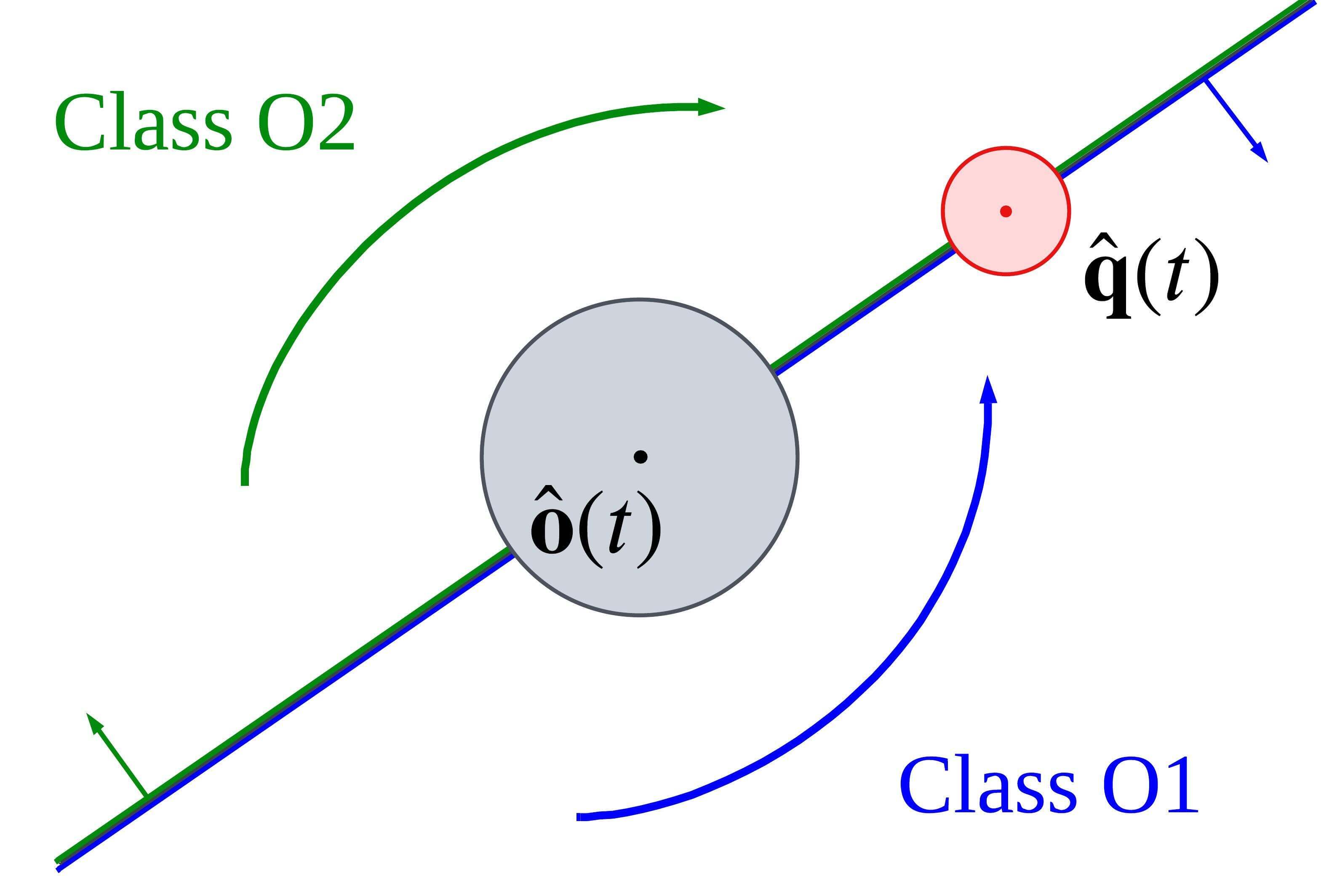

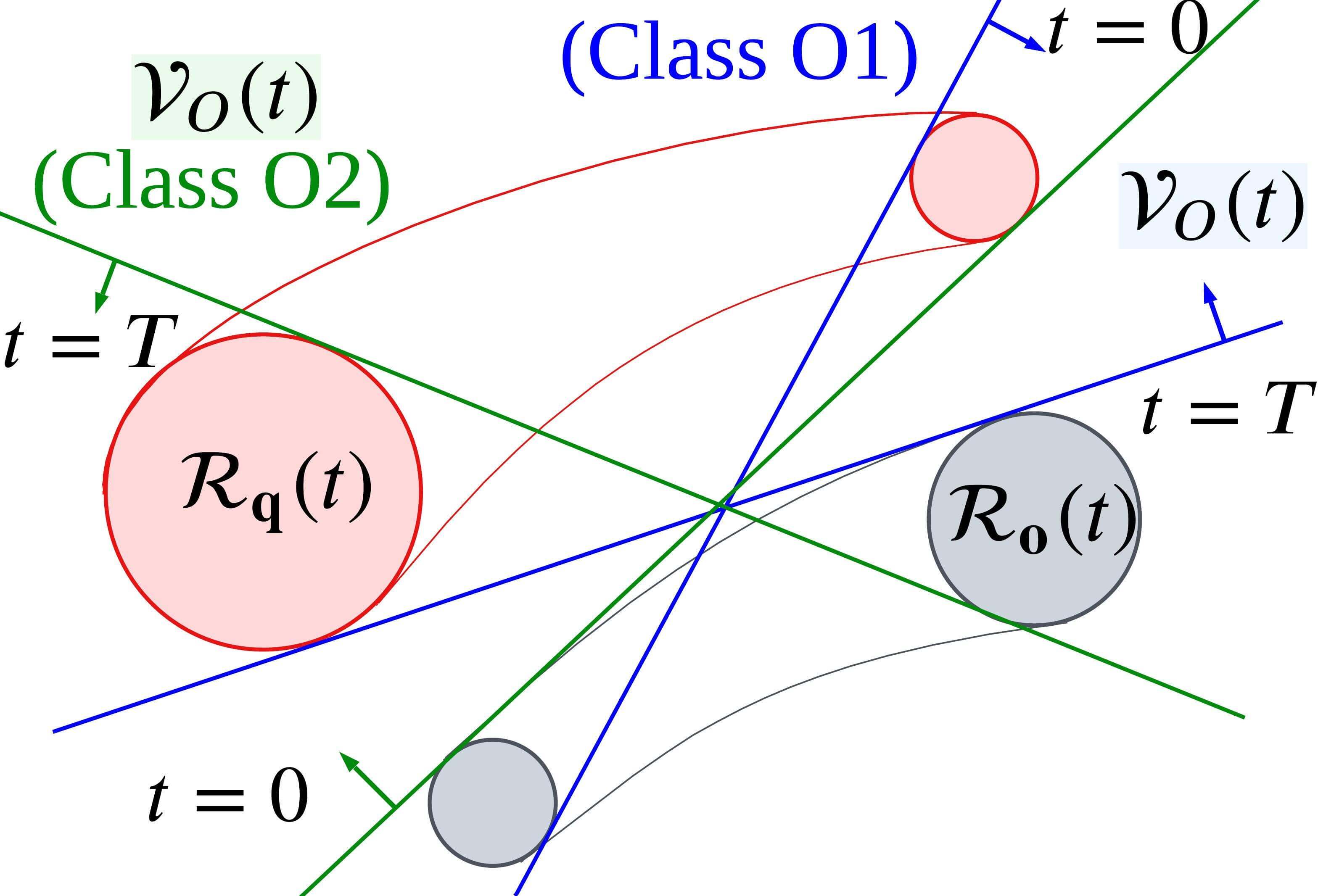

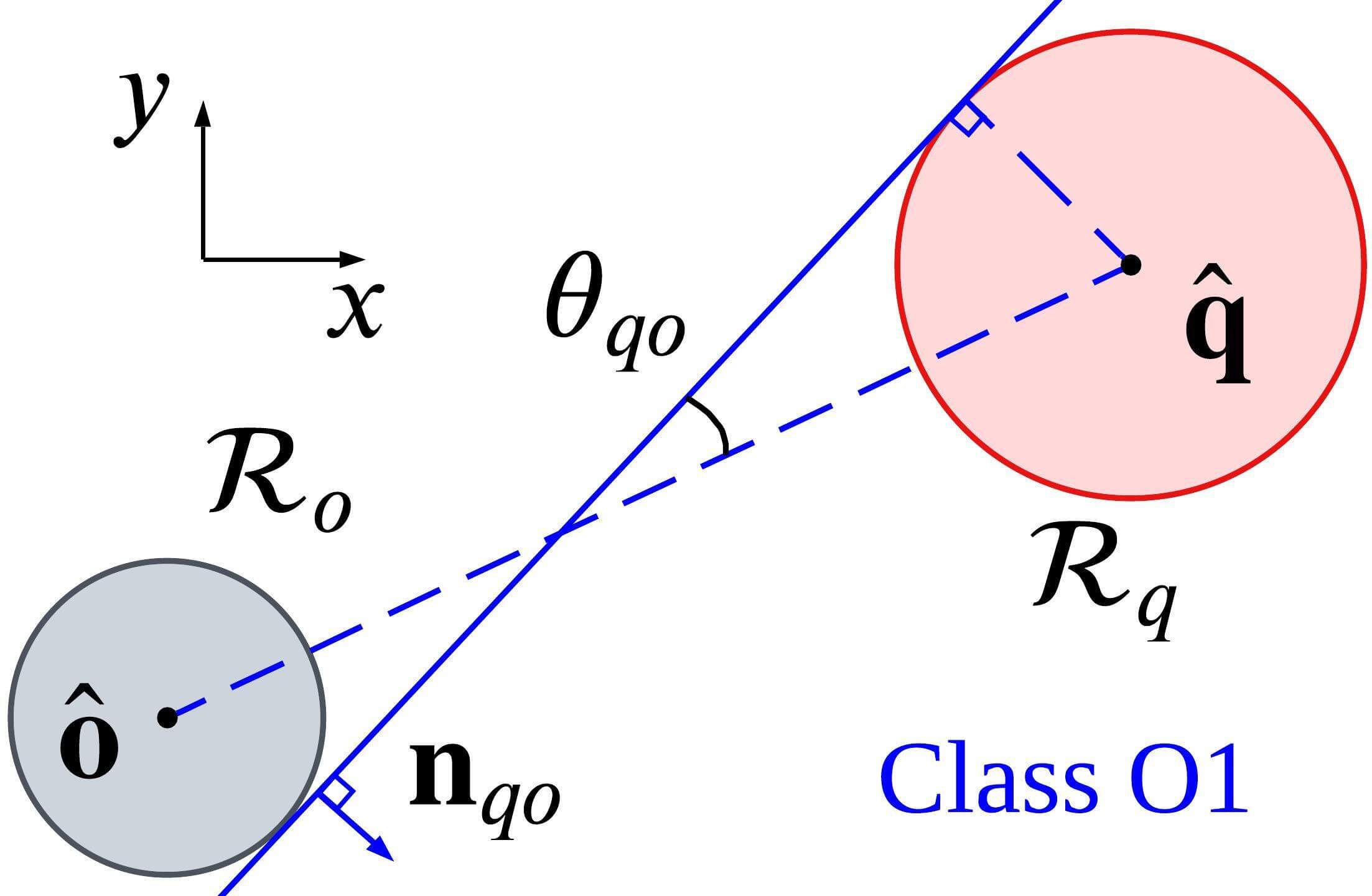

III Preliminary

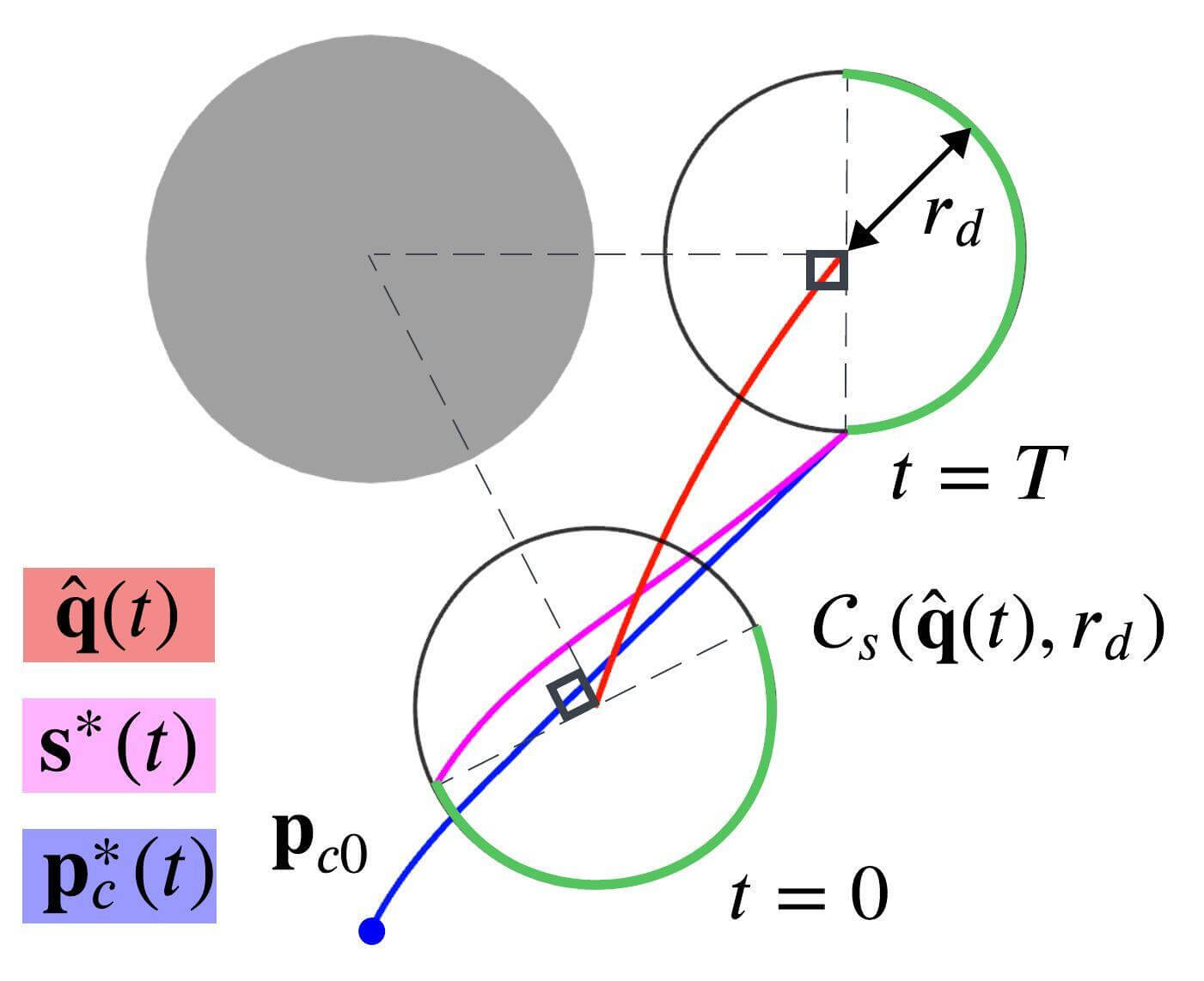

This section presents the relationship between target occlusion and path topology. In an obstacle environment, there exist multiple path topology classes [22]. As shown in Fig. 2, the visibility of the target is closely related to path topology. We analyze the relation between them and take it into consideration when generating the chasing trajectory.

As stated in [23], the definition of path homotopy is as follows.

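Definition 1.

Paths $\sigma_1, \sigma_2 : I \rightarrow X \subset \mathbb{R}^2$ are path-homotopic if there is a continuous map $F : I \times I \rightarrow X$ such that

$$F(s, 0) = \sigma_1(s), \qquad F(s, 1) = \sigma_2(s), \tag{1}$$

where $I$ is the unit interval $[0, 1]$.

In this paper, Line-of-Sight is of interest, which is defined as follows.

Definition 2.

Line-of-Sight is a segment connecting two objects $\mathbf{x}_1(t), \mathbf{x}_2(t) \in \mathbb{R}^2$:

$$L(\mathbf{x}_1(t), \mathbf{x}_2(t)) = \{(1-\epsilon)\,\mathbf{x}_1(t) + \epsilon\,\mathbf{x}_2(t) \mid \epsilon \in I\}, \tag{2}$$

where $t$ represents time.

Based on the definitions above, we derive a relation between the path topology and visibility between two objects.

Theorem 1.

When two objects are reciprocally visible, the paths of the objects are path-homotopic.

Proof.

Suppose that two objects $\mathbf{x}_1, \mathbf{x}_2$ move along paths $\sigma_1, \sigma_2$ in free space $\mathcal{F}$ during the time interval $[t_0, t_f]$, respectively, i.e., $\mathbf{x}_1(t) = \sigma_1(\xi(t))$ and $\mathbf{x}_2(t) = \sigma_2(\xi(t))$, where $\xi : [t_0, t_f] \rightarrow I$ is the time mapping function such that $\xi(t) = (t - t_0)/(t_f - t_0)$. If the visibility between $\mathbf{x}_1$ and $\mathbf{x}_2$ is maintained, the Line-of-Sight does not collide with obstacles: $L(\mathbf{x}_1(t), \mathbf{x}_2(t)) \subset \mathcal{F}$ for all $t \in [t_0, t_f]$. Then, by definition, $(1-\epsilon)\,\mathbf{x}_1(t) + \epsilon\,\mathbf{x}_2(t) \in \mathcal{F}$ for all $\epsilon \in I$ and $t \in [t_0, t_f]$. Hence the map $F(s, \epsilon) = (1-\epsilon)\,\sigma_1(s) + \epsilon\,\sigma_2(s)$ is continuous, takes values in $\mathcal{F}$, and satisfies $F(s, 0) = \sigma_1(s)$ and $F(s, 1) = \sigma_2(s)$. Since such a condition satisfies the definition of the continuous mapping in Definition 1 with $X = \mathcal{F}$, the two paths are homotopic. ∎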

From Theorem 1, when the drone chooses a path with a different topology class from the target path, target occlusion inevitably occurs. Therefore, we explicitly consider path-homotopy when planning a chasing trajectory.
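The consequence of Theorem 1 can be illustrated numerically with a minimal sketch. It assumes circular obstacles given as hypothetical `(center, radius)` pairs and paths given as time-aligned point samples; neither the function names nor the data layout come from the paper. The Line-of-Sight is tested by finding the point of the segment closest to each obstacle center.

```python
import numpy as np

def los_is_clear(x1, x2, obstacles):
    """Return True if the segment x1-x2 misses every circular obstacle.

    obstacles: iterable of (center, radius) pairs (hypothetical format).
    """
    x1, x2 = np.asarray(x1, float), np.asarray(x2, float)
    d = x2 - x1
    dd = float(d @ d)
    for center, radius in obstacles:
        c = np.asarray(center, float)
        # Parameter of the point on the segment closest to the obstacle center.
        eps = 0.0 if dd == 0.0 else float(np.clip((c - x1) @ d / dd, 0.0, 1.0))
        closest = (1.0 - eps) * x1 + eps * x2
        if np.linalg.norm(closest - c) <= radius:
            return False
    return True

def visibility_kept(path1, path2, obstacles):
    """Check the Line-of-Sight at every common time sample of two paths."""
    return all(los_is_clear(a, b, obstacles) for a, b in zip(path1, path2))
```

For a target passing an obstacle on one side, a chaser path on the same side keeps every sampled Line-of-Sight clear, whereas a chaser path on the opposite side (a different topology class) yields at least one blocked sample.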

Throughout this article, we use the notation in Table II. The bold small letters represent vectors, calligraphic capital letters denote sets, and italic lowercase letters mean scalar values.

IV Overview

| Symbol | Definition |
| --- | --- |
| | A trajectory of the drone. |
| | An optimization variable that consists of Bernstein coefficients representing . |
| | The degree of a polynomial trajectory . |
| | Planning horizon |
| | FOV of the camera built on the drone. |
| | The maximum speed and acceleration of the drone. |
| | Free space |
| | A set of obstacles. An -th obstacle in |
| | The number of elements of and samples of end-points in the prediction. |
| | A reachable set of an -th target. A center trajectory and radius of . We omit a subscript to handle an arbitrary target. |
| | A reachable set of an -th obstacle. A center trajectory and radius of . We omit a subscript to handle an arbitrary obstacle. |
| | End Point Set, a set of candidate trajectories and a set of non-colliding candidate trajectories. |
| | Line-of-Sight between and . |
| | Target visible region against a single obstacle (TVR-O) and target visible region that can see the two targets simultaneously with camera FOV (TVR-F). |
| | A region where the drone cannot see both targets with the limited camera FOV. |
| , | Visibility score against the -th obstacle. Visibility score against all obstacles. |
| | Relative position between and at time . . |
| | norm of x |
| | First, second, third time derivatives of |
| | Transposed vector a and matrix |
| | Elementwise inequality symbols |
| | - and -components of x. |
| | Binomial coefficients, the number of -combinations from a set of elements. |
| | An matrix with all-zero elements. |
| | An identity matrix with rank . |
| | Determinant of a matrix. |
| | A boundary of a closed set . |
| | A ball with center at x and radius . A circumference of the ball. |
| | The number of elements in set |
| , | Segment connecting points and . Angle between and . |

IV-A Problem Setup

In this section, we formulate the trajectory planning problem for a tracking drone with firmly attached camera sensors that have limited FOVs [rad]. We consider an environment , which consists of separate cylindrical static and dynamic obstacles. Our goal is to generate a trajectory of the drone so that it can see the single and dual targets ceaselessly in over the time horizon . To achieve the goal, the drone has to predict the future movement of moving objects, such as targets and dynamic obstacles, and generate a target-chasing trajectory. To reflect the prediction error, we compute the reachable sets of moving objects. Then, based on the reachable sets, the planner generates a continuous-time trajectory that satisfies dynamical feasibility and avoids collision while preserving the target visibility. To accomplish the missions, we set up two problems: 1) Prediction problem and 2) Chasing problem.

IV-A1 Prediction problem

The prediction module forecasts reachable sets of moving objects such as targets and dynamic obstacles, over a time horizon . The reachable sets and are sets that encompass the future positions of the targets and obstacles , respectively. The goal is to calculate the reachable sets considering an obstacle set as well as estimation error. Specifically, we represent the -th target () and -th obstacle () as and respectively. Since the methods to compute and are equivalent, we use a symbol p instead of q and o to represent information of reachable sets of moving objects, in Section V.

IV-A2 Chasing problem

Given the reachable sets of the target and the obstacles obtained by the prediction module, we generate a trajectory of the drone that keeps the target visibility from obstacles (3b), simultaneously sees the two targets with camera FOV in dual-target scenarios (3c), does not collide with obstacles (3d), and satisfies dynamical limits (3e)-(3f). For the smoothness of the trajectory and the high target visibility, the cost function (3a) consists of terms penalizing jerky motion () and tracking error to designed reference trajectory ().

| (3a) | |||||

| s.t. | (3b) | ||||

| (3c) | |||||

| (3d) | |||||

| (3e) | |||||

| (3f) | |||||

where and are weight factors, is the relative position between the drone and the -th target, is the radius of the drone, and and are current position and velocity of the drone, respectively. Section VI-D discusses the design of the chasing trajectory to maximize the target visibility, which is related to (3a). To handle (3b) and (3c), we design a target-visible region (TVR) in Section VI-B. (3d)-(3f) are addressed in Sections VI-C and VI-E.

IV-A3 Assumptions

For the Prediction problem, we assume that (AP1) the moving objects do not collide with obstacles, and (AP2) they do not move in a jerky way. In the Chasing problem, we assume that (AC1) the maximum velocity and the maximum acceleration of the drone are higher than those of the targets and obstacles, and (AC2) the current state of the drone does not violate (3b)-(3f). Furthermore, based on Theorem 1, when the targets move along paths with different path topology, occlusion unavoidably occurs, so we assume that (AC3) all targets move along homotopic paths against obstacles.

In addition, we set the flying height of the drone to a fixed level for the acquisition of consistent images of the target. From the problem settings, we focus on the design of chasing trajectory in the plane.

IV-A4 Trajectory representation

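By virtue of the differential flatness of quadrotor dynamics [24], the trajectory of multi-rotors can be expressed with a polynomial function of time $t$. In this paper, Bernstein basis is employed to express polynomials. Bernstein bases of $n$-th order polynomial for time interval $[t_a, t_b]$ are defined as follows:

$$b_{k,n}(t, t_a, t_b) = \binom{n}{k} \frac{(t_b - t)^{n-k}\,(t - t_a)^{k}}{(t_b - t_a)^{n}}, \quad 0 \le k \le n. \tag{4}$$

Since the bases defined above are non-negative in the time interval $[t_a, t_b]$, a linear combination with non-negative coefficients makes the total value become non-negative. We utilize this property in the following sections.

The trajectory of the drone, $\mathbf{x}_c(t)$, is represented as an $M$-segment piecewise Bernstein polynomial:

$$\mathbf{x}_c(t) = \begin{cases} \mathbf{C}_1^\top \mathbf{b}_{n_c,1}(t), & t \in [T_0, T_1] \\ \quad\vdots & \\ \mathbf{C}_M^\top \mathbf{b}_{n_c,M}(t), & t \in [T_{M-1}, T_M] \end{cases} \tag{5}$$

where $T_0 = t_0$ (current time), $T_M = t_0 + T$, $n_c$ is the degree of polynomial, and $\mathbf{C}_m \in \mathbb{R}^{(n_c+1) \times 2}$ and $\mathbf{b}_{n_c,m}(t) = [b_{0,n_c}(t, T_{m-1}, T_m), \ldots, b_{n_c,n_c}(t, T_{m-1}, T_m)]^\top$ are control points and the corresponding basis vector of the $m$-th segment, respectively. The array of control points in the $m$-th segment is defined as $\mathbf{c}_m = \mathrm{vec}(\mathbf{C}_m)$, and the concatenated vector of all control points $\mathbf{c} = [\mathbf{c}_1^\top, \ldots, \mathbf{c}_M^\top]^\top$ is the decision variable of the polynomial trajectory optimization.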
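The non-negativity property of the Bernstein basis can be checked with a short sketch. The helper names `bernstein_basis` and `bernstein_eval` are illustrative and not taken from the paper; the basis is evaluated on a general interval through the normalized time $(t - t_a)/(t_b - t_a)$.

```python
from math import comb
import numpy as np

def bernstein_basis(n, t, t_a, t_b):
    """Evaluate all n+1 Bernstein bases of degree n on [t_a, t_b] at time(s) t."""
    tau = (np.asarray(t, float) - t_a) / (t_b - t_a)   # normalized time in [0, 1]
    return np.stack([comb(n, k) * tau**k * (1.0 - tau)**(n - k)
                     for k in range(n + 1)], axis=-1)

def bernstein_eval(coeffs, t, t_a, t_b):
    """Evaluate a scalar Bernstein polynomial with the given coefficients."""
    coeffs = np.asarray(coeffs, float)
    return bernstein_basis(len(coeffs) - 1, t, t_a, t_b) @ coeffs
```

Non-negative coefficients are a sufficient but not necessary condition: the coefficients `[1.0, -0.4, 1.0]` contain a negative entry, yet the polynomial stays positive on the whole interval. Constraints enforced coefficient-wise are therefore conservative.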

IV-A5 Objectives

For the Prediction Problem, the prediction module forecasts moving objects’ reachable set . Then, for the Chasing Problem, the chasing module formulates a QP problem with respect to and finds an optimal so that the drone chases the target without occlusion and collision while satisfying dynamical limits.

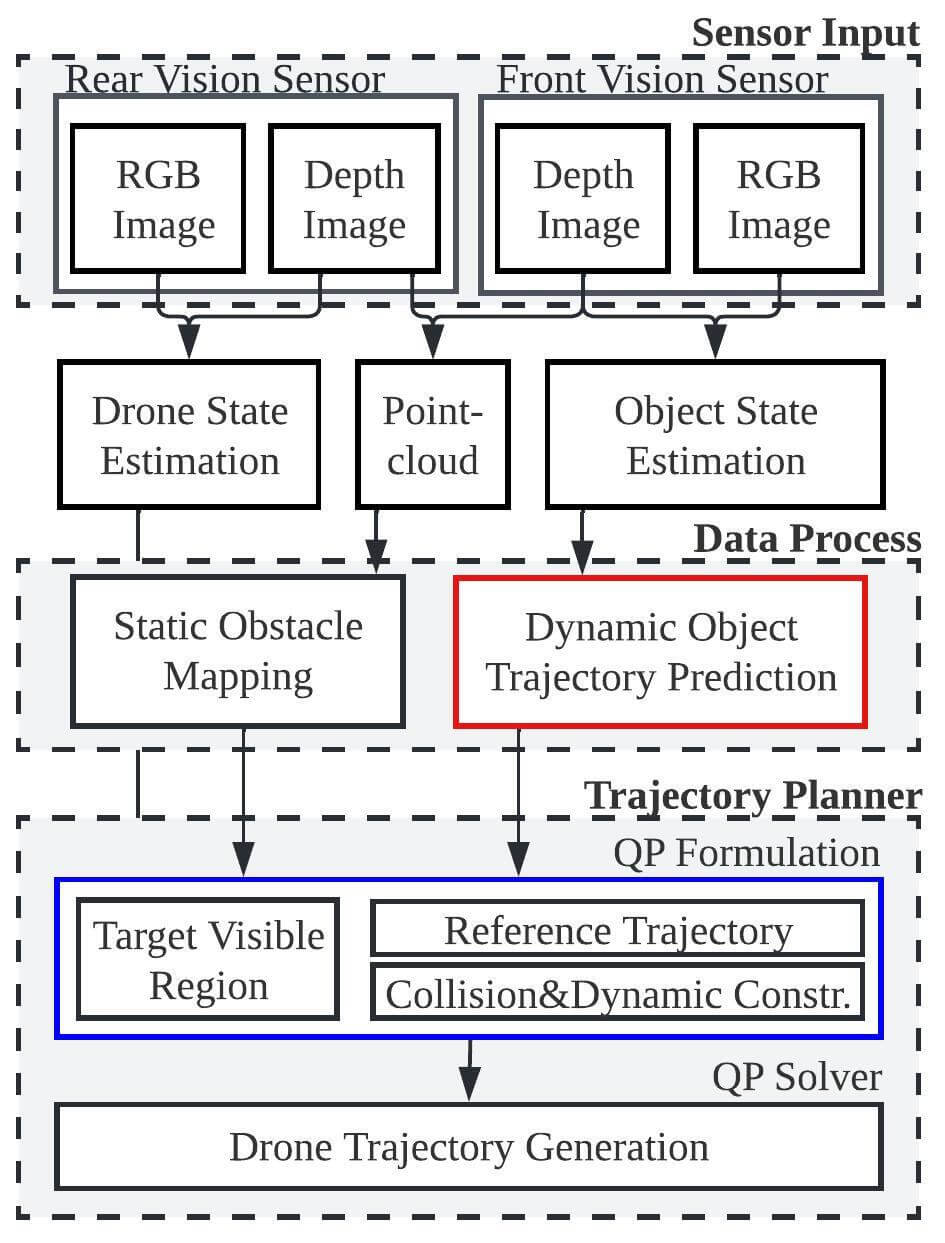

IV-B Pipeline

The overall architecture of QP Chaser is shown in Fig. 3. RGB and depth images are acquired from the cameras, and a point cloud and target pose information are extracted from the images. Then, locations and dimensions of static obstacles are estimated from the point cloud to build an obstacle map, and the reachable sets of the targets and dynamic obstacles are predicted based on their observed poses. Based on the static obstacle map and prediction results, the chasing trajectory for the drone is generated.

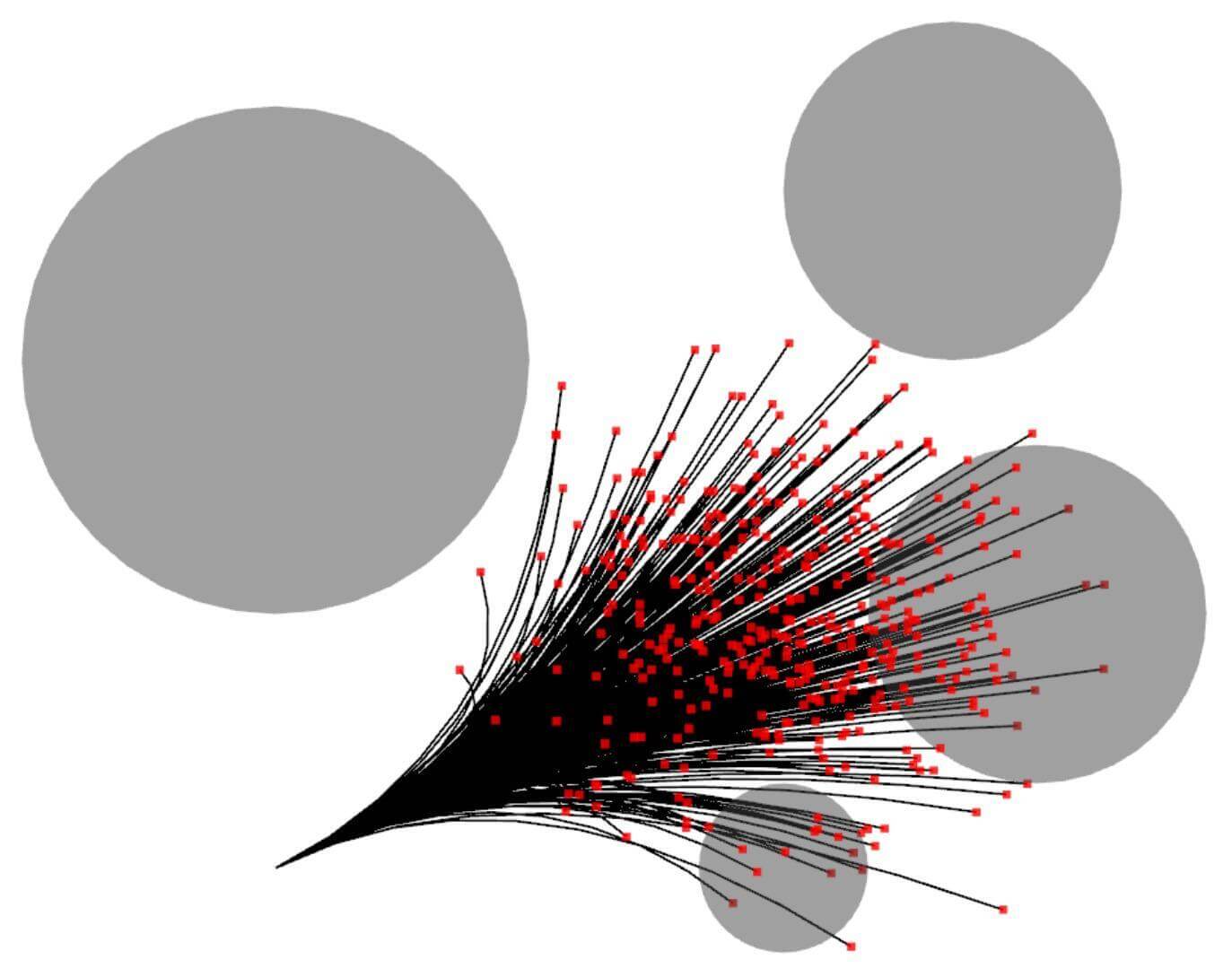

V Reachable Set Prediction

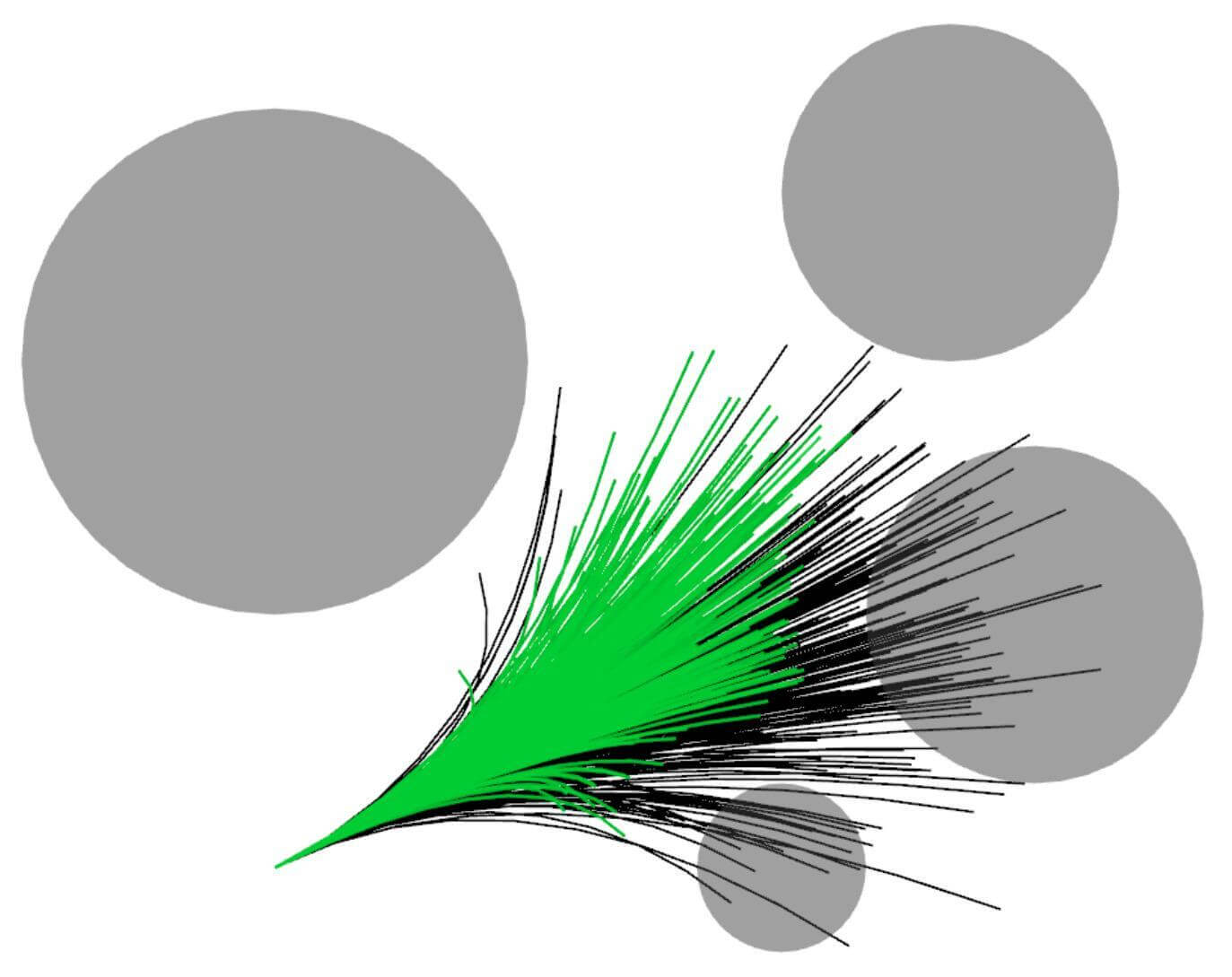

This section introduces a method for calculating reachable sets using the sample-check strategy. We sample a set of motion primitives and filter out the primitives that collide with obstacles. Then, we calculate a reachable set that encloses the remaining collision-free primitives. Figs. 4(a)-4(c) visualize the prediction process.

V-A Candidate for Future Trajectory of Moving Object

We sample the motion primitives of the target by collecting the positions that can be reached at and interpolating them with the current position.

V-A1 Endpoint sampling

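The positions of a moving object at time $t_0 + T$ are sampled, using a constant velocity model under disturbance:

$$\frac{d}{dt}\begin{bmatrix} \mathbf{p}(t) \\ \dot{\mathbf{p}}(t) \end{bmatrix} = F_p \begin{bmatrix} \mathbf{p}(t) \\ \dot{\mathbf{p}}(t) \end{bmatrix} + G_p\,\mathbf{w}(t), \quad F_p = \begin{bmatrix} 0_{2\times2} & I_2 \\ 0_{2\times2} & 0_{2\times2} \end{bmatrix}, \quad G_p = \begin{bmatrix} 0_{2\times2} \\ I_2 \end{bmatrix} \tag{6}$$

where $\mathbf{p}(t) \in \mathbb{R}^2$ is position of the moving object, and $\mathbf{w}(t)$ is white noise with power spectral density $Q$. Then, with no measurement update, estimation error covariance $P(t)$ in continuous-time Kalman filter propagates with time [25].

$$\dot{P}(t) = F_p P(t) + P(t) F_p^\top + G_p Q G_p^\top \tag{7}$$

We collect $N_{samp}$ points from a 2-dimensional Gaussian distribution $\mathcal{N}(\boldsymbol{\mu}_p, \Sigma_p)$ with $\boldsymbol{\mu}_p = \hat{\mathbf{p}}_0 + \hat{\dot{\mathbf{p}}}_0 T$ and $\Sigma_p$ the position block of $P(t_0 + T)$ obtained from (7) with $P(t_0) = P_0$, where $\hat{\mathbf{p}}_0$, $\hat{\dot{\mathbf{p}}}_0$, and $P_0$ are position, velocity, and estimation error covariance at current time $t_0$, respectively. We call the set of the samples the Endpoint Set:

$$\mathcal{S}_p = \{\mathbf{s}_{p,i} \in \mathbb{R}^2 \mid \mathbf{s}_{p,i} \sim \mathcal{N}(\boldsymbol{\mu}_p, \Sigma_p),\ i = 1, \ldots, N_{samp}\} \tag{8}$$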
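The endpoint sampling step can be sketched as follows. The sketch assumes a planar state ordered as `[px, py, vx, vy]` and an isotropic scalar power spectral density `q`; under those assumptions the covariance propagation without measurement updates has the standard closed form used below. The function names and argument layout are illustrative, not the authors' implementation.

```python
import numpy as np

def propagate_cv(p0, v0, P0, q, T):
    """Propagate mean and covariance of a planar constant-velocity model over T.

    P0 is the 4x4 covariance of [px, py, vx, vy]; q is a scalar PSD (assumption).
    Returns the predicted position and its 2x2 covariance block.
    """
    p0, v0 = np.asarray(p0, float), np.asarray(v0, float)
    I2, Z2 = np.eye(2), np.zeros((2, 2))
    Phi = np.block([[I2, T * I2], [Z2, I2]])              # state transition
    Qd = q * np.block([[T**3 / 3 * I2, T**2 / 2 * I2],    # integrated process noise
                       [T**2 / 2 * I2, T * I2]])
    P_T = Phi @ np.asarray(P0, float) @ Phi.T + Qd
    return p0 + v0 * T, P_T[:2, :2]

def sample_endpoints(p0, v0, P0, q, T, n_samples, seed=0):
    """Draw candidate end positions from the propagated Gaussian."""
    mean, cov = propagate_cv(p0, v0, P0, q, T)
    rng = np.random.default_rng(seed)
    return rng.multivariate_normal(mean, cov, size=n_samples)
```

With a zero initial covariance, the position covariance after time `T` is `q * T**3 / 3` per axis, so the spread of the sampled endpoints grows quickly with the prediction horizon.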

V-A2 Primitive generation

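Given the initial position $\hat{\mathbf{p}}_0$, velocity $\hat{\dot{\mathbf{p}}}_0$, and end positions $\mathbf{s}_{p,i}$, we design trajectory candidates $\mathbf{p}_i(t)$ for moving objects. Under the assumption (AP2) that moving objects do not move in a jerky way, we establish the following problem.

$$\begin{aligned} \min_{\mathbf{p}_i(t)} \quad & \int_{t_0}^{t_0+T} \|\dddot{\mathbf{p}}_i(t)\|_2^2 \, dt \\ \text{s.t.} \quad & \mathbf{p}_i(t_0) = \hat{\mathbf{p}}_0, \quad \dot{\mathbf{p}}_i(t_0) = \hat{\dot{\mathbf{p}}}_0, \quad \mathbf{p}_i(t_0+T) = \mathbf{s}_{p,i} \end{aligned} \tag{9}$$

Recalling that the trajectory is represented with Bernstein polynomial, we write an $i$-th candidate trajectory as $\mathbf{p}_i(t) = \mathbf{P}_i^\top \mathbf{b}_{n_p}(t)$, where $\mathbf{P}_i \in \mathbb{R}^{(n_p+1) \times 2}$ and $\mathbf{b}_{n_p}(t) = [b_{0,n_p}(t, t_0, t_0+T), \ldots, b_{n_p,n_p}(t, t_0, t_0+T)]^\top$. By defining $\boldsymbol{\rho}_i = \mathrm{vec}(\mathbf{P}_i)$ as an optimization variable, (9) becomes a QP problem

$$\begin{aligned} \min_{\boldsymbol{\rho}_i} \quad & \tfrac{1}{2}\, \boldsymbol{\rho}_i^\top Q_p\, \boldsymbol{\rho}_i \\ \text{s.t.} \quad & A_p\, \boldsymbol{\rho}_i = \mathbf{b}_{p,i} \end{aligned} \tag{10}$$

where $Q_p$ is a positive semi-definite matrix, $A_p$ is a $6 \times 2(n_p+1)$ matrix, and $\mathbf{b}_{p,i}$ is a vector composed of $\hat{\mathbf{p}}_0$, $\hat{\dot{\mathbf{p}}}_0$, and $\mathbf{s}_{p,i}$. (10) can be converted into unconstrained QP, whose optimal $\boldsymbol{\rho}_i$ is a closed-form solution as follows.

$$\begin{bmatrix} \boldsymbol{\rho}_i \\ \boldsymbol{\lambda} \end{bmatrix} = \begin{bmatrix} Q_p & A_p^\top \\ A_p & 0 \end{bmatrix}^{-1} \begin{bmatrix} 0 \\ \mathbf{b}_{p,i} \end{bmatrix} \tag{11}$$

where $\boldsymbol{\lambda}$ is a Lagrange multiplier. A set of candidate trajectories of the moving object is defined as $\mathcal{P}_p = \{\mathbf{p}_i(t)\}_{i=1}^{N_{samp}}$.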
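The closed-form solution of an equality-constrained QP can be sketched by solving its KKT system directly. The example below assumes a jerk-minimizing cost, a single Bernstein segment on `[0, T]`, and constraints on the initial position, initial velocity, and end position; it solves each axis with the same KKT matrix, which is equivalent to the stacked problem when the axes are decoupled. The function name and defaults are illustrative.

```python
from math import comb
import numpy as np

def min_jerk_primitive(p0, v0, p_end, T, n=3):
    """Control points (n+1, dim) of a degree-n Bernstein primitive on [0, T]."""
    p0, v0, p_end = (np.asarray(a, float) for a in (p0, v0, p_end))
    m = n - 3                                    # degree of the jerk polynomial
    # Third-order forward difference: jerk control points = scale * D @ c.
    D = np.zeros((m + 1, n + 1))
    for i in range(m + 1):
        D[i, i:i + 4] = [-1.0, 3.0, -3.0, 1.0]
    scale = n * (n - 1) * (n - 2) / T**3
    # Gram matrix of the degree-m Bernstein bases integrated over [0, T].
    G = np.array([[comb(m, i) * comb(m, j) / comb(2 * m, i + j)
                   for j in range(m + 1)] for i in range(m + 1)]) * T / (2 * m + 1)
    Q = 2.0 * scale**2 * D.T @ G @ D             # cost = 0.5 * c^T Q c per axis
    # Equality constraints A c = b: p(0) = p0, p'(0) = v0, p(T) = p_end.
    A = np.zeros((3, n + 1))
    A[0, 0] = 1.0
    A[1, 0], A[1, 1] = -n / T, n / T
    A[2, n] = 1.0
    b = np.vstack([p0, v0, p_end])               # one column per axis
    # KKT system [[Q, A^T], [A, 0]] [c; lambda] = [0; b].
    kkt = np.block([[Q, A.T], [A, np.zeros((3, 3))]])
    rhs = np.vstack([np.zeros((n + 1, b.shape[1])), b])
    return np.linalg.solve(kkt, rhs)[:n + 1]
```

Because the KKT matrix depends only on the degree and the horizon, it can be factorized once and reused for every sampled endpoint; only the right-hand side changes between primitives.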

V-B Collision Check

Under the assumption (AP1) that moving objects do not collide, trajectory candidates that violate the below condition are filtered out.

| (12) |

Due to the fact that all terms in (12) can be represented in polynomials, and Bernstein bases are non-negative in the time period , entirely non-negative coefficients make the left-hand side of (12) non-negative. We examine all coefficients of (12) for the primitives belonging to . For details, see Appendix A. We call a set of primitives that pass the test .
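A coefficient-based collision test of this kind can be sketched as follows. The sketch assumes that the condition being checked is that the squared distance between a primitive and an obstacle center minus the squared sum of their radii stays non-negative, and that both trajectories are Bernstein polynomials of the same degree on the same interval; these are assumptions for illustration, and the exact form of (12) is given in the paper's Appendix A. The product rule for Bernstein coefficients is standard.

```python
from math import comb
import numpy as np

def bernstein_product(a, b):
    """Coefficients of the product of two scalar Bernstein polynomials."""
    m, n = len(a) - 1, len(b) - 1
    c = np.zeros(m + n + 1)
    for i in range(m + 1):
        for j in range(n + 1):
            c[i + j] += comb(m, i) * comb(n, j) / comb(m + n, i + j) * a[i] * b[j]
    return c

def passes_collision_check(prim_ctrl, obs_ctrl, r_sum):
    """Sufficient test: every Bernstein coefficient of
    ||p(t) - o(t)||^2 - r_sum^2 is non-negative."""
    diff = np.asarray(prim_ctrl, float) - np.asarray(obs_ctrl, float)
    coeffs = sum(bernstein_product(diff[:, d], diff[:, d])
                 for d in range(diff.shape[1]))
    coeffs = coeffs - r_sum**2     # a constant has equal Bernstein coefficients
    return bool(np.all(coeffs >= 0.0))
```

The test never accepts a colliding primitive, since a polynomial that becomes negative must have a negative coefficient. It may reject some collision-free primitives, which makes the filtering conservative.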

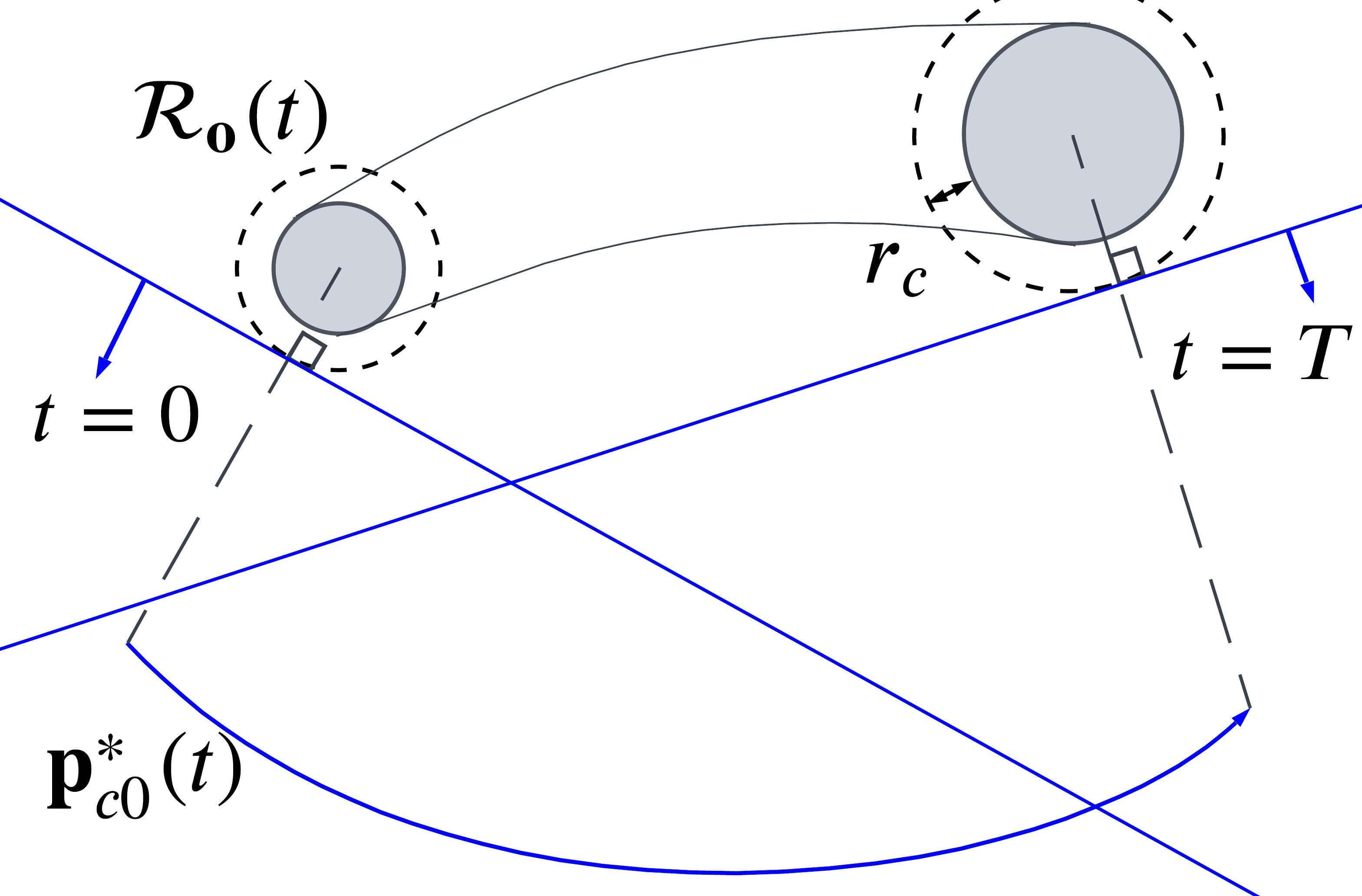

V-C Prediction with Error Bounds

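With the set of non-colliding primitives, the reachable set $\mathcal{R}_p(t)$ is defined as a time-varying ball enclosing all the primitives in that set.

$$\mathcal{R}_p(t) = \mathcal{B}(\hat{\mathbf{p}}(t), r_p(t)) \tag{13}$$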

We determine a center trajectory as the primitive with the smallest sum of distances to the other primitives in :

| (14) |

Proposition 1.

The optimization problem (14) is equivalent to the following problem.

| (15) | ||||

Proof.

See Appendix B. ∎

From Proposition 1, is determined by simple arithmetic operations and the process has a time complexity of . Then, we define so that encloses all the primitives in for .

| (16) |

The second term on the right-hand side of (16) allows the whole body to be contained in the reachable set. Using the reachable sets of the targets and the obstacles in the chasing problem allows for the consideration of the visibility of the entire bodies of the targets, as well as the potential movements of moving targets and dynamic obstacles.
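The construction of the enclosing ball can be sketched on time-sampled primitives. This is a brute-force illustration with quadratic cost in the number of primitives, not the linear-time rule of Proposition 1; the array layout, the discrete time samples, and the name `body_radius` are assumptions made for the example.

```python
import numpy as np

def reachable_set(primitives, body_radius):
    """primitives: array (N, K, 2) of N collision-free primitives at K times.

    Returns the index of the center primitive, its samples (K, 2),
    and the ball radius at each time sample (K,).
    """
    P = np.asarray(primitives, float)
    # Pairwise distances between primitives at every time sample: (N, N, K).
    dist = np.linalg.norm(P[:, None] - P[None, :], axis=-1)
    # Center: primitive with the smallest summed distance to all the others.
    center_idx = int(np.argmin(dist.sum(axis=(1, 2))))
    center = P[center_idx]
    # Radius: farthest primitive from the center, inflated by the body size.
    radius = dist[center_idx].max(axis=0) + body_radius
    return center_idx, center, radius
```

The radius grows over the horizon as the primitives fan out from the shared initial state, and the added body radius keeps the whole object, not only its center, inside the ball.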

V-D Evaluation

We measure the computation time of prediction in obstacle environments. We set as 3 and test 1000 times for different scenarios (500,2), (500,4), (2000,2), (2000,4). The prediction module is computed on a laptop with an Intel i7 CPU, with a single thread implementation, and execution time is summarized in Table III. As and increase, the time needed for calculating primitives and collision checks increases. However, obstacles that are close to make small. Accordingly, the time to compute a reachable set decreases as the number of close obstacles increases.

| (Samples, obstacles) | (500,2) | (500,4) | (2000,2) | (2000,4) |
| --- | --- | --- | --- | --- |
| Primitive generation [μs] | 43.97 | 47.23 | 168.3 | 184.1 |
| Collision check [μs] | 146.3 | 185.3 | 487.9 | 750.7 |
| Reachable set computation [ms] | 0.245 | 0.172 | 2.921 | 2.073 |
| Total time [ms] | 0.435 | 0.405 | 3.577 | 3.008 |

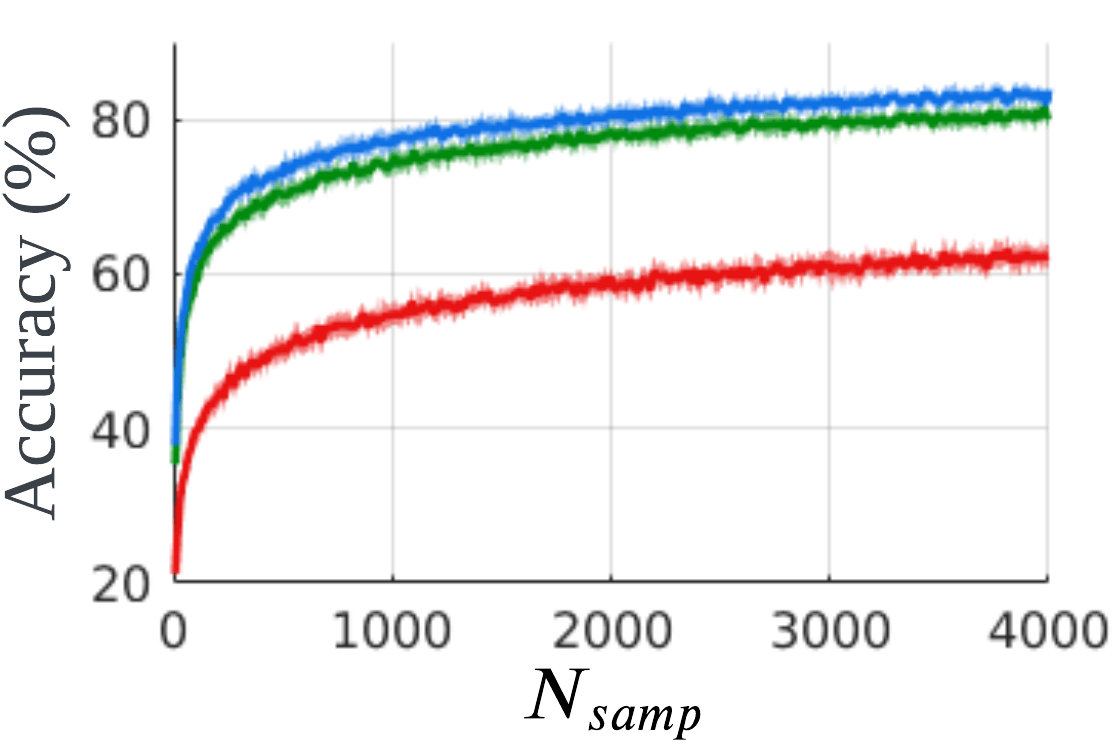

Also, we test the accuracy of the presented prediction methods. For evaluation, discretized models of (6) with power spectral density of white noise 0.1, 0.5, 1.0 are considered. We confirm whether is satisfied in obstacle-free situations while progressively increasing the . For each , the tests are executed 10000 times, and the acquired accuracy is shown in Fig. 5. Contrary to the assumption (AP2) that a moving object moves smoothly, the discretized model can move in a jerky way. Therefore, since the proposed method cannot describe jerky movements during certain initial time intervals, we excluded some initial parts from the tests. As increases, the accuracy improves, but the computation time increases, as presented in Table III. Therefore, should be determined according to the computation resources for a balance between accuracy and running time. In this paper, we set as 2000. With this setting, , was satisfied 98.8% empirically in the simulations in Section VII-C.

As elaborated in Section IV-A, we specifically calculate the reachable sets of moving targets and obstacles: and . Subsequently, in the following section, we generate a trajectory that maximizes the visibility of while avoiding .

VI Chasing Trajectory Generation

This section formulates a QP problem to make a chasing trajectory occlusion-free, collision-free, and dynamically feasible. We first define the target visible region (TVR), the region with maximum visibility of the target’s reachable set, and formulate constraints regarding collision avoidance and dynamic limits. Then, we design the reference trajectory to enhance the target visibility. Regarding the target visibility, all the steps are based on the path topology.

VI-A Topology Check

In 2-dimensional space, there exist two classes of path topology against a single obstacle, as shown in Fig. 6(a). As stated in Theorem 1, the drone should move along a homotopic path with the target path to avoid occlusion. Based on the relative position between the drone and the obstacle, , and the relative position between the target and the obstacle, , at the current time , the topology class of chasing path is determined as follows.

| (17) |

After conducting the topology check above, we define the visibility constraint and reference chasing trajectory.
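A topology check of this kind can be sketched with the sign of a 2-D determinant. The sketch assumes that the class is decided by the sign of the determinant formed from the two relative positions, and the mapping of signs to the labels `"O1"` and `"O2"` is an assumption for illustration, not a restatement of (17).

```python
import numpy as np

def topology_class(drone_pos, target_pos, obstacle_pos):
    """Classify on which side of the obstacle-to-target ray the drone lies."""
    x_oc = np.asarray(drone_pos, float) - np.asarray(obstacle_pos, float)
    x_oq = np.asarray(target_pos, float) - np.asarray(obstacle_pos, float)
    # Determinant of the 2x2 matrix with rows x_oc and x_oq.
    det = x_oc[0] * x_oq[1] - x_oc[1] * x_oq[0]
    return "O1" if det >= 0.0 else "O2"
```

Mirroring the drone across the line through the obstacle and the target flips the sign of the determinant and therefore the class.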

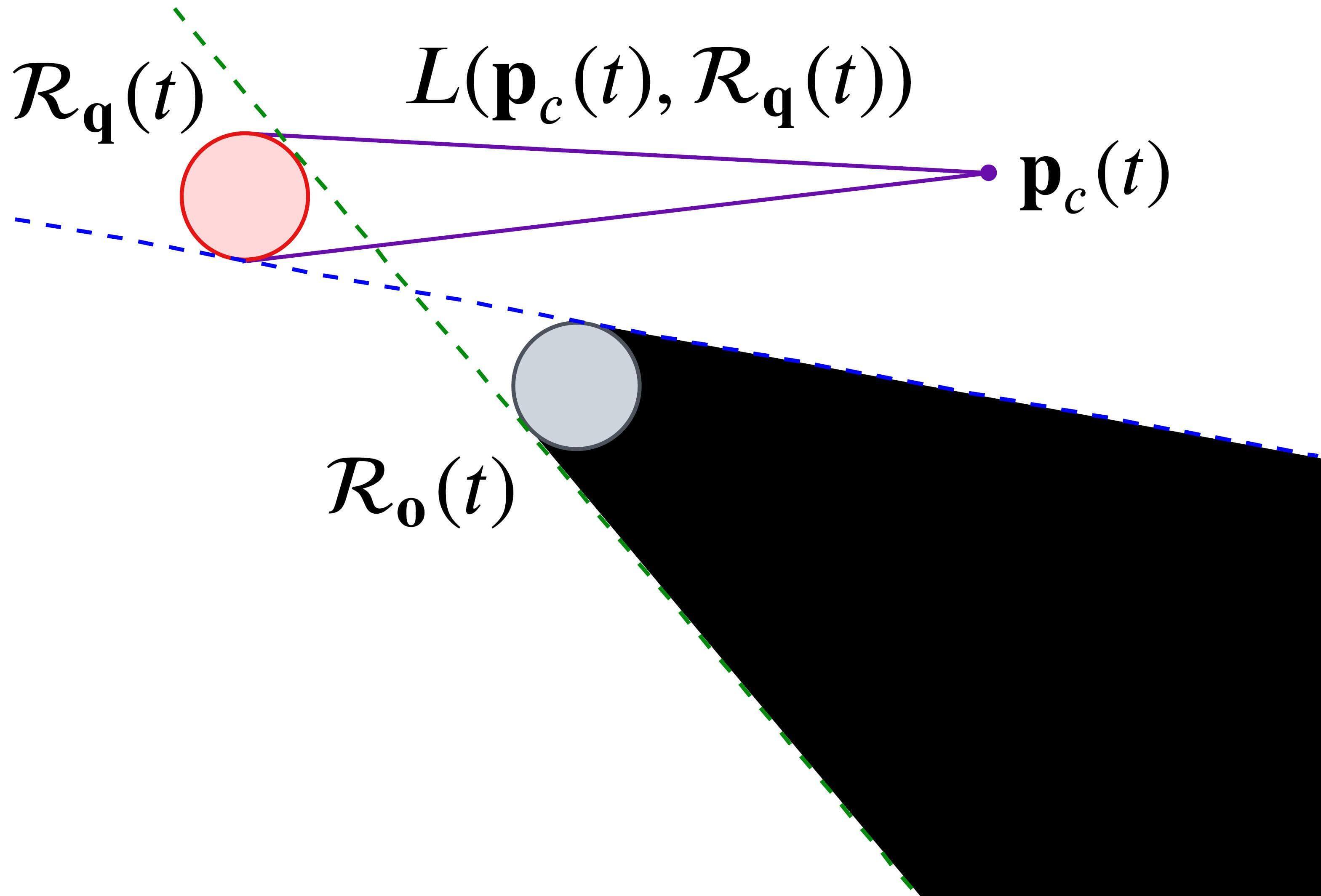

VI-B Target Visible Region

To robustly maintain the target visibility despite the prediction error, the target visible region TVR is defined considering the reachable sets of the moving objects: and .

VI-B1 Target visibility against obstacles

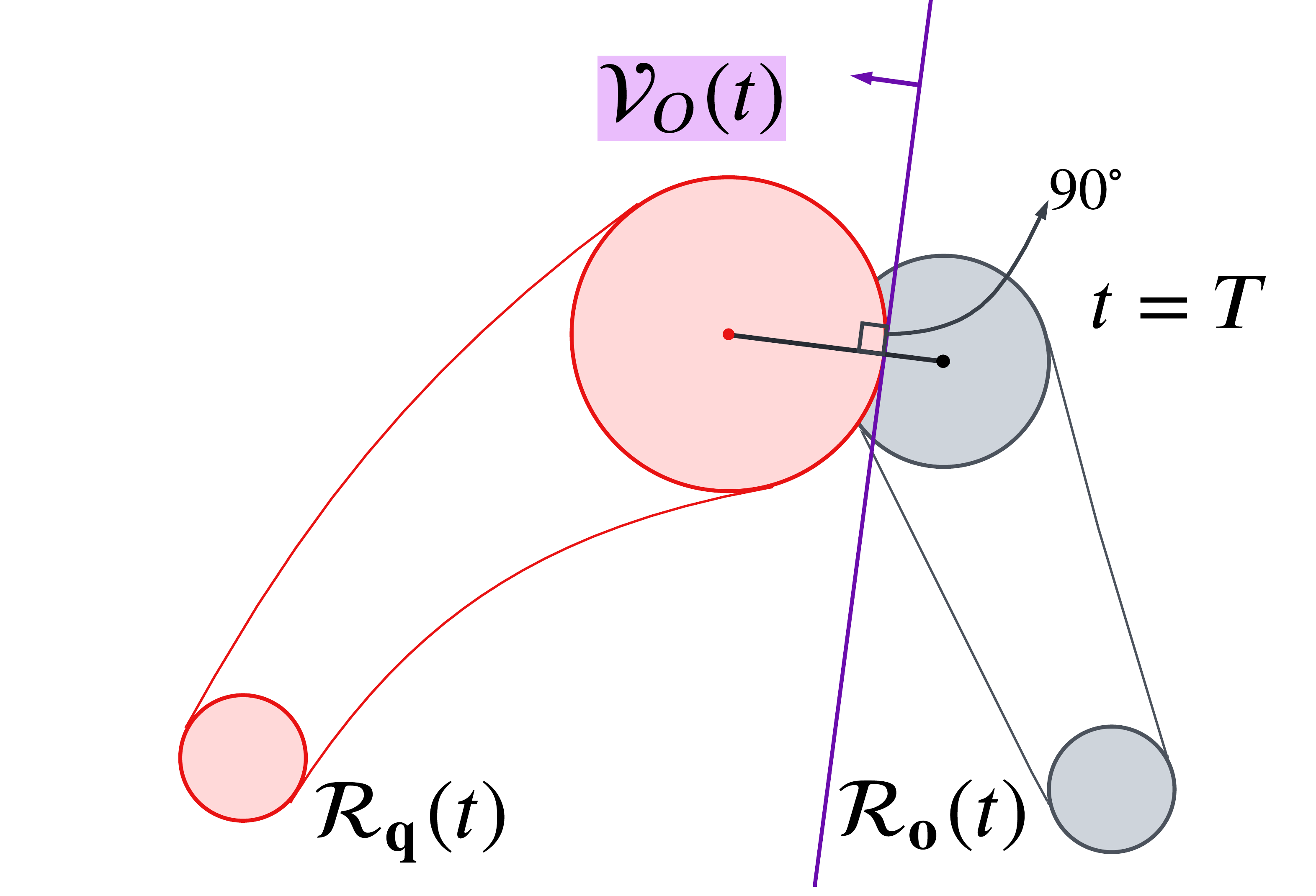

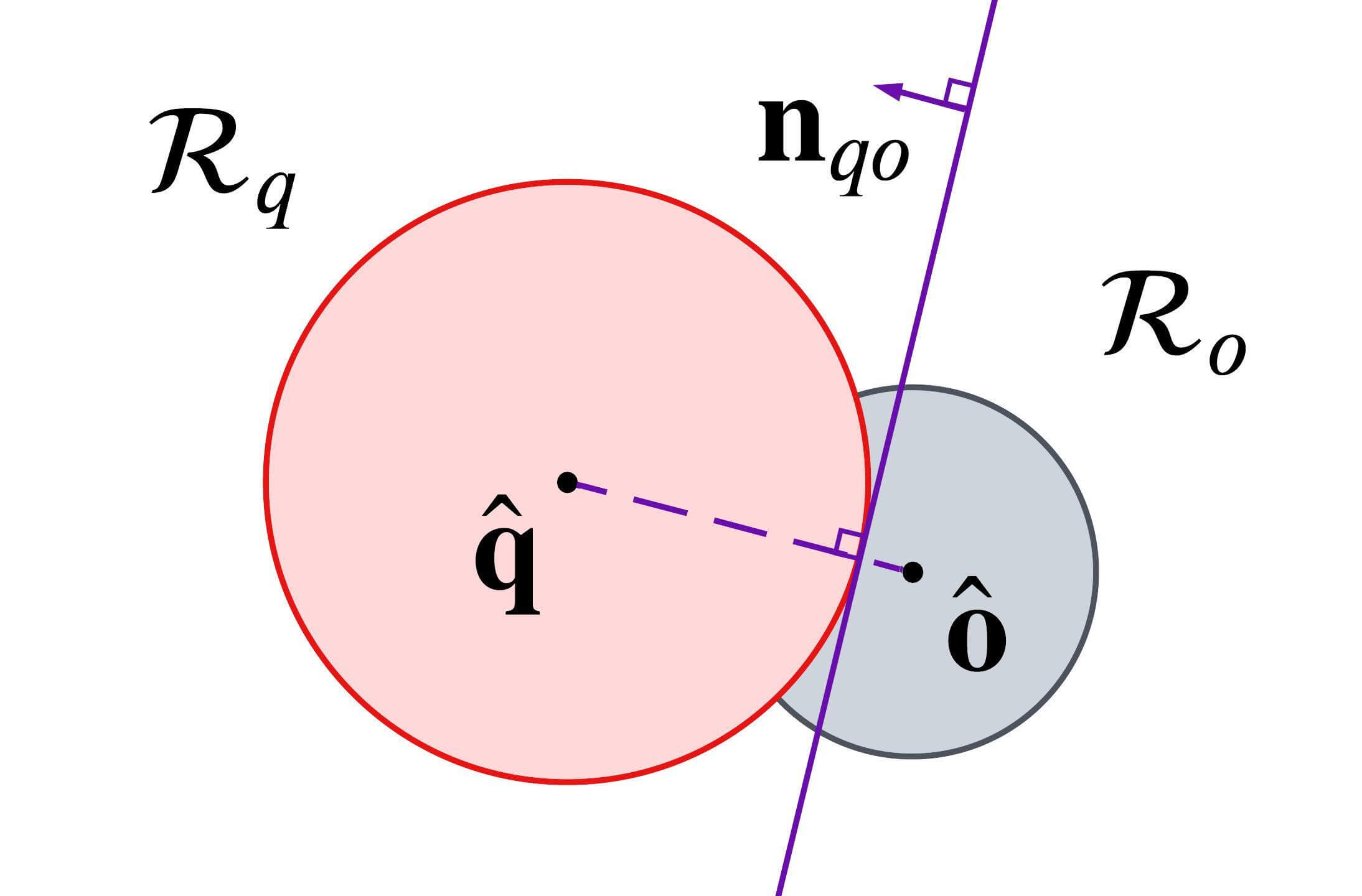

We define the target visible region against an obstacle (TVR-O), for each obstacle. To maximize a visible area of the targets’ reachable set that is not occluded by the reachable set of obstacles, TVR-O is set to a half-space so that it includes the target’s reachable set and minimizes the area that overlaps with the obstacle’s reachable set. There are two cases where the reachable sets of the target and obstacles overlap and do not overlap, and TVR-O is defined accordingly.

Case 1: In the case where the reachable sets of the target and an obstacle do not overlap, the target invisible region is made by straight lines which are tangential to both and , as shown in Fig. 6(b). Accordingly, we define TVR-O as a half-space made by a tangential line between and according to the topology class (17), as shown in Fig. 6(c). denotes TVR-O and is represented as follows.

| (18) | |||

where , , and . The double signs in (18) are in the same order, where the lower and upper signs are for Class O1 and Class O2, respectively.

Lemma 1.

If , is satisfied.

Proof.

is a half-space which is convex, and both and belong to . By the definition of convexity, all the Lines-of-Sight connecting the drone and all points in the reachable set of the target are included in . Since the TVR-O defined in (18) is disjoint with , . ∎

Remark 1.

In dual-target scenarios, in order to avoid the occlusion of a target by another target, (18) is additionally defined by considering one of the targets as an obstacle.

Case 2: For the case where the reachable sets overlap, TVR-O becomes a half-space made by a tangential line to , which is perpendicular to a line segment connecting the centers of the target and the obstacles , illustrated as straight lines in Fig. 6(d). Then the TVR-O is represented as

| (19) |

VI-B2 Bernstein polynomial approximation

In defining TVR-O, all terms in (18)-(19) are polynomials except and . To make affine with respect to the optimization variable , we first convert into Bernstein polynomials using the following numerical technique that originates from Lagrange interpolation with standard polynomial representation [26].

For the time interval , , are approximately represented as . is the degree of , and control points can be acquired as follows:

| (20) |

where is a Bernstein-Vandermonde matrix [27] with elements given by

, and

consists of sequential samples .
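As an illustration of this interpolation step, the sketch below fits Bernstein control points to samples of a non-polynomial function by solving a Bernstein-Vandermonde system. It assumes a square system built from equally spaced samples on the normalized interval; the exact sampling used in (20) is not recoverable here, and all function names are hypothetical.

```python
from math import comb

def bernstein_vandermonde(n, taus):
    """Matrix with entries B[i][j] = C(n, j) * tau_i**j * (1 - tau_i)**(n - j)."""
    return [[comb(n, j) * t**j * (1.0 - t)**(n - j) for j in range(n + 1)]
            for t in taus]

def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    m = len(b)
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]
    for col in range(m):
        piv = max(range(col, m), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, m):
            f = M[r][col] / M[col][col]
            for k in range(col, m + 1):
                M[r][k] -= f * M[col][k]
    x = [0.0] * m
    for r in range(m - 1, -1, -1):
        acc = sum(M[r][k] * x[k] for k in range(r + 1, m))
        x[r] = (M[r][m] - acc) / M[r][r]
    return x

def fit_bernstein(f, t0, t1, n):
    """Control points of the degree-n Bernstein polynomial that interpolates f
    at n + 1 equally spaced samples on [t0, t1] (an assumed sampling rule)."""
    taus = [i / n for i in range(n + 1)]  # normalized sample times in [0, 1]
    samples = [f(t0 + tau * (t1 - t0)) for tau in taus]
    return solve(bernstein_vandermonde(n, taus), samples)

def eval_bernstein(c, tau):
    """Evaluate a Bernstein polynomial with control points c at tau in [0, 1]."""
    n = len(c) - 1
    return sum(ci * comb(n, i) * tau**i * (1.0 - tau)**(n - i)
               for i, ci in enumerate(c))
```

For example, `fit_bernstein(lambda t: 1.0 / (1.0 + t * t) ** 0.5, 0.0, 1.5, 5)` returns six control points whose polynomial matches the function at the six sample times.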

With the approximated terms , we represent the target visibility constraint as follows.

| (21a) | ||||

| (21b) | ||||

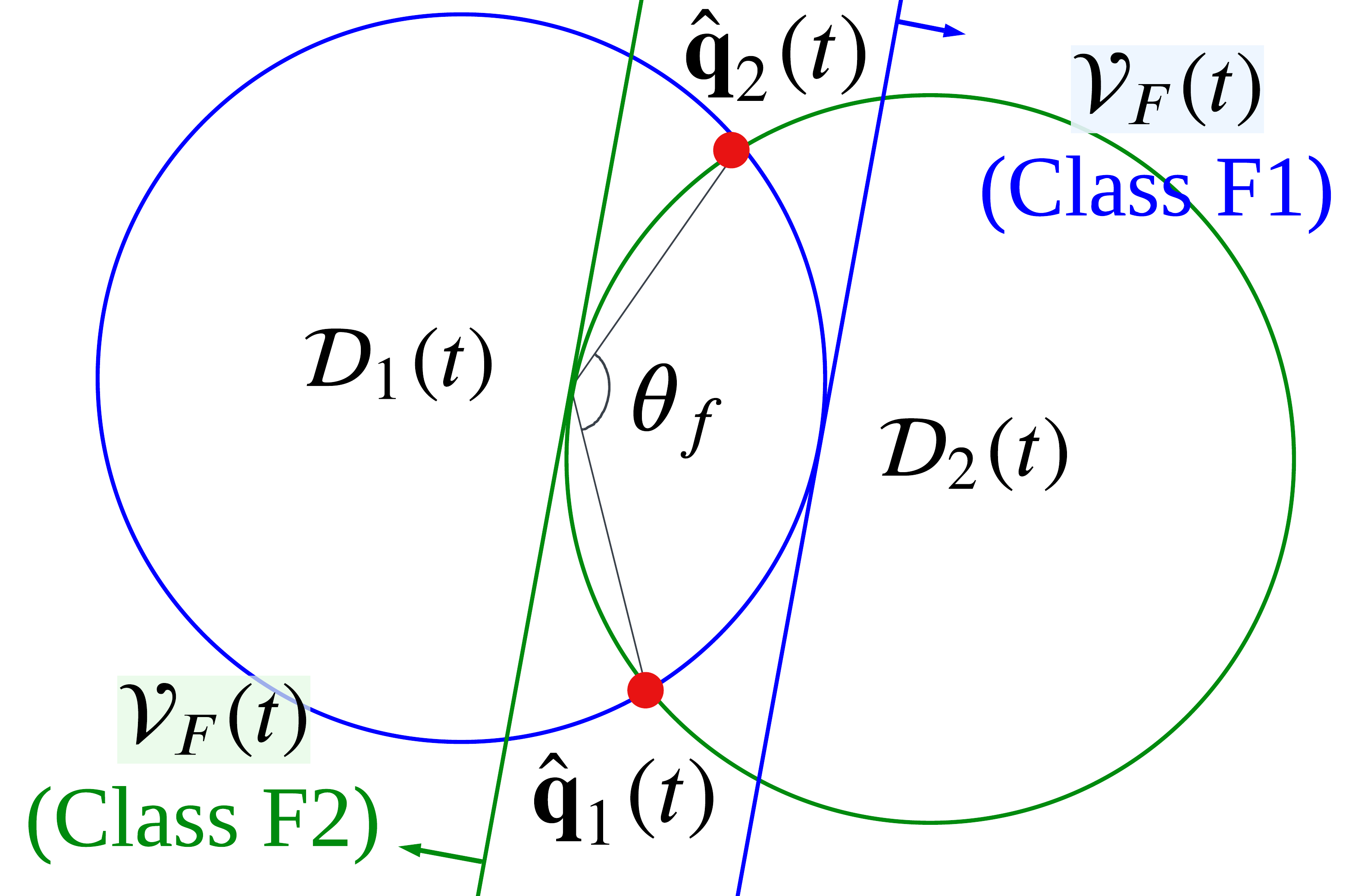

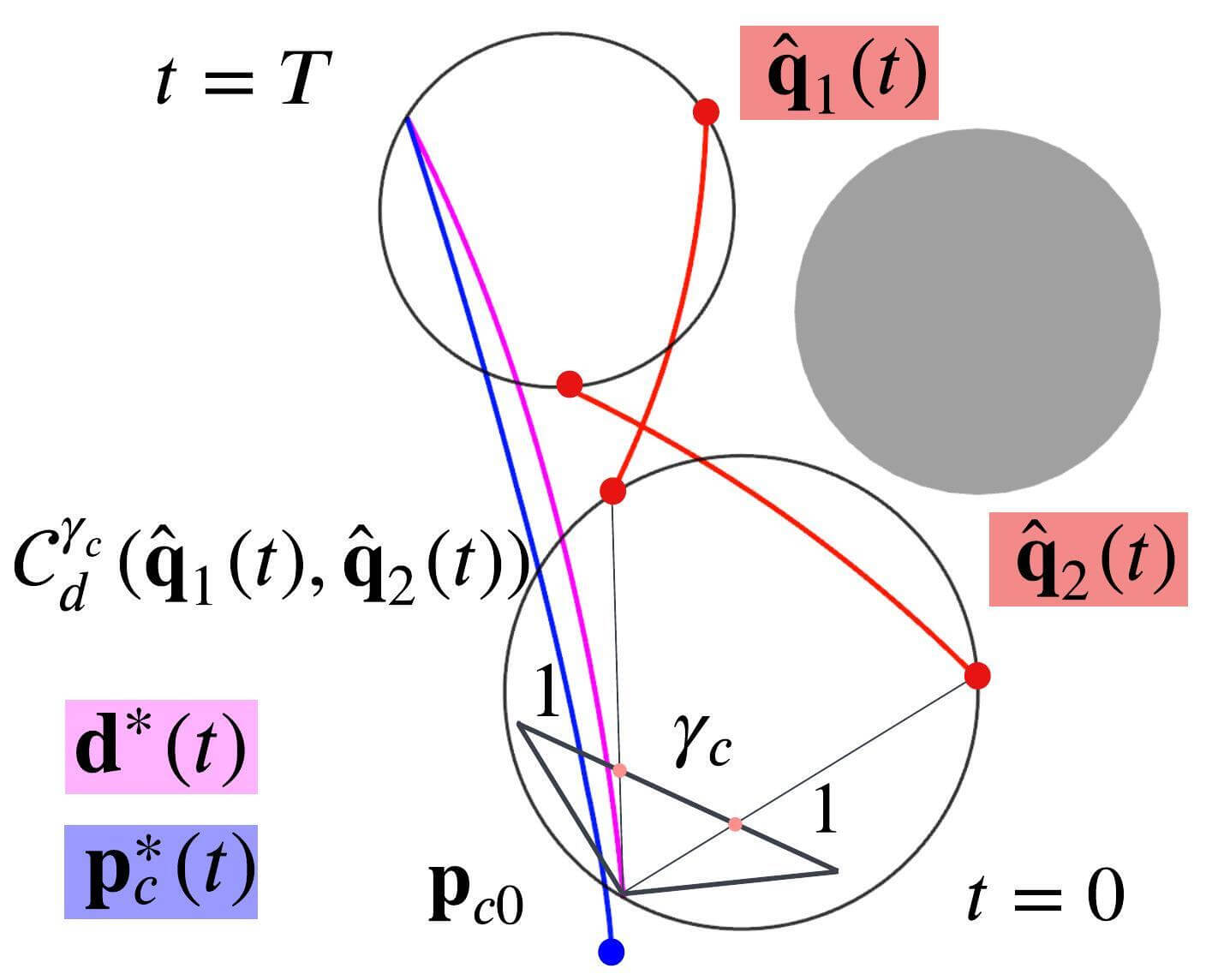

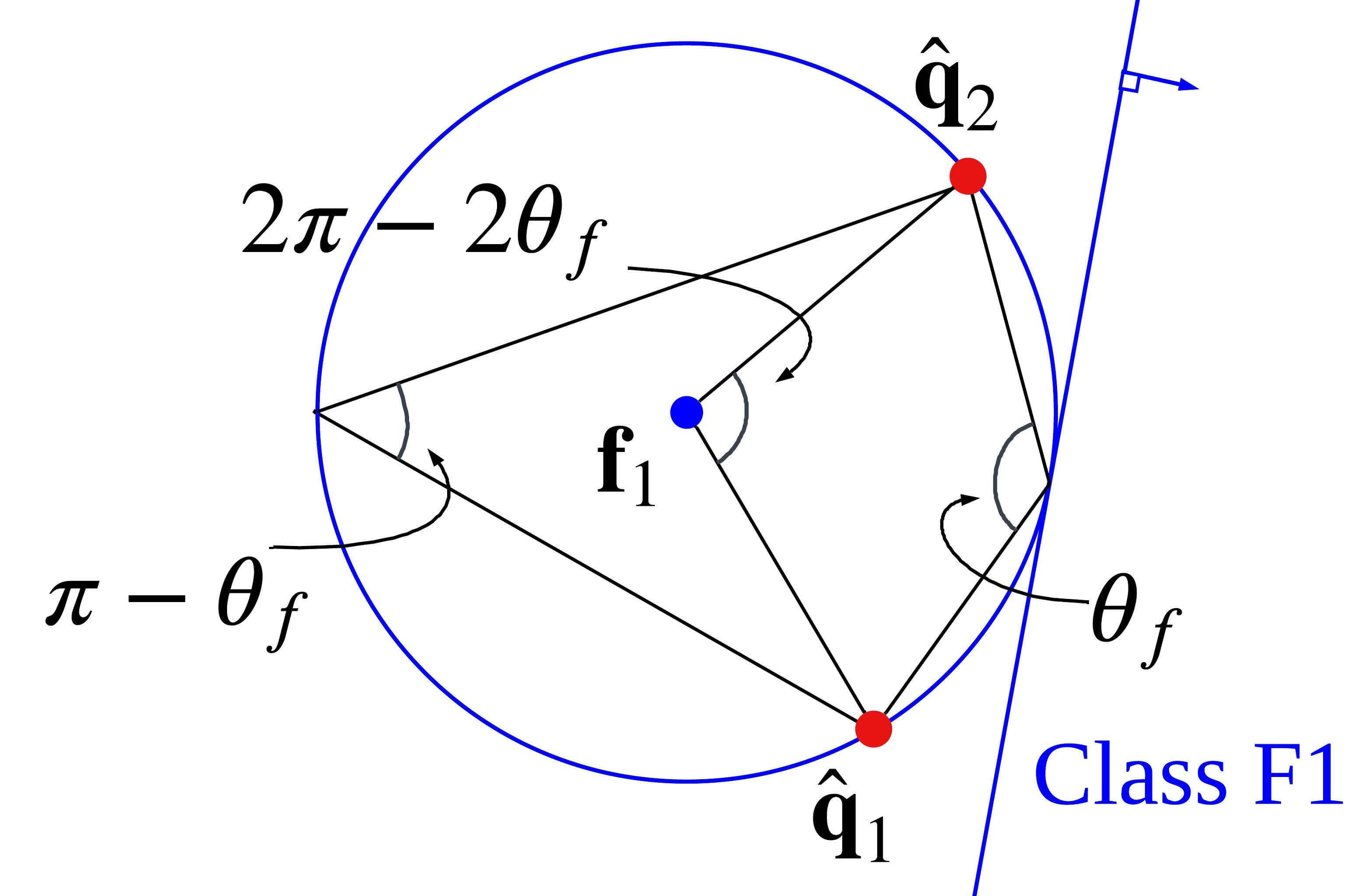

VI-B3 FOV constraints

In addition to (21), the drone should avoid the region where it cannot see the two targets with its limited camera FOV. As shown in Fig. 6(e), circles whose inscribed angle of an arc tracing points and at , equals , are represented as follows.

| (22) | ||||

According to the geometric property of an inscribed angle, , for , , where is represented as follows.

| (23) |

Since the camera FOV is , the drone inside misses at least one target in the camera image inevitably, and the drone outside of is able to see both targets.
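The inscribed-angle construction can be checked numerically. The following is a minimal sketch, assuming planar target positions and an FOV angle strictly between 0 and π; the function names and the `side` argument (which picks one of the two mirror-image circles) are hypothetical.

```python
import math

def fov_circle(a, b, fov, side=1.0):
    """Circle through the targets a and b on which the chord ab subtends `fov`.

    side = +1 or -1 selects on which side of the chord the arc lies.
    """
    mx, my = (a[0] + b[0]) / 2.0, (a[1] + b[1]) / 2.0
    dx, dy = b[0] - a[0], b[1] - a[1]
    half = math.hypot(dx, dy) / 2.0               # half of the chord length
    ux, uy = -dy / (2.0 * half), dx / (2.0 * half)  # unit normal to the chord
    offset = side * half / math.tan(fov)          # negative when fov > 90 deg
    center = (mx + offset * ux, my + offset * uy)
    radius = half / math.sin(fov)
    return center, radius

def subtended_angle(p, a, b):
    """Angle between the rays from p to a and from p to b, in [0, pi]."""
    v1 = (a[0] - p[0], a[1] - p[1])
    v2 = (b[0] - p[0], b[1] - p[1])
    cross = v1[0] * v2[1] - v1[1] * v2[0]
    dot = v1[0] * v2[0] + v1[1] * v2[1]
    return abs(math.atan2(cross, dot))
```

A viewpoint can keep both targets in the image only if the angle they subtend does not exceed the camera FOV, so `subtended_angle` gives a direct check of whether a candidate drone position is admissible.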

We define the target visible region considering camera FOV (TVR-F) as a half-space that does not include and is tangential to , as shown in Fig. 6(e). denotes TVR-F and is represented as follows.

| (24) |

The upper and lower signs in (24) are for Class F1 and Class F2, respectively, which are defined as

| (25) |

We constrain the drone to satisfy in order to see both targets. For details, see Appendix C-B.

VI-C Collision Avoidance

To avoid collision with obstacles, we define a collision-free area as a half-space made by a tangential line to a set that is inflated by from . The tangential line is drawn considering a trajectory , which is the previous planning result. This is illustrated in Fig. 6(f) and represented as follows.

| (26) |

where . As in (20), we approximate a non-polynomial term to a polynomial . With the approximated term , the collision constraint is defined as follows:

| (27) |

Because the product of Bernstein polynomials is also a Bernstein polynomial, the left-hand sides of (21), (24), and (27) can be represented in Bernstein polynomial form. Since the Bernstein basis is non-negative, we require the coefficient of each basis function to be non-negative, which is sufficient to keep the left-hand side non-negative; (21), (24), and (27) then become affine constraints on the decision vector . The constraints can be written as follows, and we omit the details.

| (28) | ||||
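The product rule and the coefficient-based non-negativity test can be sketched as follows. This is an illustrative example with scalar coefficients on the normalized interval [0, 1]; the function names are hypothetical and the code is not the authors' implementation.

```python
from math import comb

def bernstein_product(a, b):
    """Control points of the product of two Bernstein polynomials.

    a has degree m = len(a) - 1, b has degree n = len(b) - 1,
    and the result has degree m + n.
    """
    m, n = len(a) - 1, len(b) - 1
    c = [0.0] * (m + n + 1)
    for i, ai in enumerate(a):
        for j, bj in enumerate(b):
            c[i + j] += comb(m, i) * comb(n, j) / comb(m + n, i + j) * ai * bj
    return c

def eval_bernstein(c, tau):
    """Evaluate a Bernstein polynomial with control points c at tau in [0, 1]."""
    n = len(c) - 1
    return sum(ci * comb(n, i) * tau**i * (1.0 - tau)**(n - i)
               for i, ci in enumerate(c))

def certified_nonnegative(c, tol=1e-12):
    """Sufficient (not necessary) test: non-negative coefficients imply a
    non-negative polynomial on [0, 1]."""
    return min(c) >= -tol
```

When one factor is a known polynomial and the other holds the decision variables, each product coefficient is a linear combination of the decision variables, which is why the resulting conditions are affine.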

VI-D Reference Trajectory for Target Tracking

In this section, we propose a reference trajectory for target chasing that enhances the visibility of the targets.

VI-D1 Single-target case

In designing the reference trajectory, we use the visibility score, which is computed based on the Euclidean Signed Distance Field (ESDF), as discussed in [28]. The definition of the visibility score is the closest distance between all points in an -th obstacle, , and the Line-of-Sight connecting the target and the drone:

| (29) |
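For a single cylindrical obstacle seen from above, this score reduces to a point-to-segment distance. The sketch below assumes a planar scene and a circular obstacle cross-section instead of an ESDF lookup; the function names are hypothetical.

```python
import math

def point_segment_distance(p, a, b):
    """Euclidean distance from the point p to the segment ab."""
    ax, ay = b[0] - a[0], b[1] - a[1]
    seg2 = ax * ax + ay * ay
    if seg2 == 0.0:
        return math.hypot(p[0] - a[0], p[1] - a[1])
    # Parameter of the orthogonal projection, clamped to the segment.
    s = ((p[0] - a[0]) * ax + (p[1] - a[1]) * ay) / seg2
    s = max(0.0, min(1.0, s))
    return math.hypot(p[0] - (a[0] + s * ax), p[1] - (a[1] + s * ay))

def visibility_score(target, drone, obs_center, obs_radius):
    """Closest distance between a circular obstacle and the Line-of-Sight.

    A negative value means that the Line-of-Sight passes through the obstacle.
    """
    return point_segment_distance(obs_center, target, drone) - obs_radius
```

With the target at (0, 0) and the drone at (4, 0), an obstacle of radius 1 centered at (2, 3) gives a score of 2, while the same obstacle centered at (2, 0.5) gives a score of -0.5, which indicates occlusion.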

In order to keep the projected size of the target on the camera image, we set the desired shooting distance . With the desired shooting distance , a viewpoint candidate set can be defined as . Under the assumption that the environment consists of cylindrical obstacles, half-circumference of acquires the maximum visibility score, as illustrated in green in Fig. 7(a). Therefore, the following trajectory maintains the maximum .

| (30) |

where . The upper and lower signs are for Class O1 and Class O2, respectively. We define the reference trajectory as a weighted sum of .

| (31) |

’s are weight functions that are inversely proportional to the current distance between the target and each obstacle.

VI-D2 Dual-target case

In order to make aesthetically pleasing scenes, we aim to place two targets in a ratio of on the camera image. To do so, the drone must be within a set , which is defined as follows and illustrated as a black circle in Fig. 7(b).

| (32) | ||||

The upper and lower signs are for Class F1 and Class F2. We define the reference trajectory to acquire a high visibility score while maintaining the ratio.

| (33) | ||||

, and and are defined as in (30), for two targets. The upper and lower signs are for Class F1 and Class F2, respectively. is defined as the farthest direction from the two targets to maximize a metric, + , representing the visibility score between two targets.

For the numerical stability of the QP solver, the reference trajectory is redefined by interpolating between , and the current drone’s position . With a non-decreasing polynomial function that satisfies and , the reference trajectory is defined as follows.

| (34) |

Since trigonometric terms for , s or d in (31), (33) are non-polynomial, the Lagrange interpolation is used as (20). The approximated is denoted as . Based on the construction of reference trajectory and the target visibility constraints, we formulate the trajectory optimization problem as a QP problem.

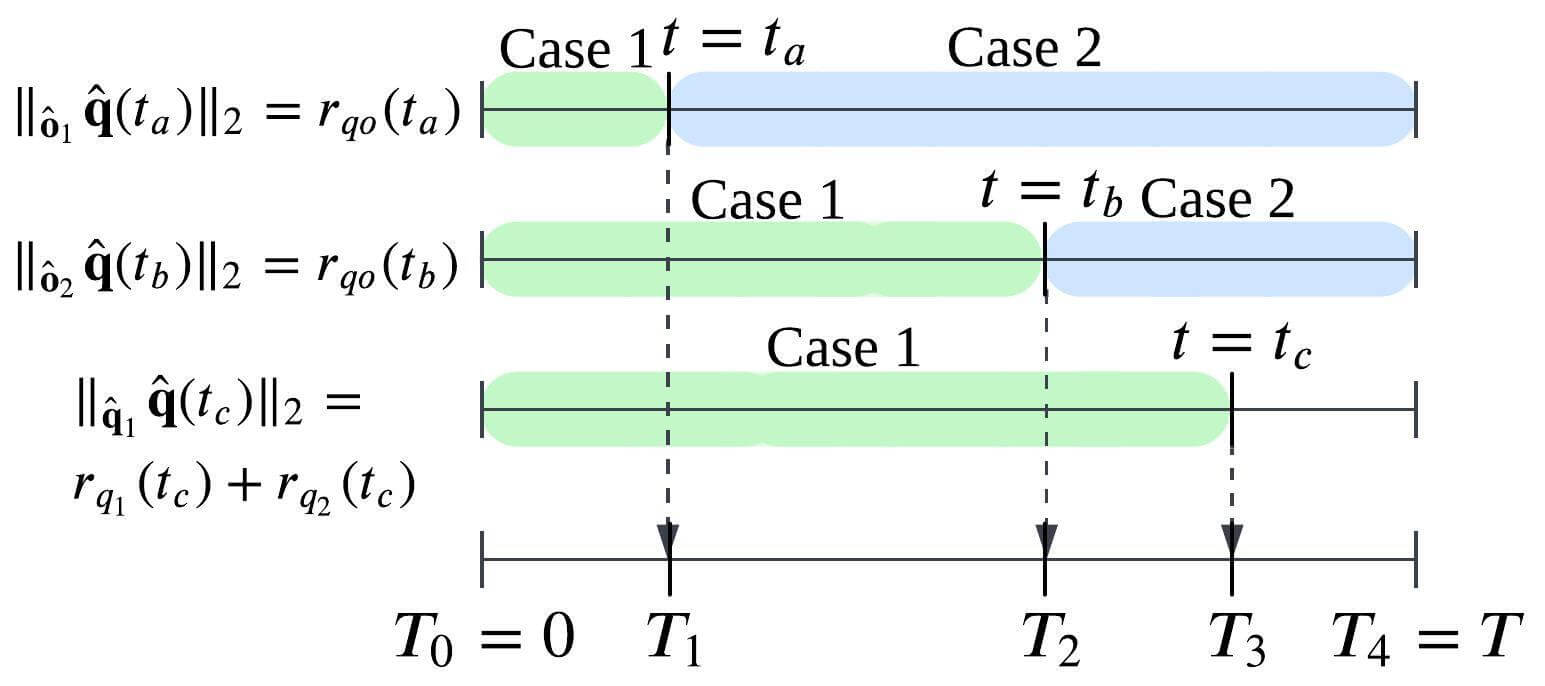

VI-E QP Formulation

VI-E1 Trajectory segmentation

The constraint (21) is divided into the cases: when the reachable sets are separated and when they overlap. To apply (21) according to the situation, the roots of two equations and should be investigated for . We define by arranging the roots of the above equations in ascending order, and we set time intervals, as stated in (5). Fig. 8 visualizes the above process. Thanks to De Casteljau’s algorithm [29], the single Bernstein polynomial such as can be divided into Bernstein polynomials. Using an -segment-polynomial representation, we formulate a QP problem.
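The subdivision step can be sketched with De Casteljau's algorithm. The example below uses scalar control points on the normalized interval [0, 1] and assumes that the breakpoints are given in ascending order strictly inside the interval; the function names are hypothetical.

```python
def split_bernstein(c, tau):
    """Split a Bernstein polynomial at tau in (0, 1) with De Casteljau's algorithm.

    Returns the control points of the left and right pieces, each
    reparametrized to [0, 1].
    """
    left, right = [c[0]], [c[-1]]
    level = list(c)
    while len(level) > 1:
        level = [(1.0 - tau) * p + tau * q for p, q in zip(level, level[1:])]
        left.append(level[0])
        right.append(level[-1])
    right.reverse()
    return left, right

def segment(c, breaks):
    """Split at several ascending breakpoints, returning len(breaks) + 1 pieces."""
    pieces, rest, start = [], list(c), 0.0
    for tau in breaks:
        # Breakpoint expressed in the local parameter of the remaining piece.
        local = (tau - start) / (1.0 - start)
        left, rest = split_bernstein(rest, local)
        pieces.append(left)
        start = tau
    pieces.append(rest)
    return pieces
```

Each piece has the same degree as the original polynomial, so the per-segment constraints keep the same structure.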

VI-E2 Constraints

The drone’s trajectory should be dynamically feasible, (3e) and (3f), while maintaining the target visibility, (3b) and (3c), and avoiding collision, (3d). To construct the QP problem, we formulate the constraints to be affine with respect to . By leveraging the properties of Bernstein polynomials, which include P1) the first and last coefficients corresponding to the endpoints and P2) the convex hull property, we can effectively formulate dynamic constraints. Also, the -th derivative of an -th order Bernstein polynomial is an -th order polynomial. The coefficients of the -th derivative of a polynomial are obtained by multiplying the coefficients of the original polynomial by the following matrix.

| (35) | ||||
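The derivative relation can be sketched with a finite-difference matrix acting on the control points. This is an illustration that assumes a polynomial defined on an interval of length `duration`; the matrix layout may differ from (35) by a transpose, and the function names are hypothetical.

```python
def derivative_matrix(n, duration):
    """n x (n + 1) matrix that maps degree-n control points to the control
    points of the first derivative on an interval of length `duration`."""
    scale = n / duration
    D = [[0.0] * (n + 1) for _ in range(n)]
    for i in range(n):
        D[i][i] = -scale
        D[i][i + 1] = scale
    return D

def differentiate(c, duration, order=1):
    """Control points of the `order`-th derivative of a Bernstein polynomial."""
    d = list(c)
    for _ in range(order):
        n = len(d) - 1
        D = derivative_matrix(n, duration)
        d = [sum(D[i][j] * d[j] for j in range(n + 1)) for i in range(n)]
    return d
```

By the endpoint property, the first entry of `differentiate(c, duration)` is the initial velocity, and by the convex hull property, bounding every entry bounds the velocity over the whole interval.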

First, the trajectory is made to satisfy the initial condition, (3e): current position and velocity. Using P1), we can get

| (36a) | |||

| (36b) | |||

where is a one-hot vector whose -th element is 1 and whose other elements are 0. Second, by using P1), the continuity between consecutive segments is achieved by the following constraints.

| (37) | ||||

Third, by using P2), the constraints of velocity and acceleration limits, (3f), are formulated as follows.

| (38a) | |||

| (38b) | |||

Since can be acquired by flattening , the dynamic constraints (36)-(38) are affine with respect to . Then, the constraints in (28) are applied to ensure the target visibility and the drone’s safety.

VI-E3 Costs

As stated in (3), the cost function is divided into two terms: and . First, penalizes the jerkiness of the drone’s path.

| (39) | ||||
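As an illustration of why such a cost is quadratic in the control points, the sketch below evaluates the integral of the squared third derivative for one scalar segment in closed form. It assumes that the jerk cost is this integral, since the exact form of (39) is not reproduced here, and the function name is hypothetical. It uses the identity that the integral of the product of two degree-m Bernstein basis functions over [0, 1] equals C(m, i) C(m, j) / ((2m + 1) C(2m, i + j)).

```python
from math import comb

def jerk_cost(c, duration):
    """Integral of the squared third derivative of a Bernstein polynomial with
    control points c, defined on an interval of length `duration`."""
    d = list(c)
    for _ in range(3):  # three successive finite differences of control points
        n = len(d) - 1
        d = [n * (d[i + 1] - d[i]) / duration for i in range(n)]
    m = len(d) - 1
    total = 0.0
    for i, di in enumerate(d):
        for j, dj in enumerate(d):
            gram = comb(m, i) * comb(m, j) / ((2 * m + 1) * comb(2 * m, i + j))
            total += di * dj * gram
    return duration * total
```

Because the differenced control points are linear in `c` and the Gram matrix of the Bernstein basis is positive semi-definite, the cost is a convex quadratic form in `c`.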

Second, minimizes the tracking error with respect to the designed reference trajectory .

| (40) | ||||

Similarly to the constraint formulation, the cost, , can be expressed with c and becomes quadratic in c.

Since all the constraints are affine, the cost is quadratic with respect to , and Hessian matrices of the cost, , , are positive semi-definite, Chasing problem (3) can be formulated as a convex QP problem.

| (41) | ||||||

| s.t. |

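| Computation time [ms] | | | # polynomial segment | | | | | # polynomial segment | | | | | # polynomial segment | | | | |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| | | | 1 | 2 | 3 | 4 | 5 | 1 | 2 | 3 | 4 | 5 | 1 | 2 | 3 | 4 | 5 |
| Single | # obstacle | 1 | | | | | | | | | | | | | | | |
| | | 2 | | | | | | | | | | | | | | | |
| | | 3 | | | | | | | | | | | | | | | |
| | | 4 | | | | | | | | | | | | | | | |
| Dual | # obstacle | 1 | | | | | | | | | | | | | | | |
| | | 2 | | | | | | | | | | | | | | | |
| | | 3 | | | | | | | | | | | | | | | |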

VI-F Evaluation

VI-F1 Computation time

We set as 4, 5, 6 and test 5000 times for different scenarios . qpOASES [30] is utilized as a QP solver, and the execution time to solve the QP problem is summarized in Table IV. As shown in the table, the computation time increases as the number of segments and obstacles increases. To satisfy real-time criteria (10 Hz) even as increases, we reduce by defining furthest primitives as outliers, removing them from , and recalculating . We determine the number of outliers with a factor . The effect of is visualized in Fig. 4(d), and it can lower the number of segments . With this strategy, real-time planning is possible even in complex and dense environments.

VI-F2 Benchmark test

We now demonstrate the proposed trajectory planner’s capability to maintain the target visibility against dynamic obstacles. The advantages of the proposed planner are analyzed by comparing it with non-convex optimization-based planners: [3], [5], and [13]. We consider a scenario where the target is static and a dynamic obstacle cuts in between the target and the drone. The total duration of the scenario is 10 seconds, and the position and velocity of the obstacle are updated every 20 milliseconds. We set as 5 and the planning horizon as 1.5 seconds for all planners. Fig. 9 visualizes the history of the positions of the target, the drone, and the obstacles, and Table V summarizes the performance indices related to jerkiness, collisions, and occlusions.

All other planners fail to avoid occlusion and collision because constraint violations can occur to some degree in non-convex optimization. In contrast, our planner considers future obstacle movements and successfully finds trajectories by treating the drone’s safety and the target visibility as hard constraints in a single QP. In addition, the computation efficiency of our method is an order of magnitude greater than other methods.

We also compare our planner with a motion-primitive-based planner [16] that does not account for the path prediction error. Fig. 10 illustrates a situation where occlusion may occur, highlighting that the success of planning can depend on whether the prediction error is considered. Even if the results of the planning method that does not consider the error are updated quickly, occlusion can still occur if the error accumulates. In contrast, our planner generates relatively large movements, which are suitable for maintaining the target visibility when obstacles approach.

VI-F3 Critical analysis

We now study harsh situations in which the planner can fail to maintain the target visibility. The optimization can become infeasible for two reasons: 1) conflicts between the target visibility constraints and the actuator limit constraints and 2) incompatibility among the target visibility constraints. As an example of the first, when a dynamic obstacle cuts in between the target and the drone at high speed, the large variation in the TVR over time conflicts with the actuator limits. As an example of the second, two dynamic obstacles that cut in simultaneously from opposite directions may cause conflicts between the target visibility constraints, because they force the drone to move in opposite directions. In addition, target occlusion inevitably occurs when the target and obstacles align. In these cases, we formulate the optimization problem with all constraints except the target visibility constraint. While occlusion may occur, drone safety is the top priority, and planning that considers the target visibility resumes once conditions return to normal.

VII Chasing Scenario Validation

In this section, the presented method is validated through realistic simulators and real-world experiments.

VII-A Implementation Details

We perform AirSim [31] simulations for a benchmark test of our method with [16] and [17]. In real-world experiments, we install two ZED2 cameras facing opposite directions. The front-view camera is used for object detection and tracking, while the rear-view camera is used for the localization of the robot. Since the rear-view camera does not capture multiple moving objects in the camera image, the localization system is free from visual interference caused by dynamic objects, thereby preventing degradation in localization performance. Additionally, we utilize 3D human pose estimation to determine the positions of the humans. The humans in experiments are distinguished by color as described in [17]. By using depth information and an algorithm from [32], we build a static map. To distribute the computational burden, we use the Jetson Xavier NX to process data from the ZED2 cameras and the Intel NUC to build maps and run the QP-Chaser algorithm. To manage the payload on the drone, we utilize a coaxial octocopter and deploy Pixhawk4 as the flight controller. For consistent acquisition of positional and scale data of the target and the interrupter, the drone looks between them, and it adjusts its altitude to the height of the target. The parameters used in validations are summarized in Table VI. Since the pipeline is updated at short intervals, the time horizon is set to 1.5 seconds, which is relatively short. The desired distance, , is set to 4 meters for the ZED2 camera setup to ensure a visually pleasing capture of the target. Additionally, we set the screen ratio, , to 1 to pursue the rule of thirds in cinematography.

VII-B Evaluation Metrics

| Name | Single Target | Dual Target |
| --- | --- | --- |
| Scenario Settings | | |
| drone radius () [m] | 0.4 | 0.4 |
| camera FOV | 120 | 120 |
| Prediction Parameters | | |
| time horizon () [s] | 1.5 | 1.5 |
| sampled points () | 2000 | 2000 |
| Planning Parameters | | |
| polynomial degree | 6 | 6 |
| max velocity () [m/s] | 4.0 | 4.0 |
| max acceleration () [m/s²] | 5.0 | 5.0 |
| shooting distance () [m] | | |
| screen ratio () | 1.0 | |
| tracking weight () | 10.0 | 10.0 |
| jerk weight () | 0.01 | 0.01 |

In the validations, we evaluate the performance of the proposed planner using the following metrics: drone safety and target visibility. We measure the distances between the drone and targets, the minimum distance between the drone and obstacles, and the visibility score defined as follows:

| (42a) | ||||

| (42b) | ||||

| (42c) | ||||

Also, we assess the tracking performance in the image plane. First, we measure multi-object tracking accuracy (MOTA) and IDF1 to evaluate tracking accuracy. Then, we measure the visibility proportion, which is defined as follows:

| (43) |

where and represent the bounding boxes of the targets and obstacles in the camera image, respectively. The score means the proportion of the unoccluded part of the targets’ bounding box. In the dual-target chasing scenarios, one target can be an obstacle occluding the other target, and the above metrics are measured accordingly. Throughout all validations, we use YOLO11 [33] for the object tracking.
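The visibility proportion can be computed from axis-aligned bounding boxes as sketched below. This example assumes boxes given as `(x_min, y_min, x_max, y_max)` in pixels and treats every overlap with an obstacle box as occlusion, without any depth ordering; it handles a single target box, and the function names are hypothetical.

```python
def clip(box, frame):
    """Intersection of two boxes, or None if they do not overlap."""
    x0, y0 = max(box[0], frame[0]), max(box[1], frame[1])
    x1, y1 = min(box[2], frame[2]), min(box[3], frame[3])
    return (x0, y0, x1, y1) if x0 < x1 and y0 < y1 else None

def union_area(boxes):
    """Area of the union of boxes, computed by coordinate compression."""
    xs = sorted({x for b in boxes for x in (b[0], b[2])})
    ys = sorted({y for b in boxes for y in (b[1], b[3])})
    area = 0.0
    for x0, x1 in zip(xs, xs[1:]):
        for y0, y1 in zip(ys, ys[1:]):
            # A grid cell counts once if any box covers it entirely.
            if any(b[0] <= x0 and x1 <= b[2] and b[1] <= y0 and y1 <= b[3]
                   for b in boxes):
                area += (x1 - x0) * (y1 - y0)
    return area

def visibility_proportion(target, obstacles):
    """Fraction of the target box that no obstacle box covers."""
    t_area = (target[2] - target[0]) * (target[3] - target[1])
    clipped = [c for c in (clip(o, target) for o in obstacles) if c is not None]
    return 1.0 - union_area(clipped) / t_area
```

For a target box `(0, 0, 10, 10)` and one obstacle box `(5, 0, 15, 10)`, the function returns 0.5; overlapping obstacle boxes are counted only once because the union area is used.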

| Metrics | Planner | Single Target, Scenario 1 | Single Target, Scenario 2 | Dual Target, Scenario 3 | Dual Target, Scenario 4 |
| --- | --- | --- | --- | --- | --- |
| Target Distance (42a) [m] | proposed | 1.595/3.726 | 0.995/2.562 | 1.213/2.204 | 1.505/3.135 |
| | baseline | 1.198/3.298 | -0.131/3.280 | 1.060/3.610 | 0.890/2.611 |
| Obstacle Distance (42b) [m] | proposed | 0.872/4.070 | 0.714/3.320 | 0.428/1.873 | 0.315/2.847 |
| | baseline | -0.264/1.715 | -0.364/2.520 | 0.302/1.901 | 0.059/3.207 |
| Visibility Score (42c) [m] | proposed | 0.687/2.369 | 0.028/2.529 | 0.193/1.187 | 0.151/1.975 |
| | baseline | -0.397/1.183 | -0.379/1.649 | -0.260/0.834 | -0.214/2.103 |
| MOTA | proposed | 0.987 | 0.917 | 0.913 | 0.934 |
| | baseline | 0.939 | 0.808 | 0.882 | 0.879 |
| IDF1 | proposed | 0.994 | 0.990 | 0.997 | 0.997 |
| | baseline | 0.969 | 0.894 | 0.974 | 0.972 |
| Visibility Proportion (43) | proposed | 1.0/1.0 | 0.523/0.996 | 0.726/0.998 | 0.951/0.999 |
| | baseline | 0.0/0.954 | 0.0/0.926 | 0.0/0.942 | 0.0/0.951 |
| Computation Time [ms] | proposed | 13.64 | 15.41 | 20.53 | 21.22 |
| | baseline | 29.45 | 32.12 | 79.61 | 81.37 |

The upper and lower data in each metric represent the reported metrics of the proposed planner and the baselines (single target: [16], dual target: [17]), respectively. The values for the first, second, third, and sixth metrics indicate the (minimum/mean) performance. means that a value below 0 indicates a collision or occlusion. means that higher is better.

VII-C Simulations

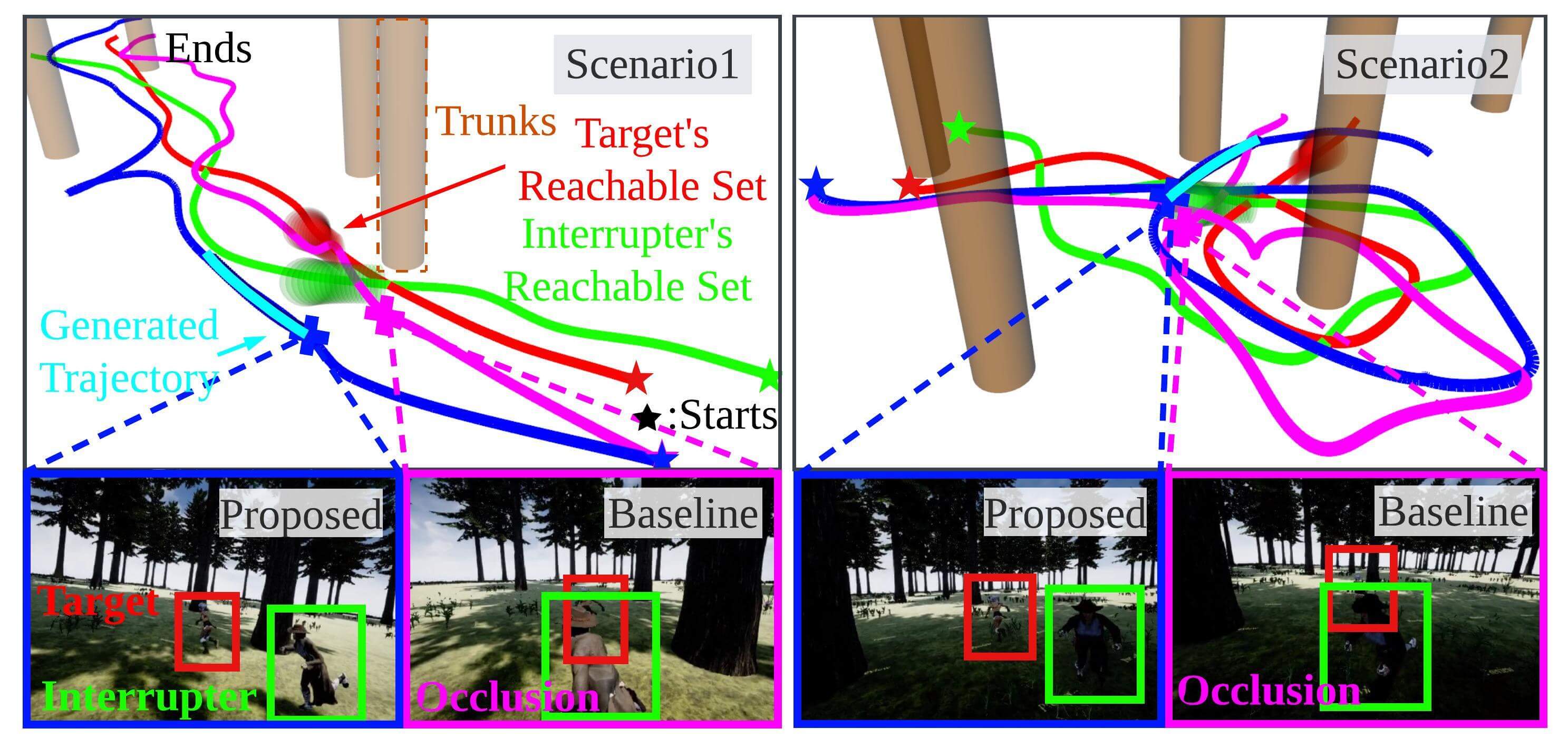

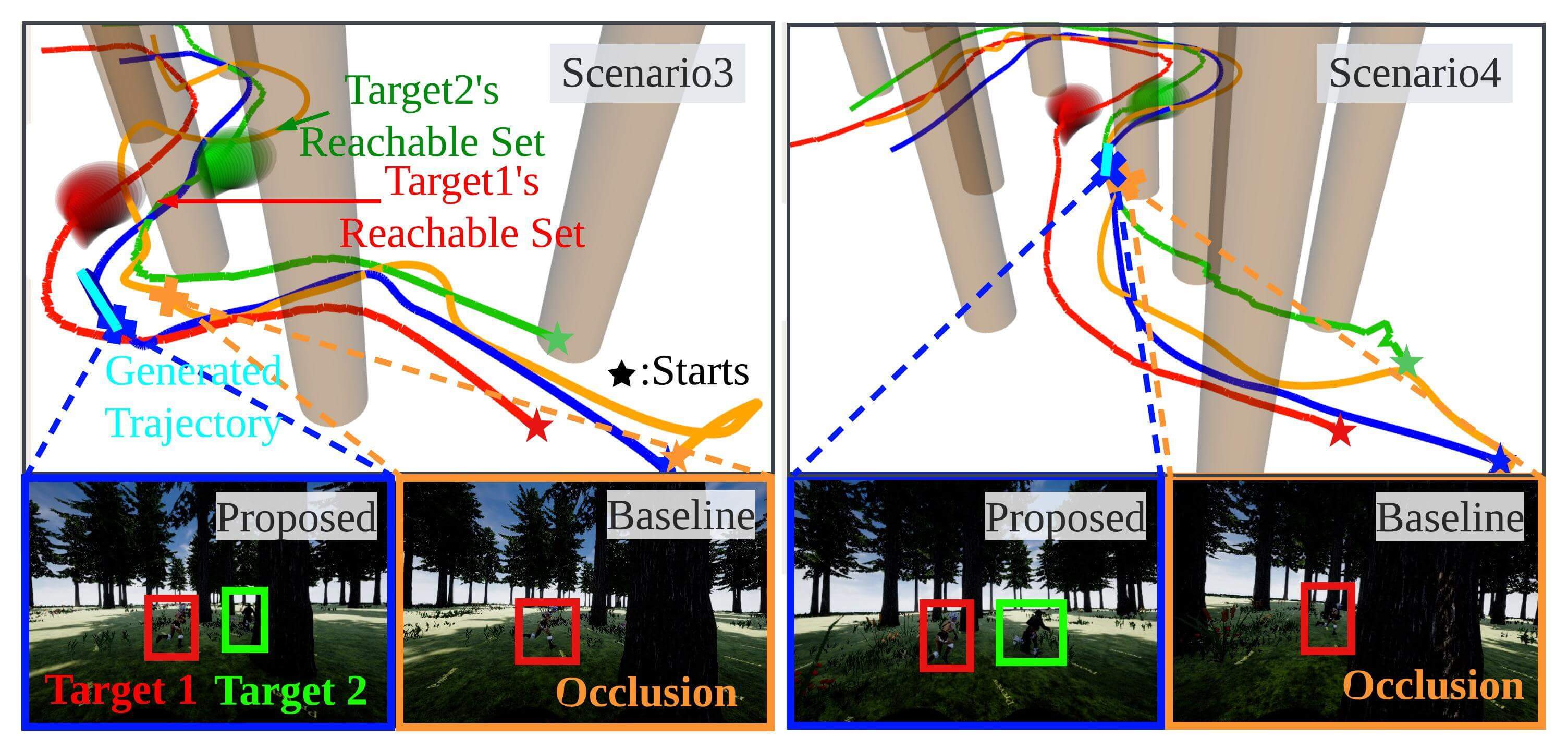

We test the proposed planner in scenarios where two actors run in the forest. To show the robust performance of the planner, we consider the following four scenarios. In the first two scenarios, one actor is the target and the other is the interrupter. In the remaining two scenarios, both actors are targets.

- Scenario 1: The interrupter runs around the target, ceaselessly disrupting the shooting.

- Scenario 2: The interrupter intermittently cuts in between the target and the drone.

- Scenario 3: The two targets run while maintaining a short distance between them.

- Scenario 4: The two targets run while varying the distance between them.

From the many flight tests, we report several flights that last 30 to 40 seconds each. We compare the proposed planner with state-of-the-art planners: [16] in single-target chasing scenarios and [17] in dual-target chasing scenarios. Figs. 11 and 12 show the comparative results with key snapshots, and Table VII shows that the proposed approach makes the drone follow the target safely while maintaining the target visibility, whereas the baselines cause collisions and occlusions. In rare cases, partial occlusion occurs with our planner due to the limitations of the PD controller provided by the AirSim simulator. However, in the same situations, full occlusions occur with the baselines.

In single-target scenarios, [16] fails because it alters the chasing path topology when obstacles are close to the drone. In dual-target scenarios, [17] calculates good viewpoints considering the visibility score (42c) and camera FOV, but the path between these viewpoints does not guarantee the target visibility. In contrast, our planner succeeds in chasing because we consider these factors as hard constraints. Moreover, despite the heavy computational demands of the physics engine, the planning pipeline is executed within 25 milliseconds, which is faster than the baselines.

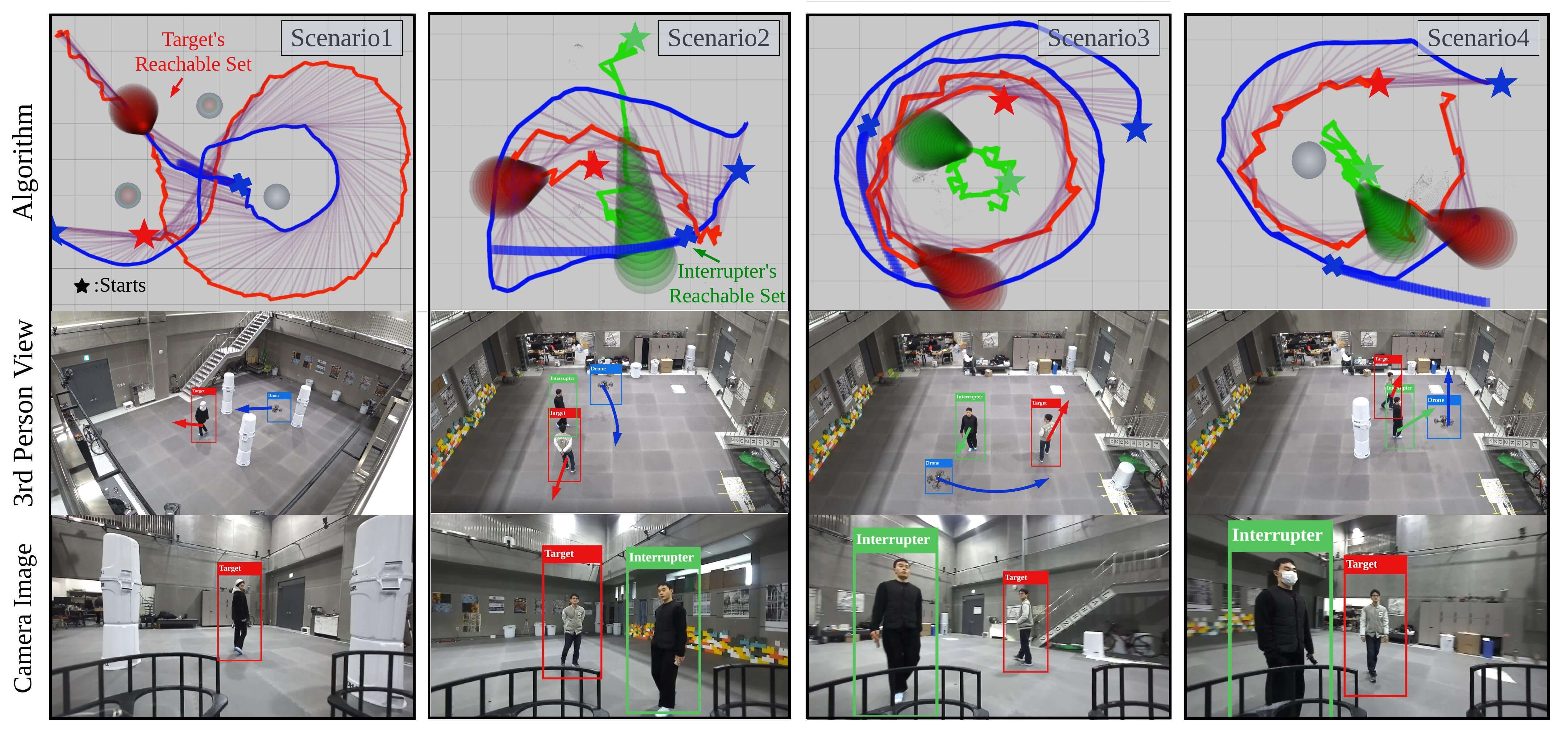

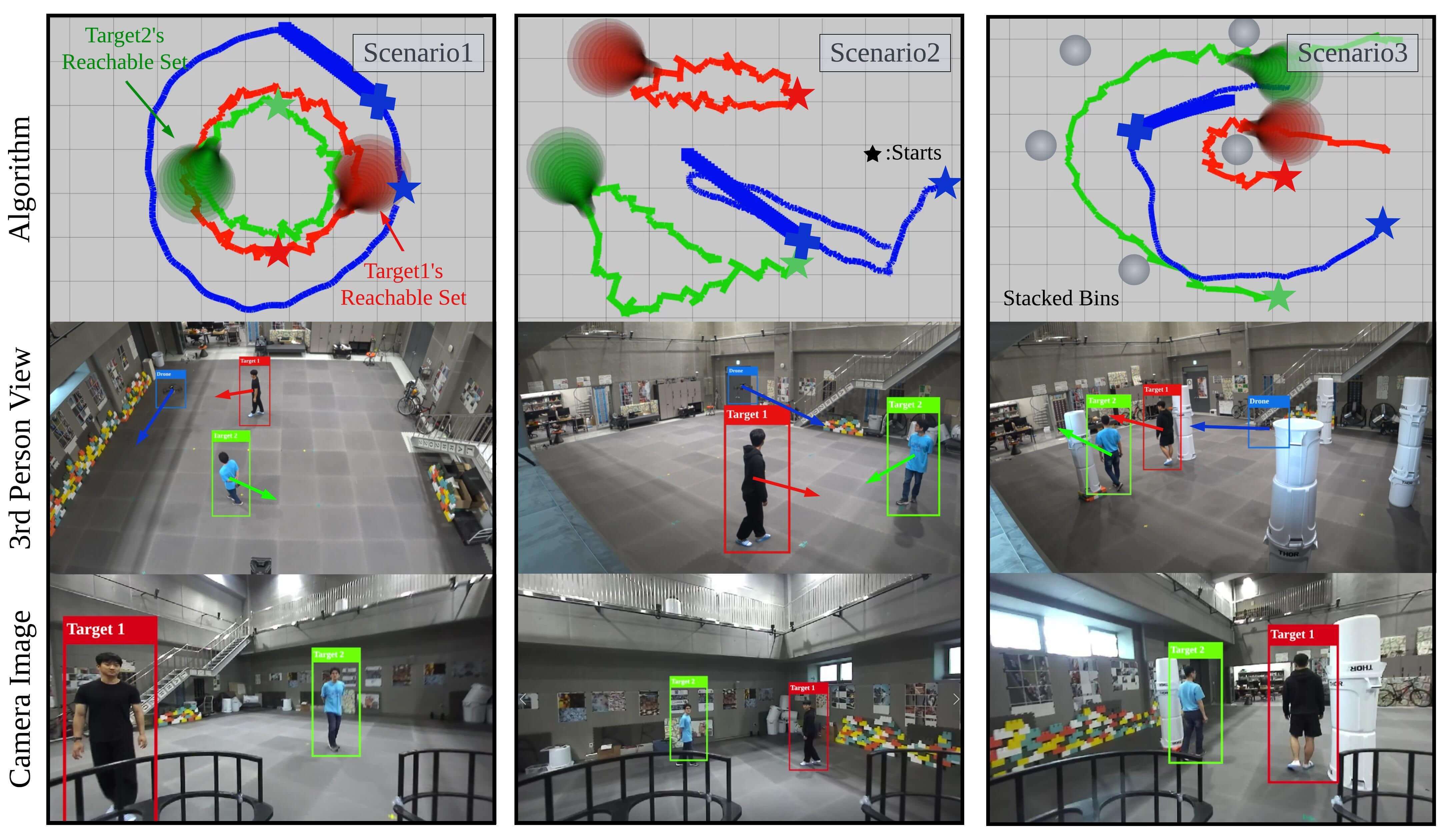

VII-D Experiments

To validate our chasing strategy, we conduct real-world experiments with several single- and dual-target tracking scenarios.

Two actors move in an [m2] indoor space with stacked bins. As in the simulations, one actor is the target, and the other is the interrupter in single-target scenarios, while the two actors are both targets in dual-target scenarios.

For single-target chasing, we consider the following scenarios.

- Scenario S1: The drone senses the bins as static obstacles and follows the target.

- Scenario S2: The target moves away from the drone, and the interrupter cuts in between the target and the drone.

- Scenario S3: Two actors move in a circular pattern, causing the target to move away from the drone, while the interrupter consistently disrupts the visibility of the target.

- Scenario S4: The interrupter intentionally hides behind bins and appears abruptly to obstruct the target.

For dual-target chasing, we consider the following scenarios.

- Scenario D1: The targets move in a circular pattern. To capture both targets in the camera view, the drone also moves in circles.

- Scenario D2: The targets move while repeatedly increasing and reducing their relative distance. The drone adjusts its distance from the targets to keep them within the image.

- Scenario D3: The drone detects the bins and follows the targets among them.

Figs. 13 and 14 show the histories with key snapshots of the single- and dual-target experiments, respectively. The planner generates chasing trajectories in response to the targets’ diverse movements and obstacles’ interference. Table VIII confirms that the drone successfully followed the targets without collision and occlusion.

| Metrics | Single Target, Scenario S1 | Single Target, Scenario S2 | Single Target, Scenario S3 | Single Target, Scenario S4 | Dual Target, Scenario D1 | Dual Target, Scenario D2 | Dual Target, Scenario D3 |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Target Distance (42a) [m] () | 1.668/2.303 | 1.301/2.153 | 1.793/2.382 | 0.805/1.739 | 1.336/2.037 | 1.061/3.349 | 1.567/2.358 |
| Obstacle Distance (42b) [m] () | 0.534/2.289 | 0.701/2.285 | 1.405/2.109 | 0.545/1.862 | 2.316/2.877 | 1.094/3.277 | 0.209/1.889 |
| Visibility Score (42c) [m] () | 0.393/2.111 | 1.020/1.678 | 1.302/1.831 | 0.765/1.461 | 2.403/2.809 | 1.397/3.099 | 0.534/2.289 |
| MOTA () | 0.998 | 0.990 | 0.987 | 0.990 | 0.991 | 0.844 | 0.990 |
| IDF1 () | 0.999 | 0.995 | 0.995 | 0.993 | 0.996 | 0.990 | 1.0 |
| Visibility Proportion (43) () | 1.0/1.0 | 1.0/1.0 | 1.0/1.0 | 1.0/1.0 | 1.0/1.0 | 1.0/1.0 | 0.901/0.995 |
| Computation Time [ms] | 12.61 | 14.63 | 16.12 | 15.32 | 14.61 | 13.94 | 20.81 |

The values for the first, second, third, and sixth metrics indicate the minimum/mean performance. means that a value below 0 indicates a collision or occlusion. means that higher is better.

We use compressed RGB and depth images in the pipeline to record the camera images in limited onboard computer storage and to transfer images between computers. Since the compressed data is acquired at a slow rate, information about moving objects is updated slowly. Therefore, trajectories of moving objects drawn in Figs. 13 and 14 seem jerky. Nevertheless, the drone keeps the target visible and ensures its own safety by quickly updating the chasing trajectory.

VII-E Adaptability and Scalability

As shown in the validations, our planner can be used in environments such as forests or crowds, where obstacles can be modeled as cylinders. Extension to environments with ellipsoidal objects is manageable, but a QP-based approach that can handle unstructured obstacles requires further study. Extension to multi-target chasing is also feasible: as in dual-target missions, occlusion avoidance and FOV constraints can be applied to all pairs of targets. However, a reference trajectory that keeps high visibility of all targets needs further study.

VII-F Practicality

From a practical perspective, the proposed pipeline can be used in various applications, including the following:

- Surveillance: tracking a suspicious person in crowds

- Search and rescue: providing a missing person’s location in the forest until the rescue team arrives

- Cinematography: producing smooth tracking shots of the main character in scenes with many background actors

In real-world experiments, we confirmed that despite the path prediction error, the drone successfully tracked the targets by quickly updating its chasing trajectory. However, to handle greater sensor noise and more severe unexpected target behaviors, not only is a fast planning algorithm required, but also greater maneuverability of the drone. Two cameras used for stable localization and two onboard computers to meet the pipeline’s real-time constraints significantly increase the overall weight of our system. Reducing the drone’s weight will be beneficial for its agility.

VIII Conclusion

We propose a real-time target-chasing planner among static and dynamic obstacles. First, we calculate the reachable sets of moving objects in obstacle environments using Bernstein polynomial primitives. Then, to prevent target occlusion, we define a continuous-time target-visible region (TVR) based on path homotopy while considering the camera field-of-view limit. The reference trajectory for target tracking is designed and utilized with TVR to formulate trajectory optimization as a single QP problem. The proposed QP formulation can generate dynamically feasible, collision-free, and occlusion-free chasing trajectories in real-time. We extensively demonstrate the effectiveness of the proposed planner through challenging scenarios, including realistic simulations and indoor experiments. In the future, we plan to extend our work to chase multiple targets in environments with moving unstructured obstacles.

Appendix A Implementation of Collision Check

The collision between the trajectories of the moving object and the -th obstacle in (12) can be determined using coefficients of Bernstein polynomials.

| (44) | ||||

| where | ||||

represents -th elements of a vector, and is the Bernstein coefficient representing the radius of the -th obstacle. The condition makes the moving objects not collide with obstacles during .

Appendix B Proof of Proposition 1

Appendix C TVR Formulation

We derive TVR-O, which is represented as (18) and (19), and TVR-F, which is represented as (24). For mathematical simplicity, we omit the time argument of the variables.

C-A TVR-O Formulation

As mentioned in Section VI-B, there are two cases for the reachable sets of the target and obstacles: they do not overlap (Case 1) or they overlap (Case 2).

C-A1 Case 1: Non-overlap

Fig. 15(a) shows the TVR-O, when the relation between the target, the obstacle, and the drone corresponds to Class O1. TVR-O is made by the tangential line, and its normal vector is represented as . TVR-O is represented as follows:

| (46) |

is perpendicular to the tangential line, and the line is rotated by from a segment connecting the centers of the reachable sets. By using rotation matrices, becomes as follows.

| (47a) | |||

| (47b) | |||

C-A2 Case 2: Overlap

Fig. 15(b) shows the TVR-O, when the reachable sets overlap. TVR-O is made by the tangential line that is perpendicular to the segment connecting the centers of the reachable sets. TVR-O is represented as follows:

| (48a) | ||||

| (48b) | ||||

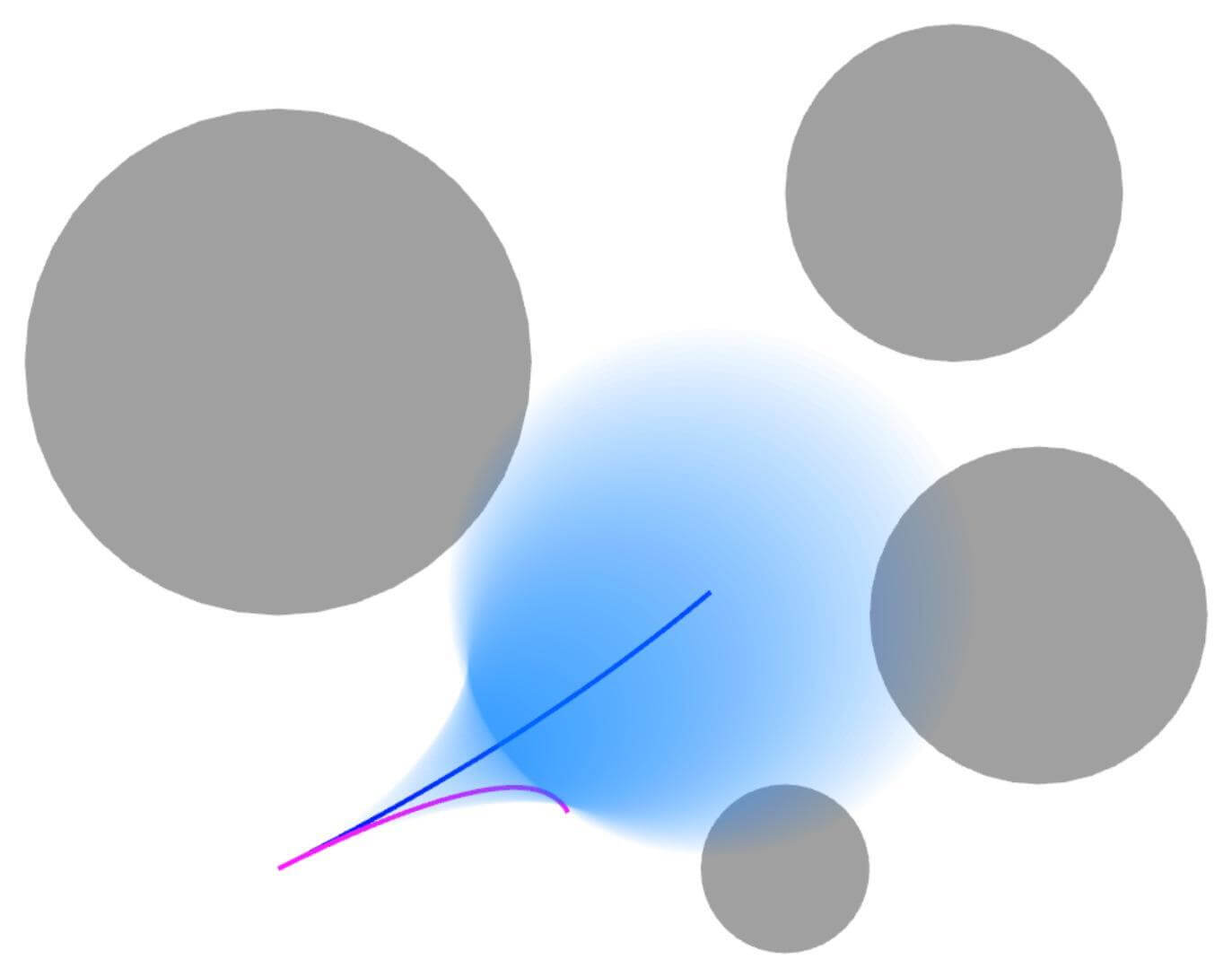

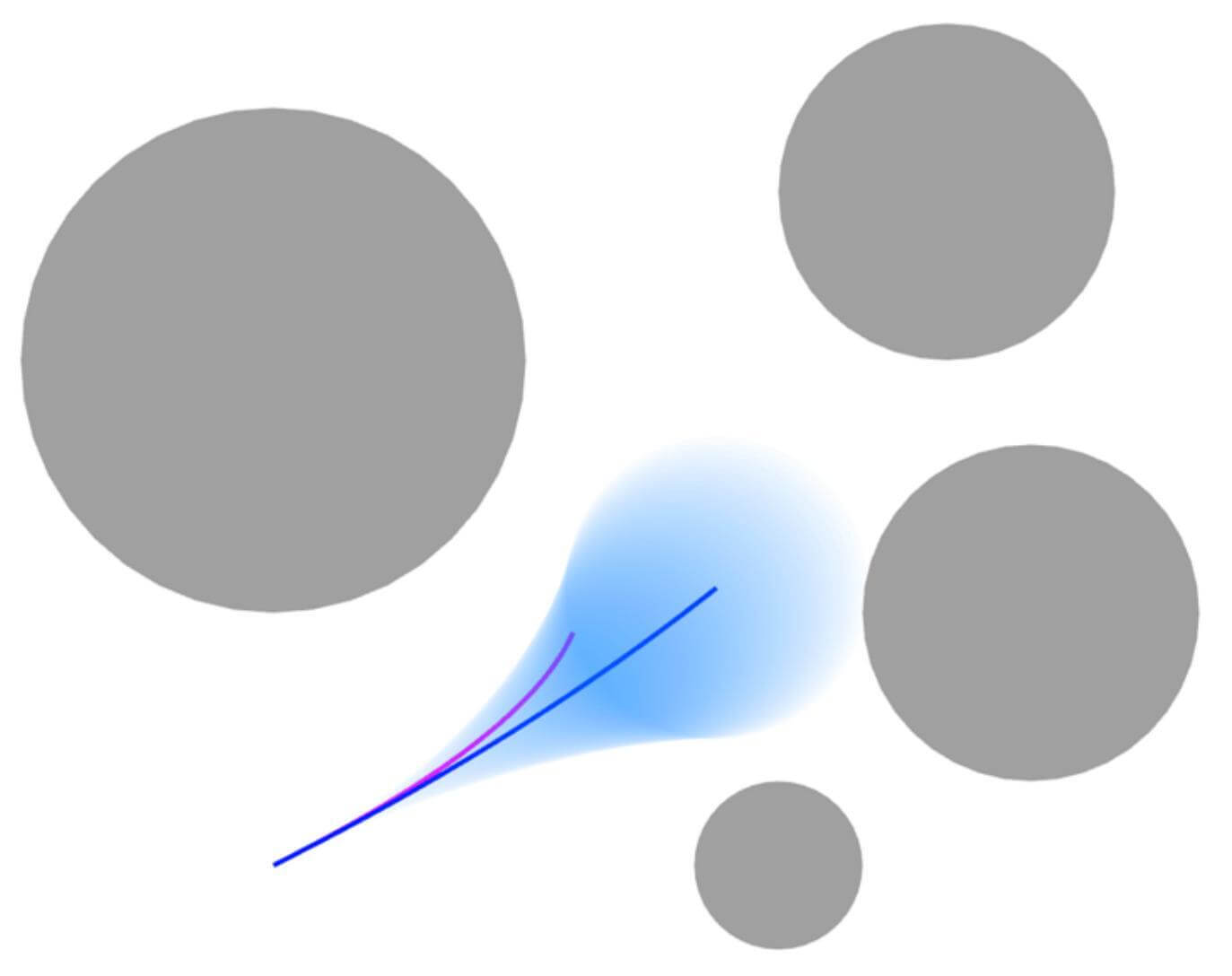

C-B TVR-F Formulation

Fig. 16 shows the TVR-F, when the relation between the targets and the drone corresponds to Class F1. The blue circle has inscribed angles of an arc tracing two points at and equals camera FOV . The position of its center, , is obtained by a relation: rotation of the segment connecting and by an angle becomes the segment connecting and .

| (49) |

The angle comes from the property of the inscribed angle. The radius of the circle, , is equal to the distance between the points at and .

| (50) | ||||

References

- [1] M. Aranda, G. López-Nicolás, C. Sagüés, and Y. Mezouar, “Formation control of mobile robots using multiple aerial cameras,” IEEE Transactions on Robotics, vol. 31, no. 4, pp. 1064–1071, 2015.

- [2] A. Alcántara, J. Capitán, R. Cunha, and A. Ollero, “Optimal trajectory planning for cinematography with multiple unmanned aerial vehicles,” Robotics and Autonomous Systems, vol. 140, p. 103778, 2021.

- [3] B. Penin, P. R. Giordano, and F. Chaumette, “Vision-based reactive planning for aggressive target tracking while avoiding collisions and occlusions,” IEEE Robotics and Automation Letters, vol. 3, no. 4, pp. 3725–3732, 2018.

- [4] H. Huang, A. V. Savkin, and W. Ni, “Online uav trajectory planning for covert video surveillance of mobile targets,” IEEE Transactions on Automation Science and Engineering, vol. 19, no. 2, pp. 735–746, 2022.

- [5] R. Bonatti, W. Wang, C. Ho, A. Ahuja, M. Gschwindt, E. Camci, E. Kayacan, S. Choudhury, and S. Scherer, “Autonomous aerial cinematography in unstructured environments with learned artistic decision‐making,” Journal of Field Robotics, vol. 37, no. 4, pp. 606 – 641, June 2020.

- [6] H. Wang, X. Zhang, Y. Liu, X. Zhang, and Y. Zhuang, “Svpto: Safe visibility-guided perception-aware trajectory optimization for aerial tracking,” IEEE Transactions on Industrial Electronics, vol. 71, no. 3, pp. 2716–2725, 2024.

- [7] J. Ji, N. Pan, C. Xu, and F. Gao, “Elastic tracker: A spatio-temporal trajectory planner for flexible aerial tracking,” ArXiv, vol. abs/2109.07111, 2021.

- [8] Z. Zhang, Y. Zhong, J. Guo, Q. Wang, C. Xu, and F. Gao, “Auto filmer: Autonomous aerial videography under human interaction,” IEEE Robotics and Automation Letters, vol. 8, no. 2, pp. 784–791, 2023.

- [9] Z. Han, R. Zhang, N. Pan, C. Xu, and F. Gao, “Fast-tracker: A robust aerial system for tracking agile target in cluttered environments,” in 2021 IEEE International Conference on Robotics and Automation (ICRA), 2021, pp. 328–334.

- [10] N. Pan, R. Zhang, T. Yang, C. Cui, C. Xu, and F. Gao, “Fast‐tracker 2.0: Improving autonomy of aerial tracking with active vision and human location regression,” IET Cyber-Systems and Robotics, vol. 3, 11 2021.

- [11] S. Cui, Y. Chen, and X. Li, “A robust and efficient uav path planning approach for tracking agile targets in complex environments,” Machines, vol. 10, no. 10, 2022. [Online]. Available: https://www.mdpi.com/2075-1702/10/10/931

- [12] B. Jeon, Y. Lee, and H. J. Kim, “Integrated motion planner for real-time aerial videography with a drone in a dense environment,” in 2020 IEEE International Conference on Robotics and Automation (ICRA), 2020, pp. 1243–1249.

- [13] T. Nägeli, J. Alonso-Mora, A. Domahidi, D. Rus, and O. Hilliges, “Real-time motion planning for aerial videography with dynamic obstacle avoidance and viewpoint optimization,” IEEE Robotics and Automation Letters, vol. 2, no. 3, pp. 1696–1703, 2017.

- [14] H. Masnavi, J. Shrestha, M. Mishra, P. B. Sujit, K. Kruusamäe, and A. K. Singh, “Visibility-aware navigation with batch projection augmented cross-entropy method over a learned occlusion cost,” IEEE Robotics and Automation Letters, vol. 7, no. 4, pp. 9366–9373, 2022.

- [15] H. Masnavi, V. K. Adajania, K. Kruusamäe, and A. K. Singh, “Real-time multi-convex model predictive control for occlusion-free target tracking with quadrotors,” IEEE Access, vol. 10, pp. 29 009–29 031, 2022.

- [16] B. F. Jeon, C. Kim, H. Shin, and H. J. Kim, “Aerial chasing of a dynamic target in complex environments,” International Journal of Control, Automation, and Systems, vol. 20, no. 6, pp. 2032–2042, 2022.

- [17] B. F. Jeon, Y. Lee, J. Choi, J. Park, and H. J. Kim, “Autonomous aerial dual-target following among obstacles,” IEEE Access, vol. 9, pp. 143 104–143 120, 2021.

- [18] P. Pueyo, J. Dendarieta, E. Montijano, A. Murillo, and M. Schwager, “Cinempc: A fully autonomous drone cinematography system incorporating zoom, focus, pose, and scene composition,” IEEE Transactions on Robotics, vol. PP, pp. 1–18, 01 2024.

- [19] N. R. Gans, G. Hu, K. Nagarajan, and W. E. Dixon, “Keeping multiple moving targets in the field of view of a mobile camera,” IEEE Transactions on Robotics, vol. 27, no. 4, pp. 822–828, 2011.

- [20] M. Zarudzki, H.-S. Shin, and C.-H. Lee, “An image based visual servoing approach for multi-target tracking using an quad-tilt rotor uav,” in 2017 International Conference on Unmanned Aircraft Systems (ICUAS), 2017, pp. 781–790.

- [21] J. Chen, T. Liu, and S. Shen, “Tracking a moving target in cluttered environments using a quadrotor,” in 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE, 2016, pp. 446–453.

- [22] S. Bhattacharya, M. Likhachev, and V. Kumar, “Topological constraints in search-based robot path planning,” Autonomous Robots, vol. 33, 10 2012.

- [23] J. R. Munkres, Topology. Upper Saddle River, NJ: Prentice Hall, 2000.

- [24] D. Mellinger and V. Kumar, “Minimum snap trajectory generation and control for quadrotors,” in 2011 IEEE International Conference on Robotics and Automation, 2011, pp. 2520–2525.

- [25] F. L. Lewis et al., Optimal and Robust Estimation: With an Introduction to Stochastic Control Theory, 2nd ed. CRC Press, 2008.

- [26] G. Collins, II, Fundamental Numerical Methods and Data Analysis, 1990.

- [27] A. Marco and J.-J. Martínez, “A fast and accurate algorithm for solving Bernstein–Vandermonde linear systems,” Linear Algebra and its Applications, vol. 422, no. 2-3, pp. 616–628, 2007.

- [28] B. F. Jeon and H. J. Kim, “Online trajectory generation of a mav for chasing a moving target in 3d dense environments,” in 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2019, pp. 1115–1121.

- [29] C. Kielas-Jensen and V. Cichella, “Bebot: Bernstein polynomial toolkit for trajectory generation,” in 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2019, pp. 3288–3293.

- [30] H. J. Ferreau, C. Kirches, A. Potschka, H. G. Bock, and M. Diehl, “qpoases: A parametric active-set algorithm for quadratic programming,” Mathematical Programming Computation, vol. 6, no. 4, pp. 327–363, 2014.

- [31] S. Shah, D. Dey, C. Lovett, and A. Kapoor, “Airsim: High-fidelity visual and physical simulation for autonomous vehicles,” in Field and Service Robotics, 2017. [Online]. Available: https://arxiv.org/abs/1705.05065

- [32] M. Przybyła, “Detection and tracking of 2d geometric obstacles from lrf data,” in 2017 11th International Workshop on Robot Motion and Control (RoMoCo). IEEE, 2017, pp. 135–141.

- [33] G. Jocher and J. Qiu, “Ultralytics yolo11,” 2024. [Online]. Available: https://github.com/ultralytics/ultralytics

![Photo of Yunwoo Lee](https://cdn.awesomepapers.org/papers/3d105d4b-7315-4283-84e3-0244fd340800/YunwooPhoto_compressed.jpg)

Yunwoo Lee received the B.S. degree in electrical and computer engineering in 2019 from Seoul National University, Seoul, South Korea, where he is currently working toward the integrated M.S./Ph.D. degree in mechanical and aerospace engineering. His current research interests include aerial tracking, vision-based planning, and control of unmanned vehicle systems.

![Photo of Jungwon Park](https://cdn.awesomepapers.org/papers/3d105d4b-7315-4283-84e3-0244fd340800/JungwonPhoto_compressed.jpg)

Jungwon Park received the B.S. degree in electrical and computer engineering in 2018, and the M.S. and Ph.D. degrees in mechanical and aerospace engineering in 2020 and 2023, respectively, all from Seoul National University, Seoul, South Korea. He is currently an Associate Professor at Seoul National University of Science and Technology (SeoulTech), Seoul, South Korea. His current research interests include path planning and task allocation for distributed multi-robot systems. His work was a finalist for the Best Paper Award in Multi-Robot Systems at ICRA 2020 and won the top prize at the 2022 KAI Aerospace Paper Award.

![Photo of Seungwoo Jung](https://cdn.awesomepapers.org/papers/3d105d4b-7315-4283-84e3-0244fd340800/SeungwooPhoto_compressed.jpg)

Seungwoo Jung received the B.S. degree in mechanical engineering and artificial intelligence in 2021 from Korea University, Seoul, South Korea. He is currently pursuing the integrated M.S./Ph.D. degree in aerospace engineering as a member of the Lab for Autonomous Robotics Research under the supervision of H. Jin Kim. His current research interests include learning-based planning and control of unmanned vehicle systems.

![Photo of Boseong Jeon](https://cdn.awesomepapers.org/papers/3d105d4b-7315-4283-84e3-0244fd340800/x1.jpg)

Boseong Jeon received the B.S. degree in mechanical engineering and the Ph.D. degree in aerospace engineering from Seoul National University, Seoul, South Korea, in 2017 and 2022, respectively. He is currently a Researcher with Samsung Research, Seoul, South Korea. His research interests include planning.

![Photo of Dahyun Oh](https://cdn.awesomepapers.org/papers/3d105d4b-7315-4283-84e3-0244fd340800/DahyunPhoto_compressed.jpg)

Dahyun Oh received the B.S. degree in mechanical engineering in 2021 from Korea University, Seoul, South Korea. He is currently working toward the M.S. degree in aerospace engineering as a member of the Lab for Autonomous Robotics Research under the supervision of H. Jin Kim. His current research interests include reinforcement learning for multi-agent systems.

![Photo of H. Jin Kim](https://cdn.awesomepapers.org/papers/3d105d4b-7315-4283-84e3-0244fd340800/HyounjinPhoto_compressed.jpg)

H. Jin Kim received the B.S. degree from the Korean Advanced Institute of Technology, Daejeon, South Korea, in 1995, and the M.S. and Ph.D. degrees from the University of California, Berkeley, Berkeley, CA, USA, in 1999 and 2001, respectively, all in mechanical engineering. From 2002 to 2004, she was a Postdoctoral Researcher with the Department of Electrical Engineering and Computer Science, University of California, Berkeley. In 2004, she joined the School of Mechanical and Aerospace Engineering, Seoul National University, Seoul, South Korea, where she is currently a Professor. Her research interests include navigation and motion planning of autonomous robotic systems.