School of Computer Science, Fudan University, China 33institutetext: Academy for Engineering and Technology, Fudan University, China 44institutetext: Fudan Zhangjiang Institute, Shanghai 55institutetext: The Hong Kong Polytechnic University, China

Pyramid Region-based Slot Attention Network for Temporal Action Proposal Generation

Abstract

It has been found that temporal action proposal generation, which aims to discover the temporal action instances within the range of the start and end frames in the untrimmed videos, can largely benefit from proper temporal and semantic context exploitation. The latest efforts were dedicated to considering the temporal context and similarity-based semantic context through self-attention modules. However, they still suffer from cluttered background information and limited contextual feature learning. In this paper, we propose a novel Pyramid Region-based Slot Attention (PRSlot) modules to address these issues. Instead of using the similarity computation, our PRSlot module directly learns the local relations in an encoder-decoder manner and generates the representation of a local region enhanced based on the attention over input features called slot. Specifically, upon the input snippet-level features, PRSlot module takes the target snippet as query, its surrounding region as key and then generates slot representations for each query-key slot by aggregating the local snippet context with a parallel pyramid strategy. Based on PRSlot modules, we present a novel Pyramid Region-based Slot Attention Network termed PRSA-Net to learn a unified visual representation with rich temporal and semantic context for better proposal generation. Extensive experiments are conducted on two widely adopted THUMOS14 and ActivityNet-1.3 benchmarks. Our PRSA-Net outperforms other state-of-the-art methods. In particular, we improve the AR@100 from the previous best 50.67% to 56.12% for proposal generation and raise the mAP under 0.5 tIoU from 51.9% to 58.7% for action detection on THUMOS14. Code is available at https://github.com/handhand123/PRSA-Net

Keywords:

Temporal Proposal Generation, Video Analysis, Temporal Action Detection1 Introduction

Temporal action detection is a popular and fundamental problem for video content understanding. This task aims to predict the precise temporal boundaries and categories of each action instance in the videos. Similar to two-stage object detection in images, most temporal action detection methods [26, 25, 45, 38] follow a two-stage strategy: temporal action proposal generation and action proposal classification. Action classification [41] has achieved convincing performance, but temporal action detection accuracy is still unsatisfactory on mainstream benchmarks. It indicates that the quality of generated proposals is the bottleneck of the final action detection performance.

Existing proposal generation approaches make sorts of efforts to exploit rich semantic information for the sake of better high-quality proposal generation. The majority of previous works [26, 25, 24] embed the temporal representation by stacked temporal convolutions. [7, 51] extend the temporal segment by a pre-defined ratio to capture contextual content, while G-TAD [45] proposes to explicitly model temporal contextual information by graph convolutional networks (GCNs). More recently, like DETR [5] in object detection, transformer based methods [38, 29] are introduced to provide long-range temporal context for proposal generation and action detection.

Despite impressive progress, these aforementioned approaches still confront two challenges that remain to be addressed: 1) limited contextual representation learning and 2) sensitivity to the complicated background. In the former case, although video snippets contain richer information than a single image such as temporal dynamical content, offering useful cues for generating proposals, much fewer efforts are spent on semantic temporal content modeling. Therefore, effectively capturing action-matter context is critical for generating proposals. In the latter case, due to the complicated background in untrimmed videos, [45] adopts a similarity-based attention mechanism to selectively extract the most relevant information and relationships. However, it is sub-optimal to exploit the dependencies only based on computing the pairwise similarity. Because there exists highly similar content for consistent frames, where the pairwise relations may introduce spurious noise such as cluttered background and inaccurate action-related content. As illustrated in Fig. 1, the action instances surrounded by the background content, such as video segments , , can hardly be discriminated due to the camera motion and progressive action transitions. It demonstrates that applying traditional pairwise similarity-based relations such as the operation dot product, Euclidean distance are insufficient to deliver complete contextual information for proposal generation.

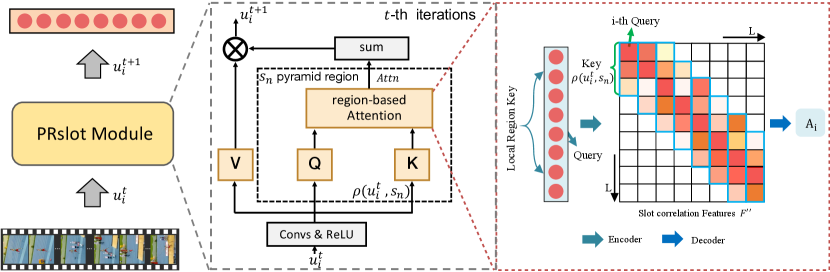

To relieve the above issues, we propose a novel Pyramid Region-based Slot Attention (PRSlot) to contextualize action-matter representation. It is building upon the recently-emerged slot attention [28], which learns the object-centric representations for image classification task. Our well-design PRSlot module takes the snippet-level features as input and maps them to a set of output vectors by aggregating local region context that we refer to as slots. First, our PRSlot module is enhanced by a Region-based Attention (RA) which directly estimates the confidence from inputs and its local region to the slots. Unlike the similarity-based attention mechanism, our RA restricts the scope of slot interactions to local surroundings and learns the semantic attention directly using an encoder-decoder architecture. In detail, an encoder is deployed to exploit the snippet-relevant feature map in the local region by zeroing out the position outside of the desired local scope, while a decoder is followed to map the correlation features into the relation score vectors directly. Second, instead of applying recurrent function to update slots over multiple iterations in original slot attention [28], our tailor-modified PRSlot module presents a parallel pyramid slot representation updating strategy with multi-scale local regions. Finally, the complementary action-matter representation generated by the PRSlot modules are used to produce boundaries scores and proposal-level confidence respectively by linear layers. Based on the above components, we present a novel Pyramid Region-based Slot Attention Network called PRSA-Net to capture abundant semantic representation for high-quality proposal generations. Experimental results show our PRSA-Net is superior to the state-of-the-art performance on two widely used benchmarks. The main contributions of this paper are therefore as follows,

-

•

A newly Region-based Attention is proposed to directly generate relation scores from the slot representations and its surroundings using encoder-decoder manner instead of similarity-based operation.

-

•

We propose a novel Pyramid Region-based Slot Attention (PRSlot) module, which incorporates a region-based attention mechanism and a parallel pyramid iteration strategy to effectively capture contextual representations for better boundaries discrimination. Based on this module, we develop a Pyramid Region-based Slot Attention Network (PRSA-Net) to exploit action-matter information for high-quality proposal generation.

-

•

We perform extensive experiments on the THUMOS14 and ActivityNet-1.3 benchmarks and evaluate the performances of temporal action proposal generation and detection. The results show that our proposed approach outperforms other state-of-the-art methods. Our ablation study also highlights the importance of both architecture components.

2 Related Work

Video Feature Extraction. Since video data can be generally treated as a sequence of ordered frames, the most straightforward approach to video feature extraction is to first generate deep features on every frame by CNN backbones with 2D convolutional filters, and then fuse them into snippet or video level features [20]. Besides the 2D filters, 3D or (2+1)D convolutional filters powered backbones [39, 6, 32] have been designed to directly extract features from multiple stacked video frames, which are more suitable for motion feature extraction. Another widely used framework to explicitly incorporate motion clues is the two-stream CNNs [36, 10], in which one stream with 2D CNN is deployed on the RGB frames, while the other stream extracts features from the optical flows. In this work, we adopt the Kinetics [21] pretrained inflated 3D network (I3D)[6] as the feature extractor. We consider multi-modal information such as RGB and optical flow information in the adopted backbone. Also, for a fair comparison, we also conduct experiments using the TSN [42], where ResNet [15] network and BN-Inception [19] network are used as the spatial and temporal network respectively.

Temporal Action Proposals and Detection. Based on the extracted video features from the feature extractor, numbers of recent approaches solve the action detection problems in two steps: (1) proposal generation; (2) proposal classification and refinement. In the first step, the proposals can be generated in a top-down fashion, which is based on preset sliding temporal windows or anchors [34, 17, 12, 7]. Or alternatively, the proposals can be generated in a bottom-up fashion, which directly predicts proposal locations based on video frames or snippets features [9, 3, 35, 47, 11]. In such way, actionness probabilities estimated at every time step can be analyzed and the potential start and end positions of the predicted action instances can be connected [51, 26, 14, 48, 24]. In the second step, each generated proposal is classified into one of the action categories, whose boundaries could be refined by regression models [34, 12].

Video Context Modeling. A most simple way to merge the features from multiple snippets is by directly applying pooling or convolution operations on the snippet features [34, 33]. Also, multi-scale pooling or convolution has been widely employed to improve the feature representations [11, 51, 46, 14]. Besides the modeling by convolutions, some recent works have attempted to utilize graph convolutional networks (GCNs) or transformer [40] for long-term relationship modeling. For example, G-TAD [45] embeds the temporal context with GCNs, while transformer based method RTDNet [38] is employed to directly generate proposals without post-process. More recently, many methods [49, 52, 31] pay attention to refine the proposals. In particular, the video features and proposals generated by other proposal generation methods, e.g., BSN[26] or BMN [25], are all processed to enhance the proposal-level representations. However, our proposal generation method only inputs video features and generates proposals by ours.

Apart from the above-mentioned ones, some other approaches may even exploit the context information at object-level [16, 43]. However, those methods are beyond the scope of this paper since we mainly focus on the snippet-level feature learning and proposal generation while not proposal refinement.

Attention Mechanism. The vanilla attention mechanism is proposed by [40] and contributes more to capturing long-range dependencies. Recently, attention mechanism has been widely applied in the computer vision field, e.g., image classification ViT [8], video detection [13], group activity recognition [23], and object detection [5]. ViT [8] is the first work to employ a pure attention mechanism for image classification. Original Slot Attention [28] is proposed to exploit object-centric representation. In our work, different from the previous attention mechanism, our proposed region-based attention is estimated directly by high-level correlation features instead of similarity operations, which can concentrate on the local context better.

3 Methodology

3.1 Problem Formulation

Assume that an arbitrary untrimmed video contains a collection of action instances , where refers to the -th action instance and correspond to its annotated start time, end time. The aim of temporal action proposal generation task is to predict a set of action proposals as close to the ground truth annotations as possible based on the content of . Here is the -th proposal confidence.

3.2 Overall Architecture

We propose a Pyramid Region-based Slot Attention Network (PRSA-Net) to generate temporal action proposals precisely. The pipeline of our PRSA-Net is illustrated in Fig. 2. PRSA-Net consists of three major components: feature extractor, PRSlot modules and dual branch proposal generation head. The original video is first fed into the feature extractor to produce the snippet-level features of size , where is the snippet length and is the feature dimension. Then, PRSlot modules are employed to enhance action-matter contextual representations. After this, a dual branch generation head maps the output embedding from the PRSlot modules to final boundaries and confidence scores respectively. The Boundary classifier is used to detect the boundaries, while the proposal regressor is utilized to evaluate the proposal-level confidence. We provide detailed descriptions of PRSA-Net below.

3.3 Feature Extractor

Given an untrimmed video , we utilize the Kinetics [21] pre-trained I3D [6] to extract video features. We consider RGB and optical flow features jointly as they can capture different motion aspects. Following the implementation of previous methods [26, 45], each frame feature is flattened into a -dimensional feature vector and then we group every consecutive frames into snippets. In this work, these generated video snippets are the minimal units for further feature modeling. For convenience, we denote the extracted snippet features as , where is the feature dimension and is the total number of video snippets. In the end, a 1d convolution is applied to transform the channels into . For fair comparisons with [26, 25, 45], we also conduct experiments based on the Kinetics pre-trained TSN [42] to extract video features.

3.4 Pyramid Region-based Slot Attention

The original slot attention module is firstly proposed for updating the object-centric representations (slots) by similarity-based attention. Slots produced by slot attention bind to any object in the input embedding and are updated via a learned recurrent function for object-centric learning. Inspired by this, we propose a tailor-modified Pyramid Region-based Slot Attention (PRSlot) module for capturing action-matter representations by a novel attention mechanism.

3.4.1 Overview.

We adopt dense slots to capture boundary-aware representation. The insight is that the motion content in boundaries context transfers rapidly and can be distinguished from the background. The slot is initialized with snippet features and then is updated by the proposed PRSlot module for Times For convenience, the -th slot representation refined times denotes as . In particular, slot features are processed through convolutional layers with stride 1, window size 3 and padding 1, followed by a ReLU activation layer, and produce the slot features shaped as . Then, based on the proposed Region-based attention mechanism (will describe below), we update our slot representations by the weighted sum of input and then apply batchnorm to the output vectors.

3.4.2 Region-based Attention Mechanism.

As shown in Fig. 3, to focus on the crucial local region relations, we design a newly region-based attention mechanism to learn the snippets relationships instead of using the similarity computation (cosine, dot product or Euclidean distance). Our region-based attention directly estimates the snippets interactions by the content of the snippet and its local surroundings. Mathematically, given the target input feature and its surrounding features denoted as , where represents the window size of its local region, we formulate the calculation of the interaction scores as

| (1) |

Here the output is defined to be the attention vector for the -th snippet (slot), and its length exactly equals , which is the temporal length of the covered surrounding . is a series of operations to map the local region features into the interaction confidence scores for the target snippet (slot). The value of -th element in , here denoted as , is expected to indicate the relation score between -th slot and the target slot . We take every input feature as query, and the local context slot of as key, our designed region-based attention operation constructs a learnable score vector, which is used to quantitatively measure the importance between the target query and key. In detail, this attention mechanism consists of an encoder to embed local contextual information and a decoder to estimate the relevant attention. Due to the permutation invariant of temporal snippets, we additionally add the position embedding to the input features. After this, the output slot representation can be described based on the attention over the feature map in the local region.

Encoder: Summarizing the surrounding slot context for the target slot is critical to augment slot representation. The encoder is utilized to exploit contextual information for the surrounding slots of the target slot. The detailed implementation is described below. Upon the input slot features (we omit the batch dimension for clarity), we repeat the temporal length in last dimension for sampling local windows, and then generate a 2D feature map . A convolutional layer is adopted to transform the -dimension channel into -dimension channel. Next, we apply the 2D convolution layer to augment the target slot using the local surrounding context shaped as . Specifically, the 2D convolutional layer is implemented for target slot feature embedding, where we denote the kernel size as , padding size and stride , indicating that we capture local context with size from each column slot. It generates the feature map , and we regard this feature map as the high-level correlation matrix. For this content-based feature map , we only consider the local contextual information for each target slot. The process can be formulated as,

| (2) |

where denotes the slot correlation features between -th slot and -th slot.

Decoder: For the sparse relation features , a decoder is deployed to map the relation features into the correspond relation scores. It is noted that we only consider the local surroundings of the target slot and operate scores mapping, which can be formulated as,

| (3) |

Here represents the decoding functions which is used to map the slots relation features into scores confidence. In practice, a 2D convolutional layer is applied to distribute the relation features into relation scores, where we set kernel size to , padding size to , out channel to and stride to . This convolutional layer is only deployed for the local slots of target slot and formulate a slot confidence vector. Finally, a operation is followed to normalize the score matrix. In this way, each interaction score is actually dependent on the content of and .

3.4.3 Iteration and update strategy.

The original slot attention updates representations via recurrent function at each iteration . However, the recurrent function (e.g.GRU) is time-consuming and achieves limited performance boost. It will be demonstrated in ablation study. In order to tackle the issues and make the PRSlot specialize in the temporal action-matter representation exploiting, we develop a parallel pyramid iteration strategy for slot representations updating. Due to the variants of video duration, we apply a variety of local regions to exploit the slot representation completely. In detail, region-based attention using different scale surroundings are deployed in parallel, where is a collection of snippet region size and denotes the carnality of the set. In the end, we fuse the slot attention by element sum-wise and aggregate the input values to their assigned slots.

3.5 Dual branch head

Next, slot embedding provided by PRSlot modules serves as the latent action-aware representations for the proposal estimation.

Boundary Classifier.

A lightweight 1d convolution layer is introduced to transform the input channels into 2 for (start, end) detection and a non-linear function is followed to form the start/end probabilities / separately.

Proposal Confidence Regressor.

Following the conventional proposal regression method [25], we use pre-defined dense anchors to generate densely distributed proposals shaped as and then apply 1DAlignLayer [45]

to extract the proposal-level features for corresponding proposal anchors shaped as .

Finally, 2 FC layers are used to predict the proposal-level completeness maps and classification map .

3.6 Training and Inference

Label Assignment.

We first generate temporal boundary label and followed by BSN [26].

Next, we generate the dense proposal label map .

As described in [25], for a proposal with start frame and end frame , we calculate the intersection over union (IoU) with all ground-truth and denote the maximum IoU as the value of .

Training.

We define the following loss function to train our proposed model

| (4) |

Here the boundary classification loss is a weighted binary logistic regression loss used to determine the starting and ending scores or . is the regularization term for network weights, we set . We also construct the proposal confidence losses which is the combination of cross-entropy loss and mean square error loss to optimize dense proposals confidence scores and . It can be formulated as,

| (5) |

where is binary logistic regression loss and is the MSE loss, usually we set the balance term .

Inference.

Based on the outputs of the boundaries classifier, we select the valid starting snippets from by two conditions:

(1) ;

(2) .

We apply the same rule for recording ending snippets.

Then, we combine these valid starting and ending snippets and obtain the candidate proposals denoted as , where and proposal-level confidence .

Finally, we fuse the proposal confidence scores and output the predicted proposals , here .

Post-Processing.

The non-maximum suppression (NMS) algorithm [30] is adopted to suppress redundant proposals with high overlaps.

Also, we use the Soft-NMS [2] algorithm to suppress the proposals and report the performance for a fair comparison with previous methods.

4 Experiments

4.1 Datasets and Evaluation Metrics

THUMOS14 [18]. THUMOS14 dataset contains respectively 200 videos in the validation for training and 213 videos in the test set for inference.

It has a total number of 20 classes, and each video has around 15 action instances on average.

ActivityNet-1.3 [4].

ActivityNet-1.3 dataset contains 19994 untrimmed videos labeled in a wider range of 200 action categories with a lower 1.5 action instances per video on average.

These videos are split into the training, validation, and test set by the ratio of 2:1:1.

We evaluate on the validation set of ActivityNet-1.3.

Evaluation metric.

To assess the performances of action proposal generation, we report the average recall (AR) under different intersections over union thresholds (tIoUs) with various average number of proposals (AN) for each video.

Following the conventions, we adopt the tIoUs of on THUMOS14 and on ActivityNet-1.3.

To evaluate the quality of our generated proposals, we also evaluate the performances of action detection using the mean average precision (mAP) at different tIoUs.

Following the official evaluation API, the tIoUs of are used for THUMOS14, and are used for ActivityNet-1.3.

4.2 Experimental Setup

Following the conventional setting, we extracted the 2048-dimensional video features by two-stream I3D [6] pre-trained on Kinetics [21] on THUMOS14. Besides, for a fair comparison, we also conduct experiments based on the two-stream network TSN [42] backbone, where ResNet network [15] and BN-Inception network [19] are applied as the spatial and temporal network respectively. We also set . On ActivityNet-1.3, the video features were extracted by the pre-trained model provided in [44, 4]. In data preparation, we set the snippet interval to 4 on THUMOS14 and 16 on ActivityNet-1.3. Then we cropped each video feature sequence with the overlapped windows of stride of and length on THUMOS14. While on ActivityNet-1.3, we set the temporal length to by linear interpolation. Also, we enumerate all possible anchors where the max durations is on THUMOS14 and on ActivityNet-1.3. For the PRSlot module, we set the embed dimension , , and the local region scale . In post-processing, we set the NMS threshold to 0.65 and 0.45 on THUMOS14 and ActivityNet-1.3 respectively. During the training, it was optimized on one NVIDIA TESLA V100 GPU with batchsize 16 on ActivityNet1.3 and 8 on THUMOS14. We adopted the Adam optimizer [22] for network optimization. For THUMOS14, the models were tuned for 10 epochs with the learning rate set to . For ActivityNet-1.3, we trained our models by setting the learning rate to in the first 7 epochs and decaying it to in the last 3 epochs.

| Method | Backbone | @50 | @100 | @200 | @500 |

| MGG [27] | TSN | 39.93 | 47.75 | 54.65 | 61.36 |

| BSN [26] + SNMS | TSN | 37.46 | 46.06 | 53.21 | 60.64 |

| BMN [25] + SNMS | TSN | 39.36 | 47.72 | 54.70 | 62.07 |

| BC-GNN [1] + NMS | TSN | 41.15 | 50.35 | 56.23 | 61.45 |

| BU-TAL [50] | I3D | 44.23 | 50.67 | 55.74 | - |

| BSN++ [37] +SNMS | TSN | 42.44 | 49.84 | 57.61 | 65.17 |

| RTD-Net [38] | I3D | 41.52 | 49.32 | 56.41 | 62.91 |

| Ours + NMS | TSN | 47.49 | 55.14 | 60.18 | 63.53 |

| Ours + SNMS | TSN | 44.11 | 52.52 | 59.19 | 65.12 |

| Ours + NMS | I3D | 49.06 | 56.12 | 61.30 | 63.20 |

| Ours + SNMS | I3D | 45.81 | 53.13 | 59.32 | 66.32 |

4.3 Temporal Proposal Generation

4.3.1 Comparisons with State-of-the-Arts.

We compare our PRSA-Net with other state-of-the-art methods on two backbones for a fair comparison. The results on THUMOS14 are summarized in Table 1. It can be observed that our proposed PRSA-Net outperforms all of the aforementioned methods by a large margin with either NMS or Soft-NMS used in post-processing. For instance, when using TSN as feature extractor, our method respectively improve the AR@50 from 42.44% to 47.49%, AR@100 from 49.84% to 55.14%, and AR@200 from 57.61% to 60.18%. It is worth noting that our model with I3D backbone outperforms all previous methods by a large margin (+ 4.83% AR@50 and +5.45% AR@100). With the well-design PRSlot and its region-based attention, our method establishes new state of the art performance on THUMOS14. The results on ActivityNet-1.3 are displayed in Table 2. Our method shows better performances than the previous best results under both AR@100 and AUC scores. In particular, the AR@1 achieves 2.32% performance boost in this well-studied benchmark.

4.4 Ablation Studies

We conduct extensive experiments on THUMOUS14 using the I3D [42] backbone and NMS for post-processing to investigate the effectiveness and proper settings of the different components of our proposed models.

| Attention Mechanism | Iteration Strategy | AR@AN (testing set) | ||||

| SA | RA | original | ours | @50 | @100 | @200 |

| ✓ | ✓ | 35.6 | 43.1 | 50.9 | ||

| ✓ | ✓ | 43.5 | 52.3 | 56.5 | ||

| ✓ | ✓ | 42.9 | 52.8 | 55.6 | ||

| ✓ | ✓ | 49.1 | 56.1 | 61.3 | ||

| Local Region Scale | @50 | @100 | @200 | @500 |

|---|---|---|---|---|

| 2 | 47.2 | 54.3 | 59.4 | 62.9 |

| 4 | 47.8 | 55.4 | 59.9 | 63.2 |

| 8 | 48.8 | 56.0 | 60.6 | 63.0 |

| 12 | 49.0 | 56.1 | 60.5 | 62.7 |

| 2, 4 | 48.6 | 55.7 | 60.4 | 63.0 |

| 4, 8 | 49.1 | 56.1 | 61.3 | 63.2 |

| 2, 4, 8 | 49.1 | 56.1 | 60.2 | 63.2 |

| 4, 8, 12 | 48.7 | 55.8 | 61.5 | 63.4 |

| Iteration times | @50 | @100 | @200 | @500 |

|---|---|---|---|---|

| 1 | 48.4 | 55.3 | 60.1 | 61.6 |

| 2 | 49.1 | 56.1 | 61.3 | 63.2 |

| 3 | 48.9 | 55.7 | 61.9 | 62.3 |

Variants of our PRSA-Net. To measure the importance of our proposed region-based attention and parallel pyramid iteration strategy, we conduct the ablation study with the following variants. Baseline: we replace the PRSlot with the original slot attention, which includes the similarity-based attention and recurrent iteration strategy. More baseline details can be found in our Supplementary materials. We also adopt different combinations of our PRSlot architecture components. Table 3 reports the detailed proposal generation results on THUMOS14. The ✓ symbols stand for with the corresponding components or strategies. We can find that our proposed region-based attention (the last row) improves the AR by more than 6%, indicating the effectiveness of our designed PRSlot module. Additionally, our parallel pyramid iteration strategy also contributes to performance boosts. We attribute performance differences to variations in architecture design and iteration schemes.

Scale of local region.

Next, we investigate the effect of the local region size.

The results on THUMOS14 are displayed in Table 4.

The first column of the table lists the corresponding choices of in a parallel pyramid strategy.

We can find that (1) a single scale with large can perform already quite well; and

(2) the multi-scale regions of and alternatively reach the best results under different AN.

These results demonstrate the effectiveness of our parallel pyramid strategy for complementary context representation modeling.

To balance the performance and model simplicity, we implement our models with .

Sensitivity to iteration times.

We also report the results of the sensitivity of our model to the different settings for iteration times in Table 5.

We find that using iterations can reach the best results while performance slightly degrades at using times.

4.5 Qualitative Results

In Fig. 4, we visualize some action detection results generated by our PRSA-Net on THUMOS14. We can find that our model can successfully detect the action instances in these examples with precise action boundaries. For the untrimmed video with multiple action instances, the foreground actions and background scenes can be well distinguished.

4.6 Action Detection with our proposals

| Method | Backbone | Classifier | 0.7 | 0.6 | 0.5 | 0.4 | 0.3 |

| BSN [26] | TSN | UNet | 20.0 | 28.4 | 36.9 | 45.0 | 53.5 |

| MGG [27] | TSN | UNet | 21.3 | 29.5 | 37.4 | 46.8 | 53.9 |

| BMN [25] | TSN | UNet | 20.5 | 29.7 | 38.8 | 47.4 | 56.0 |

| G-TAD [45] | TSN | UNet | 23.4 | 30.8 | 40.2 | 47.6 | 54.5 |

| BU-TAL et al. [50] | I3D | UNet | 28.5 | 38.0 | 45.4 | 50.7 | 53.9 |

| BSN++ [37] | TSN | UNet | 22.8 | 31.9 | 41.3 | 49.5 | 59.9 |

| BC-GNN [1] | TSN | UNet | 23.1 | 31.2 | 40.4 | 49.1 | 57.1 |

| RTD-Net [38] | I3D | UNet | 25.0 | 36.4 | 45.1 | 53.1 | 58.5 |

| Ours | TSN | UNet | 28.8 | 39.2 | 51.1 | 58.9 | 65.4 |

| Ours | I3D | UNet | 30.9 | 44.0 | 55.0 | 64.4 | 69.1 |

| BSN [26] | TSN | PGCN | - | - | 49.1 | 57.8 | 63.6 |

| G-TAD [45] | TSN | PGCN | 22.9 | 37.6 | 51.6 | 60.4 | 66.4 |

| RTD-Net [38] | I3D | PGCN | 23.7 | 38.8 | 51.9 | 62.3 | 68.3 |

| Ours | I3D | PGCN | 28.4 | 47.3 | 58.7 | 73.2 | 76.3 |

| Method | 0.5 | 0.75 | 0.95 | Average |

|---|---|---|---|---|

| TAL-Net [7] | 38.23 | 18.30 | 1.30 | 20.22 |

| BSN [26] | 46.45 | 29.96 | 8.02 | 30.03 |

| P-GCN [49] | 48.26 | 33.16 | 3.27 | 31.11 |

| BMN [25] | 50.07 | 34.78 | 8.29 | 33.85 |

| G-TAD [45] | 50.36 | 34.60 | 9.02 | 34.09 |

| BSN++ [37] | 51.27 | 35.70 | 8.33 | 34.88 |

| BC-GNN [1] | 50.56 | 35.35 | 9.71 | 34.68 |

| RTD-Net [38] | 47.21 | 30.68 | 8.61 | 30.83 |

| Ours | 52.37 | 37.18 | 9.78 | 36.26 |

When evaluating the quality of our proposals, we follow the conventional two-stage action detection works [45, 25, 26]. Therefore, we classify the candidate proposals using external classifiers. We use the video classifier in UntrimmedNet [41] to assign the video-level action classes on THUMOS14, while on ActivityNet1.3, we adopt the video-level classification results from [44] to assign the action labels to detect the action class. Furthermore, we also introduce the proposal-level classifier P-GCN [49] to predict action labels for every candidate proposals. We evaluate the final action detection performances and make comparisons with the state-of-the-art. The results on THUMOS14 are summarized in Table 6. Compared with the other state-of-the-art methods, our approach achieves significant improvements under all tIoU settings. Especially, the mAP at the typical IoU = 0.5 was boosted from 45.4% to 55.0%, reaching a considerable improvement ratio of 21.1%. Also, when proposal-level classifier P-GCN is applied to classify our generated proposals following the same implementations in [45, 38], the performance can be boosted rapidly and achieve 58.7% [email protected]. Table 7 shows our action detection results on the validation set of ActivityNet-1.3 compared with previous works. Again, our method outperforms most other methods by a large margin in almost all cases, including the average mAPs over different tIoUs. These experiments demonstrate that the proposals generated by our PRSA-Net are able to boost the action detection performance better.

5 Conclusion

In this paper, we propose a novel Pyramid Region-based Slot Attention Network (PRSA-Net) for temporal action proposal generation. Specifically, a Pyramid Region-based Slot Attention (PRSlot) module is introduced to capture action-aware representations, which is enhanced by the proposed region-based attention and parallel pyramid iteration strategy. The experiments show the advantages of our method both in action proposals and action detection performance.

References

- [1] Bai, Y., Wang, Y., Tong, Y., Yang, Y., Liu, Q., Liu, J.: Boundary content graph neural network for temporal action proposal generation. In: European Conference on Computer Vision. pp. 121–137. Springer (2020)

- [2] Bodla, N., Singh, B., Chellappa, R., Davis, L.S.: Soft-nms – improving object detection with one line of code. In: Proceedings of the IEEE International Conference on Computer Vision (ICCV) (Oct 2017)

- [3] Buch, S., Escorcia, V., Shen, C., Ghanem, B., Niebles, J.C.: SST: Single-Stream Temporal Action Proposals. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2017)

- [4] Caba Heilbron, F., Escorcia, V., Ghanem, B., Carlos Niebles, J.: ActivityNet: A Large-scale Video Benchmark for Human Activity Understanding. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2015)

- [5] Carion, N., Massa, F., Synnaeve, G., Usunier, N., Kirillov, A., Zagoruyko, S.: End-to-end object detection with transformers. In: European Conference on Computer Vision. pp. 213–229 (2020)

- [6] Carreira, J., Zisserman, A.: Quo Vadis, Action Recognition? A New Model and the Kinetics Dataset. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2017)

- [7] Chao, Y.W., Vijayanarasimhan, S., Seybold, B., Ross, D.A., Deng, J., Sukthankar, R.: Rethinking the Faster R-CNN Architecture for Temporal Action Localization. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2018)

- [8] Dosovitskiy, A., Beyer, L., Kolesnikov, A., Weissenborn, D., Zhai, X., Unterthiner, T., Dehghani, M., Minderer, M., Heigold, G., Gelly, S., et al.: An image is worth 16x16 words: Transformers for image recognition at scale. arXiv preprint arXiv:2010.11929 (2020)

- [9] Escorcia, V., Heilbron, F.C., Niebles, J.C., Ghanem, B.: DAPs: Deep Action Proposals for Action Understanding. In: European Conference on Computer Vision (ECCV) (2016)

- [10] Feichtenhofer, C., Pinz, A., Zisserman, A.: Convolutional Two-Stream Network Fusion for Video Action Recognition. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2016)

- [11] Gao, J., Yang, Z., Chen, K., Sun, C., Nevatia, R.: TURN TAP: Temporal Unit Regression Network for Temporal Action Proposals. In: IEEE International Conference on Computer Vision (ICCV) (2017)

- [12] Gao, J., Yang, Z., Nevatia, R.: Cascaded Boundary Regression for Temporal Action Detection. In: British Machine Vision Conference (BMVC) (2017)

- [13] Girdhar, R., Carreira, J.a., Doersch, C., Zisserman, A.: Video Action Transformer Network. In: CVPR (2019)

- [14] Gong, G., Zheng, L., Mu, Y.: Scale Matters: Temporal Scale Aggregation Network for Precise Action Localization in Untrimmed Videos. In: IEEE International Conference on Multimedia and Expo (ICME) (2020)

- [15] He, K., Zhang, X., Ren, S., Sun, J.: Deep residual learning for image recognition. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (June 2016)

- [16] Heilbron, F.C., Barrios, W., Escorcia, V., Ghanem, B.: SCC: Semantic Context Cascade for Efficient Action Detection. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2017)

- [17] Heilbron, F.C., Niebles, J.C., Ghanem, B.: Fast Temporal Activity Proposals for Efficient Detection of Human Actions in Untrimmed Videos. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2016)

- [18] Idrees, H., Zamir, A.R., Jiang, Y.G., Gorban, A., Laptev, I., Sukthankar, R., Shah, M.: The THUMOS Challenge on Action Recognition for Videos “In the Wild”. Computer Vision and Image Understanding (CVIU) 155, 1–23 (2017)

- [19] Ioffe, S., Szegedy, C.: Batch normalization: Accelerating deep network training by reducing internal covariate shift. CoRR abs/1502.03167 (2015)

- [20] Karpathy, A., Toderici, G., Shetty, S., Leung, T., Sukthankar, R., Fei-Fei, L.: Large-Scale Video Classification with Convolutional Neural Networks. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2014)

- [21] Kay, W., Carreira, J., Simonyan, K., Zhang, B., Hillier, C., Vijayanarasimhan, S., Viola, F., Green, T., Back, T., Natsev, P., et al.: The Kinetics Human Action Video Dataset. arXiv:1705.06950 (2017)

- [22] Kingma, D.P., Ba, J.A.: A method for stochastic optimization. arXiv:1412.6980 (2019)

- [23] Li, S., Cao, Q., Liu, L., Yang, K., Liu, S., Hou, J., Yi, S.: Groupformer: Group activity recognition with clustered spatial-temporal transformer. In: Proceedings of the IEEE/CVF International Conference on Computer Vision. pp. 13668–13677 (2021)

- [24] Lin, C., Li, J., Wang, Y., Tai, Y., Luo, D., Cui, Z., Wang, C., Li, J., Huang, F., Ji, R.: Fast Learning of Temporal Action Proposal via Dense Boundary Generator. In: AAAI Conference on Artificial Intelligence (AAAI) (2020)

- [25] Lin, T., Liu, X., Li, X., Ding, E., Wen, S.: BMN: Boundary-Matching Network for Temporal Action Proposal Generation. In: IEEE International Conference on Computer Vision (ICCV) (2019)

- [26] Lin, T., Zhao, X., Su, H., Wang, C., Yang, M.: BSN: Boundary Sensitive Network for Temporal Action Proposal Generation. In: European Conference on Computer Vision (ECCV) (2018)

- [27] Liu, Y., Ma, L., Zhang, Y., Liu, W., Chang, S.F.: Multi-Granularity Generator for Temporal Action Proposal. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2019)

- [28] Locatello, F., Weissenborn, D., Unterthiner, T., Mahendran, A., Heigold, G., Uszkoreit, J., Dosovitskiy, A., Kipf, T.: Object-centric learning with slot attention. Advances in Neural Information Processing Systems 33, 11525–11538 (2020)

- [29] Nawhal, M., Mori, G.: Activity graph transformer for temporal action localization. arXiv preprint arXiv:2101.08540 (2021)

- [30] Neubeck, A., Van Gool, L.: Efficient Non-maximum Suppression. In: International Conference on Pattern Recognition (ICPR) (2006)

- [31] Qing, Z., Su, H., Gan, W., Wang, D., Wu, W., Wang, X., Qiao, Y., Yan, J., Gao, C., Sang, N.: Temporal context aggregation network for temporal action proposal refinement. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. pp. 485–494 (2021)

- [32] Qiu, Z., Yao, T., Mei, T.: Learning Spatio-Temporal Representation with Pseudo-3D Residual Networks. In: IEEE International Conference on Computer Vision (ICCV) (2017)

- [33] Shou, Z., Chan, J., Zareian, A., Miyazawa, K., Chang, S.F.: CDC: Convolutional-De-Convolutional Networks for Precise Temporal Action Localization in Untrimmed Videos. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2017)

- [34] Shou, Z., Wang, D., Chang, S.F.: Temporal Action Localization in Untrimmed Videos via Multi-stage CNNs. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2016)

- [35] Shyamal Buch, Victor Escorcia, B.G., Niebles, J.C.: End-to-End, Single-Stream Temporal Action Detection in Untrimmed Videos. In: British Machine Vision Conference (BMVC) (2017)

- [36] Simonyan, K., Zisserman, A.: Two-Stream Convolutional Networks for Action Recognition in Videos. In: Advances in Neural Information Processing Systems (NeurIPS) (2014)

- [37] Su, H., Gan, W., Wu, W., Yan, J., Qiao, Y.: Bsn++: Complementary boundary regressor with scale-balanced relation modeling for temporal action proposal generation. arXiv preprint arXiv:2009.07641 (2020)

- [38] Tan, J., Tang, J., Wang, L., Wu, G.: Relaxed transformer decoders for direct action proposal generation. In: Proceedings of the IEEE/CVF International Conference on Computer Vision. pp. 13526–13535 (2021)

- [39] Tran, D., Bourdev, L., Fergus, R., Torresani, L., Paluri, M.: Learning Spatiotemporal Features with 3D Convolutional Networks. In: IEEE International Conference on Computer Vision (ICCV) (2015)

- [40] Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A.N., Kaiser, L., Polosukhin, I.: Attention is all you need. arXiv preprint arXiv:1706.03762 (2017)

- [41] Wang, L., Xiong, Y., Lin, D., Van Gool, L.: UntrimmedNets for Weakly Supervised Action Recognition and Detection. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2017)

- [42] Wang, L., Xiong, Y., Wang, Z., Qiao, Y., Lin, D., Tang, X., Van Gool, L.: Temporal Segment Networks: Towards Good Practices for Deep Action Recognition. In: European Conference on Computer Vision (ECCV) (2016)

- [43] Wu, J., Kuang, Z., Wang, L., Zhang, W., Wu, G.: Context-Aware RCNN: A Baseline for Action Detection in Videos. In: European Conference on Computer Vision (ECCV) (2020)

- [44] Xiong, Y., Wang, L., Wang, Z., Zhang, B., Song, H., Li, W., Lin, D., Qiao, Y., Van Gool, L., Tang, X.: CUHK & ETHZ & SIAT Submission to ActivityNet Challenge 2016. arXiv:1608.00797 (2016)

- [45] Xu, M., Zhao, C., Rojas, D.S., Thabet, A., Ghanem, B.: G-TAD: Sub-Graph Localization for Temporal Action Detection. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2020)

- [46] Yang, C., Xu, Y., Shi, J., Dai, B., Zhou, B.: Temporal Pyramid Network for Action Recognition. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2020)

- [47] Yeung, S., Russakovsky, O., Mori, G., Fei-Fei, L.: End-to-End Learning of Action Detection from Frame Glimpses in Videos. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2016)

- [48] Yuan, Z., Stroud, J.C., Lu, T., Deng, J.: Temporal Action Localization by Structured Maximal Sums. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2017)

- [49] Zeng, R., Huang, W., Tan, M., Rong, Y., Zhao, P., Huang, J., Gan, C.: Graph Convolutional Networks for Temporal Action Localization. In: IEEE International Conference on Computer Vision (ICCV) (2019)

- [50] Zhao, P., Xie, L., Ju, C., Zhang, Y., Wang, Y., Tian, Q.: Bottom-up Temporal Action Localization with Mutual Regularization. In: European Conference on Computer Vision (ECCV) (2020)

- [51] Zhao, Y., Xiong, Y., Wang, L., Wu, Z., Tang, X., Lin, D.: Temporal Action Detection With Structured Segment Networks. In: IEEE International Conference on Computer Vision (ICCV) (2017)

- [52] Zhu, Z., Tang, W., Wang, L., Zheng, N., Hua, G.: Enriching local and global contexts for temporal action localization. In: Proceedings of the IEEE/CVF International Conference on Computer Vision. pp. 13516–13525 (2021)