Pushing the Envelope of Thin Crack Detection

Abstract

In this study, we consider the problem of detecting cracks from the image of a concrete surface for automated inspection of infrastructure, such as bridges. Its overall accuracy is determined by how accurately thin cracks with sub-pixel widths can be detected. Our interest is in making it possible to detect cracks close to the limit of thinness if it can be defined. Toward this end, we first propose a method for training a CNN to make it detect cracks more accurately than humans while training them on human-annotated labels. To achieve this seemingly impossible goal, we intentionally lower the spatial resolution of input images while maintaining that of their labels when training a CNN. This makes it possible to annotate cracks that are too thin for humans to detect, which we call super-human labels. We experimentally show that this makes it possible to detect cracks from an image of one-third the resolution of images used for annotation with about the same accuracy. We additionally propose three methods for further improving the detection accuracy of thin cracks: i) -pooling to maintain small image structures during downsampling operations; ii) Removal of short-segment cracks in a post-processing step utilizing a prior of crack shapes learned using the VAE-GAN framework; iii) Modeling uncertainty of the prediction to better handle hard labels beyond the limit of CNNs’ detection ability, which technically work as noisy labels. We experimentally examine the effectiveness of these methods.

1 Introduction

Automating infrastructure inspection such as roads, bridges, tunnels, etc. has recently attracted a lot of attention. One of the important tasks comprising the inspection is detecting cracks emerging on concrete surfaces of bridges, etc. Their images can be efficiently captured by a robot, e.g., an UAV, from which the existence of cracks can be automatically identified by computer vision methods.

In this study, we consider the problem of detecting cracks from the image of a concrete surface. There are already a large number of studies of crack detection [27, 23, 37]. Recent studies employ convolutional neural networks (CNNs), having taken detection accuracy to a higher level. In the problem of crack detection, thick cracks are easy to detect while thin cracks are not. Thus, accuracy is mostly determined by how well the method can detect thin cracks.

It is practically important to accurately detect thin cracks for better management of infrastructure. In the case of concrete surfaces of a typical type of bridges, it is requested to find cracks with 0.5mm width and sometimes even those with 0.1mm width. From a computer vision point of view, what is essential is the width of imaged cracks than the real widths, as they depend on the distance between the camera and the concrete surface.

Humans and CNNs can recognize thin cracks with subpixel widths, owing to the large image structure along cracks. That said, there has to be a limit in the width of recognizable cracks. In this study, we are interested in making it possible to detect cracks close to the limit of thinness. Toward this ends, we propose a collection of techniques.

We first present a method that trains a CNN with super-human labels. Its aim is to make CNNs detect cracks more accurately than humans while training them on the labels annotated by humans. As shown in Fig. 1(a), humans cannot detect some of thinnest cracks from its image. When we train a CNN using human-annotated labels, the best outcome will be human annotation reproduction. Obviously, this will not make a machine detector surpassing the annotator(s) in detection accuracy. To overcome this, we intentionally lower the spatial resolution of input images while maintaining that of their labels when training a CNN. This enables the annotation of cracks that are too thin for humans to detect, which we call super-human labels. The details are given in Sec. 4.1.

We additionally propose several methods for further improving the accuracy of detecting thin cracks. To be specific, we propose to employ i) -pooling [30] in the design of CNNs to extract small image structures; ii) removal of fake, short-segment cracks in a post-processing step utilizing a prior of crack shapes learned using the VAE-GAN framework [11, 17]; iii) modeling uncertainty of the prediction to better handle hard labels beyond the limit of CNNs’ detection ability, which technically work as noisy labels.

2 Related Work

Detecting cracks emerging on the surface of various materials (e.g., roads, concrete surfaces, metals, etc.) from its image has attracted a lot of attention, because of its wide range of industrial applications. In early studies, image processing methods have been employed, such as thresholding [28], edge detectors [2, 35], ridge detectors [15], etc. CrackIT [20] is a comprehensive crack-detection approach by using traditional low-level image processing techniques. MFCD [12] formulates pavement crack detection as a multiscale image fusion problem based on the assumption that the cracks have lower intensity values than the background. These approaches tend to be sensitive to noise, variance of brightness and contrast etc. or rely too much on assumptions on the appearance of cracks, limiting the applicability.

More recently, machine-learning-based approaches were studied. In CrackForest [27], a random structured forest are employed to find the mapping between crack patches and structure tokens, in which the paired ground-truths are necessary for the training. In [4], textural descriptors are chosen by AdaBoost to better describe imaged cracks, and morphological filters are used to reduce the pixel intensity variance. The STRUM (a spatially tuned robust multifeature) AdaBoost [23] is developed to classify the status of cracks in image patches. Although these methods perform better than earlier efforts based on pure image processing methods, detection accuracy is not enough for practical applications, arguably due to limited performance of feature extraction.

As with many other visual recognition tasks, deep learning has contributed to significant performance improvement. Several studies [29, 14, 5, 25, 3, 32, 38, 34] extract features from a patch and determine whether its center pixel is crack or not. They achieved better performance with transitional areas (i.e., the pixels on the crack/non-crack boundary) at the expense of computational cost. In [26, 33], transfer learning is employed to use a CNN to classify pavement images into cracks and sealed-cracks; the method can detect hairline cracks while eliminating local noises and maintaining fast processing speed. In [21], a two-step approach is proposed to detect cracks on road pavement, which first segment the target area from input image and then detect cracks from target area. In [13, 1], the standard formulation of semantic segmentation is employed, and popular CNN architectures (fully convolutional networks etc.) are adopted, making it possible to deal with images of different sizes. Currently, this formulation is the most popular for crack detection. The success of this approach mostly relies on the availability of good datasets that are given accurate annotation of cracks. It is not so easy to obtain/create such good datasets, limiting its applicability to the real world.

Any visual recognition/detection tasks generally require a large-scale dataset to train a CNN, so does crack detection. There are several datasets for crack detection that are publicly available. For instance, expanded from an early CrackTree206 dataset [37], CrackTree260 [36] contains 260 images of road pavement, each of which is of 800600 pixel size. DeepCrack [16] is a public benchmark dataset containing 537 images of cracks in multiple scales and scenes that can be used for evaluation of crack detection methods. These datasets basically consists of images containing only thick cracks, and are not fit for the purpose of this study.

3 The Problem: Crack Detection

3.1 Practical Requirements for Crack Detection

Recent studies of crack detection tend to formulate crack detection as image segmentation. Their output is a pixel-wise crack map; each pixel has the likelihood indicating that the pixel lies on a crack. In this study, employing this formulation, we consider only two classes, i.e., crack and non-crack (i.e., background); then, it turns to a binary class segmentation problem.

However, from a practical perspective, identifying cracks in a pixel-wise fashion is usually unnecessary. What is more important is determining the existence/nonexistence of crack(s) as precisely as possible. The detected crack’s position does not need to be very accurate; a few pixel shifts from the ground truth position are perfectly allowable. Thus, it is sufficient to represent each crack as a one-dimensional curve, or a poly-line, with one-pixel width. Based on this requirement, we reconsider how to annotate cracks and evaluate their detectors.

3.2 Annotation of Cracks

Many studies follow the standard method for semantic segmentation for annotation of cracks, where cracks are annotated as pixel regions. This may be appropriate when the imaged cracks have widths of at least a few pixels. However, we are interested in detecting cracks, including those with sub-pixel widths; they do not form a ‘region.’ Besides, the pixel-wise annotation is not efficient; it will take a long time annotate a single image.

Considering also the above practical requirement, we employ a different approach for annotation. We represent a crack as a one-dimensional structure, or more precisely, as a set of connected pixels with the chain code. We developed an assisting tool for the annotation, using which the annotator needs only to click the start and end points of each crack. Then, the tool finds the path along the crack connecting the specified two points. It is based on an algorithm finding the shortest distance path, in which the distance traversing each pixel is designed to reflect its ‘crack-ness’, which is calculated by a ridge detector [15].

3.3 Training with One-pixel-width Crack Label

The choice of this annotation method poses two problems. One is how to fill in the differences between the images and the label maps when training our network. As we employ the same formulation as semantic segmentation, the network outputs a map of crack likelihood. Even for a thick crack having several pixel widths on the image, it has one-pixel width in the label map, resulting in that many pixels on the imaged crack will be annotated as non-crack. The standard loss function used for semantic segmentation may not work well in the presence of such label differences.

We apply a Gaussian filter to the label maps to cope with this. A similar approach has been employed for human pose estimations (e.g., [19]). In relation to this, we employ a mean squared error (MSE) loss for the loss function in the training of our CNNs instead of the standard binary cross-entropy (BCE) loss. We have empirically found that the former works better than the latter. The details are given in the supplementary.

3.4 Evaluation of Crack Detection

The other issue with the above annotation is how to evaluate detection results. Our network outputs a two-dimensional map of crack likelihood. By thresholding it, we get a binary crack prediction map. Assuming the ground truth map of crack labels, all the pixels are classified into true positive (TP), true negative (TN), false positive (FP), and false negative (FN). Then, the standard evaluation metrics for semantic segmentation, such as IoU and DICE coefficients, can be calculated by counting the numbers of these classified pixels. However, these metrics are not fit for our purpose, as the ground truth map has only one-dimensional crack labels whereas the predicted cracks tend to have a few pixel widths, partly due to the fundamental difficulty with predicting segmented ‘regions’ with one pixel width and partly due to the label smoothing explained above.

We dilate the crack labels with a small disk when calculating TPs and FPs to address this issue. If the predicted crack pixels are inside the dilated region, they are counted as TPs, and if not, the pixels are counted as FPs. This method avoids penalizing predicted crack pixels that do not precisely lie on the true crack. It also absorbs slight positional errors of predicted cracks; they are totally allowable in practice, as mentioned earlier. The radius of the dilating disk should desirably be the smallest that achieves these effects, to make it possible to distinguish two isolated cracks that are close to each other. We set the radius to three pixels in our experiments. We use the original one-pixel-width label when counting TNs and FNs.

4 Methods

4.1 Pseudo Super-human Labels by Lowering Image Resolution

As shown in Fig. 1(a), humans cannot detect all the cracks emerging on a concrete surface from its image. When we train a CNN using human-annotated labels, the best outcome is the human annotation’s perfect reproduction. Is it possible to break this limit?

Let us assume that a CNN can potentially detect thinner and thus harder cracks than humans, as shown in Fig. 1(b). To train the CNN to unleash its potential, different training data in which such hard cracks are given the positive label are necessary. Ideally, the annotation should desirably coincide with the upper bound of its potential performance.

However, this is easier said than done. To annotate hard cracks in images as crack beyond humans’ ability, we will need different sensor data to better identify real cracks. For example, we can capture additional images of the same concrete surface using a different camera with higher resolution or simply placing the original camera more closely to the surface. Even if having the same annotator annotate the new images, thinner cracks that are harder to detect will be correctly identified in these images, from which we can get proper labels for the original images.

The primary issue with this approach is its cost. Every image in the training data needs a corresponding higher-resolution image(s). We consider creating such pairs of low- and high-resolution images by image synthesis based on simple downsampling to cope with this. To be specific, assuming an image and a label annotated for it, we downsample the image while maintaining the label map ‘as is.’ We then upsample the downsampled image to the original size, due to the reason explained below, and pair it with the original label map to create an image-label pair for training (and testing) (See Fig. 2.). Intuitively, high-resolution image is used as evidence for achieving pseudo super-human label, and a pair of a corresponding low-resolution image and a pseudo super-human label is for training (and testing).

This down-/up-sampling processing may lead to undetectable cracks which means that some thin cracks in row images disappear in newly created images. A example is showed in Fig. 2. A strength of this approach is that we can control the boundary between detectable and undetectable cracks shown in Fig. 1(a) and (b) by changing the downsampling factor. We conduct experiments in which we vary the downsampling factor to create training/test data to study the relation of the limit of human annotation and that of the CNN detectors.

We employ the pair of image down- and up-sampling, as mentioned above, to precisely evaluate the effects of different downsampling factors. For instance, downsampling of an image shrinks the image area to . This will lead to severe imbalance of data size, as the amount of training data is proportional to the number of image pixels in the case of pixel-wise classification (i.e., segmentation tasks).

4.2 Methods for Further Improvements

We consider several additional methods to improve the accuracy of detecting hard cracks.

4.2.1 Pooling Sensitive to Spatial Input Perturbation

In [30], a new pooling method, named -pooling, is proposed, aiming at equipping the pooling operation with both sensitivity to spatial input perturbation and non-linearity of the computation. It is defined by

| (1) |

where is the -th pooling region in the input feature map and is a hyperparameter or a learnable parameter. The standard max pooling has the latter but not the former, while the average pooling has the former but not the latter. The study’s original motivation is to better use a pre-trained CNN on a classification task for segmentation tasks. The two tasks have opposite objectives regarding the (in)sensitivity to spatial input perturbation. The study aims at reconciling them by replacing the pooling/downsampling operation in the pre-trained CNN with -pooling.

Although our CNNs do not rely on pre-training, we have found that the employment of -pooling improves the accuracy of crack detection. This is arguably due to the increased sensitivity to input perturbation; it is crucial for detecting thin cracks. We replace all the max-pooling layers in the U-Net architecture with this pooling method.

4.2.2 Eliminating Short Segments by Shape Prior

Cracks emerging on concrete surfaces tend to have more than a certain length and usually do not form isolated short segments due to a physical reason. As a matter of fact, this is also the case with annotated label maps. Our CNNs are trained on them, and thus ideally, they should not produce isolated short-segment cracks. Although this is true when there are only easy (i.e., thick) cracks in the training/testing data, it is not otherwise. When the training data contains hard cracks, and we train our CNNs to detect them, it starts to produce short segment cracks in the predicted map. This happens, probably because the labels beyond the performance limit of CNNs may technically work as noisy labels.

In any case, we need additional treatment to deal with the emergence of such short-segment cracks. We propose to learn a prior on the shape of cracks and use it to eliminate them in a post-processing stage. Measuring the length of predicted cracks with image processing methods such as finding connected components will not work, since it is difficult to distinguish isolated short segments from a series of short segments forming a long crack.

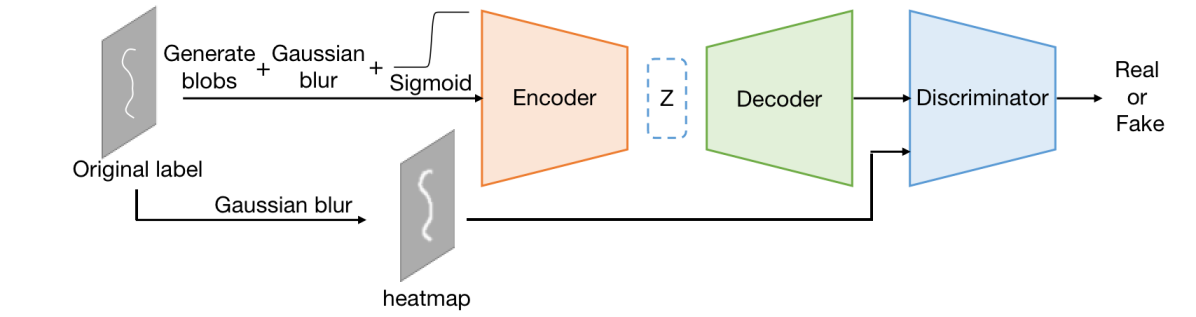

To cope with this, we use an auto-encoder to learn the shape prior of cracks. Several attempts [18, 31] were proposed in the past targeted the segmentation of biological organs from medical images. We adopt a method (shape constrained network; SCN) proposed in [17] that utilizes the VAE-GAN [11]. Crack shapes are arbitrary, and their variety will be larger than the shape of an eye considered in [17]. Employing the same network architecture as [17], we test the effectiveness of this method in our experiments.

In our experiments, we test two methods shown in Fig. 3. To make the VAE learn to remove short segments, which tend to have the shape of blobs in the predicted maps, we randomly generate several oval blobs in the input label maps. We then apply Gaussian blur to the maps, followed by the application of a logistic sigmoid function. The resulting images are inputted to the VAE. The sigmoid function is used as a proxy of binarization that is differentiable:

where we set and in our experiments. We train the VAE together with a discriminator classifying its input as the ground truth label map (blurred and optionally binarized) or the generated one in the framework of VAE-GAN.

4.2.3 Consideration of Prediction Uncertainty

Cracks lying around the limit of humans’ detection ability can be annotated erroneously. Furthermore, for cracks beyond the performance limit of CNNs, their correct labels may technically work as noise labels, as shown in Fig. 1. To cope with such wrongly annotated or too hard labels, we consider modeling uncertainty of the prediction [9]. As mentioned above, we use an MSE loss for training the CNNs due to its good performance, where is the predicted crack likelihood and is the Gaussian blurred label. Regarding to be an observation . Then, training with only an MSE loss is equivalent to estimating while setting to be a constant. Estimating not only but in the framework of maximum likelihood, the loss function turns to

| (2) |

We reparametrize as . We add a single unit to the final output layer of the CNN to predict . The only unit already existing in the original output layer predicts . Thus, the loss for the two output is given by

| (3) |

The above loss makes it possible to predict the uncertainty of the crack prediction. When minimizing the loss, if the CNN can predict that is sufficiently close to the label , then will be small. If it cannot, then will be large to compensate for the squared error. Thus, the CNN learns to predict depending on the certainty of the prediction of [9]. However, we are not interested in this predictive uncertainty itself here. We rather wish that this mechanism will help handle noisy labels, e.g., when an invisible crack is annotated as crack.

5 Experimental Results

5.1 Experimental Configuration

Dataset

As mentioned in Sec. 2, there is no dataset fit for the purpose of this study. Thus, we create the dataset by ourselves. It contains 352 images of concrete surfaces of various bridges captured by hand-held cameras. Their sizes are in the range from 4,000 3,000 to 5,000 4,000 pixels. Examples are provided in the supplementary. We had three persons annotate these images by the semi-automatic method, which produces crack labels with one-pixel width, as explained in Sec. 3.2. We split the images into 282 for training/validation and 70 for test. Our original images are too large for CNNs. Thus, we crop into small patches (e.g.512512). To increase the size of training dataset, we crop patches from images by using a sliding window with a half size of the patch size. Thus, we achieve 24700 patches (512512) for training, and 4972 patches for testing.

Network architecture

We choose U-Net [24] for the baseline architecture of CNNs. Our preliminary experiments have found that a U-Net variant with narrow layer widths works fairly well. Thus, we first reduce the number of channels of every convolutional layer to 1/4, setting them to be {1-16-32-64-128-256-128-64-32-16-1}. This decreases the number of trainable parameters from around 31.0 million to only 1.9 million, and also contributes significantly faster training. We then add a batch normalization layer after every convolutional layer (before activation layer), which contributes to stabilization of training. This network shows the top-level accuracy in our experiments. We call this U-Net*+BN later.

A small but noticeable gain is further obtained by modification of the network design. We experimentally tested several modifications, such as incorporation of the residual (Res) blocks [7] and its variants as well as the squeeze-and excitation (SE) block [8] and its variants. From the results, we choose the model in which every convolutional layer of the above base network is replaced with a modified Res block with an additional standard SE block. The details of the comparisons are given in the supplementary.

Training methods

As the images in our dataset are large, we crop patches of a fixed size and feed them to our CNNs; for U-Net*+BN and for the others. We crop patches in a sliding window fashion with a stride of a half size of the patch size. There are much more negative patches (patches without any crack pixel) than positive patches (patches with crack pixels). We employ random minority oversampling for selection of patches used for training. The patches are augmented by random 360-degree rotation and random flipping.

Unless otherwise noted, all models are trained by the Adam optimizer [10] with initial learning rate of , = 0.9 and = 0.999. All the parameters in convolutional layers are initialized using the He initialization [6]. We train the model for 60 epochs and drop the learning rate to after 50 epochs. We set the size of minibatches to 16. We use PyTorch [22] for the experiments.

Evaluation

Our networks output a two-dimensional map storing crack likelihood at each pixel. We then bianarize the map with a threshold , and compute TP, FP, TN, and FN using the method explained in Sec. 3.4. In all the experiments, we repeat each trial three times and report their average.

5.2 Effects of Training on Super-human Annotation

We first conducted an experiment to see the effects of training on down-/up-sampled images. For brevity, we refer to the factor of down-/up-sampling applied to images as resolution. Figure 4 shows the detection accuracy measured by F1-score for the models trained and tested on different resolutions. To be specific, we first train a network (U-Net*+BN) from scratch on the images down-/up-sampled by a factor of , which is denoted by “” in the figure. Each network is then tested on the images down-/up-sampled by a factor of , which is specified by the horizontal axis of the plots. We do this training and test for every pair of and . Figures 4(a) and 4(b) show the results obtained when bilinear interpolation and Lanczos interpolation are used for down-/up-sampling, respectively. Figure 4(c) shows the top ‘envelop’ of the curves, which represents the best score for each resolution of test images. We can make several observations from these figures.

It is first observed that the highest score at each test image resolution is achieved by the model trained on the same resolution “” (except the model of “”, which achieves the best score at the test image resolution=“”). This is reasonable since the potential domain shift between the training and test images should be the smallest when their resolutions are the same.

It is also seen from Fig. 4(c) that the highest scores at test resolutions , , and are very close to each other. That of is even slightly higher than . We think that these prove the effectiveness of the super-human labels. The test images of resolutions and contain cracks that are harder to detect due to their decreased resolution, to which nevertheless they are labeled as crack. This makes each model possible to learn to detect such hard cracks, achieving detection accuracy similar to resolution .

This result means that we can achieve (at least) the same accuracy level with up to lower resolution by using the proposed down-sampling method as the accuracy obtained by the standard training with the native resolution. Note that this is achieved using the labels annotated by humans on images with their native resolution. This has multiple practical implications. For instance, images captured from a distance to the concrete surface will have lower resolution. Thus, we can achieve the maximum accuracy for images captured from a distance three times farther from the target than the distance at which the annotated images are captured.

It is seen from Fig. 4(c) that the detection accuracy decreases gradually but monotonically from resolution , which will be because it is beyond the detection ability of the CNN; cracks that are too hard for the CNN to detect are annotated as crack. The detection accuracy can decrease due to the following two reasons: i) hard cracks in the test images are simply too hard to detect, and ii) the annotated labels for the hard cracks work as noisy labels for the training, further lowering the accuracy. Further analyses are left to a future study. As for interpolation methods, the tendency is mostly similar but the results with the Lanczos interpolation are slightly better for the lower resolution cases.

| Down-sampling factor (at test time) | ||||||||||

| 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | |||

|

||||||||||

| Down-sampling factor (at train time) | 1 | 0.905 / 0.909 | 0.894 / 0.895 | 0.843 / 0.845 | 0.774 / 0.778 | 0.701 / 0.706 | 0.641 / 0.644 | 0.585 / 0.589 | 0.548 / 0.553 | |

| 2 | 0.885 / 0.890 | 0.913 / 0.913 | 0.857 / 0.860 | 0.783 / 0.781 | 0.718 / 0.723 | 0.681 / 0.686 | 0.624 / 0.631 | 0.570 / 0.577 | ||

| 3 | 0.856 / 0.867 | 0.901 / 0.907 | 0.908 / 0.910 | 0.855 / 0.859 | 0.787 / 0.793 | 0.750 / 0.757 | 0.702 / 0.710 | 0.659 / 0.669 | ||

| 4 | 0.854 / 0.866 | 0.882 / 0.889 | 0.902 / 0.904 | 0.882 / 0.885 | 0.804 / 0.810 | 0.742 / 0.750 | 0.701 / 0.721 | 0.679 / 0.692 | ||

| 5 | 0.813 / 0.827 | 0.834 / 0.846 | 0.889 / 0.893 | 0.895 / 0.897 | 0.881 / 0.886 | 0.827 / 0.833 | 0.743 / 0.757 | 0.695 / 0.710 | ||

| 6 | 0.786 / 0.804 | 0.794 / 0.811 | 0.853 / 0.864 | 0.877 / 0.883 | 0.876 / 0.880 | 0.866 / 0.872 | 0.813 / 0.819 | 0.734 / 0.752 | ||

| 7 | 0.759 / 0.772 | 0.763 / 0.789 | 0.811 / 0.835 | 0.847 / 0.861 | 0.855 / 0.863 | 0.856 / 0.863 | 0.839 / 0.847 | 0.778 / 0.791 | ||

| 8 | 0.725 / 0.740 | 0.765 / 0.786 | 0.779 / 0.803 | 0.825 / 0.836 | 0.840 / 0.847 | 0.846 / 0.853 | 0.839 / 0.845 | 0.816 / 0.829 | ||

| Down-sampling factor (at test time) | ||||||||||

| 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | |||

|

||||||||||

| Down-sampling factor (at train time) | 1 | 0.909 / 0.911 | 0.895 / 0.898 | 0.845 / 0.849 | 0.778 / 0.783 | 0.706 / 0.709 | 0.644 / 0.645 | 0.589 / 0.593 | 0.553 / 0.555 | |

| 2 | 0.890 / 0.892 | 0.913 / 0.917 | 0.860 / 0.864 | 0.781 / 0.784 | 0.723 / 0.725 | 0.686 / 0.688 | 0.631 / 0.634 | 0.577 / 0.580 | ||

| 3 | 0.867 / 0.869 | 0.907 / 0.910 | 0.910 / 0.916 | 0.859 / 0.864 | 0.793 / 0.796 | 0.757 / 0.760 | 0.710 / 0.714 | 0.669 / 0.671 | ||

| 4 | 0.866 / 0.869 | 0.889 / 0.894 | 0.904 / 0.909 | 0.885 / 0.895 | 0.810 / 0.814 | 0.750 / 0.752 | 0.721 / 0.726 | 0.692 / 0.694 | ||

| 5 | 0.827 / 0.832 | 0.846 / 0.849 | 0.893 / 0.898 | 0.897 / 0.904 | 0.886 / 0.899 | 0.833 / 0.843 | 0.757 / 0.762 | 0.710 / 0.714 | ||

| 6 | 0.804 / 0.806 | 0.811 / 0.811 | 0.864 / 0.871 | 0.883 / 0.891 | 0.880 / 0.893 | 0.872 / 0.887 | 0.819 / 0.835 | 0.752 / 0.762 | ||

| 7 | 0.772 / 0.777 | 0.789 / 0.792 | 0.835 / 0.841 | 0.861 / 0.866 | 0.863 / 0.870 | 0.863 / 0.878 | 0.847 / 0.866 | 0.791 / 0.807 | ||

| 8 | 0.740 / 0.744 | 0.786 / 0.788 | 0.803 / 0.809 | 0.836 / 0.841 | 0.847 / 0.858 | 0.853 / 0.867 | 0.845 / 0.865 | 0.829 / 0.856 | ||

| Down-sampling factor (at test time) | ||||||||||

| 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | |||

|

||||||||||

| Down-sampling factor (at train time) | 1 | 0.911 / 0.907 | 0.898 / 0.887 | 0.849 / 0.836 | 0.783 / 0.776 | 0.706 / 0.701 | 0.645 / 0.639 | 0.593 / 0.582 | 0.553 / 0.541 | |

| 2 | 0.892 / 0.890 | 0.917 / 0.912 | 0.860 / 0.858 | 0.784 / 0.779 | 0.725 / 0.722 | 0.688 / 0.681 | 0.634 / 0.629 | 0.580 / 0.572 | ||

| 3 | 0.869 / 0.872 | 0.910 / 0.908 | 0.916 / 0.911 | 0.864 / 0.859 | 0.796 / 0.793 | 0.760 / 0.758 | 0.714 / 0.709 | 0.671 / 0.668 | ||

| 4 | 0.869 / 0.871 | 0.894 / 0.895 | 0.909 / 0.909 | 0.895 / 0.893 | 0.814 / 0.811 | 0.752 / 0.747 | 0.726 / 0.720 | 0.694 / 0.689 | ||

| 5 | 0.832 / 0.839 | 0.849 / 0.856 | 0.898 / 0.902 | 0.904 / 0.906 | 0.889 / 0.905 | 0.843 / 0.851 | 0.762 / 0.773 | 0.714 / 0.727 | ||

| 6 | 0.806 / 0.819 | 0.811 / 0.823 | 0.871 / 0.878 | 0.891 / 0.900 | 0.893 / 0.899 | 0.887 / 0.892 | 0.835 / 0.846 | 0.762 / 0.773 | ||

| 7 | 0.777 / 0.792 | 0.792 / 0.804 | 0.841 / 0.853 | 0.866 / 0.872 | 0.870 / 0.879 | 0.878 / 0.888 | 0.866 / 0.880 | 0.807 / 0.821 | ||

| 8 | 0.744 / 0.762 | 0.788 / 0.801 | 0.809 / 0.817 | 0.841 / 0.852 | 0.858 / 0.872 | 0.867 / 0.881 | 0.865 / 0.883 | 0.856 / 0.874 | ||

5.3 Methods for Further Improvements

We then conducted experiments to examine the effectiveness of the proposed techniques in Sec. 4.2. Note that the results given in Sec. 5.2 are obtained by the baseline method that do not employ these methods. Thus, the improvements shown in what follows are gained over the baseline method.

-pooling

We first test the effectiveness of -pooling. We compare the original U-Net*-BN with that with all the pooling layer replaced with -pooling. We empirically found that works the best. Table 1 show the results obtained by two CNNs with and without -pooling. It is seen that the pooling method improves accuracy for almost all of pairs of training/test resolutions. The improvements are more significant for the cases of testing on higher-resolution images than training images. Figure 5 shows a few examples.

Elimination of Short Segments by Shape Prior

We then test the effectiveness of the proposed method for elimination of short segments in the post-processing step. We apply the method to the output map of U-Net*-BN with -pooling. Table 2 shows the accuracy before and after the application of the method. Interestingly, it is seen from the table that it brings about larger improvements for the case of training and testing on the same resolution. Figure 6 shows a few examples. The improvements are small when training and testing on different resolutions.

Training with uncertainty modeling

We train the same network as above using the augmented loss function (3), followed by the elimination of short segments. Table 3 shows comparisons between the results obtained with the MSE loss and those obtained with the new loss. The results show that the employment of the uncertainty model improves only the cases of training the CNN on low resolution images. It even worsen the detection accuracy for the CNNs trained on high resolution images. We should use this method selectively according to the chosen pair of training/test resolutions.

6 Summary and Conclusion

We have presented several approaches to improve the accuracy of the detection of cracks. Stating that the difficulty with crack detection lies in detecting thin cracks, we first presented a formulation of the problem that is more proper than the standard one in the literature. We then proposed a method for obtaining super-human labels by controlling the resolution of training images. We also presented three methods aiming at further improvement of the accuracy of thin crack detection. The experimental results show the effectiveness of the proposed approaches.

References

- [1] Seongdeok Bang, Somin Park, Hongjo Kim, and Hyoungkwan Kim. Encoder-decoder network for pixel-level road crack detection in black-box images. Computer-Aided Civil and Infrastructure Engineering, 02 2019.

- [2] J. Canny. A computational approach to edge detection. IEEE Transactions on Pattern Analysis and Machine Intelligence, PAMI-8(6):679–698, 1986.

- [3] Young-Jin Cha, Wooram Choi, and Oral Buyukozturk. Deep learning-based crack damage detection using convolutional neural networks. Computer-Aided Civil and Infrastructure Engineering, 32:361–378, 03 2017.

- [4] Aurélien Cord and Sylvie Chambon. Automatic road defect detection by textural pattern recognition based on adaboost. Computer-Aided Civil and Infrastructure Engineering, 27, 04 2012.

- [5] Zhun Fan, Yuming Wu, Jiewei Lu, and Wenji Li. Automatic pavement crack detection based on structured prediction with the convolutional neural network. SoICT 2018: Proceedings of the Ninth International Symposium on Information and Communication Technology, pages 251–256, 11 2018.

- [6] Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Delving deep into rectifiers: Surpassing human-level performance on imagenet classification. In The IEEE International Conference on Computer Vision (ICCV), December 2015.

- [7] Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Deep residual learning for image recognition. In The IEEE Conference on Computer Vision and Pattern Recognition (CVPR), June 2016.

- [8] Jie Hu, Li Shen, and Gang Sun. Squeeze-and-excitation networks. In The IEEE Conference on Computer Vision and Pattern Recognition (CVPR), June 2018.

- [9] Alex Kendall and Yarin Gal. What uncertainties do we need in bayesian deep learning for computer vision? In I. Guyon, U. V. Luxburg, S. Bengio, H. Wallach, R. Fergus, S. Vishwanathan, and R. Garnett, editors, Advances in Neural Information Processing Systems 30, pages 5574–5584. Curran Associates, Inc., 2017.

- [10] Diederik P. Kingma and Jimmy Ba. Adam: A method for stochastic optimization. In Yoshua Bengio and Yann LeCun, editors, 3rd International Conference on Learning Representations, ICLR 2015, San Diego, CA, USA, May 7-9, 2015, Conference Track Proceedings, 2015.

- [11] Anders Boesen Lindbo Larsen, Søren Kaae Sønderby, Hugo Larochelle, and Ole Winther. Autoencoding beyond pixels using a learned similarity metric. In Maria-Florina Balcan and Kilian Q. Weinberger, editors, Proceedings of the 33nd International Conference on Machine Learning, ICML 2016, New York City, NY, USA, June 19-24, 2016, volume 48 of JMLR Workshop and Conference Proceedings, pages 1558–1566. JMLR.org, 2016.

- [12] H. Li, D. Song, Y. Liu, and B. Li. Automatic pavement crack detection by multi-scale image fusion. IEEE Transactions on Intelligent Transportation Systems, 20(6):2025–2036, 2019.

- [13] Qing-tong Li and Dong-ming Zhang. Deep learning based image recognition for crack and leakage defects of metro shield tunnel. Tunnelling and Underground Space Technology, 77:166–176, 07 2018.

- [14] Yundong Li, Hongguang Li, and Hongren Wang. Pixel-wise crack detection using deep local pattern predictor for robot application. Sensors, 18:3042, 09 2018.

- [15] T. Lindeberg. Edge detection and ridge detection with automatic scale selection. In Proceedings CVPR IEEE Computer Society Conference on Computer Vision and Pattern Recognition, pages 465–470, 1996.

- [16] Yahui Liu, Jian Yao, Xiaohu Lu, Renping Xie, and Li Li. Deepcrack: A deep hierarchical feature learning architecture for crack segmentation. Neurocomputing, 338:139–153, 2019.

- [17] Bingnan Luo, Jie Shen, Shiyang Cheng, Yujiang Wang, and Maja Pantic. Shape constrained network for eye segmentation in the wild. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), March 2020.

- [18] Zahra Mirikharaji, Yiqi Yan, and Ghassan Hamarneh. Learning to segment skin lesions from noisy annotations. In Qian Wang, Fausto Milletari, Hien V. Nguyen, Shadi Albarqouni, M. Jorge Cardoso, Nicola Rieke, Ziyue Xu, Konstantinos Kamnitsas, Vishal Patel, Badri Roysam, Steve Jiang, Kevin Zhou, Khoa Luu, and Ngan Le, editors, Domain Adaptation and Representation Transfer and Medical Image Learning with Less Labels and Imperfect Data - First MICCAI Workshop, DART 2019, and First International Workshop, MIL3ID 2019, Shenzhen, Held in Conjunction with MICCAI 2019, Shenzhen, China, October 13 and 17, 2019, Proceedings, volume 11795 of Lecture Notes in Computer Science, pages 207–215. Springer, 2019.

- [19] Alejandro Newell, Kaiyu Yang, and Jia Deng. Stacked hourglass networks for human pose estimation. In Bastian Leibe, Jiri Matas, Nicu Sebe, and Max Welling, editors, Computer Vision – ECCV 2016, pages 483–499, Cham, 2016. Springer International Publishing.

- [20] Henrique Oliveira and Paulo Correia. Crackit-an image processing toolbox for crack detection and characterization. IEEE ICIP, pages 798–802, 01 2014.

- [21] Somin Park, Seongdeok Bang, Hongjo Kam, and Hyoungkwan Kim. Patch-based crack detection in black box images using convolutional neural networks. Journal of Computing in Civil Engineering, 33(3), May 2019.

- [22] Adam Paszke, Sam Gross, Francisco Massa, Adam Lerer, James Bradbury, Gregory Chanan, Trevor Killeen, Zeming Lin, Natalia Gimelshein, Luca Antiga, Alban Desmaison, Andreas Kopf, Edward Yang, Zachary DeVito, Martin Raison, Alykhan Tejani, Sasank Chilamkurthy, Benoit Steiner, Lu Fang, Junjie Bai, and Soumith Chintala. Pytorch: An imperative style, high-performance deep learning library. In H. Wallach, H. Larochelle, A. Beygelzimer, F. d Alché-Buc, E. Fox, and R. Garnett, editors, Advances in Neural Information Processing Systems 32, pages 8024–8035. Curran Associates, Inc., 2019.

- [23] P. Prasanna, K. J. Dana, N. Gucunski, B. B. Basily, H. M. La, R. S. Lim, and H. Parvardeh. Automated crack detection on concrete bridges. IEEE Transactions on Automation Science and Engineering, 13(2):591–599, 2016.

- [24] O. Ronneberger, P.Fischer, and T. Brox. U-net: Convolutional networks for biomedical image segmentation. In Medical Image Computing and Computer-Assisted Intervention (MICCAI), volume 9351 of LNCS, pages 234–241. Springer, 2015. (available on arXiv:1505.04597 [cs.CV]).

- [25] S. J. Schmugge, L. Rice, N. R. Nguyen, J. Lindberg, R. Grizzi, C. Joffe, and M. C. Shin. Detection of cracks in nuclear power plant using spatial-temporal grouping of local patches. In 2016 IEEE Winter Conference on Applications of Computer Vision (WACV), pages 1–7, 2016.

- [26] S. J. Schmugge, L. Rice, N. R. Nguyen, J. Lindberg, R. Grizzi, C. Joffe, and M. C. Shin. Detection of cracks in nuclear power plant using spatial-temporal grouping of local patches. In 2016 IEEE Winter Conference on Applications of Computer Vision (WACV), pages 1–7, 2016.

- [27] Y. Shi, L. Cui, Z. Qi, F. Meng, and Z. Chen. Automatic road crack detection using random structured forests. IEEE Transactions on Intelligent Transportation Systems, 17(12):3434–3445, 2016.

- [28] J. Tang and Y. Gu. Automatic crack detection and segmentation using a hybrid algorithm for road distress analysis. In 2013 IEEE International Conference on Systems, Man, and Cybernetics, pages 3026–3030, 2013.

- [29] Nguyen Tien Sy, Stephane Begot, Florent Duculty, and Manuel Avila. Free-form anisotropy: A new method for crack detection on pavement surface images. In International Conference on Image Processing, ICIP, pages 1069–1072, 09 2011.

- [30] Zhen Wei, Jingyi Zhang, Li Liu, Fan Zhu, Fumin Shen, Yi Zhou, Si Liu, Yao Sun, and Ling Shao. Building detail-sensitive semantic segmentation networks with polynomial pooling. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), June 2019.

- [31] Yuan Xue, Hui Tang, Zhi Qiao, Guanzhong Gong, Yong Yin, Zhen Qian, Chao Huang, Wei Fan, and Xiaolei Huang. Shape-aware organ segmentation by predicting signed distance maps. In The Thirty-Fourth AAAI Conference on Artificial Intelligence, AAAI 2020, The Thirty-Second Innovative Applications of Artificial Intelligence Conference, IAAI 2020, The Tenth AAAI Symposium on Educational Advances in Artificial Intelligence, EAAI 2020, New York, NY, USA, February 7-12, 2020, pages 12565–12572. AAAI Press, 2020.

- [32] Xincong Yang, Heng Li, Y. Yu, Xiaochun Luo, Ting Huang, and X. Yang. Automatic pixel-level crack detection and measurement using fully convolutional network. Comput. Aided Civ. Infrastructure Eng., 33:1090–1109, 2018.

- [33] Kaige Zhang, H.D. Cheng, and Boyu Zhang. Unified approach to pavement crack and sealed crack detection using preclassification based on transfer learning. Journal of Computing in Civil Engineering, 32, 03 2018.

- [34] L. Zhang, F. Yang, Y. Daniel Zhang, and Y. J. Zhu. Road crack detection using deep convolutional neural network. In 2016 IEEE International Conference on Image Processing (ICIP), pages 3708–3712, 2016.

- [35] H. Zhao, G. Qin, and X. Wang. Improvement of canny algorithm based on pavement edge detection. In 2010 3rd International Congress on Image and Signal Processing, volume 2, pages 964–967, 2010.

- [36] Qin Zou, Yu Cao, Qingquan Li, Qingzhou Mao, and Song Wang. Crack tree: Automatic crack detection from pavement images. Pattern Recognition Letters, 33:227–238, 02 2012.

- [37] Qin Zou, Yu Cao, Qingquan Li, Qingzhou Mao, and Song Wang. Cracktree: Automatic crack detection from pavement images. Pattern Recognition Letters, 33(3):227–238, 2012.

- [38] Qin Zou, Zheng Zhang, Qingquan Li, Xianbiao Qi, Qian Wang, and Song Wang. Deepcrack: Learning hierarchical convolutional features for crack detection. IEEE Transactions on Image Processing, PP:1–1, 10 2018.