PS-TTL: Prototype-based Soft-labels and Test-Time Learning for Few-shot Object Detection

Abstract.

In recent years, Few-Shot Object Detection (FSOD) has gained widespread attention and made significant progress due to its ability to build models with a good generalization power using extremely limited annotated data. The fine-tuning based paradigm is currently dominating this field, where detectors are initially pre-trained on base classes with sufficient samples and then fine-tuned on novel ones with few samples, but the scarcity of labeled samples of novel classes greatly interferes precisely fitting their data distribution, thus hampering the performance. To address this issue, we propose a new framework for FSOD, namely Prototype-based Soft-labels and Test-Time Learning (PS-TTL). Specifically, we design a Test-Time Learning (TTL) module that employs a mean-teacher network for self-training to discover novel instances from test data, allowing detectors to learn better representations and classifiers for novel classes. Furthermore, we notice that even though relatively low-confidence pseudo-labels exhibit classification confusion, they still tend to recall foreground. We thus develop a Prototype-based Soft-labels (PS) strategy through assessing similarities between low-confidence pseudo-labels and category prototypes as soft-labels to unleash their potential, which substantially mitigates the constraints posed by few-shot samples. Extensive experiments on both the VOC and COCO benchmarks show that PS-TTL achieves the state-of-the-art, highlighting its effectiveness. The code and model are available at https://github.com/gaoyingjay/PS-TTL.

1. Introduction

Object detection (Zhang et al., 2021a; Wang et al., 2023; Ren et al., 2016; Zhou et al., 2023) is a fundamental task of computer vision and multimedia, involving a variety of applications, including autonomous driving (Zhang et al., 2023b, 2022a), robotic manipulation (Ma and Huang, 2023; Qin et al., 2023), medical analysis (Liu et al., 2019; Li et al., 2019), etc. Despite that significant progress has been achieved in recent years (Tian et al., 2020; Zhang et al., 2020a; Pu et al., 2024; Zhang et al., 2023a), detectors heavily rely on many training samples. Considering that labeling data is rather expensive and collecting examples for rare categories is extremely hard, solutions are required to deal with data-limited scenarios.

Few-Shot Object Detection (FSOD) is a promising way to address this issue. It aims to train an object detector using only a few samples on novel classes with the help of abundant data on base classes, which has received widespread attention from both the academia and industry. Early FSOD methods typically adopt the meta-learning paradigm, organizing object detection into a series of episode tasks with few-shot samples, where each episode includes a support set of -way -shot images and a query set. The support set is utilized for model training with a limited number of samples, while the query set is employed to assess the performance of the model on novel objects. Kang et al. (Kang et al., 2019) propose a lightweight feature reweighting module that learns to capture global features of support images and embeds such features into reweighting coefficients to adjust meta features of query images. Meta R-CNN (Yan et al., 2019) conducts meta-learning on Reigion-of-Interest (RoI) features instead of those of full images to better fit the detection problem. Subsequent alternatives progress from optimizing both classification and localization features (Wang et al., 2019; Fan et al., 2020; Han et al., 2021; Yang et al., 2020; Demirel et al., 2023; Liu et al., 2023), by employing Transformer to capture spatial relationships between support and query classes (Han et al., 2022b; Zhang et al., 2022b) as well as exploring inter-class relationships (Zhang et al., 2021b; Karlinsky et al., 2019; Han et al., 2023; Lu et al., 2023). However, such methods suffer complex architectures and training procedures with increased computational costs. Additionally, they are criticized for its interpretability of what the model learns in the novel stage.

To achieve fast training and simple deployment for adaptation to novel classes, most existing FSOD methods follow a fine-tuning based paradigm. The detector is first pre-trained on base classes with adequate samples and then fine-tuned on novel ones with few samples. Early attempts (Chen et al., 2018; Wu et al., 2020) employ a jointly fine-tuning based architecture, where the entire pre-trained base model, comprising both the class-agnostic and class-specific layers, is simultaneously updated during training on the novel task. Later, the two-stage fine-tuning methods (Wang et al., 2020; Sun et al., 2021; Cao et al., 2021; Fan et al., 2022; Wu et al., 2021; Zhang et al., 2020b; Ma et al., 2022) demonstrate that maintaining the feature extraction part of the model unchanged and solely fine-tuning the last layer can significantly boost the accuracy. Based on this fact, most of the subsequent methods are combined with knowledge distillation (Wu et al., 2022; Pei et al., 2022; Nguyen et al., 2022), context reasoning (Zhu et al., 2021; Kim et al., 2021), or decoupling detection networks (Qiao et al., 2021; Yang et al., 2022; Lu et al., 2022) to further improve the detection performance. Unfortunately, constrained by the limited samples of novel classes, they struggle to precisely capture the data distribution. Some methods address this issue by generating synthetic data for novel classes (Zhang and Wang, 2021; Zhao et al., 2022) or mining implicit novel instances from the training set (Kaul et al., 2022; Cao et al., 2022; Tang et al., 2023). But the former synthesize novel samples according to the base data and the results often deviate from the true distribution as shown in Fig. 1(a), while the latter rely on the assumption that unlabeled novel instances are widely present in abundant base data as shown in Fig. 1(b), which may not always hold in real-world cases.

The accessibility of novel instances in test data motivates us to explore a new framework which fine-tunes an object detection model at test-time as shown in Fig. 1(c). Compared to mining novel instances from base data (the presence of unlabeled novel instances in data of base classes essentially incurs a loophole in FSOD settings), conducting online learning on test data is a more realistic manner aligned with real-world applications. In this paper, we propose a Test-Time Learning (TTL) module, which utilizes a mean-teacher network for self-training to simultaneously train and infer on test data, effectively leveraging novel instances present in test data. Specifically, both the student and teacher networks are first initialized by the few-shot detector fine-tuned on novel data. Then, the teacher network takes test data as input to generate pseudo-labels. The student model is trained using these pseudo-labels after post-processing and -way -shot data as supervision signals and updates the teacher through Exponential Moving Average (EMA). Additionally, considering the limited number of high-quality pseudo-labels and the fact that a large number of low-quality pseudo-labels can recall foreground but exhibit a low classification accuracy, we develop a Prototype-based Soft-labels (PS) strategy to unlock the potential of these low-quality pseudo-labels. In this case, we maintain class prototypes and compute the feature similarity between low-confidence pseudo-labels and class prototypes to replace them with soft-labels. Class prototypes are initialized using -way -shot data and dynamically updated during online learning using instance features of high-confidence pseudo-labels. Finally, we integrate the two modules aforementioned to build a new framework for FSOD, dubbed PS-TTL.

In summary, the major contributions of this paper includes:

-

•

We propose a novel PS-TTL framework for FSOD, which effectively mines implicit novel instances from test data to address the issue of limited samples of novel classes. To the best of our knowledge, it is the first attempt to explore fitting data distribution of novel classes in a way that is more in line with real-world scenarios.

-

•

We design the TTL module that adopts a mean-teacher network for self-training to discover novel instances on test data and develop the PS strategy to unleash the power of low-quality pseudo-labels.

-

•

We achieve a newly state-of-the-art performance of all few-shot settings on the VOC and COCO benchmarks in comparison to the published counterparts, demonstrating its advantage in detecting novel objects.

2. Related Work

2.1. Object Detection

Object detection aims to identify and localize objects within images, constituting a fundamental challenge in the fields of computer vision and multimedia. Recently, the success of deep learning has triggered numerous effective object detection methods. They can be roughly categorized into two main groups: single-stage and two-stage. Single-stage detectors (e.g. SSD (Liu et al., 2016) and RetinaNet (Lin et al., 2017)) predict bounding boxes and classification scores based on pre-defined anchors, exhibiting good real-time performance. The YOLO (Redmon et al., 2016; Wang et al., 2023) series, by continuously assimilating the latest advancements, such as label assignment and multi-scale feature fusion, has delivered high-precision real-time object detection. Although the structure of single-stage detectors is straightforward and efficient, their integrated design makes them less adaptable to FSOD tasks. In contrast, two-stage detectors (e.g. Faster R-CNN (Ren et al., 2016)) usually first use a Region Proposal Network (RPN) to generate potential proposals and then refine them by other modules. Compared to single-stage detectors, two-stage ones report higher detection performance and are commonly used for FSOD.

2.2. Few-shot Object Detection

FSOD methods enable detectors to rapidly adapt to new objects with minimal data while preserving competitive performance. There are mainly two paradigms: meta-learning based and fine-tuning based. Meta-learning based methods (Wang et al., 2019; Li et al., 2021; Li and Li, 2021; Yin et al., 2022) employ a series of -way -shot detection tasks for training, aiming to well generalize to novel tasks with limited samples. FSRW (Kang et al., 2019) proposes a feature reweighting strategy, which extracts class-specific representations from support images and utilizes them to reweight the importance of query features. Similarly, Meta R-CNN (Yan et al., 2019) combines a two-stage detector and reweights RoI features in the detection head. Attention-RPN (Fan et al., 2020) exploits matching relationship between the few-shot support set and query set with a contrastive training scheme, which can then be applied to detect novel objects without retraining and fine-tuning. QA-FewDet (Han et al., 2021) uses a graph model to capture multi-relations among the proposal and class nodes. While theoretical promising, their training and inference processes are highly complex, making them difficult to deploy in real-world scenarios.

Fine-tuning based methods leverage a two-stage training procedure, i.e., first base training and then few-shot fine-tuning, which expects to transfer prior knowledge from base classes to novel ones. LSTD (Chen et al., 2018) is the earliest attempt in this domain, incorporating detection knowledge transfer and background depression regularization. TFA (Wang et al., 2020) simply freezes the backbone and only fine-tunes the detection head with novel classes, which largely improves the performance. Subsequent research refines the TFA method and integrates it with other techniques to further enhance the FSOD performance (Sun et al., 2021; Nguyen et al., 2022; Pei et al., 2022; Wu et al., 2022; Kim et al., 2021; Zhu et al., 2021; Lu et al., 2022; Qiao et al., 2021; Fan et al., 2021; Yang et al., 2022). FSCE (Sun et al., 2021) introduces contrastive learning to learn discriminative object proposal representations, alleviating the misclassification issue in novel classes. DeFRCN (Qiao et al., 2021) employs the gradient decoupled layer for multi-stage decoupling and the prototypical calibration block to align original classification scores. Although fine-tuning based methods demonstrate satisfactory results, the limited samples of novel classes still make it challenging for detectors to capture accurate data distributions.

To alleviate the issue above, HallucFsDet (Zhang and Wang, 2021) introduces a hallucinator network trained on base classes to synthesize additional training examples for novel classes. Zhao et al. (Zhao et al., 2022) assume that the features of both base and novel classes follow a Gaussian distribution and generate samples for novel classes according to the variance of similar base classes. However, these hallucination methods relying on base classes tend to result in biased synthetic novel samples. The other semi-supervised FSOD methods (Cao et al., 2022; Kaul et al., 2022; Tang et al., 2023) investigate mining implicit novel instances from training data with the assumption that novel instances do appear in base data. Kaul et al. (Kaul et al., 2022) present a simple pseudo-labelling strategy to detect potential novel instances in base datasets while MINI (Cao et al., 2022) comprises an offline and online mechanism, facilitating novel instance mining. However, as such an assumption does not always hold (e.g. in rare disease or animal detection), they are problematic in practical cases. Considering the accessibility of novel instances in test data, we are motivated to explore fine-tuning detectors at test-time.

3. Method

3.1. Problem Formulation

We follow the standard FSOD setting introduced in (Wang et al., 2020) with two disjoint training sets: a base dataset with exhaustively annotated instances for each base class and a novel dataset with only instances (usually less than 30) for each novel class , where and refer to the input image and the Ground Truth (GT) label, respectively. It is worth noting that there is no intersection between base and novel classes, i.e., . In this case, the ultimate goal of FSOD is to train a robust detector based on and to deal with the objects in the test set that contains both types of instances in .

3.2. Base Detector

DeFRCN (Qiao et al., 2021) is a state-of-the-art fine-tuning based few-shot object detector, built through two training stages. In the first phase, Faster-RCNN is trained on base classes with sufficient samples, while in the second phase, transfer learning is conducted by fine-tuning Faster-RCNN on base classes and novel classes with instances per class. Fine-tuning on a balanced set containing training samples for both base and novel classes helps to preserve the performance on base classes. The entire procedure is summarized as follows:

| (1) |

where , , and indicate the detector in the initialization, base training, and novel fine-tuning stages, respectively.

Different from previous fine-tuning based methods, which only fine-tune a small number of parameters of Faster-RCNN, such as the prediction head, to avoid overfitting of the detector, DeFRCN introduces a gradient decoupled layer during fine-tuning to stop the gradient between RPN and backbone while scaling the gradient between RCNN and backbone. This allows the detector to sufficiently learn from novel data while preventing overfitting, making DeFRCN remarkably superior to other existing counterparts.

Despite the significant progress made by the fine-tuning based methods, given only novel instances, they fail to accurately capture the data distribution. To overcome this obstacle, we propose Prototype-based Soft-labels and Test-Time Learning (PS-TTL) to mine novel instances in test data. The overall architecture of the model is illustrated in Fig. 2.

3.3. Test-Time Learning with Mean-Teacher

Self-training has shown promising performance for semi-supervised object detection (Lee, 2013; Tarvainen and Valpola, 2017; Liu et al., 2021). It typically predicts pseudo-labels for unlabeled data, where high-confidence pseudo-labels are used to supervise detector training.

In this work, we aim to fully leverage novel instances in test data, especially in the scenario of online learning, called Test-Time Learning (TTL). To this end, we employ a mean-teacher self-training paradigm (Tarvainen and Valpola, 2017), which mainly consists of two architecturally identical detectors, i.e. the student network and the teacher network. The teacher network first works on test data and renders pseudo-labels from its detection results through post-processing procedures (e.g., Non-Maximum Suppression (NMS) and filtering using a confidence threshold). The high-quality pseudo-labels are screened out to supervise the student network, enhancing its capability.

Since the teacher network is expected to produce reliable pseudo-labels of novel classes in test data, the few-shot detector which has been fine-tuned on novel data is used to initialize both the student and teacher networks. However, the self-training paradigm inevitably generates noisy pseudo-labels, particularly for novel classes. Considering that excessively noisy pseudo-labels deteriorate the performance of the student network as training progresses, to address this issue, we first apply NMS for each class to remove duplicate detection boxes. Then, we set a high confidence threshold to exclude uncertain labels. Finally, we optimize the student network using the remaining high-quality pseudo-labels with the loss function as follows:

| (2) |

where is the input test image and denotes the filtered pseudo-label. Note that the unsupervised loss is only applied to the classification heads of RPN and RoI.

On the other side, as the few-shot detector is not strong enough, pseudo-labels still contain noise even after filtering out low-confidence predictions. Therefore, to alleviate the degradation of the few-shot detector during test-time learning, we propose to take -way -shot data as supervision signals. The supervised loss for training the student network can thus be defined as:

| (3) |

where . Both the RPN and RoI heads adopt the classification loss and bounding box regression loss.

Following the mean-teacher framework (Tarvainen and Valpola, 2017), we update the weights of the student network using both and . To obtain high-quality pseudo-labels from test data, we update the weights of the teacher network via Exponential Moving Average (EMA) of those of the student as below:

| (4) |

where and are the network parameters of the teacher and the student, respectively. is the EMA momentum coefficient.

3.4. Prototype-based Soft-labels

The mean-teacher self-training framework presented in Section 3.3 for TTL on test data promotes the detection performance, and a large threshold to filter the generated pseudo-labels is more beneficial (please see the experiments). However, this leads to severe detection missing, and many test images do not have any pseudo-label. Different from semi-supervised object detection, where multiple rounds of fine-tuning can be conducted on unlabeled data, under the TTL setting, we can only perform one epoch of training on test data, making it challenging to fully utilize every input test image.

As shown in Fig. 3, we observe that relatively low-confidence pseudo-labels, despite suffering from classification confusion, mostly recall foreground. Based on this phenomenon, we propose a Prototype-based Soft-labels (PS) strategy to replace the hard labels of these implicit foreground predictions with soft-labels for fully unleashing the potential of low-quality pseudo-labels.

Specifically, we first introduce a lower bound confidence threshold , and the predicted results between and are also assigned as foreground. Due to unavoidable classification confusion in these implicit foreground predictions, it fails to effectively remove redundant boxes by employing class-specific NMS in the teacher network. Instead, after removing the hard labels of these implicit foreground predictions, we apply class-agnostic NMS to them using every high-confidence pseudo prediction (i.e., whose confidence score is greater than ) to filter the redundant ones.

We then generate soft-labels for these implicit foreground predictions by measuring their similarities to each class. Formally, given an implicit foreground prediction , we define its similarity to a class as the cosine distance between its RoI feature and the prototype of the class :

| (5) |

Finally, follows a softmax function to generate , which represents the soft-label of the implicit foreground prediction . We minimize the Kullback-Leibler (KL) divergence between the soft-label and the class probability of each implicit foreground prediction :

| (6) |

where is the class probability, denotes foreground classes and one background class. Hence, we set .

To leverage soft-labels of implicit foreground predictions at the early stage during TTL, we initialize the class prototypes with -way -shot data:

| (7) |

where is the RoI feature of the -th instance for class . Because the -way -shot data cannot accurately represent the class prototypes, we propose to dynamically update them using both the supervised -way -shot data and test data with high-confidence pseudo-labels, enabling the class prototypes to converge to the true representations as training progresses. In this case, we update the class prototypes using the following formula:

| (8) |

where is the averaged RoI feature of high-confidence predictions for class during TTL, and denotes the normalized cosine similarity function as:

| (9) |

| Method / Shots | Novel Split 1 | Novel Split 2 | Novel Split 3 | ||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | 2 | 3 | 5 | 10 | 1 | 2 | 3 | 5 | 10 | 1 | 2 | 3 | 5 | 10 | |

| LSTD (Chen et al., 2018) | 8.2 | 1.0 | 12.4 | 29.1 | 38.5 | 11.4 | 3.8 | 5.0 | 15.7 | 31.0 | 12.6 | 8.5 | 15.0 | 27.3 | 36.3 |

| FSRW (Kang et al., 2019) | 14.8 | 15.5 | 26.7 | 33.9 | 47.2 | 15.7 | 15.3 | 22.7 | 30.1 | 40.5 | 21.3 | 25.6 | 28.4 | 42.8 | 45.9 |

| MetaDet (Wang et al., 2019) | 18.9 | 20.6 | 30.2 | 36.8 | 49.6 | 21.8 | 23.1 | 27.8 | 31.7 | 43.0 | 20.6 | 23.9 | 29.4 | 43.9 | 44.1 |

| Meta R-CNN (Yan et al., 2019) | 19.9 | 25.5 | 35.0 | 45.7 | 51.5 | 10.4 | 19.4 | 29.6 | 34.8 | 45.4 | 14.3 | 18.2 | 27.5 | 41.2 | 48.1 |

| TFA w/cos (Wang et al., 2020) | 39.8 | 36.1 | 44.7 | 55.7 | 56.0 | 23.5 | 26.9 | 34.1 | 35.1 | 39.1 | 30.8 | 34.8 | 42.8 | 49.5 | 49.8 |

| MPSR (Wu et al., 2020) | 41.7 | 51.4 | 55.2 | 61.8 | 24.4 | 39.2 | 39.9 | 47.8 | 35.6 | 42.3 | 48.0 | 49.7 | |||

| HallucFsDet (Zhang and Wang, 2021) | 47.0 | 44.9 | 46.5 | 54.7 | 54.7 | 26.3 | 31.8 | 37.4 | 37.4 | 41.2 | 40.4 | 42.1 | 43.3 | 51.4 | 49.6 |

| Retentive R-CNN(Fan et al., 2021) | 42.4 | 45.8 | 45.9 | 53.7 | 56.1 | 21.7 | 27.8 | 35.2 | 37.0 | 40.3 | 30.2 | 37.6 | 43.0 | 49.7 | 50.1 |

| FSCE (Sun et al., 2021) | 44.2 | 43.8 | 51.4 | 61.9 | 63.4 | 27.3 | 29.5 | 43.5 | 44.2 | 50.2 | 37.2 | 41.9 | 47.5 | 54.6 | 58.5 |

| UP-FSOD (Wu et al., 2021) | 43.8 | 47.8 | 50.3 | 55.4 | 61.7 | 31.2 | 30.5 | 41.2 | 42.2 | 48.3 | 35.5 | 39.7 | 43.9 | 50.6 | 53.3 |

| SRR-FSD (Zhu et al., 2021) | 47.8 | 50.5 | 51.3 | 55.2 | 56.8 | 32.5 | 35.3 | 39.1 | 40.8 | 43.8 | 40.1 | 41.5 | 44.3 | 46.9 | 46.4 |

| DCNet (Hu et al., 2021) | 33.9 | 37.4 | 43.7 | 51.1 | 59.6 | 23.2 | 24.8 | 30.6 | 36.7 | 46.6 | 32.3 | 34.9 | 39.7 | 42.6 | 50.7 |

| Meta FRCN (Han et al., 2022a) | 43.0 | 54.5 | 60.6 | 66.1 | 65.4 | 27.7 | 35.5 | 46.1 | 47.8 | 51.4 | 40.6 | 46.4 | 53.4 | 59.9 | 58.6 |

| QA-FewDet (Han et al., 2021) | 42.4 | 51.9 | 55.7 | 62.6 | 63.4 | 25.9 | 37.8 | 46.6 | 48.9 | 51.1 | 35.2 | 42.9 | 47.8 | 54.8 | 53.5 |

| CME (Li et al., 2021) | 41.5 | 47.5 | 50.4 | 58.2 | 60.9 | 27.2 | 30.2 | 41.4 | 42.5 | 46.8 | 34.3 | 39.6 | 45.1 | 48.3 | 51.5 |

| FADI (Cao et al., 2021) | 50.3 | 54.8 | 54.2 | 59.3 | 63.2 | 30.6 | 35.0 | 40.3 | 42.8 | 48.0 | 45.7 | 49.7 | 49.1 | 55.0 | 59.6 |

| LVC‡ (Kaul et al., 2022) | 54.5 | 53.2 | 58.8 | 63.2 | 65.7 | 32.8 | 29.2 | 50.7 | 49.8 | 50.6 | 48.4 | 52.7 | 55.0 | 59.6 | 59.6 |

| DeFRCN* (Qiao et al., 2021) | 55.4 | 62.1 | 65.0 | 68.4 | 67.6 | 35.5 | 45.4 | 51.8 | 51.7 | 47.5 | 50.8 | 57.4 | 57.8 | 62.7 | 65.0 |

| Ours | 58.4 | 65.7 | 67.9 | 69.3 | 68.1 | 38.4 | 47.8 | 52.8 | 53.6 | 49.1 | 53.0 | 58.8 | 59.2 | 63.8 | 64.1 |

| Method | 10-shot | 30-shot | ||

|---|---|---|---|---|

| nAP | nAP75 | nAP | nAP75 | |

| FSRW (Kang et al., 2019) | 5.6 | 4.6 | 9.1 | 7.6 |

| MetaDet (Wang et al., 2019) | 7.1 | 6.1 | 11.3 | 8.1 |

| Meta R-CNN (Yan et al., 2019) | 8.7 | 6.6 | 12.4 | 10.8 |

| TFA w/cos (Wang et al., 2020) | 10.0 | 9.3 | 13.7 | 13.4 |

| MPSR (Wu et al., 2020) | 9.8 | 9.7 | 14.1 | 14.2 |

| Retentive R-CNN (Fan et al., 2021) | 10.5 | 13.8 | ||

| FSCE (Sun et al., 2021) | 11.9 | 10.5 | 16.4 | 16.2 |

| UP-FSOD (Wu et al., 2021) | 11.0 | 10.7 | 15.6 | 15.7 |

| SRR-FSD (Zhu et al., 2021) | 11.3 | 9.8 | 14.7 | 13.5 |

| DCNet (Hu et al., 2021) | 12.8 | 11.2 | 18.6 | 17.5 |

| Meta FRCN (Han et al., 2022a) | 12.7 | 10.8 | 16.6 | 15.8 |

| QA-FewDet (Han et al., 2021) | 11.6 | 9.8 | 16.5 | 15.5 |

| CME (Li et al., 2021) | 15.1 | 16.4 | 16.9 | 17.8 |

| FADI (Cao et al., 2021) | 12.2 | 11.9 | 16.1 | 15.8 |

| LVC‡ (Kaul et al., 2022) | 17.8 | 17.8 | 24.5 | 25.0 |

| DeFRCN* (Qiao et al., 2021) | 17.1 | 15.9 | 20.2 | 19.5 |

| Ours | 17.3 | 16.7 | 20.9 | 21.3 |

3.5. Training Procedure

During TTL, the total loss to optimize is as follows:

| (10) |

consisting of the supervised loss of -way -shot data, the unsupervised loss of pseudo-labels on test data, and the KL loss of soft-labels on test data. Here, and are hyper-parameters to balance such losses.

During this procedure, few-shot detectors are able to learn from test data. When a mini-batch of test samples arrive, we update the weights of the model through the total loss . A detailed description is provided in Algorithm 1.

4. Experiments

4.1. Datasets

PASCAL VOC. For PASCAL VOC (Everingham et al., 2015), the overall 20 classes are divided into 15 base classes and 5 novel classes. Following TFA (Wang et al., 2020), we utilize three different class splits, namely split 1, 2, and 3. For each split, base classes are exhaustively annotated, but novel classes only have annotated instances per class. Both base and novel instances are sampled from the PASCAL VOC (07+12) trainval set, and the model is tested on the PASCAL VOC07 test set. We report AP50 for novel classes during evaluation.

MS COCO. MS COCO (Lin et al., 2014) has 80 classes, and we select the 20 classes that overlapped with PASCAL VOC as novel classes and the remaining 60 classes as base classes. In this case, we evaluate our method with shots for each novel class. We report mAP and AP75, respectively.

4.2. Implementation Details

Our method can be combined with the majority of fine-tuning based few-shot object detectors. For simplicity, we choose the most representative SOTA method, i.e. DeFRCN (Qiao et al., 2021), as our baseline. DeFRCN adopts Faster-RCNN (Ren et al., 2016) as the detection model and uses the ResNet-101 backbone pre-trained on ImageNet. We use DeFRCN, which has been pre-trained on base classes and fine-tuned on novel classes, to initialize our model, and then fine-tune it on test data. During TTL, we fine-tune our model with a mini-batch of 2 on a single GPU, which simulates the real inference process of the few-shot detector. Besides, we follow a one-epoch setting, where we fine-tune on test data for only one epoch. We also utilize the -way -shot data for novel fine-tuning during the testing process. Due to the unsatisfactory performance of the few-shot detector, we apply weak data augmentation to both the -way -shot data and the test data, including random resizing and random horizontal flipping. For the hyper-parameters, we set and for all the experiments. We set the thresholds and . We optimize the network using Stochastic Gradient Descent (SGD) and set the learning rate to 0.00125. The momentum coefficient of EMA for the teacher network is set to 0.9996.

| nAP50 | |||||

|---|---|---|---|---|---|

| 1-shot | 2-shot | 3-shot | |||

| 55.4 | 62.1 | 65.0 | |||

| 54.3 | 61.5 | 63.2 | |||

| 56.1 | 63.4 | 65.7 | |||

| 57.0 | 63.8 | 65.4 | |||

| 58.4 | 65.7 | 67.9 | |||

| nAP50 | ||||

| 1-shot | 2-shot | 3-shot | ||

| 55.4 | 62.1 | 65.0 | ||

| 0.95 | 55.5 | 61.6 | 64.2 | |

| 0.90 | 57.0 | 63.8 | 65.4 | |

| 0.85 | 56.8 | 63.4 | 65.6 | |

| 0.90 | 0.8 | 57.1 | 65.2 | 67.4 |

| 0.90 | 0.7 | 58.4 | 65.7 | 67.9 |

| 0.90 | 0.6 | 57.5 | 65.2 | 67.5 |

4.3. Main Results

PASCAL VOC. Experimental results on the PASCAL VOC dataset are shown in Table 1. We use DeFRCN as our baseline, which incorporates an additional Prototypical Calibration Block (PCB) to refine predictions. However, we find that the -way -shot data utilized by PCB may not align with that used at the novel fine-tuning stage. Therefore, we exclude PCB and present our re-implementation results DeFRCN* in Tables 1 and 2. It can be observed that our method achieves decent improvements across various splits and different shots on the PASCAL VOC benchmark. Our method outperforms HallucFsDet (Zhang and Wang, 2021) and LVC (Kaul et al., 2022), which represent synthetic novel class data and semi-supervised learning on base data, respectively. Meanwhile, we find that the performance gain obtained from TTL becomes more significant as the shot number decreases, especially in the 1-shot scenario.

MS COCO. Table 2 shows the detection results on MS COCO. The MS COCO dataset conveys more categories, and typically, a single image contains multiple instances. few-shot detectors generally do not perform well on MS COCO due to these factors, which also undermine the performance of our method. However, we observe that our method still achieves a significant improvement compared to the baseline, especially in the mAP75 metric. There is a 5.0% improvement in AP75 at 10 shots and a 9.2% improvement in AP75 at 30 shots. LVC (Kaul et al., 2022) demonstrates a noticeable improvement on the MS COCO dataset, because the base data in the MS COCO benchmark include a large number of implicit novel instances. However, an issue arises from its setting of few-shot detection, which does not match the real-world scenario. In contrast, under the TTL setting, we only have 5,000 images available for mining implicit novel instances.

| Update Methods | nAP50 | ||

|---|---|---|---|

| 1-shot | 2-shot | 3-shot | |

| Static | 57.5 | 65.1 | 67.7 |

| Dynamic | 58.4 | 65.7 | 67.9 |

| Student Aug. | Teacher Aug. | nAP50 | ||

|---|---|---|---|---|

| 1-shot | 2-shot | 3-shot | ||

| Strong | Weak | 56.9 | 64.9 | 66.6 |

| Weak | Weak | 58.4 | 65.7 | 67.9 |

4.4. Ablation Studies

Ablation experiments are carried out on novel split 1 of the PASCAL VOC benchmark to highlight the necessity of the main components of the proposed method.

4.4.1. Effectiveness of each component.

We conduct a detailed ablation study on each component of our method, as shown in Table 3. The first row presents our baseline, i.e. DeFRCN. Initially, we attempt to solely utilize -way -shot data for supervised learning during testing but find that the model tends to overfit to these data, resulting in decreased performance. In the third row, we only fine-tune the model using high-quality pseudo-labels at the test phase, yielding the results superior to those of the baseline model. To further enhance the performance in low-sample scenarios, we combine -way -shot data with pseudo-labels for fine-tuning. It is observed that except for the 3-shot setting, the model achieves extra gains in other cases, suggesting that it effectively prevents the accumulation of biases. Finally, by introducing , i.e., employing the PS strategy in testing, the model significantly improves its performance across various sample sizes. This also indicates that our proposed method utilizes pseudo-labels in a more efficient way.

4.4.2. Upper and lower threshold setting.

Threshold selection is crucial in pseudo-labeling methods, and we thus conduct ablation experiments on the thresholds, as shown in Table 4. Firstly, we use a large threshold to filter high-quality pseudo-labels as hard labels for training the student network. To determine the appropriate value of , we perform standard self-training on test data without using soft-labels. From Table 4, it can be observed that a larger threshold may incur the problem that only a few pseudo-labels are available as hard labels. This often leads to many foreground objects being mistakenly classified as background, degrading the detection performance of the model. Conversely, a smaller threshold tends to introduce excessive noisy labels, which also negatively affects the performance. By comparing the results from rows 1 to 4, we set . Next, we conduct experiments on the threshold , where prediction boxes with confidence scores between and are considered as implicit foreground predictions and assigned soft-labels. Similarly, setting too high may result in only a few implicit foreground predictions available as soft-labels, while setting it too low is prone to suffer many false positives, mistaking background as implicit foreground. From Table 4, we set , which helps the model efficiently utilize implicit foreground predictions, especially in extremely low-shot scenarios (i.e., shot=1). Additionally, we notice that when the few-shot detector performs well, it is not sensitive to . The reason probably lies in that implicit foreground predictions are correctly assigned higher confidence scores, and background is given lower confidence scores.

4.4.3. Class prototype update.

As mentioned earlier, we utilize the feature similarity between low-confidence pseudo-labels and class prototypes to generate soft-labels, where well-defined class prototypes can produce more accurate soft-labels for implicit foreground predictions. However, since we initialize class prototypes using -way -shot data, the features of objects in each class often change during TTL, and static prototypes cannot accurately represent their respective classes. We thus propose to dynamically update class prototypes using high-confidence pseudo-labels, aiming to gradually converge the prototypes to their true class distributions during TTL. In Table B, we compare the results of static class prototypes and dynamic ones across multiple samplings, with the latter showing consistent improvements. We observe that the improvement by dynamically updating class prototypes becomes more pronounced as the number of samples decreases.

4.4.4. Alternative data augmentation.

We also validate the data augmentation techniques used for both the student and teacher networks. Generally, in semi-supervised object detection, weak augmentation is applied to input images for the teacher network, while strong augmentation is used for the student network (please refer to (Liu et al., 2021) for more details). However, in our case, we find that even with weak data augmentation for the student network, its performance improves. As shown in Table 6, consistently using weak-weak data augmentation enhances the performance across all the settings. This is because, during TTL, we can only fine-tune on test data for one epoch. Additionally, in the presence of data scarcity, strong data augmentation tends to disrupt the original data distribution, impeding model convergence.

4.4.5. Qualitative evaluation.

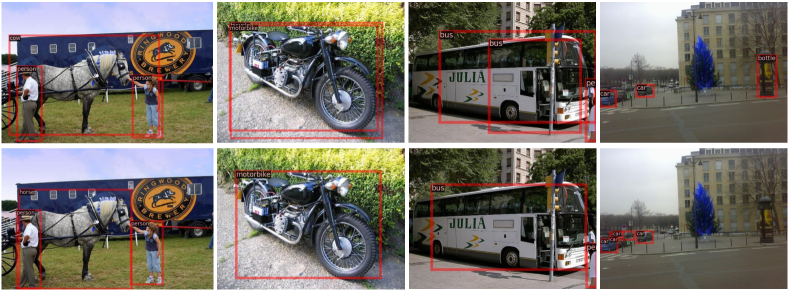

We visualize the detection results of 1-shot of PASCAL VOC in Fig. 4. Our method significantly alleviates the problem of classification confusion between base classes and novel classes. In the first column, DeFRCN misclassifies a base class (horse) as a novel class (cow), and in the second column, it misclassifies a novel class (motorcycle) as a base class (bicycle). Our method addresses this issue through fine-tuning at test-time. In the third column, DeFRCN predicts multiple local regions of a bus (novel class) as the bus category. Although we do not specifically design any loss for regression, the improvement in classification performance does help the model alleviate this issue. Additionally, our method improves the performance of base classes. For example, in column 4 of Fig. 4, DeFRCN incorrectly identifies a newsstand as a bottle and incompetently misses dense cars, both of which are refined by our method.

5. Conclusion

This paper proposes a novel framework for few-shot object detection, namely Prototype-based Soft-labels and Test-Time Learning (PS-TTL). It aims to address the challenge of precisely capturing the real data distribution of novel classes with scarce labeled samples. To this end, we propose a Test-Time Learning (TTL) module to discover novel instances from test data, allowing detectors to learn better representations and classifiers for novel classes. Furthermore, we design a Prototype-based Soft-labels (PS) strategy to unleash the potential of low-quality pseudo-labels, thereby significantly mitigating the constraints posed by few-shot samples. Extensive experiments are conducted on PASCAL VOC and MS COCO, and PS-TTL achieves the state-of-the-art performance, validating its effectiveness.

Acknowledgements.

This work is partly supported by the National Natural Science Foundation of China (No. 62022011), the Research Program of State Key Laboratory of Complex and Critical Software Environment, and the Fundamental Research Funds for the Central Universities.References

- (1)

- Cao et al. (2021) Yuhang Cao, Jiaqi Wang, Ying Jin, Tong Wu, Kai Chen, Ziwei Liu, and Dahua Lin. 2021. Few-shot object detection via association and discrimination. In Advances in Neural Information Processing Systems, Vol. 34. 16570–16581.

- Cao et al. (2022) Yuhang Cao, Jiaqi Wang, Yiqi Lin, and Dahua Lin. 2022. Mini: Mining implicit novel instances for few-shot object detection. arXiv preprint arXiv:2205.03381 (2022).

- Chen et al. (2018) Hao Chen, Yali Wang, Guoyou Wang, and Yu Qiao. 2018. Lstd: A low-shot transfer detector for object detection. In Proceedings of the AAAI Conference on Artificial Intelligence, Vol. 32.

- Demirel et al. (2023) Berkan Demirel, Orhun Buğra Baran, and Ramazan Gokberk Cinbis. 2023. Meta-tuning loss functions and data augmentation for few-shot object detection. In IEEE/CVF Conference on Computer Vision and Pattern Recognition. 7339–7349.

- Everingham et al. (2015) Mark Everingham, SM Ali Eslami, Luc Van Gool, Christopher KI Williams, John Winn, and Andrew Zisserman. 2015. The pascal visual object classes challenge: A retrospective. International Journal of Computer Vision 111 (2015), 98–136.

- Fan et al. (2022) Qi Fan, Chi-Keung Tang, and Yu-Wing Tai. 2022. Few-shot object detection with model calibration. In Proceedings of the European Conference on Computer Vision. Springer, 720–739.

- Fan et al. (2020) Qi Fan, Wei Zhuo, Chi-Keung Tang, and Yu-Wing Tai. 2020. Few-shot object detection with attention-RPN and multi-relation detector. In IEEE/CVF Conference on Computer Vision and Pattern Recognition. 4013–4022.

- Fan et al. (2021) Zhibo Fan, Yuchen Ma, Zeming Li, and Jian Sun. 2021. Generalized few-shot object detection without forgetting. In IEEE/CVF Conference on Computer Vision and Pattern Recognition. 4527–4536.

- Han et al. (2021) Guangxing Han, Yicheng He, Shiyuan Huang, Jiawei Ma, and Shih-Fu Chang. 2021. Query adaptive few-shot object detection with heterogeneous graph convolutional networks. In IEEE/CVF International Conference on Computer Vision. 3263–3272.

- Han et al. (2022a) Guangxing Han, Shiyuan Huang, Jiawei Ma, Yicheng He, and Shih-Fu Chang. 2022a. Meta faster r-cnn: Towards accurate few-shot object detection with attentive feature alignment. In Proceedings of the AAAI Conference on Artificial Intelligence, Vol. 36. 780–789.

- Han et al. (2022b) Guangxing Han, Jiawei Ma, Shiyuan Huang, Long Chen, and Shih-Fu Chang. 2022b. Few-shot object detection with fully cross-transformer. In IEEE/CVF Conference on Computer Vision and Pattern Recognition. 5321–5330.

- Han et al. (2023) Jiaming Han, Yuqiang Ren, Jian Ding, Ke Yan, and Gui-Song Xia. 2023. Few-shot object detection via variational feature aggregation. In Proceedings of the AAAI Conference on Artificial Intelligence, Vol. 37. 755–763.

- Hu et al. (2021) Hanzhe Hu, Shuai Bai, Aoxue Li, Jinshi Cui, and Liwei Wang. 2021. Dense relation distillation with context-aware aggregation for few-shot object detection. In IEEE/CVF Conference on Computer Vision and Pattern Recognition. 10185–10194.

- Kang et al. (2019) Bingyi Kang, Zhuang Liu, Xin Wang, Fisher Yu, Jiashi Feng, and Trevor Darrell. 2019. Few-shot object detection via feature reweighting. In IEEE/CVF International Conference on Computer Vision. 8420–8429.

- Karlinsky et al. (2019) Leonid Karlinsky, Joseph Shtok, Sivan Harary, Eli Schwartz, Amit Aides, Rogerio Feris, Raja Giryes, and Alex M Bronstein. 2019. Repmet: Representative-based metric learning for classification and few-shot object detection. In IEEE/CVF Conference on Computer Vision and Pattern Recognition. 5197–5206.

- Kaul et al. (2022) Prannay Kaul, Weidi Xie, and Andrew Zisserman. 2022. Label, verify, correct: A simple few shot object detection method. In IEEE/CVF Conference on Computer Vision and Pattern Recognition. 14237–14247.

- Kim et al. (2021) Geonuk Kim, Hong-Gyu Jung, and Seong-Whan Lee. 2021. Spatial reasoning for few-shot object detection. Pattern Recognition 120 (2021), 108118.

- Lee (2013) Dong-Hyun Lee. 2013. Pseudo-label: The simple and efficient semi-supervised learning method for deep neural networks. In Proceedings of the International Conference on Machine Learning Workshop.

- Li and Li (2021) Aoxue Li and Zhenguo Li. 2021. Transformation invariant few-shot object detection. In IEEE/CVF Conference on Computer Vision and Pattern Recognition. 3094–3102.

- Li et al. (2021) Bohao Li, Boyu Yang, Chang Liu, Feng Liu, Rongrong Ji, and Qixiang Ye. 2021. Beyond max-margin: Class margin equilibrium for few-shot object detection. In IEEE/CVF Conference on Computer Vision and Pattern Recognition. 7363–7372.

- Li et al. (2019) Zhuoling Li, Minghui Dong, Shiping Wen, Xiang Hu, Pan Zhou, and Zhigang Zeng. 2019. CLU-CNNs: Object detection for medical images. Neurocomputing 350 (2019), 53–59.

- Lin et al. (2017) Tsung-Yi Lin, Priya Goyal, Ross Girshick, Kaiming He, and Piotr Dollár. 2017. Focal loss for dense object detection. In IEEE/CVF International Conference on Computer Vision. 2980–2988.

- Lin et al. (2014) Tsung-Yi Lin, Michael Maire, Serge Belongie, James Hays, Pietro Perona, Deva Ramanan, Piotr Dollár, and C Lawrence Zitnick. 2014. Microsoft coco: Common objects in context. In Proceedings of the European Conference on Computer Vision. Springer, 740–755.

- Liu et al. (2023) Nanqing Liu, Xun Xu, Turgay Celik, Zongxin Gan, and Heng-Chao Li. 2023. Transformation-invariant network for few-shot object detection in remote-sensing images. IEEE Transactions on Geoscience and Remote Sensing 61 (2023), 1–14.

- Liu et al. (2016) Wei Liu, Dragomir Anguelov, Dumitru Erhan, Christian Szegedy, Scott Reed, Cheng-Yang Fu, and Alexander C Berg. 2016. Ssd: Single shot multibox detector. In Proceedings of the European Conference on Computer Vision. Springer, 21–37.

- Liu et al. (2019) Yang Liu, Zhuo Ma, Ximeng Liu, Siqi Ma, and Kui Ren. 2019. Privacy-preserving object detection for medical images with faster R-CNN. IEEE Transactions on Information Forensics and Security 17 (2019), 69–84.

- Liu et al. (2021) Yen-Cheng Liu, Chih-Yao Ma, Zijian He, Chia-Wen Kuo, Kan Chen, Peizhao Zhang, Bichen Wu, Zsolt Kira, and Peter Vajda. 2021. Unbiased Teacher for Semi-Supervised Object Detection. In Proceedings of the International Conference on Learning Representations.

- Lu et al. (2023) Xiaonan Lu, Wenhui Diao, Yongqiang Mao, Junxi Li, Peijin Wang, Xian Sun, and Kun Fu. 2023. Breaking immutable: Information-coupled prototype elaboration for few-shot object detection. In Proceedings of the AAAI Conference on Artificial Intelligence, Vol. 37. 1844–1852.

- Lu et al. (2022) Yue Lu, Xingyu Chen, Zhengxing Wu, and Junzhi Yu. 2022. Decoupled metric network for single-stage few-shot object detection. IEEE Transactions on Cybernetics 53, 1 (2022), 514–525.

- Ma and Huang (2023) Haoxiang Ma and Di Huang. 2023. Towards scale balanced 6-dof grasp detection in cluttered scenes. In Conference on Robot Learning. PMLR, 2004–2013.

- Ma et al. (2022) Jiawei Ma, Guangxing Han, Shiyuan Huang, Yuncong Yang, and Shih-Fu Chang. 2022. Few-shot end-to-end object detection via constantly concentrated encoding across heads. In Proceedings of the European Conference on Computer Vision. Springer, 57–73.

- Nguyen et al. (2022) Thanh Nguyen, Chau Pham, Khoi Nguyen, and Minh Hoai. 2022. Few-shot object counting and detection. In Proceedings of the European Conference on Computer Vision. Springer, 348–365.

- Oquab et al. (2023) Maxime Oquab, Timothée Darcet, Théo Moutakanni, Huy Vo, Marc Szafraniec, Vasil Khalidov, Pierre Fernandez, Daniel Haziza, Francisco Massa, Alaaeldin El-Nouby, et al. 2023. Dinov2: Learning robust visual features without supervision. arXiv preprint arXiv:2304.07193 (2023).

- Pei et al. (2022) Wenjie Pei, Shuang Wu, Dianwen Mei, Fanglin Chen, Jiandong Tian, and Guangming Lu. 2022. Few-shot object detection by knowledge distillation using bag-of-visual-words representations. In Proceedings of the European Conference on Computer Vision. Springer, 283–299.

- Pu et al. (2024) Yifan Pu, Weicong Liang, Yiduo Hao, Yuhui Yuan, Yukang Yang, Chao Zhang, Han Hu, and Gao Huang. 2024. Rank-DETR for high quality object detection. In Advances in Neural Information Processing Systems, Vol. 36.

- Qiao et al. (2021) Limeng Qiao, Yuxuan Zhao, Zhiyuan Li, Xi Qiu, Jianan Wu, and Chi Zhang. 2021. Defrcn: Decoupled faster r-cnn for few-shot object detection. In IEEE/CVF International Conference on Computer Vision. 8681–8690.

- Qin et al. (2023) Ran Qin, Haoxiang Ma, Boyang Gao, and Di Huang. 2023. RGB-D grasp detection via depth guided learning with cross-modal attention. In IEEE International Conference on Robotics and Automation. IEEE, 8003–8009.

- Redmon et al. (2016) Joseph Redmon, Santosh Divvala, Ross Girshick, and Ali Farhadi. 2016. You only look once: Unified, real-time object detection. In IEEE/CVF Conference on Computer Vision and Pattern Recognition. 779–788.

- Ren et al. (2016) Shaoqing Ren, Kaiming He, Ross Girshick, and Jian Sun. 2016. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Transactions on Pattern Analysis and Machine Intelligence 39, 6 (2016), 1137–1149.

- Sun et al. (2021) Bo Sun, Banghuai Li, Shengcai Cai, Ye Yuan, and Chi Zhang. 2021. Fsce: Few-shot object detection via contrastive proposal encoding. In IEEE/CVF Conference on Computer Vision and Pattern Recognition. 7352–7362.

- Tang et al. (2023) Yingbo Tang, Zhiqiang Cao, Yuequan Yang, Jierui Liu, and Junzhi Yu. 2023. Semi-supervised few-shot object detection via adaptive pseudo labeling. IEEE Transactions on Circuits and Systems for Video Technology (2023).

- Tarvainen and Valpola (2017) Antti Tarvainen and Harri Valpola. 2017. Mean teachers are better role models: Weight-averaged consistency targets improve semi-supervised deep learning results. In Advances in Neural Information Processing Systems, Vol. 30.

- Tian et al. (2020) Zhi Tian, Chunhua Shen, Hao Chen, and Tong He. 2020. FCOS: A simple and strong anchor-free object detector. IEEE Transactions on Pattern Analysis and Machine Intelligence 44, 4 (2020), 1922–1933.

- Wang et al. (2023) Chien-Yao Wang, Alexey Bochkovskiy, and Hong-Yuan Mark Liao. 2023. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. In IEEE/CVF Conference on Computer Vision and Pattern Recognition. 7464–7475.

- Wang et al. (2020) Xin Wang, Thomas E Huang, Trevor Darrell, Joseph E Gonzalez, and Fisher Yu. 2020. Frustratingly simple few-shot object detection. In Proceedings of the International Conference on Machine Learning. 9919–9928.

- Wang et al. (2019) Yu-Xiong Wang, Deva Ramanan, and Martial Hebert. 2019. Meta-learning to detect rare objects. In IEEE/CVF International Conference on Computer Vision. 9925–9934.

- Wu et al. (2021) Aming Wu, Yahong Han, Linchao Zhu, and Yi Yang. 2021. Universal-prototype enhancing for few-shot object detection. In IEEE/CVF International Conference on Computer Vision. 9567–9576.

- Wu et al. (2020) Jiaxi Wu, Songtao Liu, Di Huang, and Yunhong Wang. 2020. Multi-scale positive sample refinement for few-shot object detection. In Proceedings of the European Conference on Computer Vision. Springer, 456–472.

- Wu et al. (2022) Shuang Wu, Wenjie Pei, Dianwen Mei, Fanglin Chen, Jiandong Tian, and Guangming Lu. 2022. Multi-faceted distillation of base-novel commonality for few-shot object detection. In Proceedings of the European Conference on Computer Vision. Springer, 578–594.

- Yan et al. (2019) Xiaopeng Yan, Ziliang Chen, Anni Xu, Xiaoxi Wang, Xiaodan Liang, and Liang Lin. 2019. Meta r-cnn: Towards general solver for instance-level low-shot learning. In IEEE/CVF International Conference on Computer Vision. 9577–9586.

- Yang et al. (2020) Yukuan Yang, Fangyun Wei, Miaojing Shi, and Guoqi Li. 2020. Restoring negative information in few-shot object detection. In Advances in Neural Information Processing Systems, Vol. 33. 3521–3532.

- Yang et al. (2022) Ze Yang, Chi Zhang, Ruibo Li, Yi Xu, and Guosheng Lin. 2022. Efficient few-shot object detection via knowledge inheritance. IEEE Transactions on Image Processing 32 (2022), 321–334.

- Yin et al. (2022) Li Yin, Juan M Perez-Rua, and Kevin J Liang. 2022. Sylph: A hypernetwork framework for incremental few-shot object detection. In IEEE/CVF Conference on Computer Vision and Pattern Recognition. 9035–9045.

- Zhang et al. (2022b) Gongjie Zhang, Zhipeng Luo, Kaiwen Cui, Shijian Lu, and Eric P Xing. 2022b. Meta-detr: Image-level few-shot detection with inter-class correlation exploitation. IEEE Transactions on Pattern Analysis and Machine Intelligence 45, 11 (2022), 12832–12843.

- Zhang et al. (2023a) Hao Zhang, Feng Li, Shilong Liu, Lei Zhang, Hang Su, Jun Zhu, Lionel Ni, and Heung-Yeung Shum. 2023a. DINO: DETR with Improved DeNoising Anchor Boxes for End-to-End Object Detection. In Proceedings of the International Conference on Learning Representations.

- Zhang et al. (2023b) Jinqing Zhang, Yanan Zhang, Qingjie Liu, and Yunhong Wang. 2023b. SA-BEV: Generating Semantic-Aware Bird’s-Eye-View Feature for Multi-view 3D Object Detection. In IEEE/CVF International Conference on Computer Vision. 3348–3357.

- Zhang et al. (2021b) Lu Zhang, Shuigeng Zhou, Jihong Guan, and Ji Zhang. 2021b. Accurate few-shot object detection with support-query mutual guidance and hybrid loss. In IEEE/CVF Conference on Computer Vision and Pattern Recognition. 14424–14432.

- Zhang et al. (2020a) Shifeng Zhang, Cheng Chi, Yongqiang Yao, Zhen Lei, and Stan Z Li. 2020a. Bridging the gap between anchor-based and anchor-free detection via adaptive training sample selection. In IEEE/CVF Conference on Computer Vision and Pattern Recognition. 9759–9768.

- Zhang and Wang (2021) Weilin Zhang and Yu-Xiong Wang. 2021. Hallucination improves few-shot object detection. In IEEE/CVF Conference on Computer Vision and Pattern Recognition. 13008–13017.

- Zhang et al. (2020b) Weilin Zhang, Yu-Xiong Wang, and David A Forsyth. 2020b. Cooperating RPN’s improve few-shot object detection. arXiv preprint arXiv:2011.10142 (2020).

- Zhang et al. (2024) Xinyu Zhang, Yuting Wang, and Abdeslam Boularias. 2024. Detect Everything with Few Examples. arXiv preprint arXiv:2309.12969 (2024).

- Zhang et al. (2022a) Yanan Zhang, Jiaxin Chen, and Di Huang. 2022a. Cat-det: Contrastively augmented transformer for multi-modal 3d object detection. In IEEE/CVF Conference on Computer Vision and Pattern Recognition. 908–917.

- Zhang et al. (2021a) Yanan Zhang, Di Huang, and Yunhong Wang. 2021a. PC-RGNN: Point cloud completion and graph neural network for 3D object detection. In Proceedings of the AAAI Conference on Artificial Intelligence, Vol. 35. 3430–3437.

- Zhao et al. (2022) Zhiyuan Zhao, Qingjie Liu, and Yunhong Wang. 2022. Exploring effective knowledge transfer for few-shot object detection. In Proceedings of the ACM International Conference on Multimedia. 6831–6839.

- Zhou et al. (2023) Chao Zhou, Yanan Zhang, Jiaxin Chen, and Di Huang. 2023. Octr: Octree-based transformer for 3d object detection. In IEEE/CVF Conference on Computer Vision and Pattern Recognition. 5166–5175.

- Zhu et al. (2021) Chenchen Zhu, Fangyi Chen, Uzair Ahmed, Zhiqiang Shen, and Marios Savvides. 2021. Semantic relation reasoning for shot-stable few-shot object detection. In IEEE/CVF Conference on Computer Vision and Pattern Recognition. 8782–8791.

Supplementary Material

This supplementary material provides more experiment results on PS-TTL in Sec. A and more visualization results in Sec. B.

Appendix A More Experiment Results

A.1. One Batch vs. One Epoch

We implement test-time learning by fine-tuning on test data for one epoch, followed by testing on the same test data. Hence, we require a specific storage capacity to accommodate test data. In a more realistic scenario, we need to conduct test-time learning on sequentially streamed test data. When a batch of testing samples arrives, we first use the detector to make predictions. And then, the detector’s weights are updated on this batch of testing samples. We compared two testing strategies, One Batch and One Epoch, on novel split 1 of the PASCAL VOC benchmark, as shown in Table A. By comparing row 1 and row 2, we found that under the One Batch testing strategy, test-time learning can still bring stable improvements to the baseline. Although the performance of the One Batch strategy is generally inferior to that of the One Epoch strategy, we believe this is due to the detector not learning enough knowledge from test data at the early stage during testing.

A.2. The Performance Trend of Test-Time Learning

As shown in Fig. A, we plot the performance trend of the detector under different shot settings on novel split 1 of the PASCAL VOC dataset as training iterations progress. As expected, with the increase of training iterations, the performance of the detector improves progressively. It demonstrates the effectiveness of test-time learning. Through test-time learning, we endow the detector with the continuously learning ability. By learning on test data, the detector can better utilize novel instances in test data to capture the data distribution of novel classes.

A.3. More Baseline Detectors

A.4. Comparison with Recent SOTA Methods

DE-ViT(Zhang et al., 2024) is new SOTA few-shot detector integrating DINOv2(Oquab et al., 2023) ViT into the framework. It is unfair to directly compare DE-ViT with our method, as DE-ViT takes DINOv2 as the backbone, which means that the few-shot novel classes used for testing may have been seen during DINOv2 pre-training. However, as shown in Tab. B, our method with MFDC outperforms DE-ViT in extremely low-shot scenarios (i.e., shot=1/2/3), and reports satisfactory performance at 5/10 shots.

| Test Strategy | nAP50 | ||

|---|---|---|---|

| 1-shot | 2-shot | 3-shot | |

| DeFRCN* | 55.4 | 62.1 | 65.0 |

| One Batch | 56.4 | 64.0 | 66.4 |

| One Epoch | 58.4 | 65.7 | 67.9 |

| Method / Shots | Novel Split 1 | ||||

|---|---|---|---|---|---|

| 1-shot | 2-shot | 3-shot | 5-shot | 10-shot | |

| DE-ViT(ViT-S/14) | 47.5 | 64.5 | 57.0 | 68.5 | 67.3 |

| DE-ViT(ViT-B/14) | 56.9 | 61.8 | 68.0 | 73.9 | 72.8 |

| DE-ViT(ViT-L/14) | 55.4 | 56.1 | 68.1 | 70.9 | 71.9 |

| DeFRCN* | 55.4 | 62.1 | 65.0 | 68.4 | 67.6 |

| DeFRCN*+PS-TTL | 58.4 | 65.7 | 67.9 | 69.3 | 68.1 |

| MFDC* | 62.4 | 67.5 | 68.9 | 71.0 | 71.4 |

| MFDC*+PS-TTL | 63.1 | 69.0 | 69.1 | 71.3 | 72.4 |

| Method | 1-shot | 2-shot | 3-shot | 5-shot | ||||

|---|---|---|---|---|---|---|---|---|

| nAP | nAP75 | nAP | nAP75 | nAP | nAP75 | nAP | nAP75 | |

| TFA w/cos (Wang et al., 2020) | 3.4 | 3.8 | 4.6 | 4.8 | 6.6 | 6.5 | 8.3 | 8.0 |

| MPSR (Wu et al., 2020) | 2.3 | 2.3 | 3.5 | 3.4 | 5.2 | 5.1 | 6.7 | 6.4 |

| QA-FewDet (Han et al., 2021) | 4.9 | 4.4 | 7.6 | 6.2 | 8.4 | 7.3 | 9.7 | 8.6 |

| FADI (Cao et al., 2021) | 5.7 | 6.0 | 7.0 | 7.0 | 8.6 | 8.3 | 10.1 | 9.7 |

| DeFRCN* (Qiao et al., 2021) | 5.5 | 5.7 | 9.8 | 9.9 | 12.3 | 12.6 | 14.2 | 13.7 |

| Ours | 6.0 | 6.5 | 10.1 | 10.3 | 12.5 | 12.5 | 14.4 | 13.8 |

A.5. Results on MS COCO Under Low-shot Settings

Table C shows detection results on MS COCO under low-shot settings. The MS COCO dataset contains 80 categories, with each image typically containing multiple instances. This leads to a degradation in the performance of few-shot detectors on test data, especially in low-shot settings, which hinders the ability to conduct test-time learning. However, our method consistently improves across various low-shot settings, especially in extremely low-sample scenarios, demonstrating notable enhancements. For example, in the 1-shot scenario, our method improved the mAP on novel classes by 9% compared to DeFRCN.

Appendix B More Visualization Results

We visualize more detection results of 1-shot on PASCAL VOC in Fig. B. Our method can alleviate the misclassification issue between base and novel classes, as shown in the top group of Fig. B. DeFRCN misclassifies base class dogs and horses as novel class cows, novel class motorbikes as base class bottles, and novel class buses as base class trains. Although our method is not optimized for the regression branch, as more novel class instances are observed, our method can improve the regression performance of novel classes, as shown in the first two columns of the bottom group in Fig. B. Furthermore, in the construction of base data for FSOD, there are numerous unlabeled novel instances in base data. This may result in some novel instances being misclassified as background. Our method continuously learns on test data, which helps mitigate this issue. As shown in the column 3 and column 4 of the bottom group in Fig. B, our method can prevent the omission of novel classes, such as cows and birds.