Theme Article: Data Economy and Data Marketplaces

Protecting Data Buyer Privacy in Data Markets

Abstract

Data markets serve as crucial platforms facilitating data discovery, exchange, sharing, and integration among data users and providers. However, the paramount concern of privacy has predominantly centered on protecting privacy of data owners and third parties, neglecting the challenges associated with protecting the privacy of data buyers. In this article, we address this gap by modeling the intricacies of data buyer privacy protection and investigating the delicate balance between privacy and purchase cost. Through comprehensive experimentation, 111Our code is available at https://github.com/minxingzhang0107/Protecting-Privacy-of-Data-Buyers-in-Data-Markets. our results yield valuable insights, shedding light on the efficacy and efficiency of our proposed approaches. 222The short version of this paper is accepted in IEEE Internet Computing. DOI: 10.1109/MIC.2024.3398626.

Data markets, buyer, privacy preservation.

Large scale data driven applications rely on more and more data from many different sources. The power of big data, data science, and AI comes from rich data sources, particularly secondary uses of data. Data markets are online platforms that connect data supplies and demands and enable data discovery, exchange, sharing, and integration [1, 2]. Recently, data markets have attracted a lot of interest from both industry and academia.

Privacy holds significant importance in data markets. Broadly defined, it refers to an individual’s or a group’s capacity to conceal themselves or information about themselves, preventing identification or unwanted approaches by others. In data markets, whether privacy should be treated as goods and how privacy is priced are investigated [3, 4].

Preserving privacy in data markets is of utmost importance. Transactions within data markets have the potential to reveal the privacy of various parties through multiple avenues. Data providers may face privacy risks. For instance, hospitals possess valuable medical treatment information that could be sought after by medical equipment companies. If hospitals appropriately collect and anonymize medical treatment data, offering it in data markets while ensuring individual patient identities remain protected, buyers may still glean information, such as the success rates of specific diseases in a hospital, thereby compromising the privacy of the hospital. Moreover, transactions in data markets may expose the privacy of third parties involved. For instance, an AI technology company providing machine learning model building services to data product buyers could face the risk of model theft [5], which constitutes a breach of the company’s privacy. To address these privacy concerns in data markets, various approaches are under exploration. Strategies include creating decentralized and trustworthy privacy-preserving data markets [6, 7], investigating tradeoffs between payments and accuracy when privacy is a factor [8], and aggregating non-verifiable information from privacy-sensitive populations [9]. Numerous studies delve into privacy preservation in data markets, and interested readers are encouraged to explore comprehensive surveys [10, 11, 12, 13, 14, 15, 16] and other sources for further insights.

While most of the existing studies on privacy preservation in data markets focus on protecting data sellers’ and third parties’ privacy, data buyers’ privacy is often overlooked. Indeed, in data markets, details such as data buyers’ identities, purchase locations and times, products purchased, prices, and quantities can inadvertently expose their privacy. Incidents of e-commerce providers accidentally leaking customer information have been reported again and again, highlighting the urgency of safeguarding buyer privacy.

To the best of our knowledge, there is very limited existing literature that specifically tackles the challenges associated with preserving privacy for buyers in data markets. While Aiello et al. [17] tackles the anonymity of buyers in electronic product transactions and introduced an approach aiming at concealing buyer identities during transactions, it is essential to note that, in data markets, the challenges of privacy protection for data buyers remain largely unexplored.

This article addresses the intricate challenge of protecting the privacy of data buyers within a practical and compelling scenario in data markets. In this context, a data buyer publicly declares their purchase intent, specifying the range of the desired data records. This announcement serves to alert potential data owners, encouraging them to provide the relevant data. Simultaneously, the specific details of the purchase intent become the buyer’s privacy, requiring protection.

For instance, consider a company planning a financial service targeting customers aged 40-60 with an annual household income of 150-300k, interested in balanced mid-to-long term investment for future retirement. The company wishes to collect financial behavior data from this specific demographic. However, it also aims to keep this strategic focus confidential, preventing competitors from becoming aware of its interest in this customer group. Consequently, the precise range of the data purchase intent must be preserved to ensure the buyer’s privacy.

To protect the privacy of data buyers in data markets, we explore a nuanced trade-off between privacy and purchase cost. When a buyer discloses the exact purchase intent, acquiring only the data within that specified intent incurs no additional cost. However, this approach compromises privacy, as an attacker can be certain, with confidence, whether a record is within the buyer’s intent or not.

On the other extreme, if a buyer declares the entire data space as the purchase intent, such as customers of any ages and any household income in our previous example, the privacy of the buyer is better preserved. This is because, if the purchase intent represents only a small subset of the entire data space, an attacker has low confidence in determining whether a given data record is part of the buyer’s actual intent. However, this heightened privacy comes at a significant cost, as the buyer must purchase all data records in the expansive whole data space.

In a more general approach, buyers can strike a balance between privacy preservation and cost by posting a published intent that is broader than the actual intent, such as customers aged 30-70 and an annual household income 100-500k. By doing so, even if an attacker observes the published intent, their confidence in determining whether a data record truly aligns with the exact purchase intent remains below a threshold specified by the buyer. This strategy should minimize the cost associated with purchasing all data records within the published intent.

In this article, we provide a series of technical contributions. Firstly, we formalize the buyer’s privacy preservation problem, considering diverse background knowledge that an attacker may leverage. To the best of our knowledge, we are the first in modeling this specific problem. Secondly, we introduce heuristic approaches to address the outlined problem. These approaches are designed to enhance the privacy of the buyer while efficiently managing the trade-off with associated costs. Lastly, we conduct a comprehensive empirical study to evaluate our proposed approach and provide valuable insights into the effectiveness and efficiency of our approach, offering a robust understanding of the practical implications.

Related Work

As related work, there is a rich body of literature on privacy-preserving data publishing (PPDP), which provides methods and tools for publishing useful information while preserving data privacy [10]. Most methods for PPDP use some form of data transformation by reducing granularity of representation to protect the privacy [11]. The randomization method is a typical PPDP technique by adding noise to the data to mask the attribute values of records [18], such as -anonymity [19], -diversity [20], and -closeness [21]. Regarding publishing aggregate information about a statistical database, Dwork [22] proposed the famous -differential privacy model to ensure that the removal or addition of a single database record does not significantly affect the outcome of any analysis. In the distributed privacy preservation setting where an untrusted collector wishes to collect data from a group of respondents, Xue et al. [23] propose a distributed data collection protocol to allow the data collector to obtain a -anonymized or -diversified version of the aggregated table. Recent advancements have introduced data synthesization, which focuses on publishing synthetic datasets that statistically resemble the original data but do not contain any actual user data, thereby mitigating the risk of personal data exposure [24]. To avoid the situation that the output of applications such as query processing, classification, or association rule mining may result in privacy leakage even though the data may not be available, a series of methods were developed to downgrade the effectiveness of applications by either data or application modifications, such as query auditing [25], classifier downgrading [26], and association rule hiding [27].

The predominant focus of the existing studies on PPDP lies in safeguarding the privacy of individual records within a database or across multiple databases. However, the aspect of protecting the privacy of data buyers has not been touched in those studies.

Data Buyer Privacy and Attacker Models

Consider a data buyer who wants to acquire data in space , where are dimensions (also known as attributes). Denote by the data distribution in . Without loss of generality, we assume that each dimension is finite and nominal. The target subset of data that the data buyer wants to acquire, called the true intent, can be specified using a conjunctive normal form , where . When , we also write as ALL, meaning that the data buyer does not specify any constraint on dimension . Please note that a solution for this basic case may be extended to more general cases, such as an intent like . Limited by space, we defer more sophisticated cases to future work.

In an efficient data market, it is assumed that the data buyer specifies the true intent in a truthful manner, that is, all data records falling in the true intent are indeed interesting to the buyer.

For example, consider a dataset representing customer transactions in an e-commerce platform with dimensions, namely , the product category (e.g., electronics, clothing, books), , payment method (e.g., credit card, PayPal, cash), and , state (e.g., NC, SC, GA). Now, a data buyer, an online electronics retailer, is interested in acquiring a specific subset of data to analyze customer behavior for targeted marketing. The buyer specifies the true intent truthfully as .

If the specified true intent is released to the public directly, the data buyer’s target can be easily obtained by competitors, revealing a strategic focus on the sale of electronics to customers located in North Carolina. This information potentially exposes the buyer to increased competitive pressures, as rivals could leverage this information to refine their marketing strategies, intensify competition in the market, and tailor their approaches to attract customers in the same geographical region.

A data buyer wants to protect the privacy so that an attacker cannot determine the buyer’s true intent with high confidence based on the observable information from the buyer. We will consider different assumptions about the observable information and the corresponding attack models.

To quantify an attacker’s intrusion into a buyer’s privacy in the true intent, given a record in , we measure the attacker’s confidence about whether the record belongs to the buyer’s true intent. The inference about the confidence is part of the attack models to be discussed in the rest of the section.

Published Intent-based Attack

To protect the true intent as the buyer’s privacy, a data buyer may post a published intent that is a superset of the true intent, that is, , so that potential data providers know the data buyer’s interest. Our first attack model is based on the published intent (PI for short).

Assumption 1 (Published intent-based attack)

In a published intent-based attack, an attacker only observes the published intent and does not have any other knowledge about the data space and the cost of data collection and processing.

Since the published intent is the only information that an attacker has about the buyer’s intent, given a record in , if the record is outside the published intent, then an attacker knows that the record is not interesting to the buyer. For a record in the published intent, the attacker’s confidence on whether the record is in the buyer’s true intent can be analyzed as follows.

Since the attacker does not have any background information about the data space, such as the probability density, the best that an attacker can assume is that each possible point in has the same probability to occur. That is, the data distribution in is uniform. Therefore, we also call the published intent-based attack the PI-uniform attack in this article.

Since the true intent is a subset of the published intent, and the minimal size of the true intent is , that is, the true intent contains only one specific value on each dimension specified in the published intent. Thus, the lower bound of the confidence is , which is known to both the buyer and any attacker. In the same vein, the upper bound of the confidence is , which is only known to the buyer but not to an attacker. Then, we have

| (1) |

where denotes the attacker’s confidence of record in the data buyer’s true intent given in the published intent via PI-uniform attack.

One may wonder, as is unknown to an attacker, how the upper bound takes effect. Please note that in the task of buyer privacy protection, the key is to ensure that even an attacker knows the size of the true intent, the chance that a record in the published intent indeed belongs to the true intent is still lower than some buyer specified threshold. Therefore, is used in designing the protection mechanisms.

Efficiency Maximization Attack

In addition to the observable published intent, an attacker may have some background knowledge about the data, such as the data distribution in or the cost of producing or collecting a data record with respect to dimension values. The attacker may use the background knowledge to enhance the confidence on whether a data record belongs to a data buyer’s true intent. The central idea is that, to be economically efficient, a data buyer tends to maximize the efficiency of the published intent. That is, a large part in the published intent is within the buyer’s true intent. Thus, we call this type of attacks the efficiency maximization attacks.

In our running example, suppose the published intent contains two states, NC and SC. If an attacker knows that 80% of the transactions appear in NC, then the attacker may heuristically guess NC is in the true intent.

Assumption 2 (Efficiency maximization attack)

In an efficiency maximization attack, an attacker observes the published intent and has the background knowledge about the data distribution in the data space , i.e. , and/or the cost, i.e. , of each data record in . Here, we assume that depends on only the dimension values of .

Specifically, if an attacker knows the data distribution in the subspace of the published intent, then, given a record matching the published intent, an upper bound of the attacker’s confidence about that the record falls in the buyer’s intent is proportional to the probability of the record. That is,

| (2) |

where denotes the probability of the record based on the data distribution in the data space .

The above model can be easily extended by incorporating the cost of data records. By betting that the buyer wants to spend the budget in a cost efficient way, an upper bound of the confidence is

| (3) |

Alternatively, if an attacker knows the cost of data records but does not know the density distribution , still by betting that the buyer wants to spend the budget in a cost efficient way, an upper bound of the confidence is

| (4) |

Purchased Record Inference Attack

In some situations, an attacker observes the records purchased by a data buyer, and tries to infer the data buyer’s intent. To protect the privacy, a data buyer may purchase more records than the true intent. Suppose a data buyer purchases a set of records , containing the records in the true intent and some disguising records that the data buyer uses to protect the privacy. An attacker may try to infer whether the data buyer is interested in a given record. We call this type of attacks the purchased record inference attack.

Assumption 3 (Purchased record inference attack)

In a purchased record inference attack, an attacker observes the set of data records purchased by the data buyer. Optionally, the attacker may also have the background knowledge about the data distribution in the data space , i.e. .

Given a data record, an attacker can infer whether the record is interesting to the data buyer in two steps. In the first step, the attacker can infer a pseudo published intent using the observed set of data records purchased by the data buyer. In order words, the attacker can try to find a minimal intent such that every purchased data record is in the intent. That is,

| (5) |

Clearly, the solution to Equation 5 can be written as:

| (6) |

where is the -th dimensional feature of .

Given a record in , if the record is outside the pseudo published intent, then an attacker knows that the record is not interesting to the buyer. For a record in the pseudo published intent, the attacker’s confidence on whether the record is in the buyer’s true intent can be analyzed as follows, using the distribution of purchased records in the pseudo published intent and the attacker’s background knowledge.

As in a purchased record inference attack, an attacker can infer the observed data distribution in the purchased set of records, specifically, for a record in the pseudo published intent, the occurring probability of in the purchased set can be estimated as

| (7) |

where denotes the occurring frequency of record in , and denotes the cardinality (total number of records in ).

In the simplest case, the attacker only observes the records purchased by the data buyer and does not have any background knowledge about the data distribution in the data space . Then, given a record matching the pseudo published intent, an upper bound of the attacker’s confidence about that record falling in the buyer’s true intent is proportional to the occurring probability of the record in . That is,

| (8) |

If an attacker has the background knowledge about the data distribution in the data space , i.e. , by comparing and the observed distribution , the attacker can infer how likely a purchased record is in the true intent based on the efficiency maximization assumption. The more frequent in the observed distribution and less likely in , the more likely belongs to the true intent.

Quantitatively, for a purchased record , we measure how unlikely the frequency of in the observed distribution may happen by chance given the distribution , which is indicated by the p-value. We first compute the occurring probability of in the psedo published intent based on as follows:

| (9) |

If and the p-value is small, then likely belongs to the true intent. To compute its p-value, we conduct a model-less hypothesis testing by leveraging Monte Carlo simulation and permutation testing. More specifically, we sample sets of records from the pseudo published intent based on each with the same size as the buyer’s purchased set (denoted as ). For each record in satisfying , we compute the p-value of (the proportion of sampled sets with record ’s occurring probability at least as extreme as ). With this, an upper bound of the attacker’s confidence about that record falls in the buyer’s intent can be written as:

| (10) |

The aforementioned model readily lends itself to extension by integrating the cost of data records, following the same logic as the efficiency maximization attack as indicated in Equation 3. For conciseness, we refrain from delving further into the exploration of data record costs in purchased record inference attack.

Problem Formulation

Given a buyer’s true intent and a buyer-specified maximal confidence threshold , the data buyer wants to either release a published intent in the PI-uniform attack and efficiency maximization attack models or purchase data records in a larger scope in the purchased record inference attack, so that any attackers’ confidence is not higher than on a record in the published intent or purchased by the data buyer belonging to the buyer’s true intent. A published intent is called a -privacy preserving published intent, and a set of data records to be purchased is called a -privacy preserving set of purchased records if they satisfying the requirement.

Since a data buyer has to pay for the records in the published intent or the set of data records to be purchased, for the sake of economic consideration, the published intent and the scope of data records to be purchased should be minimized. The problem of data buyer privacy protection is to compute the minimal -privacy preserving published intent or set of purchased records.

PI-uniform Attack

In this case, we need to ensure that the confidence upper bound as expressed in Equation 1 is not higher than . Thus, we have . That is, . In other words, the defence to a PI-uniform attack can be modeled as the following optimization problem.

| (11) |

Efficiency Maximization Attack

In this case, we need to ensure that the confidence upper bound based on an attacker’s background knowledge about the data distribution as expressed in Equation 2 is not higher than for all records belonging to the true intent (it is also generalizable given an attacker’s background knowledge about both the data distribution and the costs, as indicated in Equation 3). For any , . We note that,

That is, .

In other words, the defence to an efficiency maximization attack can be modeled as the following optimization problem.

| (12) |

Purchased Record Inference Attack

In this case, we need to ensure that the confidence upper bound based on an attacker’s background knowledge about the data distribution as expressed in Equation 10 is not higher than for all records belonging to the true intent. For any ,

That is,

Thus, we need to make sure that the p-value of is greater than or equal to . Considering utility as a primary consideration, where the buyer seeks to maximize the ratio of the number of records in the true intent against that in the published intent, the defense to a purchased record inference attack can be modeled as the following optimization problem.

| (13) |

Data Buyer Privacy Protection

In this section, we develop the algorithms tackling the data buyer privacy preservation problem.

PI-uniform Attack / Efficiency Maximization Attack

Given space and the buyer’s true intent , we can keep expending the set of feature values in the true intent until obtaining a published intent that can protect the true intent sufficiently, that is, the constraint in Equations 11 (PI-uniform attack) or 12 (efficiency maximization attack) is satisfied.

Specifically, we set the initial published intent to the same as the true intent, that is, for . In each iteration, we iterate through every dimension in ; for dimension and the corresponding feature value set in the published intent, we select the nearest neighbor feature value in . After iterating through every dimension, we add the best neighbor feature value to the published intent that minimizes Equations 11 (PI-uniform attack) or 12 (efficiency maximization attack).

We need to tackle two challenges in this method, how to find the potential nearest neighbor feature value on each dimension and how to determine the best neighbor feature value among all possible choices.

To illustrate our ideas to tackle those challenges, consider a running example in Figure 1(a), where the published intent is denoted by the red region. Here, , where (horizontal) is the education level and (vertical) the workclass. Let us first look at dimension , where the feature values in the published intent are {“Masters”, “Doctorate”}. The possible feature values to expand include “Pre-school” and “Bachelors.” For each of those two, we compute the accumulated distance between the feature value and all feature values in . The accumulated distance for a feature value is defined as . Suppose we assign the indices 1, 2, 3, and 4 to “Pre-school,” “Bachelors,” “Masters,” and “Doctorate,” respectively. The accumulated distance between “Pre-school” and all the features in is . Similarly, the accumulated distance for “Bachelors” with respect to is 3. The feature value with the shortest accumulated distance is selected as the nearest neighbor feature value on , which is “Bachelors.” On dimension , there is no semantic distance among feature values. In other words, every pair of feature values on has the same distance. Thus, regarding the features in the published intent on dimension , which is {“Federal-gov”}, all the remaining features “Without-pay,” “Private,” and “Self-emp-not-inc” are treated as potential expanded feature values.

After iterating through every dimension, we have four neighbor feature values for possible expansion: “Bachelors” on (the resulting published intent is indicated in Figure 1(b)), “Without-pay” (Figure 1(c)), “Private” (Figure 1(d)), and “Self-emp-not-inc” (Figure 1(e)) on .

To determine the best feature value, we develop a scoring function to comprehensively measure the effectiveness of adding the feature value to the published intent. Given a potential feature value to be added, which is a feature value on dimension , the scoring considers two factors. First, we consider the additional number of records added to the buyer’s published intent after adding the feature value. Given feature value and the current published intent , the addition can be expressed as . Second, we also consider the contribution to satisfy the constraints in Equation 11 (PI-uniform attack) or Equation 12 (efficiency maximization attack), which is measured as the increase in the right-hand side of the constraint after adding the feature value and can be expressed as follows. For the PI-uniform attack,

| (14) |

For the efficiency maximization attack,

| (15) |

For each neighbor feature value, we apply min-max normalization to the associated addition of records and increase in satisfying the constraint. Then, we compute the score of the neighbor feature value by

| (16) | ||||

where is the weight assigned to the evaluation factor, and are the normalized addition of records and increase in satisfying the constraint for , respectively, is the maximum of the addition of all possible neighbor feature values.

For the efficiency maximization attack, in the worst case, the buyer has to claim the entire dataset as the published intent and the attainable lower bound of the attacker’s confidence is

Purchased Record Inference Attack

The ideas can be extended to tackle the problem of privacy protection against purchased record inference attacks.

Given the objective in Equation 13, in order to make the p-value of greater than or equal to for any , we first need to decide the buyer’s published intent (the attacker infers it as the pseudo published intent ). Thus, given the buyer’s true intent, we first leverage the proposed expansion method to derive the published intent.

Then, given the total number of records, , the buyer aims to buy and the published intent , how to allocate the records to every feature value combination in becomes a challenge. To solve the challenge, we propose four allocation methods:

Monte Carlo Simulation (MC Simulation): Generate sets, , with each set containing records sampled from the published intent. Then, remove sets that do not satisfy the privacy constraint, i.e. the p-value of less than for any .

For each set remaining, we compute the utility, i.e. the ratio of the number of records in the true intent against that in the published intent. Given one of the remaining sets denoted as , we have:

| (17) |

Considering utility as a primary consideration, where the buyer seeks to maximize the ratio of the number of records in the true intent against that in the published intent, the set with the highest utility is selected as the purchased set of records.

Markov Chain Monte Carlo (MCMC): We first initialize the purchased set by sampling records based on data distribution as indicated in Equation 9.

Then, we define a set of possible moves. Given the initialized set of record containing the records that the data buyer really wants (records in the buyer’s true intent) and some disguising records that the data buyer uses to hide the privacy, all the possible moves can be summarized into 4 groups: 1) remove a disguising record and add a record in the buyer’s true intent, 2) remove a disguising record and add a different disguising record, 3) remove a record in the buyer’s true intent and add a disguising record, 4) remove a record in the buyer’s true intent and add a different record in the buyer’s true intent. Note that multiple move choices exist under each group by selecting different disguising records or records in the buyer’s true intent.

For each iteration, we randomly select one move and change the current to based on the move. We first check whether satisfies the privacy constraint. If the privacy constraint is satisfied, we compute the acceptance probability of as follows:

where the utility function is elaborated in Equation 17.

We accept with the computed acceptance probability, i.e. there is a probability of transitioning from to .

We continue selecting the next move and updating the purchased set of records until the privacy constraint is no longer satisfied, i.e. the p-value of less than for any . Note that during implementation, the MCMC Algorithm terminates when the minimum difference between the privacy threshold and the current confidence of an attacker on a record is less than a threshold .

Greedy Markov Chain Monte Carlo (G-MCMC): The only difference between this approach and normal Markov Chain Monte Carlo is that when we define the set of possible moves, we only define the most greedy moves that improve the utility, i.e. remove a disguising record and add a record in the buyer’s true intent.

Genetic Sampling: Initialize parent sets where each set contains records sampled from the published intent. Then, remove sets that do not satisfy the privacy constraint, i.e. the p-value of less than for any . For the remaining sets, we compute the utility based on the utility expression in Equation 17.

Then, we select top sets in terms of utility to form a group. For every pair (parent A, B) of sets in the group, we leverage crossover to generate two children sets (children A, B). We add all the generated children to a new population pool.

We repeat the above steps on the new population pool for a specified number of iterations .

Finally, among all the sets satisfying the privacy constraint in the ultimate population pool, we select the set with the highest utility to be the buyer’s purchased set.

Experimental Results

We conduct extensive experiments using both real and synthetic datasets to investigate the following questions.

RQ1: To what extent does the proposed expansion method effectively generate privacy-preserving and high-utility published intent?

RQ2: To what extent does each allocation method effectively generate privacy-preserving and high-utility set of data records for purchase by the buyer?

RQ3: What is the effect of data space dimensionality on the published intent in privacy and utility?

RQ4: What is the effect of buyer’s true intent on the published intent produced by the expansion method?

RQ5: What are the effects of the privacy threshold and the weight parameter in the expansion method?

Experimental Setup

The Adult Dataset [28] is adopted from the UC Irvine machine learning repository [29] derived from US census data. Our analysis focuses on five attributes: age, ethnicity, gender, hours-per-week, and income. The age attribute is transformed into an ordinal scale: {“childhood” (0-17], “young adult” (17-24], “working adult” (24-61], “retirement” 61}. Similarly, attribute hours-per-week is discretized into: {“part-time” [0-34], “full-time” (34-40], “overtime” 40}. After removing the records with missing values, the dataset comprises 30,162 valid records. As the cost of each record is unspecified, a uniform cost of 1 is assigned to each record.

We generated a synthetic dataset mimicking the structure of the Adult Dataset, sharing the five attributes. The number of distinct feature values per dimension matches the Adult Dataset. The same data processing technique is applied. Regarding the data distribution, the record frequencies are sampled from a Gaussian distribution (mean=1000, std=300). The associated record costs are sampled from another Gaussian distribution (mean=20, std=5, lower bound=1).

| Setting | Attack Type | Prot. Method | Conf. LB | Conf. UB | Total Cost | Cost (TI) | Cost Ratio (TI) | # Records (PI) | # Records (TI) | Utility (TI/PI) |

|---|---|---|---|---|---|---|---|---|---|---|

| Adult Dataset, Unit Cost of 1, True Intent Size is 1 | PI-uniform | w/o protection | 100% | 100% | 56.0 | 56.0 | 100% | 56 | 56 | 100% |

| Expansion | 25% | 25% | 58.0 | 56.0 | 96.6% | 58 | 56 | 96.6% | ||

| EM-fc | w/o protection | - | 100% | 56.0 | 56.0 | 100% | 56 | 56 | 100% | |

| Expansion | - | 10.1% | 557.0 | 56.0 | 10.1% | 557 | 56 | 10.1% | ||

| EM-f | w/o protection | - | 100% | 56.0 | 56.0 | 100% | 56 | 56 | 100% | |

| Expansion | - | 10.1% | 557.0 | 56.0 | 10.1% | 557 | 56 | 10.1% | ||

| EM-c | w/o protection | - | 100% | 56.0 | 56.0 | 100% | 56 | 56 | 100% | |

| Expansion | - | 25% | 58.0 | 56.0 | 96.6% | 58 | 56 | 96.6% | ||

| Adult Dataset, Unit Cost of 1, True Intent Size is 2 | PI-uniform | w/o protection | 50% | 100% | 83.0 | 83.0 | 100% | 83 | 83 | 100% |

| Expansion | 12.5% | 25% | 85.0 | 83.0 | 97.6% | 85 | 83 | 97.6% | ||

| EM-fc | w/o protection | - | 67.5% | 83.0 | 83.0 | 100% | 83 | 83 | 100% | |

| Expansion | - | 8.4% | 669.0 | 83.0 | 12.4% | 669 | 83 | 12.4% | ||

| EM-f | w/o protection | - | 67.5% | 83.0 | 83.0 | 100% | 83 | 83 | 100% | |

| Expansion | - | 8.4% | 669.0 | 83.0 | 12.4% | 669 | 83 | 12.4% | ||

| EM-c | w/o protection | - | 50% | 83.0 | 83.0 | 100% | 83 | 83 | 100% | |

| Expansion | - | 25% | 84.0 | 83.0 | 98.8% | 84 | 83 | 98.8% |

As case studies, we define two distinct buyer true intents: [(“working adult”, “Black”, “Female”, “full-time”, “50K”)] with size 1 and [(“working adult”, “Black”, “Female”, “full-time”, “50K”), (“working adult”, “Asian-Pac-Islander”, “Female”, “full-time”, “50K”)] with size 2. We set to 30%. To maximize the utility of the published intents, i.e. the ratio of the number of records in the true intent against that in the published intent, for the Adult Dataset with a true intent size of 1, we set to 0.5 for PI-uniform attack, EM attack-fc, EM attack-f, and EM attack-c, where EM attack-fc, EM attack-f, and EM attack-c refer to efficiency maximization attacks given the attacker background knowledge about both the data distribution and cost, only the data distribution, and only the cost. For the Adult Dataset with a true intent size of 2, we set to 1.0, 0.6, 0.6, and 0.5 for PI-uniform attack, EM attack-fc, EM attack-f, and EM attack-c, respectively. For the synthetic dataset with a true intent size of 1, we set to 0.8, 0.4, 0.4, and 0.8 for PI-uniform attack, EM attack-fc, EM attack-f, and EM attack-c, respectively. For the synthetic dataset with a true intent size of 2, we set to 0.6, 0.5, 0.4, and 0.7 for PI-uniform attack, EM attack-fc, EM attack-f, and EM attack-c, respectively.

For purchased record inference attack, , which denotes the number of sets used for Monte Carlo simulation and permutation testing, is set to 100,000.

For MC Simulation, the total number of sets is set to 100,000. For MCMC, the minimum difference between the privacy threshold and the current confidence of the attacker is set to 0.001 for all case studies, while for G-MCMC, is set to 0.07, 0.01, 0.005, and 0.01 for the Adult Dataset with a true intent size of 1, Adult Dataset with a true intent size of 2, synthetic dataset with a true intent size of 1, and synthetic dataset with a true intent size of 2, respectively. For genetic sampling, the number of parents is set to 50, the number of top utility sets selected for crossover is set to 10, and the number of iterations is set to 30.

| Setting | Attack Type | Prot. Method | Conf. LB | Conf. UB | Total Cost | Cost (TI) | Cost Ratio (TI) | # Records (PI) | # Records (TI) | Utility (TI/PI) |

|---|---|---|---|---|---|---|---|---|---|---|

| Synthetic Dataset, Gaussian Cost, True Intent Size is 1 | PI-uniform | w/o protection | 100% | 100% | 31773.0 | 31773.0 | 100% | 1513 | 1513 | 100% |

| Expansion | 25% | 25% | 63430.0 | 31773.0 | 50.1% | 3222 | 1513 | 47.0% | ||

| EM-fc | w/o protection | - | 100% | 31773.0 | 31773.0 | 100% | 1513 | 1513 | 100% | |

| Expansion | - | 29.5% | 107825.0 | 31773.0 | 29.5% | 5715 | 1513 | 26.5% | ||

| EM-f | w/o protection | - | 100% | 31773.0 | 31773.0 | 100% | 1513 | 1513 | 100% | |

| Expansion | - | 26.5% | 107825.0 | 31773.0 | 29.5% | 5715 | 1513 | 26.5% | ||

| EM-c | w/o protection | - | 100% | 31773.0 | 31773.0 | 100% | 1513 | 1513 | 100% | |

| Expansion | - | 28.8% | 63430.0 | 31773.0 | 50.1% | 3222 | 1513 | 47.0% | ||

| Synthetic Dataset, Gaussian Cost, True Intent Size is 2 | PI-uniform | w/o protection | 50% | 100% | 58053.0 | 58053.0 | 100% | 2973 | 2973 | 100% |

| Expansion | 12.5% | 25% | 154470.0 | 58053.0 | 37.6% | 8153 | 2973 | 36.5% | ||

| EM-fc | w/o protection | - | 54.7% | 58053.0 | 58053.0 | 100% | 2973 | 2973 | 100% | |

| Expansion | - | 25.2% | 125874.0 | 58053.0 | 46.1% | 6380 | 2973 | 46.6% | ||

| EM-f | w/o protection | - | 50.9% | 58053.0 | 58053.0 | 100% | 2973 | 2973 | 100% | |

| Expansion | - | 29.2% | 98329.0 | 58053.0 | 59.0% | 5179 | 2973 | 57.4% | ||

| EM-c | w/o protection | - | 53.8% | 58053.0 | 58053.0 | 100% | 2973 | 2973 | 100% | |

| Expansion | - | 29.6% | 75150.0 | 58053.0 | 77.2% | 3954 | 2973 | 75.2% |

Privacy and Utility of Published Intent

The experimental results are shown in Table 1 for the Adult Dataset and Table 2 for the synthetic dataset.

In PI-uniform attacks, the absence of protection results in the attacker’s upper bound confidence reaching 100% for both datasets and for both true intent sizes of 1 and 2. This underscores the crucial role of the expansion method in safeguarding privacy. Employing the expansion method to counter PI-uniform attacks leads to significant reduction of the confidence upper bound. At the same time, this privacy protection strategy comes with a tradeoff – it requires the acquisition of additional disguise data, resulting in a slight decrease in utility. On the Adult Dataset, the utility decreases slightly.

For the synthetic dataset, the utility decreases by 53% when the true intent size is 1 and by 63.5% when the true intent size is 2. Notably, the more pronounced reduction in the utility of published intents in the synthetic dataset, compared to the Adult Dataset, can be attributed to the presence of “empty” feature value combinations. For example, in the Adult Dataset, there are 0 records falling into the group of (“childhood”, “white”, “female”, “overtime”, “50k”). In a PI-uniform attack, where an attacker lacks of the knowledge of the ground truth data distribution. Such “empty” groups in the published intent enhance privacy without incurring additional disguise records. Such “empty” groups are rare in the synthetic dataset, resulting in a greater drop in the utility of published intent. In efficiency maximization attacks, the “empty” groups also result in significantly higher utility of the published intent after the expansion method, given the attacker’s background knowledge about costs alone. This utility exceeds the utility of the published intent after the expansion method when the attacker has background knowledge about both data distribution and costs, or only data distribution, by over 86% for the Adult Dataset and over 20% for the synthetic dataset.

In efficiency maximization attacks, given the attacker’s background knowledge about both the data distribution and costs, without protection an attacker’s confidence upper bound reaches 100% when the true intent size is 1 and 67.5% when the true intent size is 2 for the Adult Dataset. Regarding the synthetic dataset, without protection an attacker’s confidence upper bound reaches 100% when the true intent size is 1 and 54.7% when the true intent size is 2. The difference in confidence upper bound between true intent size of 1 and true intent size of 2 arises from the inherent characteristics of the efficiency maximization attack. The attack confidence is calculated based on the maximum for record in the buyer’s true intent, divided by the sum of for every record in the published intent, as indicated in Equation 12. Without protection and with a true intent size of 1, the confidence is necessarily 100% since the published intent contains only this single true intent. However, with a true intent size of 2 and no protection, the numerator is the maximum between the two true intents, while the denominator is the sum of the two, resulting in a lower attack confidence. In essence, the presence of multiple true intents balances each other, leading to a lower attacker confidence even without protection.

For the Adult Dataset, upon implementing the expansion method, the attack confidence decreases by 89.9% when the true intent size is 1 and by 59.1% when the true intent size is 2. The increase in privacy comes at the cost of utility, i.e. a decrease by 89.9% and 87.6%, respectively. Regarding the synthetic dataset, the attack confidence decreases by 70.5% when the true intent size is 1 and by 29.5% when the true intent size is 2. The increase in privacy comes at the cost of utility, i.e. a decrease by 73.5% and 53.4%, respectively.

Effectiveness Comparison of Different Allocation Methods

| Setting | Protection Method | Confidence Upper Bound | # Records (PI) | # Records (TI) | Utility (TI/PI) | Time |

|---|---|---|---|---|---|---|

| Adult Dataset, True Intent Size is 1 | No Protection | 100.0% | 61.0 | 56.0 | 91.8% | - |

| MC Simulation | 23.6%0.0% | 61.00.0 | 5.00.0 | 8.2%0.0% | 24.1540.144 | |

| MCMC | 6.4%6.8% | 61.00.0 | 3.10.9 | 5.1%1.5% | 0.0120.001 | |

| G-MCMC | 23.6%0.0% | 61.00.0 | 5.00.0 | 8.2%0.0% | 0.0010.000 | |

| Genetic Sampling | 23.6%0.0% | 61.00.0 | 5.00.0 | 8.2%0.0% | 0.3010.003 | |

| Adult Dataset, True Intent Size is 2 | No Protection | 100.0% | 120.0 | 83.0 | 69.2% | - |

| MC Simulation | 29.3%0.0% | 120.00.0 | 13.00.0 | 10.8%0.0% | 47.5210.290 | |

| MCMC | 2.7%4.2% | 120.00.0 | 4.02.9 | 3.3%2.4% | 0.0880.007 | |

| G-MCMC | 29.3%0.0% | 120.00.0 | 12.70.5 | 10.6%0.4% | 0.0040.002 | |

| Genetic Sampling | 28.9%1.1% | 120.00.0 | 12.90.3 | 10.8%0.2% | 1.1180.010 | |

| Synthetic Dataset, True Intent Size is 1 | No Protection | 100.0% | 1516.0 | 1513.0 | 99.8% | - |

| MC Simulation | 29.6%0.0% | 1516.00.0 | 394.00.0 | 26.0%0.0% | 333.33313.589 | |

| MCMC | 11.6%11.2% | 1516.00.0 | 375.318.6 | 24.8%1.2% | 0.5650.112 | |

| G-MCMC | 29.6%0.0% | 1516.00.0 | 394.00.0 | 26.0%0.0% | 0.0090.002 | |

| Genetic Sampling | 29.1%0.9% | 1516.00.0 | 393.80.4 | 26.0%0.0% | 6.3000.095 | |

| Synthetic Dataset, True Intent Size is 2 | No Protection | 100.0% | 2979.0 | 2973.0 | 99.8% | - |

| MC Simulation | 29.2%0.0% | 2979.00.0 | 918.00.0 | 30.8%0.0% | 640.2292.150 | |

| MCMC | 7.7%9.9% | 2979.00.0 | 853.732.4 | 28.7%1.1% | 1.7350.034 | |

| G-MCMC | 29.2%0.0% | 2979.00.0 | 916.52.6 | 30.8%0.1% | 0.1140.099 | |

| Genetic Sampling | 26.4%1.5% | 2979.00.0 | 911.0 7.2 | 30.6%0.2% | 11.5663.704 |

Concerning purchased record inference attacks, we assess the effectiveness of four proposed allocation methods in generating privacy-preserving and high-utility set of records for purchase by the data buyer. The experimental results are shown in Table 3.

In purchased record inference attacks, the absence of protection results in the attacker’s upper bound confidence reaching 100% for both datasets. This underscores the crucial role of the allocation method in safeguarding privacy.

| Attack Type | Dim. Reduced | Proj. Conf. LB | Change in LB | Proj. Conf. UB | Change in UB | Proj. # Records (PI) | Updated Proj. # Records (PI) | Proj. # Records (TI) | Proj. Utility (TI/PI) | Updated Proj. Utility (TI/PI) | Proj. PI Size | Updated Proj. PI Size |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| PI-uniform Attack | Age | 50% | 37.5% | 100% | 75% | 85 | 101 | 85 | 100% | 84.2% | 2 | 8 |

| Ethnicity | 25% | 12.5% | 25% | 0% | 85 | 85 | 83 | 97.6% | 97.6% | 4 | 4 | |

| Gender | 12.5% | 0% | 25% | 0% | 85 | 85 | 83 | 97.6% | 97.6% | 8 | 8 | |

| Hours-per-week | 12.5% | 0% | 25% | 0% | 85 | 85 | 83 | 97.6% | 97.6% | 8 | 8 | |

| Income | 12.5% | 0% | 25% | 0% | 85 | 85 | 83 | 97.6% | 97.6% | 8 | 8 | |

| Efficiency Maximiza -tion Attack | Age | - | - | 8.7% | 0.3% | 669 | 669 | 85 | 12.7% | 12.7% | 24 | 24 |

| Ethnicity | - | - | 13.2% | 4.8% | 669 | 390 | 88 | 13.2% | 22.6% | 18 | 2 | |

| Gender | - | - | 33.6% | 25.3% | 669 | 3226 | 365 | 54.6% | 11.3% | 36 | 3 | |

| Hours-per-week | - | - | 12.4% | 4.0% | 669 | 669 | 123 | 18.4% | 18.4% | 24 | 24 | |

| Income | - | - | 8.4% | 0% | 669 | 554 | 83 | 12.4% | 15.0% | 72 | 3 |

For the Adult Dataset, MC Simulation, G-MCMC, and Genetic Sampling exhibit comparable results in terms of utility, measured by the ratio of the number of records in the true intent against that in the published intent. MCMC has the worst utility, exhibiting a 3.1% and 7.5% decrease for true intent sizes of 1 and 2, respectively. Concerning time, MC Simulation’s runtime, requiring 24 seconds for true intent size 1 and 47 seconds for true intent size 2, is notably longer than that of other methods. G-MCMC has the fastest runtime, requiring only 0.001 seconds, while Genetic Sampling requires approximately 1 second. Concerning privacy, MC Simulation, G-MCMC, and Genetic Sampling show an attacker confidence upper bound of 23.6% and around 29.0% for true intent sizes of 1 and 2, respectively, all closely approaching the privacy threshold of 30%. MCMC excels in privacy, exhibiting decreases in attacker confidence of 17.2% and 26.3%, respectively.

The synthetic dataset exhibits a similar trend to the Adult Dataset. A slight difference lies in the higher utility of MC Simulation and G-MCMC compared to Genetic Sampling. Another observation is a more apparent gap in runtime between MC Simulation, G-MCMC, and Genetic Sampling, where G-MCMC only requires 0.01 seconds and 0.1 seconds for true intent sizes of 1 and 2, respectively. Genetic Sampling requires 6 seconds and 12 seconds, respectively. MC Simulation performs the worst in runtime, requiring 333 seconds and 640 seconds, respectively.

In summary, our findings indicate that MC Simulation, G-MCMC, and Genetic Sampling consistently achieve comparable results in utility, but G-MCMC consistently outperforms the other two in terms of runtime. Notably, MC Simulation exhibits poor runtime performance. MCMC has the worst performance in utility but is able to generate a more privacy-preserving set of records for purchase by the buyer.

Effect of Dimensionality

We conduct experiments by removing dimensions “Age,” “Ethnicity,” “Gender,” “Hours-per-week,” and “Income,” respectively, to examine the effect on attacker confidence. The true intent in the reduced space is the projection of the original data space. That is, with true intent in the original data space, after dimension is removed, the true intent becomes . The experiments are conducted on the Adult Dataset with a true intent size of 2. The efficiency maximization attack is conducted given the attacker’s background knowledge of both the data distribution and associated costs.

A published intent can also be projected into the subspace reduced, but may no longer meet the privacy threshold. Consequently, we update the projected published intent using our proposed expansion method and compare its utility with that of the initially projected published intent. The results are shown in Table 4.

In the PI-uniform attack, the removal of the “Age” dimension has a significant effect, resulting in a substantial increase in the attacker’s confidence, both the lower bound (37.5%) and upper bound (75%). With the privacy threshold set to 30%, the projected published intent no longer meets the privacy threshold, necessitating the addition of more disguise records into the published intent. As a consequence, the updated projected number of records in the published intent increases. The updated projected ratio of the number of records in the true intent against that in the published intent decreases by 15.8%. This observation indicates that, prior to projection, a significant portion of the disguise records used to safeguard the true intent through the expansion method is distributed along the “Age” dimension. Thus, the removal of the “Age” dimension results in a dramatic increase in attacker confidence.

In the efficiency maximization attack, the removal of the “Gender” dimension results in a substantial increase (25.3%) in attacker confidence, surpassing the 30% privacy threshold. Consequently, to enhance the protection of the projected published intent, additional disguise records must be incorporated, leading to an increase of the number of records in the published intent. Thus, there is an associated decrease of 43.3% in the utility.

A noteworthy observation is the comparison between the size of the projected published intent and the size of the updated projected published intent after expansion, specifically after removing the “Age” dimension for PI-uniform attack and the “Gender” dimension for efficiency maximization attack. In the case of PI-uniform attack, the size increases from 2 to 8, reflecting the expansion method’s incorporation of additional feature values into the published intent; thus, the size of the published intent expands. In contrast, for the efficiency maximization attack, the size decreases from 36 to 3. This reduction can be attributed to its inherent characteristics, which necessitates obtaining more records to ensure a sufficiently substantial sum of for record in the published intent, as indicated in Equation 12. Consequently, the size of the updated projected published intent may not necessarily increase, given that there are now more records falling within a specific intent due to projection.

Effect of True Intent Size

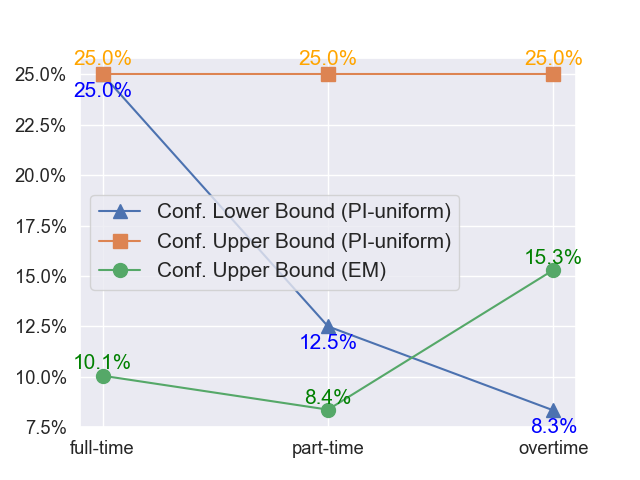

We investigate the effect of buyer’s true intent size on the resulting published intent for PI-uniform attack and efficiency maximization attack. For each dimension, we augment the true intent by adding feature values within that dimension. The results are shown in Figures 2. In each figure, each feature value on the x-axis denotes that the buyer’s true intent on that dimension encompasses the current feature value and all feature values to its left. The experiments are conducted on the Adult Dataset with a true intent size of 1. The efficiency maximization attack is conducted given the attacker’s background knowledge of both the data distribution and associated costs.

In the PI-uniform attack, we observe that the sizes of the published intent and true intent often consistently increase in the same ratio, with no change in the upper bound confidence. Nevertheless, certain instances deviate from this pattern, where the size of the published intent does not increase despite an increase in the attacker confidence upper bound, which is because the original confidence is excessively low. Moreover, it is evident that the attacker’s confidence lower bound decreases as the size of the published intent increases.

In the efficiency maximization attack, in most instances, the size of the published intent either remains constant or experiences a slight increase with an enlargement of the true intent, all while maintaining a consistent confidence level.

Notably, when expanding on a new feature value, the new published intent may exhibit higher utility, i.e. lower number of disguise records, even if the original true intent is a proper subset of the expanded true intent. This phenomenon arises due to the greedy nature of the expansion method, whereby, at each iteration, the feature value deemed the best may not necessarily be the globally optimal one.

Sensitivity Analysis

Sensitivity analyses are conducted on the Adult Dataset with a true intent size of 2. The efficiency maximization attack is conducted given the attacker’s background knowledge of both the data distribution and associated costs.

Effect of Privacy Threshold

The parameter governs the degree to which the buyer’s true intent is protected, with the attacker’s confidence constrained to be no greater than . A lower indicates a higher level of privacy protection. We explore the effect of on the resulting published intent by varying it from 1% (the most stringent privacy threshold) to 100% (indicating no privacy requirement). The results are shown in Figure 4.

In both the PI-uniform attack and the efficiency maximization attack, adhering to the most rigorous privacy threshold of 1%, the buyer is compelled to acquire the entire dataset to ensure privacy protection. As the privacy requirement becomes less stringent, the demand for concealing records diminishes quickly. Ultimately, in scenarios where privacy protection is not a concern (when is set to 100%), the buyer only needs to buy the data pertaining to the true intent. The results indicate that privacy preservation for data buyer is highly feasible and economically efficient as long as the privacy requirement is not extremely high.

Effect of Weight Parameter

In the expansion method, the parameter governs the allocation of weights to the total number of records in the published intent (weighted by ) and the gain in satisfying the privacy constraint when expanding on the current feature (weighted by ). We investigate the effect of on the resulting published intent. The results are shown in Figure 4.

For the PI-uniform attack with a 30% privacy threshold, setting to 1 yields the fewest disguising records purchased. This minimal number of disguising records corresponds to the largest proportion of records indeed belong to the true intent and thus the highest utility. For efficiency maximization attack, with a 30% privacy threshold, values greater than or equal to 0.6 result in the fewest disguising records purchased, achieving the highest utility. In general, for both attacks, as gradually increases from 0 to 1, indicating a higher allocation of weight to the total number of records in the published intent rather than to the gain in satisfying the privacy constraint during expansion, the number of disguising records decreases. This corresponds to a gradual increase in utility.

Conclusions

In this article, we tackle an interesting and important problem in data markets–preserving data buyers’ privacy. We formulate the problem and presents a disciplined approach exploring the trade-off between privacy and data purchase cost. The thorough experimental results clearly show the effectiveness and efficiency of our approach.

Our approach can be extended and generalized to tackle more sophisticated cases, such as complicated data purchase intent beyond a conjunctive normal form and incorporating cost factors into the utility computation. As future work, it is also interesting to explore individual buyers’ privacy cost and the distinct characteristics of data buyers versus general product buyers.

Acknowledgement

This work was supported in part by a Beyond the Horizon grant by Duke University. All opinions, findings, conclusions and recommendations in this paper are those of the authors and do not necessarily reflect the views of the funding agencies.

References

- [1] J. Pei, R. C. Fernandez, and X. Yu, “Data and ai model markets: Opportunities for data and model sharing, discovery, and integration,” Proc. VLDB Endow., vol. 16, no. 12, p. 3872–3873, aug 2023.

- [2] J. Pei, “A survey on data pricing: From economics to data science,” IEEE Transactions on Knowledge and Data Engineering, vol. 34, no. 10, pp. 4586–4608, 2022.

- [3] L. K. Fleischer and Y.-H. Lyu, “Approximately optimal auctions for selling privacy when costs are correlated with data,” in Proceedings of the 13th ACM Conference on Electronic Commerce. New York, NY, USA: Association for Computing Machinery, 2012, pp. 568–585.

- [4] C. Niu, Z. Zheng, F. Wu, S. Tang, X. Gao, and G. Chen, “Unlocking the value of privacy: Trading aggregate statistics over private correlated data,” in Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining. New York, NY, USA: Association for Computing Machinery, 2018, pp. 2031–2040.

- [5] F. Tramèr, F. Zhang, A. Juels, M. K. Reiter, and T. Ristenpart, “Stealing machine learning models via prediction apis,” in Proceedings of the 25th USENIX Conference on Security Symposium, ser. SECÕ16. USA: USENIX Association, 2016, pp. 601–618.

- [6] N. Hynes, D. Dao, D. Yan, R. Cheng, and D. Song, “A demonstration of sterling: A privacy-preserving data marketplace,” Proc. VLDB Endow., vol. 11, no. 12, pp. 2086–2089, Aug. 2018.

- [7] D. Dao, D. Alistarh, C. Musat, and C. Zhang, “Databright: Towards a global exchange for decentralized data ownership and trusted computation,” CoRR, vol. abs/1802.04780, 2018.

- [8] K. Nissim, S. Vadhan, and D. Xiao, “Redrawing the boundaries on purchasing data from privacy-sensitive individuals,” in Proceedings of the 5th Conference on Innovations in Theoretical Computer Science, ser. ITCS’14. New York, NY, USA: Association for Computing Machinery, 2014, pp. 411–422.

- [9] A. Ghosh, K. Ligett, A. Roth, and G. Schoenebeck, “Buying private data without verification,” in Proceedings of the Fifteenth ACM Conference on Economics and Computation, ser. EC’14. New York, NY, USA: Association for Computing Machinery, 2014, pp. 931–948.

- [10] B. C. M. Fung, K. Wang, R. Chen, and P. S. Yu, “Privacy-preserving data publishing: A survey of recent developments,” ACM Comput. Surv., vol. 42, no. 4, Jun. 2010.

- [11] C. C. Aggarwal and P. S. Yu, “Privacy-preserving data mining: A survey,” in Handbook of Database Security: Applications and Trends, M. Gertz and S. Jajodia, Eds. Boston, MA: Springer US, 2008, pp. 431–460. [Online]. Available: https://doi.org/10.1007/978-0-387-48533-1_18

- [12] E. Bertino, D. Lin, and W. Jiang, “A survey of quantification of privacy preserving data mining algorithms,” in Privacy-Preserving Data Mining: Models and Algorithms, C. C. Aggarwal and P. S. Yu, Eds. Boston, MA: Springer US, 2008, pp. 183–205. [Online]. Available: https://doi.org/10.1007/978-0-387-70992-5_8

- [13] C. Dwork, “Differential privacy: A survey of results,” in Theory and Applications of Models of Computation, M. Agrawal, D. Du, Z. Duan, and A. Li, Eds. Berlin, Heidelberg: Springer Berlin Heidelberg, 2008, pp. 1–19.

- [14] B. Zhou, J. Pei, and W. Luk, “A brief survey on anonymization techniques for privacy preserving publishing of social network data,” SIGKDD Explor. Newsl., vol. 10, no. 2, pp. 12–22, Dec. 2008. [Online]. Available: https://doi.org/10.1145/1540276.1540279

- [15] M. A. Ferrag, L. Maglaras, and A. Ahmim, “Privacy-preserving schemes for ad hoc social networks: A survey,” IEEE Communications Surveys Tutorials, vol. 19, no. 4, pp. 3015–3045, 2017.

- [16] X. Wu, X. Ying, K. Liu, and L. Chen, “A survey of privacy-preservation of graphs and social networks,” in Managing and Mining Graph Data, C. C. Aggarwal and H. Wang, Eds. Boston, MA: Springer US, 2010, pp. 421–453. [Online]. Available: https://doi.org/10.1007/978-1-4419-6045-0_14

- [17] W. Aiello, Y. Ishai, and O. Reingold, “Priced oblivious transfer: How to sell digital goods,” in Advances in Cryptology - EUROCRYPT 2001, International Conference on the Theory and Application of Cryptographic Techniques, Innsbruck, Austria, May 6-10, 2001, Proceeding, ser. Lecture Notes in Computer Science, vol. 2045. Springer, 2001, pp. 119–135.

- [18] R. Agrawal and R. Srikant, “Privacy-preserving data mining,” SIGMOD Rec., vol. 29, no. 2, p. 439–450, may 2000. [Online]. Available: https://doi.org/10.1145/335191.335438

- [19] L. Sweeney, “K-anonymity: A model for protecting privacy,” Int. J. Uncertain. Fuzziness Knowl.-Based Syst., vol. 10, no. 5, p. 557–570, oct 2002. [Online]. Available: https://doi.org/10.1142/S0218488502001648

- [20] A. Machanavajjhala, D. Kifer, J. Gehrke, and M. Venkitasubramaniam, “L-diversity: Privacy beyond k-anonymity,” ACM Trans. Knowl. Discov. Data, vol. 1, no. 1, p. 3–es, mar 2007. [Online]. Available: https://doi.org/10.1145/1217299.1217302

- [21] N. Li, T. Li, and S. Venkatasubramanian, “t-closeness: Privacy beyond k-anonymity and l-diversity,” in 2007 IEEE 23rd International Conference on Data Engineering, 2007, pp. 106–115.

- [22] C. Dwork, “Differential privacy,” in Automata, Languages and Programming, M. Bugliesi, B. Preneel, V. Sassone, and I. Wegener, Eds. Berlin, Heidelberg: Springer Berlin Heidelberg, 2006, pp. 1–12.

- [23] M. Xue, P. Papadimitriou, C. Raïssi, P. Kalnis, and H. K. Pung, “Distributed privacy preserving data collection,” in Database Systems for Advanced Applications, J. X. Yu, M. H. Kim, and R. Unland, Eds. Berlin, Heidelberg: Springer Berlin Heidelberg, 2011, pp. 93–107.

- [24] M. Zhang, H. Lin, S. Takagi, Y. Cao, C. Shahabi, and L. Xiong, “Csgan: Modality-aware trajectory generation via clustering-based sequence gan,” in 2023 24th IEEE International Conference on Mobile Data Management (MDM), 2023, pp. 148–157.

- [25] N. R. Adam and J. C. Worthmann, “Security-control methods for statistical databases: A comparative study,” ACM Comput. Surv., vol. 21, no. 4, p. 515–556, dec 1989. [Online]. Available: https://doi.org/10.1145/76894.76895

- [26] L. C. I. S. Moskowitz, “A decision theoretical based system for information downgrading,” 2000. [Online]. Available: https://api.semanticscholar.org/CorpusID:61137631

- [27] V. Verykios, A. Elmagarmid, E. Bertino, Y. Saygin, and E. Dasseni, “Association rule hiding,” IEEE Transactions on Knowledge and Data Engineering, vol. 16, no. 4, pp. 434–447, 2004.

- [28] B. Becker and R. Kohavi, “Adult,” UCI Machine Learning Repository, 1996, DOI: https://doi.org/10.24432/C5XW20.

- [29] A. Asuncion and D. Newman, “Uci machine learning repository,” 2007.