Proofs and additional experiments on Second order techniques for learning time-series with structural breaks

Abstract

We provide complete proofs of the lemmas about the properties of the regularized loss function that is used in the second order techniques for learning time-series with structural breaks in Osogami (2021). In addition, we show experimental results that support the validity of the techniques.

Appendix A Introduction

We study a nonstationary time-series model, , having time-varying weights, at step . Given an input at , the model makes a prediction about the target . In online learning, we update to after observing and use to make the prediction, , about the next target .

Osogami (2021) proposes to find the weights that, at every step , minimize the following weighted mean squared error with a regularization term:

| (7) |

where

| (1) |

and is the Hessian of (LABEL:eq:WMSE):

| (3) |

Osogami (2021) presents the following lemmas about the properties of the regularized loss function (LABEL:eq:reg_loss).

Lemma LABEL:lemma:reg (Osogami, 2021)

For linear models, the minimizer of the regularized loss function (LABEL:eq:reg_loss) is given by

where

and . Then can be computed from in time by applying the Sherman-Morrison lemma twice.

Lemma LABEL:lemma:invariance (Osogami, 2021)

Consider an invertible linear transformation of order , and let

for each . Let the weights except the intercept be contravariate to in that transforms into

Then the loss function (LABEL:eq:reg_loss) is invariant to .

The rest of the article is organized as follows. In Section B, we prove Lemma LABEL:lemma:reg and Lemma LABEL:lemma:invariance. In Section C, we provide the experimental results that were omitted in Osogami (2021) due to space considerations. Throughout we refer to the equations, lemmas, and figures in Osogami (2021) with the same labels as Osogami (2021). Specifically, Equations (1)-(12), Lemmas 1-3, and Figures 1-2 refer to those appeared in Osogami (2021).

Appendix B Proofs

B.1 Proof of Lemma LABEL:lemma:reg

We will use the following notations:

| (15) | ||||

| (18) | ||||

| (21) |

where can be written recursively as follows:

| (22) | ||||

| (23) |

We can then write our loss function as follows:

| (24) |

Because is a quadratic function, its minimizer is given by the in the lemma, where

| (25) |

By (LABEL:eq:rec_H) and (23), we can write recursively as

| (26) |

This completes the proof of the lemma.

B.2 Proof of Lemma LABEL:lemma:invariance

It is known that is invariant to . Specifically, transforms into , where

| (27) | ||||

| (28) | ||||

| (29) |

It thus suffices to show that the regularization term is invariant to . Observe that transforms into , where

| (30) | ||||

| (31) | ||||

| (32) |

Thus, , proving the lemma.

Appendix C Additional experiments

C.1 Experiments on regularization

C.1.1 Sensitivity of L2 regularization to the transformation of the coordinates

In Figure 4, we show that standard L2 regularization is sensitive to the transformation of the coordinates of explanatory variables. Recall that one minimizes the following loss function with standard L2 regularization:

| (5) |

Here, we have two explanatory variables, and , and the training data is generated according to a linear model , where . In online learning, we often cannot normalize the variables (to have unit variance) a priori. If a scaled variable is observed (and not normalized), the corresponding true weight is also scaled . Depending on the value of , L2 regularization has varying effect on . For a large , the magnitude of the estimated is large and hence is reduced by a large amount by L2 regularization. This however implies that the corresponding unscaled weight gets smaller than what is given when the variables are normalized (to have unit variance).

C.1.2 Effectiveness of the proposed regularization

In Figure 5, we compare the effectiveness of our regularization against L2 regularization. Here, we learn a time-series of the monthly sunspot number from January 1749 to December 1983 (2,820 steps)111https://datamarket.com/data/set/22t4/. We use this dataset primarily because it exhibits large fluctuations of the magnitude. We train autoregressive (AR) models of varying order, as indicated in each panel of the figure. At each step, the parameters are optimized in a way that they minimize either the loss function with L2 regularization (LABEL:eq:loss_standardL2) or the one with our regularization (LABEL:eq:loss_invariant), where we fix .

(a) Order 2

(b) Order 3

(c) Order 4

Overall, our regularization compares favorably against L2 regularization. In particular, the best RMSE of 16.31 is achieved by our regularization at for the model with the third order (Figure 5 (b)), while L2 regularization cannot reduce the RMSE below 16.38 for any and for any order. Although the effectiveness of regularization depends on particular data, the results of this experiment suggest that our regularization not only can be performed in time but also has the expected effect of regularization, sometimes outperforming L2 regularization (e.g. when a time-series involves large fluctuations).

C.2 Experiments on recursively computing pseudo-inverse

Osogami (2021) proposes to update the inverse Hessian based on the following lemma:

Lemma LABEL:lemma:update_pseudo (Osogami, 2021)

For a symmetric and , let

| (6) |

Then the pseudo-inverse can be computed from as follows: if , then

if , then

Figure 6 shows the numerical error accumulated in recursively computed pseudo-inverse with two methods: Proposed and Baseline. Proposed is the one based on Lemma LABEL:lemma:update_pseudo. Baseline differs from Proposed in the following two definitions: and .

Specifically, we recursively compute the pseudo-inverse of the matrix for , where , and is a column vector of length , whose elements are i.i.d. according to the standard normal distribution. We then evaluate the relative error of a recursively compute matrix, which is the sum of the squared error of each element divided by the sum of the squared value of each element of the ground truth matrix, which is computed non-recursively.

Figure 6 suggests that Proposed is up to times more accurate than Baseline.

(a)

(b)

(c)

C.3 Details of the results from the experiments with the synthetic time-series

Figure 7 shows the values of the regularization coefficient used by Algorithm LABEL:alg:adaptive at each step in the experiments with the synthetic time-series, where the corresponding value of the forgetting rate is shown in Figure LABEL:fig:synthetic (c).

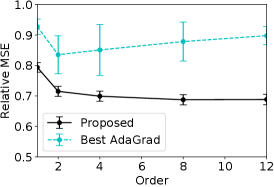

C.4 Details of the results from the experiments with stock indices

Figure 8-12 shows the results in Figure LABEL:fig:all with error bars. In each figure, the relative MSE of Algorithm LABEL:alg:adaptive and baselines are compared on a particular financial index. The baselines are vSGD, HGD, Almeida, Cogra, Adam, AdaGrad, and RMSProp, and each panel shows the relative MSE with one of the baselines. The figures also show error bars, which are computed on the basis of the standard deviation of the MSE on each of the 10 intervals of equal length.

Detailed results on SPX

The results with HGD, Almeida, and Cogra look similar to each other in the figure. This is because, for the financial time-series under consideration, the prediction by these three methods was quite close to the naive prediction that the absolute daily return stays unchanged from the previous day. Because we compute the error bars on the basis of the standard deviation of the MSE on each of the 10 intervals of equal length, the error bars of these three methods also look similar to each other.

Detailed results on Nikkei 225

Detailed results on DAX

Detailed results on FTSE 100

Detailed results on SSEC

References

- Osogami [2021] T. Osogami. Second order techniques for learning time-series with structural breaks. In Proceedings of the 35th AAAI Conference on Artificial Intelligence (AAAI-21), 2021.