Promoting Cooperation in Multi-Agent Reinforcement Learning via Mutual Help

Abstract

Multi-agent reinforcement learning (MARL) has achieved great progress in cooperative tasks in recent years. However, in the local reward scheme, where only local rewards for each agent are given without global rewards shared by all the agents, traditional MARL algorithms lack sufficient consideration of agents’ mutual influence. In cooperative tasks, agents’ mutual influence is especially important since agents are supposed to coordinate to achieve better performance. In this paper, we propose a novel algorithm Mutual-Help-based MARL (MH-MARL) to instruct agents to help each other in order to promote cooperation. MH-MARL utilizes an expected action module to generate expected other agents’ actions for each particular agent. Then, the expected actions are delivered to other agents for selective imitation during training. Experimental results show that MH-MARL improves the performance of MARL both in success rate and cumulative reward.

Index Terms— Multi-agent reinforcement learning, cooperation, mutual help

1 Introduction

In recent years, multi-agent reinforcement learning (MARL) has achieved great success in cooperative tasks, such as electronic games[1], power distribution[2], and cooperative navigation[3]. The design of the reward scheme is critical in MARL to instruct agents to fulfill corresponding objectives. Depending on the specific application, local reward scheme[4, 5] or global reward scheme[6, 7] is designed to provide rewards for individual agents or rewards shared by all the agents, respectively. The global reward scheme involves the problem of credit assignment [6]. Traditional algorithms with the local reward scheme optimize agents’ local rewards which lack explicit reflection on the mutual influence between agents, and thus result in insufficient coordination among agents[8]. Mutual influence of agents is essential in MARL, especially in cooperative tasks, where agents are expected to coordinate to achieve better performance from the perspective of the whole multi-agent system.

There are mainly three approaches that incorporate explicit mutual influence among agents into traditional MARL: communication methods, teacher-student methods, and local-global reward-based methods. Communication methods improve the cooperation of agents through communication protocols between agents. [9] trains agents’ policies alongside the communication. [10] further studies on when to communicate and how to use communication messages. [11] forms each agent’s intention and then communicates it to other agents. However, communication methods require explicit communication channels to exchange messages between agents, which may not be available in many applications.

Teacher-student methods instruct agents to learn from each other. [12] guides agents in learning from agents who are already experienced. [13, 14] consider the framework where agents teach each other while being trained in a shared environment. [15, 16] conduct deeper research on how to reuse received instructions from teacher agents. In [17], agents implicitly teach each other via sharing experiences. However, teacher-student methods require agents to be identical to directly utilize other agents’ instructions on actions.

Local-global reward-based methods simultaneously utilize local rewards and global rewards which can be constructed by local rewards. [18] learns two policies with local rewards and global rewards respectively, and restricts the discrepancy between the two policies. [8] maintains a centralized critic to learn from global rewards and a decentralized critic to learn from local rewards, and simultaneously optimizes the two critics. However, local-global reward-based methods require the design of global rewards based on the local reward scheme. In addition, they only consider the relationship between a single agent and the whole multi-agent system from the perspective of the reward scheme, without detailed relationships among agents.

In this paper, we propose a novel algorithm Mutual-Help-based MARL (MH-MARL) to instruct agents to help each other while being trained by traditional MARL algorithms, where an expected action module is added to traditional MARL algorithms. In traditional MARL with the local reward scheme, agents only optimize their own policies, ignoring other agents’ performance. In contrast, each agent learns to generate actions that can help increase other agents’ returns while optimizing its own return in MH-MARL. Specifically, each agent selectively imitates the actions that are expected by other agents while being trained by a traditional MARL algorithm. The expected actions are generated by an expected action module. The selective imitation follows the principle that an agent chooses to learn to help others if helping others does not seriously harm its own performance. Note that MH-MARL considers mutual help between agents without the requirement of communication during execution. Besides, agents are not required to be identical. We evaluate MH-MARL in a flocking navigation environment[19] where cooperation between agents is crucial for success. Experimental results demonstrate that MH-MARL improves performance in both success rate and reward. The main contributions of this paper are listed as follows:

-

•

A novel algorithm MH-MARL is proposed to promote cooperation in the local reward scheme of MARL by utilizing an expected action module to instruct agents to selectively help each other while optimizing their own policies.

-

•

Experiments are conducted to verify the effectiveness of MH-MARL in the cooperation task and its capability to be built on different fundamental MARL algorithms.

2 Background

2.1 Markov Games with Local Reward Scheme

Markov Games with the local reward scheme is generally modeled similarly to [4, 5]. At each time step , each agent chooses action to interact with the environment based on its local observation and agent’s own policy . The combinations of each agent’s observation and each agent’s action are denoted as joint observation and joint action , respectively. After agents’ interaction with the environment, each agent receives a new local observation and a local reward . The goal of each agent’s policy is to maximize the expectation of its return, which is also known as cumulative reward: , where is a discount factor.

2.2 Multi-Agent Reinforcement Learning Framework

Actor-critic architecture and centralized training and decentralized execution (CTDE) are two commonly used components in MARL framework.

In actor-critic architecture, each agent learns an ‘Actor’ and a ‘Critic’. The ‘Actor’ represents a policy function that can generate action according to observation . The ‘Critic’ represents an action-value function, also known as Q-function, which estimates the return of a policy given current actions and observations .

In CTDE[4], during training, agents have access to other agents’ observations and actions. This helps agents to estimate the expected return more accurately from a more comprehensive perspective. During execution, each agent is required to choose its action merely based on its own observation, which enables agents to act in a distributed manner.

MADDPG[4] is a representative MARL algorithm with the local reward scheme. The Q-functions in MADDPG are optimized by minimizing the following loss:

| (1) |

where is a replay buffer to store and reuse experiences of agents, and is the target value obtained by:

| (2) |

where and are target functions as backups to stabilize training.

The policy functions in MADDPG is optimized by minimizing the following loss:

| (3) |

3 Algorithm

Traditional MARL algorithms do not consider active and explicit mutual influence of agents’ actions on each other sufficiently, although Q-functions take other agents’ actions and observations into account in CTDE mainly for the problem of non-stationarity. Agents’ mutual influence is essential, especially in cooperative tasks, where agents are supposed to achieve better performance through teamwork. In the local reward scheme, where agents only optimize their own returns, the lack of consideration of mutual influence is more obvious.

Therefore, we propose a novel algorithm Mutual-Help-based MARL (MH-MARL) to instruct agents to help each other by adding an expected action module to traditional actor-critic architecture, as shown in Fig. 1. MH-MARL aims to guide each agent in helping others without severely harming its own performance, and thus achieves the effect of ‘one for all, all for one’ in cooperative tasks. MH-MARL can be built on any traditional MARL algorithms with actor-critic architecture and CTDE in the local reward scheme. In this paper, we choose to build MH-MARL on a representative MARL algorithm MADDPG[4]. Mutual help with expected action module during training consists of two processes: generating the expected actions for each agent and utilizing generated expected actions from other agents.

Firstly, we focus on how to generate the expected actions. The Q-function of each agent involves all the agents’ actions as a part of input in CTDE, which represents that other agents’ actions have an impact on the return of the agent. Thus, if each agent expects to maximize its own performance, the agent can rely on the adjustment of other agents’ actions, apart from the adaption of the agent’s own actions. To make it clear which actions of other agents can help an agent to the utmost extent, each agent maintains an expected policy function to generate expected actions of other agents according to joint observation by minimizing the following loss:

| (4) |

where experiences are randomly extracted from the replay buffer as (3), is generated by the agent’s current policy , and represents actions of other agents expected by agent . As the progress of training, the Q-functions will be more accurate as trained by minimizing (1), and then can generate the expected actions of other agents more accurately.

Secondly, we consider how to utilize expected actions from other agents when each agent optimizes its own policy function . For each agent , if we consider optimizing only to help another agent , then the action generated by should imitate (expected action for the agent in ) , which is realized by minimizing the discrepancy between and agent’s own action . However, may severely harm the performance of agent in some cases, and thus full adoption of expected actions will frustrate the optimization of agent . Therefore, the agent only considers imitating the expected actions which do not severely decrease its own performance. This selective imitation is realized by minimizing the following loss:

| (5) | ||||

where is a step function for comparison, and is a positive constant. Since each agent can receive expected actions from all the other agents, the selective imitation is averaged over all these expected actions.

In addition to helping others, each agent also optimizes its policy function to maximize its own return by minimizing (3) as traditional MARL. The selective imitation to help others and the traditional MARL to optimize its own return are fulfilled simultaneously using the following loss function:

| (6) |

where is the mix ratio of the two loss terms. To automatically balance the two loss terms, is calculated every minibatch to convert the two loss terms to the same scale:

| (7) |

where is the size of the minibatch and is a hyperparameter. The whole algorithm of MH-MARL built on MADDPG is shown in Algorithm 1.

4 Experiments

4.1 Main Experiments

To verify the effectiveness of MH-MARL, we conduct experiments in a flocking navigation environment[19], where agents are required to navigate to a target area while maintaining their flock without collisions. It is a highly cooperative task since agents need to precisely control the distances between them to avoid both collisions and destroying the flock. We build our MH-MARL on a representative MARL algorithm MADDPG[4] to form MH-MADDPG, and contrast it with four baseline algorithms: MADDPG, MADDPG-GR, DE-MADDPG, and SEAC-MADDPG. MADDPG-GR uses a global reward which is the sum of local rewards and shared by all agents. DE-MADDPG[8] simultaneously learns decentralized Q-functions to optimize local rewards and centralized Q-functions to optimize global rewards (which is the sum of local rewards as MADDPG-GR). SEAC-MADDPG is designed based on MADDPG to directly share experiences between agents[17]. and as hyperparameters. All the algorithms are run in 5 seeds.

Convergence curves of success rate and reward are plotted in Fig. 2. It shows that our algorithm MH-MADDPG learns the fastest during training and achieves the best success rate and reward after training, in comparison with four baseline algorithms. Directly transforming the local reward scheme to a global reward scheme (MADDPG-GR) results in poor performance. Both DE-MADDPG and SEAC-MADDPG surpass their fundamental MARL algorithm MADDPG in success rate and reward, but are still inferior to MH-MADDPG. Besides, due to the utilization of other agents’ experience, the stability of SEAC-MADDPG is the worst.

4.2 Ablation Experiments

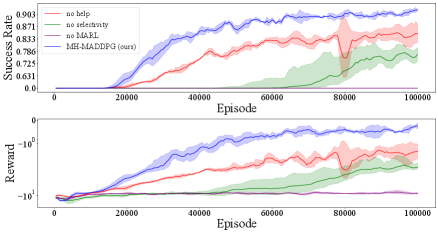

To validate the effectiveness of all the parts of MH-MARL, we design three ablation algorithms of MH-MADDPG: ‘no help’ refers to the algorithm removing the expected action module in MH-MARL, which is exactly MADDPG; ‘no selectivity’ removes the step function in (5) for full adoption of expected actions; ‘no MARL’ removes the traditional MARL loss term (3) in (6) to only help others.

Convergence curves of ablation experiments are plotted in Fig. 3. It demonstrates that MH-MADDPG is largely superior to three ablation algorithms throughout the training process, which validates the effectiveness of all the parts of MH-MARL. Specifically, in contrast to ‘no help’ (MADDPG), ‘no MARL’ can hardly improve the performance by training, and ‘no selectivity’ hinders the training in the early stage. It shows the importance of emphasis on the agent’s own performance compared to helping others.

4.3 Experiments with another fundamental MARL algorithm

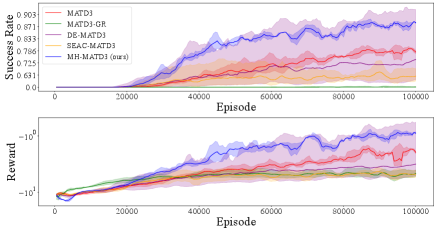

In previous experiments, we choose MADDPG as the fundamental MARL algorithm to implement our MH-MARL. However, MH-MARL is designed to be able to build on any MARL algorithm with actor-critic architecture and CTDE in the local reward scheme. In this subsection, we choose to build MH-MARL on MATD3[5], instead of MADDPG. Baseline algorithms are also adjusted to use MATD3 as their fundamental MARL algorithms.

Convergence curves of success rate and reward are plotted in Fig. 4. The figure demonstrates that MH-MATD3 still surpasses the baseline algorithms by a large margin in success rate and reward, which validates the flexibility in the choice of the fundamental MARL algorithm. Specifically, DE-MATD3 and SEAC-MATD3 are even inferior to their fundamental algorithm MATD3, and the stability of DE-MATD3 is poor, unlike MH-MATD3.

5 Conclusion and Future Work

We propose a novel algorithm MH-MARL, which utilizes an expected action module to instruct agents to help each other. Agents first generate expected actions for other agents, and then other agents selectively imitate corresponding expected actions while being trained by a traditional MARL algorithm. MH-MARL emphasizes optimizing an agent’s own performance as the primary goal, and mutual help is regarded as the secondary goal to promote cooperation. Experiments are conducted to verify the superiority of MH-MARL in the improvement of performance in the cooperative task. Experimental results also show that MH-MARL can be built on different fundamental MARL algorithms. In the near future, we expect to apply MH-MARL to other cooperative tasks.

References

- [1] Oriol Vinyals, Igor Babuschkin, Wojciech M Czarnecki, Michaël Mathieu, Andrew Dudzik, Junyoung Chung, David H Choi, Richard Powell, Timo Ewalds, Petko Georgiev, et al., “Grandmaster level in starcraft ii using multi-agent reinforcement learning,” Nature, vol. 575, no. 7782, pp. 350–354, 2019.

- [2] Jianhong Wang, Wangkun Xu, Yunjie Gu, Wenbin Song, and Tim C Green, “Multi-agent reinforcement learning for active voltage control on power distribution networks,” Advances in Neural Information Processing Systems, vol. 34, pp. 3271–3284, 2021.

- [3] Yue Jin, Yaodong Zhang, Jian Yuan, and Xudong Zhang, “Efficient multi-agent cooperative navigation in unknown environments with interlaced deep reinforcement learning,” in ICASSP 2019-2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). IEEE, 2019, pp. 2897–2901.

- [4] Ryan Lowe, Yi Wu, Aviv Tamar, Jean Harb, Pieter Abbeel, and Igor Mordatch, “Multi-agent actor-critic for mixed cooperative-competitive environments,” in Proceedings of the 31st International Conference on Neural Information Processing Systems, 2017, pp. 6382–6393.

- [5] Johannes Ackermann, Volker Gabler, Takayuki Osa, and Masashi Sugiyama, “Reducing overestimation bias in multi-agent domains using double centralized critics,” in NeurIPS Workshop on Deep RL, 2019.

- [6] Jakob Foerster, Gregory Farquhar, Triantafyllos Afouras, Nantas Nardelli, and Shimon Whiteson, “Counterfactual multi-agent policy gradients,” in Proceedings of the AAAI conference on artificial intelligence, 2018, vol. 32.

- [7] Tabish Rashid, Mikayel Samvelyan, Christian Schroeder, Gregory Farquhar, Jakob Foerster, and Shimon Whiteson, “Qmix: Monotonic value function factorisation for deep multi-agent reinforcement learning,” in International conference on machine learning. PMLR, 2018, pp. 4295–4304.

- [8] Hassam Ullah Sheikh and Ladislau Bölöni, “Multi-agent reinforcement learning for problems with combined individual and team reward,” in 2020 International Joint Conference on Neural Networks (IJCNN). IEEE, 2020, pp. 1–8.

- [9] Sainbayar Sukhbaatar, Rob Fergus, et al., “Learning multiagent communication with backpropagation,” Advances in neural information processing systems, vol. 29, 2016.

- [10] Jiechuan Jiang and Zongqing Lu, “Learning attentional communication for multi-agent cooperation,” Advances in neural information processing systems, vol. 31, 2018.

- [11] Woojun Kim, Jongeui Park, and Youngchul Sung, “Communication in multi-agent reinforcement learning: Intention sharing,” in International Conference on Learning Representations, 2020.

- [12] Lisa Torrey and Matthew Taylor, “Teaching on a budget: Agents advising agents in reinforcement learning,” in Proceedings of the 2013 international conference on Autonomous agents and multi-agent systems, 2013, pp. 1053–1060.

- [13] Felipe Leno Da Silva, Ruben Glatt, and Anna Helena Reali Costa, “Simultaneously learning and advising in multiagent reinforcement learning,” in Proceedings of the 16th conference on autonomous agents and multiagent systems, 2017, pp. 1100–1108.

- [14] Shayegan Omidshafiei, Dong-Ki Kim, Miao Liu, Gerald Tesauro, Matthew Riemer, Christopher Amato, Murray Campbell, and Jonathan P How, “Learning to teach in cooperative multiagent reinforcement learning,” in Proceedings of the AAAI Conference on Artificial Intelligence, 2019, vol. 33, pp. 6128–6136.

- [15] Changxi Zhu, Yi Cai, Ho-fung Leung, and Shuyue Hu, “Learning by reusing previous advice in teacher-student paradigm,” in Proceedings of the 19th International Conference on Autonomous Agents and MultiAgent Systems, 2020, pp. 1674–1682.

- [16] Ercument Ilhan, Jeremy Gow, and Diego Perez Liebana, “Action advising with advice imitation in deep reinforcement learning,” in Proceedings of the 20th International Conference on Autonomous Agents and MultiAgent Systems, 2021, pp. 629–637.

- [17] Filippos Christianos, Lukas Schäfer, and Stefano Albrecht, “Shared experience actor-critic for multi-agent reinforcement learning,” Advances in neural information processing systems, vol. 33, pp. 10707–10717, 2020.

- [18] Li Wang, Yupeng Zhang, Yujing Hu, Weixun Wang, Chongjie Zhang, Yang Gao, Jianye Hao, Tangjie Lv, and Changjie Fan, “Individual reward assisted multi-agent reinforcement learning,” in International Conference on Machine Learning. PMLR, 2022, pp. 23417–23432.

- [19] Yunbo Qiu, Yue Jin, Lebin Yu, Jian Wang, Yu Wang, and Xudong Zhang, “Improving sample efficiency of multi-agent reinforcement learning with non-expert policy for flocking control,” IEEE Internet of Things Journal, pp. 1–14, 2023.