Projective Resampling Imputation Mean Estimation Method for Missing Covariates Problem

Abstract

Missing data is a common problem in clinical data collection and complicates the statistical analysis of such data. To overcome the problems caused by incomplete data, we propose a new imputation method called projective resampling imputation mean estimation (PRIME), which can also address the “curse of dimensionality” in imputation with less information loss. In simulation studies, we vary the sample size, missing-data rate, covariate correlation, and noise level, and all results show that PRIME outperforms other methods such as iterative least-squares estimation (ILSE), maximum likelihood (ML), and complete-case analysis (CC). Moreover, we conduct a study of influential factors in cardiac surgery-associated acute kidney injury (CSA-AKI), which shows that our method performs better than the other models. Finally, we prove that PRIME is consistent under some regularity conditions.

Keywords: projective resampling, missing covariates problem, imputation method, linear regression

1 Center for Applied Statistics, Renmin University of China,

2 School of Statistics, Renmin University of China,

3 State Key Laboratory of Cardiovascular Disease,

Fuwai Hospital, National Center for Cardiovascular Diseases, Chinese Academy of Medical Sciences and Peking Union Medical College

1 Introduction

In medical research, an investigator’s ultimate interest may be in inferring prognostic markers, given the patients’ genetic, cytokine, and/or environmental backgrounds [1, 2]. However, in practical applications, data are often missing. The most common approach to address missing-data problems is complete-case analysis (CC), which is simple but inefficient. CC can also lead to biased estimates when the data are not missing completely at random. The maximum likelihood method (ML [3, 4]) and the inverse probability weighting method (IPW [5, 6]) are also widely used approaches to address missing data. However, likelihood-based methods are sensitive to model assumptions, and re-weighting methods do not always make full use of the available data. Alternatively, imputation [7, 8] is a more flexible approach to cope with missing data.

However, a preliminary analysis of cardiac surgery-associated acute kidney injury (CSA-AKI) data used in Chen et al. [2] indicates that the missing-data patterns vary across individuals. Accordingly, new and more capable quantitative methods are needed for this individual-specific missing data. Furthermore, it is common for only a small fraction of records to have complete information across all sources. Existing methods do not work well when the percentage of unavailable data is high. To estimate the coefficients (rather than predicting them), Lin et al. [9] proposed the iterative least-squares estimation (ILSE) method to deal with individual-specific missing-data patterns using the classical regression framework, but it needs a complete set of observations to obtain the initial values, and its results might not converge when based on bad initial values. Furthermore, the ILSE method of Lin et al. [9] may have difficulty accommodating data missing from both important and unimportant variables.

In this study, based on the idea of projection resampling/random projection, we propose projective resampling imputation mean estimation (PRIME), a method that tackles the aforementioned drawbacks of existing methods. The key idea behind projection resampling/random projection comes from the Johnson-Lindenstrauss lemma [10], which states that pairwise distances are approximately preserved when a set of points is projected onto a randomly chosen low-dimensional subspace. There are several previous studies on projection resampling/random projection for dimension reduction, including Schulman [11] for clustering, Donoho [12] for signal processing, Shi et al. [13] for classification, Maillard and Munos [14] for linear regression, and Le et al. [15] for kernel approximation. Specifically, the idea of PRIME is to project the covariates along randomly sampled directions to obtain samples of scalar-valued predictors and kernels (dimension reduction). Next, a simple geometric average is taken over the scalar-predictor-based kernels to impute the missing parts (using all-sided information). Our method has several advantages. First, PRIME can deal with a high degree of missing data, even data containing no complete observations, while most existing methods require at least a fraction of the subjects to have fully complete observations. Second, we can average the imputed estimates from multiple projection directions and fully utilize the available information to reach a more reliable and useful result. Third, to reduce the undesirable influence of unimportant variables, PRIME can be easily extended to sparse PRIME (denoted as SPRIME), which is valuable in practical applications.

The remainder of the paper is organized as follows. Section 2 introduces the basic setup of PRIME and SPRIME. Theoretical properties are discussed in Section 3. Sections 4 and 5 present the numerical results using simulated and real data examples, respectively. Section 6 presents some concluding remarks. In addition, our proposed method is implemented in R, and the scripts to reproduce our results are available at https://github.com/eleozzr/PRIME. The proofs of the theorems are available in the Appendix.

2 Projective resampling imputation mean estimation (PRIME)

2.1 Model and estimation by PRIME

In this paper, let be the response variable of interest and be the covariate matrix. We assume that . We consider the presence of missing covariates by , which denotes the missing-data indicator for , where is 1 if is missing and is 0 otherwise. For each unit , denotes the available covariates set, e.g., for the complete case.

In this study, we focus on a linear regression model. Assume that the random sample is generated by:

| (1) |

where is the coefficient vector for the covariates and the ’s are independently identically distributed random errors. We assume and .

Covariates can be divided into two parts based on : for observed covariates and for missing covariates. Thus, equation (1) can be expressed as

where and denote the regression coefficients for the complete and incomplete covariates, respectively. For , which is unobserved, we follow Lin et al. [9] and take the expectation of given the observed covariates. Thus, we obtain the following equation:

| (2) |

Hence, by equation (2), we can impute the incomplete part using the information on to obtain an estimator for . We use the following estimator to estimate the missing components of the covariates for unit :

| (3) |

In equation (3), , where is a kernel function and is a bandwidth. In this way, we can make use of the information as fully as possible. To tackle the problem of “the curse of dimensionality”, we further transform the estimator in equation (3) using the projective resampling method. As shown in Figure 1, for subject , we project onto random directions , where denotes the cardinality of a set A, then obtain kernel values using the resulting scalars , and finally integrate the kernels through the geometric mean.

| (4) |

where is a random vector, with each entry chosen independently from a distribution that is symmetric about the origin with . In practice, we usually generate from or , but random vectors from other possible distributions can also be used.
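The projection step described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' R implementation: the Gaussian kernel, the standard normal directions, the number of directions, the bandwidth `h`, and the function names `prime_weight` and `impute_entry` are all hypothetical choices made for the example. Donors are assumed to be units for which both the target's observed covariates and the covariate to be imputed are available.

```python
import math
import random


def log_gaussian_kernel(u):
    """Log of the standard Gaussian density (the kernel choice is an assumption)."""
    return -0.5 * u * u - 0.5 * math.log(2.0 * math.pi)


def prime_weight(x_target, x_donor, directions, h):
    """Geometric mean of one-dimensional kernel values over random directions.

    x_target, x_donor: observed covariates restricted to the same index set.
    directions: random vectors of that same length.
    h: bandwidth, assumed fixed.
    """
    diff = [a - b for a, b in zip(x_target, x_donor)]
    log_sum = 0.0
    for r in directions:
        scalar = sum(rj * dj for rj, dj in zip(r, diff))  # projected difference
        log_sum += log_gaussian_kernel(scalar / h) - math.log(h)
    # Averaging the logs and exponentiating gives the geometric mean
    # and avoids taking the log of an underflowed kernel value.
    return math.exp(log_sum / len(directions))


def impute_entry(x_target, donors, donor_values, directions, h):
    """Kernel-weighted average of donor values for one missing covariate."""
    weights = [prime_weight(x_target, d, directions, h) for d in donors]
    total = sum(weights)
    return sum(w * v for w, v in zip(weights, donor_values)) / total


rng = random.Random(0)
dim, num_directions = 3, 5
directions = [[rng.gauss(0.0, 1.0) for _ in range(dim)]
              for _ in range(num_directions)]

x_target = [0.1, -0.2, 0.3]
donors = [[0.1, -0.2, 0.3], [2.0, 1.5, -1.0]]  # first donor matches the target
donor_values = [1.0, 5.0]                      # toy values of the missing covariate
imputed = impute_entry(x_target, donors, donor_values, directions, h=0.5)
```

In this toy example the first donor coincides with the target on the observed covariates, so it receives the larger weight and the imputed value is pulled toward its value of 1.0.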

Applying the above imputation strategy, we can obtain by using observed data for part and imputed data for part .

| (5) |

Therefore, we propose the following estimation equation:

| (6) |

Thus, the estimator of the regression coefficient can be solved by

Specifically, we propose the following algorithm:

2.2 Simultaneous fitting and selection by sparse PRIME

Because the number of disease-associated biomarkers is not expected to be large, it is important to take the sparsity assumption into account when not all variables contribute to the outcome. Hence, we assume the linear regression model in equation (1) is sparse and define the index set of the active and inactive predictors by and , respectively. Our practical goal is to identify which biomarkers in the CSA-AKI datasets are disease-related as well as to estimate the corresponding coefficients. The main idea of sparse PRIME is to replace the estimating equation (6) with the penalized estimating equations as follows:

| (7) |

where is the partial derivative for the penalty function with respect to . The least absolute shrinkage and selection operator (LASSO) estimator is defined to satisfy . Optimizing the functions in (7) with is computationally cumbersome because the functions are non-differentiable. Fortunately, the shooting algorithm proposed in Fu [16] can be used to compute the LASSO estimator. Moreover, Fu [16, 17] proved that the unique estimator of (7) is equivalent to the solution of the penalized objective function as follows:

We can solve this penalized regression-form problem and get a sparse estimator using the glmnet package in R.
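For readers who want to see the mechanics of the shooting (coordinate-wise) update, the following Python sketch solves a LASSO problem by cyclic soft-thresholding. It is a stand-in for illustration, not the glmnet call used in the paper. It assumes the objective 0.5 * RSS + lam * sum |beta_j|; glmnet scales the squared-error loss by 1/(2n), so its `lambda` is on a different scale. The function names and the toy design are hypothetical; in SPRIME the rows of `X` would hold observed and imputed covariates.

```python
def soft_threshold(z, lam):
    """Soft-thresholding operator S(z, lam)."""
    if z > lam:
        return z - lam
    if z < -lam:
        return z + lam
    return 0.0


def lasso_shooting(X, y, lam, n_sweeps=100):
    """Minimize 0.5 * sum_i (y_i - x_i . beta)^2 + lam * sum_j |beta_j|.

    X: list of rows (each a list of p numbers), y: list of responses.
    Assumes every column of X has a nonzero sum of squares.
    """
    n, p = len(X), len(X[0])
    beta = [0.0] * p
    col_sq = [sum(X[i][j] ** 2 for i in range(n)) for j in range(p)]
    for _ in range(n_sweeps):
        for j in range(p):
            # Inner product of column j with the partial residual
            # (the fit computed without coordinate j).
            rho = 0.0
            for i in range(n):
                fit_without_j = sum(X[i][k] * beta[k] for k in range(p) if k != j)
                rho += X[i][j] * (y[i] - fit_without_j)
            beta[j] = soft_threshold(rho, lam) / col_sq[j]
    return beta


# Toy orthogonal design: the second covariate has a weak signal and is shrunk to zero.
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0], [0.0, 1.0]]
y = [2.0, 0.1, 2.0, 0.1]
beta = lasso_shooting(X, y, lam=0.5)  # beta is [1.75, 0.0] for this design
```

A fixed number of sweeps keeps the sketch short; a practical implementation would stop when the coefficients change by less than a tolerance.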

3 Theoretical properties

We study the consistency of the projection resampling least estimator . Denote the true value of by . We make the following assumptions:

- (A1) with ;

- (A2) ;

- (A3) The kernel function is a symmetric density function with compact support and a bounded derivative;

- (A4) , where is a bounded set;

- (A5) For a missing-data pattern , let be the conditional expectation of and be the density of . Assume and have continuous second derivatives with respect to on the corresponding support;

- (A6) ;

- (A7) is bounded for all , and the limit exists;

- (A8) and , where is a finite and positive definite matrix;

- (A9) There exists a constant , .

Assumptions (A1)–(A5) are the same as the conditions in Lin et al. [9]. Specifically, Assumption (A1) requires under-smoothing to obtain a root-n consistent estimator, which is a commonly used regularity assumption in semiparametric regression. Assumption (A2) addresses the missing-data mechanism and ensures that the PRIME estimator is consistent. As mentioned in Lin et al. [9], Assumption (A2) is weaker than assuming the data are missing completely at random. Assumptions (A3)–(A5) are standard in nonparametric regression. Assumption (A3) is achieved when the kernel function is the Gaussian kernel, but it is more general. Assumption (A6) is a moment bound required in Arriaga and Vempala [20] and Li et al. [19], a necessary technical condition. Assumptions (A7) and (A8) are commonly used in penalized estimation problems [16, 17]. We assume to make sure that there are enough samples being used to estimate .

Theorem 1.

Suppose Assumptions (A1)-(A6) hold and , as , then in probability.

Theorem 2.

Suppose Assumptions (A1)-(A8) hold, , and tuning parameter as , then in probability.

4 Simulation

In this section, we consider several simulated scenarios to highlight the properties of PRIME in contrast to some other methods. We experimentally investigate the performance of the following methods:

For each model setting with a specific choice of parameters, we repeat the simulation 100 times and evaluate the performance of models using the normalized absolute distance (NAD) and the mean squared error (MSE), defined as follows:

In addition, we calculate the optimal MSE rate, defined as the proportion of times each method (except Full) produces the smallest MSE in repetitions. The MSE based on N repetitions is partitioned into MSE = Variance + Bias², as follows:

where and in this simulation study. For simplicity, we set bandwidth for PRIME, SPRIME and ILSE. In the following sections, we compare the methods using various settings for sample size, missing data rates, noise levels, and feature correlations.
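The decomposition and the optimal MSE rate can be checked numerically with a short Python sketch. The definitions below are assumptions that follow the usual conventions: the variance and squared bias are summed over coefficients and averaged over repetitions. The function names and all numbers are toy values for illustration and are not results from this study.

```python
def mse_decomposition(estimates, truth):
    """Return (mse, variance, bias_sq), each summed over coefficients.

    estimates: list of N coefficient vectors, one per repetition.
    truth: the true coefficient vector.
    """
    n_rep, p = len(estimates), len(truth)
    means = [sum(est[j] for est in estimates) / n_rep for j in range(p)]
    variance = sum((est[j] - means[j]) ** 2
                   for est in estimates for j in range(p)) / n_rep
    bias_sq = sum((means[j] - truth[j]) ** 2 for j in range(p))
    mse = sum((est[j] - truth[j]) ** 2
              for est in estimates for j in range(p)) / n_rep
    return mse, variance, bias_sq


def optimal_rate(mse_by_method):
    """Share of repetitions in which each method attains the smallest MSE.

    mse_by_method: dict mapping method name to a list of per-repetition MSEs.
    Ties are credited to the first method in dictionary order (an assumption).
    """
    names = list(mse_by_method)
    n_rep = len(mse_by_method[names[0]])
    wins = {name: 0 for name in names}
    for k in range(n_rep):
        best = min(names, key=lambda name: mse_by_method[name][k])
        wins[best] += 1
    return {name: wins[name] / n_rep for name in names}


# Toy inputs, not taken from the paper.
estimates = [[1.0, 2.0], [1.2, 1.8], [0.8, 2.2]]
truth = [1.0, 2.5]
mse, variance, bias_sq = mse_decomposition(estimates, truth)

rates = optimal_rate({
    "method_a": [0.1, 0.3, 0.2, 0.1],
    "method_b": [0.2, 0.2, 0.3, 0.4],
})
```

With the variance normalized by N rather than N - 1, the identity MSE = Variance + Bias² holds exactly up to floating-point rounding.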

4.1 Scenario 1: Different noise levels

The data generation model has the linear expression

where , , .

We generate from the multivariate normal distribution . We set the non-diagonal elements of equal to and the diagonal elements of equal to . For , we use the error distribution , where changes with . We consider the cases in which .
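As a sketch of how covariates with a common pairwise correlation can be simulated, the Python code below uses a shared-factor construction that needs only the standard library. The dimension `p=4`, the correlation `rho=0.5`, unit variances, and the function names are hypothetical values chosen for the example; they are not the settings of this scenario.

```python
import math
import random


def equicorrelated_normal(p, rho, rng):
    """Draw one p-vector with unit variances and common correlation rho.

    Uses X_j = sqrt(rho) * Z_0 + sqrt(1 - rho) * Z_j with independent
    standard normal Z_0, ..., Z_p, which requires 0 <= rho < 1.
    """
    shared = rng.gauss(0.0, 1.0)
    return [math.sqrt(rho) * shared + math.sqrt(1.0 - rho) * rng.gauss(0.0, 1.0)
            for _ in range(p)]


def sample_correlation(a, b):
    """Pearson correlation of two equally long sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)


rng = random.Random(42)
rows = [equicorrelated_normal(p=4, rho=0.5, rng=rng) for _ in range(5000)]
r01 = sample_correlation([r[0] for r in rows], [r[1] for r in rows])
```

The sample correlation `r01` should be close to the chosen `rho`; a general covariance matrix would instead require a Cholesky factor.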

Missing-data mechanisms are conventionally divided into three categories. Missing completely at random (MCAR) means that the missingness is independent of the values of the data. Missing at random (MAR) means that the propensity of data to be missing depends only on the observed values, whereas missing not at random (MNAR) covers the remaining case, in which the mechanism depends on the unobserved values (the variables that are missing). In our study, we consider situations that differ from the classical MCAR, MAR, and MNAR mechanisms.
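To make the three classical mechanisms concrete, the following Python sketch draws missingness indicators for a single covariate under each of them. It illustrates the textbook definitions only and is not the individual-specific mechanism used in this study. The logistic form, the constants, and the function names are hypothetical.

```python
import math
import random


def logistic(t):
    return 1.0 / (1.0 + math.exp(-t))


def missing_indicator(x_obs, x_mis, mechanism, rng):
    """Return 1 if x_mis is set to missing and 0 otherwise."""
    if mechanism == "MCAR":
        prob = 0.3                     # constant, unrelated to the data
    elif mechanism == "MAR":
        prob = logistic(-1.0 + x_obs)  # depends only on the observed covariate
    elif mechanism == "MNAR":
        prob = logistic(-1.0 + x_mis)  # depends on the value that goes missing
    else:
        raise ValueError("unknown mechanism: " + mechanism)
    return 1 if rng.random() < prob else 0


rng = random.Random(1)
data = [(rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)) for _ in range(2000)]
rates = {}
for mech in ("MCAR", "MAR", "MNAR"):
    flags = [missing_indicator(x1, x2, mech, rng) for x1, x2 in data]
    rates[mech] = sum(flags) / len(flags)
```

The only difference between the MAR and MNAR branches is which variable drives the missingness probability, which is exactly what separates the two assumptions.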

For each sample, variables are always available. We consider twelve “typical” missing patterns, which are detailed in Table 1. We divide the 12 patterns into two groups. Specifically, the first group consists of , and the second group consists of the remaining missing patterns. We randomly assign the missing patterns in to the sample with missing probability . Furthermore, we set the missing probability of the th unit for the patterns in as . Then we randomly assign the patterns in to the missing samples. The settings in Table 2 are used for the missing rate (MR).

| Group | Pattern | Variable | |||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Full | |||||||||||||

| ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | |||

| ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | |||

| ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | |||

| ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ||||

| ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ||||

| ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ||||

| ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | |||||

| ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | |||||

| ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | |||||

| ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ||||||||

| ✓ | ✓ | ✓ | ✓ | ✓ | |||||||||

| ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ||||||||

| Missing rate | ||||||||||

|---|---|---|---|---|---|---|---|---|---|---|

| 0.1 | 0.2 | 0.3 | 0.4 | 0.5 | 0.6 | 0.7 | 0.8 | 0.9 | ||

| 0.1 | 0.1 | 0.1 | 0.1 | 0.1 | 0.1 | 0.1 | 0.1 | 0.1 | ||

| -1.5 | -1.5 | -1.5 | -1.5 | -1.5 | -1.5 | -2 | -2 | -4 | ||

| -4 | -2 | -2 | -1.5 | -1.5 | -1 | -1 | -1 | -1 | ||

| 0.75 | 0.75 | 0.75 | 0.75 | 0.75 | 0.75 | 0.75 | 0.75 | 0.65 | ||

| -1.5 | -1.5 | -2 | -2 | -3 | -3 | -3.5 | -3.5 | -4 | ||

| -4 | -4 | -4 | -4 | -4 | -4 | -4 | -4 | -4 | ||

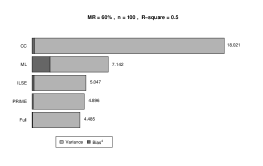

The MSE results are shown in Figures 3, 6, 9, and 12 for . The optimal MSE rates are displayed in Figures 4, 7, 10, and 13. To determine the relative performance, we rank the NADs of the five methods at each repetition. The mean NAD ranks are displayed in Figures 2, 5, 8, and 11 for . The results of the cases not shown here are available in the supplementary materials. In general, they exhibit patterns similar to those shown here.
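The ranking step can be sketched as follows in Python. The helper `mean_ranks` is hypothetical: it ranks methods within each repetition (rank 1 for the smallest score) and averages the ranks across repetitions. Tied scores receive the smallest applicable rank, which is an assumption; the scores below are toy numbers, not NAD values from this study.

```python
def mean_ranks(scores_by_method):
    """Average within-repetition rank of each method (rank 1 = smallest score).

    scores_by_method: dict mapping method name to a list of per-repetition scores.
    """
    names = list(scores_by_method)
    n_rep = len(scores_by_method[names[0]])
    totals = {name: 0.0 for name in names}
    for k in range(n_rep):
        for name in names:
            # Rank = 1 + number of methods with a strictly smaller score.
            smaller = sum(scores_by_method[other][k] < scores_by_method[name][k]
                          for other in names)
            totals[name] += 1 + smaller
    return {name: totals[name] / n_rep for name in names}


toy_scores = {
    "method_a": [0.10, 0.10, 0.20],
    "method_b": [0.20, 0.30, 0.40],
    "method_c": [0.30, 0.20, 0.10],
}
ranks = mean_ranks(toy_scores)
```

Ranking within each repetition removes the scale of the scores, so one repetition with an unusually large error cannot dominate the comparison.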

To make the comparison of Full, PRIME, and ILSE easier, the MSE bar plots for CC and ML are manually scaled because these two methods lead to much higher MSEs than the other methods. The main conclusions are as follows:

- 1. When and increase, the MSEs of Full, ILSE, and PRIME generally decrease, as expected. However, the relative performance of these three methods does not change.

- 2. Generally, PRIME outperforms ILSE and ML in terms of NAD, and all three significantly outperform CC. Surprisingly, PRIME is close or even superior to the Full method in estimating the coefficients of and when and and in estimating the coefficients of and when and . These results confirm the superiority of the PRIME method.

- 3. The comparison of results shows that the proposed PRIME method performs better than the other three methods (ILSE, CC, and ML). The bias and variance decomposition figures show that ILSE produces more biased estimates than PRIME. The CC method's estimates have extremely high variance in almost all ranges of , and CC's approximation error is larger when is not very high. Furthermore, the CC method produces biased estimates, as expected, because of the missing-data mechanism. The ML performance is not stable: ML’s performance is close to that of our proposed method when and , but ML has the poorest performance when and because ML estimators can be highly biased when the MAR assumption does not hold.

- 4. The optimal MSE rate of PRIME exceeds 40%; that is, PRIME yields the smallest MSE among all competitors in more than 40% of the trials in Scenario 1. When PRIME yields the smallest MSE, ILSE or ML most often yields the second-smallest. When PRIME does not yield the smallest MSE, ILSE or ML most often does. Not surprisingly, CC rarely produces the smallest MSE except when and .

4.2 Scenario 2: Varying correlation between variables

To compare the methods with different correlations, we consider the correlation between and in four situations: for , for , for and for . Here, we set with . All other aspects remain the same as in Scenario 1. For the missing rate, two settings are considered, where

- , so that the missing rate is approximately 60%,

- , so that the missing rate is approximately 90%.

The NAD and MSE results are shown in Figures 14, 15, 17, 18, 20, 21, 23, and 24. The optimal MSE rates are shown in Figures 16, 19, 22, and 25. The main conclusions are as follows:

- 1.

- 2. The optimal rate results show that PRIME has obvious advantages over the other methods because it produces the smallest MSE in almost all situations except when and . However, when increases, the gap between PRIME and the other methods increases. CC still has the worst MSE performance among the four methods.

4.3 Scenario 3: Taking sparse structure into consideration

In this scenario, we illustrate the proposed SPRIME using simulated data. We consider penalized Full (denoted as SFull), complete-case analysis with a penalty (denoted as SCC), ILSE, and ML as the alternatives. ILSE in Lin et al. [9] and ML in Jiang et al. [4] were proposed without considering the sparsity assumption; hence, we use them directly instead of using the penalized estimation form. We acknowledge that there are other approaches, such as those in Xue and Qu [21], that can be used to address a high-dimensional missing-data problem. However, the missing-data patterns in these methods are different from the individual-specific case.

The model used to generate data has the linear expression

where , , , and . We generate from the multivariate normal distribution . We set the non-diagonal element of equal to . For , we use the error distribution , where changes with . We consider only the case in which .

When the sparse structure is taken into consideration, the coefficients are equal to 0. Thus, the NAD criterion used in Scenarios 1 and 2 is no longer meaningful, and we use only the MSE and the optimal rate of MSE to assess the performance of the different methods. Several conclusions can be drawn from the figures:

- 1. Scenario 3 results yield conclusions similar to those of Scenarios 1 and 2. From Figure 26 we can see that SPRIME produces the smallest MSEs in almost all cases.

- 2. When MR=60%, the optimal rates of MSE are 0.95, 0.01, 0.00, and 0.04 for SPRIME, ILSE, CC, and ML, respectively. When MR=90%, the optimal rates of MSE are 0.94, 0.05, 0.01, and 0.00 for SPRIME, ILSE, CC, and ML, respectively. Not surprisingly, SPRIME, which accounts for the sparse structure, performs better than ILSE and ML without the penalty, which reconfirms the superiority of the PRIME-type approach.

5 Cardiac surgery-associated acute kidney injury study

In this example, we illustrate the proposed method by analyzing data on cardiac surgery-associated acute kidney injury (CSA-AKI). CSA-AKI is the second most common cause of acute kidney injury in the intensive care setting and sometimes causes death [2]. Because effective treatments for CSA-AKI are generally lacking, tools or methods for earlier identification are very important for prevention and management of the syndrome. To find more predictive biomarkers for CSA-AKI, Chen et al. [2] collected 32 plasma cytokines, including CTACK, FGFa, G-CSF, HGF, interferon-α2 (IFN-α2), interferon-gamma (IFN-γ), IL-1α, IL-1β, IL-2, IL-4, IL-6, IL-7, IL-8, IL-9, IL-10, IL-12p70, IL-12p40, IL-16, IL-17, IL-18, IP-10, MCP-1, MCP-3, M-CSF, MIF, MIG, MIP-1α (macrophage inflammatory protein-1α), MIP-1β (macrophage inflammatory protein-1β), SCF, SCGF-β, SDF-1α, and tumor necrosis factor-α (TNF-α). CSA-AKI severity is evaluated primarily by deltaScore, which is measured by serum creatinine alterations before and after surgery. Serum creatinine concentrations before and after surgery are measured by an identical testing platform in the clinical laboratory of the hospital.

We use the continuous variable deltaScore as the response. For simplicity, we standardize both the response and the covariates. Furthermore, we exclude subjects with missing deltaScores because the aforementioned methods (PRIME and SPRIME) apply primarily to missing covariates. Finally, 321 patients are enrolled for statistical analysis, of which only approximately 60% have complete covariate information.

Because the number of related variables is not expected to be large, as in Scenario 3 of the simulation study, we use SPRIME and SCC to simultaneously select variables and estimate the coefficients of the factors that might shed light on the deltaScore. We also use ILSE and ML directly, without the sparsity assumption. The regression coefficient estimates obtained from the four methods are listed in Table 3. Among the selected variables, IL-8, IL-10, IFN-γ, IL-16, and MIP-1α are also found to be related to CSA-AKI in Chen et al. [2]. However, in real-world data, it is difficult to objectively evaluate the performance of candidate methods. Therefore, we delete the subjects with missing covariates and construct missing data manually for the complete-case data of CSA-AKI. For the same reason as in Scenario 3, we consider only MSE to evaluate the estimation accuracy. However, because the true coefficients are unknown, we are unable to evaluate MSE as described in the simulation. Hence, we calculate instead, as follows:

where are the estimated coefficients obtained using the Full method.

The setting of missing-data patterns is the same as that in the simulation study except for the missing probability function. We randomly assign the missing patterns in to the sample with missing probability . Furthermore, we set the missing probability of the th unit for the patterns in as , where . Then, we randomly assign the patterns in to the missing samples. For the missing rate, we set . Consequently, the missing rate is approximately 90%. This procedure is repeated times to randomly generate missing data. As in the simulation, we also set the bandwidth .

The results are shown in Figure 27. The optimal rates of MSE are 1.00, 0.00, 0.00, and 0.00 for SPRIME, ILSE, CC, and ML, respectively. The results show that SPRIME has advantages over the other methods in estimation accuracy, as it produces the smallest . The missing-data mechanism and incorrect model assumptions may account for the worse performance of ILSE, CC, and ML.

| Variable | SPRIME | SCC | ILSE | ML |

| age | -0.058 | -0.099 | -0.167 | -0.012 |

| BMI | 0.040 | 0.048 | ||

| hospitalized time | 0.051 | 0.045 | 0.069 | 0.080 |

| CTACK | 0.020 | 0.207 | 0.117 | |

| FGFa | 0.137 | 0.137 | ||

| G-CSF | -0.403 | -0.462 | ||

| HGF | -0.120 | -0.162 | ||

| IFN-α2 | 0.233 | 0.393 | ||

| IFN-γ | 0.089 | 0.063 | 0.207 | 0.199 |

| IL-1α | -0.126 | -0.113 | ||

| IL-1β | -0.257 | -0.256 | ||

| IL-2 | 0.349 | 0.280 | ||

| IL-4 | -0.011 | -0.012 | ||

| IL-6 | -0.126 | -0.148 | ||

| IL-7 | -0.165 | -0.182 | ||

| IL-8 | 0.149 | 0.155 | 0.527 | 0.624 |

| IL-9 | -0.008 | -0.020 | -0.034 | |

| IL-10 | 0.040 | 0.023 | 0.043 | 0.064 |

| IL-12p70 | 0.347 | 0.361 | ||

| IL-12p40 | -0.029 | -0.099 | ||

| IL-16 | 0.100 | 0.109 | 0.143 | 0.118 |

| IL-17 | -0.322 | -0.303 | ||

| IL-18 | -0.071 | -0.080 | ||

| IP-10 | -0.057 | -0.170 | ||

| MCP-1 | 0.081 | 0.060 | ||

| MCP-3 | -0.099 | -0.233 | ||

| M-CSF | 0.080 | 0.024 | 0.042 | |

| MIF | -0.131 | -0.146 | ||

| MIG | 0.024 | 0.009 | 0.169 | 0.342 |

| MIP-1α | 0.036 | 0.039 | 0.315 | 0.330 |

| MIP-1β | -0.187 | -0.165 | ||

| SCF | 0.175 | 0.143 | 0.166 | 0.175 |

| SCGF-β | 0.093 | 0.0923 | 0.060 | 0.121 |

| SDF-1α | -0.096 | -0.057 | ||

| preLVEF | 0.056 | 0.073 | ||

| TNF-α | -0.100 | -0.071 |

6 Conclusions

In this study, we propose a projective resampling imputation mean estimation method to estimate the regression coefficients when covariates have a high missing-data rate. Our first set of random features projects data points onto a randomly chosen line and then averages the resulting scalar values to yield a comprehensive result. The random lines are drawn from the standard normal distribution to ensure less loss of information. We experimentally evaluate the performance of the PRIME method, and the results show that the proposed method is a feasible alternative.

However, the aforementioned work has been developed for a classical setting. Developing PRIME to combine generalized linear models or Cox models with missing data warrants future research. Furthermore, we considered only missing covariates even though it is common to encounter cases where both covariates and responses are missing. Hence, developing methods to address practical issues will be the focus of our future work.

References

- [1] Mengyun Wu, Jian Huang, and Shuangge Ma. Identifying gene-gene interactions using penalized tensor regression. Statistics in Medicine, 37(4):598–610, 2018.

- [2] Zhongli Chen, Liang Chen, Guangyu Yao, Wenbo Yang, Ke Yang, and Chenglong Xiong. Novel blood cytokine-based model for predicting severe acute kidney injury and poor outcomes after cardiac surgery. Journal of the American Heart Association, 9(22):e018004, 2020.

- [3] Arthur P. Dempster, Nan M. Laird, and Donald B. Rubin. Maximum likelihood from incomplete data via the EM algorithm. Journal of the Royal Statistical Society Series B, 39(1):1–22, 1977.

- [4] Wei Jiang, Julie Josse, Marc Lavielle, and TraumaBase Group. Logistic regression with missing covariates—parameter estimation, model selection and prediction within a joint-modeling framework. Computational Statistics and Data Analysis, 2020.

- [5] Shaun R Seaman and Ian R White. Review of inverse probability weighting for dealing with missing data. Statistical Methods in Medical Research, 22(3):278–295, 2013.

- [6] BaoLuo Sun and Eric J. Tchetgen Tchetgen. On inverse probability weighting for nonmonotone missing at random data. Journal of the American Statistical Association, 113(521):369–379, 2018.

- [7] Judith M Conn, Kung-Jong Lui, and Daniel L McGee. A model-based approach to the imputation of missing data: Home injury incidences. Statistics in Medicine, 8(3):263–266, 1989.

- [8] Xiaoyan Yin, Daniel Levy, Christine Willinger, Aram Adourian, and Martin G Larson. Multiple imputation and analysis for high-dimensional incomplete proteomics data. Statistics in Medicine, 35(8):1315–1326, 2016.

- [9] Huazhen Lin, Wei Liu, and Wei Lan. Regression analysis with individual-specific patterns of missing covariates. Journal of Business and Economic Statistics, 19(3):231–253, 2019.

- [10] William B. Johnson and Joram Lindenstrauss. Extensions of Lipschitz mappings into a Hilbert space. Contemporary Mathematics, 26:189–206, 1984.

- [11] Leonard J. Schulman. Clustering for edge-cost minimization (extended abstract). Proceedings of the thirty-second annual ACM symposium on Theory of computing, pages 547–555, 2000.

- [12] D.L. Donoho. Compressed sensing. IEEE Transactions on Information Theory, 52:1289–1306, 2006.

- [13] Qinfeng Shi, Hanxi Li, and Chunhua Shen. Rapid face recognition using hashing. 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, pages 2753–2760, 2010.

- [14] Odalric-Ambrym Maillard and Rémi Munos. Linear regression with random projections. Journal of Machine Learning Research, 13(1):2735–2772, 2012.

- [15] Quoc Le, Tamás Sarlós, and Alex Smola. Fastfood: approximating kernel expansions in loglinear time. ICML’13 Proceedings of the 30th International Conference on International Conference on Machine Learning - Volume 28, 2013.

- [16] Wenjiang J Fu. Penalized regressions: the bridge versus the lasso. Journal of Computational and Graphical Statistics, 7(3):397–416, 1998.

- [17] Wenjiang J Fu. Penalized estimating equations. Biometrics, 59(1):126–132, 2003.

- [18] Dimitris Achlioptas. Database-friendly random projections: Johnson-Lindenstrauss with binary coins. Journal of Computer and System Sciences, 66(4):671–687, 2003.

- [19] Ping Li, Trevor J Hastie, and Kenneth W Church. Very sparse random projections. Proceedings of the 12th ACM SIGKDD international conference on Knowledge discovery and data mining, pages 287–296, 2006.

- [20] Rosa I Arriaga and Santosh Vempala. An algorithmic theory of learning: Robust concepts and random projection. Machine Learning, 16:161–182, 2006.

- [21] Fei Xue and Annie Qu. Integrating multisource block-wise missing data in model selection. Journal of the American Statistical Association, pages 1–14, 2020.

- [22] Kani Chen, Shaojun Guo, Liuquan Sun, and Jane-Ling Wang. Global partial likelihood for nonparametric proportional hazards models. Journal of the American Statistical Association, 105(490):750–760, 2010.

Appendix

Proof of Theorem 1

Proof.

Let

Similar to Lin et al. [9], we first prove that is a solution of . We have

and we also have , hence we can get . Noting that is a function of , then

| (8) |

Similar to Lin et al. [9], it is easy to show that is the unique solution of .

To prove Theorem 1, it suffices to show that

| (9) |

We rewrite

where

Then follows from the weak law of large numbers. Noting that

| (10) |

Because the Gaussian kernel yields better empirical performance than other types of kernels, we assume here that is a Gaussian kernel function; then can be written as , where is a random matrix whose entries are chosen independently from either or . Hence, we can show that using Theorem 1 in Arriaga and Vempala [20]. Then, (10) can be written as

Under the conditions given, following the proof of Theorem 1 in Lin et al. [9] and Lemma 4 in Chen et al. [22], we know that

Let , and follows from Lemma 4 in Chen et al. [22]. Using Assumption (A5), then . Hence, we have

Let . Clearly, for the condition

Then, using the weak law of large numbers, we have

Thus, we obtain and the same argument also applies to . The above convergences imply that . Following the technical derivation in Lin et al. [9], Theorem 1 holds.

∎

Proof of Theorem 2