Progressive Object Transfer Detection

Abstract

Recent development of object detection mainly depends on deep learning with large-scale benchmarks. However, collecting such fully-annotated data is often difficult or expensive for real-world applications, which restricts the power of deep neural networks in practice. Alternatively, humans can detect new objects with little annotation burden, since humans often use the prior knowledge to identify new objects with few elaborately-annotated examples, and subsequently generalize this capacity by exploiting objects from wild images. Inspired by this procedure of learning to detect, we propose a novel Progressive Object Transfer Detection (POTD) framework. Specifically, we make three main contributions in this paper. First, POTD can leverage various object supervision of different domains effectively into a progressive detection procedure. Via such human-like learning, one can boost a target detection task with few annotations. Second, POTD consists of two delicate transfer stages, i.e., Low-Shot Transfer Detection (LSTD), and Weakly-Supervised Transfer Detection (WSTD). In LSTD, we distill the implicit object knowledge of source detector to enhance target detector with few annotations. It can effectively warm up WSTD later on. In WSTD, we design a recurrent object labelling mechanism for learning to annotate weakly-labeled images. More importantly, we exploit the reliable object supervision from LSTD, which can further enhance the robustness of target detector in the WSTD stage. Finally, we perform extensive experiments on a number of challenging detection benchmarks with different settings. The results demonstrate that, our POTD outperforms the recent state-of-the-art approaches. The codes and models are available at https://github.com/Cassie94/LSTD/tree/lstd.

Index Terms:

Object detection, Deep learning, Transfer learning, Weakly/Semi-supervised detection, Low-shot learning

I Introduction

Recent years have witnessed the breakthrough of object detection, with the development of deep learning frameworks [1, 2, 3, 4]. However, the remarkable performance of these detectors heavily depends on the large-scale benchmarks with object annotations. For a specific detection task in practice, it is often labor-intensive to collect such fully-annotated data. To alleviate this annotation burden, a number of weakly/semi-supervised detectors have been proposed with weakly-annotated images (i.e., with only image labels) [5, 6, 7, 8, 9, 10]. Even though such images can be obtained easily from the internet, the performance of these weakly/semi-supervised detectors is far from being competitive to fully-supervised detectors.

Alternatively, human can localize and recognize new objects successfully, after checking few examples with the prior object knowledge. Moreover, this capacity can be generalized via exploiting objects from weakly-annotated images. To mimic this learning process, several approaches have been proposed recently, by low-shot and/or transfer learning in a semi-supervised detection setting [11, 12, 13]. However, these detectors have difficulties in handling wild images with complex objects [11, 12], or learning to detect with weakly-annotated images [13]. For this reason, [14] introduces a baby learning framework, based on prior knowledge modelling, exemplar learning, and learning with video contexts. However, it often requires a large amount of weakly-annotated videos (e.g., 20,000 per category), and the iterative learning manner may reduce the discriminative power of deep neural networks.

To address these challenges, we propose a novel Progressive Object Transfer Detection (POTD) framework in Fig. 1. Our key insight is that, human-like learning can effectively integrate various object knowledge of different domains into a progressive detection process, which can boost a target detection task with very few instance-level annotations. More specifically, this paper makes three main contributions. First, POTD can efficiently mimic the learning manner of human, by progressively transferring object knowledge from source to target, from large-scale to low-shot, from fully-annotated to weakly-annotated images. With this multi-level learning process, one can effectively promote the detection performance with little annotation burden. Second, POTD consists of two novel detection stages, i.e., Low-Shot Transfer Detection (LSTD) and Weakly-Supervised Transfer Detection (WSTD). Each stage is elaborately designed with transfer insights. In LSTD, we distill implicit object knowledge in source to guide low-shot detection in target. This can effectively warm up WSTD to handle wild images later on. In WSTD, we design a recurrent object labelling mechanism for learning to detect with weakly-labeled images. Furthermore, we exploit reliable object knowledge from LSTD, which can enhance the robustness of target detector for weakly-supervised detection. Finally, we conduct extensive experiments on a number of challenging data sets, where our POTD outperforms the recent state-of-the-art approaches.

It is noted that, Low-Shot Transfer Detection (LSTD) has been published in AAAI 2018 [13]. We significantly extend it in the following ways. From the aspect of model designs, we extend LSTD to be a Progressive Object Transfer Detection (POTD) procedure. Specifically, we use LSTD as warm-up, and design a new Weakly-Supervised Transfer Detection (WSTD) stage to handle weakly-annotated images in the target domain. Consequently, our POTD can further boost the detection performance of LSTD with little annotation burden. From the aspect of experiments, we deeply investigate the proposed POTD, and show the effectiveness of both LSTD and WSTD on object detection.

II Related Work

Weakly-Supervised Object Detection. Since only image labels are available in the weakly-supervised object detection, most approaches are naturally based on the Multiple Instance Learning (MIL) framework [15], where each image is regarded as a bag of object instances. Positive categories are assumed to contain at least one object instance in the image, while negative categories contain no corresponding instances at all. However, MIL alternates between estimating the object representation and selecting positive object instances, which often converges to an unsatisfied local optima. To alleviate this problem, a number of efforts have been made by good initializations [16, 6], suitable optimization strategies[17, 18], and so on. Recently, deep learning has been used for weakly-supervised detection [7, 5, 8, 9]. A fundamental framework refers to weakly supervised deep detection network [7], which performs localization and classification within a two-stream architecture. To further improve performance, several extensions have been introduced by context design [19], saliency guidance [8], online classifier refinement [9], etc. However, the unsupervised selective search [20] or edgeBoxes[21] may limit the efficiency of these deep detection networks. More importantly, the performance of these detectors is considerably lower than that of fully-supervised detectors, due to the lack of elaborate object annotations.

Semi-Supervised Object Detection. Most semi-supervised detectors assume that several categories are fully-annotated [11, 12]. Via transferring the detection knowledge of these categories into the weakly-annotated ones, these detectors can improve the final performance on the whole set. However, [11, 12] attempt to adapt an image classifier to an object classifier, which is often effective on single-instance images. It may limit the detection capacity for wild images. In addition, these detectors often require moderate amount of object annotations, which can be still difficult or expensive to obtain.

Low-Shot Transfer Object Detection. Alternatively, [10] proposes a more reasonable data assumption to alleviate labelling burden, i.e., quite a few images are fully-annotated for each object category, and all other images are weakly-annotated with only image labels. But its performance is limited, without guidance of prior object knowledge. For this reason, several low-shot and/or transfer detection approaches [14, 13] have been proposed recently, in order to mimic the learning procedure of human. By taking advantage of large-scale benchmarks in source, these detectors can work with few object annotations in target. However, [13] ignores the weakly-annotated target images, which may restrict the generalization capacity of target detector. [14] attempts to borrow numerous weakly-annotated videos, but the iterative learning scheme lacks the efficiency of end-to-end learning. Different from these approaches, our POTD is a progressive learning procedure, which is built upon the fully end-to-end training framework with very few object annotations.

III Overall Learning Procedure of Progressive Object Transfer Detection (POTD)

Problem Definition. To effectively address a target detection task with little annotation burden, we define our detection problem according to the following settings in practice. First, we can access a well-trained detector in the source domain. Second, we partially annotate the training set in the target domain, e.g., quite a few images (like one-shot per category) are fully-annotated with object bounding boxes, and all others are weakly-annotated with only image labels. Finally, we consider one of the most challenging transfer cases, where the object categories in source and target are non-overlapped.

Overall Learning Procedure. In this work, we design a novel Progressive Object Transfer Detection (POTD) framework, for learning to detect like humans. The whole procedure of POTD is shown in Fig. 1, which consists of two elegant transfer stages, i.e., Low-Shot Transfer Detection (LSTD), and Weakly-Supervised Transfer Detection (WSTD). In LSTD, we adapt the source-domain detector to be a warm-up detector in the target domain. This stage can stabilize WSTD afterwards, by distilling rich object knowledge in the source domain. In WSTD, we adapt the warm-up detector to be a target detector, which can learn to detect with weakly-annotated images in a fully end-to-end learning manner. Via this human-like learning procedure, our POTD can effectively address the target detection task with little annotation burden.

Notations. To be concise, we list all the relevant notations in the procedure of POTD, w.r.t., two transfer detection stages including LSTD and WSTD in Table I. In the following, we describe these two stages of POTD in detail.

| General | Description |

|---|---|

| number of object classes in the source domain | |

| number of object classes in the target domain | |

| number of object proposals | |

| LSTD | Description |

| total training loss | |

| main loss (i.e., standard detection loss) | |

| coefficient of main loss | |

| loss of background depression (BD) | |

| coefficient of BD loss | |

| feature regions that refer to image background | |

| loss of source detection knowledge (SDK) | |

| coefficient of SDK loss | |

| SDK in source detector | |

| SDK in warm-up detector | |

| WSTD | Description |

| total training loss | |

| SDK loss | |

| coefficient of SDK loss | |

| SDK in target detector | |

| loss of recurrent object labelling (ROL) | |

| coefficient of ROL loss | |

| ROL loss for Classifier | |

| Prediction score vector of one image | |

| Ground truth image label | |

| Prediction score matrix of proposals for Classifier | |

| Pseudo label matrix of proposals for Classifier |

IV Low-Shot Transfer Detection (LSTD)

Since detection with weak annotations often gets stuck in local optima, we first design a warm-up stage, i.e., fine-tuning source-domain detector with fully-annotated images in the target domain. However, such annotated images are quite few (e.g., one-shot per category) for a target detection task, in order to reduce the annotation burden. This fact can significantly increase the fine-tuning difficulties. For this reason, we propose a novel low-shot transfer detection framework to alleviate overfitting.

IV-A Deep Detection Architecture for LSTD

We first design a flexible deep learning architecture, which can alleviate transfer difficulties from large-scale source domain to low-shot target domain. As shown in Fig. 2, it is a Faster-RCNN-style detection framework but with SSD-style region proposal network (RPN).

Why to Choose Faster-RCNN-Style Framework. This is mainly credited to one key design in Faster RCNN, i.e., region proposal network (RPN). More specifically, the object classifier in RPN is used to identify whether the proposal is object or not. Hence, it can learn the common traits of different objects, e.g., clear edge, uniform texture, and so on. By pretraining RPN with large-scale detection benchmark in the source domain, we can obtain the high-quality proposals for low-shot detection in the target domain. On the contrary, the object classification in SSD is one-stage, i.e., it has to deal with thousands of randomly-initialized proposals directly. This fact can deteriorate the target detection task, especially with only few annotated images.

Why to Design SSD-Style RPN. In the standard RPN [22], the bounding box regressor is designed separately for each categories. It means that, the parameters of regressor have to be re-initialized for each new domain, since object categories in different domains are often non-overlapped in practice. This often increases the overfitting risk during fine-tuning, when the target domain only contains few annotated images. Alternatively, we adapt RPN into a SSD style. Since the regressor in SSD is shared among all object categories, the pretrained parameters of SSD-style RPN can be reused as initialization in the low-shot target domain. This avoids the random re-initialization in the standard RPN, and thus reduces the fine-tuning burdens in the low-shot domain. In addition, we directly use SSD-style RPN for object localization, without regression refining after the ROI pooling in the standard Faster RCNN. The main reason is that, the multiple-convolutional-feature design of SSD is sufficient to localize objects with various sizes.

Discussion. Our deep architecture aims at reducing transfer learning difficulties for low-shot detection in the target domain. To achieve it, we flexibly leverage the core designs of both Faster RCNN and SSD. Additionally, this detector performs bounding box regression and object classification on two relatively separate places, which can further decompose the learning difficulties in low-shot detection.

IV-B Regularized Transfer Learning for LSTD

After designing a flexible deep architecture, we introduce an end-to-end regularized transfer learning framework for LSTD in Fig. 3. Specifically, this transfer procedure involves two detectors. Source Detector. We first use the large-scale detection benchmark in the source domain to train the basic architecture in Fig. 2. As a result, we obtain a source detector which contains rich source-domain object knowledge for generalization. Warm-Up Detector. After pretraining, we use source detector as initialization, and perform fine-tuning with a few fully-annotated training images in the target domain. The resulting detector is a target-domain detector. Since it can stabilize weakly-supervised transfer detection later on, we call it as the warm-up detector. We next describe how to perform fine-tuning to obtain the warm-up detector via LSTD.

Total Loss for LSTD. Due to the low-shot property, direct fine-tuning often traps into the overfitting risk. To alleviate this challenge, we propose two novel regularization terms, i.e., background depression (BD) and source-detection knowledge (SDK). Specifically, the total loss of fine-tuning can be written as

| (1) |

where refers to the standard loss summation of bounding box regression and object classification in the warm-up detector, and refer to BD and SDK regularization. , and are respectively the coefficients of the main loss, BD and SDK regularization.

Background Depression (BD) Regularization. In the low-shot scenario, the complex background may disturb the localization performance. For this reason, we propose a novel background-depression (BD) regularization, by using the ground-truth bounding boxes of fully-annotated training images in the target domain. First, we feed a target image into warm-up detector, and generate the convolutional feature cube from a middle-level convolutional layer. Second, we mask this convolutional cube with the ground-truth bounding boxes of all the objects in the image. Consequently, we can identify the feature regions that are corresponding to image background, namely . To depress the background disturbances, we use L2 regularization to penalize the activation of ,

| (2) |

With this , warm-up detector can suppress background regions while pay more attention to target objects, which is especially important for training with a few images. As shown in Fig. 4, our BD regularization can successfully reduce the background disturbances.

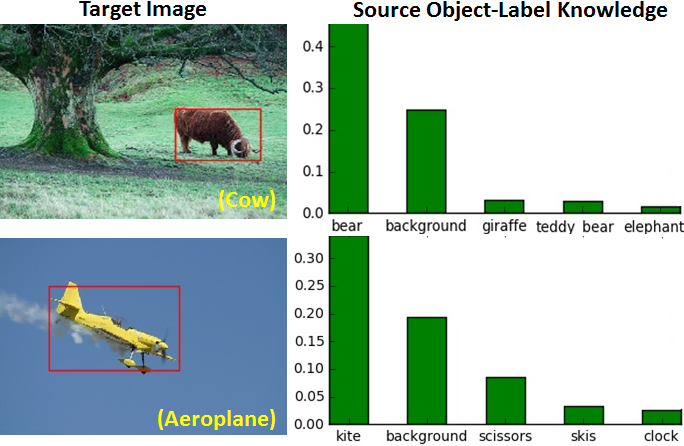

Source Detection Knowledge (SDK) Regularization. Our key insight is that, rich object knowledge in the large-scale source domain provides extra information about target domains. As shown in Fig. 5, Cow or Aeroplane may have a high response on Bear or Kite, due to the color or shape similarity. Apparently, this knowledge is important for fine-tuning warm-up detector, especially with few fully-annotated images in the target domain. Hence, we propose to distill it for each object proposal in the target domain.

(1) Extracting SDK from Source Detector. First, we feed a target image into warm-up detector, which can produce object proposals of this image. Then, we put these target proposals into the ROI pooling layer of source detector, which can generate a SDK matrix from the final FC layer. Each column of is the probability vector of a target proposal, w.r.t., categories (objects + background) in the source domain.

(2) Leveraging SDK into Warm-Up Detector. To incorporate SDK into fine-tuning, we add a SDK prediction branch at the end of warm-up detector. This branch can produce a prediction matrix for target proposals, where each column is the prediction vector of a target proposal, w.r.t., categories (objects + background) in the source domain. Consequently, we apply cross entropy between and as a regularization,

| (3) |

In this case, SDK can be effectively integrated into fine-tuning, which generalizes low-shot detection in the target domain.

(3) Discussion. LSTD is a learning step, instead of a detector. In this step, we transfer source detector (large-scale, source domain) into warm-up detector (low-shot, target domain). Specifically, we train warm-up detector with the regularization of source detector, i.e., we use source detector to extract SDK, and leverage it as extra supervision for training warm-up detector. As a result, LSTD can generalize warm-up detector with low-shot training images in the target domain. Moreover, it is worth mentioning that, our SDK transfer is different from knowledge distillation [23]. First, knowledge distillation is originally designed for model compression, while our SDK is proposed for transfer learning. Second, SDK is performed on each proposal for object detection. Hence, it is not the standard way of knowledge transfer, which often works on the whole image for object classification.

V Weakly-Supervised Transfer Detection (WSTD)

Via LSTD, we transfer source detector into warm-up detector in the target domain. Next, we apply weakly-annotated images to further boost target detection task. To achieve it, we design another critical stage of POTD, i.e., Weakly-Supervised Transfer Detection (WSTD), which can effectively handle weakly-annotated images in a fully end-to-end transfer framework (Fig. 6). Specifically, this transfer procedure involves two detectors. Warm-Up Detector. LSTD produces a warm-up detector, which is transferred from source detector, and trained on fully-annotated target images. Hence, this detector leverages the object knowledge from both source and target domains. Target Detector. We use warm-up detector as initialization, and perform fine-tuning with weakly-annotated images in the target domain. The resulting detector is a target-domain detector. Since it is used to produce the final detection result in the target domain, we call it as the target detector. We next describe how to perform fine-tuning to obtain target detector via WSTD.

Total Loss for WSTD. Specifically, we introduce the total loss of WSTD with two distinct parts,

| (4) |

On one hand, we transfer object supervision of warm-up detector via , which can further regularize target detector to enhance the generalization capacity. On the other hand, we design a novel recurrent object labelling (ROL) mechanism via , which can exploit confident proposals for learning to detect with weakly-annotated images in the target domain.

V-A Object Supervision From Warm-Up Detector

As mentioned before, weakly-annotated images lack object-level supervision, which makes the learning procedure trap into an unsatisfactory solution. Alternatively, warm-up detector can inherit rich object knowledge of source detector by LSTD. Hence, we propose to further transfer warm-up detector as target detector (Fig. 6), which can leverage the reliable object supervision to enhance weakly-supervised detection in the target domain.

Object Supervision from Warm-Up Detector. We fix warm-up detector as an extractor of object knowledge. First, we use the pretrained RPN of warm-up detector as an online proposal generator, which can produce high-quality proposals for weakly-annotated images in the target domain. Second, we feed these proposals into the ROI pooling layer of warm-up detector, and subsequently generate source detection knowledge from the SDK prediction branch.

Integrating Warm-Up Supervision into Target Detector. We add an extra FC layer as the SDK prediction branch of target detector. This branch can produce a prediction matrix for object proposals of weakly-annotated images. By using cross entropy between and , we integrate warm-up supervision into target detector,

| (5) |

Discussion. To further stabilize target detector with weakly-annotated images, we propose to leverage object supervision from warm-up detector. Note that, this supervision refers to source detection knowledge (SDK). It is transferred from source detector to warm-up detector (by Eq.(3)), and then from warm-up detector to target detector (by Eq. (5)). In other words, we use warm-up detector as a middle-stage, instead of directly transferring from source detector to target detector. Our key insight is that, warm-up detector is fine-tuned with fully-annotated target images during LSTD, i.e., it has been adaptively adjusted to produce effective target-domain proposals, and can reliably represent source-domain knowledge of these target proposals. Via such progressive learning, one can gradually promote the generalization capacity of object detector in the target domain.

V-B Recurrent Object Labelling (ROL)

For learning to detect with weakly-annotated images, we leverage source domain knowledge (i.e., object supervision of warm-up detector) in the previous section. Next, we integrate target domain knowledge for weakly-supervised detection. Specifically, we propose a recurrent object labelling (ROL) mechanism, which can exploit support proposals of weakly-annotated images, and refine object classifier online.

Classifier : Image-Level Supervision. After generating proposals of a weakly-annotated image, we feed them into ROI pooling of target detector (Fig. 6). For each proposal, conv13 in the target detector can generate a convolutional feature cube. We subsequently feed these cubes into recurrent object labelling (Fig. 7), which can identify confident proposals for classifier refinement. Specifically, we pass all the convolutional cubes through Classifier , and obtain a prediction matrix . Each column of refers to the probability vector of a proposal, and is the number of object categories in the target domain. Note that, we only have ground truth image label , since each image is weakly annotated in the stage of WSTD. To integrate this supervision into training, we sum over proposals and obtain an image score vector ,

| (6) |

Since there may be multiple objects in one image (i.e., multiple entries of can be 1), we apply the multi-label loss for Classifier ,

| (7) |

Via this image-level supervision, we can enhance reliability of proposal score , which will be used for object labelling afterwards.

Classifier : Support Proposal Mining. After obtaining the proposal score , we label object proposals in a recurrent manner. It is worth mentioning that, not all the proposals are confident enough to describe objects or background in an image. Hence, we design a support proposal mining procedure, where we label the highly-confident object and background proposals, according to the proposal score matrix . In the following, we illustrate the entire labelling procedure at Classifier , where we assume that the object category exists in an image.

(1) Labelling Support Object Proposals. First, we find the -th row of the prediction score matrix , and then pick out the most confident proposal which has the highest score,

| (8) |

Subsequently, we label this proposal as ‘ground truth box’ for category , and use its score as the pseudo label . Moreover, the spatial context is often important for weakly-supervised detection. Hence, we further assign category to those proposals, which are spatially adjacent to the highest-score proposal (e.g., IoU).

(2) Labelling Support Background Proposals. Traditionally, all the unlabelled proposals are assigned into the background category [9]. This design apparently introduces a large number of wrong annotations, since the unlabelled proposals, which are far from the highest-score proposal , may also contain objects. To alleviate it, we propose to exploit support background proposals from the rest unlabelled ones. Specifically, we assign the background category to the ones which have the moderate overlap (e.g., IoU) with the highest-score proposal . As a result, we can reduce wrong or redundant annotations which often lead to the unstable learning for weakly-supervised detection.

(3) Classification Loss. After labelling all the object categories in a weakly-annotated image, we can get the score matrix as pseudo label. For the support proposals of category , we assign the highest score into the corresponding entries of . This design can effectively associate the object category with its confident proposals, which stabilizes the training procedure of weakly-supervised detection [9]. For the unlabelled proposals, the corresponding columns are zero vectors in . Finally, we obtain the prediction score matrix from Classifier , and compute the cross entropy loss between and for training,

| (9) |

This procedure is done in a recurrent manner, and the total loss of our ROL module is

| (10) |

| LSTD | Source (large-scale, fully-annotated) | Target (low-shot, fully-annotated) |

|---|---|---|

| Task 1 | COCO (standard 80 classes, 118,287 training images) | ImageNet2015 (chosen 50 classes) |

| Task 2 | COCO (chosen 60 classes, 98,459 training images) | VOC2007 (standard 20 classes) |

| Task 3 | ImageNet2015 (chosen 181 classes, 151,603 training images) | VOC2010 (standard 20 classes) |

| Shots for Task 1 (mAP) | 1 | 2 | 5 | 10 | 30 |

|---|---|---|---|---|---|

| LSTDFT | 16.5 | 21.9 | 34.3 | 41.5 | 52.6 |

| LSTDFT+SDK | 18.1 | 25.0 | 35.6 | 43.3 | 55.0 |

| LSTDFT+SDK+BD | 19.2 | 25.8 | 37.4 | 44.3 | 55.8 |

| Shots for Task 2 (mAP) | 1 | 2 | 5 | 10 | 30 |

| LSTDFT | 27.1 | 46.1 | 57.9 | 63.2 | 67.2 |

| LSTDFT+SDK | 31.8 | 50.7 | 60.4 | 65.1 | 69.0 |

| LSTDFT+SDK+BD | 34.0 | 51.9 | 60.9 | 65.5 | 69.7 |

| Shots for Task 3 (mAP) | 1 | 2 | 5 | 10 | 30 |

| LSTDFT | 29.3 | 37.2 | 48.1 | 52.1 | 56.4 |

| LSTDFT+SDK | 32.7 | 40.8 | 49.7 | 54.1 | 57.9 |

| LSTDFT+SDK+BD | 33.6 | 42.5 | 50.9 | 54.5 | 58.3 |

| Tasks (mAP) | ||

|---|---|---|

| Task1 | 19.2 | 18.9 |

| Task2 | 34.0 | 34.5 |

| Task3 | 33.6 | 33.4 |

Discussion. First, we clarify the main difference between Online Instance Classifier Refinement (OICR) [9] and our ROL. Specifically, OICR is a label propagation and refinement technique for weakly-supervised detection. Similar to our ROL, it labels support object proposals via IOU. However, OICR assigns the background category without selection, while our ROL takes support proposal mining into account. As shown in Fig. 8, OICR labels many redundant proposals as background. What is worse, it tends to label true objects (e.g., left horse in the 1st image) mistakenly as background, and these noisy annotations can deteriorate the refining procedure of object classifier. On the contrary, our ROL carefully labels the contextual background regions around the confident proposals, which can reduce the labelling redundancy and improve the quality of training samples. Finally, we would like to emphasize that, our WSTD is used to imitate the generalization process of human learning, i.e., humans generalize the warm-up stage by exploiting objects from wild images without full annotations. To achieve it, WSTD investigates reliable object supervision from warm-up detector of LSTD. More importantly, it uses ROL for learning to detect objects from weakly-annotated images. In this case, WSTD can further generalize the detection performance in the target domain. Next, we evaluate progressive object transfer detection (POTD), by extensive experiments on a number of challenging datasets.

VI Experiments

In this section, we evaluate the performance of the proposed POTD on a number of challenging data settings. First, since POTD consists of two transfer detection stages (i.e., LSTD and WSTD), we deeply investigate them from different experimental aspects. Then, we compare our POTD with the state-of-the-art approaches, and show our contributions in practice.

VI-A LSTD

Data Settings. Since LSTD is a regularized transfer learning framework for low-shot detection, we adopt a number of detection benchmarks, i.e., COCO [25], ImageNet2015 [26], VOC2007 and VOC2010 [27], respectively as source and target of three transfer tasks (Table II). The training set is large-scale in the source domain of each task, while it is low-shot in the target domain (1/2/5/10/30 training images for each target-object class). The fully-annotated training shots are randomly selected in our experiments. To reproduce the results, we provide the data splits for all the tasks111. Furthermore, the object categories for source and target are carefully selected to be non-overlapped, in order to evaluate if LSTD can detect unseen object categories from few shots in the target domain. Finally, we use the standard PASCAL VOC detection rule on the test sets to report mean average precision (mAP) with 0.5 intersection-over-union (IOU).

Implementation Details. Unless stated otherwise, we perform LSTD as follows. First, the basic deep architecture of LSTD is built upon VGG16 [28], similar to SSD and Faster RCNN. For bounding box regression, we use the same structure in the standard SSD. For object classification, we apply the ROI pooling layer on conv7, and add two convolutional layers (conv12: , conv13: for task 1/2/3) before object classifier. Second, we select 100/100/64 proposals (task 1/2/3) for training source detector, while we select 64/64/64 proposals for warm-up detector. The loss coefficients of both BD and SDK are 0.5. Finally, the optimization strategy for both source and target is Adam [29], where the initial learning rate is 0.0002 (with 0.1 decay), the momentum/momentum2 is 0.9/0.99, and the weight decay is 0.0001. All our experiments are implemented on Caffe [30]. In the following, we evaluate the key designs of LSTD. To be fair, when we explore different settings of one design, others are with the basic setting above.

Basic Deep Structure of LSTD. We first evaluate the basic deep structure of LSTD respectively in the source and target domains, where we compare it with the closely-related SSD [24] and Faster RCNN [22]. For fairness, we choose task 1 to show the effectiveness. The main reason is that, the source data in task 1 is the standard COCO detection set, where SSD and Faster RCNN are well-trained with the state-of-the-art performance. Hence, we use the published SSD [24] and Faster RCNN [22] in this experiment, where the size of input images for SSD and our LSTD is , and Faster RCNN follows the settings in the original paper. In Table III, we report mAP on the test sets of both source and target domains in task 1. One can see that, our LSTD achieves a competitive mAP in the source domain. It illustrates that LSTD can be a state-of-art deep detector for large-scale training sets. More importantly, our LSTD outperforms both SSD and Faster RCNN significantly for low-shot detection in the target domain (one training image per target category), where all approaches are simply fine-tuned from their pre-trained models in the source domain. It shows that, LSTD yields a more effective deep architecture for low-shot detection, by leveraging the core designs of SSD and Faster RCNN. Finally, we investigate the structure robustness in LSTD itself. As the bounding box regression follows the standard SSD, we explore the object classifier in which we choose different convolutional layers (conv or conv) for ROI pooling. The results are comparable in Table III, showing the architecture robustness of LSTD. For consistency, we use conv for ROI pooling in all our experiments.

Regularized Transfer Learning of LSTD. We mainly evaluate if the proposed regularization can enhance transfer learning for LSTD, in order to boost low-shot detection. As shown in Table IV, both SDK and BD can significantly improve the baseline (i.e., direct fine-tuning), especially when the training set is scarce in the target domain (such as one-shot). Additionally, we show the architecture robustness of BD regularization in Table V. Specifically, we perform BD regularization on different convolutional layers. One can see that BD is generally robust to different convolutional layers. Hence, we apply BD on conv in our experiments for consistency.

| LSTD (Full Annotation) | WSTD (Weak Annotation) | mAP |

|---|---|---|

| 1-shot | 0-shot | 34.0 |

| 250-shot (VOC07) | 62.6 | |

| 800-shot (VOC07+12) | 62.8 | |

| 2-shot | 0-shot | 51.9 |

| 250-shot (VOC07) | 65.6 | |

| 800-shot (VOC07+12) | 66.0 | |

| 5-shot | 0-shot | 60.9 |

| 250-shot (VOC07) | 69.1 | |

| 800-shot (VOC07+12) | 69.6 | |

| 10-shot | 0-shot | 65.5 |

| 250-shot (VOC07) | 69.5 | |

| 800-shot (VOC07+12) | 70.1 | |

| 30-shot | 0-shot | 69.7 |

| 250-shot (VOC07) | 70.5 | |

| 800-shot (VOC07+12) | 71.3 |

| mAP of WSTD | Classifier 1 | Classifier 2 | Classifier 3 |

|---|---|---|---|

| OICR | 52.2 | 55.4 | 55.5 |

| Our ROL | 53.8 | 60.7 | 61.3 |

| Methods | aero | bike | bird | boat | bottle | bus | car | cat | chair | cow | table | dog | horse | mbike | person | plant | sheep | sofa | train | tv | mAP |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| OICR(2shot) | 64.6 | 69.6 | 50.3 | 49.7 | 30.4 | 77.5 | 68.0 | 74.1 | 39.5 | 58.9 | 53.4 | 70.5 | 77.3 | 67.9 | 50.0 | 31.1 | 52.3 | 73.7 | 76.3 | 62.1 | 59.9 |

| OICR(5shot) | 70.4 | 72.0 | 57.7 | 56.6 | 33.8 | 75.6 | 69.7 | 80.0 | 42.8 | 59.9 | 67.4 | 75.6 | 76.4 | 68.5 | 53.5 | 32.8 | 52.8 | 71.5 | 77.5 | 65.8 | 63.0 |

| Our ROL(2shot) | 68.9 | 73.0 | 56.0 | 54.3 | 35.5 | 81.9 | 79.2 | 75.9 | 41.1 | 72.8 | 56.9 | 74.9 | 83.0 | 75.3 | 64.0 | 35.7 | 64.6 | 74.5 | 77.5 | 67.9 | 65.6 |

| Our ROL(5shot) | 75.1 | 76.3 | 66.2 | 58.6 | 42.6 | 80.1 | 80.0 | 82.6 | 39.7 | 76.1 | 66.1 | 80.5 | 82.4 | 78.9 | 68.6 | 38.6 | 72.0 | 70.2 | 78.4 | 69.6 | 69.1 |

| Methods | aero | bike | bird | boat | bottle | bus | car | cat | chair | cow | table | dog | horse | mbike | person | plant | sheep | sofa | train | tv | mAP |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| OICR(2shot) | 73.3 | 64.1 | 50.8 | 44.1 | 31.9 | 64.5 | 55.8 | 72.3 | 34.3 | 52.8 | 31.9 | 73.6 | 71.1 | 69.0 | 52.2 | 29.3 | 45.0 | 59.2 | 72.9 | 53.0 | 55.1 |

| OICR(5shot) | 75.3 | 66.1 | 56.0 | 45.0 | 35.0 | 62.7 | 56.1 | 76.7 | 34.2 | 53.8 | 48.8 | 75.6 | 69.9 | 70.5 | 53.6 | 31.3 | 47.9 | 55.6 | 73.4 | 55.0 | 57.1 |

| Our ROL(2shot) | 77.7 | 69.2 | 57.0 | 48.3 | 33.6 | 73.9 | 67.5 | 77.1 | 35.1 | 67.7 | 34.6 | 78.5 | 78.2 | 73.9 | 65.9 | 34.1 | 62.4 | 59.5 | 76.8 | 55.4 | 61.3 |

| Our ROL(5shot) | 81.3 | 72.9 | 62.6 | 47.0 | 37.9 | 74.1 | 69.2 | 80.0 | 28.9 | 68.6 | 48.0 | 81.1 | 78.5 | 78.9 | 70.7 | 37.0 | 68.9 | 55.8 | 77.0 | 56.7 | 63.8 |

| Methods | aero | bike | bird | boat | bottle | bus | car | cat | chair | cow | table | dog | horse | mbike | person | plant | sheep | sofa | train | tv | mAP |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| OICR(2shot) | 72.3 | 63.8 | 50.0 | 41.3 | 29.3 | 64.8 | 55.0 | 71.9 | 33.4 | 51.8 | 31.8 | 73.5 | 70.4 | 68.0 | 51.0 | 28.9 | 43.5 | 59.8 | 72.4 | 53.7 | 54.3 |

| OICR(5shot) | 73.7 | 66.5 | 55.0 | 41.3 | 32.1 | 63.1 | 55.2 | 76.1 | 33.2 | 52.7 | 48.9 | 75.3 | 69.6 | 69.1 | 52.3 | 30.0 | 46.9 | 57.1 | 73.5 | 55.8 | 56.4 |

| Our ROL(2shot) | 77.1 | 68.8 | 55.8 | 45.0 | 30.7 | 74.0 | 67.8 | 76.8 | 34.8 | 67.4 | 34.8 | 78.3 | 77.7 | 73.5 | 65.3 | 33.3 | 60.9 | 60.2 | 75.9 | 56.2 | 60.7 |

| Our ROL(5shot) | 80.2 | 73.4 | 61.6 | 43.0 | 35.2 | 74.5 | 69.4 | 79.6 | 28.8 | 68.1 | 48.3 | 80.8 | 78.5 | 78.0 | 70.0 | 35.8 | 67.8 | 56.7 | 76.6 | 57.8 | 63.2 |

VI-B WSTD

Data Settings. We mainly evaluate WSTD on task 2, since it is the most representative setting among the three tasks in the previous section. First, one image contains multiple objects in COCO and VOC2007, while one image contains one object in ImageNet2015. Hence, task 2 is a more realistic data setting. Second, the target domain refers to VOC2007 with the standard 20 categories, which is more convenient to compare with other approaches. Furthermore, we extend the target domain of task 2 as VOC2010 and VOC2012 later on, in order to further show the effectiveness of WSTD.

Implementation Details. First, we start WSTD from warm-up detector, which is trained with 1 fully-annotated image per category of VOC2007 in the stage of LSTD. Then, we utilize WSTD to train target detector, where the training images are weakly annotated. Second, we choose 128 proposals for each image in WSTD, after non-maximum-suppression (NMS) of 1500 proposals at 0.75. Moreover, we simultaneously enlarge the loss coefficients in WSTD (i.e., 50 for ROL and 150 for SDK), in order to speed up convergence. Finally, the optimization strategy is Adam [29], where the initial learning rate is 0.00001 (with 0.1 decay), and the weight decay is 0.0001. All other settings are the same as LSTD.

WSTD vs. LSTD. After applying LSTD with few fully-annotated images, we continue to perform WSTD with weakly-annotated images. The result is shown in Table VI. First, when the number of fully-annotated training images in LSTD is small, one can perform WSTD to improve the detection mAP with weakly-annotated images. For example, the mAP is 34.0 when we fully annotate 1 shot per class in LSTD. It becomes as 62.6 after we use 250 weakly-annotated images per class in WSTD, and tends to stabilize around 62.8 after we use 800 weakly-annotated images per class in WSTD. It illustrates that, WSTD can significantly boost low-shot detection via weakly-annotated images, and tends to be saturated when the number of weakly-annotated images increases. Second, when the number of fully-annotated training images in LSTD is getting larger, the efforts of weakly-annotated images in WSTD is getting smaller. For example, the detection mAP is 69.7 when we fully annotate 30 shots per class in LSTD. After we use around 250 weakly-annotated images per class in WSTD, the mAP slightly increases to 70.5. It illustrates that, fully-annotated images take more effort for detection in the target domain. Third, there exists the tradeoff between LSTD and WSTD. For example, the mAP is 70.1, when we use 10 fully-annotated images (per class) in LSTD and around 800 weakly-annotated images (per class) in WSTD. Alternatively, we can obtain the similar mAP (70.5), when we use 30 fully-annotated images (per class) in LSTD and around 250 weakly-annotated images (per class) in WSTD. Since weakly-annotated images can be straightforwardly obtained from internet, while fully-annotated images have to be obtained via the exhausted labelling procedure. Hence, it is preferable choice to use few fully-annotated images in LSTD and most weakly-annotated images in WSTD.

Object Supervision from Warm-Up Detector. We evaluate whether object supervision of warm-up detector is effective for WSTD. Specifically, we consider three cases in the following. SDK (without) denotes that we ignore SDK in warm-up detector when performing WSTD. SDK (unweighted) denotes that we use the SDK loss in Eq. (5) when performing WSTD. SDK (weighted) denotes that we add an extra weight in SDK loss, i.e., is used as object supervision in Eq. (5). In our experiment, is set to be , in order to further enhance the importance of SDK. The result is shown in Fig. 9. One can see that, SDK (unweighted) is comparable to SDK (without). It illustrates that SDK needs to be further exploited in the object-level. Furthermore, SDK (weighted) achieves the best among these settings, showing that can further take the importance of SDK into account.

Recurrent Object Labelling (ROL). We mainly evaluate ROL, w.r.t., number of proposals, IoU threshold, and ROL vs. OICR [9]. (1) Number of Proposals. As shown in Fig. 10 (a), mAP of WSTD first increases and then decreases, when we increase the number of proposals for ROL. It illustrates that, the number of proposals is required to be sufficient for a good detection performance. But too many proposals may introduce noisy annotations to deteriorate ROL. Hence, we choose 128 proposals in the rest experiments, due to its outstanding performance. (2) IoU Threshold. In ROL, we use IoU thresholds to selectively mine support proposals as objects (i.e., IoU ) or background (i.e., IoU ). Hence, we evaluate the influence of IoU thresholds in Fig. 10 (b)-(c), where we change for proposals labelled as objects ( in this case), and change for proposals labelled as background ( in this case). As or increases, mAP of WSTD first increases and then decreases. It shows that, the threshold should not be too loose or tight for support proposal mining. Hence, we set and in the rest experiments.

ROL vs. OICR [9]. OICR is Online Instance Classifier Refinement, which addresses weakly-supervised detection via label propagation and refinement [9]. It can be used with any detection backbone, just like our ROL. To further show the effectiveness of ROL, we respectively perform WSTD with OICR [9] and our proposed ROL (both utilize 128 proposals). In Table VII, our ROL significantly outperforms OICR for all the classifiers in the recurrent steps. This is mainly because that, our ROL selects support proposals attentively, which can largely reduce redundant proposals and noisy annotations. Furthermore, we evaluate ROL vs. OICR [9] on each category of VOC2007/2010/2012. For most object categories in Table X, X and X, our ROL outperforms OICR with a large margin, showing the effectiveness of ROL.

| Weakly-Supervised (mAP) | VOC07 | VOC10 | VOC12 |

|---|---|---|---|

| WSDDN [31] | 34.8 | 36.2 | - |

| STL [18] | 41.7 | - | 38.3 |

| WCCN [32] | 42.8 | 39.5 | 37.9 |

| PDA [33] | 39.5 | 30.7 | 39.1 |

| OICR [9] | 41.2 | - | 37.9 |

| SGW [8] | 43.5 | - | 39.6 |

| Semi-Supervised (mAP) | VOC07 | VOC10 | VOC12 |

| MSPLD(2shot)[10] | 37.4 | - | - |

| MSPLD(3shot)[10] | 44.8 | - | - |

| MSPLD(4shot)[10] | 47.5 | - | - |

| Fully-Supervised (mAP) | VOC07 | VOC10 | VOC12 |

| Faster RCNN [22] | 69.9 | - | 70.4 (07+12) |

| SSD [24] | 68.0 | - | 72.4 (07+12) |

| Low-Shot Transfer (mAP) | VOC07 | VOC10 | VOC12 |

| CBL(2shot)[14] | 67.1 | 63.8 | 63.2 |

| CBL(all)[14] | 68.9 | - | 64.6 |

| Our POTD(1shot) | 62.6 | 58.6 | 58.1 |

| Our POTD(2shot) | 65.6 | 61.3 | 60.7 |

| Our POTD(5shot) | 69.1 | 63.8 | 63.2 |

| Our POTD(all) | 76.1 | 71.8 | 71.3 |

VI-C Comparison with The-State-of-The-Art

In this section, we compare our POTD with a number of the state-of-the-art approaches in Table XI. First, the performance of weakly-supervised / semi-supervised detectors is far from competitive to fully-supervised detectors, due to the lack of object-level supervision. Alternatively, low-shot transfer detectors can achieve the competitive results, even though few images are fully-annotated in the target domain. The main reason is that these transfer detectors can mimic the human learning, which leverages source-domain knowledge as object prior. Second, we compare POTD with the recent computational baby learning (CBL) [14]. One can see that, POTD(2shot) is competitive to CBL(2shot), but it uses much less weakly-annotated data, i.e., POTD uses 250 weakly-annotated images per category while CBL uses 20,000 weakly-annotated videos per category. Finally, the subscript means that all training images are fully-annotated. In this case, POTD is simply reduced as LSTD without using weakly-labeled images. As expected, it significantly outperforms CBL(all), and achieves the comparable results to the fully-supervised detectors. The main reason is that, our POTD leverages the key insight of transfer detection, which can inherit rich source knowledge to boost detection accuracy in the target domain.

VI-D Visualization

Detection Visualization. We visualize two transfer detection stages of POTD in Fig. 11, where one training image is fully annotated in the target domain (VOC2007). First, LSTD(1shot) can achieve a reasonably good performance, via transferring object knowledge from source domain. Second, WSTD(1shot) can further generalize detection with weakly-annotated images. Hence, we can see that our approach can boost target-domain detection progressively and effectively but with little annotation burden.

Error Mode Analysis. We compare LSTD(1shot) with WSTD(1shot), according to error model analysis of VOC2007 in Fig. 12. First, WSTD can largely reduce various error types of LSTD, according to the 1st and 2nd row of Fig. 12. It shows that WSTD can further generalize target detector with weakly-annotated images. Second, one can see that in the 3rd and 4th rows of Fig. 12, the distribution of error types for LSTD is different from the one for WSTD. The main reason is that, the LSTD stage is built on low-shot but fully-annotated images, while the WSTD stage is built on large-scale but weakly-annotated images. In this case, LSTD is largely confused by similar objects and/or others, due to the lack of training images. Alternatively, WSTD is largely confused by poor localization and/or background, due to the lack of object-level supervision.

VII Conclusion

In this paper, we propose a novel progressive object transfer detection (POTD) framework. First, POTD effectively integrates various object supervision of different domains into a progressive detection procedure, i.e., from source to target domains, from large to few data, from full to weak annotations. Via this human-like learning, POTD can boost a target detection task with little annotation burden. Second, each detection stage in POTD is efficiently designed with delicate transfer insights, where LSTD is used as warm-up for WSTD generalization. Finally, we conduct extensive experiments to show that POTD outperforms other state-of-the-art methods.

References

- [1] R. Girshick, “Fast R-CNN,” in ICCV, 2015.

- [2] S. Ren, K. He, R. Girshick, and J. Sun, “Faster R-CNN: Towards real-time object detection with region proposal networks,” in NIPS, 2015.

- [3] W. Liu, D. Anguelov, D. Erhan, C. Szegedy, S. E. Reed, C.-Y. Fu, and A. C. Berg, “SSD: Single Shot MultiBox Detector,” in ECCV, 2016.

- [4] K. He, G. Gkioxari, P. Dollár, and R. Girshick, “Mask R-CNN,” in ICCV, 2017.

- [5] A. Diba, V. Sharma, A. Pazandeh, H. Pirsiavash, and L. V. Gool, “Weakly Supervised Cascaded Convolutional Networks,” in CVPR, 2017, pp. 5131–5139.

- [6] D. Li, J. B. Huang, Y. Li, S. Wang, and M. H. Yang, “Weakly Supervised Object Localization with Progressive Domain Adaptation,” in CVPR, 2016.

- [7] H. Bilen and A. Vedaldi, “Weakly Supervised Deep Detection Networks,” in CVPR, 2016, pp. 2846–2854.

- [8] B. Lai and X. Gong, “Saliency Guided End-to-End Learning for Weakly Supervised Object Detection,” in IJCAI, 2017.

- [9] P. Tang, X. Wang, X. Bai, and W. Liu, “Multiple Instance Detection Network with Online Instance Classifier Refinement,” in CVPR, 2017.

- [10] X. Dong, L. Zheng, F. Ma, Y. Yang, and D. Meng, “Few-shot Object Detection,” arXiv:1706.08249, 2017.

- [11] J. Hoffman, S. Guadarrama, E. Tzeng, J. Donahue, R. B. Girshick, T. Darrell, and K. Saenko, “LSDA: Large Scale Detection Through Adaptation,” in NIPS, 2014.

- [12] Y. Tang, J. Wang, B. Gao, E. Dellandréa, R. Gaizauskas, and L. Chen, “Large Scale Semi-Supervised Object Detection Using Visual and Semantic Knowledge Transfer,” in CVPR, 2016.

- [13] H. Chen, Y. Wang, G. Wang, and Y. Qiao, “LSTD: A Low-Shot Transfer Detector for Object Detection,” in AAAI, 2018.

- [14] X. Liang, S. Liu, Y. Wei, L. Liu, L. Lin, and S. Yan, “Towards Computational Baby Learning: A Weakly-Supervised Approach for Object Detection,” ICCV, pp. 999–1007, 2015.

- [15] T. G. Dietterich, R. H. Lathrop, and T. Lozano-Pérez, “Solving the Multiple Instance Problem with Axis-Parallel Rectangles,” Artif. Intell., vol. 89, pp. 31–71, 1997.

- [16] R. G. Cinbis, J. Verbeek, and C. Schmid, “Weakly Supervised Object Localization with Multi-Fold Multiple Instance Learning,” PAMI, vol. 39, no. 1, pp. 189–203, Jan 2017.

- [17] H. Bilen, M. Pedersoli, and T. Tuytelaars, “Weakly supervised object detection with convex clustering,” in CVPR, 2015, pp. 1081–1089.

- [18] Z. Jie, Y. Wei, X. Jin, J. Feng, and W. Liu, “Deep Self-Taught Learning for Weakly Supervised Object Localization,” in CVPR, 2017, pp. 4294–4302.

- [19] V. Kantorov, M. Oquab, M. Cho, and I. Laptev, “ContextLocNet: Context-Aware Deep Network Models for Weakly Supervised Localization,” in ECCV, 2016.

- [20] J. R. R. Uijlings, K. E. A. van de Sande, T. Gevers, and A. W. M. Smeulders, “Selective Search for Object Recognition,” IJCV, vol. 104, pp. 154–171, 2013.

- [21] C. L. Zitnick and P. Dollár, “Edge Boxes: Locating Object Proposals from Edges,” in ECCV, 2014.

- [22] S. Ren, K. He, R. Girshick, and J. Sun, “Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks,” IEEE TPAMI, 2016.

- [23] G. Hinton, O. Vinyals, and J. Dean, “Distilling the knowledge in a neural network,” arXiv preprint arXiv:1503.02531, 2015.

- [24] W. Liu, D. Anguelov, D. Erhan, C. Szegedy, S. Reed, C. Fu, and A. C. Berg, “SSD: Single Shot MultiBox Detector,” in ECCV, 2016.

- [25] T.-Y. Lin, M. Maire, S. Belongie, J. Hays, P. Perona, D. Ramanan, P. Dollár, and C. L. Zitnick, “Microsoft COCO: Common objects in context,” in ECCV, 2014.

- [26] J. Deng, W. Dong, R. Socher, L.-J. Li, K. Li, and L. Fei-Fei, “Imagenet: A large-scale hierarchical image database,” in CVPR, 2009.

- [27] M. Everingham, L. Van Gool, C. K. I. Williams, J. Winn, and A. Zisserman, “The Pascal Visual Object Classes (VOC) Challenge,” IJCV, 2010.

- [28] K. Simonyan and A. Zisserman, “Very deep convolutional networks for large-scale image recognition,” arXiv:1409.1556, 2014.

- [29] D. P. Kingma and J. L. Ba, “Adam: a Method for Stochastic Optimization,” in ICLR, 2015.

- [30] Y. Jia, E. Shelhamer, J. Donahue, S. Karayev, J. Long, R. Girshick, S. Guadarrama, and T. Darrell, “Caffe: Convolutional Architecture for Fast Feature Embedding,” in arXiv preprint arXiv:1408.5093, 2014.

- [31] H. Bilen and A. Vedaldi, “Weakly supervised deep detection networks,” in CVPR, 2016.

- [32] A. Diba, V. Sharma, A. Pazandeh, H. Pirsiavash, and L. Van Gool, “Weakly Supervised Cascaded Convolutional Networks,” arXiv:1611.08258, 2016.

- [33] D. Li, J.-B. Huang, Y. Li, S. Wang, and M.-H. Yang, “Weakly supervised object localization with progressive domain adaptation,” in CVPR, 2016.

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/97b1838f-891b-40a1-8276-37e8981b13d4/x4.png) |

Hao Chen received his B.S. degree in optical-electronic information engineering and M.S. degree in pattern recognition and intelligent system from Huazhong University of Science and Technology (HUST), Wuhan, China, in 2015 and 2018, respectively. He is currently a PhD student in computer science at University of Maryland, College Park. His research interests include object detection, video understanding and deep learning. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/97b1838f-891b-40a1-8276-37e8981b13d4/x5.png) |

Yali Wang received the Ph.D. degree in computer science from Laval University, Canada, in 2014. He is currently an Associate Professor with Shenzhen Institutes of Advanced Technology, Chinese Academy of Sciences. His research interests are deep learning and computer vision, machine learning and statistics. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/97b1838f-891b-40a1-8276-37e8981b13d4/x6.png) |

Guoyou Wang received the B.S. degree in electronic engineering and the M.S. degree in pattern recognition and intelligent system from Huazhong University of Science and Technology, Wuhan, China, in 1988 and 1992, respectively. He is currently a Professor with the Institute for Pattern Recognition and Artificial Intelligence, Huazhong University of Science and Technology. His current research interests include image processing, image compression, pattern recognition, artificial intelligence, and machine learning. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/97b1838f-891b-40a1-8276-37e8981b13d4/x7.png) |

Xiang Bai received his B.S., M.S., and Ph.D. degrees from Huazhong University of Science and Technology (HUST), Wuhan, China, in 2003, 2005, and 2009, respectively, all in electronics and information engineering. He is currently a Professor with the School of Electronic Information and Communications, HUST. His research interests include object recognition, shape analysis, and OCR. He received IAPR/ICDAR Young Investigator Award in 2019. He is an associate editor for Pattern Recognition, Pattern Recognition Letters, and Frontiers of Computer Science. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/97b1838f-891b-40a1-8276-37e8981b13d4/x8.png) |

Yu Qiao (SM’13) received the Ph.D. degree from The University of Electro-Communications, Japan, in 2006. He was a JSPS Fellow and a Project Assistant Professor with The University of Tokyo from 2007 to 2010. He is currently a Professor with Shenzhen Institutes of Advanced Technology, Chinese Academy of Sciences. He has authored over 140 papers in journals and conference including, PAMI, IJCV, TIP, ICCV, CVPR, ECCV, AAAI. His research interests include computer vision, deep learning, and intelligent robots. He received the Lu Jiaxi Young Researcher Award from the Chinese Academy of Sciences in 2012. He was the first runner-up at the ImageNet Large Scale Visual Recognition Challenge 2015 in scene recognition and the winner at the ActivityNet Large Scale Activity Recognition Challenge 2016 in video classification. |