Probabilistic Implicit Scene Completion

Abstract

We propose a probabilistic shape completion method extended to the continuous geometry of large-scale 3D scenes. Real-world scans of 3D scenes suffer from a considerable amount of missing data cluttered with unsegmented objects. The problem of shape completion is inherently ill-posed, and high-quality result requires scalable solutions that consider multiple possible outcomes. We employ the Generative Cellular Automata that learns the multi-modal distribution and transform the formulation to process large-scale continuous geometry. The local continuous shape is incrementally generated as a sparse voxel embedding, which contains the latent code for each occupied cell. We formally derive that our training objective for the sparse voxel embedding maximizes the variational lower bound of the complete shape distribution and therefore our progressive generation constitutes a valid generative model. Experiments show that our model successfully generates diverse plausible scenes faithful to the input, especially when the input suffers from a significant amount of missing data. We also demonstrate that our approach outperforms deterministic models even in less ambiguous cases with a small amount of missing data, which infers that probabilistic formulation is crucial for high-quality geometry completion on input scans exhibiting any levels of completeness.

1 Introduction

High-quality 3D data can create realistic virtual 3D environments or provide crucial information to interact with the environment for robots or human users (Varley et al. (2017)). However, 3D data acquired from a real-world scan is often noisy and incomplete with irregular samples. The task of 3D shape completion aims to recover the complete surface geometry from the raw 3D scans. Shape completion is often formulated in a data-driven way using the prior distribution of 3D geometry, which often results in multiple plausible outcomes given incomplete and noisy observation. If one learns to regress a single shape out of multi-modal shape distribution, one is bound to lose fine details of the geometry and produce blurry outputs as noticed with general generative models (Goodfellow (2017)). If we extend the range of completion to the scale of scenes with multiple objects, the task becomes even more challenging with the memory and computation requirements for representing large-scale high resolution 3D shapes.

In this work, we present continuous Generative Cellular Automata (cGCA), which generates multiple continuous surfaces for 3D reconstruction. Our work builds on Generative Cellular Automata (GCA) (Zhang et al. (2021)), which produces diverse shapes by progressively growing the object surface from the immediate neighbors of the input shape. cGCA inherits the multi-modal and scalable generation of GCA, but overcomes the limitation of discrete voxel resolution producing high-quality continuous surfaces. Specifically, our model learns to generate diverse sparse voxels associated with their local latent codes, namely sparse voxel embedding, where each latent code encodes the deep implicit fields of continuous geometry near each of the occupied voxels (Chabra et al. (2020); Jiang et al. (2020)). Our training objective maximizes the variational lower bound for the log-likelihood of the surface distribution represented with sparse voxel embedding. The stochastic formulation is modified from the original GCA, and theoretically justified as a sound generative model.

We demonstrate that cGCA can faithfully generate multiple plausible solutions of shape completion even for large-scale scenes with a significant amount of missing data as shown in Figure 1. To the best of our knowledge, we are the first to tackle the challenging task of probabilistic scene completion, which requires not only the model to generate multiple plausible outcomes but also be scalable enough to capture the wide-range context of multiple objects.

We summarize the key contributions as follows: (1) We are the first to tackle the problem of probabilistic scene completion with partial scans, and provide a scalable model that can capture large-scale context of scenes. (2) We present continuous Generative Cellular Automata, a generative model that produces diverse continuous surfaces from a partial observation. (3) We modify infusion training (Bordes et al. (2017)) and prove that the formulation indeed increases the variational lower bound of data distribution, which verifies that the proposed progressive generation is a valid generative model.

2 Preliminaries: Generative Cellular Automata

Continuous Generative Cellular Automata (cGCA) extends the idea of Generative Cellular Automata (GCA) by Zhang et al. (2021) but generates continuous surface with implicit representation instead of discrete voxel grid. For the completeness of discussion, we briefly review the formulation of GCA.

Starting from an incomplete voxelized shape, GCA progressively updates the local neighborhood of current occupied voxels to eventually generate a complete shape. In GCA, a shape is represented as a state , a set of binary occupancy for every cell , where the occupancy grid stores only the sparse cells on the surface. Given a state of an incomplete shape , GCA evolves to the state of a complete shape by sampling from the Markov chain

| (1) |

where is a fixed number of transitions and is a homogeneous transition kernel parameterized by neural network parameters . The transition kernel is implemented with sparse CNN (Graham et al. (2018); Choy et al. (2019)), which is a highly efficient neural network architecture that computes the convolution operation only on the occupied voxels.

The progressive generation of GCA confines the search space of each transition kernel at the immediate neighborhood of the current state. The occupancy probability within the neighborhood is regressed following Bernoulli distribution, and then the subsequent state is independently sampled for individual cells. With the restricted domain for probability estimation, the model is scalable to high resolution 3D voxel space. GCA shows that the series of local growth near sparse occupied cells can eventually complete the shape as a unified structure since the shapes are connected. While GCA is a scalable solution for generating diverse shapes, the grid representation for the 3D geometry inherently limits the resolution of the final shape.

3 Continuous Generative Cellular Automata

In Sec. 3.1, we formally introduce an extension of sparse occupancy voxels to represent continuous geometry named sparse voxel embedding, where each occupied voxel contains latent code representing local implicit fields. We train an autoencoder that can compress the implicit fields into the embeddings and vice versa. Then we present the sampling procedure of cGCA that generates 3D shape in Sec. 3.2, which is the inference step for shape completion. Sec. 3.3 shows the training objective of cGCA, which approximately maximizes the variational lower bound for the distribution of the complete continuous geometry.

3.1 Sparse Voxel Embedding

In addition to the sparse occupied voxels of GCA, the state of cGCA, named sparse voxel embedding, contains the associated latent code, which can be decoded into continuous surface. Formally, the state of cGCA is defined as a set of pair of binary occupancy and the latent code , for the cells in a three-dimensional grid

| (2) |

Similar to GCA, cGCA maintains the representation sparse by storing only the set of occupied voxels and their latent codes, and sets if .

The sparse voxel embedding can be converted to and from the implicit representation of local geometry with neural networks, inspired by the work of Peng et al. (2020), Chabra et al. (2020), and Chibane et al. (2020a). We utilize the (signed) distance to the surface as the implicit representation, and use autoencoder for the conversion. The encoder produces the sparse voxel embedding from the coordinate-distance pairs , where is a 3D coordinate and is the distance to the surface, . The decoder , on the other hand, regresses the local implicit field value at the 3D position given the sparse voxel embedding , for given coordinate-distance pairs . The detailed architecture of the autoencoder is described in Appendix A, where the decoder generates continuous geometry by interpolating hierarchical features extracted from sparse voxel embedding. An example of the conversion is presented on the left side of Fig. 2, where the color of the sparse voxel embedding represents clustered labels of the latent codes with k-means clustering (Hartigan & Wong (1979)). Note that the embedding of a similar local geometry, such as the seat of the chair, exhibits similar values of latent codes.

The parameters of the autoencoder are jointly optimized by minimizing the following loss function:

| (3) |

where and is the size of a single voxel. The first term in Eq. (3) corresponds to minimizing the normalized distance and the second is the regularization term for the latent code weighted by hyperparameter . Clamping the maximum distance makes the network focus on predicting accurate values at the vicinity of the surface (Park et al. (2019); Chibane et al. (2020b)).

3.2 Sampling from continuous Generative Cellular Automata

The generation process of cGCA echos the formulation of GCA (Zhang et al. (2021)), and repeats steps of sampling from the transition kernel to progressively grow the shape. Each transition kernel is factorized into cells within the local neighborhood of the occupied cells of the current state, 111We use the notation if for to denote occupied cells. given a distance metric and the radius :

| (4) |

Note that the distribution is further decomposed into the occupancy and the latent code , where we denote and as the random variable of occupancy and latent code for cell in state . Therefore the shape is generated by progressively sampling the occupancy and the latent codes for the occupied voxels which are decoded and fused into a continuous geometry. The binary occupancy is represented with the Bernoulli distribution

| (5) |

where is the estimated occupancy probability at the corresponding cell . With our sparse representation, the distribution of the latent codes is

| (6) |

is a Dirac delta distribution at indicating that when . For the occupied voxels (), follows the normal distribution with the estimated mean of the latent code and the predefined standard deviation , where decreases with respect to .

Initial State.

Given an incomplete point cloud, we set the initial state of the sampling chain by setting the occupancy to be 1 for the cells that contain point cloud and associating the occupied cells with a latent code sampled from the isotropic normal distribution. However, the input can better describe the provided partial geometry if we encode the latent code of the occupied cells with the encoder . The final completion is more precise when all the transitions are conditioned with the initial state containing the encoded latent code. Further details are described in Appendix A.

Mode Seeking.

While we effectively model the probabilistic distribution of multi-modal shapes, the final reconstruction needs to converge to a single coherent shape. Naïve sampling of the stochastic transition kernel in Eq. (4) can include noisy voxels with low-occupancy probability. As a simple trick, we augment mode seeking steps that determine the most probable mode of the current result instead of probabilistic sampling. Specifically, we run additional steps of the transition kernel but we select the cells with probability higher than 0.5 and set the latent code as the mean of the distribution . The mode seeking steps ensure that the final shape discovers the dominant mode that is closest to as depicted in Fig. 2, where it can be transformed into implicit function with the pretrained decoder .

3.3 Training Continuous Generative Cellular Automata

We train a homogeneous transition kernel , whose repetitive applications eventually yield the samples that follow the learned distribution. However, the data contains only the initial and the ground truth state , and we need to emulate the sequence for training. We adapt infusion training (Bordes et al. (2017)), which induces the intermediate transitions to converge to the desired complete state. To this end, we define a function that finds the valid cells that are closest to the complete shape within the neighborhood of the current state :

| (7) |

Then, we define the infusion kernel factorized similarly as the sampling kernel in Eq. (4):

| (8) |

The distributions for both and are gradually biased towards the ground truth final shape with the infusion rate , which increases linearly with respect to time step, i.e., , with :

| (9) |

| (10) |

Here is an indicator function, and we will denote as the occupancy and latent code of the ground truth complete shape at coordinate .

cGCA aims to optimize the log-likelihood of the ground truth sparse voxel embedding . However, since the direct optimization of the exact log-likelihood is intractable, we modify the variational lower bound using the derivation of diffusion-based models (Sohl-Dickstein et al. (2015)):

| (11) | ||||

where the full derivation is in Appendix B.1. We now analyze separately. We ignore the term during optimization since it contains no trainable parameters. for can be decomposed as the following :

| (12) |

where the full derivation is in Appendix B.2. Since and are the KL divergence between Bernoulli and normal distributions, respectively, can be written in a closed-form. In practice, the scale of can be much larger than that of . This results in local minima in the gradient-based optimization and reduces the occupancy probability for every cell. So we balance the two losses by multiplying a hyperparameter at , which is fixed as for all experiments.

The last term can be written as following:

| (13) |

A problem rises when computing , since the usage of Dirac distribution makes if , which does not produce a valid gradient for optimization. However, we can replace the loss with a well-behaved loss for , by using the following proposition:

Proposition 1.

By replacing with the indicator function when computing , , for

Proof.

The proof is found in Appendix B.3. ∎

The proposition above serves as a justification for approximating as , with the benefits of having a simpler training procedure and easier implementation. Replacing with can be regarded as a reweighting technique that naturally avoids divergence in the lower bound, since both functions output a non-zero value only at . Further discussions about the replacement are in Appendix B.3.

In conclusion, the training procedure is outlined as follows:

-

1.

Sample by .

-

2.

For , update with , where is the learning rate.

Note that the original infusion training (Bordes et al. (2017)) also attempts to minimize the variational lower bound, employing the Monte Carlo approximation with reparameterization trick (Kingma & Welling (2014)) to compute the gradients. However, our objective avoids the approximations and can compute the exact lower bound for a single training step. The proposed simplification can be applied to infusion training with any data structure including images. We also summarize the difference of our formulation compared to GCA in Appendix C.

4 Experiments

We test the probabilistic shape completion of cGCA for scenes (Section 4.1) and single object (Section 4.2). For all the experiments, we use latent code dimension , trained with regularization parameter . All the results reported are re-trained for each dataset. Further implementation details are in the Appendix A.2 and effects of mode seeking steps are discussed in Appendix E.

4.1 Scene Completion

In this section, we evaluate our method on two datasets: ShapeNet scene (Peng et al. (2020)) and 3DFront (Fu et al. (2021)) dataset. The input point cloud are created by iteratively removing points within a fixed distance from a random surface point. We control the minimum preserved ratio of the original complete surface points for each object in the scene to test varying levels of completeness. The levels of completeness tested are 0.2, 0.5, and 0.8 with each dataset trained/tested separately. We evaluate the quality and diversity of the completion results by measuring the Chamfer-L1 distance (CD), total mutual distance (TMD), respectively, as in the previous methods (Peng et al. (2020); Zhang et al. (2021)). For probabilistic methods, five completion results are generated and we report the minimum and average of Chamfer-L1 distance (min. CD, avg. CD). Note that if the input is severely incomplete, there exist various modes of completion that might be feasible but deviate from its ground truth geometry. Nonetheless, we still compare CD assuming that plausible reconstructions are likely to be similar to the ground truth.

| min. rate 0.2 | min. rate 0.5 | min. rate 0.8 | |||||||

| Method | min. CD | avg. CD | TMD | min. CD | avg. CD | TMD | min. CD | avg. CD | TMD |

| ConvOcc | 3.60 | - | - | 1.33 | - | - | 0.74 | - | - |

| IFNet | 12.94 | - | - | 8.55 | - | - | 7.49 | - | - |

| GCA | 4.97 | 6.32 | 11.56 | 3.02 | 3.54 | 4.92 | 2.50 | 2.64 | 2.76 |

| cGCA | 2.80 | 3.88 | 10.07 | 1.16 | 1.49 | 3.91 | 0.69 | 0.87 | 3.05 |

| cGCA (w/ cond.) | 1.75 | 2.33 | 5.38 | 0.87 | 1.08 | 2.96 | 0.57 | 0.64 | 2.34 |

ShapeNet Scene. ShapeNet scene contains synthetic rooms that contain multiple ShapeNet (Chang et al. (2015)) objects, which have been randomly scaled with randomly scaled floor and random walls. We compare the performance of cGCA with two deterministic scene completion models that utilize occupancy as the implicit representation: ConvOcc (Peng et al. (2020)) and IFNet (Chibane et al. (2020a)). We additionally test the quality of our completion against a probabilistic method of GCA (Zhang et al. (2021)). Both GCA and cGCA use voxel resolution with transitions. cGCA (w/ cond.) indicates a variant of our model, where each transition is conditioned on the initial state obtained by the encoder as discussed in Sec. 3.2.

Table 1 contains the quantitative comparison on ShapeNet scene. Both versions of cGCA outperform all other methods on min. CD for all level of completeness. The performance gap is especially prominent for highly incomplete input, which can be visually verified from Fig. 3. The deterministic models generate blurry objects given high uncertainty, while our method consistently generates detailed reconstructions for inputs with different levels of completeness. Our result coincides with the well-known phenomena of generative models, where the deterministic models fail to generate crisp outputs of multi-modal distribution (Goodfellow (2017)). Considering practical scenarios with irregular real-world scans, our probabilistic formulation is crucial for accurate 3D scene completion. When conditioned with the initial state, the completion results of cGCA stay faithful to the input data, achieving lower CDs. Further analysis on varying completeness of input is discussed in Appendix F.

| min. rate 0.2 | min. rate 0.5 | min. rate 0.8 | |||||||

| Method | min. CD | avg. CD | TMD | min. CD | avg. CD | TMD | min. CD | avg. CD | TMD |

| GCA | 4.89 | 6.59 | 16.90 | 3.11 | 3.53 | 8.95 | 2.53 | 2.82 | 7.98 |

| cGCA | 4.07 | 5.42 | 16.20 | 2.12 | 2.57 | 7.82 | 1.64 | 2.19 | 5.97 |

| cGCA (w/ cond.) | 3.53 | 4.26 | 9.23 | 2.19 | 2.54 | 6.89 | 1.47 | 1.69 | 5.35 |

3DFront. 3DFront is a large-scale indoor synthetic scene dataset with professionally designed layouts and contains high-quality 3D objects, in contrast to random object placement for ShapeNet scene. 3DFront dataset represents the realistic scenario where the objects are composed of multiple meshes without clear boundaries for inside or outside. Unless the input is carefully processed to be converted into a watertight mesh, the set-up excludes many of the common choices for implicit representation, such as occupancy or signed distance fields. However, the formulation of cGCA can be easily adapted for different implicit representation, and we employ unsigned distance fields (Chibane et al. (2020b)) to create the sparse voxel embedding for 3DFront dataset. We compare the performance of cGCA against GCA, both with voxel resolution of 5cm and transitions.

Table 2 shows that cGCA outperforms GCA by a large margin in CD, generating high-fidelity completions with unsigned distance fields. While both GCA and cGCA are capable of generating multiple plausible results, GCA suffers from discretization artifacts due to voxelized representation, as shown in Fig. 4. cGCA not only overcomes the limitation of the resolution, but also is scalable to process the entire rooms at once during both training and test time. In contrast, previous methods for scene completion (Siddiqui et al. ; Peng et al. (2020)) divide the scene into small sections and separately complete them. We analyze the scalability in terms of the network parameters and the GPU usage in Appendix D. In Appendix G, we also provide results on ScanNet (Dai et al. (2017a)) dataset, which is one of the widely used datasets for real-world indoor environments.

| MMD (quality) | UHD (fidelity) | TMD (diversity) | ||||||||||

| Method | Sofa | Chair | Table | Avg. | Sofa | Chair | Table | Avg. | Sofa | Chair | Table | Avg. |

| cGAN | 5.70 | 6.53 | 6.10 | 6.11 | 11.40 | 12.10 | 11.20 | 11.57 | 7.44 | 8.21 | 6.88 | 7.51 |

| GCA () | 4.70 | 6.23 | 6.22 | 5.72 | 8.88 | 7.95 | 7.63 | 8.15 | 22.39 | 12.41 | 18.83 | 17.88 |

| cGCA () | 4.59 | 6.11 | 6.08 | 5.59 | 10.43 | 9.99 | 9.29 | 9.90 | 9.72 | 13.65 | 26.04 | 16.47 |

| cGCA () | 4.51 | 6.30 | 5.89 | 5.57 | 8.99 | 8.43 | 7.22 | 8.21 | 11.18 | 16.70 | 31.94 | 19.94 |

| cGCA (, w/ cond.) | 4.64 | 6.15 | 5.90 | 5.56 | 8.00 | 6.75 | 6.45 | 7.07 | 14.01 | 11.49 | 31.01 | 18.84 |

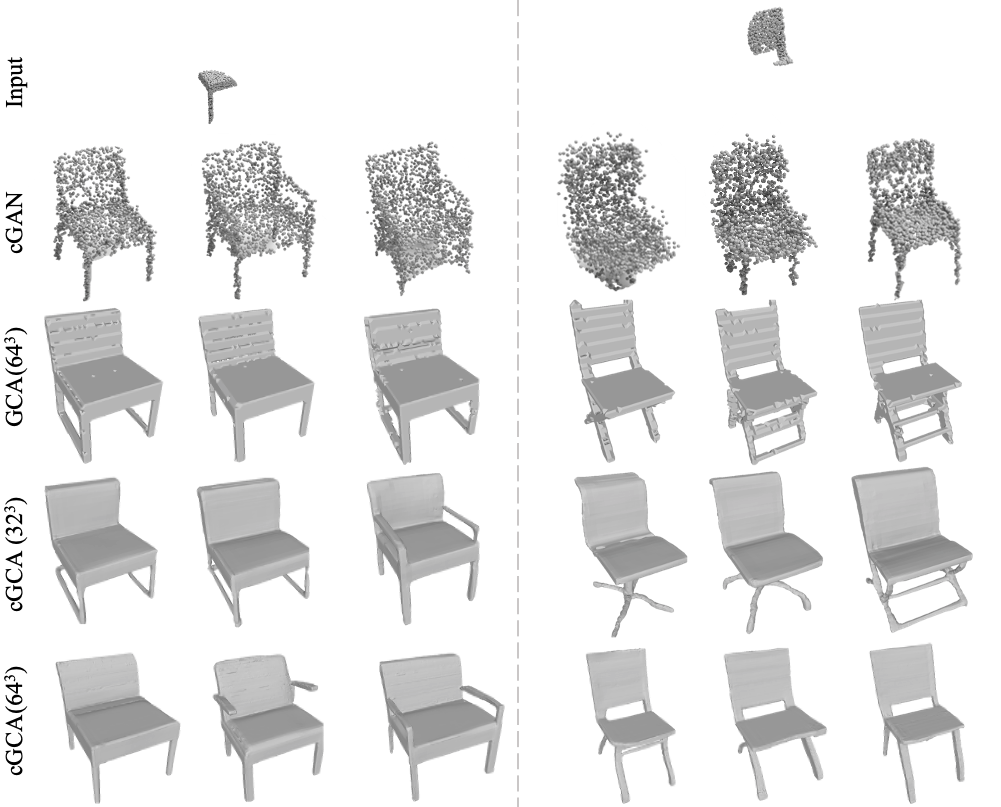

4.2 Single Object Completion

We analyze various performance metrics of cGCA for a single object completion with chair/sofa/table classes of ShapeNet (Chang et al. (2015)) dataset. Given densely sampled points of a normalized object in , the incomplete observation is generated by selecting points within the sphere of radius 0.5 centered at one of the surface points. From the partial observation, we sample 1,024 points which serve as a sparse input, and sample 2,048 points from completion results for testing. Following the previous method (Wu et al. (2020)), we generate ten completions and compare MMD (quality), UHD (fidelity), and TMD (diversity). Our method is compared against other probabilistic shape completion methods: cGAN (Wu et al. (2020)) which is based on point cloud, and GCA (Zhang et al. (2021)) which uses voxel representation. We use transitions.

Quantitative and qualitative results are shown in Table 3 and Fig. 5. Our approach exceeds other baselines in all metrics, indicating that cGCA can generate high-quality completions (MMD) that are faithful to input (UHD) while being diverse (TMD). By using latent codes, the completed continuous surface of cGCA can capture geometry beyond its voxel resolution. The quality of completed shape in voxel resolution therefore even outperforms in MMD for discrete GCA in higher voxel resolution. Also, the UHD score of cGCA (w/ cond.) exceeds that of GCA and vanilla cGCA indicating that conditioning latent codes from the input indeed preserves the input partial geometry.

5 Related Works

3D Shape Completion.

The data-driven completion of 3D shapes demands a large amount of memory and computation. The memory requirement for voxel-based methods increases cubically to the resolution (Dai et al. (2017b)) while the counterpart of point cloud based representations (Yuan et al. (2018)) roughly increases linearly with the number of points. Extensions of scene completion in voxel space utilize hierarchical representation (Dai et al. (2018)) or subdivided scenes (Dai et al. (2018; 2020)) with sparse voxel representations. Recently, deep implicit representations (Park et al. (2019); Chen & Zhang (2019); Mescheder et al. (2019)) suggest a way to overcome the limitation of resolution. Subsequent works (Chabra et al. (2020); Chibane et al. (2020a); Jiang et al. (2020); Peng et al. (2020)) demonstrate methods to extend the representation to large-scale scenes. However, most works are limited to regressing a single surface from a given observation. Only a few recent works (Wu et al. (2020); Zhang et al. (2021); Smith & Meger (2017)) generate multiple plausible outcomes by modeling the distribution of surface conditioned on the observation. cGCA suggests a scalable solution for multi-modal continuous shape completion by employing progressive generation with continuous shape representations.

Diffusion Probabilistic Models.

One way to capture the complex data distribution by the generative model is to use a diffusion process inspired by nonequilibrium thermodynamics such as Sohl-Dickstein et al. (2015); Ho et al. (2020); Luo & Hu (2021). The diffusion process incrementally destroys the data distribution by adding noise, whereas the transition kernel learns to revert the process that restores the data structure. The learned distribution is flexible and easy to sample from, but it is designed to evolve from a random distribution. On the other hand, the infusion training by Bordes et al. (2017) applies a similar technique but creates a forward chain instead of reverting the diffusion process. Since the infusion training can start from a structured input distribution, it is more suitable to a shape completion that starts from a partial data input. However, the infusion training approximates the lower bound of variational distribution with Monte Carlo estimates using the reprameterization trick (Kingma & Welling (2014)). We modify the training objective and introduce a simple variant of infusion training that can maximize the variational lower bound of the log-likelihood of the data distribution without using Monte Carlo approximation.

6 Conclusion

We are the first to tackle the challenging task of probabilistic scene completion, which requires not only the model to generate multiple plausible outcomes but also be scalable to capture the wide-range context of multiple objects. To this end, we propose continuous Generative Cellular Automata, a scalable generative model for completing multiple plausible continuous surfaces from an incomplete point cloud. cGCA compresses the implicit field into sparse voxels associated with their latent code named sparse voxel embedding, and incrementally generates diverse implicit surfaces. The training objective is proven to maximize the variational lower bound of the likelihood of sparse voxel embeddings, indicating that cGCA is a theoretically valid generative model. Extensive experiments show that our model is able to faithfully generate multiple plausible surfaces from partial observation.

There are a few interesting future directions. Our results are trained with synthetic scene datasets where the ground truth data is available. It would be interesting to see how well the data performs in real data with self-supervised learning. For example, we can extend our method to real scenes such as ScanNet Dai et al. (2017a) or Matterport 3D (Chang et al. (2017)) by training the infusion chain with data altered to have different levels of completeness as suggested by Dai et al. (2020). Also, our work requires two-stage training, where the transition kernel is trained with the ground truth latent codes generated from the pre-trained autoencoder. It would be less cumbersome if the training could be done in an end-to-end fashion. Lastly, our work takes a longer inference time compared to previous methods (Peng et al. (2020)) since a single completion requires multiple transitions. Reducing the number of transitions by using a technique similar to Salimans & Ho (2022) can accelerate the runtime.

7 Ethics Statement

The goal of our model is to generate diverse plausible scenes given an observation obtained by sensors. While recovering the detailed geometry of real scenes is crucial for many VR/AR and robotics applications, it might violate proprietary or individual privacy rights when abused. Generating the unseen part can also be regarded as creating fake information that can deceive people as real.

8 Reproducibility

Code to run the experiments is available at https://github.com/96lives/gca. Appendix A contains the implementation details including the network architecture, hyperparameter settings, and dataset processing. Proofs and derivations are described in Appendix B.

9 Acknowledgement

We thank Youngjae Lee for helpful discussion and advice on the derivations. This research was supported by the National Research Foundation of Korea (NRF) grant funded by the Korea government (MSIT) (No.2020R1C1C1008195) and the National Convergence Research of Scientific Challenges through the National Research Foundation of Korea (NRF) funded by Ministry of Science and ICT (NRF2020M3F7A1094300). Young Min Kim is the corresponding author.

References

- Bordes et al. (2017) Florian Bordes, Sina Honari, and Pascal Vincent. Learning to generate samples from noise through infusion training. In 5th International Conference on Learning Representations, ICLR 2017, Toulon, France, April 24-26, 2017, Conference Track Proceedings. OpenReview.net, 2017. URL https://openreview.net/forum?id=BJAFbaolg.

- Chabra et al. (2020) Rohan Chabra, Jan Eric Lenssen, Eddy Ilg, Tanner Schmidt, Julian Straub, Steven Lovegrove, and Richard A. Newcombe. Deep local shapes: Learning local SDF priors for detailed 3d reconstruction. CoRR, abs/2003.10983, 2020. URL https://arxiv.org/abs/2003.10983.

- Chang et al. (2017) Angel Chang, Angela Dai, Thomas Funkhouser, Maciej Halber, Matthias Niessner, Manolis Savva, Shuran Song, Andy Zeng, and Yinda Zhang. Matterport3d: Learning from rgb-d data in indoor environments. International Conference on 3D Vision (3DV), 2017.

- Chang et al. (2015) Angel X. Chang, Thomas Funkhouser, Leonidas Guibas, Pat Hanrahan, Qixing Huang, Zimo Li, Silvio Savarese, Manolis Savva, Shuran Song, Hao Su, Jianxiong Xiao, Li Yi, and Fisher Yu. ShapeNet: An Information-Rich 3D Model Repository. Technical Report arXiv:1512.03012 [cs.GR], Stanford University — Princeton University — Toyota Technological Institute at Chicago, 2015.

- Chen & Zhang (2019) Zhiqin Chen and Hao Zhang. Learning implicit fields for generative shape modeling. In IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2019, Long Beach, CA, USA, June 16-20, 2019, pp. 5939–5948. Computer Vision Foundation / IEEE, 2019. doi: 10.1109/CVPR.2019.00609. URL http://openaccess.thecvf.com/content_CVPR_2019/html/Chen_Learning_Implicit_Fields_for_Generative_Shape_Modeling_CVPR_2019_paper.html.

- Chibane et al. (2020a) Julian Chibane, Thiemo Alldieck, and Gerard Pons-Moll. Implicit functions in feature space for 3d shape reconstruction and completion. In 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition, CVPR 2020, Seattle, WA, USA, June 13-19, 2020, pp. 6968–6979. IEEE, 2020a. doi: 10.1109/CVPR42600.2020.00700. URL https://doi.org/10.1109/CVPR42600.2020.00700.

- Chibane et al. (2020b) Julian Chibane, Aymen Mir, and Gerard Pons-Moll. Neural unsigned distance fields for implicit function learning. In Hugo Larochelle, Marc’Aurelio Ranzato, Raia Hadsell, Maria-Florina Balcan, and Hsuan-Tien Lin (eds.), Advances in Neural Information Processing Systems 33: Annual Conference on Neural Information Processing Systems 2020, NeurIPS 2020, December 6-12, 2020, virtual, 2020b. URL https://proceedings.neurips.cc/paper/2020/hash/f69e505b08403ad2298b9f262659929a-Abstract.html.

- Choy et al. (2019) Christopher B. Choy, JunYoung Gwak, and Silvio Savarese. 4d spatio-temporal convnets: Minkowski convolutional neural networks. In IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2019, Long Beach, CA, USA, June 16-20, 2019, pp. 3075–3084. Computer Vision Foundation / IEEE, 2019. doi: 10.1109/CVPR.2019.00319. URL http://openaccess.thecvf.com/content_CVPR_2019/html/Choy_4D_Spatio-Temporal_ConvNets_Minkowski_Convolutional_Neural_Networks_CVPR_2019_paper.html.

- Dai et al. (2017a) Angela Dai, Angel X. Chang, Manolis Savva, Maciej Halber, Thomas A. Funkhouser, and Matthias Nießner. Scannet: Richly-annotated 3d reconstructions of indoor scenes. In 2017 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2017, Honolulu, HI, USA, July 21-26, 2017, pp. 2432–2443. IEEE Computer Society, 2017a. doi: 10.1109/CVPR.2017.261. URL https://doi.org/10.1109/CVPR.2017.261.

- Dai et al. (2017b) Angela Dai, Charles Ruizhongtai Qi, and Matthias Nießner. Shape completion using 3d-encoder-predictor cnns and shape synthesis. In 2017 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2017, Honolulu, HI, USA, July 21-26, 2017, pp. 6545–6554. IEEE Computer Society, 2017b. doi: 10.1109/CVPR.2017.693. URL https://doi.org/10.1109/CVPR.2017.693.

- Dai et al. (2018) Angela Dai, Daniel Ritchie, Martin Bokeloh, Scott Reed, Jürgen Sturm, and Matthias Nießner. Scancomplete: Large-scale scene completion and semantic segmentation for 3d scans. In 2018 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2018, Salt Lake City, UT, USA, June 18-22, 2018, pp. 4578–4587. IEEE Computer Society, 2018. doi: 10.1109/CVPR.2018.00481. URL http://openaccess.thecvf.com/content_cvpr_2018/html/Dai_ScanComplete_Large-Scale_Scene_CVPR_2018_paper.html.

- Dai et al. (2020) Angela Dai, Christian Diller, and Matthias Nießner. SG-NN: sparse generative neural networks for self-supervised scene completion of RGB-D scans. In 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition, CVPR 2020, Seattle, WA, USA, June 13-19, 2020, pp. 846–855. IEEE, 2020. doi: 10.1109/CVPR42600.2020.00093. URL https://doi.org/10.1109/CVPR42600.2020.00093.

- Fu et al. (2021) Huan Fu, Bowen Cai, Lin Gao, Ling-Xiao Zhang, Jiaming Wang, Cao Li, Qixun Zeng, Chengyue Sun, Rongfei Jia, Binqiang Zhao, and Hao Zhang. 3d-front: 3d furnished rooms with layouts and semantics. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), pp. 10933–10942, October 2021.

- Goodfellow (2017) Ian J. Goodfellow. NIPS 2016 tutorial: Generative adversarial networks. CoRR, abs/1701.00160, 2017. URL http://arxiv.org/abs/1701.00160.

- Graham et al. (2018) Benjamin Graham, Martin Engelcke, and Laurens van der Maaten. 3d semantic segmentation with submanifold sparse convolutional networks. In 2018 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2018, Salt Lake City, UT, USA, June 18-22, 2018, pp. 9224–9232. IEEE Computer Society, 2018. doi: 10.1109/CVPR.2018.00961. URL http://openaccess.thecvf.com/content_cvpr_2018/html/Graham_3D_Semantic_Segmentation_CVPR_2018_paper.html.

- Hartigan & Wong (1979) J. A. Hartigan and M. A. Wong. A k-means clustering algorithm. JSTOR: Applied Statistics, 28(1):100–108, 1979.

- Ho et al. (2020) Jonathan Ho, Ajay Jain, and Pieter Abbeel. Denoising diffusion probabilistic models. In Hugo Larochelle, Marc’Aurelio Ranzato, Raia Hadsell, Maria-Florina Balcan, and Hsuan-Tien Lin (eds.), Advances in Neural Information Processing Systems 33: Annual Conference on Neural Information Processing Systems 2020, NeurIPS 2020, December 6-12, 2020, virtual, 2020. URL https://proceedings.neurips.cc/paper/2020/hash/4c5bcfec8584af0d967f1ab10179ca4b-Abstract.html.

- Jiang et al. (2020) Chiyu Max Jiang, Avneesh Sud, Ameesh Makadia, Jingwei Huang, Matthias Nießner, and Thomas A. Funkhouser. Local implicit grid representations for 3d scenes. In 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition, CVPR 2020, Seattle, WA, USA, June 13-19, 2020, pp. 6000–6009. IEEE, 2020. doi: 10.1109/CVPR42600.2020.00604. URL https://doi.org/10.1109/CVPR42600.2020.00604.

- Kingma & Ba (2015) Diederik P. Kingma and Jimmy Ba. Adam: A method for stochastic optimization. In Yoshua Bengio and Yann LeCun (eds.), 3rd International Conference on Learning Representations, ICLR 2015, San Diego, CA, USA, May 7-9, 2015, Conference Track Proceedings, 2015. URL http://arxiv.org/abs/1412.6980.

- Kingma & Welling (2014) Diederik P Kingma and Max Welling. Auto-encoding variational bayes, 2014.

- Lorensen & Cline (1987) William E. Lorensen and Harvey E. Cline. Marching cubes: A high resolution 3d surface construction algorithm. In Proceedings of the 14th Annual Conference on Computer Graphics and Interactive Techniques, SIGGRAPH ’87, pp. 163–169, New York, NY, USA, 1987. Association for Computing Machinery. ISBN 0897912276. doi: 10.1145/37401.37422. URL https://doi.org/10.1145/37401.37422.

- Luo & Hu (2021) Shitong Luo and Wei Hu. Diffusion probabilistic models for 3d point cloud generation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2021.

- Mescheder et al. (2019) Lars M. Mescheder, Michael Oechsle, Michael Niemeyer, Sebastian Nowozin, and Andreas Geiger. Occupancy networks: Learning 3d reconstruction in function space. In IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2019, Long Beach, CA, USA, June 16-20, 2019, pp. 4460–4470. Computer Vision Foundation / IEEE, 2019. doi: 10.1109/CVPR.2019.00459. URL http://openaccess.thecvf.com/content_CVPR_2019/html/Mescheder_Occupancy_Networks_Learning_3D_Reconstruction_in_Function_Space_CVPR_2019_paper.html.

- Park et al. (2019) Jeong Joon Park, Peter Florence, Julian Straub, Richard A. Newcombe, and Steven Lovegrove. Deepsdf: Learning continuous signed distance functions for shape representation. In IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2019, Long Beach, CA, USA, June 16-20, 2019, pp. 165–174. Computer Vision Foundation / IEEE, 2019. doi: 10.1109/CVPR.2019.00025. URL http://openaccess.thecvf.com/content_CVPR_2019/html/Park_DeepSDF_Learning_Continuous_Signed_Distance_Functions_for_Shape_Representation_CVPR_2019_paper.html.

- Paszke et al. (2019) Adam Paszke, Sam Gross, Francisco Massa, Adam Lerer, James Bradbury, Gregory Chanan, Trevor Killeen, Zeming Lin, Natalia Gimelshein, Luca Antiga, Alban Desmaison, Andreas Kopf, Edward Yang, Zachary DeVito, Martin Raison, Alykhan Tejani, Sasank Chilamkurthy, Benoit Steiner, Lu Fang, Junjie Bai, and Soumith Chintala. Pytorch: An imperative style, high-performance deep learning library. In H. Wallach, H. Larochelle, A. Beygelzimer, F. d Alché-Buc, E. Fox, and R. Garnett (eds.), Advances in Neural Information Processing Systems 32, pp. 8024–8035. Curran Associates, Inc., 2019. URL http://papers.neurips.cc/paper/9015-pytorch-an-imperative-style-high-performance-deep-learning-library.pdf.

- Peng et al. (2020) Songyou Peng, Michael Niemeyer, Lars Mescheder, Marc Pollefeys, and Andreas Geiger. Convolutional occupancy networks. In European Conference on Computer Vision (ECCV), 2020.

- Qi et al. (2016) Charles Ruizhongtai Qi, Hao Su, Kaichun Mo, and Leonidas J. Guibas. Pointnet: Deep learning on point sets for 3d classification and segmentation. CoRR, abs/1612.00593, 2016. URL http://arxiv.org/abs/1612.00593.

- Ronneberger et al. (2015) O. Ronneberger, P.Fischer, and T. Brox. U-net: Convolutional networks for biomedical image segmentation. In Medical Image Computing and Computer-Assisted Intervention (MICCAI), volume 9351 of LNCS, pp. 234–241. Springer, 2015. URL http://lmb.informatik.uni-freiburg.de/Publications/2015/RFB15a. (available on arXiv:1505.04597 [cs.CV]).

- Salimans & Ho (2022) Tim Salimans and Jonathan Ho. Progressive distillation for fast sampling of diffusion models. In International Conference on Learning Representations, 2022. URL https://openreview.net/forum?id=TIdIXIpzhoI.

- (30) Yawar Siddiqui, Justus Thies, Fangchang Ma, Qi Shan, Matthias Nießner, and Angela Dai. RetrievalFuse: Neural 3D Scene Reconstruction with a Database. arXiv e-prints, art. arXiv:2104.00024.

- Sitzmann et al. (2020) Vincent Sitzmann, Julien N.P. Martel, Alexander W. Bergman, David B. Lindell, and Gordon Wetzstein. Implicit neural representations with periodic activation functions. In Proc. NeurIPS, 2020.

- Smith & Meger (2017) Edward J. Smith and David Meger. Improved adversarial systems for 3d object generation and reconstruction. volume 78 of Proceedings of Machine Learning Research, pp. 87–96. PMLR, 13–15 Nov 2017. URL http://proceedings.mlr.press/v78/smith17a.html.

- Sohl-Dickstein et al. (2015) Jascha Sohl-Dickstein, Eric A. Weiss, Niru Maheswaranathan, and Surya Ganguli. Deep unsupervised learning using nonequilibrium thermodynamics. In Francis R. Bach and David M. Blei (eds.), Proceedings of the 32nd International Conference on Machine Learning, ICML 2015, Lille, France, 6-11 July 2015, volume 37 of JMLR Workshop and Conference Proceedings, pp. 2256–2265. JMLR.org, 2015. URL http://proceedings.mlr.press/v37/sohl-dickstein15.html.

- Varley et al. (2017) Jacob Varley, Chad DeChant, Adam Richardson, Avinash Nair, Joaquín Ruales, and Peter Allen. Shape completion enabled robotic grasping. In Intelligent Robots and Systems (IROS), 2017 IEEE/RSJ International Conference on. IEEE, 2017.

- Wu et al. (2020) Rundi Wu, Xuelin Chen, Yixin Zhuang, and Baoquan Chen. Multimodal shape completion via conditional generative adversarial networks. In The European Conference on Computer Vision (ECCV), 2020.

- Yuan et al. (2018) Wentao Yuan, Tejas Khot, David Held, Christoph Mertz, and Martial Hebert. Pcn: Point completion network. In 3D Vision (3DV), 2018 International Conference on, 2018.

- Zhang et al. (2021) Dongsu Zhang, Changwoon Choi, Jeonghwan Kim, and Young Min Kim. Learning to generate 3d shapes with generative cellular automata. In International Conference on Learning Representations, 2021. URL https://openreview.net/forum?id=rABUmU3ulQh.

Appendix A Implementation Details

We use Pytorch (Paszke et al. (2019)) and the sparse convolution library MinkowskiEngine (Choy et al. (2019)) for all implementations.

A.1 Autoencoder

Network Architecture. The autoencoder is composed of two modules, encoder and decoder to convert between the sparse voxel embedding and implicit fields, which is depicted in Fig. 2. The encoder generates the sparse voxel embedding from the coordinate-distance pairs , where is a 3D coordinate and is the (signed) distance to the surface, . The decoder regresses the implicit field value at the 3D position given the sparse voxel embedding , .

The encoder is a a local PointNet (Qi et al. (2016)) implemented with MLP of 4 blocks with hidden dimension 128, as in ConvOcc (Peng et al. (2020)). Given a set of coordinate-distance pair , we find the nearest cell for each coordinate-distance pair . Then we make the representation sparse by removing the coordinate-distance pair if the nearest cell do not contain the surface. For the remaining pairs, we normalize the coordinate to the local coordinate centered at the nearest voxel. Then we use the local coordinate to build a vector in for coordinate-distance pair which serves as the input to the PointNet for the encoder . The local PointNet architecture employs average pooling to the point-coordinate pairs that belong to the same cell.

The architecture of decoder , inspired by IFNet (Chibane et al. (2020a)) is depicted in Fig. 6. As shown on the left, the decoder can be decomposed into the sparse convolution that extracts hierarchical feature, followed by trilinear interpolation of the extracted feature , and final MLP layers . The sparse convolution layer aggregates the information into multi-level grids in different resolutions, i.e., , Starting from the original resolution , contains a grid that is downsampled times. However, in contrast to the original IFNet, our grid features are sparse, since they only store the downsampled occupied cells of sparse voxel embedding . The sparse representation is composed of occupancy and -dimensional feature for each cell, like sparse voxel embedding. The grid points that are not occupied are considered as having zero features. We use levels, with feature dimension for all experiments.

The multi-scale features are then aggregated to define the feature for a query point : . Here is the trilinear interpolation of features in discrete grid to define the feature at a particular position , similar to IFNet. We apply trilinear interpolation at each resolution then combine the features.

Lastly, the MLP layer maps the feature at the query point to the implicit function value , . The MLP is composed of 4 ResNet blocks with hidden dimension 128 with as the final activation function. The final value is the truncated signed distance within the range of except for 3DFront (Fu et al. (2021)) which does not have a watertight mesh. We use unsigned distance function for 3DFront as in NDF (Chibane et al. (2020b)) by changing the codomain of the decoder to with sigmoid as the final activation function. Thus, the decoder can be summarized as .

We would like to emphasize that the autoencoder does not use any dense 3D convolutions, and thus the implementation is efficient, especially when reconstructing the sparse voxel embedding. Since the decoding architecture only consists of sparse convolutions and MLPs, the memory requirement increases linearly with respect to the number of occupied voxels. In contrast, the requirement for dense 3D convolution increases cubic with respect to the maximum size of the scene.

Reconstruction Results. Fig. 7 shows a reconstruction of a chair (left) using signed distance fields and a scene in 3DFront (right) using unsigned distance fields. The figure shows that our autoencoder can faithfully reconstruct a continuous surface using the sparse voxel embedding. However, the usage of unsigned distance fields (Chibane et al. (2020b)) representation tends to generate relatively thicker reconstructions compared to that of signed distance fields. Without a clear distinction between the interior/exterior, the unsigned representation makes it difficult to scrutinize the zero-level isosurface and thus results in comparably thick reconstruction. Thus, the flexibility of being able to represent any mesh with various topology comes at a cost of expressive power, in contrast to signed distance fields which can only represent closed surfaces. Our work can be further improved by utilizing more powerful implicit representation techniques, such as SIREN (Sitzmann et al. (2020)), but we leave it as future work.

Hyperparameters and Implementation Details. We train the autoencoder with Adam (Kingma & Ba (2015)) optimizer with learning rate 5e-4 and use batch size of 3, 1, and 4 for ShapeNet scene (Peng et al. (2020)), 3DFront (Fu et al. (2021)), and ShapeNet (Chang et al. (2015)) dataset, respectively. The autoencoder for scene completion is pretrained with all classes of ShapeNet assuming that the local geometric details for scenes are represented with the patches from individual objects.

A.2 Transition Model

Network Architecture. Following GCA (Zhang et al. (2021)), we implement the transition model by using a variant of U-Net (Ronneberger et al. (2015)). The input to the sparse convolution network is the sparse voxel embedding , and the output feature size of the last convolution is , where we use generalized sparse convolution for the last layer to obtain the occupancy (with dimension 1) and the latent code (with dimension ) of the neighborhood. Unlike GCA, our implementation does not need an additional cell-wise aggregation step.

Conditioned Model. The conditioned model is a variant of our model, where we employ the use of encoder to estimate the initial latent codes of initial state and condition every transition kernel on . Given incomplete point cloud with , the variant constructs the initial by encoding a set of coordinated-distance pair , where the distance is set to 0 for every coordinate in . Since the point cloud is sampled from the surface of the original geometry, this is a reasonable estimate of the latent code.

The transition kernel is conditioned on by taking a sparse voxel embedding of latent code dimension that concatenates the current state and as the input. Specifically, we define to be , where the are the occupancies and latent codes of and . The occupancy is set to one if either or is occupied and the latent code is set to be the concatenation of latent codes of and . Thus, the only modification to the neural network is the size of input feature dimension, which is increased from to .

Hyperparameters and Implementation Details We train our model with Adam (Kingma & Ba (2015)) optimizer with learning rate 5e-4. The transition kernel is trained using infusion schedule and standard deviation schedule . We use the accelerated training scheme introduced in GCA (Zhang et al. (2021)) by managing the time steps based on the current step. We use neighborhood size , with metric induced by norm, except for the ShapeNet (Chang et al. (2015)) experiment on voxel resolution which uses . For transition model training, we utilize maximum batch size of 32, 16 and 6, for ShapeNet scene, 3DFront, ShapeNet dataset, respectively. We use adaptive batch size for the transition model, which is determined by the total number of cells in the minibatch for efficient GPU memory usage. Lastly, we use mode seeking steps for all experiments.

Visualizations and Testing. All surface visualizations are made by upsampling the original voxel resolution 4 times, where we efficiently query from the upsampled grid only near the occupied cells of the final state . Then, the mesh is extracted with marching cubes (Lorensen & Cline (1987)) with no other postprocessing applied. From the upsampled high resolution grid, we extract cells with the distance value below 0.5 which serve as the point cloud to compute the metrics for testing.

A.3 Baselines

We use the official implementation or the code provided by the authors by contact with default hyperparameters unless stated otherwise. All results are obtained by training on our dataset. The output point cloud used for testing, is obtained by sampling from mesh with the same resolution if possible, unless stated otherwise. For GCA (Zhang et al. (2021)) we use the same hyperparameters as ours for fair comparison. The mesh is created by using marching cubes (Lorensen & Cline (1987)) with the voxel resolution. We use the volume encoder for ConvOcc (Peng et al. (2020)) which achieves the best results in the original ShapeNet scene dataset (Peng et al. (2020)). For IFNet (Chibane et al. (2020a)), we use the ShapeNet128Vox model as used in the work of Siddiqui et al. with occupancy implicit representation. cGAN (Wu et al. (2020)) is tested by 2,048 generated point cloud.

A.4 Datasets

ShapeNet Scene. ShapeNet scene (Peng et al. (2020)) contains synthetic rooms that contain multiple ShapeNet (Chang et al. (2015)) objects, which has randomly scaled floor and random walls, normalized so that the longest length of the bounding box has length of 1. The number of rooms for train/val/test split are 3750/250/995. We sample SDF points for training our model. The input is created by iteratively sampling points from the surface and removing points within distance 0.1 for each instance. We run 20 iterations and each removal is revoked if the aggregated removal exceeds that of the guaranteed rate. Since we are more interested in the reconstruction of objects, the walls and floors are guaranteed to have minimum rate of 0.8, regardless of the defined minimum rate. Lastly, we sample 10,000 points and add normal noise with standard deviation 0.005. For computing the metrics at test time, we sample 100,000 points.

3DFront. 3DFront (Fu et al. (2021)) is a large-scale indoor synthetic scene dataset with professionally designed layouts and contains high quality 3D objects. We collect rooms that contain multiple objects, where 10% of the biggest rooms are removed from the data to discard rooms with immense size for stable training. Thus, 7044/904/887 rooms are collected as train/val/test rooms. The incomplete point cloud is obtained by randomly sampling with density 219 points/ and then removed and noised as in ShapeNet scene, but with removal sphere distance proportional to the object size and noise of standard deviation of 1cm. During evaluation, we sample points proportionally to the area for each ground truth surface with density of 875 points/ to compute the metrics.

ShapeNet. We use chair, sofa, table classes of ShapeNet (Chang et al. (2015)), where the signed distance values of the query points are obtained by using the code of DeepSDF (Park et al. (2019)). The shapes are normalized to fit in a bounding box of . The partial observation is generated by extracting points within a sphere of radius 0.5, centered at one of the surface points. Then we sample 1,024 points to create a sparse input and compare the metrics by sampling 2,048 points for a fair comparison against cGAN (Wu et al. (2020)).

Appendix B Proofs and Detailed Derivations

B.1 Variational Bound Derivation

We derive the variational lower bound for cGCA (Eq. (11)) below. The lower bound is derived by the work of Sohl-Dickstein et al. (2015) and we include it for completeness.

The second term of the right hand side can be converted into KL divergence as following:

Thus,

is derived.

B.2 KL Divergence Decomposition

B.3 Approximating by

In this section, we claim that can be replaced by , which allows us to train using a simplified objective. First, we show that due to the usage of Dirac distribution and introduce a non-diverging likelihood by replacing the Dirac function with indicator function . Recall,

Thus, if any cell is unoccupied, the likelihood diverges to since . This disables us to use negative log-likelihood as a loss function, since all the gradients will diverge.

This problem can be easily resolved by substituting with indicator function . Both functions have non-zero value only at , but the former has value of while the latter has value . While is not a probability measure, i.e. , replacing will have the effect of reweighting the likelihood at from to , with the likelihood at other values unchanged. Thus, is a natural replacement of for computing a valid likelihood.

See 1

Proof.

For brevity of notations, we define

where is the occupancy probability, and mean of the infusion kernel at cell . With , there exists , such that , since . Since if and only if , must converge to a set of occupied coordinates of since the distance decreases for each cell at every step. This indicates that . Also, holds for all .

Then, and can be expressed as the following:

We divide cases for and . When ,

where we set for , since . Analogously, when ,

Thus, if , , where is a constant. We can conclude that for all .

∎

Appendix C Difference of Formulation compared to GCA

We elaborate the difference of formulations compared to GCA (Zhang et al. (2021)).

Usage of Latent Code. The biggest difference between GCA and our model is the usage of local latent codes (Jiang et al. (2020); Chabra et al. (2020)) to generate continuous surface. Although the formulation of GCA allows scalable sampling of high resolution voxel, it cannot produce continuous surface due to predefined voxel resolution. However, we define a new state named sparse voxel embedding by appending local latent codes to the voxel occupancy. Sparse voxel embedding can be decoded to produce continuous geometry, outperforming GCA in accuracy in all the evaluated datasets in Sec. 4.

Training Disconnected Objects. During training, GCA assumes a property named partial connectivity between an intermediate state and ground truth state to guarantee the convergence of infusion sequences to ground truth (Property 1 and Proposition 1 of Zhang et al. (2021)). The connectivity means that any cell in can reach any cell in following the path of cells in with local transitions bounded by neighborhood size . The assumption is required since the infusion kernel of single cell for GCA, defined as

| (14) |

inserts the ground truth cell if the cell is within the neighborhood of current state , i.e. . However, we eliminate the assumption by defining an auxiliary function

| (15) |

and modifying the formulation as

| (16) |

by switching in the infusion term to from the original infusion kernel. The usage of guarantees infusing a temporary ground truth cell that assures reaching all cells in regardless of the connectivity. The assumption is no longer required and therefore our training procedure is a general version of GCA.

Training Procedure for Maximizing Log-likelihood. Both GCA and our model utilizes the infusion training (Bordes et al. (2017)), by emulating the sequence of states that we aim to learn. The objective of GCA does not explicitly show the relationship between the training objective and maximizing the log-likelihood, which is what we aim to achieve. In Sec. 3.3, we give a detailed explanation of how our training objective approximates maximizing the lower bound of log-likelihood of data distribution. Thus, our training procedure completes the heuristics used in original work of GCA.

Appendix D Analysis on Scalability

| grid size | ||||||

|---|---|---|---|---|---|---|

| Method | # of parameters (x) | 55 | 77 | 93 | 124 | 235 |

| ConvOcc | 0.42 | 1.32 | 1.81 | 2.38 | 4.13 | 21.68 |

| cGCA | 4.12 | 2.40 | 2.81 | 2.88 | 2.84 | 3.24 |

In this section, we investigate the scalability of our approach. Scene completion needs to process the 3D geometry of large-scale scenes with multiple plausible outputs, and sparse representation is crucial to find the diverse yet detailed solutions.

As the scale of the 3D geometry increases, the approaches utilizing the dense convolutional network cannot observe the entire scene at the same time. As a simple remedy, previous methods employ sliding window techniques for 3D scene completion (Siddiqui et al. ; Peng et al. (2020)). Basically they divide the input scene into small segments, complete each segment, and then fuse them. The formulation can only observe the information that is within the same segment for completion, and assumes that the divisions contain all the information necessary to complete the geometry. The size of the maximum 3D volume that recent works utilize amounts to a 3D box whose longest length is 3.2m for resolution voxel, where individual cells are about cm in each dimension. Note that the size is comparable to ordinary furniture, such as sofa or dining table, whose spatial context might not be contained within the same segment. The relative positions between relevant objects can be ignored, and the missing portion of the same object can accidentally be separated into different segments. With our sparse representation, the receptive field is large enough to observe the entire room, and we can stably complete the challenging 3D scenes with multiple objects capturing the mutual context.

We further provide the numerical comparison of our sparse representation against the dense convolution of ConvOcc (Peng et al. (2020)). Table 4 shows the number of neural network parameters and the GPU usage for processing single rooms of different sizes in 3DFront (Fu et al. (2021)). We used 5 cm voxel resolution and the grid size indicates the number of cells required to cover the longest length of the bounding box for the room. The maximum GPU usage during 25 transitions of cGCA is provided. We did not include the memory used for the final reconstruction which regresses the actual distance values at dense query points, since the memory usage differs depending on the number of query points.

Our neural network is powerful, employing about 10 times more parameters than ConvOcc. With the efficient sparse representation, we can design the network to capture larger receptive fields with deeper network, enjoying more expressive power. This is reflected in the detailed reconstruction results of our approach. The memory usage is similar for smaller rooms for both approaches, but cGCA becomes much more efficient for larger rooms. When the grid size for room reaches 235, our method consumes 7 times less GPU memory compared to ConvOcc. As widely known, the memory and computation for 3D convolution increases cubic to the grid resolution with dense representation, while those for sparse representations increase with respect to the number of occupied voxels, which is much more efficient. Therefore our sparse representation is scalable and crucial for scene completion.

Appendix E Effects of Mode Seeking Steps

We perform an ablation study on the effects of mode seeking steps, which removes the noisy voxels with low-occupancy probability as discussed in Sec. 3.2. We test with ShapeNet dataset (Chang et al. (2015)) with voxel size and neighborhood radius , which tends to show the most intense effect of mode seeking phase due to large neighborhood size.

The right side of Fig. 9 shows the graph of metrics on quality (MMD), fidelity (UHD), and diversity (TMD) with respect to mode seeking steps. There is a dramatic decrease in all metrics on the first step of mode seeking step. The effect is visualized in the left side of Fig. 9, where the unlikely voxels are removed in a single mode seeking step. After the first mode seeking step, UHD and TMD metrics increase slightly, but overall, the metrics remain stable. We choose for all the conducted experiments to ensure that shape reaches a stable mode.

Appendix F Analysis on Varying Completeness of Input

In this section, we further investigate the effects on the level of completeness of input. Sec. F.1 shows results of completion models on sparse inputs and Sec. F.2 provides how our model behaves when a non-ambiguous input is given.

F.1 Results on Sparse Input

| 500 points | 1,000 points | 5,000 points | 10,000 points | |||||||||

| Method | min. CD | avg. CD | TMD | min. CD | avg. CD | TMD | min. CD | avg. CD | TMD | min. CD | avg. CD | TMD |

| ConvOcc | 36.58 | - | - | 5.92 | - | - | 1.65 | - | - | 1.33 | - | - |

| IFNet | 15.27 | - | - | 12.33 | - | - | 9.64 | - | - | 8.55 | - | - |

| GCA | 6.41 | 9.77 | 31.83 | 4.08 | 5.90 | 16.58 | 3.08 | 3.68 | 5.64 | 3.02 | 3.54 | 4.92 |

| cGCA | 11.30 | 17.61 | 50.58 | 3.64 | 5.53 | 16.18 | 1.32 | 1.73 | 4.64 | 1.16 | 1.49 | 3.91 |

| cGCA (w/ cond.) | 4.62 | 5.93 | 10.71 | 2.11 | 2.76 | 5.75 | 0.97 | 1.27 | 3.41 | 0.87 | 1.08 | 2.96 |

For the experiments of ShapeNet scene dataset, the models are trained and evaluated on the input where the ambiguity lies on the missing parts, but the remaining parts of input pointcloud are relatively clear by sampling 10,000 points, following the experiment setting of ConvOcc (Peng et al. (2020)). In this section, we further provide ambiguity to the remaining parts by sampling less points. For the models trained on 10,000 point samples with 0.5 completeness, we evaluate the results on sparse input by sampling 500, 1,000, 5,000 and 10,000 points after applying the same iterative removal process as done in training. As in Sec. 4.1, we measure the accuracy (CD) and diversity (TMD) metrics compared with ConvOcc (Peng et al. (2020)), IFNet (Chibane et al. (2020a)), and GCA (Zhang et al. (2021)).

Table 5 contains the quantitative comparison results. For all the models, accuracy (CD) degrades as the input gets sparser. However, cGCA (w/ cond.) achieves best accuracy at all times, indicating that our model is the most robust to the sparsity of input. For probabilistic models, the diversity (TMD) increases as the density decreases. This implies that the diversity of the reconstructions is dependent to the ambiguity of the input. This is intuitively a natural response, since the multi-modality of plausible outcomes increases as the partial observations become less informative.

Fig. 10 visualizes the results for various sparsity. All of the models produce less plausible reconstructions with 500 points (leftmost column of Fig. 10) compared to that of higher density inputs. However, we observe a clear distinction between the completions of probabilistic models (GCA, cGCA) and deterministic models (ConvOcc, IFNet). While probabilistic models try to generate the learned shapes from the observations, such as a shade of the lamp, deterministic methods tend to fill the gaps between points. As discussed in Sec. 4.1, we hypothesize that this consequence stems from the fact that deterministic methods produce blurry results since it is forced to generate a single result along out of multiple plausible shapes which are the modes of the multi-modal distribution (Goodfellow (2017)). Therefore, we claim that a generative model capable of modeling multi-modal distribution should be used for shape completion task.

F.2 Results on Non-ambiguous Input

| min. rate 0.2 | min. rate 0.5 | min. rate 0.8 | |||||||

|---|---|---|---|---|---|---|---|---|---|

| evaluation dataset | min. CD | avg. CD | TMD | min. CD | avg. CD | TMD | min. CD | avg. CD | TMD |

| training | 2.80 | 3.88 | 10.07 | 1.16 | 1.49 | 3.91 | 0.69 | 0.87 | 3.05 |

| non-ambiguous | 2.46 | 3.58 | 8.72 | 0.73 | 0.87 | 2.91 | 0.61 | 0.71 | 2.72 |

We present experiments where cGCA is given a non-ambiguous input. For the models trained on various level of completeness on ShapeNet scene dataset, we evaluate each model on an input presenting distinct geometry. The input is generated by sampling 100,000 points from the mesh without any procedure of removal.

Table 6 shows the quantitative results of the experiments. The first row shows the accuracy (CD) and diversity (TMD) scores of the models operated on test set when the removal procedure is same as that of the training set. The second row shows the metrics when the input of the test set is sampled densely (100,000 points) without any removal. For all models trained with varying level of completeness, accuracy increases with decreasing diversity given a clear shape compared to an ambiguous shape. The result indicates that the diversity of the trained models is dependent on the ambiguity of the input. This coincides with the result of Sec. F.1 that the reconstructions of our model is dependent on the multi-modality of plausible outcomes of input.

We also observe that the diversity of reconstructions is associated with the training data. Comparing the quantitative results on non-ambiguous input, the accuracy is low while the diversity is high when the model is trained on relatively incomplete data. We hypothesize that models trained with higher level of incompleteness are more likely to generate shapes from the input. However, Fig. 11 shows that while the completions of cGCA trained on highly incomplete data (min. rate 0.2) are diverse, they are quite plausible.

Appendix G Probabilistic Scene Completion on Real Dataset

We investigate how our model behaves on indoor real-world data. We test on the ScanNet indoor scene dataset (Dai et al. (2017a)), which is highly incomplete compared to other datasets, such as Matterport (Chang et al. (2017)). The input is sampled by collecting 500 points/ from each scene. ScanNet dataset does not have a complete ground truth mesh, so we test our model trained on 3DFront (Fu et al. (2021)) dataset with completness of 0.8. We align the walls of ScanNet data to xy-axis since the scenes of 3DFront are axis aligned. We compare our result with GCA (Zhang et al. (2021)).

The results are visualized in Fig. 12. Our model shows diverse results (e.g. chairs, closets) and generalizes well to the real data, which has significantly different statistics compared to the training data. Especially, the conditioned variant of our model shows better results by generalizing well to new data (e.g. tree), not found in 3DFront training dataset. We emphasize that our model is trained on unsigned distance fields and does not require the sliding-window technique, unlike the previous methods (Peng et al. (2020); Chibane et al. (2020a)).

Appendix H Additional Results for Scene Completion

H.1 Additional Results for ShapeNet Scene

H.2 Additional Results for 3DFront

Appendix I Additional Results for Single Object Completion