Probabilistic Guarantees of Stochastic Recursive Gradient in Non-Convex Finite Sum Problems

Abstract

This paper develops a new dimension-free Azuma-Hoeffding type bound on summation norm of a martingale difference sequence with random individual bounds. With this novel result, we provide high-probability bounds for the gradient norm estimator in the proposed algorithm Prob-SARAH, which is a modified version of the StochAstic Recursive grAdient algoritHm (SARAH), a state-of-art variance reduced algorithm that achieves optimal computational complexity in expectation for the finite sum problem. The in-probability complexity by Prob-SARAH matches the best in-expectation result up to logarithmic factors. Empirical experiments demonstrate the superior probabilistic performance of Prob-SARAH on real datasets compared to other popular algorithms.

Keywords: machine learning, variance-reduced method, stochastic gradient descent, non-convex optimization

1 Introduction

We consider the popular non-convex finite sum optimization problem in this work, that is, estimating minimizing the following loss function

| (1) |

where is a potentially non-convex function on some compact set . Such non-convex problems lie at the heart of many applications of statistical learning [13] and machine learning [7].

Unlike convex optimization problems, in general, non-convex problems are intractable and the best we can expect is to find a stationary point. Given a target error , since , we aim to find an estimator such that roughly , where denotes the gradient vector the loss function and is the operator norm. With a non-deterministic algorithm, the output is always stochastic, and the most frequently considered measure of error bound is in expectation, i.e.,

| (2) |

There has been a substantial amount of work providing upper bounds on computational complexity needed to achieve the in-expectation bound. However, in practice, we only run a stochastic algorithm for once and an in-expectation bound cannot provide a convincing bound in this situation. Instead, a high-probability bound is more appropriate by nature. Given a pair of target errors , we want to obtain an estimator such that with probability at least , , that is

| (3) |

Though the Markov inequality might help, in general, an in-expectation bound cannot be simply converted to an in-probability bound with a desirable dependency on . It would be important to prove upper bounds on high-probability complexity, which ideally should be polylogorithmic in and with polynomial terms comparable to the in-expectation complexity bound.

Gradient-based methods are favored by practitioners due to simplicity and efficiency and have been widely studied by researchers in the non-convex setting ([26, 6, 1, 31, 5, 33]). Among numerous gradient-based methods, the StochAstic Recursive grAdient algoritHm (SARAH) ([27, 28, 33]) is the one with the best first-order guarantee as given an in-expectation error target, in both of convex and non-convex finite sum problems. It is worth noticing that [23] attempted to show that a modified version of SARAH is able to approximate the second-order stationary point with a high probability. However, we believe that their application of the martingale Azuma-Hoeffding inequality is unjustifiable because the bounds are potentially random and uncontrollable. In this paper, we shall provide a correct dimension-free martingale Azuma-Hoeffding inequality with rigorous proofs and leverage it to show in-probability properties for SARAH-based algorithms in the non-convex setting.

1.1 Related Works

-

•

High-Probability Bounds: While most works in the literature of optimization provide in-expectation bounds, there is only a small fraction of works discussing bounds in the high probability sense. [17] provide a high-probability bound on the excess risk given a bound on the regret. [12], [8, 9] derive some high-probability bounds for SGD in convex online optimization problems. [34, 22] prove high-probability bounds for several adaptive methods, including AMSGrad, RMSProp and Delayed AdaGrad with momentum. All these works rely on (generalized) Freedman’s inequality or the concentration inequality given in Lemma 6 in [15]. Different from them, our high-probability results are built on a novel Azuma-Hoeffding type inequality proved in this work and Corollary 8 from [15]. In addition, we notice that [23] provide some probabilistic bounds on a SARAH-based algorithm. However, we believe their use of the plain martingale Azuma-Hoeffding inequality is not justifiable. [5] show in-probability upper bound for SPIDER. Nevertheless, SPIDER’s practical performance is inferior due to its accuracy-dependent small step size [32, 33].

-

•

Variance-Reduced Methods in Non-Convex Finite Sum Problems: Since the invention of the variance-reduction technique in [18, 16, 4], there has been a large amount of work incorporating this efficient technique to methods targeting the non-convex finite-sum problem. Subsequent methods, including SVRG ([1, 31, 25]), SARAH ([27, 28]), SCSG ([19, 21, 11]), SNVRG ([34]), SPIDER ([5]), SpiderBoost ([33]) and PAGE ([24]), have greatly reduced computational complexity in non-convex problems.

1.2 Our Contributions

-

•

Dimension-Free Martingale Azuma-Hoeffding inequality: To facilitate our probabilistic analysis, we provide a novel Azuma-Hoeffding type bound on the summation norm of a martingale difference sequence. The novelty is two-fold. Firstly, same as the plain martingale Azuma-Hoeffding inequality, it provides a dimension-free bound. In a recent paper, a sub-Gaussian type bound has been developed by [15]. However, their results are not dimension-free. Our technique in the proof is built on a classic paper by [29] and is completely different from the random matrix technique used in [15]. Secondly, our concentration inequality allows random bounds on each element of the martingale difference sequence, which is much tighter than a large deterministic bound. It should be highlighted that our novel concentration result perfectly suits the nature of SARAH-style methods where the increment can be characterized as a martingale difference sequence and it can be further used to analyze other algorithms beyond the current paper.

-

•

In-probability error bounds of stochastic recursive gradient: We design a SARAH-based algorithm, named Prob-SARAH, adapted to the high-probability target and provably show its good in-probability properties. Under appropriate parameter setting, the first order complexity needed to achieve the in-probability target is , which matches the best known in-expectation upper bound up to some logarithmic factors ([34, 33, 11]). We would like to point out that the parameter setting used to achieve such complexity is semi-adaptive to . That is, only the final stopping rule relies on while other key parameters are independent of , including step size, mini-batch sizes, and lengths of loops.

-

•

Probabilistic analysis of SARAH for non-convex finite sum: Existing literature on the bounds of SARAH is mostly focusing on the strongly convex or general convex settings. We extend the case to the non-convex scenarios, which can be considered as a complimentary study to the stochastic recursive gradient in probability.

1.3 Notation

For a sequence of sets , we denote the smallest sigma algebra containing , , by . By abuse of notation, for a random variable , we denote the sigma algebra generated by by . We define constant . For two scalars , we denote and . When we say a quantity is for some , there exists a polylogarithmic in and such that , and similarly is defined the same but up to a logarithm factor.

2 Prob-SARAH Algorithm

The algorithm Prob-SARAH proposed in our work is a modified version of SpiderBoost ([33]) and SARAH ([27, 28]). Since the key update structure is originated from ([27]), we call our modified algorithm Prob-SARAH. In fact, it can also be viewed as a generalization of the SPIDER algorithm introduced in ([5]).

We present the Prob-SARAH in Algorithm 1, and here, we provide some explanation of the key steps. Following other SARAH-based algorithms, we adopt a similar gradient approximation design with nested loops, specifically with a checkpoint gradient estimator using a large mini-batch size in Line 4 and a recursive gradient estimator updated in Line 9. When the mini-batch size is large, we can regard the checkpoint gradient estimator as a solid approximation to the true gradient at . With this checkpoint, we can update the gradient estimator with a small mini-batch size while maintaining a desirable estimation accuracy.

To emphasize, our stopping rules in Line 11 of Algorithm 1 is newly proposed, which ensures a critical enhancement of the performance compared to previous literature. In particular, with this new design, we can control the gradient norm of the output with high probability. For a more intuitive understanding of these stopping rules, we will see in our proof sketch section that the gradient norm of iterates in the -th outer iteration, , can be bounded by a linear combination of with a small remainder. The first stopping rule, therefore, strives to control the magnitude of the linear combination of , while the second stopping rule is specifically designed to control the size of remainder terms. For this purpose, should be set as a credible controller of the remainder term, with an example given in Theorems 3.1. In this way, with small preset constants and , we guarantee that the output has a desirably small gradient norm, dependent on and , when the designed stopping rules are activated. Indeed, Proposition B.1 in Appendix B offers a guarantee that the stopping rule will be definitively satisfied at some point. More refined quantitative results regarding the number of steps required for stopping will follow in Theorems 3.1 and Appendix D.3.

3 Theoretical Results

This section is devoted to the main theoretical result of our proposed algorithm Prob-SARAH. We provide the stop guarantee of the algorithm along with the upper bound of the steps. The high-probability error bound of the estimated gradient is also established. The discussion of the dependence of our algorithm on the parameters is available after we introduce our main theorems.

3.1 Technical Assumptions

We shall introduce some necessary regularized assumptions. Most assumptions are commonly used in the optimization literature. We have further clarifications in Appendix A.

Assumption 3.1 (Existence of achievable minimum).

Assume that for each , has continuous gradient on and is a compact subset of . Then, there exists a constant such that

| (4) |

Also, assume that there exists an interior point of the set such that

Assumption 3.2 (-smoothness).

For each , is -smooth for some constant , i.e.,

Assumption 3.3 (-smoothness extension).

There exists a -smooth function such that

where is the Euclidean projection of on some compact set .

Assumption 3.4.

Assumption 3.1 also indicates that there exists a positive number such that Assumptions 3.1–3.3 are commonly used in the optimization literature, and Assumption 3.4 can be easily satisfied in practical use as long as the initial points are not too far from the optimum. See more comments on assumptions in Appendix A.

3.2 Main Results on Complexity

According to the definition given in [20], an algorithm is called -independent if it can guarantee convergence at all target accuracies in expectation without explicitly using in the algorithm. This is a very favorable property because it means that we no longer need to set the target error beforehand. Here, we introduce a similar property regarding the dependency on .

Definition 3.1 (-semi-independence).

An algorithm is -semi-independent, given , if it can guarantee convergence at all target accuracies with probability at least and the knowledge of is only needed in the post-processing. That is, the algorithm can iterate without knowing and we can select an appropriate iterate out afterwards.

The newly introduced property can be perceived as the probabilistic equivalent of -independence. As stated in the succeeding theorem, under the given conditions, Prob-SARAH can achieve -semi-independence, given .

Theorem 3.1.

3.3 Proof Sketch

In this part, we explain the idea of the proof of Theorem 3.1. Same proofing strategy can be applied to other hyper-parameter settings. First, we bound the difference between and by a linear combination of and small remainders, with which we can have a good control on when the stopping rules are met. Second, we bound the number of steps we need to meet the stopping rules. Combining these 2 key components, we can smoothly get the final conclusions.

Let us firstly introduce a novel Azuma-Hoeffding type inequality, which is key to our analysis.

Theorem 3.2 (Martingale Azuma-Hoeffding Inequality with Random Bounds).

Suppose is a martingale difference sequence adapted to . Suppose is a sequence of random variables such that and is measurable with respect to , . Then, for any fixed , and , with probability at least , for , either

|

|

Remark 3.1.

It is noteworthy that this probabilistic bound on large-deviation is dimension-free, which is a nontrivial extension of Theorem 3.5 in [30]. If are not random, we can let and with , . Since can be arbitrarily close to 0 and can be arbitrarily close to 1, we can recover Theorem 3.5 in [30]. Compared with Corollary 8 in [15], which can be viewed as a sub-Gaussian counterpart of our result, a key feature of our Theorem 3.2 is its dimension-independence. We are also working towards improving the bound in Corollary 8 from [15] to a dimension-free one.

The success of Algorithm 1 is largely because is well-approximated by , and meanwhile can be easily updated. We can observe that is actually sum of a sequence of martingale difference as

| (6) |

To be more specific, let , and iteratively define , , , , . We also denote , , . Then, we can see that is a martingale difference sequence adapted to . With the help of our new Martingale Azuma-Hoeffding inequality, we can control the difference between and by a linear combination of and small remainders, with details given in Appendix D.1. Then, given the stopping rules in line 11 and selection method specified in line 12 of Algorithm 1, it would be not hard for us to obtain with a high probability. More details can be found in Appendix D.2.

Another key question needed to be resolved is, when the algorithm can stop? The following analysis can build some intuitions for us. Given a , with the bound given in Proposition F.1 in Appendix F, with a high probability,

| (7) |

where is upper bounded by a value polylogorithmic in . As for the second summation, if for (which is obviously true when is moderately large) and our algorithm doesn’t stop in outer iterations,

which grows at least linear in . Consequently, when is sufficiently large, the RHS of (7) can be smaller than , which leads to a contradiction. Roughly, we can see that the stopping time cannot exceed the order of . More details can be found in Appendix D.3.

4 Numerical Experiments

In order to validate our theoretical results and show good probabilistic property for the newly-introduced Prob-SARAH, we conduct some numerical experiments where the objectives are possibly non-convex.

4.1 Logistic Regression with Non-Convex Regularization

In this part, we consider to add a non-convex regularization term to the commonly-used logistic regression. Specifically, given a sequence of observations , and a regularized parameter , the objective is

Such an objective has also been considered in other works like [11] and [14]. Same as other works, we set the regularized parameter across all experiments. We compare the newly-introduced Prob-SARAH against three popular methods including SGD ([6]), SVRG ([31]) and SCSG ([21]). Based on results given in Theorem 3.1, we let the length of the inner loop , the inner loop batch size , the outer loop batch size . For fair comparison, we determine the batch size (inner loop batch size) for SGD (SCSG and SVRG) based on the sample size and the number of epochs needed to have sufficient decrease in gradient norm. For example, for the w7a dataset, the sample size is 24692 and we run 60 epochs in total. In the 20th epoch, the inner loop batch size of Prob-SARAH is approximately . Thus, we set batch size 256 for SGD, SCSG and SVRG so that they can be roughly matched. In addition, based on the theoretical results from [31], we also consider a large inner loop batch size comparable to for SVRG. In addition, we set step size for all algorithms across all experiments for simplicity.

Results are displayed in Figure 2, from which we can see that Prob-SARAH has superior probabilistic guarantee in controlling the gradient norm in all experiments. It is significantly better than SCSG and SVRG under our current setting. Prob-SARAH can achieve a lower gradient norm than SGD at the early stage while SGD has a slight advantage when the number of epochs is large.

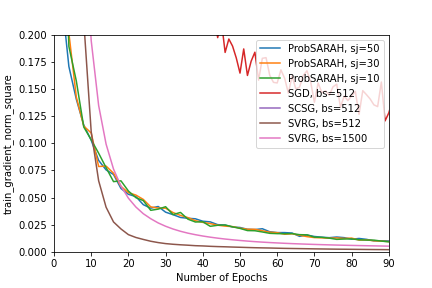

4.2 Two-Layer Neural Network

We also evaluate the performance of Prob-SARAH, SGD, SVRG and SCSG on the MNIST dataset with a simple 2-layer neural network. The two hidden layers respectively have 128 and 64 neurons. We include a GELU activation layer following each hidden layer. We use the negative log likelihood as our loss function. Under this setting, the objective is possibly non-convex and smooth on any given compact set. The step size is fixed to be 0.01 for all algorithms. For Prob-SARAH, we still have the length of the inner loop , the inner loop batch size , the outer loop batch size . But to reduce computational time, we let start from 10, 30 and 50 respectively. Based on the same rule described in the previous subsection, we let the batch size (or inner loop batch size) for SGD, SVRG and SCSG be 512.

Results are given in Figure 1. In terms of gradient norm, Prob-SARAH has the best performance among algorithms considered here when the number of epochs is relatively small. With increasing number of epochs, SVRG tends to be better in finding first-order stationary points. However, based on the 3rd and 4th columns in Figure 1, SVRG apparently has an inferior performance on the validation set, which indicates that it could be trapped at local minima. In brief, Prob-SARAH achieves the best tradeoff between finding a first-order stationary point and generalization.

5 Conclusion

In this paper, we propose a SARAH-based variance reduction algorithm called Prob-SARAH and provide high-probability bounds on gradient norm for estimator resulted from Prob-SARAH. Under appropriate assumptions, the high-probability first order complexity nearly match the one in the in-expectation sense. The main tool used in the theoretical analysis is a novel Azuma-Hoeffding type inequality. We believe that similar probabilistic analysis can be applied to SARAH-based algorithms in other settings.

Appendix A Remarks and Examples for Assumptions

A.1 More comments on Assumptions 3.1–3.4

Remark A.1 (Convexity and smoothness).

It is worth noticing that Assumption 3.1 is widely used in many non-convex optimization works and can be met for most applications in practice. Assumption 3.2 is also needed in deriving in-expectation bound for many non-convex variance-reduced methods, including state-of-art ones like SPIDER and SpiderBoost. As for Assumption 3.3, it is a byproduct of the compact constraint and can be satisfied with some commonly-seen and usual choices of . For more discussions on Assumption 3.3, please see Appendix A.2.

Remark A.2 (Compact set ).

Compared with other works in the literature of non-convex optimization, the compact constraint region imposed in the finite sum problem (1) may seem somewhat restrictive. In fact, such constraint is largely due to technical convenience and it can be removed with additional condition on gradients. We will elaborate on this point in subsection C.1. Besides, in many practical applications, it is reasonable to restrict estimators to a compact set when certain prior knowledge is available.

A.2 An Example of Assumption 3.3

Let us consider the logistic regression with non-convex regularization where the object function can be characterized as

where , is the th element of , is the regularization parameter, are labels and are normalized covariates with norm 1. In fact, for any fixed , Assumption 3.3 holds with and when is sufficiently large. Since smoothness is easy to show, we focus on the second part of Assumption 3.3. To show that

holds for any , since the projection direction is pointed towards the origin, it suffices to show that for any with ,

when for , where is the th element of . To see this,

If , we can immediately know that for any .

If , let us consider an auxiliary function

Then,

from where we can know the minimum of is achieved for some . Thus,

Therefore,

which is positive when .

If we consider other non-convex regularization terms in logistic regression, such as , we may no longer enjoy Assumption 3.3 because monotony may not hold for a few projection directions even when the constraint region is large. Nevertheless, such theoretical flaw can be easily remedied by adding an extra regularization term like with appropriate .

Appendix B Stop Guarantee

We would like to point out that, under appropriate parameter setting, Prob-SARAH is guaranteed to stop. Actually, we can have the stopping guarantee under more general conditions than those stated in the following proposition. But for simplicity, we only present conditions naturally matched parameter settings given in the next two subsections.

Proposition B.1 (Stop guarantee of Prob-SARAH).

Suppose that Assumptions 3.1, 3.2, 3.3 and 3.4 are satisfied. Let step size and suppose that , . The large batch size is set appropriately such that when is sufficiently large. If the limit of is 0, then, for any fixed and , with probability 1, Prob-SARAH (Algorithm 1) stops. In settings where we always have , we also have the result that Prob-SARAH (Algorithm 1) stops with probability 1.

Appendix C Detailed Results on Complexity

Theorem C.1.

Suppose that Assumptions 3.1, 3.2, 3.3 and 3.4 are valid. Given a pair of errors , in Algorithm 1 (Prob-SARAH), set hyperparameters

|

|

(8) |

for , where

Then, with probability at least , Prob-SARAH stops in at most

outer iterations and the output satisfies . Detailed definitions of and can be found in Propositions D.3 and D.4.

Corollary C.1.

Under parameter settings in Theorem C.1,

We introduce another setting that can help to reduce the dependence on , which could be implicitly affected by the choice of constraint region . We should also notice that, under such setting, the algorithm is no longer -semi-independent.

Theorem C.2.

Corollary C.2.

Under parameter settings in Theorem C.2,

Comparing the complexities in Corollary C.1 and Corollary C.2, we can notice that under the second setting, we can get rid of the dependence on at the expense of losing some adativity to . We would also like to point out that, in the low precision region, i.e. when , the second complexity is inferior.

C.1 Dependency on Parameters

To apply our newly-introduce Azuma-Hoeffding type inequality (see Theorem 3.2), it is necessary to impose a compact constraint region . Therefore, let us provide a delicate analysis on how can affect the convergence guarantee.

Dependency on : , the diameter of , is a parameter directly related to the choice of . Shown in theoretical results presented above, the in-probability first-order complexities always have a polylogorithmic dependency on , which implies that as long as is polynomial in or , it should only have a minor effect on the complexity. With certain prior knowledge, we should be able to control at a reasonable scale.

Dependency on and : Under the setting given in Theorem 3.1, the first-order complexity is polynomial in . Such dependency implicates that the complexity would not deteriorate much if is of a small order, which is definitely true when the loss function is bounded. As for the setting given in Theorem C.2, the first-order complexity is polynomial in , which is conventionally assumed to be and will not be affected by .

Appendix D Postponed Proofs for the Results in Section 3

D.1 Bounding the Difference between and

Proposition D.1.

Remark D.1.

Let us briefly explain this high-probability bound on . When and , by letting be of appropriate -polynomial order, will be roughly . If further we have be of -polynomial order and let be of -polynomial order, will be . As a result, the upper bound is roughly when is sufficiently large so that the last term in the bound (10) is negligible. Bounding by linear combination of is the key to our analysis.

D.2 Analysis on the Output

Under parameter setting specified in Theorem 3.1, if we suppose that the algorithm stops at the -th outer iteration, i.e.

| (11) |

there must exist a , such that

Then, on the event

| (12) |

where and , we can easily derive an upper bound on ,

where the 2nd step is based on (10) with definitions of and given in Theorem 3.1, the 5th step is based on (11), the 6th step is based on the choice of and the 7th step is based on the choice of . In addition, based on Proposition D.1, the union event occurs with probability at least

In one word, it is highly likely to control the norm of gradient at our desired level when the algorithm stops.

The above results can sufficiently explain our choice of stopping rule imposed in Algorithm 1. We can summarize them as the following proposition.

D.3 Upper-bounding the Stopping Time

Proposition D.3 (First Stopping Rule).

Proposition D.4 (Second Stopping Rule).

Proposition D.5 (Stop Guarantee).

Proof.

If Algorithm 1 stops in outer iterations, our conclusion is obviously true. If not, according to Proposition D.3, there must exist a such that the first stopping rule is met, i.e.

According to Proposition D.4, the second stopping rule is also met, i.e.

Consequently, the algorithm stops at the -th outer iteration. ∎

Appendix E Technical Lemmas

Lemma E.1 (Theorem 4 in [10]).

Let be a set of fixed vectors in . are 2 random index sets sampled respectively with replacement and without replacement, with size . For any continuous and convex function ,

Lemma E.2 (Proposition 1.2 in [3]111See also Lemma 1.3 in [2].).

Let be real random variable such that and for some . Then, for all ,

Lemma E.3 (Theorem 3.5 in [30]222See also Theorem 3 in [29] and Proposition 2 in [5].).

Let be a vector-valued martingale difference sequence with respect to , , i.e. for , . Assume , . Then,

.

Proposition E.1 (Norm-Hoeffding, Sampling without Replacement).

Let be a set of fixed vectors in such that , , for some . Let be a random index sets sampled without replacement from , with size . Then,

. In addition,

Proof.

Firstly, we start with developing moment bounds. Let be a random index sets sampled with replacement from , independent of , with size . For any ,

where the 1st step is based on Lemma E.1 and the 4th step is based on the fact that and Lemma E.3.

Then, for any ,

where the 1st step is based on Taylor’s expansion and the second to the last step is based on the fact that , , .

Definition E.1.

A random vector is -norm-subGaussian (or nSG), if such that

.

Definition E.2.

A sequence of random vectors is -norm-subGaussian martingale difference sequence adapted to , if such that for ,

and is -norm-subGaussian.

Lemma E.4 (Corollary 8 in [15]).

Suppose is -norm-subGaussian martingale difference sequence adapted to . Then for any fixed , and , with probability at least , either

or,

Lemma E.5.

For any ,

when .

Proof.

∎

Lemma E.6.

For any ,

when .

Proof.

Function is monotonically decreasing when . Since ,

Define a function . It is monotonically decreasing when . Thus, if , we know and consequently,

If , . Hence,

Based on the above results,

∎

Appendix F Proofs of Main Theorems

Proof of Proposition B.1.

It is not hard to conclude that we only need to show

| (16) |

For simplicity, we denote . To show (16), we firstly derive the in-expectation bound on , which has been covered in works like [33].

With our basic assumptions, we have

where the 2nd and 3rd step is based on Assumption 3.3. Then, summing the above inequality from to ,

| (17) |

Then,

| (18) |

Proof of Theorem 3.2.

In this proof, for simplicity, we denote by . Let . For a , consider

Then,

and consequently,

Next,

where the first inequality is based on the fact that if , .

According to Lemma 3 in [29],

Thus,

| (21) |

Now, let

We can easily know that for , is measurable with respect to . According to (21),

which implies that is a non-negative super-martingale adapted to .

For any constant , if we define stopping time , we immediately know that , , is a supermartingale and

where the 2nd step is based on the fact that , the 4th step is by Chebyshev’s inequality and the 5th step is based on the supermartingale property.

Therefore, if we let ,

Since can be up to ,

The final conclusion can be obtained immediately by following similar steps given in the proof of Corollary 8 from [15]. ∎

Proof of Proposition D.1.

Next, if we suppose , where for any , we have

Let

and

for and . Then, we can see that is a martingale difference sequence adapted to .

Notice that for and ,

Proposition F.1 (Inner Loop Analysis).

Proof of Proposition F.1.

Firstly, as what we have shown in section 3, occurs with probability at least .

Proof of Proposition D.3.

For simplifying notations, we denote

Then,

| (26) | |||

where the 1st step is based on the choices that and , the second step is bases on the choices of , . According to Lemma E.5, as ,

| (27) |

According to Lemma E.6, as ,

| (28) |

If we suppose to the contrary that

holds for all , then we have

By (26), (27), (28) and the above results,

which contradicts (25). ∎

Proof of Proposition D.4.

Proof of Corollary C.1.

This part follows a similar way as the complexity analysis in [11]. It is easy to know that if Algorithm 1 stops in outer iterations, the first order computational complexity is

Thus, it is sufficient to show

For simplicity, we let and consequently .

When , and consequently . Hence,

where the last step is due to .

When , and consequently . Hence,

where the last step is due to .

To sum up,

Secondly, if we let , we have based on Assumption 3.4. As a result, . Therefore, it is equivalent to study where .

When ,

Thus, . Then,

When ,

Thus, . Then

To sum up,

Since , we can directly know that

Similar to the previous case,

∎

Proof of Theorem C.2.

We can see that many results given under the setting of Theorem 3.1 can still apply under the current setting. If we still define as (12), occurs with probability at least .

Under the current setting, Proposition F.1 is still valid. Thus, on , for any ,

where the 2nd step is due to our choice of and consequently , the 3rd step is based on our choices of and , the 4th step is based on our choice of . Summing the above inequality from to ,

| (33) |

where the 2nd step is according to Assumption 3.1, the 4th step is based on our choice of . We assert that when , there must exist a such that

If not,

which is in conflict with (33). Thus, on , the first stopping rule will be met in at most outer iterations while the second stopping rule is always satisfied. When both stopping rules are met, we can show that the output is of desirable property. Let and such that

and

Then, on ,

where the 4th step is based on Proposition D.1, the 7th step is based on our choices of and , the 8th step is based on our choice of . ∎

Appendix G Supplementary Figures

References

- [1] Zeyuan Allen-Zhu and Elad Hazan “Variance reduction for faster non-convex optimization” In International conference on machine learning, 2016, pp. 699–707 PMLR

- [2] Rémi Bardenet and Odalric-Ambrym Maillard “Concentration inequalities for sampling without replacement” In Bernoulli 21.3 Bernoulli Society for Mathematical StatisticsProbability, 2015, pp. 1361–1385

- [3] Stéphane Boucheron, Gábor Lugosi and Pascal Massart “Concentration inequalities: A nonasymptotic theory of independence” Oxford university press, 2013

- [4] Aaron Defazio, Francis Bach and Simon Lacoste-Julien “SAGA: A fast incremental gradient method with support for non-strongly convex composite objectives” In Advances in neural information processing systems, 2014, pp. 1646–1654

- [5] Cong Fang, Chris Junchi Li, Zhouchen Lin and Tong Zhang “SPIDER: near-optimal non-convex optimization via stochastic path integrated differential estimator” In Proceedings of the 32nd International Conference on Neural Information Processing Systems, 2018, pp. 687–697

- [6] Saeed Ghadimi and Guanghui Lan “Stochastic first-and zeroth-order methods for nonconvex stochastic programming” In SIAM Journal on Optimization 23.4 SIAM, 2013, pp. 2341–2368

- [7] Ian Goodfellow, Yoshua Bengio and Aaron Courville “Deep learning” MIT press, 2016

- [8] Nicholas JA Harvey, Christopher Liaw, Yaniv Plan and Sikander Randhawa “Tight analyses for non-smooth stochastic gradient descent” In Conference on Learning Theory, 2019, pp. 1579–1613 PMLR

- [9] Nicholas JA Harvey, Christopher Liaw and Sikander Randhawa “Simple and optimal high-probability bounds for strongly-convex stochastic gradient descent” In arXiv preprint arXiv:1909.00843, 2019

- [10] Wassily Hoeffding “Probability Inequalities for Sums of Bounded Random Variables” In Journal of the American Statistical Association 58.301, 1963, pp. 13–30

- [11] Samuel Horváth, Lihua Lei, Peter Richtárik and Michael I Jordan “Adaptivity of stochastic gradient methods for nonconvex optimization” In arXiv preprint arXiv:2002.05359, 2020

- [12] Prateek Jain, Dheeraj Nagaraj and Praneeth Netrapalli “Making the last iterate of sgd information theoretically optimal” In Conference on Learning Theory, 2019, pp. 1752–1755 PMLR

- [13] Gareth James, Daniela Witten, Trevor Hastie and Robert Tibshirani “An introduction to statistical learning” Springer, 2013

- [14] Kaiyi Ji, Zhe Wang, Bowen Weng, Yi Zhou, Wei Zhang and Yingbin Liang “History-gradient aided batch size adaptation for variance reduced algorithms” In International Conference on Machine Learning, 2020, pp. 4762–4772 PMLR

- [15] Chi Jin, Praneeth Netrapalli, Rong Ge, Sham M Kakade and Michael I Jordan “A short note on concentration inequalities for random vectors with subgaussian norm” In arXiv preprint arXiv:1902.03736, 2019

- [16] Rie Johnson and Tong Zhang “Accelerating stochastic gradient descent using predictive variance reduction” In Advances in neural information processing systems 26, 2013, pp. 315–323

- [17] Sham M Kakade and Ambuj Tewari “On the generalization ability of online strongly convex programming algorithms” In Advances in Neural Information Processing Systems, 2009, pp. 801–808

- [18] Nicolas Le Roux, Mark Schmidt and Francis Bach “A stochastic gradient method with an exponential convergence rate for finite training sets” In Proceedings of the 25th International Conference on Neural Information Processing Systems-Volume 2, 2012, pp. 2663–2671

- [19] Lihua Lei and Michael Jordan “Less than a single pass: Stochastically controlled stochastic gradient” In Artificial Intelligence and Statistics, 2017, pp. 148–156 PMLR

- [20] Lihua Lei and Michael I Jordan “On the adaptivity of stochastic gradient-based optimization” In SIAM Journal on Optimization 30.2 SIAM, 2020, pp. 1473–1500

- [21] Lihua Lei, Cheng Ju, Jianbo Chen and Michael I Jordan “Non-convex finite-sum optimization via SCSG methods” In Proceedings of the 31st International Conference on Neural Information Processing Systems, 2017, pp. 2345–2355

- [22] Xiaoyu Li and Francesco Orabona “A high probability analysis of adaptive sgd with momentum” In arXiv preprint arXiv:2007.14294, 2020

- [23] Zhize Li “SSRGD: Simple Stochastic Recursive Gradient Descent for Escaping Saddle Points” In Advances in Neural Information Processing Systems 32, 2019, pp. 1523–1533

- [24] Zhize Li, Hongyan Bao, Xiangliang Zhang and Peter Richtárik “PAGE: A simple and optimal probabilistic gradient estimator for nonconvex optimization” In International Conference on Machine Learning, 2021, pp. 6286–6295 PMLR

- [25] Zhize Li and Jian Li “A simple proximal stochastic gradient method for nonsmooth nonconvex optimization” In Proceedings of the 32nd International Conference on Neural Information Processing Systems, 2018, pp. 5569–5579

- [26] Yurii Nesterov “Introductory lectures on convex optimization: A basic course” Springer Science & Business Media, 2003

- [27] L M Nguyen, Jie Liu, Katya Scheinberg and Martin Takáč “SARAH: A novel method for machine learning problems using stochastic recursive gradient” In International Conference on Machine Learning, 2017, pp. 2613–2621 PMLR

- [28] Lam M Nguyen, Jie Liu, Katya Scheinberg and Martin Takáč “Stochastic recursive gradient algorithm for nonconvex optimization” In arXiv preprint arXiv:1705.07261, 2017

- [29] Iosif Pinelis “An approach to inequalities for the distributions of infinite-dimensional martingales” In Probability in Banach Spaces, 8: Proceedings of the Eighth International Conference, 1992, pp. 128–134 Springer

- [30] Iosif Pinelis “Optimum bounds for the distributions of martingales in Banach spaces” In The Annals of Probability JSTOR, 1994, pp. 1679–1706

- [31] Sashank J Reddi, Ahmed Hefny, Suvrit Sra, Barnabas Poczos and Alex Smola “Stochastic variance reduction for nonconvex optimization” In International conference on machine learning, 2016, pp. 314–323 PMLR

- [32] Quoc Tran-Dinh, Nhan H Pham, Dzung T Phan and Lam M Nguyen “Hybrid stochastic gradient descent algorithms for stochastic nonconvex optimization” In arXiv preprint arXiv:1905.05920, 2019

- [33] Zhe Wang, Kaiyi Ji, Yi Zhou, Yingbin Liang and Vahid Tarokh “Spiderboost and momentum: Faster variance reduction algorithms” In Advances in Neural Information Processing Systems 32, 2019, pp. 2406–2416

- [34] Dongruo Zhou, Jinghui Chen, Yuan Cao, Yiqi Tang, Ziyan Yang and Quanquan Gu “On the convergence of adaptive gradient methods for nonconvex optimization” In arXiv preprint arXiv:1808.05671, 2018