Probabilistic Analysis of rrt Trees

McGill University,

Montreal, Canada)

Abstract

This thesis presents analysis of the properties and run-time of the Rapidly-exploring Random Tree (rrt) algorithm. It is shown that the time for the rrt with stepsize to grow close to every point in the -dimensional unit cube is . Also, the time it takes for the tree to reach a region of positive probability is . Finally, a relationship is shown to the Nearest Neighbour Tree (nnt). This relationship shows that the total Euclidean path length after steps is and the expected height of the tree is bounded above by .

1 Introduction

In 1998, LaValle [12] introduced a ground-breaking approach to path planning: the Rapidly-exploring Random Tree (rrt). At the time the area of path planning had already been taken over by randomized algorithms due to strong evidence of a limit on the potential speed of deterministic path [11, 16, 15]. The rrt was a novel randomized approach that has quickly come to dominate modern path planning. Previously the most widely used algorithm had been the probabilistic road map [10, 3], an algorithm which randomly selects points in the space and connects these points to the graph. The rrt differed, in that rather than adding these randomly selected points, it built a tree with small edge length based on these points.

The rrt’s ubiquity is in large part due to a number of variants to the original algorithm, such as the rrt-Connect [11, 13] and the rrt* [9, 8]. In the former, we grow an rrt out of both the initial and final point of a desired path, while trying to connect them. In the latter, to optimize the spanning ratio we grow our tree based on a choice of minimal path length as well as the locations of the randomly selected points. These modifications and others are missing analytical grounding to understand their performance.

There have been prior analyses of the rrt but none in quite this area. Soon after the creation of the rrt, Kuffner and LaValle [11] proved that the algorithm was probabilistically complete—i.e., the algorithm succeeds with high probability—and that the distribution of the -th vertex in the tree converges to the sampling distribution. In their analysis however, they commented that they had no result on the speed of this convergence. Further analysis has been done by Arnold et al. [1] on the speed at which the rrt spreads out by studying an idealized version of the algorithm on an infinite disc. Work has also been done by Karaman and Frazzoli [9] on the complexity of the update step in the algorithm and the optimality of the path, while Dalibard and Laumond [3] have studied the effects of narrow passages on the performance of the algorithm. However there remains a gap in the literature on determining a run time until success of the rrt. This paper aims to fill this gap.

1.1 Organization

The paper is organized as follows: first we set out definitions and a framework for our approach. We then split our analysis into three phases of growth of the rrt.

The first phase is the phase where the rrt spreads out quickly. Most of the space is still far away from the tree, so most steps give a large amount of progress. This is the phase most similar to the problem considered by Arnold et al.[1].

The second phase is the phase where the rrt grows close to every point in the space. Kuffner and LaValle [11] proved that this occurs and we will add information on the speed.

Finally, the third phase covers the growth of the tree once the rrt has grown close to every point in the graph. We find a relationship here to the nearest neighbour tree which allows easy study of the tree and many results on its behaviour.

2 Definitions

2.1 Initial Framework

We begin with basic definitions of the rrt on compact, convex metric spaces. In lieu of the vocabulary used in general path planning problems, we will use the vocabulary of metric spaces and probability.

Definition 1.

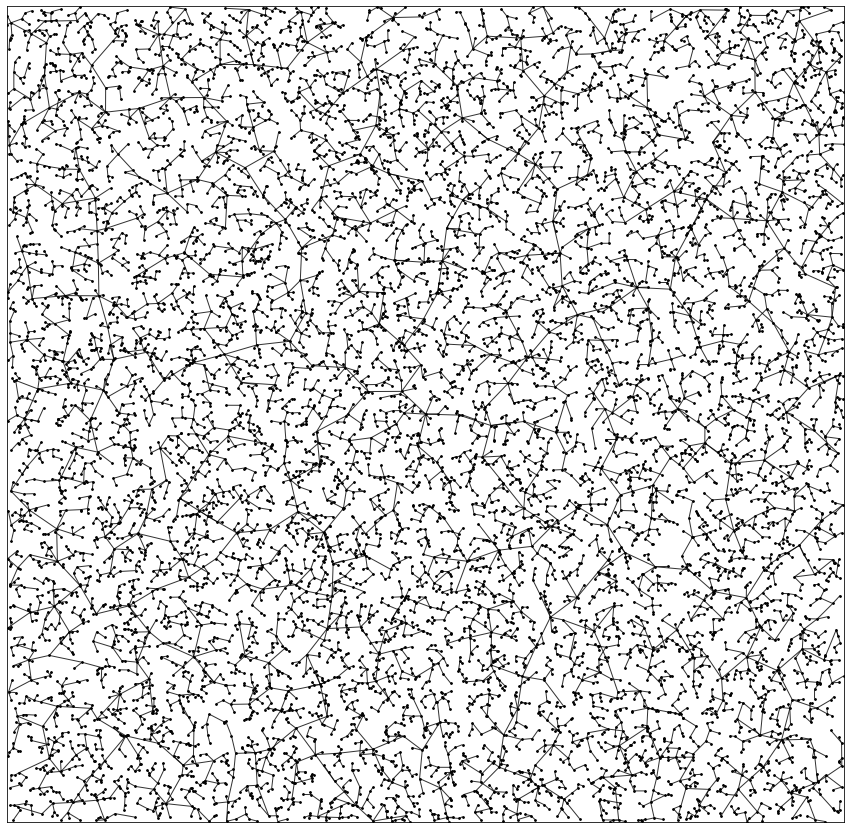

An rrt is a sequence of vertex and edge random variable pairs

defined on a compact metric space with distance function , a step-size parameter , , and an initial vertex . Each is generated in the following way: take a sequence of uniform -valued random variables and let

Define

and

An rrt at time is the subset given by

In plain terms, we start with an initial vertex and then iteratively we select a point uniformly in the space, find —the closest vertex in the tree at time —and then add a vertex-edge pair in the direction of with edge length less than or equal to . In this definition we do not check for obstacles blocking the edge as we are assuming the space to be convex.

To remove clunkiness in notation, we often leave and implicit and just write . Typically, will be the -dimensional unit cube . Also, the initial vertex is often irrelevant to our analysis.

2.2 Covering Metric Spaces

One measure of success for the rrt is whether or not the tree has grown close to every point in the space. A natural definition for “close” for an rrt is being within of the tree.

Definition 2.

Consider a compact metric space and a graph where is the set of vertices and is the set of edges. The -cover of is

where is the ball

In the case of an rrt , the cover is

Definition 3.

The covering time of a metric space by an rrt is the -valued random variable

In particular, we will analyze the expected covering time, . Unless explicitly noted, we assume that .s

3 Phase 1—Initial Growth

We begin by studying how quickly the rrt spreads. Arnold et al. [1] consider a modification to the rrt where the space is the plane , the step size is 1, and points are drawn at infinity by picking a ray from the origin with a uniformly random direction. They prove in this model that the perimeter of the convex hull of tree grows linearly in time which implies that the maximum distance from the tree to the origin also grows linearly in time. Here, we consider a related question: how long does it take an rrt to reach a convex region of positive probability?

While a number of such regions can be chosen, for ease of calculation we consider the case that we have an rrt on and the region we want to reach is the right half of the square, when Similar arguments extend to the general convex region.

Theorem 1.

For an rrt on , the expected time to reach is as .

Proof.

We will follow the vertex with greatest -value through the whole process. Consider the Voronoi region of this point. There are two cases: either there are multiple vertices with the same -value or there is a single vertex with greatest -value. If there are multiple vertices with the same -value then then we have a greater chance of useful progression so we focus on the case of a unique vertex.

Suppose we have a unique vertex with greatest -value, . Consider any other vertex in the tree, . This vertex only impacts the Voronoi region of by the perpendicular bisector of the line between and Following this, we look at where ’s Voronoi region intersects the right edge of the box, and see that it does so at the edge of the Voronoi region of some other vertex. Also, we see that the Voronoi region contains a triangle from the vertex to the intersections of its Voronoi region with .

Our goal is to find a probability of progress which we will do by looking at this triangle—more specifically by considering triangles and considering when the Voronoi region would contain this triangle. In the worst case scenario, is on the upper or lower edge of , so without loss of generality, we take to be on the upper edge.

Consider the triangle with points and for some . The first question to ask is when will the Voronoi region of not contain ? We find the region where any vertex other that inside will have a Voronoi region intersecting . Observe that for any point on the boundary of this region, the perpendicular bisector of the line between and must pass through . Writing

consider the similar triangles

and

We have the equality

which implies that the boundary points all lie on the circle whose points satisfy

which is the circle centered at with radius .

The area that could interfere with this circle is then a cap with height

Note that , and that is decreasing in , so we may upper bound with

Now we pick in such a way that any time we select a point in we make progress. Set and take . Suppose our -th uniform vertex . The easy case is that in which case we make progress of at least The more subtle case is if . Then is in the circle and since is the most advanced point, is in fact in the cap. is at least further than , but how much progress have we actually made? We must analyse the difference between the height of the cap and ’s progress.

We will show that the height of the cap is less than by a constant fraction of . We derive to get

This is increasing in and at it is , so it follows that we progress by at least

This shows that it suffices to select a point in at least times to reach the right half of the unit square. Meanwhile, the area of is at least so the expected time to select a point in is less than . It follows that the expected time to reach is less than

∎

It is worth remarking that if we repeat the analysis in and wish to reach the argument above holds with slightly different constants.

The question remains whether this upper bound is tight or not. One can imagine that in expectation either some finite number of branches shoot out rapidly towards the right half of —in this case we would have in fact —or that the tree will progress with tendrils approximately apart from each other moving forward evenly—this being the case. The question remains open.

4 Phase 2—Covering Times

4.1 Covering

We turn our attention to the covering time for in the Euclidean metric. This gives the expected run time of the algorithm to some measure of success. The following analysis does not depend on the initial vertex so we drop it. First we look at the expected covering time for an rrt on .

Theorem 2.

The expected covering time of by an rrt is

Proof.

We first find a lower bound for . We consider a critical subset of the time steps: the time steps where new area is added to the cover. We work backwards from covering. Our goal is to lower bound the amount of time required for each critical step.

Define to be the inverse of the volume of the -dimensional ball with radius :

The maximum amount of space we cover at any critical step is so the number of critical steps is at least . As well, we will argue that the probability of achieving the -th last critical step is bounded by .

Consider the final critical step of any rrt. The area added in this step is a possibly uncountable set of points contained in an -ball. Letting be any time before the final critical step and after the penultimate critical step, we note that we may only have a critical step if is within of an uncovered point. This implies that the set of points that could lead to a critical step is contained in a -ball.

This argument can be repeated inductively—at any time the set of points that could lead to a critical step is contained in a union of -balls. To be precise, the time taken for the -th last critical step is lower bounded by the geometric random variable with parameter .

It follows that the covering time can be bounded below by

This gives a bound using the -harmonic number

∎

For an upper bound we consider a grid over where each grid cell is a cube has side length . The time required for the rrt to have a vertex in each grid cell is greater than the covering time. To find upper bound we need the following lemma to bound the time required for a new cell to have a vertex.

Lemma 1.

Let be an rrt on with an grid over it. Let be the union of the cells which do not contain a vertex of at time . Then if and only if .

Proof.

Suppose that at some time the cube and . Clearly so . But since , it belongs to a cell which contains for some . It follows that

This contradicts the definition of so we have proven the claim. ∎

Now we continue to the upper bound.

Proof.

For the grid, each cell is covered by any vertex inside it. Thus the time required to have a vertex in each grid cell upper bounds . Define to be the inverse of the volume of a cell:

Suppose grid cells contain a vertex. Then, by our previous lemma, the time to add a vertex to a new cell is the time to draw a uniform random point in a new cell. This is geometric with parameter

which has expectation

It follows that

Again, the -harmonic number gives an asymptotic bound

Thus, we have both inequalities. ∎

Notice that this result also holds for modified rrt algorithms which maintain the property that the point drawn in an uncovered cube will cover some new cube.

As well, note that the lower and upper bounds given by this proof are asymptotically

and

respectively.

Consider the lower bound. If we fix and then let , the run time is , confirming that the run time is at least exponential in the dimension of the space [3].

5 Coupon Collector Problem

It is worth noticing that our upper bound argument is very similar to a classical problem. We recall the Coupon Collector Problem [14, 5]:

Theorem 3.

Suppose there exist different types of coupons and each time a coupon is drawn it is drawn independently and uniformly at random from the classes, i.e., for each coupon drawn and for each the probability that the coupon is of the -th type is . The expected time to have one of each type of coupon is asymptotically .

In our upper bound argument for the covering time of the graph we have a scenario very similar to the Coupon Collector Problem. We have divided the space into a number of cubes that we need to cover, however in our case the selection of a new cube does not guarantee that it is covered, just that some new cube is covered. In this way we see it does not matter that we are not adding the randomly selected point to our tree.

6 A Connecting Lemma—Nearest Neighbour Trees

We turn our attention to the growth of the tree after the space has been covered. From this time onward, every point of is within distance of the tree, so we will have after coverage. Our analysis between the growth of the rrt in this phase depends on the relationship between the rrt and the Nearest Neighbour Tree (nnt).

Definition 4.

An nnt is a sequence of vertex and edge random variable pairs

defined on a compact metric space with an initial vertex and . Each is generated in the following way: take a sequence of uniform -valued random variables and let

Then is added to the tree via the edge

| (1) |

An nnt at time is the subset where

Now we define a nearest neighbour process which grows onto a given tree —note that may be a random tree itself. The purpose of this is to ultimately view an rrt as the growth of a nearest neighbour tree onto the rrt at covering time.

Definition 5.

Let be a random variable in and let be a random tree embedded in a metric space with vertices and edges . Let be an nnt. The connection process of an nnt onto is the sequence of vertex and edge random variable pairs

where

and

It is worth noting that the process loses a lot of meaning if . As well, may be deterministic in which case its associated random variables are all constant.

The Connecting Lemma below links the nnt to the rrt.

Lemma 2.

Let be the process defined above. has the following properties:

-

•

Let be the distance from to its parent in and be the distance from to its parent in . Then .

-

•

Let be the height of , be the depth of in and be the depth of in . Then for ,

Proof.

The first point follows by definition. For the second, let and let

The length of the path from to is certainly less than and the path up the tree starting at ’s parent is contained in , so it follows that

∎

Put simply, the first property of the Connecting Lemma states that the distance from a node to its parent is smaller in a connection process than an nnt. The second property states that the depth of a node in a connection process is less than the depth of the node in the nnt and an term—importantly, in the case of the rrt . Armed with this lemma, we can now analyze the behaviour of the rrt after covering.

7 Phase 3—Growth After Covering

We first analyze the behaviour of the nnt and then relate it to the rrt via the connecting lemma. In the case of an rrt, and .

7.1 Euclidean Distances

Let be a random variable with distribution

We begin with a Lemma on the nnt.

Lemma 3.

Let be an nnt. Then obeys the following inequality

Proof.

If , then all lie outside the ball . It follows by independence that

Considering then gives

Taking the limit, we get

This is the distribution of , so

This gives our result. ∎

Now we use this lemma for a result on the rrt.

Theorem 4.

Let be an rrt. Then obeys the following inequality

Proof.

Consider , the covering time of . Recall that is finite with probability 1. Define Then we have

Now, by the Connecting Lemma,

For the second term, by Cauchy-Schwarz,

and since

it follows that

Next we split the first term. By the Connecting Lemma and Cauchy-Schwarz

In the second term above, recall that can be upper bounded by a finite sum of independent random variables. Clearly , so it follows that

Now we split the first term again, this time for values of around . For

Let . At any time before covering the probability of failure is bounded above by while success we can bound trivially by 1. As well, in order to have we must have at least failures in the first steps, so we have the following bound on the first term above:

Finally, we find a bound on the most significant term. Note that for

Combining the above inequalities gives gives us that

Taking the limit we get our desired result

∎

This quickly gives a result on the tree that is more tangible.

Corollary 1.

Let be an rrt and let , the total Euclidean path length at time . Then

Proof.

By Theorem 4, we know that

It follows that

∎

Our final result on Euclidean distances concerns the path from a single node to the root.

Theorem 5.

Let be an rrt and let be the Euclidean path length from to . Then

Proof.

First, we observe a useful equality where is the event that is an ancestor of and is the indicator function:

Now cover with a grid of side length . Letting be the grid square containing and letting be the event that , we get the inequality

Next, sort the nodes by distance to . Then by this sorting is the event that is a record, so [7]. Furthermore, is independent of the event . It follows that

∎

7.2 Height and Depth

Next, we consider the height of the tree and depth of the -th node. We note the similarity with the analysis of the height of a binary search tree [4].

To begin, we analyze the height of the nnt.

Lemma 4.

Let be an nnt, let be the depth of , and let be the height of . For every , we have

Proof.

Observe that the index of ’s parent is uniformly distributed on due to independence. Stated another way, it is distributed as where is uniform on Iterating this process will take us to ’s parent, then grandparent, then eventually the root. It follows that

where the ’s are independent. For a useful bound on the , we upper bound by removing the floors and using Markov’s inequality:

When , the infimum is achieved at

It follows that

If , then with

we have

Note that for , . It follows that

Next, we can bound the height of the tree by

If , then

∎

The Connecting Lemma extends this result to the rrt.

Theorem 6.

Let be an rrt, let be the depth of , and let be the height of . For every , we have

Proof.

Simply observe that by the Connecting Lemma

It follows that

The result for follows similarly. ∎

We give a bound on the expectation as well. Again, we start with a lemma on the nnt.

Lemma 5.

Let be an nnt, let be the depth of , and let be the height of . Then

Proof.

Note that for fixed,

For the third term, note that due to our bound in Lemma 4, for ,

For the second term, we have the bound

Again, by Lemma 4,

Thus,

For and fixed we have similar bounds:

We have already shown the third term is , so we turn our attention to the second term. We again use a bound from Lemma 4 to get

Thus,

∎

The Connecting Lemma also allows an easy extension of this result to the rrt.

Theorem 7.

Let be an rrt, let be the depth of , and let be the height of . Then

Proof.

By the Connecting Lemma

The result for follows similarly. ∎

8 Future Work

There is a lot of work to be done using this framework. Immediate questions we are working on are:

-

•

Is the bound on reaching a convex region of positive probability tight? As the question is open, there remains hope that the expected time is in fact its trivial lower bound, . Further, how long does it take the tree to reach the boundary of the space?

-

•

What is the expected run time of rrt-Connect? Finding a path should be much faster than finding a single point, and growing two trees into each other should greatly improve the performance.

-

•

How does the performance change when barriers are added? For a specific space it is relatively easy to use this framework to determine an expected run time, but what can be said about a general space?

Our approach for the question of barriers will be to study random barriers generated by percolation models and determine expected run times on these spaces. Specifically, we will study site percolation as presented by Broadbent and Hammersly [2] and lilypad percolation as presented by Gilbert [6].

For site percolation, we take the space and drop a grid on it of side length . For chosen probability —which we will vary to model spaces with different quantities of barriers—we will place a barrier at each grid cube. We then run the rrt on the largest connected component of the space we have created and ask ourselves the same questions as we have asked for : what is the expected covering time of the connected component? What is the expected time for the rrt to spread through the space? What is the expected run time of rrt-Connect?

For lilypad percolation we fill the space with —again, we will vary —discs whose radius is a function of and whose centers are chosen uniformly and independently in .

9 Conclusion

Our results prove the conjectured performance of the rrt, answer some questions on its performance, and give a greater understanding to its behaviour while providing a framework for further analysis of the tree.

To summarize, we have split the growth of the rrt into three phases: initial growth, covering behaviour, and post covering growth. In the initial phase we have studied the time it takes the rrt to reach a convex area of positive probability, furthering the study of Arnold et al. [1] on how fast the tree spreads through a space .

In the covering phase we have determined the run time the tree needs to grow close to every point in the space. We have found that with step size the run time of the algorithm as is in expectation

and that fixing the expected run time is . This confirms the conjecture that the run time is worse than exponential in the dimension and provides a good understanding of the performance as a function of step size.

In the post-covering phase we have used a relationship the the Nearest Neighbour Tree to examine its behaviour. As a result, we know that asymptotically the path length and total Euclidean path length are both . As well, the expected depth of the -th node and height of the tree at time are both .

References

- [1] Maxim Arnold, Yuliy Baryshnikov and Steven M LaValle “Convex Hull Asymptotic Shape Evolution” In Algorithmic Foundations of Robotics X Springer, 2013, pp. 349–364

- [2] Simon R Broadbent and John M Hammersley “Percolation Processes: I. Crystals and Mazes” In Mathematical Proceedings of the Cambridge Philosophical Society 53.3, 1957, pp. 629–641 Cambridge University Press

- [3] Sébastien Dalibard and Jean-Paul Laumond “Control of Probabilistic Diffusion in Motion Planning” In Algorithmic Foundation of Robotics VIII Springer, 2009, pp. 467–481

- [4] Luc Devroye “A Note on the Height of Binary Search Trees” In Journal of the ACM (JACM) 33.3 ACM, 1986, pp. 489–498

- [5] Philippe Flajolet, Danièle Gardy and Loÿs Thimonier “Birthday Paradox, Coupon Collectors, Caching Algorithms and Self-organizing Search” In Discrete Appl. Math. 39.3 Amsterdam, The Netherlands, The Netherlands: Elsevier Science Publishers B. V., 1992, pp. 207–229

- [6] Edward N Gilbert “Random Plane Networks” In Journal of the Society for Industrial and Applied Mathematics 9.4 SIAM, 1961, pp. 533–543

- [7] Ned Glick “Breaking Records and Breaking Boards” In The American Mathematical Monthly 85.1 Mathematical Association of America, 1978, pp. 2–26

- [8] Fahad Islam et al. “RRT*-Smart: rapid Convergence Implementation of RRT* Towards Optimal Solution” In 2012 IEEE International Conference on Mechatronics and Automation, 2012, pp. 1651–1656 IEEE

- [9] Sertac Karaman and Emilio Frazzoli “Sampling-Based Algorithms for Optimal Motion Planning” In The International Journal of Robotics Research 30.7 Sage Publications Sage UK: London, England, 2011, pp. 846–894

- [10] Lydia E Kavraki, Petr Svestka, J-C Latombe and Mark H Overmars “Probabilistic Roadmaps for Path Planning in High-Dimensional Configuration Spaces” In IEEE Transactions on Robotics and Automation 12.4 IEEE, 1996, pp. 566–580

- [11] James Kuffner and Steven LaValle “RRT-Connect: An Efficient Approach to Single-Query Path Planning.” In IEEE International Conference on Robotics and Automation 2, 2000, pp. 995–1001

- [12] Steven M. LaValle “Rapidly-Exploring Random Trees: A New Tool for Path Planning”, 1998

- [13] Steven M LaValle and James J Kuffner Jr “Randomized Kinodynamic Planning” In The International Journal of Robotics Research 20.5 SAGE Publications, 2001, pp. 378–400

- [14] Rajeev Motwani and Prabhakar Raghavan “Randomized Algorithms” Cambridge University Press, 1995

- [15] John H. Reif “Complexity of the Generalized Mover’s Problem”, 1985

- [16] John H Reif “Complexity of the Mover’s Problem and Generalizations” In 20th Annual Symposium on Foundations of Computer Science (SFCS 1979), 1979, pp. 421–427 IEEE