Proactive Network Maintenance using Fast, Accurate Anomaly Localization and Classification on 1-D Data Series

Abstract

Proactive network maintenance (PNM) is the concept of using data from a network to identify and locate network faults, many or all of which could worsen to become service failures. The separation between the network fault and the service failure affords early detection of problems in the network to allow PNM to take place. Consequently, PNM is a form of prognostics and health management (PHM).

The problem of localizing and classifying anomalies on 1-dimensional data series has been studied for years. We introduce a new algorithm that leverages deep convolutional neural networks to detect anomalies and events on data series efficiently and accurately; it reaches 97.82% mean average precision (mAP) in our evaluation.

Index Terms:

machine learning, pattern recognition, anomaly detection, proactive network maintenance

I Introduction

PNM is a concept created around 10 years ago in the cable industry to help manage operations costs as service providers turned more attention toward bi-directional services. These data services needed resiliency mechanisms, which in turn provided opportunities for PNM. Receive modulation error ratio (RxMER) is a key data element for finding problems in the network. It is a measurement of the signal-to-noise ratio on a per-sub-carrier basis. Each sub-carrier periodically carries a calibrated pilot signal, which provides an opportunity to measure a calibrated signal level and calculate a signal-to-noise ratio. The result is a 1-dimensional data series of measurements over a span of frequencies. For most cable operators today, however, translating these data into a decision about whether an impairment exists in the signal, how severe it is, and where it might be located has been left to experts, who at times disagree. By introducing machine learning techniques to the problem, the industry believes that this step in the process can be automated and improved.

There are many challenges in detecting patterns in data series. For instance, in anomaly detection on time series, traditional methods such as auto-regressive integrated moving average (ARIMA) and sliding-window median are useful for detecting sudden changes in the data series, but they require well-defined thresholds and specifically designed window sizes. These algorithms also cannot recognize the actual patterns of the anomalies and produce meaningful labels. Newer methods such as long short-term memory (LSTM) networks can also be used to detect patterns or anomalies in data series. However, compared to a convolutional neural network (CNN), an LSTM normally has more parameters, takes more time and resources to train, and tends to overfit the training dataset. One of our previous papers[16] uses a compound sliding-window median algorithm for region proposal generation and a fully-connected neural network for classification. However, the region proposal module of that model is based on smoothing algorithms and cannot detect anomalies accurately. In addition, the input of its classification network pads the data around each proposed anomaly, which significantly increases the amount of data being processed and results in poor inference performance.

The object detection literature has shown significant progress in recent years. Region proposal-based object detection algorithms such as Faster R-CNN[1] and regression-based single-shot algorithms such as YOLO[2][3], SSD[4], and RetinaNet[5] show great performance on object detection problems. Since image data are far more complex than data series, an architecture that succeeds on images should have ample capacity for 1-D data; we therefore adopt the architecture of YOLOv3[3] and develop a single-shot anomaly detector that recognizes anomalies and events on data series efficiently and accurately. This novel algorithm uses a fully-convolutional neural network with custom feature aggregation and prediction layers to perform localization and classification in a single step, which significantly improves localization accuracy and classification performance compared to the previous research[16]. We have demonstrated the effectiveness of our algorithm in use cases such as detecting impairments in cable modems' Orthogonal Frequency-Division Multiplexing (OFDM) and Orthogonal Frequency-Division Multiple Access (OFDMA) RxMER data.

II Background

II-A DOCSIS® Specification Context

The DOCSIS protocol enables two-way radio-frequency (RF) communication over coax, a technology providing much of the access network for entertainment programming and internet access for the world, not to mention a large amount of networking for businesses.

It relies on analog RF transmission of data and, depending on the version and implementation, uses a mix of modulation methods, including single-carrier quadrature amplitude modulation (SC-QAM, DOCSIS 3.0) and OFDM/OFDMA (DOCSIS 3.1).

For a long time, it has included several resiliency mechanisms including Forward Error Correction (FEC), adjustable profiles with differing data rates per frequency carrier, echo cancellation, and equalization. These resiliency mechanisms adjust service to account for plant problems that create impairments to the RF transmission signal. When these adjustments are made, it indicates in many cases an imperfect transmission medium. When this imperfection goes beyond the designed and installed quality level, it indicates network (plant) damage or degradation. This mechanism therefore can be monitored to indicate changes or problems in the network (plant). The operator can know of a problem before the customer is impacted. When a problem is indicated, a proactive repair opportunity may be created. PNM is the industry term for addressing plant problems before service is impacted.

II-B Proactive Network Maintenance Description

PNM was envisioned in the cable industry more than a decade ago, when DOCSIS networks were relatively new, and resiliency mechanisms were creating the opportunity[14]. Several measures were identified based on then-understood failure modes and risks associated with deployed technology. At the time, for example, analog optics were common for feeding the coax plant, so in some systems optical clipping was more common than it is today with digital optical systems. But the coax plant remains and will for some time, bringing with it several failure modes that result in RF transmission issues in certain frequencies, and impact on subsequent layers of the communication connection.

General components and their failure modes in the outside plant that can appear as impairments in the RF signal include, but are not limited to, the following:

- Hard line or drop cable – shield, sheath, conductor, insulator
- Connector – center conductor, shield, thread
- Tap – cover, connector, electronics
- Splitter, combiner, coupler – cover, connector, electronics
- Filter – cover, connector, electronics
- Splice – wrap, fill, shield connection, conductor connection, strap
- Amplifier – cover, connector, electronics
- Power Supply – cover, connector, electronics
- Anchoring – various types such as straps, guy wires, and more
- Node – cover, connector, electronics

General failure modes of the cable plant that impact the RF signal include the following:

- Passive – loose or misaligned connection, corroded, poor installation, damage, degradation, wet, cracked, loose and moving, incorrect part.
- Active – poorly made, poorly installed, electrostatic discharge (ESD), lightning, ground fault, degradation or wear out, damaged, incorrect part.

Measurements identified for proactive network maintenance in the specification include the following, though some of these measurements are more general and not specifically identified for PNM:

- Downstream spectrum capture
- Downstream symbol capture
- Downstream channel estimation coefficient
- Downstream constellation display
- Downstream receive modulation error ratio (RxMER) per sub-carrier
- Downstream forward error correction (FEC) summary
- Downstream required quadrature amplitude modulation (QAM) MER
- Downstream histogram
- Downstream modulation profile
- Upstream pre-equalization
- Upstream spectrum capture
- Upstream RxMER per sub-carrier
- Upstream FEC summary
- Power level

For the version of the anomaly detector described in this paper, we will focus on downstream RxMER per sub-carrier as the measure used. While not a complete solution for identifying and removing RF impairments in coax networks, it is an important measure and can address a very significant part of PNM. RxMER per subcarrier is a measure of the signal to noise of each downstream frequency used to carry data to the cable modems. The cable modem can report these data because it monitors the frequencies over which it receives data, and those frequencies occasionally carry calibrated pilot signals. Those signals are known, so they can be compared against a received signal to determine a signal to noise ratio. Ideal signals are mostly flat across all subcarriers, with small amounts of variation acceptable. More detail about this measure can be found in[8][9][10][11][12]. Many impairments show up in RxMER per sub-carrier data, visually, statistically, and otherwise[11].
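RxMER per sub-carrier compares received pilot symbols against their known values. The sketch below computes a per-sub-carrier MER in dB as the ratio of reference-symbol power to error-vector power, averaged over several pilot symbols. It is a minimal illustration under stated assumptions: the helper name `rxmer_db`, the array shapes, the unit-power pilots, and the synthetic noise levels are all hypothetical, and a real cable modem reports RxMER as defined in the DOCSIS 3.1 specifications[8][9] rather than through this code.

```python
import numpy as np

def rxmer_db(pilots: np.ndarray, received: np.ndarray) -> np.ndarray:
    """Per-sub-carrier MER in dB (hypothetical helper).

    pilots:   known transmitted pilot symbols, shape (n_symbols, n_subcarriers)
    received: equalized received symbols at the same positions, same shape
    """
    error = received - pilots                              # error vector per symbol
    signal_power = np.mean(np.abs(pilots) ** 2, axis=0)    # average over pilot symbols
    error_power = np.mean(np.abs(error) ** 2, axis=0)
    return 10.0 * np.log10(signal_power / error_power)

rng = np.random.default_rng(0)
n_symbols, n_subcarriers = 64, 1900
# Unit-power pilots with random phases (assumed; real pilot patterns are specified by DOCSIS).
pilots = np.exp(1j * rng.uniform(0, 2 * np.pi, (n_symbols, n_subcarriers)))
noise_std = np.full(n_subcarriers, 0.03)
noise_std[900:950] = 0.3          # a hypothetical band of heavily impaired sub-carriers
# Complex Gaussian noise whose average power per sub-carrier is noise_std ** 2.
noise = (rng.normal(size=pilots.shape) + 1j * rng.normal(size=pilots.shape)) * noise_std / np.sqrt(2)
mer = rxmer_db(pilots, pilots + noise)
```

With the assumed noise levels, the unimpaired sub-carriers come out near 30 dB and the impaired band near 10 dB, producing the kind of localized dip that the detector described later is trained to find.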

Impairments that appear in the spectrum (and in RxMER per sub-carrier data) are classified in the cable industry as follows:

- Standing waves – when an RF signal transmits through coax and encounters an impedance change, the signal loses strength in the forward direction, and an echo returns in the opposite direction. If there are two impedance mismatches in the line, an echo tunnel can form, causing repeating echo signals. When the impedance mismatches are stable, the echo tunnel appears in the RF signal as a standing wave, which impairs the signal in at least two ways: energy loss due to the impedance mismatches, and noise from the echo. Echo cancelers can take care of some of the impact at some points, but only if there is a signal with which to cancel. Power levels can adjust somewhat for fixed signal level effects, but not in all cases.
- Resonant peaking – sometimes frequency data show certain frequencies with higher energy than others. We have not seen this in RxMER per sub-carrier data, and due to the nature of the measurement we do not expect to encounter resonant peaking, though the same pattern may appear in the data when other frequencies experience impairments.
- LTE or FM ingress – when there are opens in the cable plant, RF from the outside can get into the cable network. FM and LTE cellular signals (like other VHF and UHF services) overlap the spectrum used in DOCSIS networks, so they can appear as noise when the cable plant is open due to shield failures (shield integrity problems).
- Suck-out – an ideal cable plant transmits evenly on all frequencies, but actual cable plant transmits better at lower frequencies than at higher ones, so amplification is adjusted to make it behave more ideally. When not adjusted for in transmission, this typically appears as a slope in the power levels. However, grounding issues in the cable plant can lead to some frequencies being highly attenuated when they should not be. These appear as a rapid drop in a band of frequencies, as if the energy were being sucked out of the system at those frequencies. The affected frequencies become unusable when the suck-out is severe enough, and otherwise must at least be compensated for. Because these problems can come and go with time and conditions, and often worsen over time, they are a perfect opportunity for PNM.
- Roll-off – at the edge of a frequency band, frequencies are attenuated more strongly the closer they are to the edge. This is called a roll-off, and it makes frequencies near that edge unusable.
- Filter – band filters in the plant can keep noise from getting into the system, but sometimes they unintentionally attenuate frequencies at band edges. Finding and removing these issues improves service.
- Adjacency, second-order distortion, and phase distortion are less common. Adjacency may appear as a step in the data over some sub-carriers. Second-order distortion may appear as noise in RxMER per sub-carrier and could potentially be captured by correlating with carrier signals whose energy appears in other frequencies, though detecting this impairment type requires correlating with the sent signal. Similarly, detecting phase distortion requires more than the magnitude data that RxMER per sub-carrier provides, potentially correlation with the complex I and Q values of the signal.
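The wave pattern produced by a standing wave can be illustrated with an idealized two-path channel: a direct signal plus one echo with relative amplitude `a` and delay `tau`. The magnitude of the frequency response then ripples with a period of `1 / tau` in frequency, which is why echoes show up as waves across the sub-carriers. The sketch assumes this simplified model, a 50 kHz sub-carrier spacing, and hypothetical echo parameters; it is not a model of any specific plant.

```python
import numpy as np

def echo_response_db(freqs_hz, a, tau_s):
    """|H(f)| in dB for H(f) = 1 + a * exp(-j*2*pi*f*tau): a direct path plus one echo."""
    h = 1.0 + a * np.exp(-2j * np.pi * freqs_hz * tau_s)
    return 20.0 * np.log10(np.abs(h))

spacing_hz = 50e3                        # assumed OFDM sub-carrier spacing
freqs = np.arange(1900) * spacing_hz     # frequency of each sub-carrier relative to the first
a, tau = 0.1, 1e-6                       # hypothetical echo: -20 dB, 1 microsecond delay
ripple_db = echo_response_db(freqs, a, tau)

# The ripple repeats every 1 / tau = 1 MHz, i.e. every 20 sub-carriers at this spacing,
# swinging between 20*log10(1 + a) and 20*log10(1 - a).
period_subcarriers = round(1.0 / (tau * spacing_hz))
```

A longer echo delay (a longer echo tunnel) gives a shorter ripple period, and a stronger echo gives a deeper ripple.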

As access networks are physically a tree structure, an impairment has a different impact on customers connected at different places in the plant. Taps include filters that protect customers from some issues. Because RxMER measures the signal-to-noise ratio received by the cable modem, and only on the pilot signals (which have a known energy level), not all network problems will be discovered with RxMER. Further, due to the design of the cable plant with diplex filters and taps, some impairments further downstream from a given cable modem may not impact that cable modem. These features can sometimes help localize the problem.

The distinction between a network fault and a service failure is much like the distinction between a software fault and a software execution failure. In software, a fault may not lead to a failure in all cases, and the faulty code may be executed rarely or never in an application. Likewise, a network fault may be hidden by the resiliency mechanisms that come with DOCSIS networks and well-designed cable plant, or the impacted frequencies may be left unused or used at lower data rates to compensate, so that service is not impacted. Yet these impairments can grow to impact service as a failure, or service conditions can change such that the fault becomes a service failure.

II-C ProOps Environment

We created the Proactive Operations[12][13] (ProOps) platform to automate the detection of PNM issues within operations processes that turn the data into action. This platform runs code modules, referred to as workers, that process input data to produce statistics as output. The input can be raw data, statistics, processed data, or otherwise, and the output can be any statistic, including a hard decision. We recommend, but do not require, a four-layer architecture for organizing the workers in ProOps, with each worker fulfilling one or more of the following layers:

- Observe – collect data from the network elements (usually cable modems identified by MAC address) and process them into soft decisions or statistics for further analysis.
- Orient – analyze the data from the observe layer and decide what additional data are needed, for example more frequent collection of some data, or a broader scope of collection such as over neighbors or over longer periods of time.
- Decide – analyze the data, statistics, and soft decisions from the observe and orient layers to turn anomalies found in the data into network impairments, by classifying anomalies, clustering the data from the network elements, and applying network health or severity measurements to quantify the indicated problems.
- Act – organize the found problems by severity or network health score so that the operator can select which issues deserve attention and what type of human intervention or further work is needed, whether by a field technician or someone in a network operations center.

ProOps is depicted in Figure 1 below, with the four layers represented. Also shown are the configuration interface, control and scheduling functions, the work queue for workers to process, and the various types of data stores used. The results can be observed on a dashboard, which can include maps showing impairments colored by severity, or graphs of the network health or impact of impairments that rise to attention, for example.

ProOps has been built with several types of workers and can be configured to use them in various ways. In this paper, we limit the discussion to the anomaly detector, which processes RxMER data from cable modems and identifies anomaly types at the first layer. The next layer collects additional information to help quantify the severity of the found anomalies. The third layer matches impairment patterns through simple clustering and calculates a severity. The final layer presents a list of the identified impairments to be addressed, sorted from most to least severe. At the time of writing, the clustering mechanism is the only part not yet built; we anticipate using k-means or a similar matching algorithm. The focus of this paper is the anomaly detection worker, which is very efficient and effective at this particular problem.
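To illustrate how such a layered worker chain might be wired together, the sketch below passes findings through four workers, one per layer, and ends with the severity-sorted list described above. Everything in it is hypothetical: the function names `observe`, `orient`, `decide`, and `act`, the `Finding` record fields, and the per-type severity weights are placeholders chosen for illustration, not the ProOps API or its scoring.

```python
from dataclasses import dataclass, field

@dataclass
class Finding:
    """A hypothetical record passed between workers."""
    mac: str
    anomaly: str
    width: float                      # proportional width reported by the detector
    extra: dict = field(default_factory=dict)
    severity: float = 0.0

def observe(captures):
    # Layer 1 (hypothetical): turn anomaly-detector output per modem into findings.
    return [Finding(mac, label, width) for mac, detections in captures.items()
            for label, width in detections]

def orient(findings):
    # Layer 2 (hypothetical): attach additional data needed to judge severity.
    for f in findings:
        f.extra["affected_fraction"] = f.width
    return findings

def decide(findings):
    # Layer 3 (hypothetical): score each finding by impairment type, a stand-in
    # for the clustering and severity calculation described in the text.
    weights = {"suck-out": 3.0, "LTE ingress": 2.0, "wave": 2.0, "roll-off": 1.0, "spike": 0.1}
    for f in findings:
        f.severity = weights.get(f.anomaly, 1.0) * f.extra["affected_fraction"]
    return findings

def act(findings):
    # Layer 4: present impairments sorted from most to least severe.
    return sorted(findings, key=lambda f: f.severity, reverse=True)

captures = {"00:11:22:33:44:55": [("suck-out", 0.05), ("spike", 0.001)],
            "66:77:88:99:aa:bb": [("wave", 0.6)]}
ranked = act(decide(orient(observe(captures))))
```

Keeping each layer as an independent function mirrors the idea that workers only exchange data and statistics, so any layer can be replaced (for example, swapping in k-means for the scoring step) without touching the others.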

III Model Architecture

Fig.2 shows the high-level architecture of our model, including the 1-dimensional convolutional layers, route layers, up-sampling layers, and 3 prediction layers. The shortcut layers, activation layers, and batch normalization layers are omitted from the diagram for simplicity.

III-A Backbone Network

The backbone network consists of 1-D convolutional layers with 1-D filters for feature extraction and shortcut layers for feature aggregation. The first 3 convolutional layers have only 4 filters with kernel size 3, and the maximum number of filters in the following layers is 64. This is because 1-dimensional data have much simpler features than images, so a smaller network can reach high mAP with fast inference and low resource consumption. When the kernel size is greater than 1, zero padding is applied on both sides of the sample before convolution, so that a stride-1 convolution preserves the feature map length and a stride-2 convolution halves an even length; feature map positions therefore stay aligned with positions in the input.

The shortcut layers add the feature map from the previous layer to the feature map of the layer they point to. As we learned in our previous study[6], this has multiple benefits, such as smoothing the optimization during training and keeping the model from being attracted to spurious local optima.
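The padding rule and the shortcut layer can be sketched in plain NumPy as below. This is a minimal, unoptimized illustration, not the authors' implementation: the helper names `conv1d_same` and `shortcut` are hypothetical, the weights are random, and the stack of five stride-2 convolutions is an assumption inferred from 416 / 13 = 32 = 2^5, consistent with the 13 grids of the first prediction layer in Table I.

```python
import numpy as np

def conv1d_same(x, weight, bias, stride=1):
    """1-D convolution with kernel_size // 2 zeros padded on both sides.

    x: (in_channels, length); weight: (out_channels, in_channels, kernel_size)
    """
    out_ch, in_ch, k = weight.shape
    pad = k // 2                                      # 0 when the kernel size is 1
    xp = np.pad(x, ((0, 0), (pad, pad)))              # zero padding on both sides
    out_len = (xp.shape[1] - k) // stride + 1
    out = np.empty((out_ch, out_len))
    for i in range(out_len):
        window = xp[:, i * stride : i * stride + k]   # (in_channels, kernel_size)
        out[:, i] = np.tensordot(weight, window, axes=([1, 2], [0, 1])) + bias
    return out

def shortcut(a, b):
    """Shortcut layer: element-wise addition of two same-shaped feature maps."""
    return a + b

rng = np.random.default_rng(0)
x = rng.normal(size=(1, 416))                         # one input channel, 416 values
w = rng.normal(size=(4, 1, 3)) * 0.1                  # 4 filters, kernel size 3
y = conv1d_same(x, w, np.zeros(4))                    # stride 1 keeps the length at 416
z = shortcut(y, conv1d_same(y, rng.normal(size=(4, 4, 3)) * 0.1, np.zeros(4)))

# Five stride-2 convolutions reduce 416 -> 208 -> 104 -> 52 -> 26 -> 13.
h, lengths = z, []
for _ in range(5):
    h = conv1d_same(h, rng.normal(size=(4, 4, 3)) * 0.1, np.zeros(4), stride=2)
    lengths.append(h.shape[1])
```

Because the padding keeps lengths predictable, the intermediate lengths 52, 26, and 13 line up with the grid counts of the three prediction layers.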

III-B Route Layers

The Route layers are used for feature concatenation. When a route layer only points to one previous layer, the feature map output from the previous layer is forwarded as the output of the route layer. When a route layer points to multiple previous layers, their feature maps are concatenated and forwarded as the output of the route layer. In our network, features from lower level layers that contain more original information are concatenated with outputs from higher level layers to provide more information to the prediction layer to improve mAP.

III-C Up-sampling Layers

The up-sampling layers are used to increase the size of the feature map from the previous prediction layer (the layer that predicts at larger grids) to match the size of the forwarded feature map from lower level layers. In our network we use a nearest neighbor interpolation algorithm for the up-sampling process.
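The route and up-sampling steps described above can be sketched together: a coarse feature map is up-sampled by a factor of 2 with nearest-neighbor repetition and then concatenated along the channel axis with a lower-level map of matching length. The helper names and channel counts below are hypothetical and chosen only to make the shapes easy to follow.

```python
import numpy as np

def upsample_nearest(x, factor=2):
    """Nearest-neighbor up-sampling along the length axis: (C, L) -> (C, L * factor)."""
    return np.repeat(x, factor, axis=1)

def route(*feature_maps):
    """Route layer: forward a single map, or concatenate several along the channel axis."""
    if len(feature_maps) == 1:
        return feature_maps[0]
    return np.concatenate(feature_maps, axis=0)

deep = np.arange(2 * 13, dtype=float).reshape(2, 13)   # coarse map: 2 channels, 13 grids
shallow = np.ones((4, 26))                             # lower-level map: 4 channels, 26 grids
merged = route(upsample_nearest(deep), shallow)        # -> 6 channels, 26 grids
```

Concatenation (rather than addition, as in the shortcut layers) keeps the lower-level features intact as separate channels for the next prediction layer.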

III-D Activation Layers

Activation functions are implemented as layers in our network. All 1-dimensional convolutional layers are followed by Leaky Rectified Linear Unit (Leaky ReLU) activation layers, except the 3 convolutional layers immediately before the 3 prediction layers. The Leaky ReLU activation is calculated as

$$ f(x) = \begin{cases} x, & x > 0 \\ \alpha x, & x \le 0 \end{cases} \tag{1} $$

where $\alpha$ is 0.1 in our network.

III-E Batch Normalization Layers

We use batch normalization layers[7] in between 1-dimensional convolutional layers to normalize the input layer by adjusting and scaling the activations. Batch normalization improves the training speed, stability, and mAP of our network.
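The convolution, batch normalization, and Leaky ReLU sequence can be sketched as follows for a mini-batch of 1-D feature maps. This is a minimal training-mode illustration: statistics are computed per channel over the batch and length axes, the running averages a framework would use at inference are omitted, and the helper names are hypothetical.

```python
import numpy as np

def batch_norm_1d(x, gamma, beta, eps=1e-5):
    """Training-mode batch normalization for x of shape (batch, channels, length)."""
    mean = x.mean(axis=(0, 2), keepdims=True)      # per-channel mean
    var = x.var(axis=(0, 2), keepdims=True)        # per-channel variance
    x_hat = (x - mean) / np.sqrt(var + eps)
    return gamma[None, :, None] * x_hat + beta[None, :, None]

def leaky_relu(x, alpha=0.1):
    """Leaky ReLU of Eq. (1) with alpha = 0.1."""
    return np.where(x > 0, x, alpha * x)

rng = np.random.default_rng(1)
features = rng.normal(loc=5.0, scale=3.0, size=(32, 4, 416))   # mini-batch of 32 samples
out = leaky_relu(batch_norm_1d(features, np.ones(4), np.zeros(4)))
```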

III-F Prediction Anchors

Instead of predicting the start, end, and the center of anomalies directly, we use anchors which are a set of hand-picked priors[1] that the prediction layers use as references. This simplifies the localization problem and makes it easier for the network to learn[2]. With this, the center prediction becomes

$$ b_x = \sigma(t_x) + c_x \tag{2} $$

where $b_x$ is the final value of the predicted anomaly center, $\sigma(t_x)$ is the network predicted value calculated with the sigmoid function

$$ \sigma(t) = \frac{1}{1 + e^{-t}} \tag{3} $$

and $c_x$ is the position (offset) of the grid that is performing prediction, where a grid is defined as a prediction unit that takes input data from one feature map channel and handles all anomalies whose center points fall inside it. The width prediction of the anomaly becomes

$$ b_w = p_w e^{t_w} \tag{4} $$

where $b_w$ is the final value of the predicted anomaly width, $e^{t_w}$ is the network predicted value calculated with the exponential function, and $p_w$ is the width of the current anchor.
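The anchor-based decoding of Eqs. (2) to (4) can be sketched for one prediction layer as below. It is a minimal illustration under assumptions: the raw outputs are laid out as (grids, anchors), $c_x$ is taken to be the integer index of each grid (so $b_x$ is in grid units and dividing by the number of grids gives a proportional center), and widths are made proportional by dividing by the input size of 416. The function name `decode` is hypothetical.

```python
import numpy as np

def sigmoid(t):
    return 1.0 / (1.0 + np.exp(-t))

def decode(t_x, t_w, anchors, input_size=416):
    """Decode raw outputs of one prediction layer into proportional centers and widths.

    t_x, t_w: raw network outputs, shape (num_grids, num_anchors)
    anchors:  anchor widths in input samples, shape (num_anchors,)
    """
    num_grids = t_x.shape[0]
    c_x = np.arange(num_grids)[:, None]                  # grid offsets, broadcast over anchors
    b_x = sigmoid(t_x) + c_x                             # center in grid units
    b_w = np.asarray(anchors)[None, :] * np.exp(t_w)     # width in input samples
    return b_x / num_grids, b_w / input_size             # proportional center and width

# First prediction layer of Table I: 13 grids, anchors 155, 234, 416.
t_x = np.zeros((13, 3))
t_w = np.zeros((13, 3))
centers, widths = decode(t_x, t_w, [155, 234, 416])
```

With all-zero raw outputs, every prediction sits in the middle of its grid and keeps exactly its anchor width, which shows why the sigmoid keeps each center inside its own grid while the exponential lets the width scale freely around the anchor.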

III-G Anchor Calculation

We use both K-Means and kernel density estimation (KDE) algorithms to calculate proper anchor sizes. This lets the sizes of the prediction anchors fit the distribution of anomaly widths in the datasets as closely as possible, which makes the problem easier for our network to learn. In our experiments with cable modems' downstream RxMER data, anomaly widths range from 2 to 416 (with input size 416); the resulting anchor sizes are shown in Table I.

Table I: Anchor sizes for each prediction layer (input size 416)

| Prediction Layer | Anchor Sizes |
|---|---|
| Layer 1 (13 prediction grids) | 155, 234, 416 |
| Layer 2 (26 prediction grids) | 43, 73, 109 |
| Layer 3 (52 prediction grids) | 2, 8, 23 |
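A minimal sketch of the K-Means part of the anchor calculation is shown below. It assumes the 1 - IoU distance popularized by YOLOv2 and an IoU that ignores center locations (for two widths, the smaller divided by the larger); the median update, the quantile initialization (chosen here for determinism), and the synthetic widths are all assumptions, and the KDE step is not shown.

```python
import numpy as np

def width_iou(widths, anchors):
    """IoU of 1-D segments sharing the same center: min(w, a) / max(w, a)."""
    w = widths[:, None]
    a = anchors[None, :]
    return np.minimum(w, a) / np.maximum(w, a)

def kmeans_anchors(widths, k=9, iters=100):
    """Cluster anomaly widths with distance 1 - IoU and return sorted anchor widths."""
    widths = np.asarray(widths, dtype=float)
    anchors = np.quantile(widths, np.linspace(0.05, 0.95, k))   # deterministic initialization
    for _ in range(iters):
        assign = np.argmax(width_iou(widths, anchors), axis=1)  # nearest anchor = highest IoU
        new = np.array([np.median(widths[assign == j]) if np.any(assign == j) else anchors[j]
                        for j in range(k)])                     # keep empty clusters unchanged
        if np.allclose(new, anchors):
            break
        anchors = new
    return np.sort(anchors)

rng = np.random.default_rng(0)
widths = np.concatenate([rng.uniform(2, 10, 500),       # hypothetical spike-sized anomalies
                         rng.uniform(40, 120, 300),     # mid-sized anomalies
                         rng.uniform(150, 416, 200)])   # large anomalies
anchors = kmeans_anchors(widths, k=9)
```

Sorting the 9 anchors and assigning them in groups of 3 (smallest to the 52-grid layer, largest to the 13-grid layer) reproduces the layout of Table I. The IoU-based distance is used instead of plain absolute difference so that a 2-sample error matters far more for a spike than for a 300-sample wave.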

III-H Prediction Layers

With specifically designed convolutional layers as the backbone, the size of the input feature map at the first prediction layer is transformed to

$$ N = \frac{S}{2^{n}} \times A \times (C + 3) \tag{5} $$

where $N$ is the total number of values in the feature map, $S$ is the original input size, $C$ is the number of classes in the training and testing dataset, $A$ is the number of anchors used by the current prediction layer, and $n$ is the number of convolutional layers in the backbone network with stride 2. The 3 accounts for the center, width, and confidence values that each anchor predicts in addition to the $C$ class probabilities.

The number of grids doubles at the second prediction layer, which detects mid-sized anomalies, and doubles again at the third prediction layer, which detects small anomalies. For instance, with $S = 416$ as the input sample size, 5 anomaly classes to predict, and 3 anchors at each prediction layer, the size of the input feature map from a single sample at the first prediction layer is $13 \times 3 \times (5 + 3) = 312$. The size becomes $26 \times 24 = 624$ at the second prediction layer and $52 \times 24 = 1248$ at the third prediction layer.

Looking further into the anchor prediction vectors in the above example, each of the three anchors predicts a value for the center of the anomaly, a value for the width of the anomaly, a confidence score for the objectness of the anomaly, and 5 class probabilities, one per anomaly class. The predictions are first labeled by applying the softmax function to the class probabilities (for multi-label classification, the classes with the top probabilities are selected) and then filtered by a confidence threshold (0.5 in the testing described in Section V). Non-maximum suppression (NMS) is then applied (during inference only) to reduce the number of localization proposals for each anomaly. In our model, NMS is applied to each class's predictions separately rather than to all predictions at once. The final output is a list of predictions, each consisting of one or more class labels, an anomaly confidence score, the center location of the anomaly (proportional), and the width of the anomaly (proportional).
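Confidence filtering followed by per-class NMS on 1-D segments can be sketched as below. It is a minimal greedy implementation, assuming predictions are already decoded into proportional (center, width) pairs with one class label each; the helper names are hypothetical, and both thresholds default to the 0.5 values reported for testing in Section V.

```python
import numpy as np

def iou_1d(center, width, centers, widths):
    """IoU between one segment and an array of segments, all given as (center, width)."""
    start, end = center - width / 2, center + width / 2
    starts, ends = centers - widths / 2, centers + widths / 2
    inter = np.clip(np.minimum(end, ends) - np.maximum(start, starts), 0.0, None)
    return inter / (width + widths - inter)

def per_class_nms(centers, widths, scores, labels, conf_thresh=0.5, iou_thresh=0.5):
    """Drop low-confidence predictions, then run greedy NMS separately for each class.

    Returns the sorted indices of the kept predictions.
    """
    keep = []
    candidates = np.flatnonzero(scores >= conf_thresh)
    for cls in np.unique(labels[candidates]):
        idx = candidates[labels[candidates] == cls]
        idx = idx[np.argsort(-scores[idx])]            # highest confidence first
        while idx.size > 0:
            best, rest = idx[0], idx[1:]
            keep.append(int(best))
            overlap = iou_1d(centers[best], widths[best], centers[rest], widths[rest])
            idx = rest[overlap <= iou_thresh]          # suppress heavy overlaps of the same class
    return sorted(keep)

centers = np.array([0.30, 0.31, 0.30, 0.70])
widths = np.array([0.10, 0.10, 0.10, 0.05])
scores = np.array([0.9, 0.8, 0.7, 0.4])
labels = np.array([0, 0, 1, 0])                        # hypothetical class ids
kept = per_class_nms(centers, widths, scores, labels)
```

Prediction 1 is suppressed by prediction 0 of the same class, while prediction 2 survives despite the overlap because it belongs to a different class; running NMS per class is what allows two impairment types to be reported over the same frequency range. Prediction 3 is removed by the confidence threshold.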

IV Training

IV-A Datasets

In our experiments, we use downstream receive modulation error ratio (RxMER) per sub-carrier data captured from cable modems' OFDM channels in DOCSIS 3.1[8] networks to test our algorithms and implementation. DOCSIS 3.1 cable modems can capture PHY layer data such as downstream RxMER per sub-carrier based on requirements defined in the DOCSIS 3.1 CM OSSI specification[9], which makes this a promising use case for real-world demonstration.

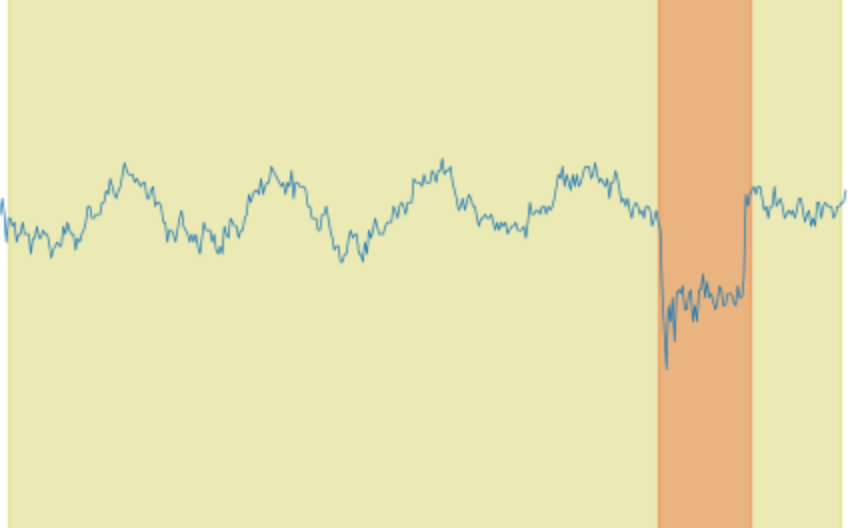

The training dataset contains 45000 RxMER samples and the testing dataset contains 26000 RxMER samples. Each sample has 1800 to 2000 sub-carrier MER values that are in range . There are 5 classes of PHY layer impairments labeled in the datasets: LTE ingress noise, wave, roll-off, suck-out, and spike. LTE ingress noise is normally identified as LTE signals that add interference to the OFDM signal through leakage points on the plant or defective shielding on the modem, as shown in Fig.3; wave is identified by wave-shaped impairments (as shown in Fig.5) that affect all or part of the spectrum and are normally caused by echoes or impedance mismatch; roll-off is identified as RxMER values rolling off on either side of the OFDM spectrum, as shown in Fig.6; suck-out is identified as dips with a sharp corner, as shown in Fig.4, and is normally caused by issues on amplifiers; spike is identified as sharp dips, as shown in Fig.3 and Fig.6, that are normally less than 3 sub-carriers wide. Each impairment in a sample is labeled with its center (proportional), its width (proportional), and its class. Each RxMER sample can have multiple impairments of different types located at different frequency ranges. Fig.3 through Fig.6 show example plots of RxMER per sub-carrier data captured from cable modems, with various impairment types.

IV-B Synthetic Data Generation

To reduce labor and increase the number of training samples, we randomly generate synthetic training data based on human-observed samples and anomalies. For instance, in our datasets, we use randomly generated noisy base samples as the starting point: spikes are generated randomly at different frequencies with different MER values; suck-outs are generated using two randomized non-linear functions; waves are generated from different sine waves; and roll-offs are generated with one non-linear function. All anomalies are added at different locations on the sample.

The synthetic data supplement the human-labeled datasets for both training and testing. They significantly increase the amount of training data, which helps the model generalize better, and they add more samples unseen by the model for validation.
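The generation recipe above can be sketched as follows. Every numeric choice here is hypothetical (the base level, noise, depths, widths, and periods), and so are the specific non-linear shapes: the suck-out uses two flanks with independently randomized exponents that meet in a sharp corner, and the roll-off uses a single cubic ramp at the upper band edge. The function name `synthetic_sample` is also illustrative.

```python
import numpy as np

def synthetic_sample(n=1900, seed=None):
    """Generate one hypothetical synthetic RxMER-like sample with labels.

    Returns (values, labels), where each label is (class, center, width)
    with center and width proportional to the sample length.
    """
    rng = np.random.default_rng(seed)
    x = rng.normal(40.0, 0.5, n)                  # noisy, roughly flat base sample
    labels = []

    # Spike: a sharp dip 1 or 2 sub-carriers wide at a random frequency.
    s, w = int(rng.integers(0, n - 2)), int(rng.integers(1, 3))
    x[s:s + w] -= rng.uniform(5, 15)
    labels.append(("spike", (s + w / 2) / n, w / n))

    # Wave: a sine ripple over part of the spectrum.
    w = int(rng.integers(200, 800))
    s = int(rng.integers(0, n - w))
    period = rng.uniform(20, 100)
    x[s:s + w] += rng.uniform(1, 3) * np.sin(2 * np.pi * np.arange(w) / period)
    labels.append(("wave", (s + w / 2) / n, w / n))

    # Suck-out: two randomized non-linear flanks meeting at a sharp-cornered minimum.
    w = int(rng.integers(20, 120))
    s = int(rng.integers(0, n - w))
    t = np.linspace(-1.0, 1.0, w)
    p_left, p_right = rng.uniform(1.5, 3.0, 2)
    shape = np.where(t < 0, (1 + t) ** p_left, (1 - t) ** p_right)
    x[s:s + w] -= rng.uniform(5, 15) * shape
    labels.append(("suck-out", (s + w / 2) / n, w / n))

    # Roll-off: one non-linear decay toward the upper band edge.
    w = int(rng.integers(30, 150))
    x[n - w:] -= rng.uniform(5, 15) * np.linspace(0.0, 1.0, w) ** 3
    labels.append(("roll-off", (n - w / 2) / n, w / n))
    return x, labels

values, labels = synthetic_sample(seed=0)
```

Because the generator knows exactly where it placed each anomaly, every synthetic sample comes with exact center and width labels at no labeling cost.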

IV-C Data Pre-processing with Binning Down-sampling

While all input values are normalized to range , the binning minimum algorithm (Algorithm 1) is used to reduce the input sample size while preserving low values in the original data series. The algorithm first up-samples the original sample (nearest-neighbor) to the nearest size that is divisible by the target sample size, and then takes the minimum value of each bin of the up-sampled input.
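The binning minimum down-sampling can be sketched as below. Two details are assumptions: "nearest divisible size" is taken to mean the smallest multiple of the target size that is at least the original length, and nearest-neighbor up-sampling uses floor index mapping. The function name is hypothetical.

```python
import numpy as np

def binning_min_downsample(x, target=416):
    """Down-sample a 1-D series to `target` values while preserving low values.

    1. Nearest-neighbor up-sample to the smallest multiple of `target` >= len(x).
    2. Split into `target` equal bins and keep the minimum of each bin.
    """
    x = np.asarray(x, dtype=float)
    factor = int(np.ceil(len(x) / target))
    up_len = factor * target
    idx = (np.arange(up_len) * len(x)) // up_len      # floor index mapping to source samples
    up = x[idx]
    return up.reshape(target, factor).min(axis=1)

sample = np.full(1900, 40.0)
sample[1000] = 5.0                        # a one-sub-carrier spike
small = binning_min_downsample(sample)    # 416 values; the spike survives
```

Taking the bin minimum instead of the bin mean keeps a single-sub-carrier dip at its full depth after down-sampling, which is what matters for detecting spikes and sharp suck-outs.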

IV-D Data Augmentation

During training, multiple data augmentation techniques are used to improve training results, improve the model’s generalization ability, and increase the number of training samples. Each of the data augmentation techniques has a probability of being selected for each input sample.

IV-D1 Scale Shifting

The scale shifting process allows the network to learn samples with different noise fluctuation scales. The mean value of the sample is first calculated as

$$ \mu = \frac{1}{N} \sum_{i=1}^{N} x_i \tag{6} $$

where $\mu$ is the mean value of the sample, $N$ is the number of values in the sample array, and $x_i$ is the $i$-th value.

Then each original value is rescaled around the mean:

$$ x_i' = \mu + s\,(x_i - \mu) \tag{7} $$

where $s$ is a randomly generated factor in range of .

IV-D2 Flipping

Flipping allows the network to learn anomalies at different locations and doubles the total number of training samples. The input sample is randomly flipped (left-right), and when it is flipped, the labels are converted accordingly: a proportional center $c$ becomes $1 - c$, while the width is unchanged.
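A minimal sketch of flipping a sample together with its labels is shown below, assuming labels are stored as rows of (class id, proportional center, proportional width). The helper names and the 0.5 flip probability are hypothetical.

```python
import numpy as np

def flip_sample(x, labels):
    """Left-right flip of a 1-D sample and its (class_id, center, width) labels."""
    flipped = np.asarray(x)[::-1].copy()
    new_labels = np.array(labels, dtype=float, copy=True)
    new_labels[:, 1] = 1.0 - new_labels[:, 1]      # mirror the center; width is unchanged
    return flipped, new_labels

def maybe_flip(x, labels, rng, p=0.5):
    """Flip with probability p (the probability is an assumption)."""
    if rng.random() < p:
        return flip_sample(x, labels)
    return np.asarray(x), np.array(labels, dtype=float)

x = np.arange(10.0)
labels = [[3, 0.2, 0.1]]                  # hypothetical: class 3, center 0.2, width 0.1
fx, fl = flip_sample(x, labels)
```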

IV-D3 Value Floor Shifting

By shifting the floor of the input sample, the network can adapt to anomalies at different value levels. For each value in the input sample

$$ x_i' = x_i + f \tag{8} $$

where $f$ is a randomly calculated value in range of .

IV-D4 Noise Injection

Random noise (not large enough to create anomalies) is added to each value in the input sample, which allows the network to adapt to noisy inputs and multiplies the number of distinct training samples:

$$ x_i' = x_i + \epsilon_i \tag{9} $$

where $\epsilon_i$ is a randomly generated value in range , drawn independently for each value in the sample.

IV-D5 Smoothing

A Savitzky-Golay filter is used to reduce the amount of high frequency noise in the input sample. This allows the network to adapt to smooth inputs and again multiplies the number of different samples for training. Different filter window lengths (3, 5, 7) are randomly selected during training for different input samples.
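The value-level augmentations above can be sketched together in NumPy. This is a hedged illustration, not the authors' code: the exact equations and random ranges of the original are not reproduced here, so scale shifting is written as rescaling deviations from the mean, floor shifting as a constant offset, the noise as uniform, and every range is hypothetical. The Savitzky-Golay smoother is a NumPy-only version (scipy.signal.savgol_filter is the usual library choice); its polynomial order is assumed to be 2, lowered to 1 for the 3-point window because a 3-point quadratic fit returns the input unchanged.

```python
import numpy as np

rng = np.random.default_rng(0)

def scale_shift(x, s):
    """Scale shifting (assumed form): rescale deviations from the sample mean by factor s."""
    mu = x.mean()                                   # mean value of the sample
    return mu + s * (x - mu)

def floor_shift(x, f):
    """Value floor shifting (assumed form): move every value by the same offset f."""
    return x + f

def add_noise(x, scale):
    """Noise injection: add independent uniform noise in [-scale, scale] to each value."""
    return x + rng.uniform(-scale, scale, size=x.shape)

def savgol_weights(window, polyorder):
    """Savitzky-Golay weights: least-squares polynomial fit evaluated at the window center."""
    half = window // 2
    vander = np.vander(np.arange(-half, half + 1), polyorder + 1, increasing=True)
    return np.linalg.pinv(vander)[0]

def smooth(x, window=None):
    """Savitzky-Golay smoothing with a window of 3, 5, or 7 (random if not given)."""
    window = int(rng.choice([3, 5, 7])) if window is None else window
    half = window // 2
    padded = np.pad(x, half, mode="edge")           # repeat edge values at the borders
    weights = savgol_weights(window, min(2, window - 2))
    return np.correlate(padded, weights, mode="valid")

def augment(x, p=0.5):
    """Apply each augmentation independently with probability p; ranges are hypothetical."""
    if rng.random() < p:
        x = scale_shift(x, rng.uniform(0.5, 1.5))
    if rng.random() < p:
        x = floor_shift(x, rng.uniform(-0.1, 0.1))
    if rng.random() < p:
        x = add_noise(x, 0.01)
    if rng.random() < p:
        x = smooth(x)
    return x
```

Each augmentation is applied independently with its own probability, so one input sample can produce many distinct training variants across epochs.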

IV-D6 Cut and Paste

We randomly move (cut and paste) anomaly objects on the x-axis of the input sample to improve the training efficiency on every prediction grid. This increased the mAP on detecting small and dense anomalies on cable modems’ OFDM receive modulation error ratio per sub-carrier data by .

IV-E Optimizer and Hyper Parameters

Stochastic gradient descent (SGD) is used as the optimizer in our experiments with 6000 burn-in mini-batches, 0.9 momentum, learning rate, weight decay, and mini-batch size 32. During the burn-in time, the learning rate increases gradually until it reaches the target learning rate

| (10) |

where is the target learning rate, is the learning rate of the current iteration, is the number of trained mini-batches, and is the number of burn-in mini-batches.
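A burn-in (warm-up) schedule of this kind can be sketched as below. The exponent is an assumption: Darknet-style YOLOv3 training commonly ramps the rate as `(batches_done / burn_in) ** power` with `power = 4`, while `power = 1` gives a linear ramp; the exact form of Eq. (10) is not reproduced here, and the target learning rate in the usage note is hypothetical.

```python
def burn_in_lr(target_lr, batches_done, burn_in=6000, power=4.0):
    """Learning rate that ramps from 0 to target_lr over the first `burn_in` mini-batches.

    power is an assumption: 4 follows the common Darknet-style ramp, 1 is linear.
    """
    if batches_done >= burn_in:
        return target_lr
    return target_lr * (batches_done / burn_in) ** power
```

For example, with a hypothetical target rate of 1e-3, the power-4 ramp is still only 6.25e-5 halfway through burn-in, so the early, noisy updates stay very small while the batch normalization statistics settle.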

IV-F Loss Calculation

Our loss function for training the model is designed with reference to YOLOv2[2]. To make it more efficient for our anomaly detection task and to better balance the loss between small and large anomalies, we add scale weights to the mean squared error (MSE) localization loss. This improves the model's ability to localize small anomalies precisely.

The localization loss of the center prediction is calculated as

| (11) |

where is the number of prediction grids at the current prediction layer, is the number of anchors used at the current prediction layer, is the ground truth confidence which value can be 0 or 1, is the ground truth center location (proportional) of the anomaly, is the predicted center location (calculated with the anchor) of the anomaly, is the ground truth width (proportional) of the anomaly, is the predicted width (calculated with the anchor) of the anomaly, and is the scale weight of the anomaly

| (12) |

where is in range and anomalies with smaller width have larger weights. The confidence loss is designed for the network to converge to a point that background data corresponds to confidence score 0, and anomaly data corresponds to confidence score 1. The confidence score is first calculated from the prediction layer’s linear output with sigmoid function

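$$ \hat{C} = \sigma(t_c) = \frac{1}{1 + e^{-t_c}} \tag{13} $$

where $t_c$ is the prediction layer's linear output for the confidence.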

The confidence loss is based on binary cross-entropy (BCE) loss, but uses different weights for anomalies and background to balance the network's recall and precision

| (14) |

where is the ground truth confidence score, is the predicted confidence score, is the weight of the confidence loss which can be represented as

| (15) |

The classification loss is calculated based on BCE loss as

| (16) | ||||
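The two building blocks of the loss described above, a size-weighted squared error for localization and a BCE with different weights for anomaly and background targets, can be sketched as follows. This is not a reproduction of Eqs. (11) to (16): the scale weight `2 - w` (larger for narrower anomalies) is a form chosen here purely for illustration, and the function names and weight values are hypothetical.

```python
import numpy as np

def weighted_bce(y_true, y_pred, pos_weight=1.0, neg_weight=1.0, eps=1e-7):
    """Binary cross-entropy with separate weights for anomaly (1) and background (0) targets."""
    p = np.clip(y_pred, eps, 1 - eps)               # avoid log(0)
    loss = -(pos_weight * y_true * np.log(p) + neg_weight * (1 - y_true) * np.log(1 - p))
    return loss.sum()

def scaled_localization_loss(obj_mask, x, x_hat, w, w_hat):
    """Squared-error localization loss weighted by anomaly size.

    Assumes scale weight = 2 - w with w the proportional width in [0, 1], so smaller
    anomalies get larger weights; only entries with obj_mask == 1 contribute.
    """
    scale = 2.0 - w
    return np.sum(obj_mask * scale * ((x - x_hat) ** 2 + (w - w_hat) ** 2))
```

Raising `pos_weight` relative to `neg_weight` pushes the network toward higher recall, and lowering it toward higher precision, which is the balance the confidence-loss weights are meant to control.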

IV-G Responsible Prediction Layer and Anchor Selection

For each anomaly during training, only 1 of the 3 prediction layers is responsible for the prediction, and only 1 of that layer's 3 anchors is responsible. The responsible prediction layer and anchor are selected by finding the anchor with the best intersection over union (IoU) with the ground truth anomaly, ignoring its center location:

$$ k = \arg\max_{j} \frac{\min(w, a_j)}{\max(w, a_j)} \tag{18} $$

where $k$ is the responsible anchor's index, $w$ is the current anomaly's width, and $a_j$ is the $j$-th anchor's width. Ignoring the center location means the anchor and the anomaly are treated as sharing the same center, so their intersection is the smaller width and their union is the larger one. Once the index of the responsible anchor is calculated, the responsible prediction layer is the one that uses that anchor. The losses of all the other anchors used by the 3 prediction layers are calculated as if these anchors see background with no anomaly, so their ground truth confidence scores are 0. However, predictions from anchors whose IoU with the ground truth is higher than the ignore threshold, which is 0.7 in our experiments, are excluded from the loss calculation for both anomaly and background.
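The assignment rule can be sketched with the anchors of Table I. The sketch assumes one reading of the ignore rule, in which the IoU that is compared against the 0.7 threshold is measured between each non-responsible anchor and the ground truth width; the helper names are hypothetical.

```python
import numpy as np

# Anchors from Table I, ordered from the 52-grid (small) layer to the 13-grid (large) layer.
ANCHORS = np.array([2, 8, 23, 43, 73, 109, 155, 234, 416], dtype=float)
GRIDS = [52, 26, 13]      # prediction layer served by each consecutive group of 3 anchors

def responsible_anchor(width, anchors=ANCHORS):
    """Index of the anchor with the best center-ignoring IoU, min(w, a) / max(w, a)."""
    iou = np.minimum(width, anchors) / np.maximum(width, anchors)
    return int(np.argmax(iou)), iou

def assign(width, ignore_thresh=0.7, anchors=ANCHORS):
    """Return (grids of the responsible layer, anchor index within that layer, ignored anchors).

    Ignored anchors (assumed to be judged by anchor-vs-ground-truth IoU) take part in
    neither the anomaly loss nor the background loss.
    """
    k, iou = responsible_anchor(width, anchors)
    ignored = [j for j in range(len(anchors)) if j != k and iou[j] > ignore_thresh]
    return GRIDS[k // 3], k % 3, ignored
```

For example, a 100-sample anomaly matches the 109 anchor best (IoU about 0.92), so it is assigned to the third anchor of the 26-grid layer, while the 73 anchor (IoU about 0.73) is ignored rather than trained as background.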

IV-H Overfitting Prevention and Early Stopping

We use a small mini-batch size (32) in training and apply weight decay to reduce the risk of overfitting. Extensive data augmentation during training helps the model generalize and allows it to be trained more thoroughly. We also validate the network's performance at every iteration using the testing dataset and stop training early once that performance stops improving.
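Early stopping can be sketched as a small tracker that is updated after each validation pass. The stopping criterion is not specified beyond stopping early, so the patience rule and the `patience` and `min_delta` parameters below are assumptions; the monitored metric is assumed to be mAP, where higher is better.

```python
class EarlyStopping:
    """Signal a stop once the validation metric stops improving (higher is better)."""

    def __init__(self, patience=10, min_delta=0.0):
        self.patience = patience    # hypothetical: checks allowed without improvement
        self.min_delta = min_delta  # hypothetical: smallest gain that counts
        self.best = float("-inf")
        self.best_step = None
        self.bad_checks = 0

    def update(self, step, metric):
        """Record one validation result; return True when training should stop."""
        if metric > self.best + self.min_delta:
            self.best = metric
            self.best_step = step   # e.g. checkpoint the weights here
            self.bad_checks = 0
        else:
            self.bad_checks += 1
        return self.bad_checks >= self.patience
```

In a training loop, `update` would be called after each validation pass, and the weights saved at `best_step` would be restored once it returns `True`.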

V Testing and Experimental Results

Our model has only 69,628 parameters (a 283 KB weight file) and is able to process more than 1000 RxMER captures per second on a single CPU core. During testing, we use mAP as the performance metric. The confidence score threshold is 0.5 and the NMS IoU threshold is 0.5. NMS is performed on a per-class basis, and mAP is evaluated at IoU thresholds of 0.5 and 0.75 (mAP50 and mAP75). We also tested with soft-NMS [17], which required only minor code changes and slightly improved the model's mAP. We compare these results with those of the algorithm from a previous research paper [16].
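The post-processing described above, a 0.5 confidence threshold followed by per-class suppression, can be sketched for 1-D detections represented as `(start, end, score)` tuples. The soft-NMS variant shown is the Gaussian penalty from [17] with σ = 0.5; which soft-NMS variant and parameters were used in this work is not stated, so those choices, along with the function names, are assumptions.

```python
import math


def interval_iou(a, b):
    """IoU of two 1-D detections whose first two fields are (start, end)."""
    inter = max(0.0, min(a[1], b[1]) - max(a[0], b[0]))
    union = (a[1] - a[0]) + (b[1] - b[0]) - inter
    return inter / union if union > 0 else 0.0


def nms(dets, iou_thresh=0.5):
    """Greedy NMS for one class: keep the best detection, drop heavy overlaps."""
    remaining = sorted(dets, key=lambda d: d[2], reverse=True)
    kept = []
    while remaining:
        best = remaining.pop(0)
        kept.append(best)
        remaining = [d for d in remaining if interval_iou(best, d) <= iou_thresh]
    return kept


def soft_nms(dets, sigma=0.5, score_thresh=0.001):
    """Gaussian soft-NMS for one class: decay overlapping scores instead of dropping them."""
    remaining = [list(d) for d in dets]
    kept = []
    while remaining:
        i = max(range(len(remaining)), key=lambda k: remaining[k][2])
        best = remaining.pop(i)
        kept.append(tuple(best))
        for d in remaining:
            d[2] *= math.exp(-(interval_iou(best, d) ** 2) / sigma)
        remaining = [d for d in remaining if d[2] >= score_thresh]
    return kept


def postprocess(dets_by_class, conf_thresh=0.5, use_soft_nms=False):
    """Apply the confidence threshold, then suppress duplicates separately per class."""
    suppress = soft_nms if use_soft_nms else nms
    return {
        cls: suppress([d for d in dets if d[2] >= conf_thresh])
        for cls, dets in dets_by_class.items()
    }
```

Because soft-NMS only decays the score of an overlapping detection instead of discarding it, a genuinely distinct anomaly that overlaps a stronger one can still be reported, which is one plausible reason for the small mAP gains on small and medium-sized anomalies.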

Table II: Per-class mAP (%) at IoU thresholds 0.5 (mAP50) and 0.75 (mAP75), compared with the previous algorithm [16]

| Anomaly | mAP50 | mAP50 (soft-NMS) | mAP50 (Previous) | mAP75 | mAP75 (soft-NMS) | mAP75 (Previous) |
|---|---|---|---|---|---|---|
| LTE ingress | 99.21 | 99.23 | 73.67 | 99.13 | 99.14 | 60.09 |
| Suck-out | 98.35 | 98.44 | 62.18 | 96.16 | 96.25 | 48.39 |
| Wave | 98.42 | 98.42 | 58.93 | 98.42 | 98.42 | 56.82 |
| Roll-off | 99.15 | 99.15 | 87.36 | 97.26 | 97.26 | 70.61 |
| Spike | 93.64 | 93.89 | 68.73 | 93.57 | 93.83 | 59.46 |

Table III: Overall mAP (%) across all 5 anomaly classes

| mAP50 | mAP50 (soft-NMS) | mAP50 (Previous) | mAP75 | mAP75 (soft-NMS) | mAP75 (Previous) |
|---|---|---|---|---|---|
| 97.75 | 97.82 | 70.17 | 96.91 | 96.98 | 59.07 |
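To make the metric concrete, per-class AP and mAP for 1-D detections can be sketched as below. The matching rule and interpolation scheme are not specified here, so greedy one-to-one matching in descending score order and PASCAL VOC-style all-point interpolation are assumptions, as are the function names and the `(capture_id, start, end[, score])` tuple layout.

```python
def interval_iou(s1, e1, s2, e2):
    """IoU of two 1-D intervals [s1, e1] and [s2, e2]."""
    inter = max(0.0, min(e1, e2) - max(s1, s2))
    union = (e1 - s1) + (e2 - s2) - inter
    return inter / union if union > 0 else 0.0


def average_precision(dets, gts, iou_thresh=0.5):
    """AP for one anomaly class.

    dets: list of (capture_id, start, end, score) predictions
    gts:  list of (capture_id, start, end) ground truth anomalies
    """
    if not gts:
        return 0.0
    matched, hits = set(), []
    for cap, s, e, _ in sorted(dets, key=lambda d: d[3], reverse=True):
        best_iou, best_j = 0.0, None
        for j, (g_cap, g_s, g_e) in enumerate(gts):
            if g_cap != cap or j in matched:
                continue  # each ground truth can be matched only once
            iou = interval_iou(s, e, g_s, g_e)
            if iou > best_iou:
                best_iou, best_j = iou, j
        if best_j is not None and best_iou >= iou_thresh:
            matched.add(best_j)
            hits.append(1)  # true positive
        else:
            hits.append(0)  # false positive

    # Precision and recall after each ranked detection.
    precisions, recalls, tp = [], [], 0
    for rank, hit in enumerate(hits, start=1):
        tp += hit
        precisions.append(tp / rank)
        recalls.append(tp / len(gts))

    # All-point interpolation: make precision non-increasing, then integrate over recall.
    mrec = [0.0] + recalls + [1.0]
    mpre = [0.0] + precisions + [0.0]
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    return sum((mrec[i + 1] - mrec[i]) * mpre[i + 1] for i in range(len(mrec) - 1))


def mean_ap(per_class_ap):
    """mAP: the unweighted mean of the per-class AP values."""
    return sum(per_class_ap) / len(per_class_ap)
```

As a consistency check, averaging the mAP50 column of Table II with `mean_ap([99.21, 98.35, 98.42, 99.15, 93.64])` gives about 97.754, in line with the 97.75 reported in Table III.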

From the results in Table II and Table III, we can see that the algorithm proposed in this paper outperforms the algorithm from the previous research by a large margin. Part of the reason is that the previous algorithm was not designed to differentiate all 5 types of anomalies, and its region proposal generation is not ideal. The previous algorithm is also much slower, processing about 50 samples per second. Soft-NMS improves mAP on small and medium-sized anomalies in our evaluation. The small difference between mAP50 and mAP75 indicates that our network produces high-quality localization predictions. The lower mAP50 and mAP75 on spike detection are caused by lower recall, which indicates that our network has difficulty recognizing all of the smallest anomalies, especially when they are densely located. A likely cause is that the prediction layers have at most 52 grids of prediction resolution. Each grid produces 3 predictions, only 1 of which is trained to recognize a given anomaly, so small anomalies that are close to each other can be missed by the detector. The performance of our network on small and dense anomalies could possibly be improved by introducing an additional prediction layer with 104 grids or by using larger input sizes such as 608 or 928. However, in our use case, spikes on OFDM RxMER captures are minor issues that do not warrant attention by repair technicians or network operations center personnel. Therefore, it is not necessary to trade inference performance for improved spike detection.
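The grid-resolution argument can be checked with a short sketch that maps each anomaly's proportional center to a grid cell and reports pairs that share a cell. It assumes, as in YOLOv3, that the cell containing an anomaly's center is responsible for it, and that the two anomalies would select the same anchor (as two spikes of similar width would); the function names and center values are hypothetical.

```python
import math


def grid_cell(center, num_grids):
    """Index of the prediction grid cell containing a proportional center in [0, 1]."""
    return min(int(math.floor(center * num_grids)), num_grids - 1)


def colliding_pairs(centers, num_grids):
    """Pairs of anomalies (by index) whose centers fall into the same grid cell."""
    cells = [grid_cell(c, num_grids) for c in centers]
    return [
        (i, j)
        for i in range(len(cells))
        for j in range(i + 1, len(cells))
        if cells[i] == cells[j]
    ]
```

For example, two spikes centered at 0.50 and 0.51 of the channel width fall into the same cell at 52 grids (`colliding_pairs([0.50, 0.51], 52)` returns `[(0, 1)]`) but into separate cells at 104 grids (`colliding_pairs([0.50, 0.51], 104)` returns `[]`), which is why an additional 104-grid prediction layer could help with dense spikes.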

We list example detection results in Fig.8 to Fig.17. Fig.8 shows 3 LTE channels interfering with the modem's OFDM channel, as well as a spike at the higher-frequency end; Fig.9 shows a large suck-out at the lower-frequency end of the spectrum and 2 spikes; Fig.10 shows a wave interfering with the whole channel and an LTE channel affecting the higher-frequency end; Fig.11 shows a suck-out in the middle of the OFDM channel and a right roll-off; Fig.12 shows 7 spikes detected across the channel; Fig.13 shows a rare capture in which 3 suck-outs exist on 1 OFDM channel; Fig.14 shows a small LTE impairment that is not detected, possibly because it falls in the same prediction grid as the larger LTE impairment on its right side, and one prediction grid can detect only one anomaly at a time; Fig.15 shows some small spikes that are not detected, possibly because some details are lost after many layers of feature extraction; Fig.16 shows a very subtle wave across the OFDM channel that is not detected; and Fig.17 shows a large roll-off that is not detected.

VI Conclusion and Future Work

Our key contributions in this paper can be summarized as follows:

- A novel anomaly detector implementation based on YOLOv3's network architecture: Based on YOLOv3's network architecture [3], we develop a fully 1D-convolutional neural network with 45 1D-convolutional layers for feature extraction that uses significantly fewer filters. Shortcut layers and route layers [2] are used with 3 prediction layers to aggregate features from different scales and perform prediction at different scales. This anomaly detector achieves low resource consumption, high inference performance, and high mAP. In our evaluation using cable modems' downstream RxMER data, the anomaly detector processes more than 1000 cable modems' data captures every second using a single CPU core and at most 600 KB of memory. Each cable modem's data capture is a 1-D array of 1800 to 2000 MER values.

- A specifically designed down-sampling algorithm that significantly improves performance while keeping important features: The down-sampling algorithm is designed to pre-process 1-dimensional input data for performance gains. It significantly reduces the number of values in the original input while keeping the features that are important for anomaly detection.

- Data augmentation techniques for anomaly detection on data series: We develop data augmentation techniques specifically to improve training quality and model generalization and to increase the number of training and testing samples. These techniques significantly reduce the amount of manual work and improve the model's performance.

- Demonstration of leveraging a state-of-the-art object detection algorithm for 1-dimensional anomaly detection problems: We adapt a state-of-the-art object detection algorithm into a promising solution for anomaly detection by changing its network architecture, loss function, and data augmentation techniques, and by significantly improving its performance.

PNM is a field of work in the cable industry that is constantly expanding with new architectures and protocol updates. ProOps [12] suggests a structure that we believe can be a model for the industry, built on an industry model for data collection as well. We intend to continue researching, prototyping, and validating new PNM solutions for updates to DOCSIS networking and for technologies involving optical networks [15].

The anomaly detection solution reported here is flexible, applicable to a wide range of related problems, and easily extensible. For medium and large impairments, which are the most important to address, the mAP50 of our solution is over 98%. Because our model has excellent inference performance, we can focus on improvements on three fronts: additional measures that may provide further evidence to improve precision; focusing on the most severe issues, which are more likely to be problems that need to be addressed; and collecting RxMER data from neighboring CMs, or from the same CM over time, to confirm the detected issues. As we extend the solution to other data sources and to time series, we expect mAP to improve. Using ProOps as the platform also allows us to implement this approach and adjust settings rapidly. Nevertheless, we are already at a level of performance where network operations center personnel can quickly confirm the most severe problems and gain order-of-magnitude efficiencies in human time and expertise. We also intend to extend the anomaly detection engine to other spectrum and time series measures, including spectrum analysis data, pre-equalization coefficient data, various time series statistics for specific CMs, and perhaps even clustering of impairments over time and across CMs to improve repair efficiency.

To further improve our detector's performance, there are other state-of-the-art object detection networks we can learn from, such as EfficientDet [18], which uses a weighted bi-directional feature pyramid network (BiFPN) and a compound scaling method to reduce network size, improve inference performance, and improve mAP. The prediction grid layout can also be redesigned to improve performance on small and dense anomalies. We can also experiment with neural-network-based NMS [19].

Acknowledgment

We would like to thank CableLabs members who provided the data that helped with this research, and CableLabs for supporting the work.

References

- [1] S. Ren, K. He, R. Girshick, and J. Sun. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. arXiv:1506.01497, 2015.

- [2] J. Redmon and A. Farhadi. YOLO9000: Better, Faster, Stronger. arXiv:1612.08242, 2016.

- [3] J. Redmon and A. Farhadi. YOLOv3: An Incremental Improvement. arXiv:1804.02767, 2018.

- [4] Wei Liu, Dragomir Anguelov, Dumitru Erhan, Christian Szegedy, Scott Reed, Cheng-Yang Fu, and Alexander C. Berg. SSD: Single Shot MultiBox Detector. arXiv:1512.02325, 2016.

- [5] Tsung-Yi Lin, Priya Goyal, Ross Girshick, Kaiming He, and Piotr Dollár. Focal Loss for Dense Object Detection. arXiv:1708.02002, 2018.

- [6] Tianyi Liu, Minshuo Chen, Mo Zhou, Simon S. Du, Enlu Zhou, and Tuo Zhao. Towards Understanding the Importance of Shortcut Connections in Residual Networks. Thirty-third Conference on Neural Information Processing Systems, 2019.

- [7] Sergey Ioffe and Christian Szegedy. Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift. arXiv:1502.03167, 2015.

- [8] Cable Television Laboratories, Inc. DOCSIS 3.1 Physical Layer Specification. https://www.cablelabs.com, September 17, 2019.

- [9] Cable Television Laboratories, Inc. DOCSIS 3.1 Cable Modem Operations Support System Interface Specification. https://www.cablelabs.com, September 17, 2019.

- [10] Larry Wolcott, John Heslip, Bryan Thomas, and Robert Gonsalves. A Comprehensive Case Study of Proactive Network Maintenance. Cable-Tec Expo, 2016.

- [11] Ron H., James M., Bruce C., Roger F., Tom K., Jason R., Tom W., and Larry W. Characterizing Network Problems using DOCSIS® 3.1 OFDM RxMER Per Subcarrier Data. https://www.nctatechnicalpapers.com/Paper/2019/2019-characterizing-network-problems-using-docsis-3-1-ofdm-rxmer-per-subcarrier-data, SCTE/ISBE Cable-Tec Expo proceedings, 2019.

- [12] Cable Television Laboratories, Inc. Proactive Operations Platform, Application User Guide and Technical Report. https://specification-search.cablelabs.com/CL-TR-ProOps, September 25, 2019.

- [13] Jason Rupe and Jingjie Zhu. Kickstarting Proactive Network Maintenance with the Proactive Operations Platform and Example Application. https://www.nctatechnicalpapers.com/Paper/2019/2019-kickstarting-proactive-network-maintenance, Cable-Tec Expo, 2019.

- [14] Bruce Currivan and Larry Wolcott. Leveraging DOCSIS 3.1 PNM Measurements for Plant Performance Optimization. Cable-Tec Expo, 2015.

- [15] L. Alberto Campos, Zhensheng Jia, and Larry Wolcott. Proactive Network Maintenance Evolution to the Optical Domain in Coherent Optics. https://www.nctatechnicalpapers.com/Paper/2018/2018-proactive-network-maintenance-evolution-to-the-optical-domain-in-coherent-optics, Cable-Tec Expo, 2018.

- [16] Karthik Sundaresan and Jingjie Zhu. Access Network Data Analytics. https://www.nctatechnicalpapers.com/Paper/2017/2017-access-network-data-analytics, Cable-Tec Expo, 2017.

- [17] Navaneeth Bodla, Bharat Singh, Rama Chellappa, and Larry S. Davis. Soft-NMS: Improving Object Detection With One Line of Code. arXiv:1704.04503, 2017.

- [18] Mingxing Tan, Ruoming Pang, and Quoc V. Le. EfficientDet: Scalable and Efficient Object Detection. arXiv:1911.09070, 2019.

- [19] Jan Hosang, Rodrigo Benenson, and Bernt Schiele. Learning Non-Maximum Suppression. arXiv:1705.02950, 2017.