PRMI: A Dataset of Minirhizotron Images for Diverse Plant Root Study

Abstract

Understanding a plant’s root system architecture (RSA) is crucial for a variety of plant science problem domains including sustainability and climate adaptation. Minirhizotron (MR) technology is a widely-used approach for phenotyping RSA non-destructively by capturing root imagery over time. Precisely segmenting roots from the soil in MR imagery is a critical step in studying RSA features. In this paper, we introduce a large-scale dataset of plant root images captured by MR technology. In total, there are over 72K RGB root images across six different species including cotton, papaya, peanut, sesame, sunflower, and switchgrass in the dataset. The images span a variety of conditions including varied root age, root structures, soil types, and depths under the soil surface. All of the images have been annotated with weak image-level labels indicating whether each image contains roots or not. The image-level labels can be used to support weakly supervised learning in plant root segmentation tasks. In addition, 63K images have been manually annotated to generate pixel-level binary masks indicating whether each pixel corresponds to root or not. These pixel-level binary masks can be used as ground truth for supervised learning in semantic segmentation tasks. By introducing this dataset, we aim to facilitate the automatic segmentation of roots and the research of RSA with deep learning and other image analysis algorithms.

Introduction

Plant root systems play important roles in supporting our natural ecosystems, adapting to changes in climate, and ensuring the sustainable production of plants (Aidoo et al. 2016; Alexander, Diez, and Levine 2015; Ettinger and HilleRisLambers 2017). Phenotyping RSAs is an important component of understanding plant root systems to support and enhance these roles along with advancing many aspects of plant science research (Pieruschka and Schurr 2019; Trachsel et al. 2011; Wasson et al. 2012).

Relative to above-ground plant phenotyping, below-ground field-based plant root phenotyping is challenging. Many common approaches for field-based root phenotyping, such as soil coring and “shovelomics” (Van Noordwijk and Floris 1979; Gregory 2008; Trachsel et al. 2011), are destructive, laborious, and time-consuming, and cannot be used to monitor the same roots in real time or over time. Minirhizotron (MR) technology (Majdi 1996; Johnson et al. 2001) is one of the most widely used approaches for phenotyping RSA non-destructively over time. Generally, before planting, transparent MR tubes are installed in the field at an angle (e.g. ) to the soil surface, in locations that will eventually be directly under or near the plants of interest. Then, as the plants' root systems grow, a high-resolution camera can be inserted into the tube to capture root images at a variety of depths, as shown in Figure 1. Since the MR tubes remain in the soil during the entire growing period, the camera can capture time series of RGB root images that provide insight into RSA development. In addition to the development of the whole root structure, MR imagery can be used to observe changes in individual roots throughout their life cycle, such as changes in color, diameter, angle, and length.

Precisely segmenting roots from the soil background in MR imagery is a critical step in studying root systems using MR technology. Traditionally, MR images are annotated manually: users trace along each individual root to indicate its location and diameter. Manually tracing a large number of thin, hard-to-see roots is extremely tedious and time-consuming, and this annotation step is the primary bottleneck limiting the effectiveness of MR technology for large-scale RSA studies. Given the complexity of plant RSA, biological variation, and the effects of environment and management on RSA, a huge amount of data is needed for comprehensive RSA studies to reach statistically sound conclusions. Thus, algorithms for high-throughput automatic root segmentation are essential.

Deep learning, and convolutional neural networks specifically, have shown great initial success in plant root segmentation tasks (Yasrab et al. 2020; Smith et al. 2020). The features learned by well-trained deep neural networks are more representative and effective for segmenting roots than those used by earlier image processing-based approaches (Das et al. 2015; Galkovskyi et al. 2012; Pierret et al. 2013; Haralick, Sternberg, and Zhuang 1987; Lobregt, Verbeek, and Groen 1980). However, one limitation in applying these approaches is the need for large MR image training datasets that include the soil characteristics and plant species of interest. Transfer learning has been investigated to help alleviate the need to collect and annotate enormous MR image training sets for each soil type and plant species (Xu et al. 2020). However, many of these investigations have been limited by the lack of sizeable public MR image datasets, because collecting and annotating MR images is extremely tedious and time-consuming and often requires domain expertise.

In order to help fill this gap and introduce the computer vision community to this problem domain, we compiled a dataset, named PRMI (Plant Root Minirhizotron Imagery), containing over 72 thousand MR RGB root images across six different species, namely cotton, papaya, peanut, sesame, sunflower, and switchgrass. These images span a variety of conditions, including varying root age, root spatial structures, soil types, and depths under the soil surface. Each image is paired with a binary image-level label indicating whether it contains roots, along with meta-data such as crop species, collection location, MR tube number, collection time, collection depth, and image resolution. In addition, over 63 thousand of the images have been manually annotated to generate pixel-level binary masks indicating whether each pixel corresponds to root or not. Because annotating MR root images is challenging, learning from weak labels is essential, particularly when transferring a trained method from one location (i.e., soil type and properties) and one plant species (i.e., root characteristics) to another. The primary uses of the PRMI dataset are supervised/weakly-supervised semantic segmentation and transfer learning. After segmentation, RSA features (length, diameter, etc.) can be extracted for scientific purposes. We hope this dataset will facilitate the automatic segmentation of roots and research on RSA with deep learning. The dataset is publicly available: https://gatorsense.github.io/PRMI/.

Related Datasets and Annotation Tools

There are a few public MR image datasets available as summarized in Table 1. However, these datasets are relatively small (e.g., the largest collection for one species contains only 400 images) and only three of these datasets provide pixel-level ground truth masks. These datasets have been used to develop image processing-based approaches as well as deep-learning methods for root segmentation tasks. For example, early work on the Peach1 dataset (Zeng, Birchfield, and Wells 2006) proposed an image processing-based approach that first extracted a collection of features using linearly-shaped spatial filters. The soybean (Wang et al. 2019) and the chicory (Smith et al. 2020) datasets have been used for developing deep learning segmentation approaches.

Several annotation tools have been used for manually tracing roots. Zeng et al., who provided the Peach1 dataset (Zeng, Birchfield, and Wells 2006), developed Rootfly for root tracing. Le Bot et al. developed DART (Le Bot et al. 2010), a software tool to analyze RSA. Commercial tools are also available, such as RootSnap (CID Bioscience) and WinRHIZO Tron (Regent Instrument) (Bauhus and Messier 1999). Furthermore, general-purpose image processing software such as Photoshop and ImageJ has also been used for root tracing (Abràmoff, Magalhães, and Ram 2004). Automated tools have also been developed, such as EZ-RHIZO (Armengaud et al. 2009), RootNav (Yasrab et al. 2019), and RootGraph (Cai et al. 2015).

| Dataset | Number of images (Train/Test) | Label |
|---|---|---|
| Peach1∗ | 50/200 | Pixel-level |
| Peach2∗ | 200/200 | Image-level |
| maple1∗ | 120/120 | Image-level |
| magnolia1∗ | 100/100 | Image-level |
| soybean§ | 39/13 | Pixel-level |
| chicory‡ | 38/10 | Pixel-level |

Image Collection

In our PRMI dataset, MR technology was used to collect root images of cotton, papaya, peanut, sesame, sunflower, and switchgrass. For each species, root images were captured from multiple plants and multiple MR tubes across a variety of depths, over different time periods, and under varying soil and environmental conditions. Many of the root images are related to each other in time or depth. For example, for a specific species, images were captured from the same MR tube at the same depth across time (i.e., a time series of MR imagery of particular plant root architectures). Thus, some of these images contain the same root portions at different ages, sizes, shapes, and colors. Although all the root images were collected with MR technology, the specifics of the MR setup differed for each sub-collection. For example, the camera used differed across species, resulting in different image resolutions, dots per inch (DPI), and color profiles. For each species, there are images containing plant roots as well as images containing only soil and background. Example images for each species are shown in Figure 2.

The details of the sub-collection for each species are shown in Table 2, including the DPI, resolution, number of locations, number of collection tubes, number of root images, number of non-root images, number of images in the train/val/test sets, and total number of images. To preserve the original appearance of the root images, we compiled the dataset from raw root images in their original format, without any pre-processing such as resizing or normalization.

| Species | DPI | Size | Locations | Tubes | Root images | Non-root images | Train | Val | Test | Total |
|---|---|---|---|---|---|---|---|---|---|---|
| Cotton | 150 | | 1 | 12 | 918 | 1494 | 1271 | 564 | 577 | 2412 |
| Papaya | 150 | | 2 | 6 | 487 | 59 | 282 | 131 | 133 | 546 |
| Peanut | 120 | | 1 | 32 | 8508 | 8534 | 10087 | 3413 | 3542 | 17042 |
| Peanut | 150 | | 2 | 24 | 11147 | 8478 | 11485 | 3347 | 4793 | 19625 |
| Sesame | 120 | | 4 | 11 | 1460 | 700 | 1438 | 318 | 404 | 2160 |
| Sesame | 150 | | 2 | 24 | 7923 | 6423 | 8637 | 2625 | 3084 | 14346 |
| Sunflower | 120 | | 1 | 16 | 1646 | 2254 | 2211 | 722 | 967 | 3900 |
| Switchgrass | 300 | | 3 | 72 | 3465 | 9072 | 11272∗ | 665 | 600 | 12537 |

∗ 2647 images in the training set have pixel-level annotation and the remaining 8625 images only have image-level annotation.

Image Annotation

Each MR image in this collection is paired with image-level annotations and more than 63 thousand of the images are also paired with pixel-level annotation. Next, we will introduce the annotation details.

Image-level Annotation

To enable a more comprehensive understanding of the dataset, meta-data for each MR image is recorded and provided in a JSON file (details can be found in Appendix B). This meta-data includes crop species, collection location, MR tube number, collection time, collection depth, and sensor DPI. More importantly, we manually annotated each MR image with a binary image-level label indicating whether the image contains roots. This image-level annotation can be used in a weakly-supervised learning framework for plant root segmentation, which is one of the major proposed uses of this data.

Pixel-level Annotation

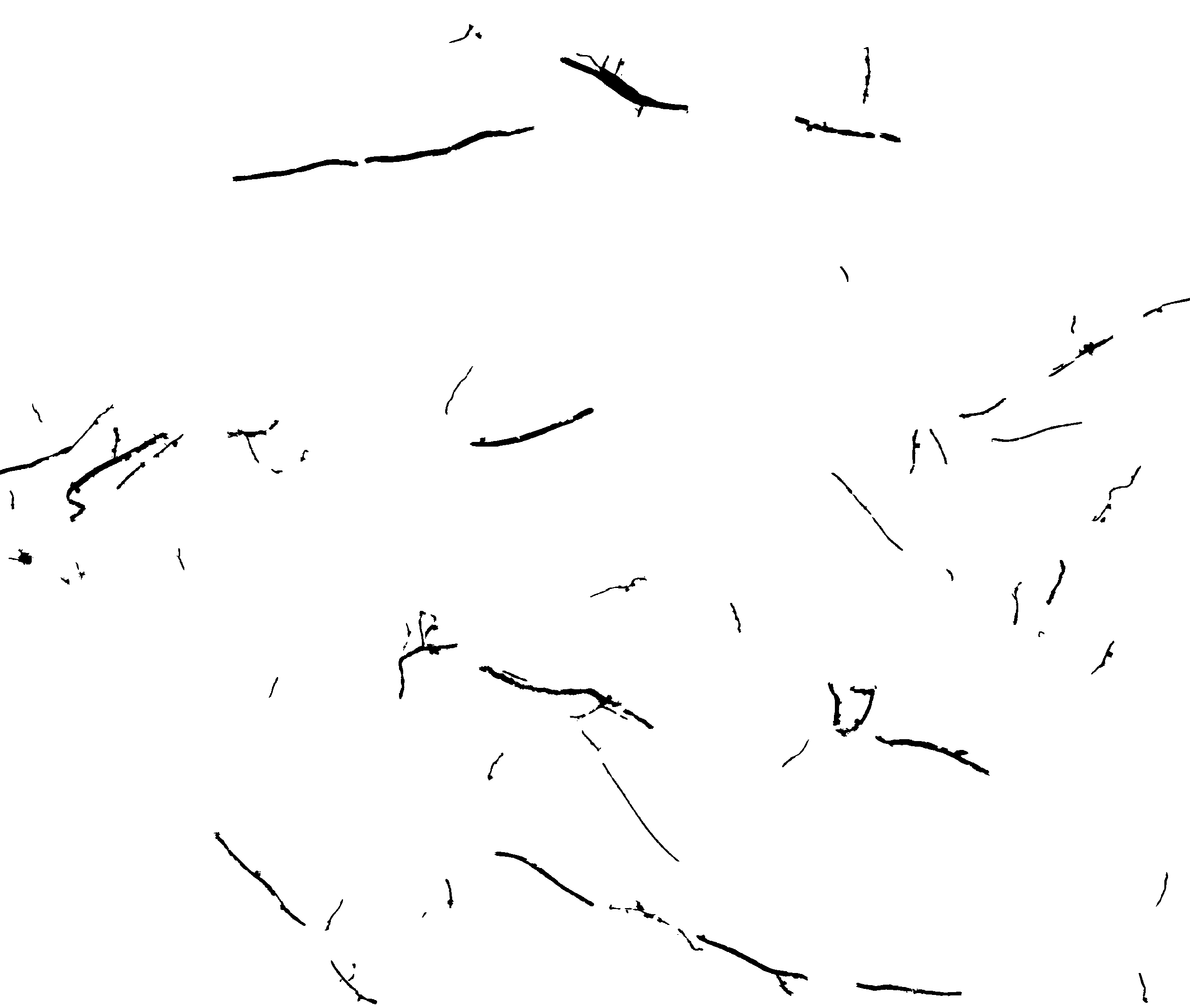

Each pixel-level annotation is a segmentation mask containing a binary value for each pixel that indicates its class (root/soil). Selected pixel-level segmentation masks for each species are shown in Figure 2. All the pixel-level masks were generated by technicians manually labeling pixels in the images. However, the annotation methods used for switchgrass differed from those used for the other species.

Pixel-level annotation for all species other than switchgrass. WinRHIZO Tron software was used to manually trace the roots in images of cotton, papaya, peanut, sesame, and sunflower. The pixel-level segmentation masks were generated by drawing rectangular boxes in the software to highlight the area of root pixels. To make the annotation as accurate as possible, multiple rectangular boxes of different sizes were used for each single root to capture variation in its diameter. The coordinates of the four vertices of every rectangular box were recorded for each root image. The binary masks were then generated by reconstructing and aligning these boxes in a blank image of the same size as the corresponding RGB root image.
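The box-to-mask reconstruction described above can be sketched in a few lines of NumPy. This is a hypothetical illustration, not the authors' code: it assumes each box is stored as four (x, y) vertices listed in order around the box (x is the column, y is the row), allows boxes to be rotated to follow a root, and marks a pixel as root if its integer coordinate lies inside or on the border of any box. The function name `boxes_to_mask` is our own.

```python
import numpy as np


def boxes_to_mask(boxes, height, width):
    """Rasterize rectangular root boxes into a binary mask (1 = root, 0 = soil).

    boxes: iterable of boxes, each given as four (x, y) vertices listed in
    order around the box (clockwise or counter-clockwise); x = column, y = row.
    Boxes are assumed to be convex quadrilaterals (rectangles, possibly rotated).
    """
    mask = np.zeros((height, width), dtype=np.uint8)
    ys, xs = np.mgrid[0:height, 0:width]  # row and column coordinate of every pixel
    for box in boxes:
        v = np.asarray(box, dtype=float)
        inside_pos = np.ones((height, width), dtype=bool)
        inside_neg = np.ones((height, width), dtype=bool)
        for i in range(4):
            x0, y0 = v[i]
            x1, y1 = v[(i + 1) % 4]
            # Cross product of the edge vector with the vector from the edge
            # start to each pixel: its sign tells which side of the edge we are on.
            cross = (x1 - x0) * (ys - y0) - (y1 - y0) * (xs - x0)
            inside_pos &= cross >= 0
            inside_neg &= cross <= 0
        # A pixel is inside a convex box if it is on the same side of all four edges.
        mask[inside_pos | inside_neg] = 1
    return mask
```

Overlapping boxes are merged by taking their union, so several boxes of different widths drawn along one root produce a single mask region whose width follows the root's diameter.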

Pixel-level annotation for switchgrass. Of the 12,537 switchgrass images, 3,912 are paired with pixel-level segmentation masks. Given the fine, narrow switchgrass roots and the size of these images, manually annotating each pixel is extremely time-consuming. To improve the efficiency of the annotation process, two different annotation methods were used: a) technicians annotated superpixels generated by running the Simple Linear Iterative Clustering (SLIC) algorithm (Achanta et al. 2010) on the raw images; and b) technicians refined the segmentation masks predicted by a pre-trained U-net from the raw images. Details can be found in Appendix B.

Dataset Split

For each species, the root images were collected from different locations, dates, tubes, and depths. To ensure that the training, validation, and testing data share a similar data distribution while ensuring that testing data does not contain the same tubes and roots used for training, we split the data into train, validation and test sets based on the tubes.

For each species other than switchgrass, we randomly selected 60% of the tubes for training, 20% for validation, and the remaining 20% for testing. For switchgrass, to ensure confidence in the accuracy of the segmentation masks in the test set, all the images with manually generated ground truth were placed in the test set. The training set was generated by randomly selecting 80% of the tubes with AI-guided annotation, and the images from the remaining tubes formed the validation set. In addition, all the images with only image-level annotations were placed in the training set to support weakly-supervised segmentation. The total numbers of images in the train, validation, and test sets are shown in Table 2.
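A tube-level split like the one described above might look like the sketch below. It assumes the metadata records described in Appendix B (the `image_name` and `tube_num` fields) and a fixed random seed; the helper `split_by_tube`, the rounding rule, and the seed are illustrative assumptions, not the procedure that produced the released split. It is meant to be called once per species sub-collection (and per location, if tube numbers repeat across locations).

```python
import random
from collections import defaultdict


def split_by_tube(records, fractions=(0.6, 0.2, 0.2), seed=0):
    """Assign every image to train/val/test so that no tube spans two sets.

    records: list of metadata dicts with at least "image_name" and "tube_num".
    fractions: (train, val, test) share of tubes; test receives the remainder.
    Returns a dict mapping split name -> list of image names.
    """
    by_tube = defaultdict(list)
    for r in records:
        by_tube[r["tube_num"]].append(r["image_name"])

    tubes = sorted(by_tube)  # sort first so the seeded shuffle is reproducible
    random.Random(seed).shuffle(tubes)
    n_train = round(fractions[0] * len(tubes))
    n_val = round(fractions[1] * len(tubes))
    groups = {
        "train": tubes[:n_train],
        "val": tubes[n_train:n_train + n_val],
        "test": tubes[n_train + n_val:],  # remaining tubes
    }
    # Expand each group of tubes back into the images collected from them.
    return {name: [img for t in ts for img in by_tube[t]] for name, ts in groups.items()}
```

Because whole tubes are assigned to a single set, every image of a given root (at any date or depth) lands in the same split, which keeps test roots unseen during training.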

The dataset could also be split according to other factors, such as dates, depths, and locations, for specific research questions. For example, researchers can split the dataset by date to study how roots change over time. Because the image-level label of each image is paired with its meta-data, the dataset can easily be sorted and split by different attributes.
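As one example of an alternative split, a temporal split can be built directly from the `date` field. This sketch assumes dates are stored as zero-padded Year-Month-Day strings (such as the made-up value "2017-06-01") that `datetime.date.fromisoformat` can parse; the helper name `split_by_date` and the use of a single cutoff date are hypothetical choices.

```python
from datetime import date


def split_by_date(records, cutoff):
    """Separate images collected before `cutoff` from those collected on or after it.

    records: metadata dicts with "image_name" and "date" ("YYYY-MM-DD" assumed).
    cutoff: a datetime.date.
    Returns (earlier_image_names, later_image_names).
    """
    earlier, later = [], []
    for r in records:
        collected = date.fromisoformat(r["date"])
        (earlier if collected < cutoff else later).append(r["image_name"])
    return earlier, later
```

Training on the earlier dates and testing on the later ones asks a model to handle roots at growth stages it has not yet seen, which complements the tube-based split.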

Experiments and Results

The primary proposed use of the PRMI dataset is root segmentation. To generate baseline benchmark results and illustrate the challenges of this dataset, we trained a U-net (Ronneberger, Fischer, and Brox 2015) for supervised learning and IRNet (Ahn, Cho, and Kwak 2019) for weakly-supervised learning on each sub-collection. All models were trained on the training set for 300 epochs using a single Nvidia A100 GPU. For each sub-collection, the model that performed best on the validation set was used to evaluate segmentation performance on the test set. Intersection-over-Union (IoU) and F1 score were used as evaluation metrics. More details about training can be found in Appendix D.
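For reference, both metrics can be computed from pixel-level confusion counts for the root class. The paper does not state whether scores are averaged per image or pooled over all test pixels; this sketch assumes pooling, and the function names are hypothetical.

```python
import numpy as np


def confusion_counts(pred, target):
    """Count true positives, false positives, and false negatives for root pixels."""
    pred = np.asarray(pred, dtype=bool)
    target = np.asarray(target, dtype=bool)
    tp = int(np.count_nonzero(pred & target))
    fp = int(np.count_nonzero(pred & ~target))
    fn = int(np.count_nonzero(~pred & target))
    return tp, fp, fn


def iou_f1(pairs):
    """Pool counts over (prediction, ground truth) mask pairs and score the root class."""
    tp = fp = fn = 0
    for pred, target in pairs:
        a, b, c = confusion_counts(pred, target)
        tp, fp, fn = tp + a, fp + b, fn + c
    denom = tp + fp + fn
    if denom == 0:  # no root pixels predicted or annotated anywhere
        return 1.0, 1.0
    return tp / denom, 2 * tp / (2 * tp + fp + fn)
```

With pooled counts, F1 = 2·IoU/(1 + IoU), and the entries in Table 3 satisfy this relation (e.g., an IoU of 56.0% corresponds to an F1 of about 0.718).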

Table 3 shows the IoU and F1 scores for each sub-collection. In general, the dataset is quite challenging: the supervised model achieved an average IoU of around 35% across all sub-collections. The dataset is even more challenging for the CAM-based weakly-supervised model, since CAM methods focus on the most discriminative parts of an image, which may be complicated soil conditions rather than roots when roots are rare and sparse. In addition, root pixels are vastly outnumbered by soil pixels, so models can easily become biased toward soil features instead of root features. Some species, such as cotton and sunflower, have relatively fewer images containing roots than the other species. Given these challenges, we believe this dataset can help researchers develop more robust and advanced algorithms for automatic root segmentation.

| Species-DPI | U-net IoU | U-net F1 | IRNet IoU | IRNet F1 |
|---|---|---|---|---|
| Cotton-150 | 4.8% | 0.092 | 0.8% | 0.016 |
| Papaya-150 | 56.0% | 0.718 | 14.4% | 0.252 |
| Peanut-150 | 36.8% | 0.538 | 2.6% | 0.055 |
| Peanut-120 | 61.9% | 0.765 | 10.6% | 0.192 |
| Sesame-150 | 20.6% | 0.341 | 4.4% | 0.084 |
| Sesame-120 | 25.9% | 0.411 | 7.2% | 0.134 |
| Sunflower-120 | 29.7% | 0.458 | 2.3% | 0.045 |
| Switchgrass-300 | 40.2% | 0.574 | 2.2% | 0.043 |

Conclusion

In this work, we present PRMI, a large-scale dataset of diverse plant root images captured by MR technology across different species under a variety of conditions. This dataset is well suited for studying supervised/weakly-supervised semantic segmentation, transfer learning, one- or few-shot learning, and data distribution shift. We hope the PRMI dataset brings more attention from the computer vision community to this problem domain and facilitates research on weakly-supervised and transfer-learning methods that can be applied with very limited data and significant changes in the data distribution.

References

- Abràmoff, Magalhães, and Ram (2004) Abràmoff, M. D.; Magalhães, P. J.; and Ram, S. J. 2004. Image processing with ImageJ. Biophotonics international, 11(7): 36–42.

- Achanta et al. (2010) Achanta, R.; Shaji, A.; Smith, K.; Lucchi, A.; Fua, P.; and Süsstrunk, S. 2010. SLIC superpixels. Technical report.

- Ahn, Cho, and Kwak (2019) Ahn, J.; Cho, S.; and Kwak, S. 2019. Weakly supervised learning of instance segmentation with inter-pixel relations. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2209–2218.

- Aidoo et al. (2016) Aidoo, M. K.; Bdolach, E.; Fait, A.; Lazarovitch, N.; and Rachmilevitch, S. 2016. Tolerance to high soil temperature in foxtail millet (Setaria italica L.) is related to shoot and root growth and metabolism. Plant Physiology and Biochemistry, 106: 73–81.

- Alexander, Diez, and Levine (2015) Alexander, J. M.; Diez, J. M.; and Levine, J. M. 2015. Novel competitors shape species’ responses to climate change. Nature, 525(7570): 515–518.

- Armengaud et al. (2009) Armengaud, P.; Zambaux, K.; Hills, A.; Sulpice, R.; Pattison, R. J.; Blatt, M. R.; and Amtmann, A. 2009. EZ-Rhizo: integrated software for the fast and accurate measurement of root system architecture. The Plant Journal, 57(5): 945–956.

- Badrinarayanan, Kendall, and Cipolla (2015) Badrinarayanan, V.; Kendall, A.; and Cipolla, R. 2015. SegNet: A deep convolutional encoder-decoder architecture for image segmentation. arXiv preprint arXiv:1511.00561.

- Bauhus and Messier (1999) Bauhus, J.; and Messier, C. 1999. Evaluation of fine root length and diameter measurements obtained using RHIZO image analysis. Agronomy Journal, 91(1): 142–147.

- Cai et al. (2015) Cai, J.; Zeng, Z.; Connor, J. N.; Huang, C. Y.; Melino, V.; Kumar, P.; and Miklavcic, S. J. 2015. RootGraph: a graphic optimization tool for automated image analysis of plant roots. Journal of experimental botany, 66(21): 6551–6562.

- Chen et al. (2018a) Chen, J.; Fan, Y.; Wang, T.; Zhang, C.; Qiu, Z.; and He, Y. 2018a. Automatic Segmentation and Counting of Aphid Nymphs on Leaves Using Convolutional Neural Networks. Agronomy, 8(8): 129.

- Chen et al. (2018b) Chen, L.-C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; and Yuille, A. L. 2018b. DeepLab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected CRFs. IEEE transactions on pattern analysis and machine intelligence, 40(4): 834–848.

- Das et al. (2015) Das, A.; Schneider, H.; Burridge, J.; Ascanio, A. K. M.; Wojciechowski, T.; Topp, C. N.; Lynch, J. P.; Weitz, J. S.; and Bucksch, A. 2015. Digital imaging of root traits (DIRT): a high-throughput computing and collaboration platform for field-based root phenomics. Plant methods, 11(1): 1–12.

- Ettinger and HilleRisLambers (2017) Ettinger, A.; and HilleRisLambers, J. 2017. Competition and facilitation may lead to asymmetric range shift dynamics with climate change. Global change biology, 23(9): 3921–3933.

- Galkovskyi et al. (2012) Galkovskyi, T.; Mileyko, Y.; Bucksch, A.; Moore, B.; Symonova, O.; Price, C. A.; Topp, C. N.; Iyer-Pascuzzi, A. S.; Zurek, P. R.; Fang, S.; et al. 2012. GiA Roots: software for the high throughput analysis of plant root system architecture. BMC plant biology, 12(1): 116.

- Gregory (2008) Gregory, P. J. 2008. Plant roots: growth, activity and interactions with the soil. John Wiley & Sons.

- Haralick, Sternberg, and Zhuang (1987) Haralick, R. M.; Sternberg, S. R.; and Zhuang, X. 1987. Image analysis using mathematical morphology. IEEE transactions on pattern analysis and machine intelligence, (4): 532–550.

- Johnson et al. (2001) Johnson, M. G.; Tingey, D. T.; Phillips, D. L.; and Storm, M. J. 2001. Advancing fine root research with minirhizotrons. Environmental and Experimental Botany, 45(3): 263–289.

- Le Bot et al. (2010) Le Bot, J.; Serra, V.; Fabre, J.; Draye, X.; Adamowicz, S.; and Pagès, L. 2010. DART: a software to analyse root system architecture and development from captured images. Plant and Soil, 326(1): 261–273.

- Lobregt, Verbeek, and Groen (1980) Lobregt, S.; Verbeek, P. W.; and Groen, F. C. 1980. Three-dimensional skeletonization: principle and algorithm. IEEE Transactions on pattern analysis and machine intelligence, (1): 75–77.

- Long, Shelhamer, and Darrell (2015) Long, J.; Shelhamer, E.; and Darrell, T. 2015. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE conference on computer vision and pattern recognition, 3431–3440.

- Majdi (1996) Majdi, H. 1996. Root sampling methods-applications and limitations of the minirhizotron technique. Plant and Soil, 185(2): 255–258.

- Pierret et al. (2013) Pierret, A.; Gonkhamdee, S.; Jourdan, C.; and Maeght, J.-L. 2013. IJ_Rhizo: an open-source software to measure scanned images of root samples. Plant and soil, 373(1): 531–539.

- Pieruschka and Schurr (2019) Pieruschka, R.; and Schurr, U. 2019. Plant phenotyping: past, present, and future. Plant Phenomics, 2019.

- Ronneberger, Fischer, and Brox (2015) Ronneberger, O.; Fischer, P.; and Brox, T. 2015. U-net: Convolutional networks for biomedical image segmentation. In International Conference on Medical image computing and computer-assisted intervention, 234–241. Springer.

- Smith et al. (2020) Smith, A. G.; Petersen, J.; Selvan, R.; and Rasmussen, C. R. 2020. Segmentation of roots in soil with U-Net. Plant Methods, 16(1): 1–15.

- Trachsel et al. (2011) Trachsel, S.; Kaeppler, S. M.; Brown, K. M.; and Lynch, J. P. 2011. Shovelomics: high throughput phenotyping of maize (Zea mays L.) root architecture in the field. Plant and soil, 341(1): 75–87.

- Van Noordwijk and Floris (1979) Van Noordwijk, M.; and Floris, J. 1979. Loss of dry weight during washing and storage of root samples. Plant and Soil, 239–243.

- Wang et al. (2019) Wang, T.; Rostamza, M.; Song, Z.; Wang, L.; McNickle, G.; Iyer-Pascuzzi, A. S.; Qiu, Z.; and Jin, J. 2019. SegRoot: a high throughput segmentation method for root image analysis. Computers and Electronics in Agriculture, 162: 845–854.

- Wasson et al. (2012) Wasson, A. P.; Richards, R.; Chatrath, R.; Misra, S.; Prasad, S. S.; Rebetzke, G.; Kirkegaard, J.; Christopher, J.; and Watt, M. 2012. Traits and selection strategies to improve root systems and water uptake in water-limited wheat crops. Journal of experimental botany, 63(9): 3485–3498.

- Xu et al. (2020) Xu, W.; Yu, G.; Zare, A.; Zurweller, B.; Rowland, D. L.; Reyes-Cabrera, J.; Fritschi, F. B.; Matamala, R.; and Juenger, T. E. 2020. Overcoming small minirhizotron datasets using transfer learning. Computers and Electronics in Agriculture, 175: 105466.

- Yasrab et al. (2019) Yasrab, R.; Atkinson, J. A.; Wells, D. M.; French, A. P.; Pridmore, T. P.; and Pound, M. P. 2019. RootNav 2.0: Deep learning for automatic navigation of complex plant root architectures. GigaScience, 8(11): giz123.

- Yasrab et al. (2020) Yasrab, R.; Pound, M.; French, A.; and Pridmore, T. 2020. RootNet: A Convolutional Neural Networks for Complex Plant Root Phenotyping from High-Definition Datasets. bioRxiv.

- Yu et al. (2020a) Yu, G.; Zare, A.; Sheng, H.; Matamala, R.; Reyes-Cabrera, J.; Fritschi, F. B.; and Juenger, T. E. 2020a. Root identification in minirhizotron imagery with multiple instance learning. Machine Vision and Applications, 31(6): 1–13.

- Yu et al. (2020b) Yu, G.; Zare, A.; Xu, W.; Matamala, R.; Reyes-Cabrera, J.; Fritschi, F. B.; and Juenger, T. E. 2020b. Weakly Supervised Minirhizotron Image Segmentation with MIL-CAM. In European Conference on Computer Vision, 433–449. Springer.

- Zeng, Birchfield, and Wells (2006) Zeng, G.; Birchfield, S. T.; and Wells, C. E. 2006. Detecting and measuring fine roots in minirhizotron images using matched filtering and local entropy thresholding. Machine Vision and Applications, 17(4): 265–278.

- Zhu et al. (2018) Zhu, Y.; Aoun, M.; Krijn, M.; Vanschoren, J.; and Campus, H. T. 2018. Data augmentation using conditional generative adversarial networks for leaf counting in arabidopsis plants. Computer Vision Problems in Plant Phenotyping (CVPPP2018).

Appendix A: Image Collection

Although all the root images were collected with MR technology, the specifics and characteristics of the MR images differ for each sub-collection. For each species, root images were captured from multiple plants and multiple MR tubes across a variety of depths, over different time periods, and under varying soil and environmental conditions. For each species, there are images containing plant roots as well as images containing only soil and background. To illustrate the variety of root images and soil backgrounds, example images for each species are shown in Figure 3. The top three rows show images containing roots, and the bottom three rows show non-root images with different soil types and other background components (e.g., water bubbles and condensation).

Unlike those of the other species, the raw switchgrass images were captured by a high-resolution camera with a resolution of . Because these images are much larger than those in the other sub-collections, we divided each image into 15 non-overlapping sub-images of size . The row and column indices of each sub-image are saved in both the image names and the image-level labels, so that the original high-resolution images can be reconstructed if needed. After division, the switchgrass sub-collection has 3,465 sub-images with roots and 9,072 sub-images without roots, for a total of 12,537 sub-images.
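The tiling and reconstruction described above can be sketched with NumPy slicing. How the 15 tiles are arranged into rows and columns is not stated here, so the 3-by-5 grid in the usage note is purely an assumption, as are the zero-based indices and the helper names `tile_image` and `reassemble`.

```python
import numpy as np


def tile_image(image, n_rows, n_cols):
    """Split an image into n_rows x n_cols equal, non-overlapping tiles.

    Returns a dict mapping (row_idx, col_idx) -> tile (zero-based indices).
    Assumes the image height and width are divisible by n_rows and n_cols.
    """
    h, w = image.shape[:2]
    if h % n_rows or w % n_cols:
        raise ValueError("image size must be divisible by the tile grid")
    th, tw = h // n_rows, w // n_cols
    return {
        (r, c): image[r * th:(r + 1) * th, c * tw:(c + 1) * tw]
        for r in range(n_rows)
        for c in range(n_cols)
    }


def reassemble(tiles, n_rows, n_cols):
    """Rebuild the full image from tiles keyed by (row_idx, col_idx)."""
    rows = [
        np.concatenate([tiles[(r, c)] for c in range(n_cols)], axis=1)
        for r in range(n_rows)
    ]
    return np.concatenate(rows, axis=0)
```

For example, `reassemble(tile_image(img, 3, 5), 3, 5)` returns an array equal to `img`, which is the property that storing row and column indices is meant to guarantee.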

In addition, all images other than switchgrass were saved in JPEG format and named using the following convention: Species_tube_depth_date_time_location_DPI. All switchgrass images were saved in PNG format and named using the following convention: Species_tube_depth_date_time_location_DPI_row-index_column-index.
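Because the naming convention encodes the collection metadata, a file name can be split back into its fields. This sketch assumes that no individual field contains an underscore; `parse_image_name` is a hypothetical helper, and the file name in the usage note is made up rather than taken from PRMI.

```python
import os

BASE_FIELDS = ["species", "tube", "depth", "date", "time", "location", "dpi"]


def parse_image_name(filename):
    """Split a file name following the convention above into named fields.

    Switchgrass names carry two extra fields: the row and column index of the
    sub-image. Assumes no field itself contains an underscore.
    """
    stem, ext = os.path.splitext(os.path.basename(filename))
    parts = stem.split("_")
    if len(parts) == len(BASE_FIELDS):
        keys = BASE_FIELDS
    elif len(parts) == len(BASE_FIELDS) + 2:
        keys = BASE_FIELDS + ["row_idx", "col_idx"]
    else:
        raise ValueError(f"unexpected number of fields in {filename!r}")
    fields = dict(zip(keys, parts))
    fields["format"] = ext.lstrip(".").lower()  # e.g. "jpg" or "png"
    return fields
```

For the made-up name `Peanut_T12_L3_2017-06-01_1015_LOC1_150.jpg`, the function returns `"150"` for `dpi` and `"jpg"` for `format`; all values stay strings, matching the string-typed fields in the annotation file.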

Appendix B: Image Annotation

Image-level Annotation

We provided meta-data for each image including crop species, collection location, MR tube number, collection time, collection depth, and sensor DPI. The image-level annotations are provided in a JavaScript Object Notation (JSON) file with the following format:

1. image_name (str): full name of the MR image file, including the file extension
2. crop (str): plant species of the MR image
3. has_root (int): flag indicating whether the image contains roots (0: no roots, 1: contains roots)
4. binary_mask (str): indicates whether the image has a corresponding pixel-level root segmentation mask. If so, the value is the full name of the mask file; otherwise, the value is ‘N/A’.
5. location (str): abbreviation of the location where the image was collected
6. tube_num (str): MR tube number where the image was collected
7. date (str): date (Year-Month-Day) when the image was collected
8. depth (str): depth at which the image was collected
9. dpi (str): DPI of the collected image

In addition to the above, switchgrass MR annotations also contain the following:

10. row_idx (str): the row index of the image in the original spanning image
11. col_idx (str): the column index of the image in the original spanning image
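A minimal loader for these annotations is sketched below. It assumes the JSON file holds a list of objects with the fields above (the top-level structure of the released file may differ); it converts `has_root` to a boolean and the ‘N/A’ placeholder in `binary_mask` to `None`. The function names are hypothetical.

```python
import json


def parse_annotations(json_text):
    """Parse image-level annotations into a list of dicts.

    Assumes the JSON document is a list of objects with the fields listed above.
    """
    records = []
    for entry in json.loads(json_text):
        rec = dict(entry)
        rec["has_root"] = bool(int(rec["has_root"]))
        if rec.get("binary_mask") == "N/A":
            rec["binary_mask"] = None  # no pixel-level mask for this image
        records.append(rec)
    return records


def with_pixel_masks(records):
    """Keep only images that have a pixel-level segmentation mask."""
    return [r for r in records if r["binary_mask"] is not None]
```

To read a file from disk, pass its contents, for example `with open(path) as f: records = parse_annotations(f.read())`. The resulting records can then be filtered, sorted, or split by any metadata field.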

Pixel-level Annotation for Switchgrass

The switchgrass dataset can be divided into three components: (a) 8625 images only paired with image-level annotation (no pixel-level annotation masks); (b) 600 images paired with image-level and pixel-level annotation generated manually by technicians; and (c) 3312 images paired with image-level and pixel-level annotation which were generated by technicians refining the segmentation results of a pre-trained U-net (Ronneberger, Fischer, and Brox 2015). The annotation methods for (b) and (c) are described below:

(b) Manual annotation by technicians for switchgrass MR images: The raw switchgrass MR spanning images each have a size of . Given the fine, narrow switchgrass roots and the size of these images, manually annotating each pixel is extremely time-consuming. To improve the efficiency of the annotation process, we ran the Simple Linear Iterative Clustering (SLIC) algorithm (Achanta et al. 2010) on the raw images to segment them into superpixels by clustering spatially contiguous pixels into groups according to color. The technicians then annotated the imagery at the superpixel level, so that all the pixels belonging to the same superpixel share the same label. Fine roots are challenging to delineate accurately, even with SLIC over-segmentation, because of their small size and frequent similarity in color to the background. Thus, after annotating images at the superpixel level, the technicians refined the masks by manually fine-tuning the labels at the pixel level for missing or inaccurate roots and root edges. The dataset contains 600 switchgrass MR root images annotated using this method. Examples of these switchgrass images and the corresponding manually annotated masks are shown in Figure 5 (a) and (b), respectively.
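The superpixel-level labeling step amounts to a lookup: every pixel takes the label of the superpixel it belongs to. This sketch assumes a precomputed integer label map, such as the one returned by `skimage.segmentation.slic`, and a set of superpixel ids that a technician marked as root; the helper name is hypothetical, and the subsequent pixel-level fine-tuning is not modeled.

```python
import numpy as np


def superpixel_labels_to_mask(segments, root_ids):
    """Expand superpixel-level labels to a pixel-level mask.

    segments: 2-D integer array giving the superpixel id of each pixel.
    root_ids: ids of the superpixels marked as root.
    Every pixel inherits its superpixel's label (1 = root, 0 = soil).
    """
    return np.isin(segments, list(root_ids)).astype(np.uint8)
```

Clicking one superpixel labels every pixel in it at once, which is the source of the speed-up; it is also why fine roots narrower than a superpixel still needed pixel-level correction afterwards.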

(c) AI prediction aided annotation for switchgrass MR images: To further improve the annotation efficiency, we also explored the possibility of using the prediction results of a pre-trained U-net. Specifically, we used a U-net model pre-trained on a large peanut root dataset and fine-tuned on a limited switchgrass root dataset (Xu et al. 2020) to generate predicted segmentation masks for cropped switchgrass images. Then, technicians refined the predicted segmentation masks by manually adding or deleting the roots on the pixel-level. There are 3312 switchgrass root MR images annotated using this method. Examples of a spanning switchgrass image, the predicted segmentation mask generated by U-net, and technician refined U-net-predicted mask are shown in Figure 5 (c), (d) and (e), respectively.
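The AI-aided workflow (binarize a U-net prediction, then let a technician add or delete root pixels) might be expressed as follows. The 0.5 threshold, the representation of technician edits as boolean `add`/`delete` masks, and the helper names are assumptions made for illustration only.

```python
import numpy as np


def draft_mask_from_prediction(prob, threshold=0.5):
    """Binarize a U-net root-probability map into a draft mask (1 = root)."""
    return (np.asarray(prob) >= threshold).astype(np.uint8)


def refine_mask(draft, add=None, delete=None):
    """Apply technician edits without modifying the draft.

    add: boolean mask of pixels to mark as root.
    delete: boolean mask of pixels to mark as soil (applied after `add`).
    """
    mask = draft.copy()
    if add is not None:
        mask[np.asarray(add, dtype=bool)] = 1
    if delete is not None:
        mask[np.asarray(delete, dtype=bool)] = 0
    return mask
```

Keeping the draft unchanged makes it possible to compare the U-net prediction with the refined mask afterwards, for instance to see how much correction each image needed.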

Appendix C: Intended Use of the Dataset

Supervised root segmentation. Semantic segmentation of plant roots from the background is the first step in any further MR analysis. This task generally requires training images with pixel-level annotations. Several models (e.g., fully convolutional networks (Long, Shelhamer, and Darrell 2015), SegNet (Badrinarayanan, Kendall, and Cipolla 2015), U-net (Ronneberger, Fischer, and Brox 2015), and DeepLab (Chen et al. 2018b)) have been applied successfully to the segmentation of plant roots and leaves (Chen et al. 2018a; Zhu et al. 2018; Yu et al. 2020b, a). By providing pixel-level labeled training data, this dataset is intended to support research on more advanced models and algorithms for plant root segmentation.
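With pixel-level ground truth available, a predicted root mask can be scored pixel by pixel. The sketch below computes common binary-segmentation scores (precision, recall, F1, IoU); the choice of these particular metrics is illustrative and is not taken from this section.

```python
import numpy as np


def segmentation_scores(pred, target, eps=1e-8):
    """Pixel-wise scores for binary root masks (1 = root, 0 = soil).

    eps guards against division by zero when a mask has no root pixels.
    """
    pred = np.asarray(pred).astype(bool)
    target = np.asarray(target).astype(bool)
    tp = np.logical_and(pred, target).sum()    # root predicted as root
    fp = np.logical_and(pred, ~target).sum()   # soil predicted as root
    fn = np.logical_and(~pred, target).sum()   # root predicted as soil
    precision = tp / (tp + fp + eps)
    recall = tp / (tp + fn + eps)
    f1 = 2 * precision * recall / (precision + recall + eps)
    iou = tp / (tp + fp + fn + eps)
    return {"precision": float(precision), "recall": float(recall),
            "f1": float(f1), "iou": float(iou)}
```

Because root pixels are usually a small fraction of an MR image, scores computed on the root class are more informative than overall pixel accuracy, which a model can inflate by predicting soil everywhere.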

Weakly-supervised root segmentation. Generating pixel-level ground-truth masks for MR imagery is extremely time-consuming and tedious. Progress in weakly-supervised semantic segmentation, in which only image-level labels are used to train a model, would therefore greatly benefit MR segmentation efforts. Instead of requiring substantial pixel-level labeling for every new field and plant species (with varying soil and root properties), images (or sub-images) could be labeled far more efficiently with image-level labels.
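To compare weakly supervised and fully supervised training on the same data, one option is to simulate sub-image labels from existing pixel masks. The sketch below splits a mask into non-overlapping square tiles and marks a tile as "root" if it contains at least a minimum number of root pixels. The tile size, the min_root_pixels parameter, and the function name are hypothetical; in a real weakly labeled setting the tile labels would come from annotators rather than from masks.

```python
import numpy as np


def tile_labels(mask, tile, min_root_pixels=1):
    """Derive image-level labels for non-overlapping tile x tile sub-images.

    mask:            (H, W) binary root mask (1 = root).
    tile:            side length of each square sub-image, in pixels.
    min_root_pixels: hypothetical minimum root area for a positive label.
    Returns {(row, col): label}, keyed by each tile's top-left corner.
    Partial tiles at the right and bottom borders are skipped.
    """
    h, w = mask.shape
    labels = {}
    for r in range(0, h - tile + 1, tile):
        for c in range(0, w - tile + 1, tile):
            patch = mask[r:r + tile, c:c + tile]
            labels[(r, c)] = int(patch.sum() >= min_root_pixels)
    return labels
```

A yes/no decision per tile is far cheaper for an annotator than tracing root outlines, which is the efficiency argument made above.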

Segmentation across location and species. Several MR segmentation approaches have been developed in the literature. However, each of them must be re-trained to achieve adequate performance on a new plant species or a new location with different soil properties. One use of this dataset is to develop approaches that can be transferred effectively and efficiently to new locations and species.
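One way to measure cross-species (or cross-location) generalization is a leave-one-group-out protocol: train on all species but one and test on the held-out species. This minimal sketch assumes each image is described by a record dict with a "species" or "location" field; those field names and the function name are hypothetical and do not describe the dataset's actual file layout.

```python
from collections import defaultdict


def leave_one_group_out(records, key="species"):
    """Yield (held_out, train, test) splits, holding out each group once.

    records: iterable of dicts describing images (hypothetical schema).
    key:     grouping field, e.g. "species" or "location".
    """
    groups = defaultdict(list)
    for rec in records:
        groups[rec[key]].append(rec)
    # Sorted for a deterministic order of splits.
    for held_out in sorted(groups):
        train = [r for g, recs in groups.items() if g != held_out for r in recs]
        yield held_out, train, groups[held_out]
```

Comparing a model's held-out score with its in-group score, and with the score after fine-tuning on a small amount of held-out data, quantifies how much re-training a new species or location actually requires.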

Root feature analysis. For each species, we collected images at different depths and locations over a period of time to capture the growth of the plant root system. The collection location, depth, and time are saved in the image-level label of each image. Root features such as root color, root thickness, root length, root surface area, and the number of fine roots can be extracted from the root imagery. For a given location and depth, the transition from young roots to old roots was recorded over time, so the dataset can be used for time-series analysis of these root features, which is valuable for identifying the relationship between root age and root features. Depth information plays an important role in the structure of the whole root system, and the dataset can also be used for root alignment applications that reconstruct the root system by combining imagery from different depths. Finally, because the dataset covers multiple species, it can also be used to compare and characterize root systems across species.
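A simple time-series feature is the projected root area per image, tracked for each fixed location and depth. The sketch below groups binary masks by (location, depth) and returns root pixel counts in date order. The record fields ("location", "depth", "date", "mask") are a hypothetical schema, and area is left in pixels because converting to physical units needs the image resolution. Features such as root length would additionally require skeletonizing the mask (for example with skimage.morphology.skeletonize), which is not shown.

```python
from collections import defaultdict
from datetime import date

import numpy as np


def root_area_series(records):
    """Root area (pixel count) over time for each (location, depth) pair.

    records: iterable of dicts with "location", "depth", "date", and a
             binary "mask" array (hypothetical field names).
    Returns {(location, depth): [(date, area), ...]} sorted by date.
    """
    series = defaultdict(list)
    for rec in records:
        area = int(np.count_nonzero(rec["mask"]))
        series[(rec["location"], rec["depth"])].append((rec["date"], area))
    return {k: sorted(v) for k, v in series.items()}


if __name__ == "__main__":
    demo = [
        {"location": "T1", "depth": 3, "date": date(2020, 6, 2),
         "mask": np.array([[1, 1], [0, 1]])},
        {"location": "T1", "depth": 3, "date": date(2020, 5, 1),
         "mask": np.array([[1, 0], [0, 0]])},
    ]
    print(root_area_series(demo))
```

Keeping location and depth fixed isolates changes over time from spatial variation, which is what makes the age-versus-feature comparison described above meaningful.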

Appendix D: Training Setup

We used a U-net-based model (Xu et al. 2020) and IRNet (Ahn, Cho, and Kwak 2019) to benchmark the dataset for supervised and weakly-supervised learning, respectively. The U-net model has five convolutional blocks in the encoder and four transposed-convolution blocks in the decoder; details of the architecture can be found in (Xu et al. 2020). The Adamax optimizer was used with a learning rate of 1e-4 and a weight decay of 5e-4, and binary cross-entropy loss was computed between the predicted segmentation masks and the ground-truth masks. The models were trained on the suggested training set for 300 epochs. For weakly-supervised learning with IRNet, we first trained the CAM step (ResNet50 backbone) on the training set using image-level labels to classify root images versus non-root images, following the training strategies in (Ahn, Cho, and Kwak 2019). Pseudo ground-truth masks were then generated for both the training and validation sets by thresholding the attention map (at 0.9, as in (Yu et al. 2020b)). The U-net model was then trained on these pseudo binary masks using the same setup as for supervised learning. The model with the smallest error on the validation set was used to evaluate segmentation performance on the test set.
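The weakly supervised pipeline above reduces to a few array operations: form an attention map from the classifier, threshold it into a pseudo mask, train with binary cross-entropy, and keep the checkpoint with the lowest validation error. The NumPy sketch below illustrates each step under stated assumptions: the attention map is taken to be a standard class activation map (weighted sum of the last convolutional feature maps), rectified and divided by its maximum so that the 0.9 threshold applies on a [0, 1] scale; IRNet's own pipeline additionally refines CAMs with inter-pixel relations, which is not shown. Function names are illustrative.

```python
import numpy as np


def class_activation_map(features, weights):
    """Standard CAM for the 'root' class, scaled to [0, 1].

    features: (K, H, W) feature maps from the last convolutional layer.
    weights:  (K,) classifier weights of the 'root' class.
    """
    cam = np.tensordot(weights, features, axes=1)  # (H, W) weighted sum
    cam = np.maximum(cam, 0.0)                     # keep positive evidence
    peak = cam.max()
    # Max-normalization is an assumption about how the map is scaled.
    return cam / peak if peak > 0 else cam


def pseudo_mask(cam, threshold=0.9):
    """Binary pseudo ground truth from a normalized attention map."""
    return (cam >= threshold).astype(np.uint8)


def bce_loss(prob, target, eps=1e-7):
    """Mean binary cross-entropy between predicted probabilities and a mask."""
    prob = np.clip(prob, eps, 1 - eps)  # avoid log(0)
    target = np.asarray(target, dtype=float)
    return float(-np.mean(target * np.log(prob)
                          + (1 - target) * np.log(1 - prob)))


def best_epoch(val_errors):
    """Index of the epoch with the smallest validation error."""
    return int(np.argmin(val_errors))
```

If the models were implemented in PyTorch (not stated here), the optimizer settings would correspond to torch.optim.Adamax(model.parameters(), lr=1e-4, weight_decay=5e-4), and the loss to torch.nn.BCELoss applied to sigmoid outputs. A high threshold such as 0.9 keeps only the most confident activations, trading recall of thin roots for cleaner pseudo labels.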