PRIORITY-BASED POST-PROCESSING BIAS MITIGATION USING UNFAIRNESS QUOTIENT

Abstract

Previous post-processing bias mitigation algorithms on both group and individual fairness don’t work on regression models and datasets with multi-class numerical labels. We propose a priority-based post-processing bias mitigation on both group and individual fairness with the notion that similar individuals should get similar outcomes irrespective of socio-economic factors and more the unfairness, more the injustice. We establish this proposition by a case study on tariff allotment in a smart grid. Our novel framework establishes it by using a user segmentation algorithm to capture the consumption strategy better. This process ensures priority-based fair pricing for group and individual facing the maximum injustice. It upholds the notion of fair tariff allotment to the entire population taken into consideration without modifying the in-built process for tariff calculation. We also validate our method and show superior performance to previous work on a real-world dataset in criminal sentencing.

Index Terms: Priority-based, Post-processing fairness, Group fairness, Individual fairness, Unfairness Quotient

1 Introduction

Over the past few years, there has been a rapid development in the domain of machine learning and its application. As a consequence of that, machine learning systems are now being used as a tool to make high-stakes decisions, which could affect an individual’s daily life. However, it has been observed that these machine learning systems sometimes produce an outcome that is unfair or discriminatory for minorities, historically disadvantaged populations, and other groups. For example, in COMPAS [dressel2018accuracy], the algorithm used for recidivism prediction produces a much higher false-positive rate for black people than white people, therefore being discriminatory for black people.

Bias creeps into the machine learning model because of many reasons. Skewed training sample, sample size disparity, and proxies are a few of the many reasons which lead the system to be unfair towards one group. In general, the assumption of one size fits all policy lead to discrimination among groups. There are two central notions of fairness in decision making: individual fairness and group fairness [8682620]. While individual fairness, in a broader sense, requires similar individuals should be treated similarly whereas group fairness seeks for some statistical measure to be equal among group defined by protected attributes (such as age, gender, race, and religion). Disparate impact (DI) is a standard measure for group fairness.

Pre-processing, in-processing, and post-processing are three stages for performing debiasing in the machine learning model. Pre-processing involves transforming the feature space into another feature space which, as a whole, is independent of the protected attribute. The in-training algorithm adds a regularization term in the objective function of the model to achieve independence from a protected attribute. While in the post-processing algorithm, the goal is to edit the outcome to achieve fairness. Several works have been done on price fairness in a smart grid. In [maestre2018reinforcement], the author has used Q-Learning with neural network approximation to assign the right price to the individual of different groups. In [han2014impact], the author shows a strong correlation between the socio-economic factor and the load consumption pattern. In this paper, we first propose a tariff allotment policy. The electricity load forecasting task is a time-series forecasting problem. SVR has been found to perform better for time-series forecasting [muller1997predicting]. We use SVR to predict day-ahead aggregated load for each of the consumers for the entire day. The reason for day-ahead allocation being that it makes the consumer aware of the power consumption. The power market prefers tariff slab or bucketing for pricing [sarker2014smart]. For creating slabs, we use the Gaussian bucketing method to bin the predicted value and assign respective tariff to individuals.

An application-independent priority-based post-processing debiasing algorithm with the notion of both group and individual fairness is used to remove the bias associated with the previous step that uses machine learning as an architecture. In most real scenarios, we won’t have access to the internals of models. Therefore it is evident that the post-processing debiasing algorithm with the notion of both group and individual fairness suits the most [8682620]. The trade-off between Bias and Accuracy [feldman2015certifying] limits the number of individual samples allowed to be debiased. This brings the requirement of individuals facing more unfairness to be debiased in priority [berk2017convex]. Using this approach, we can achieve fairness faster both in time and number of samples whose label needs to be changed to achieve fairness. To the best of our knowledge, all existing post-processing algorithms don’t work on priority-based debiasing [8682620, KamiranKZ2012, HardtPS2016, PleissRWKW2017, CanettiCDRSS2018]. The starting point for our proposed approach is the individual bias detector of [aggarwal2019black], which finds samples whose model prediction changes when only protected attributes change and calculate Unfairness Quotient, which is used to identify the priority order of the samples.

We have two major contributions to this work: First, an application-independent priority-based post-processing debiasing algorithm that improves both individual and group fairness. Second, a case study to showcase a fair tariff allocation strategy which charges a similar price to an individual with similar load characteristics irrespective of socio-economic factors.

Compared to the randomized debiasing method used in [8682620], we have superior performance. We validate our proposition on a real-world dataset in criminal sentencing [sentencing]. The remainder of the paper is organized as follows. First, we provide details on the tariff allocation policy using [ISSDA] in Sec.3. Next, we propose a priority-based post-processing debiasing algorithm in Sec.4 with results on real-world datasets [ISSDA] [sentencing] in Sec.5. We have showcased the comparison result with [8682620]. Finally, we conclude the paper in Sec.6.

2 Fairness

Before proposing a priority-based post-processing debiasing algorithm, we first introduce the working definitions of two important notions of fairness i.e. group and individual fairness. Consider a supervised classification problem with features , protected attributes , and labels . We are given a set of training samples and would like to learn a classifier . For ease of explanation, we will only consider a scalar binary protected attribute, i.e. , and a binary classification problem, i.e. . The value is set to correspond to the privileged / majority group (e.g. male as in this application) and to unprivileged / minority group (e.g. female). The value is set to correspond to a favorable outcome (e.g. receiving a loan or not being arrested) and to an unfavorable outcome.

2.1 Group Fairness

Definition 1

We define group fairness in terms of disparate impact [feldman2015certifying] as follows. There is disparate impact if

| (1) |

is less than or greater than , where a common value of is 0.1 or 0.2. In our method, the value of is 0.1.

In our designed scheme, group fairness implies that the prediction for an individual across different groups should be almost equiprobable.

2.2 Individual Fairness

Definition 2

From the data, a given sample has individual bias if .

In our designed scheme, individual fairness confirms that similar individuals should be treated similarly i.e. prediction allotted to a particular individual should not be sensitive to the protected attributes of the individual [8682620].

3 Case study: Tariff Allotment

3.1 Dataset and Learning process:

For the evaluation purpose, The CER ISSDA dataset [ISSDA] is used which was obtained by the Irish Commission for Energy Regulation (CER) for the purpose to analyze the impact on consumer’s electricity consumption. It contains half-hourly load consumption data points of around 5000 Irish households and SMEs. In addition to the load data, the dataset also includes some survey questionnaire which describes the socio-economic condition of the consumer. The inputs features are past load values, temperature, calendar details, and socio-economic factors. Our day-ahead load forecasting algorithm uses Adaboost regressor [scikit-learn] with the base learner as support vector regression (SVR). Adaboost regressor is a meta-estimator that begins by fitting the base estimator which in this case is SVR. We use Root mean squared error (RMSE) calculation to evaluate the model (Adaboost+SVR) and found to be better performing with a value of 8.3 against 10.54 in the base estimator case.

3.2 Allotment Policy:

We are proposing a day-ahead tariff allocation policy to assign a tariff band or slab to the individual [sarker2014smart]. The role of tariff bands is that all the individuals who are assigned to the same tariff band are charged the same amount. To distinguish the consumer, we use the Gaussian bucketing technique. The reason behind choosing this method to represent tariff bands was that the probability distribution of the dataset is Gaussian in nature. Assume the prediction from model be , we calculate the mean and the standard deviation and assign all the individual same tariff whose predicted value lies in . The algorithm 1 is used to assign tariff band. The input to algorithm is predicted load () while output is tagged tariff band (). The time complexity of the algorithm to assign tariff to each individual is where is the number of individual in the system.

4 Priority-based Post-processing Mitigation

The policy described in the previous section doesn’t take into account the biased behavior of the model concerning the protected attributes known at run-time i.e. impact of socio-economic factors. Before we start discussing priority-based post-processing methodology for removing group and individual biases concerning the protected attribute (age, race, and gender), one may argue that the debiasing phase would not be required if during model training we would have removed these sensitive attributes as features. However, this method poses this weakness because the non-sensitive attribute can behave as a proxy for the sensitive attribute that may not be visible at first [kusner2017counterfactual]. Therefore a post-processing debiasing scheme is proposed. Another advantage which post-processing debiasing process provides is that it can be applied to any black-box model.

Bias and Accuracy always have a trade-off [feldman2015certifying]. Post-processing individual bias mitigation may result in drop in accuracy. While performing the debiasing process, we always have to consider a bandwidth of accuracy loss allowed and thereby limiting the number of individuals allowed to be debiased. Hence, we are using group discrimination metric (Disparate Impact) as a threshold to limit the number of individual samples to be debiased. We terminate the debiasing process once the fair threshold is reached or it gets terminated by itself [8682620]. We define Unfairness Quotient as the difference between the actual model prediction and the prediction after perturbing. It signifies the amount of unfairness associated. Priority-based debiasing is needed to ensure priority-based justice to the individual facing maximum unfairness. This allows individuals facing more bias to be debiased in priority. This algorithm can be implemented on N-nary and continuous-valued protected attributes (shown in Figure 1) since they can always be categorized into privileged and unprivileged i.e. binary situation.

4.1 Individual Bias detection and Unfairness Scoring

Consider the model which comprises prediction followed by bucketing. To determine whether the sample , where is the sensitive attributes and are the non-sensitive attributes, has individual bias associated with it, we perturb the sensitive attribute to . If is different from , we add this sample to individual biased sample collection.

Definition 3

For each sample , we calculate Unfairness Quotient . Formally, Unfairness Quotient is defined as the difference between the actual model prediction and the prediction after perturbing.i.e

| (2) |

The Unfairness Quotient signifies the amount of bias associated with that sample, i.e. more the value, more the injustice and hence higher the priority during debiasing.

4.2 Overall Algorithm

The priority-based bias mitigation algorithm is applied after the model prediction and band allotment for each sample. For each sample, in the decreasing order of Unfairness Quotient, we update the value of to until the value of DI is less than . The proposed algorithm is summarized in Algorithm 2.

5 Empirical Results

We evaluated our proposed algorithm on the subset of the CER ISSDA dataset described in subsection 3.1 for Jan 2010. We started by training an Adaboost + SVR model on the first 20 days sample to predict the power consumption by the individual followed by the Gaussian bucketing for tariff band allocation. We empirically verified the distribution between average power consumption and the number of individuals and found it to be Gaussian with mean 32.24 and variance 94.67. The training samples contain two protected attributes i.e. Gender and Age concerning which model can have group as well as individual unfairness. Since our proposed priority-based post-processing algorithm is application-independent, we verified the effectiveness of the algorithm on the tariff allotment model. The notion of fairness requires the definitions of group, i.e. privileged and unprivileged and output class labels, i.e. favorable and unfavorable. For the Gender attribute, the group comprises Male and Female, and for the Age attribute, we define the group as young i.e with Age 45 and old i.e Age 45. We used the percentage strategy and chose lower 40% as favorable and upper 60% as unfavorable which makes sense i.e. one is in a favorable zone if he/she is paying less than average. Figure 1 showcases the result of priority-based algorithm for protected attribute Age.

5.1 Comparison with the baseline approach

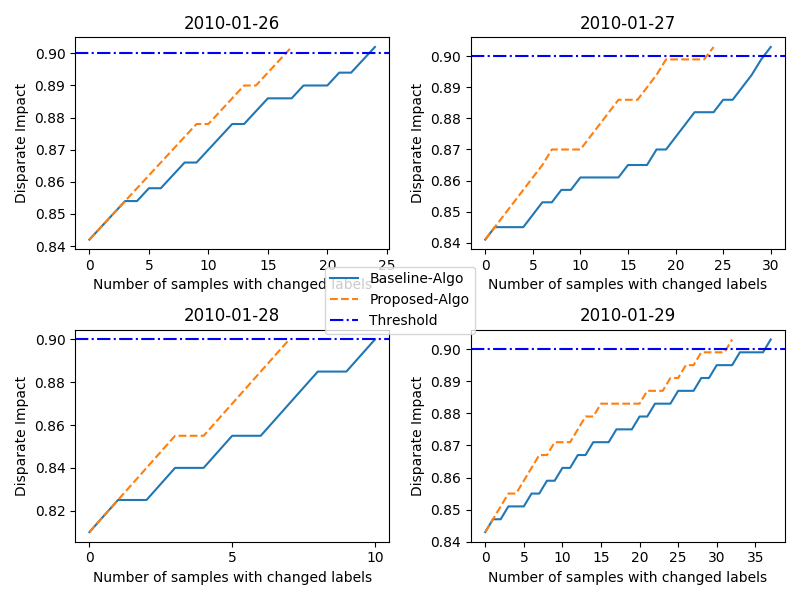

We compared our priority-based debiasing approach with the baseline method in [8682620]. In our case, daily in the post-processing stage, the model assigns tariff to each individual. We start by calculating the initial Disparate Impact which is a measure of group fairness discussed in Section 2.1 and if it comes out to be less than 0.9 we uplift its value towards 0.9, if achievable by debiasing the sample with individual bias. Due to the efficiency in selecting the individual bias point i.e in decreasing order of Unfairness Quotient rather than randomly in [8682620], we found out that our approach takes less time as well as a lesser number of class label changes takes place compared to the existing algorithm. Due to the fewer number of changes in class label, our algorithm kind of reduces the inescapable bias-accuracy trade-off. Figure 2 shows the comparison plot for both the algorithm with the protected attribute as Gender.

5.2 Validation of the proposed algorithm

We extended our analysis to see how our algorithm performs on other publicly available datasets with multi-class numerical labels. For the validation purpose, we run the algorithm on Sentencing dataset [sentencing], which has Gender as a protected attribute. On studying the dataset, we found out the charge imposed on Male was much higher than Female leading to some unfairness towards Male. Using our proposed algorithm, we were able to remove this unfairness by changing 784 class labels as compared to the 1163 class labels in the case of baseline. Also, the time taken by the debiasing process using the priority-based approach was 125s whereas using the baseline randomized approach was 190s on average. Figure 4 showcases this analysis.

6 Conclusion

Algorithmic fairness has become a compulsory step to be taken into consideration when building and deploying machine learning models. Bias-mitigation algorithms that address the critical and important notion of fairness are important. Accuracy and Bias are always in a trade-off. This creates a limit on the number of individual samples to be debiased withholding the accuracy. In this constraint individual debiasing process, past randomization-based debiasing approach fails to give a validity on whether individual samples facing maximum unfairness have been taken into consideration for debiasing or not. In this paper, we have developed a novel priority-based post-processing algorithm that ensures priority-based fairness to the individual facing maximum unfairness. The remediation process work to improve both individual and group fairness metrics. In this shifting paradigms in the model development process, there is limited accessibility for deployers to the internals of trained models. Therefore, post-processing algorithms that treat models as complete black-box are necessary. In comparison to previous work, our algorithm is faster and needs changing labels of a lesser number of samples to achieve fairness. We have validated our method on multiple real-world datasets.