Predicting ground state configuration of energy landscape ensemble using graph neural network

Abstract

Many scientific problems seek to find the ground state in a rugged energy landscape, a task that becomes prohibitively difficult for large systems. Within a particular class of problems, however, the short-range correlations within energy minima might be independent of system size. Can these correlations be inferred from small problems with known ground states to accelerate the search for the ground states of larger problems? Here, we demonstrate the strategy on Ising spin glasses, where the interaction matrices are drawn from protein contact maps. We use graph neural network to learn the mapping from an interaction matrix to a ground state configuration, yielding guesses for the set of most probable configurations. Given these guesses, we show that ground state configurations can be searched much faster than in vanilla simulated annealing. For large problems, a model trained on small matrices predicts a configurations whose energy is much lower than those obtained by simulated annealing, indicating the size generalizability of the strategy.

Finding the ground state configurations of a complex energy landscape is a long standing computational challenge Wales and Bogdan (2006). Short of brute-force enumeration, random search algorithms such as simulated annealing can anneal Markov chains to the global minimum as a simulation temperature approaches zero Kirkpatrick et al. (1983). However, in cases where many interacting degrees of freedom result in a highly rugged energy landscapes, conventional methods suffer from low probability of overcoming energy barriers and the chain may get stuck in local minima Papadimitrou and Steiglitz (1982); Landau and Binder (2014); Wolff (1990).

The classical methods for searching energy landscapes are devised to work for general problems. Yet, many scientific problems often present themselves via an ensemble of energy landscapes with similar underlying patterns, with interactions arising from a single or handful of governing equations. Examples include the energy landscapes of organic molecules built out of chemical building blocks, where potential energies are obtained by solving Schrödinger equation, or the space of protein structures from interactions of individual amino acids. In an ensemble setting, we hypothesize that there exist system-specific sampling rules Wolff (1989); Wang and Swendsen (1990); Houdayer (2001) that make it possible to traverse these particular energy landscapes more efficiently than classical methods. These rules can be learned from examples of energy minima calculated with classical methods for small problems.

Here we demonstrate this approach in the context of a model problem that defines a natural ensemble. We construct Ising spin glasses Mézard et al. (1987); Edwards and Anderson (1975), where the interaction matrix is a structured random matrix, chosen from protein contact maps. Given the large database of natural proteins Berman et al. (2000) and the distinctive contact pattern of a folded protein Nelson et al. (2008), protein contact map data gives an ideal ensemble for testing whether interaction rules encoded in ’s are consistent across varying system sizes.

In recent years, several machine learning techniques have been applied to sample spin configurations of Ising model. The list includes but is not limited to simple regression Liu et al. (2017), restricted Boltzmann machine Huang and Wang (2017), reinforcement learning Bojesen (2018), autoregressive model Wu et al. (2019); McNaughton et al. (2020), and normalizing flow model Hartnett and Mohseni (2020). The goal of these works is to estimate the Boltzmann distribution of a given problem so that the learned model can either completely replace or be used as a proposal distribution for Markov Chain Monte Carlo simulation. However, these schemes do not consider learning with instances of varying sizes.

Instead we recast spin glass energy minimization, a well known NP-hard problem Barahona (1982), as a node classification problem in graph theory, and employ a graph neural network (GNN) Wu et al. (2020) to parametrize the mapping from a to the corresponding ground state configuration. We generate the set of most probable configurations from the GNN model to predict low-lying configurations of an energy landscape. If this configuration set misses a ground state configuration, we show that simulated annealing starting from a configuration in this set can search for the ground state configuration more efficiently. The schematic of this strategy is described in Fig. 1.

We further test the utility of the GNN model by constraining the size of —where size refers to the number of amino acid—in a training set and testing the trained model on larger ’s. As we increase the size limit of training set from 30 to 500, model’s test performances quickly reach the level comparable to those obtained with the size limit of 800. We also show that the model trained on with size less than 800 can predict configurations whose energies are much lower than those found by simulated annealing for with size around 3000.

We begin by constructing an ensemble of Hamiltonians for which the underlying potential energy landscapes have similar patterns. For simplicity, we consider Ising Hamiltonians of the form

| (1) |

where both coupling and field terms depend on an interaction matrix and . The field is chosen to prevent all ground state configurations from collapsing to a trivial ground state of all 1’s. Within this formulation, to obtain an energy landscape ensemble, we need to specify an ensemble of matrices, whose is random yet with distinct shared patterns. Due to binary , this structured randomness of shall be encoded in spin connectivity.

In this work, we obtain this ensemble using protein contact maps to calculate the ensemble. Since proteins are characterized by distinct secondary structures, together with non-local contacts, protein contact maps define a set of structured random connectivity matrices. We downloaded fist subunit of all protein structure files deposited in the Protein Data Bank rcs to ensure ’s do not have a distinct block diagonal structure due to the presence of multiple domains. Hence the connectivity features in our ensemble solely originate from the pattern of intra-domain folding, which are referred to as secondary and tertiary protein structures. We excluded protein with missing spatial information or whose protein chain is shorter than 20 residues or longer than 800 residues. We additionally added two largest subunit structures with chain length of 3661 and 2814 for the size generalizability experiments. From these files, we generated contact maps by setting as 1 if the distance between two corresponding amino acid residues is less than 8Å, and 0 otherwise Monastyrskyy et al. (2014). From this procedure, we obtained 64563 different contact maps, excluding the two large cases, to define our ensemble. We emphasize the resulting spin configurations derived from these energy functions Eq. 1 have no relation to amino sequences; our intent here is not to make predictions about proteins per se, but instead to use the regularity of protein structures to define a natural ensemble.

For all ’s except the two largest, we ran simulated annealing for each starting from 100 random initial configurations, and selected an annealed configuration with the lowest energy as its purported ground state configuration . The annealing schedule was optimized such that simulated annealing always find the ground state configurations on ’s with size smaller than 30, which we identified from brute-force enumeration of all configurations. For the two largest ’s, we decreased a cooling rate and increased an equilibration steps at each temperature to account for an enlarged configurational space and ran 30 randomly initialized simulated annealing. Further simulation details are discussed in the Supplemental Material 111See supplemental material at …. Since finding the global energy minimum of an Ising spin glass in configuration space is NP-hard, we settled for this repeated annealing scheme and assume closely approximates the actual ground state configuration. From all pairs of and , 6400 pairs were randomly selected as validation set, another 6400 pairs as test set, and remaining 51763 pairs as training set. For the first size generalizability experiment, we used the same test set but sub-select from the training set the pairs whose are smaller than certain size cutoffs to make small- training sets. For the second size generalizability experiment, we used the entire training set and test on the two large ’s.

Our prediction task is to learn the mapping from . This can be cast as a node classification problem in graph theory and hence we parametrize the mapping with a graph neural network. Given a graph, the -layer model generates an expressive feature embedding for node by aggregating the features of all -hop neighbor nodes of as shown in Fig. 1, and uses this embedding to classify globally whether each node shall be turned on or off. To allow generalization of the mapping across ’s with different size and structure, we chose a message passing framework Scarselli et al. (2008); Gilmer et al. (2017) with attention mechanism Vaswani et al. (2017); Veličković et al. (2017), instead of Laplacian-based convolution method Defferrard et al. (2016); Kipf and Welling (2016) which requires a constant graph structure.

The inputs to the graph neural network are the adjacency matrix and node features, which are initially a node degree the field strength from Eq. 1. At each layer, the network updates node features by first applying standard nonlinear transformation—expanding feature dimension from 2 to , then calculating attention coefficient to find relative importance of a neighbor node to node , and taking weighted sum of neighbor nodes’ features using these coefficients. To capture more information from neighbors, this process is repeated times with different set of weights and newly computed to produce features for a node. The features of node are then reduced to a probability in the final layer for node classification. The functional forms of the operations are detailed in the Supplemental Material Note (1). Notably, the final model used in this work consists of six layers.

The model’s predicted configuration, , are then obtained by choosing the greater of the two node classification probabilities, . However, this point estimation does not take a full advantage of the learned embedding. This scheme is especially problematic for nodes with probabilities around 0.5 because the non-argmax configurations would have been just as likely. Therefore, we generate a set of top most probable configurations from the configuration probability output of the model, giving a broader coverage of the low-lying region in the energy landscape. To obtain such configurations, we pick top nodes whose are close to 0.5, and order all permuted configurations according to their corresponding sum combination of probabilities.

A GNN model trained on the entire training set correctly predicted for 1700 of 6400 ’s in the held-out test set. To further quantify the model’s performance, we investigate following two metrics. Define accuracy as the ratio between the number of correctly predicted nodes in and total number of nodes and energy difference as the energy gap between a predicted configuration and the true ground state. Fig. 2(a) shows the prediction accuracy decreases, while the energy difference increases with increasing . The average accuracy and across the entire ensemble are and 2.79 respectively, due to the size distribution of skewed towards small ’s (Supplemental Material Fig. S1 Note (1)). Since energy histograms of small ’s obtained via complete configuration enumeration are peaked at positive energy and negative energy configurations occur in far-left tail region (Supplemental Material Fig. S2 Note (1)), predicting configurations with such small energy differences is surprising. We emphasize again that the model does not evaluate the energy function of Eq. 1 to optimize a configuration. This suggests the GNN model has learned a generalizable node feature transformation for this particular class of energy landscape simply by comparing its predicted configurations to known ground state configurations.

| Training set | |||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|

| size cutoff | # ’s | acc. | acc. | acc. | # found | ||||||

| 30 | 560 | 14 | 0.866 | 23.84 | 150 | 0.880 | 17.22 | 2824 | 0.926 | 3.68 | 2988 |

| 40 | 1301 | 65 | 0.901 | 11.05 | 260 | 0.909 | 8.68 | 2756 | 0.923 | 3.64 | 3081 |

| 50 | 1839 | 388 | 0.944 | 5.15 | 1079 | 0.954 | 3.00 | 2383 | 0.932 | 2.68 | 3850 |

| 100 | 7319 | 511 | 0.953 | 4.12 | 1265 | 0.962 | 2.23 | 2272 | 0.932 | 2.74 | 4048 |

| 200 | 24589 | 784 | 0.964 | 3.20 | 1398 | 0.972 | 1.65 | 2222 | 0.942 | 1.85 | 4404 |

| 300 | 36130 | 1442 | 0.972 | 2.79 | 1638 | 0.977 | 1.32 | 1454 | 0.938 | 1.93 | 4534 |

| 400 | 43190 | 1497 | 0.974 | 2.47 | 1687 | 0.980 | 1.18 | 1503 | 0.943 | 1.45 | 4687 |

| 500 | 47631 | 1519 | 0.976 | 2.36 | 1738 | 0.981 | 1.12 | 1463 | 0.947 | 1.28 | 4720 |

| 800 | 51763 | 1700 | 0.978 | 2.31 | 1673 | 0.983 | 1.13 | 1417 | 0.953 | 1.07 | 4790 |

Fig. 2(b) shows the averaged histogram of node classification probability from high accuracy configurations in blue, and that of low accuracy configurations in orange. A striking feature is that most nodes in both cases are predicted with high certainty as evinced by the peaks at both ends. In addition, the histogram of low accuracy configurations shows more nodes in the middle, indicating that the model’s prediction accuracy may be directly related to the node classification probability . We thus set a threshold probability, , to select nodes with low uncertainty where or and calculated an error rate among these nodes as is varied. As shown in the inset of Fig. 2, the number of misclassified nodes among such nodes goes down as we increase the threshold. This result in turn confirms that most misclassifications indeed occur among uncertain nodes in the middle region of the histogram.

Given there are only a handful of uncertain nodes, the set of top most probable configurations can account for most of permutations of their node configurations because the first few nodes to be changed are those with . This enumerated set allows for coverage of configuration space around the model’s initial prediction. We enumerated top 1000 most probable configurations for each in the test set to cover 10 most uncertain nodes since . We then calculated the energy of these configurations and picked the lowest energy configuration as an improved prediction of the model, . From this procedure, we additionally found the ground state configurations in 1673 ’s. This improvement of by configuration enumeration suggests that the uncertain nodes contain frustrated nodes to which the configuration energy is highly sensitive and, thus, that the GNN model has an implicit representation of energy in the node embedding.

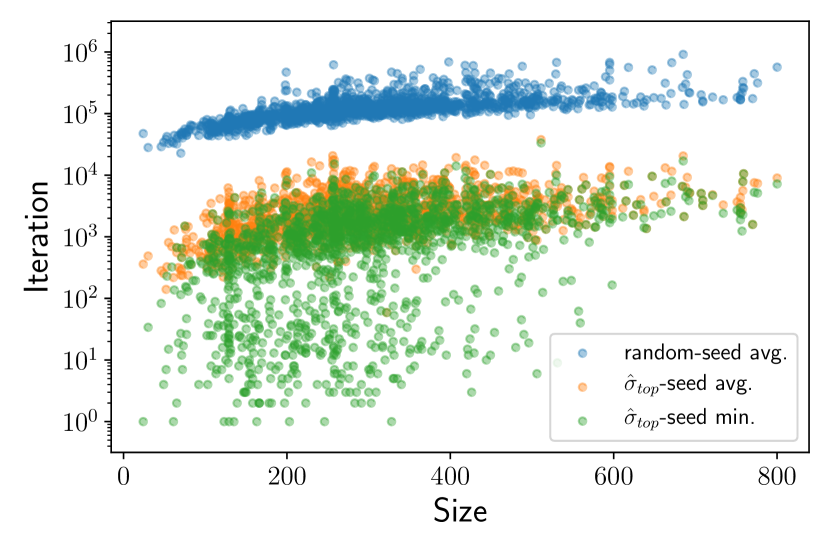

For the half of test set where our model missed the ground state configurations, all predicted configurations still have small energy differences relative to the ground state configurations. We exploited this by running 5 simulated annealings with as a starting configuration for each remaining . Since we are now annealing from a low-lying point in energy landscape, the starting temperature of the annealing should concurrently decreased to prevent the chain from sampling arbitrarily high energy states. We used the temperature value at which the energy trajectory of sampled states drifts up to a bit higher energy at the beginning to allow for initial exploration of energy landscape Note (1). The -seeded simulated annealing found the ground state configuration for additional 1417 ’s with about two orders of magnitude reduction in the averaged number of sampling steps as shown in Fig. 3. In about 20% cases, the minimum number of sampling steps from 5 trials were only few hundreds as only one or two node were misclassified in . In total, we found ground state configurations for 75% of test set ’s. This seeded simulated annealing result shows that the predicted configuration falls in the vicinity of , which is often close enough that simulated annealing can locate . Given the top most probable configurations, we could also run simulated annealing from other configurations or perform parallel tempering Swendsen and Wang (1986); Earl and Deem (2005) with multiple configurations to account for the possibility of falling in a basin that is too far away from the one containing .

To test for size generalizability of GNN model, we first trained models on eight small- training sets with increasing size cutoffs and test them on the existing test set. As shown in Table 1, test set accuracy and energy difference roughly reach those of the original model trained on the entire training set when the size cutoff for is above 300. Note that the number of the match between the annealed configurations, , and decreases as the size constraint increases because most of ’s are already recovered through model predictions. In all cases, the configuration enumeration and seeded simulated annealing improve upon initial model predictions. If , , and are considered together, GNN model provides comparable performance even at the size cutoff of 200. The local interaction pattern of 6-hop neighbor networks in a protein shorter than 100 amino acids should be similar enough to that of much longer chain. It is thus likely that relatively poor performances with training sets with size cutoff less than 100 are simply due to a limited amount of available data.

To test this hypothesis in more practical use case setting, we tested the original model on ’s of size 3661 and 2814. The model predicted with energy -443 and configuration enumeration further improved the energy to -447 for of size 3661, whereas the lowest energy configuration found from 30 randomly initialized simulated annealing runs was -432. On with size 2814, we obtained energy values of -366 for and -370 for whereas randomly initialized simulated annealing only reached down to -362. As in previous analysis, we launched simulated annealing from and obtain annealed configurations with energy -463 and -391 for with size 3661 and 2814, respectively. Fig. 4 highlights the efficiency of the GNN model over randomly initialized simulated annealing.

Our work shows that it is indeed possible to use an ensemble of energy landscapes with known ground state configurations to train a neural network to deduce the ground state configuration of similar energy landscapes. On our model problem, we deterministically found the ground state configurations on 50% of the held out test set ’s and stochastically on additional 25% through a graph neural network, top configuration enumeration, and seeded simulated annealing. Although this number may appear modest, we emphasize that all configurations predicted by the model were extremely low-lying configurations, often in the vicinity of the ground state configurations. Since the loss function does not include other local minima—or, for that matter, the energy function itself, we believe that such an informed prediction is possible only if the learned node feature embedding of GNN correctly captures the local interaction rule encoded in interaction matrices, and hence the topological undulation in configuration space.

In addition, we showcased the practical utility of the GNN model with size generalizability experiments. The GNN model predicted the configurations that could not be reached by naive simulated annealing with random initial guess, and we were able to further improve it by combining the enumeration scheme and a seeded simulated annealing. Therefore, the GNN model presents an appealing method to produce extremely good initial guesses for a class of energy landscape problem where governing physics is local.

In future work, we will apply this framework to a variety of problems where the discovery of global minima would have technological consequences.

Acknowledgements.

We thank Lucy Colwell for suggesting protein contact maps as a model system for ensembles of energy landscapes, and for helpful discussions. We also thank Yohai Bar-Sinai, Carl Goodrich, Mor Nitzan, Mobolaji Williams and Jong Yeon Lee for helpful discussions. This work is supported by the Office of Naval Research through the grant N00014-17-1-3029, as well as the Simons Foundation.References

- Wales and Bogdan (2006) D. Wales and T. Bogdan, J. Phys. Chem. B 110, 20765 (2006).

- Kirkpatrick et al. (1983) S. Kirkpatrick, C. D. Gelatt, and M. P. Vecchi, Science 220, 671 (1983).

- Papadimitrou and Steiglitz (1982) C. H. Papadimitrou and K. Steiglitz, Combinatorial optimization: algorithms and complexity (Prentice-Hall, Englewood Cliffs, NJ, 1982).

- Landau and Binder (2014) D. P. Landau and K. Binder, A guide to Monte Carlo simulations in statistical physics (Cambridge university press, 2014).

- Wolff (1990) U. Wolff, Nucl. Phys. B, Proc. Suppl. 17, 93 (1990).

- Wolff (1989) U. Wolff, Phys. Rev. Lett. 62, 361 (1989).

- Wang and Swendsen (1990) J.-S. Wang and R. H. Swendsen, Physica A 167, 565 (1990).

- Houdayer (2001) J. Houdayer, Eur. Phys. J. B 22, 479 (2001).

- Mézard et al. (1987) M. Mézard, G. Parisi, and M. Virasoro, Spin glass theory and beyond: An Introduction to the Replica Method and Its Applications, Vol. 9 (World Scientific Publishing Company, 1987).

- Edwards and Anderson (1975) S. F. Edwards and P. W. Anderson, J. Phys. F 5, 965 (1975).

- Berman et al. (2000) H. Berman, J. Westbrook, Z. Feng, G. Gilliland, T. Bhat, H. Weissig, I. N. Shindyalov, and P. E. Bourne, Nucleic Acids Res. 28, 235 (2000).

- Nelson et al. (2008) D. L. Nelson, A. L. Lehninger, and M. M. Cox, Lehninger principles of biochemistry (Macmillan, 2008).

- Liu et al. (2017) J. Liu, Y. Qi, Z. Y. Meng, and L. Fu, Phys. Rev. B 95, 041101 (2017).

- Huang and Wang (2017) L. Huang and L. Wang, Phys. Rev. B 95, 035105 (2017).

- Bojesen (2018) T. A. Bojesen, Phys. Rev. E 98, 063303 (2018).

- Wu et al. (2019) D. Wu, L. Wang, and P. Zhang, Phys. Rev. Lett. 122, 080602 (2019).

- McNaughton et al. (2020) B. McNaughton, M. Milošević, A. Perali, and S. Pilati, Phys. Rev. E 101, 053312 (2020).

- Hartnett and Mohseni (2020) G. S. Hartnett and M. Mohseni, arXiv preprint arXiv:2001.00585 (2020).

- Barahona (1982) F. Barahona, J. Phys. A 15, 3241 (1982).

- Wu et al. (2020) Z. Wu, S. Pan, F. Chen, G. Long, C. Zhang, and S. Y. Philip, IEEE Trans. Neural Netw. Learn. Syst. (2020), https://doi.org/10.1109/TNNLS.2020.2978386.

- (21) “RCSB Protein Data Bank,” .

- Monastyrskyy et al. (2014) B. Monastyrskyy, D. d’Andrea, K. Fidelis, A. Tramontano, and A. Kryshtafovych, Proteins 82, 138 (2014).

- Note (1) See supplemental material at …

- Scarselli et al. (2008) F. Scarselli, M. Gori, A. C. Tsoi, M. Hagenbuchner, and G. Monfardini, IEEE Trans. Neural Netw. 20, 61 (2008).

- Gilmer et al. (2017) J. Gilmer, S. S. Schoenholz, P. F. Riley, O. Vinyals, and G. E. Dahl, (2017), arXiv:1704.01212 .

- Vaswani et al. (2017) A. Vaswani, N. Shazeer, N. Parmar, J. Uszkoreit, L. Jones, A. N. Gomez, L. Kaiser, and I. Polosukhin, (2017), arXiv:1706.03762 .

- Veličković et al. (2017) P. Veličković, G. Cucurull, A. Casanova, A. Romero, P. Liò, and Y. Bengio, (2017), arXiv:1710.10903 .

- Defferrard et al. (2016) M. Defferrard, X. Bresson, and P. Vandergheynst, (2016), arXiv:1606.09375 .

- Kipf and Welling (2016) T. N. Kipf and M. Welling, (2016), arXiv:1609.02907 .

- Swendsen and Wang (1986) R. H. Swendsen and J.-S. Wang, Phys. Rev. Lett. 57, 2607 (1986).

- Earl and Deem (2005) D. J. Earl and M. W. Deem, Phys. Chem. Chem. Phys. 7, 3910 (2005).

Supplemental Material for “Predicting ground state configuration of energy landscape ensemble using graph neural network”

I SIMULATION DETAILS

For each sampling step of simulated annealing, we randomly selected a single node from a current node configuration vector and changed the node value to 1 if it is 0 or vice versa to generate a proposal configuration , and accepted this configuration with probability given by the Metropolis criterion

| (S1) |

where is an inverse temperature as we set Boltzmann constant to 1 and is the Ising Hamiltonian from the main text.

I.1 Calibration of annealing schedule

We used -bit Gray code algorithm to enumerate all {0,1}-node configurations and identify ground state configurations for ’s with size ranging up to 30. Using an exponential cooling schedule, , with the initial temperature and exponentially increasing equilibration steps, , with the initial number of steps , 10 randomly initialized simulated annealing runs all reached those ground state configurations for ’s with size smaller than 30. We let the temperature cycle go up to 30. We used this annealing schedule to calculate purported ground state configuration for remaining ’s with size ranging up to 800, repeating the simulation 100 times with different seed configurations for each . Additionally, we ran several simulated annealing runs with slower cooling and longer equilibration on 50 randomly selected ’s with size larger than 500 but these did not improve over the lowest energy annealed configurations found from the original experiments.

I.2 Annealing schedule for large

On the two largest ’s with size 3661 and 2814, all 100 simulations with the aforementioned schedule annealed to different configurations and some had relatively high energies compared to the rest, indicating a poor annealing. We thus modified the cooling schedule to with the same initial temperature yet with going up to 40 and the equilibration schedule to with . Using this schedule, we were able to anneal to low energy configurations in all 30 randomly initialized simulated annealing runs. The energies of initial configurations and annealed configurations are shown as blue and orange dots in Fig. 4 of the main text.

I.3 Annealing schedule for seeded simulated annealing

Since has low energy and is thus likely in the vicinity of , we modified the cooling schedule and equilibration length to focus our search on the configurational space near —i.e., exploit rather than explore. We kept the factor of 0.8 in the exponential cooling but decreased the initial temperature to 0.5, which was high enough that configurations with energy higher than are accepted for all ’s in the test set. For the equilibration, we decreased the initial number of steps to 100 while keeping the exponential form of the length schedule. Although it is certainly possible to optimize the schedule per individual basis and find for more ’s and quicker, we did not pursue this further as it deviates from the scope of this work.

II SIZE DISTRIBUTIONS OF

Fig. S1 shows the size distributions of in the entire ensemble and test set. Note that the ensemble histogram reflects the length distribution of first subunit of all proteins in Protein Data Bank that have more than 20 but fewer than 800 amino acids. The histogram of the test set resembles the full histogram, as we would expect for a random split.

III Energy histograms on small

Fig. S2 show the histogram of energy values of three randomly selected ’s with size 28, calculated using all configurations found via brute-force enumeration with 28-bit Gray code generator. This can be viewed as the density of states for the five instances of spin glass Hamiltonian of Eq. 1 of the main text. Note that negative energy values occur several standard deviations away from the center, which is at a positive energy. We expect this distributional form to hold in bigger ’s, yet with larger spread due to more contacts in . In with size 3661, for example, the distribution seems to have a peak around 4600 and a configuration can have energy value at least as high as 4700 as shown in Fig. 4 of the main text.

IV NETWORK STRUCTURE AND TRAINING SETUP

The network takes two input, an adjacency matrix and node feature matrix, with where is initially 2 with node degree and field strength, , as these two input node features. At each network layer, we first transform number of features into number of features via a weight matrix, . We then calculate an attention coefficient —which represents the importance of node on node —using a weight vector, as following

| (S2) |

where denotes neighbors of node , represents an activation function, and is concatenation operation. We used LeakyReLU activation with negative input slope for . Note that the summation includes the current node to account for the influence of the node itself, relative to neighbors, on updating its features. This technique is referred to as self-attention in the machine learning literature. The updated node feature is then computed by taking a weighted sum of features of neighboring nodes,

| (S3) |

with ELU activation function with default multiplicative factor as .

To make node features more expressive while improving learning stability, we implemented multi-head attention where the feature update of Eq. S3 is repeated for times, each with different W, , and newly computed . The sets of hidden features are concatenated in input and middle layers to generate features,

| (S4) |

and averaged in the final layer to retain the feature dimension which is reduced to 2, representing and ,

| (S5) |

We then apply sigmoid activation to the output matrix to obtain configuration probability matrix, where .

We trained the model to minimize the binary cross-entropy between the configuration probabilities and found via simulated annealing, and optimized model hyperparameters on the 6400 validation set. We used Adam optimizer with an initial learning rate of 0.002 and a batch size of 16. The final model consisted of six graph attention layers—5 hidden layers implementing Eq. S4, each with attention heads and node features, and a final layer implementing Eq. S5.

V EFFECT OF LAYER DEPTH ON TEST SET PERFORMANCE

As shown in the previous section, each graph attention layer aggregates information from 1-hop neighbors. Hence, a depth of model controls the field of view we employ in learning the local interaction rules. Fig. S3 summarizes how a model’s test set performance change as we increase its layer depth. We noticed that going past 6 layers give negligible improvement. From a network analysis perspective, it would be a worthwhile effort to probe whether this saturation of learnability at 6-hop neighborhood hints to a cluster property intrinsic to protein contact maps.

VI Additional results from size generalizability experiment

Table S1 reports detailed breakdowns of the test set accuracy and the energy difference of models trained on small- training sets. over or under refers to a given metric averaged on all test set ’s whose sizes are over or under the size cutoff applied during model training.

| Accuracy | ||||

|---|---|---|---|---|

| size cutoff | under | over | under | over |

| 30 | 0.881199 | 0.871548 | 2.450821 | 21.263792 |

| 40 | 0.927850 | 0.899662 | 1.076943 | 11.178737 |

| 50 | 0.968899 | 0.944050 | 0.228221 | 5.177260 |

| 100 | 0.975691 | 0.950044 | 0.496598 | 4.471919 |

| 200 | 0.973282 | 0.946137 | 0.913523 | 5.005585 |

| 300 | 0.973081 | 0.955576 | 1.192279 | 4.803279 |

| 400 | 0.970069 | 0.955870 | 2.192192 | 8.932947 |

| 500 | 0.970202 | 0.951427 | 2.037306 | 8.844054 |

Fig. S4 shows the two metrics at each size window of test set ’s, with finer size cutoff spacing from size 30 to 50. The degradation of model performance is less severe as a training set size cutoff increases. The model appears to generalize well starting from the size cutoff of 50. Note that there are only 1839 ’s in the training set at this cutoff, which is only about 3% of the whole ensemble.