Predicting dominant hand from spatiotemporal context varying physiological data

Abstract

Health metrics from wrist-worn devices require automatic dominant hand prediction to remain accurate. Such a prediction would improve reliability, enhance the consumer experience, and encourage further development of healthcare applications. This paper evaluates the use of physiological and spatiotemporal context information from a two-hand experiment to predict the wrist placement of a commercial smartwatch. The main contribution is a methodology to obtain an effective model and features from low sample rate physiological sensors and a self-reported context survey. Results show that the dominant hand can be predicted effectively using data from a single subject under real-life conditions.

Index Terms:

Commercial smartwatch, contextual information, dominant hand prediction, heart rate, step-count, wrist-worn device placement.

I Related Work / Literature Review

Smartwatches offer increasingly advanced technology at increasingly affordable prices, and their popular health-tracking applications demand accuracy [1]. Alternatives that overcome wrist placement inaccuracies are required to support well-informed health promotion for smartwatch consumers [2]. Automatic dominant hand prediction would reduce the negative impact of an incorrect setup process or of changes in wrist placement. The spatiotemporal user context and its connection with physiological variations provide a potential solution for dominant hand prediction and accuracy improvements.

Addressing concerns about health impact, three popular commercial wrist-worn devices were recently reviewed in [3]. Results showed accurate heart rate measurements but poor energy expenditure estimations. This is consistent with the results reported six years earlier for previous models [4]. Professionals and users are warned to proceed with caution when using those devices for training or nutritional purposes. Manufacturers are asked to improve their algorithms by addressing potential error sources.

Device placement is one of the factors influencing the accuracy of accelerometer data during human activity recognition [5]. In particular, wrist-worn devices are known to be sensitive to dominant or non-dominant wrist placement, with variations of up to 8.5% [2]. Some manufacturers seem aware of this problem and claim to include measures that counteract its effects, see [6]. However, the corrective measures depend on user input during initial setup or setting adjustments [7]. An automatic dominant hand prediction would improve the user experience and add reliability to the reported health information.

Developing an effective automatic solution for dominant hand detection faces several challenges. Only low sample rate sensor information is available from consumer-grade devices. That rules out elaborate physiological measures such as Heart Rate Variability (HRV), which requires sampling rates of up to 250 Hz [8]. The prediction should also work under real-life unconstrained conditions. However, the dominant hand is only distinguishable in activities such as writing, eating, cooking, dishwashing, or generally using tools that demand strength and precision [9].

Machine learning algorithms are already used for different activity recognition tasks [10, 11]. Merging human context and sensor data from different sources is therefore a strategy with proven potential to deal with the challenges above. A remarkable example effectively estimates stress levels from limited physiological data, using activity information to improve its performance [12].

II Approach / Method

The objective is to use machine learning to predict the dominant hand of a user wearing a commercial smartwatch. A two-hand experiment was proposed to gather physiological data simultaneously from both hands for over 2 weeks. A survey was designed for the user to keep a self-report of contextual information including location, activity, and subjective physiological arousal. The raw data was downloaded using the official export tools from the manufacturer. Algorithms in Python and Matlab were developed to clean and analyze the raw data and to calculate features from it. Finally, feature selection algorithms were used to choose a relevant subset of features, which was then used to train and select an effective classification model.

II-A Two-hand experiment conditions

Smartwatches include increasingly advanced sensors and processing units at increasingly affordable prices. Sensors commonly include GPS, gyroscopes, pedometers, temperature, blood oxygen (SpO2), and advanced heart monitoring. The available data enables gesture recognition, physical activity identification, and estimation of relevant health metrics. These wearable devices are now a popular way to monitor wellness information such as energy expenditure, sleep quality, heart rhythm, and stress levels.

The proposed two-hand experiment takes advantage of those characteristics to develop the dominant hand prediction algorithm. The subject wore a Fitbit Sense device on each wrist, following the recommended tightness and location for all-day wear, one finger width above the wrist bone, see Figure 1. Given the long 2-week time frame, the smartwatches were periodically removed to keep measurement conditions consistent: for recharging the devices, for a daily cleaning procedure, and to prevent contact with water.

After the initial setup, the settings were kept with default values. All the available features were turned on, and the devices had an active Fitbit Premium subscription during the experiment. Each wristband was connected to a different smartphone due to a one-device restriction for the official application. Both device pairs were kept reasonably close to ensure a consistent Bluetooth link during the experiment.

Lastly, it is important to highlight that the subject was instructed to keep routines unchanged, other than wearing the pair of smartwatches and keeping them charged and clean. The data should therefore reflect typical unconstrained real-life conditions for a graduate student without mobility restrictions.

II-B Self-report survey for contextual information

A simple and short survey was designed to easily gather detailed information on the subject’s context. The user was prompted to make only between 1 and 4 selections among different options of interest. Those options included 10 common locations, 16 activities, 5 levels of physiological arousal, and a flag to indicate when the device was removed. The survey was implemented using Google Forms as shown in Figure 2.

The suggested procedure was to fill out the survey before any change of activity or location. A shortcut was added to the home screen of the subject’s main smartphone as a reminder of the self-report task. However, the subject reported serious difficulties keeping up with the self-report during the experiment. It was difficult to establish the routine, and the task was easily forgotten even though the subject expressed a high level of engagement with the experiment. An unreliable internet connection and slow smartphone responses further contributed to the problems.

The survey demanded unrealistic commitment under a highly dynamic real-life routine. So, the subject completed some information using the survey, but also manually added entries to complete the spreadsheet at the end of each day. The manual updates relied on additional sources of information to increase the accuracy of the context labels, including GPS information from Google Timeline records, and heart rate and step count from the Fitbit dataset. During the experiment, 256 context updates were completed using the survey, and 241 additional updates were included manually.

It is worth clarifying that the purpose of the survey is just to facilitate the research process. However, a fully practical implementation would require the extraction of contextual information from the available sources. For instance, location can be automatically extracted from GPS information, and activity can be estimated from the numerous sensors on both smartphone and smartwatch devices.

II-C Data processing

The process starts using the official data export tool from the Fitbit website to download the most detailed raw data available. Files for heart rate, step count, calories, and altitude are stored in separate folders for each hand. The manufacturer encodes the information in multiple JSON-formatted files.

A Python script inspired by [13] was developed to convert the JSON files into a single CSV file for each hand. The CSV files are further processed using Matlab to remove unnecessary information, adjust nonuniform timestamps, and re-sample the data to a consistent 1-second interval. Missing information was filled using linear interpolation for heart rate, and with zeros for the remaining variables. Clean and consistent datasets for each hand are stored as timetables in MAT files.
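The re-sampling and gap-filling step described above can be sketched in a few lines. This is a minimal illustration in Python rather than the Matlab code used in the study; the function name `resample_1s` and the choice to hold the nearest value outside the sampled range are assumptions made for the example.

```python
from datetime import datetime, timedelta


def resample_1s(samples, start, end, fill="interpolate"):
    """Resample (timestamp, value) pairs onto a uniform 1-second grid.

    fill="interpolate": linear interpolation between neighbouring samples
                        (the rule the text describes for heart rate).
    fill="zero":        seconds without a sample get 0 (steps, calories).
    """
    samples = sorted(samples)
    if not samples and fill != "zero":
        raise ValueError("no samples to interpolate")
    grid = []
    n_seconds = int((end - start).total_seconds()) + 1
    j = 0  # index of the first sample at or after the current grid time
    for k in range(n_seconds):
        t = start + timedelta(seconds=k)
        while j < len(samples) and samples[j][0] < t:
            j += 1
        if j < len(samples) and samples[j][0] == t:
            value = samples[j][1]
        elif fill == "zero":
            value = 0
        elif j == 0 or j == len(samples):
            # Assumption: outside the sampled range, hold the nearest value.
            value = samples[0][1] if j == 0 else samples[-1][1]
        else:
            (t0, v0), (t1, v1) = samples[j - 1], samples[j]
            frac = (t - t0).total_seconds() / (t1 - t0).total_seconds()
            value = v0 + frac * (v1 - v0)
        grid.append((t, value))
    return grid
```

For example, two heart rate samples of 60 and 68 bpm taken 4 seconds apart yield the intermediate values 62, 64, and 66, while a step-count series filled with `fill="zero"` keeps its recorded entries and reports 0 everywhere else.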

The self-reported contextual information is processed directly from its spreadsheet using Matlab. A timetable structure is also created, and string data types are converted into categorical ones. One MAT file is saved with the data re-sampled to a 1-second interval, consistent with the physiological data. Another is saved with the data re-sampled to the coarser window interval used for feature calculation. A new categorical time column divides the day into four more meaningful ranges (Noon, Morning, Afternoon, and Evening).

Time synchronization is then performed using the Matlab timetables to merge the physiological and contextual data on the uniform 1-second intervals. The physiological data is further cleaned by eliminating the time frames in which the Fitbit devices were removed. Finally, the corresponding dominant and non-dominant hand labels are assigned to the clean, uniform, and organized datasets, which are then ready for feature extraction.
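A possible way to picture the synchronization step: each 1-second physiological sample inherits the most recent self-report entry, and samples recorded while the device was flagged as removed are discarded. The sketch below is in Python, not the Matlab timetable code used in the study; the tuple layout, the function name `label_seconds`, and the decision to drop samples preceding the first report are assumptions.

```python
from bisect import bisect_right


def label_seconds(physio, context):
    """Attach the most recent context entry to each physiological sample.

    physio:  list of (t, heart_rate) on a uniform grid, t in seconds.
    context: list of (t, activity, removed_flag), one entry per self-report.
    Samples recorded while the device was flagged as removed are dropped,
    as are samples that precede the first context entry (an assumption).
    """
    context = sorted(context)
    times = [c[0] for c in context]
    merged = []
    for t, hr in physio:
        i = bisect_right(times, t) - 1  # last report at or before t
        if i < 0:
            continue
        _, activity, removed = context[i]
        if not removed:
            merged.append((t, hr, activity))
    return merged
```

Holding the last reported context until the next report matches the survey protocol, in which an entry is made before each change of activity or location.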

II-D Feature Extraction

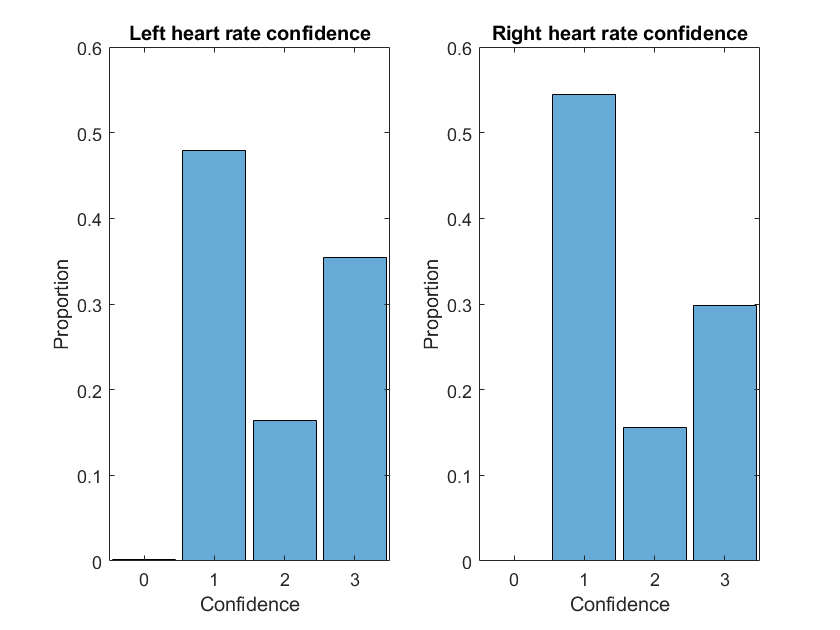

The objective of dominant hand prediction was clearly defined only after the experiment had been designed and its data collected. So, some of the collected data is dropped as irrelevant in the context of this problem, for instance, altitude from the Fitbit data and subjective physiological arousal from the self-report survey. The confidence for each heart rate sample was also omitted given the similar distribution of its values for both dominant and non-dominant hand, see Figure 3.

The features for the remaining data were calculated using window sizes of 1, 5, 10, 20, and 40 minutes. For heart rate, 3 frequency-based and 17 time-based statistical features are calculated. The cumulative sum was the only feature considered for step count and for calories. The 3 categorical features were not further processed and completed a set of 25 features in total.
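As an illustration of the windowed feature calculation, the following Python sketch computes a handful of time-domain heart rate statistics and the cumulative step count over non-overlapping windows of a 1-second series. It covers only a small subset of the 25 features, and the peak definition (a sample strictly greater than both neighbours) is an assumption, since the text does not specify one.

```python
def window_features(hr, steps, window_s=60):
    """Compute per-window features from 1-second heart-rate and step series.

    Returns one dict per complete, non-overlapping window with a few
    time-domain heart-rate statistics and the cumulative step count.
    """
    features = []
    for start in range(0, len(hr) - window_s + 1, window_s):
        h = hr[start:start + window_s]
        # Assumed peak definition: strictly greater than both neighbours.
        peaks = sum(1 for i in range(1, len(h) - 1)
                    if h[i - 1] < h[i] > h[i + 1])
        features.append({
            "hr_mean": sum(h) / len(h),
            "hr_min": min(h),
            "hr_max": max(h),
            "hr_range": max(h) - min(h),
            "hr_peaks": peaks,
            "steps_sum": sum(steps[start:start + window_s]),
        })
    return features
```

Calling the function with `window_s=300` instead of the default 60 corresponds to moving from the 1-minute to the 5-minute window.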

The experiment was extended by two additional weeks to test two different settings available on Fitbit devices: the first with both devices on the default non-dominant hand setting, and the second with each device configured according to the hand it was worn on, dominant or non-dominant. The features are calculated for both conditions and stored in separate files.

II-E Context Information and Feature Selection

The Minimum Redundancy Maximum Relevance (MRMR) algorithm, available in Matlab, is initially used for feature selection. Following a heuristic approach, it provides importance scores for classification tasks with both categorical and continuous features. The results show low relevance for most of the heart rate and categorical features.
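To make the idea behind MRMR concrete, the sketch below ranks discretised features greedily: at each step it picks the feature with the highest mutual information with the class label, minus its mean mutual information with the features already chosen. This is a simplified difference-form variant written in Python for illustration only. It is not the Matlab implementation used in the study, and the function names are hypothetical.

```python
from collections import Counter
from math import log2


def mutual_information(x, y):
    """Mutual information (bits) between two discrete sequences."""
    n = len(x)
    px, py, pxy = Counter(x), Counter(y), Counter(zip(x, y))
    return sum((c / n) * log2(c * n / (px[a] * py[b]))
               for (a, b), c in pxy.items())


def mrmr_rank(features, labels):
    """Greedy MRMR ranking over discretised features.

    features: dict name -> list of discrete values (one per window).
    Each step picks the feature maximising relevance to the labels minus
    its mean mutual information with the features already selected.
    """
    relevance = {f: mutual_information(v, labels) for f, v in features.items()}
    selected, remaining = [], set(features)
    while remaining:
        def score(f):
            if not selected:
                return relevance[f]
            redundancy = sum(mutual_information(features[f], features[s])
                             for s in selected) / len(selected)
            return relevance[f] - redundancy
        # sorted() makes tie-breaking deterministic (alphabetical).
        best = max(sorted(remaining), key=score)
        selected.append(best)
        remaining.remove(best)
    return selected
```

The redundancy term is what keeps two near-identical features from both ranking at the top: an exact copy of an already selected feature is pushed down the list even though its relevance on its own is high.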

For the example shown in Figure 4, the features with the top-five MRMR scores include calories, step count, and the heart rate range, minimum, maximum, and number of peaks. The results vary depending on the window size, but also on the Fitbit setting being used, as the example shows. However, it is important to remark that most categorical features got low or zero scores under almost every tested condition.

Notwithstanding the MRMR scores, categorical features contain valuable contextual information such as activity, location, and time. From the literature, see [9], it is clear that certain activities are strongly connected with dominant hand preference. Therefore, a further feature filter that accounts for context and problem knowledge is proposed.

The context-based feature filter eliminates windows that potentially provide indistinguishable data for dominant hand prediction. That includes windows for activities involving reduced body movement, such as sleeping, movies, or meetings, and windows whose physiological data suggests similar conditions, such as zero step or zero calorie counts. The filter also includes means to keep only windows with specific activities or conditions that facilitate dominant hand prediction.
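The filter rules above can be expressed compactly. The Python sketch below assumes each window is a dictionary with hypothetical keys `activity`, `steps_sum`, and `calories_sum`; the low-movement set contains only the three examples named in the text, not the full list used in the study.

```python
LOW_MOVEMENT = {"sleeping", "movies", "meetings"}  # examples named in the text


def context_filter(windows, keep_activities=None):
    """Drop windows unlikely to separate dominant from non-dominant hand.

    windows: list of dicts with "activity", "steps_sum" and "calories_sum".
    keep_activities: optional set restricting the result to given activities.
    """
    kept = []
    for w in windows:
        if w["activity"] in LOW_MOVEMENT:
            continue  # reduced body movement
        if w["steps_sum"] == 0 or w["calories_sum"] == 0:
            continue  # physiological data suggests similar conditions
        if keep_activities is not None and w["activity"] not in keep_activities:
            continue  # restrict to activities of interest
        kept.append(w)
    return kept
```

Passing `keep_activities={"exercising"}` gives the kind of single-activity subset used for the individual evaluations.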

II-F Classification algorithms

The Matlab Classification Learner toolbox was used to evaluate a broad variety of models. It includes up to 32 different model configurations such as decision trees, discriminant analysis, logistic regression, naive Bayes, support vector machines (SVM), k-nearest neighbor (KNN), kernel approximations, ensemble alternatives, and neural networks. Few of these models support both categorical and continuous features; however, most of the tests included continuous features only. Across all the evaluations, the main configuration was 5-fold cross-validation with 10% of the data held out as the test dataset. The data and code are available at the following link: https://drive.google.com/drive/folders/1kdaSetBQWjWsV2pbKZ-Y41dZirmyWskS?usp=sharing
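The evaluation protocol (a 10% held-out test set, with 5-fold cross-validation on the remainder) amounts to a partition of the window indices. The Python sketch below shows one way to build such a partition; the function name, the random shuffling, and the seed are assumptions, and the toolbox may partition the data differently.

```python
import random


def holdout_and_folds(n_samples, test_fraction=0.10, k=5, seed=0):
    """Split sample indices into a held-out test set and k validation folds."""
    idx = list(range(n_samples))
    random.Random(seed).shuffle(idx)
    n_test = round(n_samples * test_fraction)
    test, train = idx[:n_test], idx[n_test:]
    # Every k-th training index goes to the same fold.
    folds = [train[i::k] for i in range(k)]
    return test, folds
```

Each fold serves once as validation data while the other four are used for training; the test indices are never seen during model selection.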

III Dataset Description

Two sets, each consisting of a commercial smartphone paired with a Fitbit smartwatch, were used for the project. All the information was gathered from a single individual without constraints on daily life behavior. The devices record timestamped physiological data at low sample rates, with the following specifications for each hand:

- Heart Rate (HR) (355000 data points with 7 s average sample time)

- Steps (19000 data points with 2 min average sample time, only 5000 non-zero entries)

- Calories (43000 data points with 1 min average sample time)

- Altitude (140 data points with 4 h average sample time)

The self-report survey records include 497 data points for:

- Wearing/Removing smartwatch flags

- Location (10 common points of interest)

- Activity (16 common options)

- Subjective physiological arousal (5 levels)

IV Analysis/Results/Evaluations

The baseline evaluation considered the default non-dominant Fitbit setting for both hands, a window size of 1 min, the 4 top-scoring features out of 26 according to MRMR, and all the recorded data without the context-based feature filter. Of the 32 classification models, neural networks, SVM, and naive Bayes required the longest training times, exceeding 500 s. The top-5 models by validation accuracy are decision trees and their ensemble versions, reaching up to 87.8% accuracy.

The results suggest that some feature is providing biased information. Given the unconstrained real-life context, the dominant hand is not expected to be easily identified under most activities. In the MRMR scores, the calories count has a value around 100 times higher than the next feature in the list, the heart rate peak count. A test omitting the calories count for the highest ranked classifier confirms the impact of this feature on model performance: the best accuracy drops to 50.4%. The following evaluations omit the calories feature and change other conditions to reveal a more realistic result.

Another study evaluates the value of using the proposed context-based filter to clean the dataset. It uses only data from activities with the potential to identify the dominant hand, and with non-zero step counts. The results show consistent accuracy between validation and test data for the Coarse Decision Tree and the Ensemble Boosted Decision Tree, reaching up to 59.5%. The improvement in classification performance motivated additional individual evaluations for different relevant activities.

The individual evaluations start with the exercising activity. MRMR feature selection is used, and the 3 top-scoring features out of 21 are chosen. The top features usually include heart rate peak count, step-count, and a statistical heart rate measure such as range. Under these conditions, a quadratic SVM achieves a consistent accuracy of around 70% for both validation (see Figure 5) and test data. For other individual activities, the best performing models obtain lower accuracy: 58.6% for doing chores and under 50% for walking.

The remaining evaluations are performed as modifications of the best performing conditions: using the context-based filter to work only with the exercising activity, excluding zero step-counts, ignoring the calories count, and selecting top-scoring features with MRMR. To evaluate the effect of a wider window, the window size was changed from 1 min to 5 min. As a result, MRMR selection showed a dominant score for the heart rate peak count. Training models with just two features resulted in accuracy values near 80% for the quadratic SVM, but scatter plots show an almost exclusive contribution from the peaks feature, see Figure 6.

V Discussion / Limitations

From the results it is clear that a dominant hand prediction can be obtained with up to 70% accuracy. It is critical to carefully select the available physiological and contextual information. In particular, the best results were obtained when the individual was exercising. The MRMR algorithm identifies 3 relevant features: step-count, heart rate peak count, and heart rate range.

It is important to highlight that data from only one person was used throughout the study. The data was collected with no activity restrictions, and detailed information for the activities most relevant to dominant-hand prediction was strongly limited. The labels for the evaluated activities were manually recorded and not detailed. The physiological information has low sample rates and is limited to manufacturer logs.

VI Conclusion & Future Work

The impact and importance of contextual information is evidenced through the different analyses. Dominant hand detection seems viable using limited commercial smartwatch data, but it is constrained by the need for reliable activity detection. There is a long list of further tests and aspects to consider, including:

- Further model selection, speed concerns

- Improved data processing and feature calculation

- Sensor fusion Smartphone Step-count

- Significant activity selection

- Automatic activity recognition

- Record and usage of accelerometer data

- Experiments collecting data for more persons

- Additional impact and mitigation of inaccuracies when dominant hand is detected

References

- [1] B. Reeder and A. David, “Health at hand: A systematic review of smart watch uses for health and wellness,” Journal of Biomedical Informatics, vol. 63, DOI 10.1016/j.jbi.2016.09.001, pp. 269–276, 10 2016. [Online]. Available: https://linkinghub.elsevier.com/retrieve/pii/S1532046416301137

- [2] D. S. Buchan, L. M. Boddy, and G. McLellan, “Comparison of free-living and laboratory activity outcomes from actigraph accelerometers worn on the dominant and non-dominant wrists,” Measurement in Physical Education and Exercise Science, vol. 24, DOI 10.1080/1091367X.2020.1801441, pp. 247–257, 10 2020. [Online]. Available: https://www.tandfonline.com/doi/full/10.1080/1091367X.2020.1801441

- [3] G. Hajj-Boutros, M.-A. Landry-Duval, A. S. Comtois, G. Gouspillou, and A. D. Karelis, “Wrist-worn devices for the measurement of heart rate and energy expenditure: A validation study for the apple watch 6, polar vantage v and fitbit sense,” European Journal of Sport Science, DOI 10.1080/17461391.2021.2023656, pp. 1–13, 1 2022. [Online]. Available: https://www.tandfonline.com/doi/full/10.1080/17461391.2021.2023656

- [4] M. P. Wallen, S. R. Gomersall, S. E. Keating, U. Wisløff, and J. S. Coombes, “Accuracy of heart rate watches: Implications for weight management,” PLOS ONE, vol. 11, DOI 10.1371/journal.pone.0154420, p. e0154420, 5 2016. [Online]. Available: https://dx.plos.org/10.1371/journal.pone.0154420

- [5] A. Davoudi, M. T. Mardini, D. Nelson, F. Albinali, S. Ranka, P. Rashidi, and T. M. Manini, “The effect of sensor placement and number on physical activity recognition and energy expenditure estimation in older adults: Validation study,” JMIR mHealth and uHealth, vol. 9, DOI 10.2196/23681, p. e23681, 5 2021. [Online]. Available: https://mhealth.jmir.org/2021/5/e23681

- [6] Fitbit, “Does the wrist i wear my device on affect accuracy?” 2022. [Online]. Available: https://help.fitbit.com/articles/en_US/Help_article/1136.htm

- [7] Fitbit, “Wear sense - handedness (fitbit sense user manual v1.13),” 2022. [Online]. Available: https://help.fitbit.com/manuals/sense/Content/manuals/html/Wear%20Device.htm

- [8] O. Kwon, J. Jeong, H. B. Kim, I. H. Kwon, S. Y. Park, J. E. Kim, and Y. Choi, “Electrocardiogram sampling frequency range acceptable for heart rate variability analysis,” Healthc Inform Res, vol. 24, DOI 10.4258/hir.2018.24.3.198, no. 3, pp. 198–206, 2018. [Online]. Available: http://e-hir.org/journal/view.php?number=936

- [9] M. E. Nicholls, N. A. Thomas, T. Loetscher, and G. M. Grimshaw, “The flinders handedness survey (flanders): A brief measure of skilled hand preference,” Cortex, vol. 49, DOI 10.1016/j.cortex.2013.02.002, no. 10, pp. 2914–2926, 2013. [Online]. Available: https://www.sciencedirect.com/science/article/pii/S0010945213000385

- [10] G. M. Weiss, J. L. Timko, C. M. Gallagher, K. Yoneda, and A. J. Schreiber, “Smartwatch-based activity recognition: A machine learning approach,” DOI 10.1109/BHI.2016.7455925, pp. 426–429. IEEE, 2 2016. [Online]. Available: http://ieeexplore.ieee.org/document/7455925/

- [11] Y. Vaizman, K. Ellis, and G. Lanckriet, “Recognizing detailed human context in the wild from smartphones and smartwatches,” IEEE Pervasive Computing, vol. 16, DOI 10.1109/MPRV.2017.3971131, pp. 62–74, 10 2017. [Online]. Available: http://ieeexplore.ieee.org/document/8090454/

- [12] Y. S. Can, N. Chalabianloo, D. Ekiz, J. Fernandez-Alvarez, C. Repetto, G. Riva, H. Iles-Smith, and C. Ersoy, “Real-life stress level monitoring using smart bands in the light of contextual information,” IEEE Sensors Journal, vol. 20, DOI 10.1109/JSEN.2020.2984644, pp. 8721–8730, 8 2020. [Online]. Available: https://ieeexplore.ieee.org/document/9051655/

- [13] C. Ottesen, “Tutorial: Making sense of fitbits’s json export,” Feb. 2019. [Online]. Available: https://dataespresso.com/en/2019/02/07/fitbit-json-to-csv/