Beersheba, Israel

PolyDNN

Polynomial Representation of NN for Communication-less SMPC Inference

Abstract

The structure and weights of Deep Neural Networks (DNN) typically encode and contain very valuable information about the dataset that was used to train the network. One way to protect this information when DNN is published is to perform an interference of the network using secure multi-party computations (MPC). In this paper, we suggest a translation of deep neural networks to polynomials, which are easier to calculate efficiently with MPC techniques. We show a way to translate complete networks into a single polynomial and how to calculate the polynomial with an efficient and information-secure MPC algorithm. The calculation is done without intermediate communication between the participating parties, which is beneficial in several cases, as explained in the paper.

1 Introduction

Deep Neural Networks (DNN) are the state-of-the-art form of Machine Learning techniques these days. They are used for speech recognition, image recognition, computer vision, natural language processing, machine translation, and many other tasks. Similar to other Machine Learning (ML) methods, DNN is based on finding patterns in the data and, as such, the method embeds information about the data into a concise and generalized model. Subsequently, the sharing of the DNN model also reveals private and valuable information about the data.

In this paper, we consider a situation where the data owner collects the data, trains the model, and shares the model to be used by clients. The model is very valuable for the data owner, as the training process is resource-intensive and frequently performed over private and valuable data. Therefore, the goal of the data owner is to retain control of the model as much as possible after it was shared. The data owner will likely be willing to delegate the query service to (Machine Learning Data model Store MLDSore [7]) clouds, in a way that the cloud providers do for computing platforms. In such a scenario, the cloud providers should not be able to simply copy the model and reuse it. In addition, the data owner should have the ability to limit the number of queries executed on the model, such that a single, or a small team of colluding cloud providers (or servers) cannot execute an unlimited number of queries on the model. In fact, we show that a (D)NN can be represented by a (nested) polynomial, therefore enough queries (points on the polynomial) can reveal the neural network, and the ownership of the information (succinct model) is at risk. In practice, as data is constantly updated new data emerges and old data becomes obsolete, thus, frequent updates of the neural network are frequent enough to define a new polynomial for which the past queries are not relevant.

In our previous work [6] we have shown an algorithm to distributively share DNN-based models while retaining control and ownership over the shared model. The activation functions of neural network units were approximated with polynomials and we have shown an efficient, additive secret sharing based, MPC protocol for information-secure calculations of polynomials.

In this paper, we first suggest approximating a trained neural network with a single (possibly nested) polynomial. We present a nested polynomial approach to speed up the calculation of the polynomial on a single node. The essence of the idea is to nest the polynomial approximation of each layer within the approximation of the next layer, such that a single polynomial (or arithmetic circuit) will approximate not only a single network unit, but a few layers or even the entire network. We discuss an efficient, (perfect information theoretically secure) secret-sharing MPC calculation of the polynomial calculation of DNN. Lastly, we compare the MPC calculation of the neural network itself with a calculation of polynomial representation.

Our main contribution in this research is an optimization of (communication-less) MPC calculations of a shared DNN by approximating neighboring layers by a single polynomial, and in some cases, the entire network. An additional contribution is a nesting of a multi-layer polynomial to reduce the redundant calculations of the intermediate layers.

The rest of the paper is structured as follows; Previous relevant research is covered in Section 2. Section 3 discusses the most common activation functions and how they are approximated with polynomials. Section 4 discusses a way to approximate DNN with a single polynomial on a single computing node. The premise of the section is to establish a basis for a secure, communication-less multi-party computation, which is presented in Section 5. Section 6 summarizes the techniques for blindly computing a polynomial (some of its coefficients being secret shares of zero) to obtain blind execution of DNN. Section 7 describes the way our polynomial neural network representation facilitate an efficient execution of the inference by an untrusted third party, without revealing the (machine learning big) data the queries, and the results. Empirical experiments are described in Section 8 and, lastly, the paper is concluded in Section 9.

2 Previous Work

The main issue in calculating the neural network activation with secure multi-party computations algorithms is the translation of activation functions from floating-point arithmetic to fixed-point. The regular activation functions of neural network units use floating-point arithmetic for the accuracy of the calculations, as the penalty of such calculations on modern CPUs is small. However, distributed MPC protocols are built for fixed-point arithmetic, and many times even for limited range values.

Approximation of neural network units’ activation function with fixed-point arithmetic was considered before in [9, 10], where polynomial functions were suggested for approximation. In both works, the network was not distributed but rather was translated into fixed-point calculations to run over encrypted data. However, the methods of approximation are similar to the MPC case.

CryptoDL [10] showed an implementation of Convolutional Neural Networks (CNN) over encrypted data using homomorphic encryption (HE). As fully homomorphic encryption is limited to addition and multiplication operations, the paper has shown approximation of CNN activation functions by low-degree polynomials due to the high-performance overhead of higher degree polynomials.

The calculation of neural networks with secure multi-party computations was considered in [12]. Sigmoid and softmax activation functions were replaced with functions that can be calculated with MPC. Their experiments showed that the polynomial approximation of the sigmoid function requires at least a 10-degree polynomial, which causes a considerable performance slow-down with garbled circuit protocol. Thus, they propose to replace sigmoid and softmax activation functions with a combination of ReLu activation functions, multiplication, and addition. Our experiments show that the accuracy of polynomial function approximation increases with the degree, , of the polynomial and flattens at about As a side-note, a polynomial degree is a less important issue in our computation scheme for reasons explained later in the paper. Their work had a limitation for two participating parties and the algorithm was shown to be limiting in terms of performance and the practical size of the network.

CrypTFlow [11] is a system that converts TensorFlow (TF) code automatically into secure multi-party computation protocol. The system has three parts: a compiler, from TF code into two and three-party secure computations, an optimized three-party computation protocol for secure interference, and a hardware-based solution for computation integrity. The most salient characteristic of CrypTFlow is the ability to automatically translate the code into MPC protocol, where the specific protocol can be easily changed and added. The optimized three-party computational protocol is specifically targeted for NN computation and speeds up the computation. This approach is similar to the holistic approach of [1].

SecureNN [15] proposed arguably the first practical three-party secure computations, both for training and for activation of DNN and CNN. The impressive performance improvement over then, state-of-the-art, results is achieved by replacing garbled circuits and oblivious transfer protocols with secret sharing protocols. The replacement also allowed information security instead of computational security. The paper provides a hierarchy of protocols allowing calculation of activation functions of neural networks. The downside is that the protocols are specialized for three-party computations and their adaptation for more computational parties is not trivial, unlike our scheme that supports any number of participating servers. Also, despite being efficient, the paper claimed more than a four times speedup over SecureML’s [12] work, the protocols require ten communication rounds for ReLu calculation of a single unit not counting share distribution rounds.

A different approach at speeding up performance was made by [5], which concentrated on two-party protocols. The work showed a mixed protocol framework based on Arithmetic sharing, Boolean sharing, and Yao’s garbled circuit (ABY). Each protocol was used for its specific ability, and the protocols are mixed to provide a complete framework for neural networks activation functions.

MPC computations of DNN were considered in additional works, for example, in [13, 1], which have a lesser connection to our contribution.

We begin by showing how DNN can be approximated with polynomial functions representing a single, or multiple layers. As an approximation of multiple layers increases the degree of the polynomial function, we show possible techniques to use (intermediate) communication to keep the degree low in Section 4.

3 Neural Network as Polynomial Functions in a Single Node Case

We show how to approximate functions that are a typical part of DNNs, by polynomials.

We focus on the most commonly used functions in neural networks.

Weighted Sum of the Unit Input. Figure 1 depicts a schema of a single neuron.

Given neuron inputs , the weighted sum is a multiplication of inputs with the corresponding weights where is a bias of the neuron.

The sum is very easily approximated with a polynomial, as it is a vector multiplication of weight with the -dimensional input or in other words, a polynomial of degree 1.

Common Activation Functions. Most of the research approximating DNN activation functions focused on these few common functions:

-

•

ReLu ,

-

•

Leaky ReLu (similar to ReLu but if )

-

•

Sigmoid

-

•

TANh

-

•

SoftMax (used for multi-class prediction )

All those functions can be approximated with a polynomial using various different methods, for example [16, 10, 12, 1, 13]. Our optimization method is agnostic to a specific approximation method.

Another point to consider is that opposite to most of the research approach in [10], which minimized the degree of the approximating polynomial,

our communication-less approach allows us to use a higher degree polynomials.

In our previous research [6], that considered a single polynomial for a single neuron unit, rather than a single (nested) polynomial for many or even all units as we do here, we have shown that 30-degree Chebyshev polynomials achieve good results. Increasing the polynomial degree higher results in diminishing returns.

Convolution Layer.

The convolution layer is used in Convolutional Neural Networks (CNN), mainly for image recognition and classification. Usually, this layer performs dot product of a (commonly) square of data points (pixels). The idea is to calculate the local features. The layer performs multiplication and addition, which are directly translated into a polynomial.

Max and Mean Pooling. Max and Mean pooling compute the corresponding functions of a set of units. Those functions are frequently used in CNN following the convolution layers. Previous works [16] suggested replacing max-pooling with a scaled mean-pooling, which is trivially represented by a polynomial. However, this requires the replacement to be done during the training stage.

For networks that did not replace max pooling with mean and as an alternative, max function can be approximated by:

| (1) |

When the approximation is reduced to a scaled mean pooling function, i.e., without division by the number of elements.

A simple and practical variation of the equation 1 is:

| (2) |

Notice that the function provides an approximation near any values of and , which is an advantage over Taylor or Chebyshev approximations, that are developed according to a specific point. Despite its simplicity, equation 2 provides a relatively good approximation, see Figure 2.

Notice that using a two-variable function for the max pooling layer of inputs requires chaining of the max functions:

Alternatively, the optimization sequence is interrupted at the max-pooling layer, which will require an MPC protocol for the max function calculation, for example [15].

Long Short-Term Memory (LSTM). LSTM is a subset of Recurrent Neural Network (RNN) architecture, whose goal is to learn sequences of data. LSTM networks are used for speech recognition, video processing, time sequences, etc.

There are many different variations of LSTM units with a usual structure, including several gates or functions, which enable the unit to remember values over several cell activations. A common activation function of LSTM units is the logistic sigmoid function, which we already considered in Section 3.

4 Multiple Layers Approximation

We have discussed the approximation of DNN functions by polynomials. The approximation exists for all the common functions. This makes it possible to combine multiple layers into a single polynomial function according to the connectivity of the layers.

One example of a network that can be approximated by a single polynomial function is auto-decoder, see Figure 3 for a simple example, where hidden layers are dense layers with (commonly) ReLu or sigmoid activation.

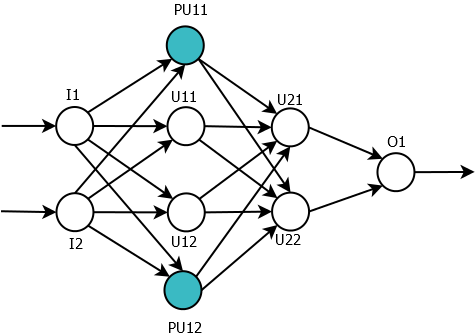

The idea is to create a polynomial for the “flow” of the data in the network instead of approximating every single neural unit with a polynomial. As an example, consider the network in Figure 4.

The network consists of an input layer () on the left, two dense hidden layers ( and ), and one output layer , which is implemented by the softmax function. The units are marked as where is the hidden layer number and is the number of the unit in the layer. We assume that the activation functions of the hidden layers are ReLu (or any other function that can be approximated by a polynomial function).

Consider a unit . It calculates the function which is approximated by the polynomial. Assume that ReLu activation functions are approximated using a polynomial of -degree.

| (3) |

Unit receives and as inputs and calculates the “nested” polynomial function:

| (4) |

In general, assuming dense layers, the nested polynomials are defined as:

| (5) |

In this simple case, the result of networks evaluation can be calculated by evaluating two polynomials of -degree: and and calculating the output layer function of their output. Overall, by approximating softmax by we get the following polynomial for the entire network:

|

(6) |

Notice that and were calculated twice as they are used as inputs for both and units.

Non-dense layers are approximated in a similar way but with only the corresponding inputs from the previous layer. An example of such architectures is CNN, commonly used for image recognition. CNN layers have a topographic structure, where neurons are associated with a fixed two-dimensional position that corresponds to a location in the input image. Thus, each neuron in the convolutional layer is connected (receives its inputs) from a subset of neurons from the previous layer, that belong to the corresponding rectangular patch.

For the polynomial approximation of such networks, the polynomial approximating the unit depends only on the relevant units from the previous layer. In case when the interconnections of the networks, part of the architecture, are part of a secret as well, then a technique from Section 4.2 can be used. In other words, the network is considered to be dense, but the weights of the “pseudo”-connections are set to zero. Thus, achieving the same effect as not connecting the units at all.

4.1 Nesting to Decrease Polynomial Degree

Neural units’ calculation is the most common operation in the DNN feed-forward operation. The approximation of the operation with polynomial significantly increases the complexity of the activation function. For instance, ReLu function is in its essence a simple if condition, and is approximated with a degree polynomial.

The impact is less severe than expected, as the approximation is applied to the networks after their training phase, which is extremely computationally intensive. However, it is still significant if many inference calculations are performed. In a multi-layer polynomial approximation, the degree of polynomials increases leading to an increase of performance overhead as well.

To limit polynomial degree at layer , the polynomials from layer are not explicitly expanded into a single function (see Equation 5), but rather kept as a sum of lower-degree polynomials. In this way, each polynomial is calculated only once in the process of the calculation of each multi-layer polynomial. This will limit the degree of the polynomial and eliminate redundant calculations.

4.2 Hiding Network Architecture

In case the network architecture in itself is valuable, it might be desired to conceal the correct architecture from cloud providers. The naïve multi-layer polynomial approximation hides the network architecture somewhat, even though, the degree of the polynomial might be a telling factor.

A way to conceal the exact network architecture is to add “pseudo”-nodes to the network. Those nodes will not contribute to the network inference but will add noise to the network architecture. Figure 5 shows an example of a simple network from Fiture 4 with two added pseudo-units: and . To nullify the influence of the pseudo-units on the network inference the results of those units activation have to be canceled. A better way is to nullify an output edge weights, rather than input connection. This way, we ensure that memory-enabled units or custom-activation units will not contribute. In the example, the edges: , , and will be zeroed.

The location of the units is randomized and the number of the units is depended on the need to hide the original network architecture.

5 Communication-less MPC for Polynomial Calculations

The goal of MPC calculations in the considered setup is to protect the published model from exposure to participating cloud providers. The model is trained by the data provider and has two components: architecture, which includes the layout, type, and interconnection of the neural units, as well as the weights of the input, which were refined during the training of the network, i.e. back-propagation phase.

Our goal is to protect the weights that were obtained by a costly process of training. While the architecture also might hold ingenious insights, it is considered less of a secret and may be exposed to the cloud providers. In Section 4.2 we also discuss ways to hide the network architecture.

Any MPC protocol can be used, preferably if it answers the following requirements.

-

•

The protocol calculates polynomials over participating parties. Our goal is to spread the calculation over many servers/cloud providers to minimize the risk of adversaries’ collaboration. Therefore, the protocol should preferably support parties.

-

•

Perfect Information theoretically secure protocol.

-

•

Efficient calculation of the polynomial in terms of communication rounds to enable usage of high-degree polynomials.

A number of MPC protocols answer those requirements [2, 4].

These MPC protocols based on Shamir secret sharing [14] can cope with a minority of semi-honest parties and even with a third of the malicious parties.

BGW protocol [2] provides a perfect security and [4] provides statistical security with any desirable certainty.

In our case, the input is not a multi-variable that is secret-shared, but rather the weights and coefficients of the network are the secrets.

Clear-text Inputs. In a simpler scenario, the input is revealed to all participating parties. In this case, the secrets are the weights of the trained network. The input values are then can be considered as numerical constants for the MPC calculation and thus, communication rounds can be eliminated completely, see BGW [2] algorithm where additive “gates” are calculated locally without any communication.

Given a secret-share of coefficient :

The polynomial can be calculated as where and use the corresponding secret share.

Secret-Shared Inputs. In the second scenario, the input values are protected as well, and thus, they are distributed by the secret share. As the input values are raised to polynomial degree , the secret share is done on the set of values: Multiplication of secret shares requires communication rounds in a general case, still when secret sharing every element of it is possible to eliminate the communications all-together using [3].

Notice that nested polynomials, Section 4.1, cannot be used in this case, as the polynomial terms have to be regrouped for nesting, and secret-sharing of inputs prevents that.

6 Distributed Communication-less Secure Interference for Unknown DNN

The last two sections, Section 4 and Section 5, provide all the required building blocks for communication-less MPC for common DNNs. In Section 4 we showed how a given, pre-trained network can be approximated with a single polynomial, in most common cases. As a side-note, as the neural network activation functions are not limited to a specific set, there might be networks that cannot be approximated. However, the majority of networks use a rather small set of functions and architectures.

Once the network is presented by a single polynomial, Section 5 shows that it can be calculated without a single communication round (apart from the input distribution and output gathering) when the inputs are revealed, or with half the communication rounds when the inputs are secret.

Taken together, those two results enable a somewhat surprising outcome: the data owner can train DNN models, pre-process, and share them with multiple cloud providers. The providers then can collaboratively calculate interference of the network on common or secret-shared inputs without ever communicating with each other. Thus, reducing the attack surface even further even for multi-layer networks.

7 Fully Homomorphic Encryption Alternative

The reduction of Neural Networks to nested polynomials, facilitate inference over encrypted polynomial coefficients and encrypted inputs using computational secure (unlike the perfect information theoretic secure of the other scheme proposed here) Fully Homomorphic Encryption (FHE) [8]. The nested polynomial that represents fully connected layers can still be calculated in polynomial time (the total number of connections is quadratic in the number of every two layers of neurons) so that some of the encrypted coefficients (or edge weights) can be an encrypted zero which in fact yields an (unrevealed) subset of the Neural Network.

Delegation of machine learning to a third party (e.g., cloud provider) without revealing anything about the (big) data (collected), the inputs/queries, and the outputs is an important goal we address here, by using FHE and the nested polynomial. In certain cases, for example when the neuron computes the max function, the nested polynomial can integrate actual FHE computation of the max over the inputs arriving from the previous layer, rather than a polynomial over these inputs. A neuron is computed as polynomial over input polynomials (values), and two (or more) results can be computed for each neuron: one a polynomial over the inputs to the neuron and one an FHE max value over the input. Then use an encrypted bit(s) to blindly choose among the results, i.e. between polynomial or “direct” FHE calculation of the neuron activation function.

In the rest of the paper, we will show experiments performed to validate the results.

8 Experiments

All tests were performed on the Fashion database of MNIST, which contains a training set of 60,000 and a testing set of 10,000 28x28 images of 10 fashion categories. The task is a multi-class classification of a given image.

To solve the problem we have used a non-optimized neural network with two dense hidden layers: one of 300 units and the second one with 100 units. The output layer is a softmax layer with ten units and batch normalization layers before each activation layer.

The performed experiments were done on a pre-trained model. The model was loaded and translated into polynomial as described above automatically. This enables us to perform translation for any pre-trained network, similarly in spirit to [11]. Both the original model and polynomial representation were executed on the same inputs. The outputs are compared for different classification (divided by a total number of test inputs).

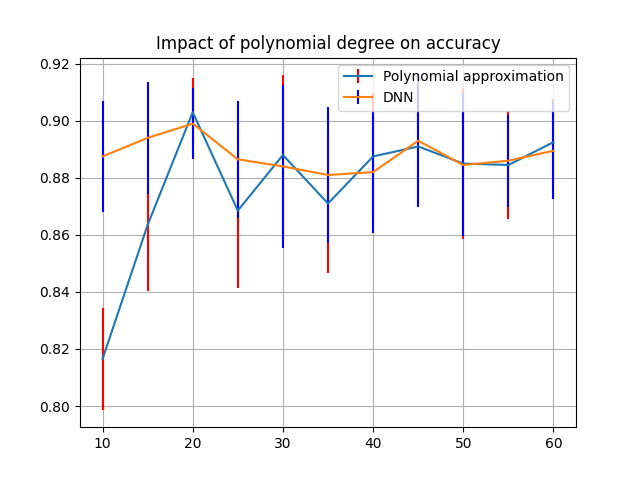

Figure 7 shows the difference in accuracy of the network with different degrees.As can be seen, the accuracy improves with the degree of the polynomial approximation, however the improvement flattens at around .

The computation costs are increasing linearly with the polynomial degree (data not shown), where the original ReLu is similar to degree polynomial. Thus, it makes sense to choose the lowest degree that still provides consistent and accurate results.

9 Conclusions

DNN networks are quickly becoming one of the most common computing workloads. Therefore, any optimization of their execution is important and could potentially save numerous CPU and network resources. In this paper, we have presented a way to reduce and ultimately eliminate the number of communication rounds in the secure multi-party computation of DNN models. This setup of the computations is important for the secure sharing of DNN-based models. We think that this optimization method can enable more efficient DNN calculations and further progress in the process of privacy-preserving data sharing. In particular, extra security and privacy factor is established, when no communication among the MPC participants is required, namely a participant cannot easily collude with other participants, as their identities are revealed to it.

The above optimization of DNN evaluation targets the inference phase, which is done after the DNN-based model is shared and distributed across cloud providers. The network is not trained anymore, but only queried by the clients. At this phase, the performance issues do not impact the data owners, which could be resource-limited end-devices, but rather are relevant for the cloud providers that have as much larger resources.

DNNCoin (Deep Neural Network Coin), or SMCoin (Similitude Model Coin) can be structured in a distributed fashion similar Cryptocoins, where secret shares of the DNN coefficients are distributed among servers, possibly with a common Merkle tree root residing on Blockchain and proof for the share belonging to the Merkle tree. Such that a request for inference using a particular DNNCoin/SMCoin is accompanied with (cryptocurrency) payment to the owner of the Big Data used to create the DNNCoin/SMCoin, while limitig the number of queries to avoid revealing the (possibly, polynomial representing) DNNCoin/SMCoin.

References

- [1] N. Agrawal, A. S. Shamsabadi, M. J. Kusner, and A. Gascón. Quotient: Two-party secure neural network training and prediction. In CCS ’19, 2019.

- [2] M. Ben-Or, S. Goldwasser, and A. Wigderson. Completeness theorems for non-cryptographic fault-tolerant distributed computation. In STOC ’88, 1988.

- [3] D. Berend, D. Bitan, and S. Dolev. Polynomials whose secret shares multiplication preserves degree for 2-cnf circuits over a dynamic set of secrets. IACR Cryptol. ePrint Arch., 2019:1192, 2019.

- [4] D. Chaum, C. Crépeau, and I. Damgård. Multiparty unconditionally secure protocols. In STOC ’88, 1988.

- [5] D. Demmler, T. Schneider, and M. Zohner. Aby - a framework for efficient mixed-protocol secure two-party computation. In NDSS, 2015.

- [6] P. Derbeko, S. Dolev, and E. Gudes. Deep neural networks as similitude models for sharing big data. In 2019 IEEE International Conference on Big Data (Big Data), Los Angeles, CA, USA, December 9-12, 2019, pages 5728–5736. IEEE, 2019.

- [7] P. Derbeko, S. Dolev, and E. Gudes. Mldstore - dnns as similitude models for sharing big data (brief announcement). In S. Dolev, D. Hendler, S. Lodha, and M. Yung, editors, Cyber Security Cryptography and Machine Learning - Third International Symposium, CSCML 2019, Beer-Sheva, Israel, June 27-28, 2019, Proceedings, volume 11527 of Lecture Notes in Computer Science, pages 93–96. Springer, 2019.

- [8] C. Gentry. Fully Homomorphic Encryption Using Ideal Lattices. In Proceedings of the 41st Annual ACM Symposium on Theory of Computing, pages 169–178, New York, NY, USA, 2009. ACM.

- [9] R. Gilad-Bachrach, N. Dowlin, K. Laine, K. E. Lauter, M. Naehrig, and J. R. Wernsing. Cryptonets: Applying neural networks to encrypted data with high throughput and accuracy. In ICML, 2016.

- [10] E. Hesamifard, H. Takabi, and M. Ghasemi. Cryptodl: Deep neural networks over encrypted data. CoRR, abs/1711.05189, 2017.

- [11] N. Kumar, M. Rathee, N. Chandran, D. Gupta, A. Rastogi, and R. Sharma. Cryptflow: Secure tensorflow inference, 2019.

- [12] P. Mohassel and Y. Zhang. Secureml: A system for scalable privacy-preserving machine learning. In 2017 IEEE Symposium on Security and Privacy (SP), pages 19–38, May 2017.

- [13] B. D. Rouhani, M. S. Riazi, and F. Koushanfar. Deepsecure: scalable provably-secure deep learning. In DAC, 2018.

- [14] A. Shamir. How to share a secret. Commun. ACM, 22(11):612–613, Nov. 1979.

- [15] S. Wagh, D. Gupta, and N. Chandran. Securenn: Efficient and private neural network training. IACR Cryptology ePrint Archive, 2018:442, 2018.

- [16] P. Xie, M. Bilenko, T. Finley, R. Gilad-Bachrach, K. E. Lauter, and M. Naehrig. Crypto-nets: Neural networks over encrypted data. ArXiv, abs/1412.6181, 2014.