Polarized Self-Attention: Towards High-quality Pixel-wise Regression

Abstract

Pixel-wise regression is probably the most common problem in fine-grained computer vision tasks, such as estimating keypoint heatmaps and segmentation masks. These regression problems are very challenging particularly because they require, at low computation overheads, modeling long-range dependencies on high-resolution inputs/outputs to estimate the highly nonlinear pixel-wise semantics. While attention mechanisms in Deep Convolutional Neural Networks(DCNNs) has become popular for boosting long-range dependencies, element-specific attention, such as Nonlocal blocks, is highly complex and noise-sensitive to learn, and most of simplified attention hybrids try to reach the best compromise among multiple types of tasks. In this paper, we present the Polarized Self-Attention(PSA) block that incorporates two critical designs towards high-quality pixel-wise regression: (1) Polarized filtering: keeping high internal resolution in both channel and spatial attention computation while completely collapsing input tensors along their counterpart dimensions. (2) Enhancement: composing non-linearity that directly fits the output distribution of typical fine-grained regression, such as the 2D Gaussian distribution (keypoint heatmaps), or the 2D Binormial distribution (binary segmentation masks). PSA appears to have exhausted the representation capacity within its channel-only and spatial-only branches, such that there is only marginal metric differences between its sequential and parallel layouts. Experimental results show that PSA boosts standard baselines by points, and boosts state-of-the-arts by points on 2D pose estimation and semantic segmentation benchmarks. Codes are released111https://github.com/DeLightCMU/PSA.

1 Introduction

Recent trends from the coarse-grained (such as image-wise classification [38] and bounding box detection [15]) to the fine-grained computer vision tasks (such as keypoint estimation [31] and segmentation segmentation [62]) have received booming advances in both research and industrial communities. Comparing to the coarse-grained tasks, perception at the pixel-wise level is increasingly appealing in autonomous driving [42], augment reality [7], medical image processing [29], and public surveillance [46].

The goal of the pixel-wise regression problem is to map every image pixels of the same semantics to the same scores. For instance, mapping all the background pixels to 0 and all the foreground pixels to their class indices, respectively. Two typical tasks are keypoint heatmap regression and segmentation mask regression. Most DCNN models for regression problems take an encoder-decoder architecture. The encoder usually consists of a backbone network, such as ResNet [18], that sequentially reduces the spatial resolution and increases the channel resolution, while the decoder usually contains de-convolution/up-sampling operations that recover the spatial resolution and decrease the channel resolution. Typically the tensor connecting the encoder and decoder has an element number smaller than both the input image tensor and the output tensor. The reduction of elements is necessary for computation/memory efficiency and stochastic optimization reasons [16]. However, the pixel appearances and patch shapes of the same semantics are highly nonlinear in nature and therefore difficult to be encoded with a reduced number of features. Moreover, high input-output resolutions are preferred for fine details of objects and object parts [26, 40, 44]. Comparing to the image classification task where an input image is collapsed to an output vector of class indices, the pixel-wise regression problem has a higher problem complexity by the order of output element numbers. From the model design perspective, the pixel-wise regression problem faces special challenges: (1) Keeping high internal resolution at a reasonable cost; (2) Fitting output distribution such as that of the keypoint heatmaps or segmentation masks.

Based on the tremendous success in new DCNNs architectures, we focus on a plug-and-play solution that could consistently improve an existing (vanilla) network, i.e., inserting attention blocks [43][35][8][47][10][50][19][3]. Most of above hybrids try to reach the best compromise among multiple types of tasks, for instance, image classification, object detection, as well as for instance segmentation. These generalized goals are partially the reason that channel-only attention (SE [20], GE [19] and GCNet [3]) are among the most popular blocks. Channel-only attention blocks put the same weights on different spatial locations, such that the classification task still benefits since its spatial information eventually collapses by pooling, and the anchor displacement regression in object detection benefits since the channel-only attention unanimously highlights all foreground pixels. Unfortunately, due to critical differences in attention designs, the channel-spatial compositional attention blocks, (e.g., DA [14], CBAM [48]), did not show significant overall advantages from the latest channel-only attentions such as GCNet [3].

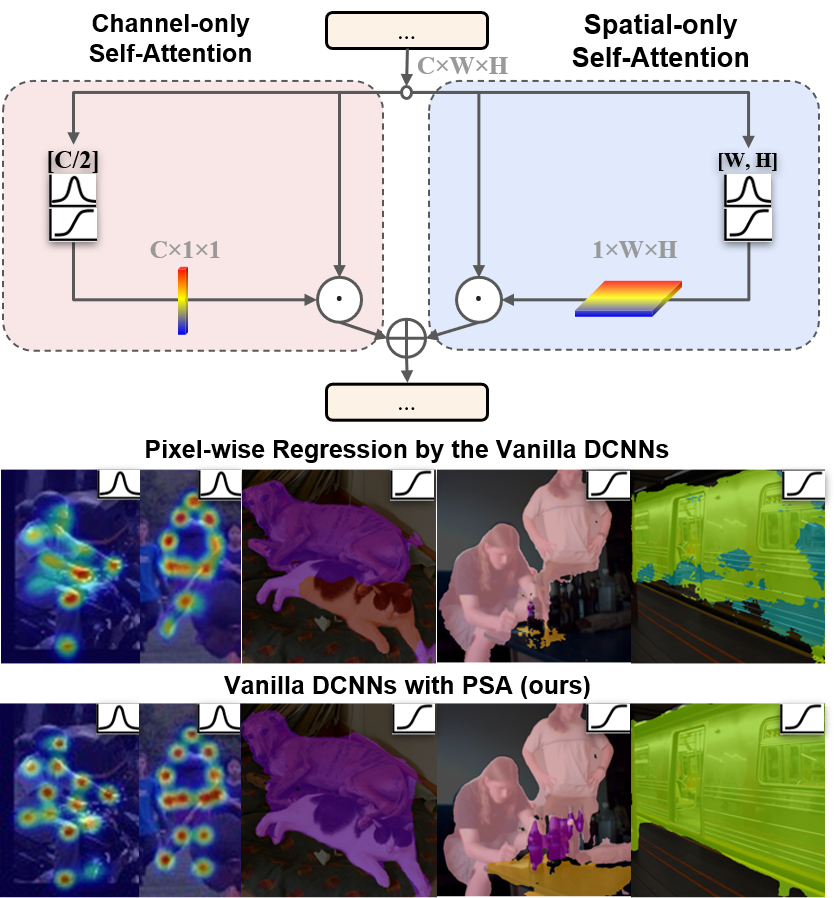

In this paper, we present the Polarized Self-Attention (PSA) block (See Figure 1) for high-quality pixel-wise regression. To preserve the potential loss of high-resolution information in vanilla/baseline DCNNs by pooling/downsampling, PSA keeps the highest internal resolution in attention computation among existing attention blocks (see also Table 1). To fitting the output distribution of typical fine-grained regression, PSA fuse softmax-sigmoid composition in both channel-only and spatial-only attention branches. Comparing to existing channel-spatial compositions [48, 14] that favor particular layouts, there is only marginal metric differences between PSA layouts. This indicates PSA may have exhausted the representation capacity within its channel-only and spatial-only branches. We conducted extensive experiments to demonstrate the direct performance gain of PSA on standard baselines as well as state-of-the-arts.

2 Related Work

Pixel-wise Regression Tasks: The advances of DCNNs for pixel-wise regression are basically pursuing higher resolution. For body keypoint estimation, Simple-Baseline[51] consists of conventional components ResNet+deconvolution. HRnet[40] address the resolution challenge of Simple-Baseline with 4 parallel high-to-low resolution branches and their pyramid fusion. Other most recent variants, DARK-Pose[56] and UDP-Pose[21], both compensate for the loss of resolution due to the preprocessing, post-processing, and propose techniques to achieve a sub-pixel estimation of keypoints. Note that, besides the performance gain among network designs, the same models with and inputs are usually better than that with inputs. This constantly reminds researchers of the importance of keeping high-resolution information. For Semantic segmentation, [4] introduces atrous convolution in the decoder head of Deeplab for wide receptive field on high-resolution inputs. To overcome the limitation of ResNet backbones in Deeplab, all the latest advances are based on HRnet [44], in particular, HRNet-OCR[41] and its variants are the current state-of-the-art. There are many other multitask architecture [17, 63, 6] that include pixel-wise regression as a component.

PSA further pursues the high-resolution goals of the above efforts from the attention perspective and further boosts the above DCNNs.

Self-attention and its Variants. Attention mechanisms have been introduced into many visual tasks to address the weakness of standard convolutions [35][2][1][37][3]. In the self-attention mechanism, each input tensor is used to compute an attention tensor and is then re-weighted by this attention tensor. Self-attention [43][35][8] emerged as a standard component to capture long-range interactions, after it success in sequence modeling and generative modeling tasks. Cordonnier et al. [8] has proven that a multi-head self-attention layer with a sufficient number of heads is at least as expressive as any convolutional layer. In some vision tasks, such as object detection and image classification, self-attention augmented convolution models [2] or standalone self-attention models [37] have yielded remarkable gains. While most self-attention blocks were inserted after convolution blocks, attention-augmented convolution [2] demonstrates that parallelizing the convolution layer and attention block is a more powerful structure to handle both short and long-range dependency.

PSA advances self-attention for pixel-wise regression and could also be used in other variants such as the convolution-augmented attentions.

Full-tensor and simplified attention blocks. The basic non-local block (NL) [47] and its variants, such as a residual form [59] second-order non local [10][50], and asymmetric non-local [64], produce full-tensor attentions and have successfully improved person re-identification, image super-resolution, and semantic segmentation tasks. To capture pair-wise similarities among all feature elements, the NL block computes an extremely large similarity matrix between the key feature maps and query feature maps, leading to huge memory and computational costs. EA [39] produces a low-rank approximation of NL block for computation efficiency. BAM [33],DAN [14] and CBAM [48] produce different compositions of the channel-only and spatial-only attentions. Squeeze-and-Excitation (SENet) [20], Gather-Excite [19] and GCNet [3] only re-weight feature channels using signals aggregated from global context modeling. Most of above attention blocks were designed as a compromise among multiple types of tasks, and do not address the specific challenges in fine-grained regression.

PSA address the specific challenges in fine-grained regression by keeping the highest attention resolution among existing attention blocks, and directly fitting the typical output distributions.

3 Our Method

Notations:222All non-bold letters represent scalars. Bold capital letter denotes a matrix; Bold lower-case letters is a column vector. represents the column vector of the matrix . denotes the element of . denotes the inner-product between two vectors or metrics. Denote as a feature tensor of one sample (e.g., one image), where are the number of elements along the height, width, and channel dimension of , respectively. where is a feature vector along the channel dimension. A self-attention block takes as input, and produces a tensor as output, where . A DCNN block is formulated as a nonlinear mapping . The possible operators of the network block include: the convolution layer , the batch norm layer , the ReLU activation layer , softmax . Without losing generality, all the convolution layers in attention blocks are the () convolution, denoted by . For simplicity, we only consider the case where the input tensor and output tensor of a DCNN block have the same dimension (i.e., ).

3.1 Self-Attention for Pixel-wise Regression

A DCNN for pixel-wise regression learns a weighted combination of features along two dimensions: (1) channel-specific weighting to estimate the class-specific output scores; (2) spatial-specific weighting to detect pixels of the same semantics. The self-attention mechanism applied to the DCNN is expected to further highlight features for both above goals.

Ideally, with a full-tensor self-attention (), the highlighting could potentially be achieved at the element-wise granularity ( elements). However, the attention tensor is very complex and noise-prone to learn directly. In the Non-Local self-attention block [47], is calculated as,

| (1) |

There are four () convolution kernels, i.e., ,, , and , that learns the linear combination of spatial features among different channels. Within the same channels, the outer-product between and activates any features at different spatial locations that have a similar intensity. The joint activation mechanism of spatial features is very likely to highlight the spatial noise. The only actual weights, s, are channel-specific instead of spatial-specific, making the Non-Local attention exceptionally redundant at the huge memory-consumption of the matrix. For efficient computation, reduction of NL leads to many possibilities: Low rank approximation of (EA), Channel-only self-attention that highlight the same global context for all pixels(GC [3] and SE [19] ), Spatial-only self-attention not powerful enough to be recognized as a standalone model, Channel-spatial composition , where the parallel composition: and the sequential composition: introduce different order of non-linearity. Different conclusions were empirically drawn, such as CBAM [48] (sequentialparallel) and DA [14] (parallelsequential), which partially indicates that the intended non-linearity of the tasks are not fully modeled within the attention blocks.

These issues are typical examples of general attention design that does not target the pixel-wise regression problem. With the help of Table 1, we re-visit critical design aspects of existing attention blocks and raise challenges on how to achieve both channel-specific and spatial-specific weighting for pixel-wise regression. (All the attention blocks are compared with their top-performance configurations.)

| Method | ch. resolution | sp. resolution | non-linearity | complexity |

|---|---|---|---|---|

| NL[47] | SM | |||

| GC [3] | - | SM+ReLU | ||

| SE [19] | - | ReLU+SD | ||

| CBAM [48] | SD | |||

| DA [14] | SM | |||

| EA [39] | () | () | SM | |

| PSA(ours) | SM+SD |

Internal Attention Resolution. Recall that most pixel-wise regression DCNNs use the same backbone networks, e.g., ResNet, as the classification (i.e., image recognition) and coordinate regression(i.e. bbox detection, instance segmentation) tasks. For robustness and computational efficiency, these backbones produce low-resolution features, for instance for the classification and for bbox detection, where is the longest side pixels of the smallest object bounding box. Pixel-wise regression cannot afford such loss of resolution, especially because the highly non-linearity in object edges and body parts are very difficult to encode in low-resolution features [4, 44, 40].

Using these backbones in pixel-wise regression, self-attention blocks are expected to preserve high-resolution semantics in attention computation. However, in Table 1, all the reductions of NL reach their top performance at a lower internal resolution. Since their performance metrics are far from perfect, the natural question to ask is: are there better non-linearity that could leverages higher resolution information in attention computation?

Output Distribution/Non-linearity. In DCNNs for pixel-wise regression, outputs are usually encoded as 3D tensors. For instance, the 2D keypoint coordinates are encoded as a stack of 2D Gaussian maps . The pixel-wise class indices are encoded as a stack of binary maps which follows the Binormial distribution. Non-linearity that directly fits the distribution upon linear transformations (such as convolution) could potentially alleviate the learning burden of DCNNs. The natural nonlinear functions to fit the above distributions are SoftMax for 2D Gaussian maps, and Sigmoid for 2D Binormial Distribution. However, none of the existing attention blocks in Table 1 contains such a combination of nonlinear functions.

3.2 Polarized Self-Attention (PSA) Block

Our solution to the above challenges is to conduct “polarized filtering” in attention computation. A self-attention block operates on an input tensor to highlight or suppress features, which is very much like optical lenses filtering the light. In photography, there are always random lights in transverse directions that produce glares/reflections. Polarized filtering, by only allowing the light pass orthogonal to the transverse direction, can potentially improve the contrast of the photo. Due to the loss of total intensity, the light after filtering usually has a small dynamic range, therefore needs a additional boost, e.g. by High Dynamic Range (HDR), to recover the details of the original scene.

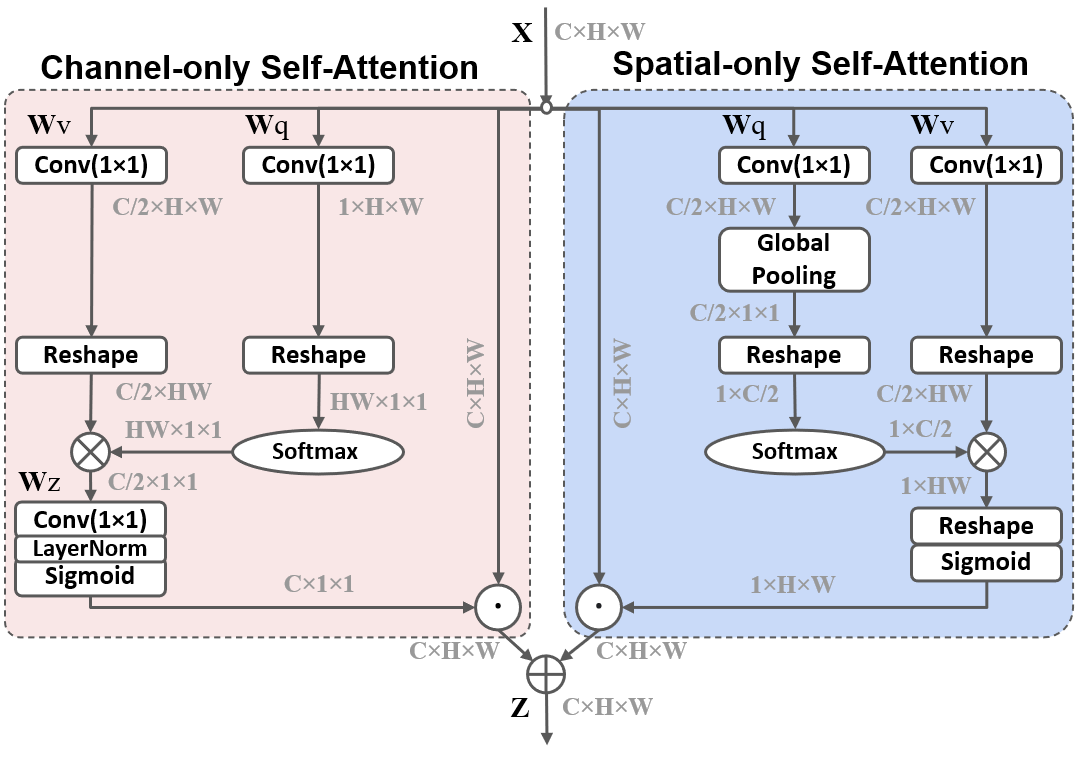

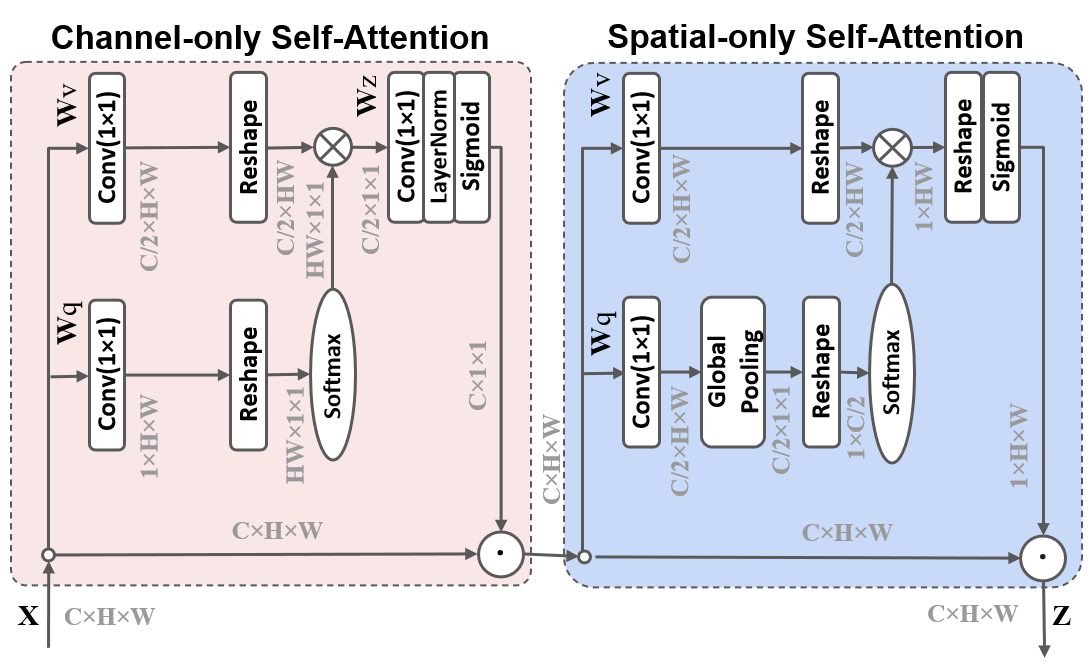

We borrow the key factors of photography, and propose the Polarized Self-Attention (PSA) mechanism: (1) Filtering: completely collapse features in one direction while preserving high-resolution in its orthogonal direction; (2) HDR: increase the dynamic range of attention by Softmax normalization at the bottleneck tensor (smallest feature tensor in attention block), followed by tone-mapping with the Sigmoid function. Formally, we instantiate the PSA mechanism as a PSA block below (also see diagram in Figure 2):

Channel-only branch :

| (2) |

where and are convolution layers respectively, and are two tensor reshape operators, and is a SoftMax operator and ”” is the matrix dot-product operation . The internal number of channels, between and , is . The output of channel-only branch is , where is a channel-wise multiplication operator.

Spatial-only branch :

| (3) |

where and are standard convolution layers respectively, is an intermediate parameter for these channel convolutions, and , and are three tensor reshape operators, and is the SoftMax operator. is a global pooling operator , and is the matrix dot-product operation. The output of spatial-only branch is , where is a spatial-wise multiplication operator.

Composition: The outputs of above two branches are composed either under the parallel layout

or under the sequential layout

where ”+” is the element-wise addition operator.

Relation of PSA to other Self-Attentions: We add PSA to Table 1 and make the following observations:

-

•

Internal Resolution vs Complexity: Comparing to existing attention blocks under their top configuration, PSA preserves the highest attention resolution for both the channel ()333 is the smallest channel number when PSA produces the best metrics, and is used throughout our experiments. and spatial () dimension.

Moreover, in our channel-only attention, the Softmax re-weighting is fused with squeeze-excitation leveraging Softmax as the nonlinear activation at the bottleneck tensor of size . The channel numbers -- follow a squeeze-excitation pattern that benefited both GC and SE blocks. Our design conducts higher-resolution squeeze-and-excitation while at comparable computation complexity of the GC block.

Our spatial-only attention not only keeps the full spatial resolution, but also internally keeps learnable parameters in and for the nonlinear Softmax re-weighting, which is more powerful structure than existing blocks. For instance, the spatial-only attention in CBAM is parameterized by a convolution (a linear operator), and EA learns parameters for linear re-weighting ( ).

-

•

Output Distribution/Non-linearity. Both the PSA channel-only and spatial-only branches use a Softmax-Sigmoid composition. Considering the Softmax-Sigmoid composition as a probability distribution function, both the multi-mode Gaussian maps (keypoint heatmaps) and the piece-wise Binomial maps (segmentation masks) can be approximated upon linear transformations, i.e. convolutions in PSA. We therefore expect the non-linearity could fully leverage the high resolution information preserved within in PSA attention branches.

4 Experiments

Implementation details. For any baseline networks with the bottleneck or basic residual blocks, such as ResNet and HRnet, we add PSAs after the first convolution in every residual blocks, respectively. For 2D pose estimation, we kept the same training strategy and hyper-parameters as the baseline networks. For semantic segmentation, we added a warming-up training phase of 5000 iterations, stretched the total training iteration by , and kept all the rest training strategy and hyper-parameters of the baseline networks. Empirically, these changes allow PSA to train smoothly on semantic segmentation.

4.1 PSA vs. Baselines

We first add PSA blocks to standard baseline networks of the following tasks.

| Method | Backbone | ImageNet Pretrain | Flops | mPara | ||||||

|---|---|---|---|---|---|---|---|---|---|---|

| Simple-Baseline [51] | Res50 | Y | 72.2 | 89.3 | 78.9 | 68.1 | 79.7 | 77.6 | 20.0G | 34.0M |

| +PSA | Res50 | N | 76.5(+4.3) | 93.6 | 83.6 | 73.2 | 81.0 | 79.0 | 20.9G | 36.1M |

| Simple-Baseline [51] | Res152 | Y | 74.3 | 89.6 | 81.1 | 70.5 | 81.6 | 79.7 | 35.3G | 68.6M |

| +PSA | Res152 | N | 78.0(+3.7) | 93.6 | 84.8 | 75.2 | 82.3 | 80.5 | 37.5G | 75.2M |

| HRNet [40] | HRNet-W32 | Y | 75.8 | 90.6 | 82.5 | 72.0 | 82.7 | 80.9 | 16.0G | 28.5M |

| +PSA | HRNet-W32 | Y | 78.7(+2.9) | 93.6 | 85.9 | 75.6 | 83.5 | 81.1 | 17.1G | 31.4M |

| HRNet [40] | HRNet-W48 | Y | 76.3 | 90.8 | 82.9 | 72.3 | 83.4 | 81.2 | 32.9G | 63.6M |

| +PSA | HRNet-W48 | Y | 78.9(+2.6) | 93.6 | 85.7 | 75.8 | 83.8 | 81.4 | 35.2G | 70.0M |

| Method | Backbone | mIoU | Flops | mPara |

|---|---|---|---|---|

| DeepLabV3Plus [4] | MobileNet | 71.1 | 16.9G | 5.22M |

| +PSA | MobileNet | 73.7(+2.6) | 17.1G | 5.22M |

| DeepLabV3Plus [4] | Res50 | 77.2 | 62.5G | 39.8M |

| +PSA | Res50 | 79.0(+1.8) | 65.2G | 42.3M |

| DeepLabV3Plus [4] | Res101 | 78.3 | 83.2G | 58.8M |

| +PSA | Res101 | 80.3(+2.0) | 87.7G | 63.5M |

Top-Down 2D Human Pose Estimation: Among the DCNN approaches for 2D human pose estimation, the top-down approaches generally dominate the top metrics. This top-down pipeline consists of a person bounding box detector and a keypoint heatmap regressor. Specifically, we use the pipelines in [51] and [40] as our baselines. An input image is first processed by a human detector [51] of AP (Average Precision) on MS-COCO val2017 dataset [28]. Then all the detected human image patches are cropped from the input image and resized to . Finally, the image patches are used for keypoint heatmap regression by a single person pose estimator. The output heatmap size is .

We add PSA on Simple-Baseline [51] with the Resnet50/152 backbones and HRnet [40] with the HRnet-w32/w48 backbones. The results on MS-COCO val2017 are shown in Table 2. PSA boosts all the baseline networks by to AP with minor overheads of computation (Flops) and the number of parameters(mPara). Even without ImageNet pre-training, PSA with “Res50” backbone gets AP, which is not only better than Simple-Baseline with Resnet50 backbone, but also better than Simple-Baseline even with Resnet152 backbone. A similar benefit is also observed on PSA with HRNet-W32 backbone outperforms the baseline with “HR-w48” backbone. This giant performance gains of PAS and the small overheads make PSA+HRNet-W32 the most cost-effective model among all models in Table 2.

Semantic Segmentation. This task maps an input image to a stack of segmentation masks, one output mask for one semantic class. In Table 3, we compare PSA with the DeepLabV3Plus [4] baseline on the Pascal VOC2012 Aug [12] (21 classes, input image size , output mask size ). PSA boosts all the baseline networks by to mIoU(mean Intersection over Union) with minor overheads of computation (Flops) and the number of parameters (mPara). PSA with “Res50” backbone got mIoU, which is not only better than the DeepLabV3Plus with the Resnet50 backbone, but also better than DeepLabV3Plus even with Resnet101.

4.2 Comparing with State-of-the Arts

We then apply PSA to the current state-of-the-arts of above tasks. Top-down 2D Human Pose Estimation. To our knowledge, the current state-of-the-art results by single models were achieved by UDP-HRnet with 65.1mAP bbox detector on the MS-COCO keypoint testdev set. In Table 4, we add PSA to the UDP-Pose with HRnet-W48 backbone and achieve a new state-of-the-art AP of . PSA boosts UDP-Pose (baseline) by points (see Figure 3 (a) for their qualitative comparison).

Note that there is only a subtle metric difference between the parallel (p) and sequential(s) layout of PSA. We believe this partially validate that our design of the channel-only and spatial-only attention blocks has exhausted the representation power along the channel and spatial dimension.

| Method | Backbone | Input Size | Flops | mPara | ||||||

|---|---|---|---|---|---|---|---|---|---|---|

| 8-stage Hourglass [32] | 8-stage Hourglass | 66.9 | - | - | - | - | - | 14.3G | 25.1M | |

| CPN [5] | ResNet50 | 68.6 | - | - | - | - | - | 6.2G | 27.0M | |

| CPN + OHKM [5] | ResNet50 | 69.4 | - | - | - | - | - | 6.2G | 27.0M | |

| SimpleBaseline [51] | ResNet50 | 70.4 | 88.6 | 78.3 | 67.1 | 77.2 | 76.3 | 8.90G | 34.0M | |

| SimpleBaseline [51] | ResNet101 | 71.4 | 89.3 | 79.3 | 68.1 | 78.1 | 77.1 | 12.4G | 53.0M | |

| SimpleBaseline [51] | ResNet152 | 72.0 | 89.3 | 79.8 | 68.7 | 78.9 | 77.8 | 15.7G | 72.0M | |

| HRNet-W32 [40] | HRNet | 74.4 | 90.5 | 81.9 | 70.8 | 81.0 | 78.9 | 7.10G | 28.9M | |

| HRNet-W48 [40] | HRNet | 75.1 | 90.6 | 82.2 | 71.5 | 81.8 | 80.4 | 14.6G | 63.6M | |

| Dark-Pose [56] | HRNet-W32 | 75.6 | 90.5 | 82.1 | 71.8 | 82.8 | 80.8 | 7.1G | 28.5M | |

| UDP-Pose [21] | HRNet-W48 | 77.2 | 91.8 | 83.7 | 73.8 | 83.7 | 82.0 | 14.7G | 63.8M | |

| SimpleBaseline [51] | ResNet152 | 74.3 | 89.6 | 81.1 | 70.5 | 79.7 | 79.7 | 35.6G | 68.6M | |

| HRNet-W32 [40] | HRNet | 75.8 | 90.6 | 82.7 | 71.9 | 82.8 | 81.0 | 16.0G | 28.5M | |

| HRNet-W48 [40] | HRNet | 76.3 | 90.8 | 82.9 | 72.3 | 83.4 | 81.2 | 32.9G | 63.6M | |

| Dark-Pose [56] | HRNet-W48 | 76.8 | 90.6 | 83.2 | 72.8 | 84.0 | 81.7 | 32.9G | 63.6M | |

| UDP-Pose [21] | HRNet-W48 | 76.2 | 92.5 | 83.6 | 72.5 | 82.4 | 81.1 | 33.0G | 63.8M | |

| UDP-Pose [21] (Strong Baseline) | HRNet-W48 | 77.8 | 92.0 | 84.3 | 74.2 | 84.5 | 82.5 | 33.0G | 63.8M | |

| Ours | ||||||||||

| UDP-Pose-PSA(p) | HRNet-W48 | 78.9 | 93.6 | 85.8 | 76.1 | 83.6 | 81.4 | 15.7G | 70.1M | |

| UDP-Pose-PSA(p) | HRNet-W48 | 79.5 | 93.6 | 85.9 | 76.3 | 84.3 | 81.9 | 35.4G | 70.1M | |

| UDP-Pose-PSA(s) | HRNet-W48 | 79.4 | 93.6 | 85.8 | 76.1 | 84.1 | 81.7 | 35.4G | 69.1M |

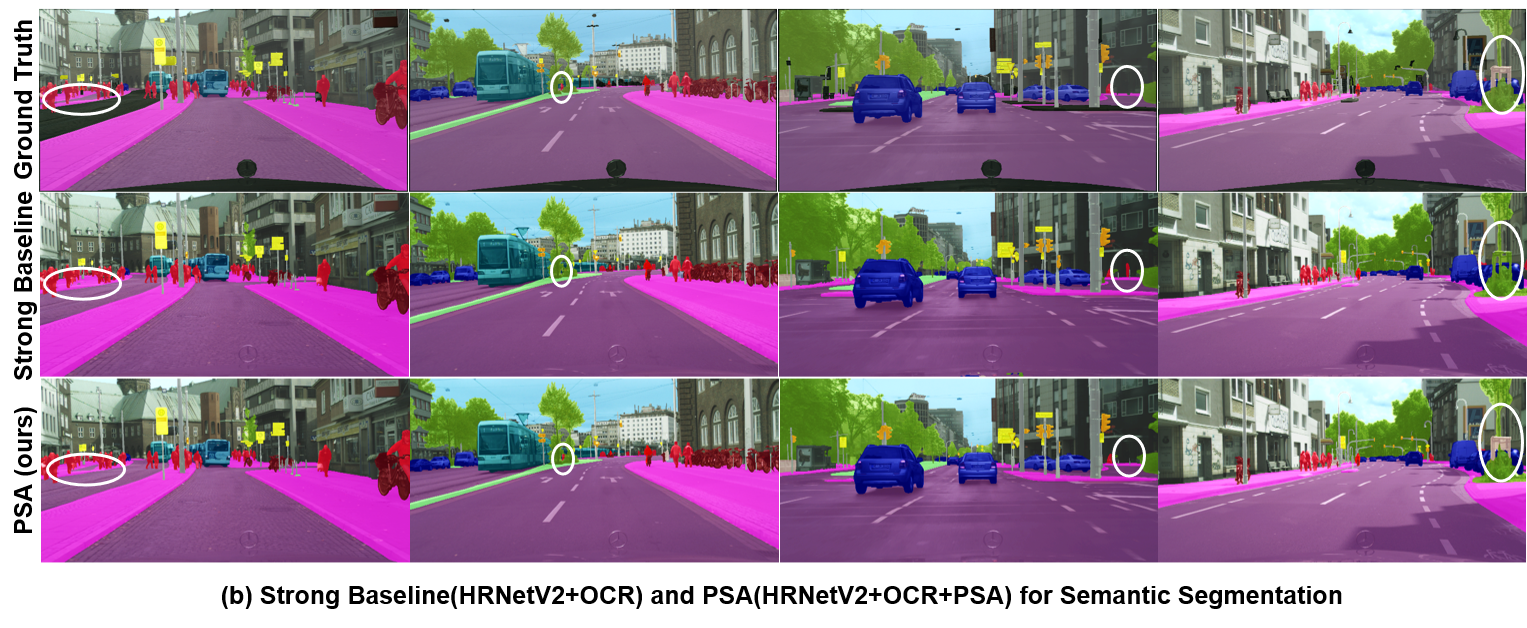

Semantic Segmentation. To our knowledge, the current state-of-the-art results by single models were produced by HRNet-OCR(MA) [41] on the Cityscapes validation set [9](19 classes, input image size , output mask size ). In Table 5, we add PSA to the basic configuration of HRNet-OCR and achieve the new state-of-the-arts mIoU of . PSA boosts HRNet-OCR (strong baseline) by points(see Figure 3 (b) for their qualitative comparison). Again that there is only a subtle metric difference between the PSA results under the parallel(p) layout and the sequential(s) layout.

| Method | Backbone | mIoU | iIoU cla. | IoU cat. | iIoU cat. |

|---|---|---|---|---|---|

| GridNet [13] | - | 69.5 | 44.1 | 87.9 | 71.1 |

| LRR-4x | - | 69.7 | 48.0 | 88.2 | 74.7 |

| DeepLab [4] | D-ResNet-101 | 70.4 | 42.6 | 86.4 | 67.7 |

| LC | - | 71.1 | - | - | - |

| Piecewise [27] | VGG-16 | 71.6 | 51.7 | 87.3 | 74.1 |

| FRRN [36] | - | 71.8 | 45.5 | 88.9 | 75.1 |

| RefineNet [26] | ResNet-101 | 73.6 | 47.2 | 87.9 | 70.6 |

| PEARL [23] | D-ResNet-101 | 75.4 | 51.6 | 89.2 | 75.1 |

| DSSPN [25] | D-ResNet-101 | 76.6 | 56.2 | 89.6 | 77.8 |

| LKM [34] | ResNet-152 | 76.9 | - | - | - |

| DUC-HDC [45] | - | 77.6 | 53.6 | 90.1 | 75.2 |

| SAC [58] | D-ResNet-101 | 78.1 | - | - | - |

| DepthSeg [24] | D-ResNet-101 | 78.2 | - | - | - |

| ResNet38 [49] | WResNet-38 | 78.4 | 59.1 | 90.9 | 78.1 |

| BiSeNet [53] | ResNet-101 | 78.9 | - | - | - |

| DFN [54] | ResNet-101 | 79.3 | - | - | - |

| PSANet [61] | D-ResNet-101 | 80.1 | - | - | - |

| PADNet [52] | D-ResNet-101 | 80.3 | 58.8 | 90.8 | 78.5 |

| CFNet [57] | D-ResNet-101 | 79.6 | - | - | - |

| Auto-DeepLab [30] | - | 80.4 | - | - | - |

| DenseASPP [60] | WDenseNet-161 | 80.6 | 59.1 | 90.9 | 78.1 |

| SVCNet [11] | ResNet-101 | 81.0 | - | - | - |

| ANN [65] | D-ResNet-101 | 81.3 | - | - | - |

| CCNet [22] | D-ResNet-101 | 81.4 | - | - | - |

| DANet [14] | D-ResNet-101 | 81.5 | - | - | - |

| HRNetV2 [44] | HRNetV2-W48 | 81.6 | 61.8 | 92.1 | 82.2 |

| HRNetV2+OCR [55] | HRNetV2-W48 | 84.9 | - | - | - |

| HRNetV2+OCR(MA) [41] (Strong Baseline) | HRNetV2-W48 | 85.4 | - | - | - |

| Ours | |||||

| HRNetV2-OCR+PSA(p) | HRNetV2-W48 | 86.95 | 71.6 | 92.8 | 85.0 |

| HRNetV2-OCR+PSA(s) | HRNetV2-W48 | 86.72 | 71.3 | 92.3 | 82.8 |

4.3 Ablation Study

In Table 6, we conduct an ablation study of PSA configurations on Simple-Baseline(Resnet50) [51] and compare PSAs with other related self-attention methods. All the overheads, such as Flops, mPara, inference GPU memory(”Mem.”), and inference time (”Time”)) are inference costs of one sample. To reduce the randomness in CUDA and Pytorch scheduling, we ran inference on MS-COCO val2017 using 4 TITAN RTX GPUs, batchsize 128 (batchsize 32/GPU), and averaged over the number of samples.

From the results of ”PSA ablation” in Table 6, we observe that (1) the channel-only block () outperform spacial-only attention (), but can be further boosted by their parallel ([]) or sequential () compositions; (2) The parallel ([]) or sequential () compositions has similar AP, Flops, mPara, inference memory(Mem.), and inference (Time.).

From the results of ”related self-attention methods”, we observe that (1) the NL block costs the most memory while produces the least boost (AP) over the baseline, indicating that NL is highly redundant. (2) The channel-only attention GC is better than SE since it includes SE. GC is even better than channel+spatial attention CBAM because the inner-product-based attention mechanism in GC is more powerful than the convolution/MLP-based CBAM. (3) PSA is the best channel-only attention block over GC and SE. We believe PSA benefits from its highest channel resolution () and its output design. (4) The channel+spatial attention CBAM with a relatively early design is still better than the channel-only attention SE. (5) Under the same sequential layout of spatial and channel attention, PSA is significantly better than CBAM. Finally, (6) At similar overheads, both the parallel and sequential PSAs are better than the compared blocks.

| Method | Flops | mPara | Mem.(MiB) | Time(ms) | ||||||

|---|---|---|---|---|---|---|---|---|---|---|

| Simple-Baseline(ResNet50) [51] | 72.2 | 89.3 | 78.9 | 68.1 | 79.7 | 77.6 | 20.0G | 34.0M | 1.43 | 2.56 |

| PSA ablation | ||||||||||

| + | 76.3(+4.1) | 92.6 | 83.6 | 73.0 | 80.8 | 78.9 | 20.4G | 35.3M | 1.49 | 2.58 |

| + | 75.0(+2.8) | 92.6 | 81.6 | 71.5 | 80.2 | 77.7 | 20.7G | 35.3M | 1.45 | 2.63 |

| +[] (PSA(p)) | 76.5(+4.3) | 93.6 | 83.6 | 73.2 | 81.0 | 79.0 | 20.9G | 36.5M | 1.54 | 2.70 |

| + (PSA(s)) | 76.6(+4.4) | 93.6 | 83.6 | 73.2 | 81.2 | 79.1 | 20.9G | 36.5M | 1.52 | 2.71 |

| Related self-attention methods | ||||||||||

| + (NL [47]) | 74.5(+2.3) | 92.6 | 81.5 | 70.9 | 79.9 | 77.3 | 21.1G | 36.5M | 10.97 | 2.76 |

| + (GC [3]) | 76.1(+3.9) | 92.6 | 82.7 | 72.9 | 80.9 | 78.7 | 20.2G | 34.3M | 1.47 | 2.69 |

| + (SE [20]) | 75.7(+3.5) | 93.6 | 82.6 | 72.4 | 80.8 | 78.3 | 20.2G | 34.2M | 1.29 | 2.94 |

| + (CBAM [48]) | 75.9(+3.7) | 92.6 | 82.7 | 72.9 | 80.7 | 78.7 | 20.2G | 34.3M | 1.49 | 2.96 |

5 Conclusion and Future Work

We presented the Polarized Self-Attention(PSA) block towards high-quality pixel-wise regression. PSA significantly boosts all compared DCNNs for two critical designs (1) keeping high internal resolution in both polarized channel-only and spatial-only attention branches, and (2) incorporating a nonlinear composition that fully leverages the high-resolution information preserved in the PSA branches. PSA can potentially benefit any computer vision tasks with pixel-wise regression.

It is still not clear how PSA would best benefit pixel-wise regression embedded with the classification and displacement regression in complex DCNN heads, such as those in the instance segmentation, anchor-free object detection and panoptic segmentation tasks. To our knowledge, most existing work with self-attention blocks only inserted blocks in the backbone networks. Our future work is to explore the use of PSAs in DCNN heads.

References

- [1] Jean-Marc Andreoli. Convolution, attention and structure embedding. In NIPS, 2019.

- [2] Irwan Bello, Barret Zoph, Ashish Vaswani, Jonathon Shlens, and Quoc V. Le. Attention augmented convolutional networks. In ICCV, 2019.

- [3] Yue Cao, Jiarui Xu, Stephen Lin, Fangyun Wei, and Han Hu. Gcnet: Non-local networks meet squeeze-excitation networks and beyond. In ICCV, 2019.

- [4] Liang-Chieh Chen, George Papandreou, Iasonas Kokkinos, Kevin Murphy, and Alan L. Yuille. Deeplab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected crfs. IEEE Transactions on Pattern Analysis and Machine Intelligence, 40(4):834–848, 2017.

- [5] Yilun Chen, Zhicheng Wang, Yuxiang Peng, Zhiqiang Zhang, Gang Yu, and Jian Sun. Cascaded pyramid network for multi-person pose estimation. In CVPR, 2018.

- [6] Bowen Cheng, Maxwell D Collins, Yukun Zhu, Ting Liu, Thomas S Huang, Hartwig Adam, and Liang-Chieh Chen. Panoptic-deeplab: A simple, strong, and fast baseline for bottom-up panoptic segmentation. In CVPR, 2020.

- [7] Han-Pang Chiu, Varun Murali, Ryan Villamil, G. Drew Kessler, Supun Samarasekera, and Rakesh Kumar. Augmented reality driving using semantic geo-registration. In IEEE Conference on Virtual Reality and 3D User Interfaces (VR), 2018.

- [8] Jean-Baptiste Cordonnier, Andreas Loukas, and Martin Jaggi. On the relationship between self-attention and convolutional layers. In ICLR, 2020.

- [9] Marius Cordts, Mohamed Omran, Sebastian Ramos, Timo Rehfeld, Markus Enzweiler, Rodrigo Benenson, Uwe Franke, Stefan Roth, and Bernt Schiele. The cityscapes dataset for semantic urban scene understanding. In CVPR, 2016.

- [10] Tao Dai, Jianrui Cai, Yongbing Zhang, Shu-Tao Xia, and Lei Zhang. Second-order attention network for single image super-resolution. In CVPR, 2019.

- [11] Henghui Ding, Xudong Jiang, Bing Shuai, Ai Qun Liu, and Gang Wang. Semantic correlation promoted shape-variant context for segmentation. In CVPR, 2019.

- [12] Mark Everingham, S. M. Ali Eslami, Luc Van Gool, Christopher K. I. Williams, John Winn, and Andrew Zisserman. The pascal visual object classes challenge: A retrospective. International Journal of Computer Vision, 111(1):98–136, 2015.

- [13] Damien Fourure, Rémi Emonet, Elisa Fromont, Damien Muselet, Alain Tremeau, and Christian Wolf. Residual conv-deconv grid network for semantic segmentation. In BMCV, 2017.

- [14] Jun Fu, Jing Liu, Haijie Tian, Yong Li, Yongjun Bao, Zhiwei Fang, and Hanqing Lu. Dual attention network for scene segmentation. In CVPR, 2019.

- [15] Ross Girshick. Fast r-cnn. In ICCV, 2015.

- [16] Ian Goodfellow, Yoshua Bengio, and Aaron Courville. Deep Learning. MIT Press, 2016. http://www.deeplearningbook.org.

- [17] Kaiming He, Georgia Gkioxari, Piotr Dollár, and Ross Girshick. Mask r-cnn. In ICCV, 2017.

- [18] Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Deep residual learning for image recognition. In CVPR, 2016.

- [19] Jie Hu, Li Shen, Samuel Albanie, Gang Sun, and Andrea Vedaldi. Gather-excite: Exploiting feature context in convolutional neural networks. In NIPS, 2018.

- [20] Jie Hu, Li Shen, Samuel Albanie, Gang Sun, and Enhua Wu. Squeeze-and-excitation networks. In CVPR, 2018.

- [21] Junjie Huang, Zheng Zhu, Feng Guo, and Guan Huang. The devil is in the details: Delving into unbiased data processing for human pose estimation. In CVPR, 2020.

- [22] Zilong Huang, Xinggang Wang, Yunchao Wei, Lichao Huang, Humphrey Shi, Wenyu Liu, and Thomas S. Huang. Ccnet: Criss-cross attention for semantic segmentation. In ICCV, 2019.

- [23] Xiaojie Jin, Xin Li, Huaxin Xiao, Xiaohui Shen, Zhe Lin, Jimei Yang, Yunpeng Chen, Jian Dong, Luoqi Liu, Zequn Jie, Jiashi Feng, and Shuicheng Yan. Video scene parsing with predictive feature learning. In ICCV, 2017.

- [24] Shu Kong and Charless Fowlkes. Recurrent scene parsing with perspective understanding in the loop. In CVPR, 2018.

- [25] Xiaodan Liang, Hongfei Zhou, and Eric Xing. Dynamic-structured semantic propagation network. In CVPR, 2018.

- [26] Guosheng Lin, Anton Milan, Chunhua Shen, and Ian Reid. Refinenet: Multi-path refinement networks for high-resolution semantic segmentation. In CVPR, 2017.

- [27] Guosheng Lin, Chunhua Shen, Anton van dan Hengel, and Ian Reid. Efficient piecewise training of deep structured models for semantic segmentation. In CVPR, 2016.

- [28] Tsung-Yi Lin, Michael Maire, Serge Belongie, Lubomir Bourdev, Ross Girshick, James Hays, Pietro Perona, Deva Ramanan, C. Lawrence Zitnick, and Piotr Dollár. Microsoft coco: Common objects in context, 2014. arXiv:1405.0312.

- [29] Geert Litjens, Thijs Kooi, Babak Ehteshami, Bejnordi Arnaud, Arindra Adiyoso, and Setio Francesco. A survey on deep learning in medical image analysis. Medical Image Analysis, 42:60–88, 2017.

- [30] Chenxi Liu, Liang-Chieh Chen, Florian Schroff, Hartwig Adam, Wei Hua, Alan Yuille, and Li Fei-Fei. Auto-deeplab: Hierarchical neural architecture search for semantic image segmentation. In CVPR, 2019.

- [31] Zhengxiong Luo, Zhicheng Wang, Yan Huang, Tieniu Tan, and Erjin Zhou. Rethinking the heatmap regression for bottom-up human pose estimation. In CVPR, 2021.

- [32] Alejandro Newell, Kaiyu Yang, and Jia Deng. Stacked hourglass networks for human pose estimation. In ECCV, 2016.

- [33] Jongchan Park, Sanghyun Woo, Joon-Young Lee, and In SoKweon. Bam: bottleneck attention module. In BMVC, 2018.

- [34] Chao Peng, Xiangyu Zhang, Gang Yu, Guiming Luo, and Jian Sun. Large kernel matters – improve semantic segmentation by global convolutional network. In CVPR, 2017.

- [35] Ashish Vaswani Peter Shaw, Jakob Uszkoreit. Self-attention with relative position representations. 2018. arXiv:1803.02155.

- [36] Tobias Pohlen, Alexander Hermans, Markus Mathias, and Bastian Leibe. Full-resolution residual networks for semantic segmentation in street scenes. In CVPR, 2017.

- [37] Prajit Ramachandran, Niki Parmar, Ashish Vaswani, Irwan Bello, Anselm Levskaya, and Jonathon Shlens. Stand-alone self-attention in vision models. In NIPS, 2019.

- [38] Olga Russakovsky, Hao Su Jia Deng, Jonathan Krause, Sanjeev Satheesh, Sean Ma, Zhiheng Huang, Andrej Karpathy, Aditya Khosla, Michael Bernstein, Alexander C. Berg, and Li Fei-Fei. Imagenet large scale visual recognition challenge. International Journal of Computer Vision, 115:211–252, 2015.

- [39] Zhuoran Shen, Mingyuan Zhang, Haiyu Zhao, Shuai Yi, and Hongsheng Li. Efficient attention: Attention with linear complexities, 2020. arXiv:1812.01243.

- [40] Ke Sun, Bin Xiao, Dong Liu, and Jingdong Wang. Deep high-resolution representation learning for human pose estimation. In CVPR, 2019.

- [41] Andrew Tao, Karan Sapra, and Bryan Catanzaro. Hierarchical multi-scale attention for semantic segmentation. In arXiv preprint arXiv:2005.10821, 2020.

- [42] Michael Treml, José Arjona-Medina, Thomas Unterthiner, Rupesh Durgesh, Felix Friedmann, Peter Schuberth, Andreas Mayr, Martin Heusel, Markus Hofmarcher, Michael Widrich, Bernhard Nessler, and Sepp Hochreiter. Speeding up semantic segmentation for autonomous driving. In NIPS, 2016.

- [43] Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit abd Llion Jones, Aidan N. Gomez, Lukasz Kaiser, and Illia Polosukhin. Attention is all you need. In NIPS, 2017.

- [44] Jingdong Wang, Ke Sun, Tianheng Cheng, Borui Jiang, Chaorui Deng, Yang Zhao, Dong Liu, Yadong Mu, Mingkui Tan, Xinggang Wang, Wenyu Liu, , and Bin Xiao. Deep high-resolution representation learning for visual recognition. IEEE Transactions on Pattern Analysis and Machine Intelligence, 10:5686–5696, 2020.

- [45] Panqu Wang, Pengfei Chen, Ye Yuan, Ding Liu, Zehua Huang, Xiaodi Hou, and Garrison Cottrell. Understanding convolution for semantic segmentation. In WACV, 2018.

- [46] Qi Wang, Junyu Gao, Wei Lin, and Yuan Yuan. Pixel-wise crowd understanding via synthetic data. International Journal of Computer Vision, 129:225–245, 2021.

- [47] Xiaolong Wang, Ross Girshick, Abhinav Gupta, and Kaiming He. Non-local neural networks. In CVPR, 2018.

- [48] Sanghyun Woo, Jongchan Park, Joon-Young Lee, and In So Kweon. Cbam: Convolutional block attention module. In ECCV, 2018.

- [49] Zifeng Wu, Chunhua Shen, and Anton van den Hengel. Wider or deeper: Revisiting the resnet model for visual recognition. Pattern Recognition, 90:119–133, 2019.

- [50] Bryan (Ning) Xia, Yuan Gong, Yizhe Zhang, and Christian Poellabauer. Second-order non-local attention networks for person re-identification. In ICCV, 2019.

- [51] Bin Xiao, Haiping Wu, and Yichen Wei. Simple baselines for human pose estimation and tracking. In ECCV, 2018.

- [52] Dan Xu, Wanli Ouyang, Xiaogang Wang, and Nicu Sebe. Pad-net: Multi-tasks guided prediction-and-distillation network for simultaneous depth estimation and scene parsing. In CVPR, 2018.

- [53] Changqian Yu, Jingbo Wang, Chao Peng, Changxin Gao, Gang Yu, and Nong Sang. Bisenet: Bilateral segmentation network for real-time semantic segmentation. In ECCV, 2018.

- [54] Changqian Yu, Jingbo Wang, Chao Peng, Changxin Gao, Gang Yu, and Nong Sang. Learning a discriminative feature network for semantic segmentation. In CVPR, 2018.

- [55] Yuhui Yuan, Xilin Chen, and Jingdong Wang. Object-contextual representations for semantic segmentation. In ECCV, 2020.

- [56] Feng Zhang, Xiatian Zhu, Hanbin Dai, Mao Ye, and Ce Zhu. Distribution-aware coordinate representation for human pose estimation. In CVPR, 2020.

- [57] Hang Zhang, Chenguang Wang, and Junyuan Xie. Co-occurrent features in semantic segmentation. In CVPR, 2019.

- [58] Rui Zhang, Sheng Tang, Yongdong Zhang, Jintao Li, and Shuicheng Yan. Scale-adaptive convolutions for scene parsing. In ICCV, 2017.

- [59] Yulun Zhang, Kunpeng Li, Kai Li, Bineng Zhong, and Yun Fu. Residual non-local attention networks for image restoration. In ICLR, 2019.

- [60] Hengshuang Zhao, Jianping Shi, Xiaojuan Qi, Xiaogang Wang, and Jiaya Jia. Pyramid scene parsing network. In CVPR, 2017.

- [61] Hengshuang Zhao, Yi Zhang, Shu Liu, Jianping Shi, Chen Change Loy, Dahua Lin, and Jiaya Jia. Psanet: Point-wise spatial attention network for scene parsing. In ECCV, 2018.

- [62] Zilong Zhong, Zhong Qiu Lin, Rene Bidart, Xiaodan Hu, Ibrahim Ben Daya, Zhifeng Li, Wei-Shi Zheng, Jonathan Li, and Alexander Wong. Squeeze-and-attention networks for semantic segmentation. In CVPR, 2020.

- [63] Xingyi Zhou, Dequan Wang, and Philipp Krähenbühl. Objects as points. In arXiv preprint arXiv:1904.07850, 2019.

- [64] Xizhou Zhu, Han Hu, Stephen Lin, and Jifeng Dai. Deformable convnets v2: More deformable, better results. In CVPR — arXiv:1811.11168, 2019.

- [65] Zhen Zhu, Mengde Xu, Song Bai, Tengteng Huang, and Xiang Bai. Asymmetric non-local neural networks for semantic segmentation. In ICCV, 2019.