Poisoning Online Learning Filters: DDoS Attacks and Countermeasures

Abstract.

The recent advancements in machine learning have led to a wave of interest in adopting online learning-based approaches for long-standing attack mitigation issues. In particular, DDoS attacks remain a significant threat to network service availability even after more than two decades. These attacks have been well studied under the assumption that malicious traffic originates from a single attack profile. Based on this premise, malicious traffic characteristics are assumed to be considerably different from legitimate traffic. Consequently, online filtering methods are designed to learn network traffic distributions adaptively and rank requests according to their attack likelihood. During an attack, requests rated as malicious are precipitously dropped by the filters. In this paper, we conduct the first systematic study on the effects of data poisoning attacks on online DDoS filtering; introduce one such attack method, and propose practical protective countermeasures for these attacks. We investigate an adverse scenario where the attacker is “crafty”, switching profiles during attacks and generating erratic attack traffic that is ever-shifting. This elusive attacker generates malicious requests by manipulating and shifting traffic distribution to poison the training data and corrupt the filters. To this end, we present a generative model MimicShift, capable of controlling traffic generation while retaining the originating traffic’s intrinsic properties. Comprehensive experiments show that online learning filters are highly susceptible to poisoning attacks, sometimes performing much worse than a random filtering strategy in this attack scenario. At the same time, our proposed protective countermeasure diminishes the attack impact.

1. Introduction

The accelerating adoption of machine learning in the enterprise makes poisoning attacks (Li et al., 2016; Yang et al., 2017a; Jagielski et al., 2018) on learning systems increasingly common. These attacks generally compromise the integrity of a learning system by injecting unsanitized malicious samples into the training dataset, leading to terrible system performance. As a result, it poses a serious problem for online cyberattack mitigation systems. One such cyberattack, the Distributed Denial-of-Service (DDoS), denies legitimate users access to resources on a target network by sending an overwhelmingly large amount of traffic to exhaust the victim network’s resources. As a result, legitimate users receive severely degraded to no service. As recent as Q1 2020, Amazon Web Services (AWS) reported the largest publicly disclosed attack on record at 2.3 Tbps 111https://aws-shield-tlr.s3.amazonaws.com/2020-Q1_AWS_Shield_TLR.pdf. The previous record was held by the GitHub Memcached-based DDoS attack, which peaked at 1.35 Tbps in February 2018.

As more and more present-day adaptive DDoS filtering methods adopt an online machine learning approach, we anticipate the growing urgency to study the effects of poisoning attacks on DDoS filters and find practical defenses against them. Online approaches typically perform incremental updates as more attack requests are observed to mitigate the malicious requests rapidly during an attack. However, such online learning methods assume that the attackers are static and that their behaviors stay the same during an attack. While these approaches are applicable to a static attacker, what if “crafty” attackers can dynamically change their attack behavior? We focus on studying such shifting attacks on online learning-based DDoS filtering.

Existing online learning-based filtering mechanisms can be broadly categorized into two groups. In one group, the approaches (He et al., 2017; Lima Filho et al., 2019; Daneshgadeh Çakmakçı et al., 2020) either employ simple decision tree methods or basic machine learning algorithms. The other group (Tann et al., 2021b; Doriguzzi-Corin et al., 2020) takes a deep learning approach, where the latest work (Tann et al., 2021b) proposed two online filtering methods designed to be computationally efficient to respond in a real-time environment where time constraint is a crucial consideration. Conversely, there is minimal literature that analyzes the effectiveness of fluctuating DDoS attacks on filtering methods. One study (Li et al., 2005) found that adaptive statistical filters cannot effectively mitigate malicious traffic from attackers that dynamically change their behavior, performing much worse against a dynamic attacker than if the attacker was static. Most other works (Ibitoye et al., 2019; Warzyński and Kołaczek, 2018; Hashemi et al., 2019; Peng et al., 2019, 2019; Huang et al., 2020; Liu and Yin, 2021) perform adversarial attacks on targeted DDoS detection systems. However, there is no study on the effects of erratic attack traffic on online learning DDoS filters.

In this paper, we study the effects of poisoning attacks on online learning-based filtering, where the attacker dynamically changes its behavior and generates fluctuating requests that vary from one time interval to the next. Our investigation of the elusive DDoS attack scenario is much more elaborate than those considered in existing online filtering approaches (Tann et al., 2021b; He et al., 2017; Lima Filho et al., 2019; Daneshgadeh Çakmakçı et al., 2020). In particular, we study a sophisticated setting where the attacker is “crafty”, shifting its attack behavior (e.g., distribution of requests and features) periodically to corrupt the adaptive filters. The attacker models the attack traffic and generates malicious traffic with different distributions in short timeframes ’s while mimicking properties of normal traffic.

Under this shifting attack setting (see Figure 1), the online learning filters utilize traffic data from two operational periods, (1) normal-day traffic , and (2) the attack-day traffic , to learn and update its filtering policy. Normal traffic behavior can be learned by performing intensive processing on . However, during an attack, the incoming traffic is divided into short intervals of minutes (e.g., ). The attack traffic (), where contains the traffic during the -th interval, will be used for the online training and update of the filters to learn attack traffic behavior. The filters then use these last updated models to create and identify a blacklist of attack identities (e.g., IP addresses) and filter them accordingly.

To this end, we formulate a controllable generative method that captures the sophisticated attack setting described above. We present MimicShift, a conditional generative method for controlling network traffic generation to generate shifting and unpredictable traffic. Our method is inspired by a controllable approach (Tann et al., 2021a) that is based on a conditional generative adversarial network, and it addresses the challenge of generating network requests of varying distributions. It is achieved by using request features such as Request Len and TCP Window Size that capture the intrinsic request properties. These features form the condition that we control to guide the traffic generative process. The method introduces control by leveraging the conditions, managed with a transparent Markov model, as a control vector. It allows us to influence the generative process. By specifying the density distribution parameters of the Markov model, the method can generate requests with different proprieties.

We show that the attacker can effectively inundate online learning filtering systems through the clever shifting of attack distribution periodically through such poisoning attacks. We maintain that it is a compelling result as it suggests that existing proposed online filtering mechanisms are inadequate in mitigating poisoning attacks against online learning systems. We design a deep-learning-based generative approach, MimicShift, and demonstrate the effectiveness of poisoning attacks. The main advantage of MimicShift is the controllability of the generative process. It first learns the behavior of given traffic (either malicious traffic of a single attack profile or benign traffic of the victim that has been compromised) and generates synthetic traffic that is subject to manipulation, producing requests of different specified distributions. The control is performed in an easy-to-understand Markov process to derive traffic distribution based on the Markov model parameters. We believe that this Markov process provides an intuitive approach to the control of shifting traffic distributions.

We evaluate the proposed controllable poisoning attack on two state-of-the-art online deep-learning DDoS filtering methods, N-over-D and Iterative Classifier (Tann et al., 2021b). Our evaluation is performed on the same datasets presented in the existing online filtering work (Tann et al., 2021b) to set a fair comparison benchmark. The two publicly available network datasets are (1) CICIDS2017 (Sharafaldin. et al., 2018) and (2) CAIDA UCSD “DDoS Attack 2007” (Hick et al., 2007). We use three separate DDoS attacks from these datasets, containing both generated traffic and anonymized traffic traces from a real-world attack, respectively.

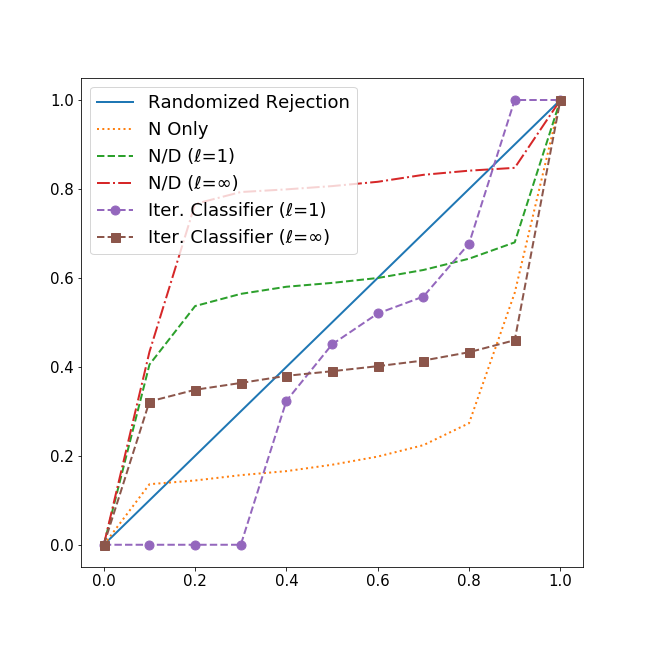

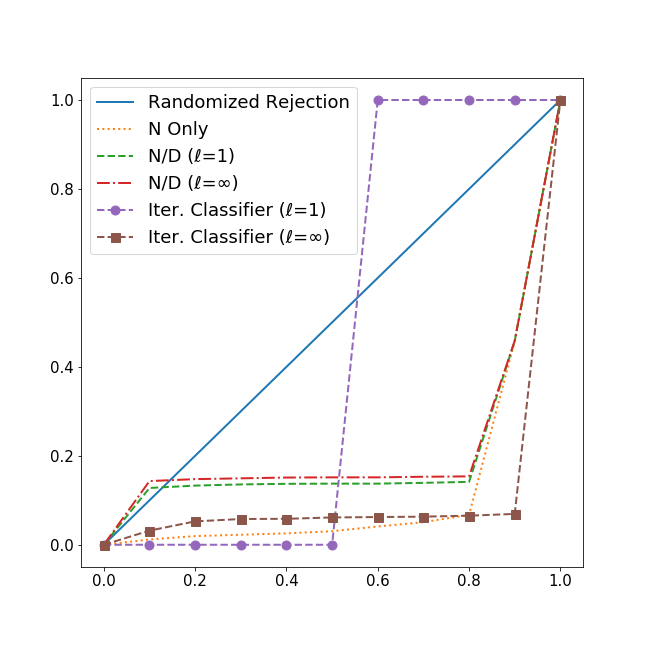

In the experiments, we first train a filtering model based on anomaly detection techniques. It uses only the normal traffic for learning to determine if requests during an attack are legitimate or malicious. This model sets a baseline for us to compare MimicShift. Empirical results show that MimicShift can generate attack traffic that eludes online filtering systems, attaining close to 100% false-negative rates at times (see Table 6.2, Figures 3 and 4 for experiment details). It effectively negates the filtering capabilities, making the performance worse than a 50-50 random guess. Next, we introduce a simple yet effective protective countermeasure that greatly minimizes the attack impact, achieving a significant boost in filtering performance (see Table 7). It serves as a starting point for the future research of robust countermeasures.

Furthermore, our experiments also show that the different acceptance or rejection thresholds significantly affect the filtering performance. A slight change in the acceptance threshold could considerably affect the false-negative rate, allowing markedly more attack requests to be accepted. The result powerfully demonstrates the robustness of MimicShift. Interestingly, even when we only use a random combination of three Markov model parameter settings for the different attack traffic distributions, it severely degrades the online filtering performance. While this may appear counter-intuitive, we believe that two reasons can explain the results. (1) The primary objective of the MimicShift generative method is to shift its behavior unpredictably to maintain an obscure attack profile, and (2) the online filtering methods are designed to adapt quickly to new data and place higher importance on the latest observed attack traffic. Hence, online learning filters could be easily influenced and deceived by the shifting attack traffic.

Contribution.

-

(1)

We formulate the problem of corrupting and eluding online DDoS filtering by poisoning attacks. The attacker generates attacks that mimic the observed traffic and shift behaviors during the attack process.

-

(2)

We design a mimic model, which can replicate features of any given requests, producing features of specified distributions that follow a simple and easy-to-understand Markov model. Leveraging on this mimic function, we propose a controllable generative attack method, which undermines the capabilities of online filtering systems, making them perform worse than a 50-50 random guess filtering strategy.

-

(3)

We propose a simple yet practical countermeasure that dramatically diminishes the success of poisoning attacks on online learning filters.

-

(4)

We conduct experimental evaluations of the poisoning attacks on two state-of-the-art online deep-learning-based DDoS filtering approaches. The empirical results demonstrate both the efficacy and robustness of the attacks generated by MimicShift.

2. Background and Challenges

This section gives an overview of existing machine learning-based DDoS filtering methods and detection mechanisms; we highlight the ease of detecting DDoS attacks in such systems. Next, we cover attacks on DDoS defenses and highlight poisoning attacks.

2.1. Online DDoS Filtering

Several unsupervised learning mitigation strategies (Lima Filho et al., 2019; He et al., 2017; Daneshgadeh Çakmakçı et al., 2020) have been proposed to counter such attacks in an online manner. These learning-based methods, employing rudimentary machine learning algorithms, e.g., random forest, logistic regression, decision tree, perform clustering and simple distance measure using a few elementary features. While they can capture some basic network traffic properties, they are unable to learn deeper representations of legitimate and malicious requests to better distinguish between the two types of traffic. Moreover, the optimization goal of these methods is not clear.

In one of the most recent online deep learning filtering methods presented in Tann et al. (Tann et al., 2021b), the two proposed approaches perform filtering by utilizing both the normal-day and the attack-day mixture traffic. One approach estimates the likelihood that each request is an attack, given that it is from the attack-day mixture traffic. The other approach formulates a loss function specific to a two-class classifier with the objective to classify requests from normal traffic as normal and an estimated proportion of requests during the attack as malicious. The two approaches demonstrate to be suitable for attacks with a short time frame.

2.2. Attacking DDoS Defenses

While several DDoS filtering methods exist, there is a shortage of literature on deep generative models targeting DDoS detection systems. In recent years, some methods (Ibitoye et al., 2019; Warzyński and Kołaczek, 2018) have been proposed to generate adversarial samples to evade DDoS detection. On the one hand, some of these methods were employed in a white-box setting. The attackers are assumed to know the details of the target deep-learning intrusion detection system, which is not practical in the real world. On the other hand, another group of methods (Hashemi et al., 2019; Peng et al., 2019, 2019; Huang et al., 2020; Liu and Yin, 2021) is presented as black-box attacks, where the attacker has very limited knowledge of the target model, such as only the model’s output. Both evasion methods perform perturbations on samples until an adversarial sample successfully evades the target DDoS detector.

While these methods perform evasive attacks on DDoS intrusion systems by manipulating adversarial samples in one way or another, there is no study on the effectiveness of poisoning attacks on online deep-learning DDoS filters. The most relevant work is a study (Li et al., 2005) on adaptive statistical filters. It was determined that adaptive statistical filters perform much worse against attackers that dynamically change their behavior. However, there are no such studies for online deep learning filters.

2.3. Data Poisoning

Data poisoning is a type of attack, where an attacker can inject malicious data that target machine learning models during the training phase. Most existing poisoning attacks (Biggio et al., 2012; Jagielski et al., 2018; Rubinstein et al., 2009; Xiao et al., 2015; Yang et al., 2017a) compromise the training dataset. The attacks aim to compromise the integrity of a machine learning system by having a model learn on unsanitized data, which manipulates a model to learn a high error rate on test samples during operation, leading to abysmal performance and the nullification of the model’s effectiveness. It was first studied by Biggio et al. (Biggio et al., 2012) against support vector machines. Beyond linear classifier models, others have studied poisoning in areas such as collaborative filtering (Li et al., 2016), generative method against neural networks (Yang et al., 2017b), and topic modeling (Mei and Zhu, 2015). However, there is no study on data poisoning attacks targeting online learning filtering methods.

3. Poisoning DDoS Filters

In this section, we formulate the problem of poisoning DDoS filters and discuss the challenges of avoiding online learning detection models that constantly update themselves. We provide an example to describe the problem and highlight its relevant concepts.

3.1. Poisoning Attack

We model the interactions between a “clever” attacker and an online-learning DDoS filter as a game. In this game, there are mainly three notions relating to every time interval : (1) the estimated attack traffic distribution of the filter at each interval, (2) the generated attack traffic distribution that the attacker constantly shifts from one interval to the next, and (3) the filter model , which makes the decision on requests that are to be dropped during an attack. The goal of an attacker is to shift the distribution of attack traffic dynamically, deceiving the filter into approximating an inaccurate attack distribution, such that it is unable to distinguish between normal and attack requests accurately; effectively carrying out a poisoning attack.

Motivating Example. Let us consider a scenario where an elusive attacker aims to disrupt the network service availability of a target victim server by overwhelming the target with a flood of unpredictable attack traffic. In this scenario, the attacker decided to attack the victim service provider in the application layer, as such attacks are especially effective at consuming server and network resources. A request in the application-layer DDoS could be a sequence of sub-requests ’s. For example, a visit to a website consists of an HTTP-GET request to the landing page, followed by other requests. Each user who makes a request , a sequence of sub-requests, corresponds to an IP address.

Most network service providers would have protective DDoS filtering mechanisms monitoring the availability of their networks. Such a DDoS filtering mechanism is often an online learning-based filter that makes the decision to drop abnormal requests based on some extracted salient features, with an adaptive model trained on observed network traffic. Therefore, it is reasonable to assume that the attacker would not have any knowledge about the protective filtering system that the network service provider has in place (e.g., the features, time interval, and data used by the filter). Nevertheless, the attacker can always probe the filter by making malicious requests and studying the provider’s response (accept/reject) via the status code. For example, receiving the code HTTP 403 means that the requested resource is forbidden by the provider. However, we assume that the attacker can only probe the provider’s filter a limited number of times before its IP address is blacklisted.

To avoid detection, the attacker has a generator that generates controllable network requests that model regular traffic closely. For instance, the generator is able to dynamically generate malicious traffic requests by allowing the attacker to control the distribution shift of generated traffic. Due to the attacker’s limited knowledge of the underlying online DDoS protection mechanism, only by constantly shifting its attack traffic distribution, can the attacker confuse the online learning filter, and it allows the attacker to be always one step ahead of the filter’s estimation of the attack profile. Given the strict constraints, we aim to investigate the effectiveness of such attacks.

3.2. Online Learning-Based Filtering

For the online filter, we use the deep learning filters (Tann et al., 2021b) that demonstrated effectiveness against DDoS attacks in an online setting. The two filters proposed are the N-over-D nets and Iterative Classifier. Their goal is to filter out an estimated proportion of malicious requests during an attack. The considered filter is one that learns on both the traffic from two operational periods for model training. As an abnormally large number of requests characterizes attacks, it can be readily detected in practice. The first period is the normal-day traffic , where the requests are logged during the normal period. The second period is the attack-day traffic that contains a mixture of unlabeled legitimate and malicious traffic. Based on the volume of attack traffic, it is assumed that the filter is able to roughly estimate the proportion of attack requests in . All requests in are considered to be normal, whereas traffic in is unlabeled.

In an online setting, the filtering process can be broadly categorized into three stages. First, during a normal period, intensive processing and training can be performed on normal-day traffic to learn an accurate intermediate model of normal requests. Next, when an attack begins, the filter model starts its online learning of incoming mixture traffic . The mixture traffic can be divided into intervals of minutes (e.g., ), and ordered accordingly (), where contains the mixture within the -th interval. At each interval , the model, trained on and some intermediate representation , giving the updated and a model . Finally, the trained model is used to estimate the attack traffic distribution and perform the filtering of incoming requests.

3.3. Elusive Attacker

The attacker takes as input a request sample from given traffic (either malicious traffic of a single attack profile or benign traffic of the victim that has been compromised) and generates another request that mimics the traffic. In addition, the attacker shifts the distribution of the generated requests in order to fool the filter. It can be achieved by leveraging some data features as conditions to control the traffic generation process. Each request is associated with some selected feature that is grouped into classes (e.g., the various request payload sizes), and it corresponds to the request in the given traffic. Through these selected features, the attacker is able to control the distribution during the generative process. We denote this time-varying distribution as . The attacker can take advantage of the useful properties of the network features to manipulate the generated samples.

For example, the attacker can use the TCP Len feature as one of the conditions to vary the distribution of generated requests. In one interval, the attacker can generate attack distribution with requests of longer packet lengths. In contrast, the next interval could be generated with short packet lengths. Nevertheless, such traffic features are not fixed, and it is always up to the attacker to select from the set of all network traffic features. As the goal of the attacker is to generate unpredictable requests and attempt to get the online learning filter to accept as many of them as possible, it is sufficient for the attacker to mimic the traffic and find a distribution that the filter’s estimated distribution is unable to accurately capture in time.

4. Controllable Generative Method

In this section, we present the controllable generative method MimicShift that generates unpredictable malicious traffic with varying distributions for poisoning online learning DDoS detection filters. It leverages both conditional modeling and generative adversarial networks (GANs) to generate sequence data.

We design MimicShift as a controllable network request generation method (see Figure 2). It is inspired by the conditional GAN framework (Mirza and Osindero, 2014). MimicShift leverages selected traffic features (e.g., Request Len or TCP Window Size) as a control vector to influence the generative process. During the training phase, it first takes given requests as input and selects some corresponding features as the condition for controlling the request generation. Next, the method trains an imitative mimic model , which inputs the feature sequences of the corresponding requests in the given traffic. It then generates representative feature sequences that mimic the real features. After learning to mimic the features, a generative model then takes these representative feature sequences as conditions to produce generated attack requests . The objective of is to learn the distribution of given traffic requests and generate synthetic requests that are as alike as possible to the requests. Simultaneously, a discriminative model estimates the probability that a request sequence and its corresponding features are produced by or a real request; its goal is to distinguish between the actual and synthetic requests.

Following the training of models , , and , we specify the Markov model parameters () for controlling the mimic model , which produces representative requests based on the specified parameters to produce representative features that follow the intended transition dynamics. We then control the request traffic generative process with these designed features , influencing the generator to create synthetic manipulated requests .

In our method, we train both and conditioned on selected features that correspond to the requests . We designed our model to allow itself to be significantly influenced by some user-specific features such as TCP Len or any other network request feature. As a result, the model can introduce a high dimensional control vector to generate unpredictable requests, and directly control the data generation process to confuse and elude DDoS filters. For example, by using payload information of the requests, we can learn the distribution of regular payloads, using this information, then explicitly generate requests that mimic the traffic closely.

Based on the first introduced conditional GAN (Mirza and Osindero, 2014), we present the conditional GAN training for network traffic requests, defining the loss function as:

| (1) |

where is the latent noise sampled from a multivariate standard normal distribution. The network traffic is represented as a binary adjacency matrix of different request types. We then sample sets of random walks of length from adjacency matric to use as training data for our model. Following a biased second-order random walk sampling strategy, the sampled random walks are network requests where each request could be made up of a sequence of sub-requests ’s. One desirable property of such a random walk is that the walks only include connected sub-requests, which efficiently exploits the sequential nature of requests in real-world network traffic.

5. Implementation

In this section, we describe each component of the MimicShift method and formally present the controllable generative process.

5.1. Mimic

The mimic model is designed to learn traffic behavior by modeling features of sub-requests in the sampled requests. It is a sequence-to-sequence model. Given a sequence of traffic request features, the model then predicts the input sub-request features one at a time as the features are observed. We design using a long short-term memory (LSTM) (Hochreiter and Schmidhuber, 1997) neural network. The purpose of is to model network traffic, retain a memory of its behavior, and provide a vector of control for the ensuing generative process. When given sampled sequences of features as inputs, the mimic learns to generate synthetic features that mimic the input features. For example, a sample input Request Len sequence given to could result in such an output of , which closely mimics the input sample.

5.2. Generator

The generator generates network requests conditional on the selected features. It is a parameterized LSTM model . At each step , the LSTM takes as input the previous request , the last memory state of the LSTM model, and the current feature . The model then generates two values , where denotes the probability distribution over the current request and the current memory state. Next, the generated request is sampled from a categorical distribution using a one-hot vector representation, where is the softmax function. A latent noise from a multivariate standard normal distribution is drawn and passed through a hyperbolic tangent function to compute the initial memory state . Generator takes this noise and sampled features as inputs, and generates attack requests .

5.3. Discriminator

The discriminator aims to discriminate between requests sampled from the given traffic and synthetic requests generated by the generator . It is a binary classification LSTM model that takes two inputs: the current request and its associated feature condition , both represented as one-hot vectors. then outputs a score between and , indicating the probability of an actual request.

5.4. Controlling Traffic Generation

MimicShift takes traffic features as conditions to input into the generator and influences the generated output requests, creating requests with various feature densities. Our method constantly shifts the distribution of these conditions to produce unpredictable network traffic. The method aims to provide controllability to the attack and generate requests that mislead the filter, allowing the attack requests to elude detection. We first build a Markov chain model according to some user-specified transition distribution and construct specific features sequences for requests of some desired distribution. These sequences are then injected into the mimic to generate features that mimic the sampled features of given requests. Next, we train the MimicShift model on given requests . Given the trained model, we then construct features that follow some specified distribution and use the generator to produce desired requests . Through this process, the attacker can control the feature conditions and generate unpredictable requests with varying distributions.

For example, by controlling the parameters of the mimic feature, our method has the ability to generate attack traffic that has shifting distributions. The features provide a vector of control to how requests are generated. We influence the generative process by constructing mimic features of desired distribution using the mimic model . First, we construct select features by specifying the parameters of a transparent Markov model: (1) initial probability distribution over classes , where is the probability that the Markov chain will start from class , and (2) transition probability matrix , where each represents the probability of moving from class to class . Next, we input these constructed sequences into mimic , and it returns model-generated features that replicate the original features. Finally, by injecting these constructed sequences into our trained generator , we generate attack requests that follow the specific distribution.

6. Evaluation

In this section, we present the evaluation of online deep learning filters performed on various DDoS attacks with fluctuating requests in two scenarios, where (1) the attacker has access to the regular traffic of the victim that has been compromised (with normal traffic ), and (2) the attacker uses traffic from a single attack profile to approximate regular traffic (without normal traffic ). We demonstrate the ineffectiveness of such filters against our generative attack method MimicShift, and compare their performance against known DDoS attacks. Finally, we analyze the effects of such poisoning attacks and introduce an effective countermeasure.

6.1. Datasets

Our evaluation is performed on the same attacks presented in the existing online DDoS filtering paper (Tann et al., 2021b) to set a fair comparison benchmark. The datasets are from two publicly available network datasets: CICIDS2017 222www.unb.ca/cic/datasets/ids-2017.html (Sharafaldin. et al., 2018) and CAIDA UCSD “DDoS Attack 2007” 333www.caida.org/data/passive/ddos-20070804_dataset.xml (Hick et al., 2007), containing generated network traffic resembling real-world attacks and anonymized traffic traces from a DDoS attack on August 04, 2007, respectively. From these two datasets, three distinct DDoS attacks are extracted for evaluation.

Synthetic Benchmark Data. In the first dataset for evaluation, we use the synthetic CICIDS2017 (Sharafaldin. et al., 2018) dataset provided by the Canadian Institute for Cybersecurity of the University of New Brunswick (UNB), Canada. The human traffic was generated by modeling the abstract behavior of user interactions, and the response of 25 users based on the HTTP, HTTPS, FTP, SSH, and email protocols were used to create the abstract behavior. The two selected attacks: (1) HULK and (2) Low Orbit Ion Canon (LOIC) last for 17 and 20 mins, respectively.

Real-world Data. In the second dataset, we use a real-world attack, CAIDA07 UCSD “DDoS Attack 2007” (Hick et al., 2007). This attack occurred on August 04, 2007, and affected various locations around the world. It contains anonymized traffic traces from a DDoS attack that lasted around an hour, including network traffic such as Web, FTP, and Ping. These traces mostly only include attack traffic to the victim and responses from the victim, and non-attack traffic has been removed as much as possible. The network load surges dramatically from about 200 kbits/s to about 80 Mbits/s within a few minutes.

6.2. Mimic Attacks

Next, we generate fluctuating DDoS attack traffic based on both the CICIDS2017 and CAIDA07 datasets to perform a detailed evaluation of the online learning filtering methods on these attacks. First, we select the feature to mimic and use as a control vector to influence the generative process. After selecting the appropriate feature, we specify the distributions for shifting the attack requests, and generate fluctuating traffic to attack the online filtering methods.

Feature Analysis. In order to select a feature for the appropriate condition as a control vector, we perform an analysis of the features in the various datasets. We found that the distributions of the features of the traffic are highly skewed. This skewness is because a large majority of the traffic is concentrated at a few top features, such as the most common IP flags, TCP lengths, and window sizes.

| Dataset | # Request Len | Top 3 (%) | Distribution |

|---|---|---|---|

| HULK | 3753 | 79.7 |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/d869eadf-a2f6-4116-9faa-c22720dc6f90/hulklen_feature.png)

|

| LOIC | 3490 | 60.4 |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/d869eadf-a2f6-4116-9faa-c22720dc6f90/loiclen_feature.png)

|

| CAIDA07 | 1059 | 91.2 |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/d869eadf-a2f6-4116-9faa-c22720dc6f90/caidalen_feature.png)

|

For example, using the Request Len feature as an illustration, it can be seen in Table 1 that the traffic is concentrated in the top few lengths of the feature. In the CAIDA07 dataset, the top three request lengths (60, 52, 1500) make up 91% of the traffic, with the top length (60) accounting for 81% of the traffic alone. As for the LOIC dataset, the top three request lengths (60, 66, 1514) make up 60% of the traffic; the top three features (60, 1514, 2974) of the HULK dataset make up 80% of the total traffic.

As the distribution of the feature Request Len is concentrated in the top few classes, we group all of them into three classes for our experiments. The first two classes are the top two features, and the third class consists of all the other features. We design it such that the distribution of the three classes is more uniform and increases the variability of feature distribution.

Distribution Parameters. We specify the Markov model parameters, initial probability distribution and transition probability matrix , used in our experiments for controlling the shift of the generated mimic attacks. We use various parameters for all the datasets (see Table 2).

| Attack | ||

|---|---|---|

The attack is divided into three traffic () with different distributions. At each time period during the attack, one of the attack parameter settings is picked at random for . Our model MimicShift would then generate attack traffic according to the specified distributions, and produce fluctuating traffic to attack the online filtering methods.

| Dataset | Model | Attacker with normal traffic | Attacker without normal traffic | ||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| FNR | FPR | ACC | Prec. | Rec. | F1 | FNR | FPR | ACC | Prec. | Rec. | F1 | ||

| HULK | N | 0.7824 | 0.2790 | 0.9019 | 0.9196 | 0.9561 | 0.9375 | 0.7834 | 0.2734 | 0.9045 | 0.9212 | 0.9578 | 0.9392 |

| N-over-D () | 0.8997 | 0.6295 | 0.7403 | 0.8186 | 0.8511 | 0.8345 | 0.9009 | 0.6432 | 0.7340 | 0.8147 | 0.8470 | 0.8305 | |

| N-over-D () | 0.9693 | 0.6461 | 0.6461 | 0.7597 | 0.7899 | 0.7745 | 0.9697 | 0.8411 | 0.6427 | 0.7576 | 0.7877 | 0.7724 | |

| Iterative Classifier () | 0.6802 | 0.5684 | 0.8435 | 0.8780 | 0.9252 | 0.9010 | 1.0000 | 0.6772 | 0.6514 | 0.8326 | 0.6846 | 0.7514 | |

| Iterative Classifier () | 0.7006 | 0.6619 | 0.7864 | 0.8702 | 0.8548 | 0.8624 | 0.2169 | 0.4329 | 0.7930 | 0.8786 | 0.8482 | 0.8631 | |

| LOIC | N | 0.7863 | 0.2350 | 0.9062 | 0.9411 | 0.9416 | 0.9414 | 0.7869 | 0.2434 | 0.9028 | 0.9390 | 0.9395 | 0.9393 |

| N-over-D () | 0.8882 | 0.6532 | 0.7386 | 0.8364 | 0.8368 | 0.8366 | 0.9269 | 0.7857 | 0.6855 | 0.8032 | 0.8036 | 0.8034 | |

| N-over-D () | 0.9836 | 0.9741 | 0.6100 | 0.7560 | 0.7564 | 0.7562 | 0.9877 | 0.9796 | 0.6078 | 0.7546 | 0.7550 | 0.7548 | |

| Iterative Classifier () | 0.8823 | 0.2003 | 0.2085 | 0.0520 | 0.5538 | 0.0951 | 1.0000 | 0.8364 | 0.8330 | 0.8272 | 1.0000 | 0.9054 | |

| Iterative Classifier () | 0.6943 | 0.4102 | 0.3798 | 0.3129 | 0.7795 | 0.4465 | 0.9265 | 0.2514 | 0.3402 | 0.8042 | 0.2312 | 0.3591 | |

| CAIDA07 | N | 0.7555 | 0.0013 | 0.9825 | 0.9987 | 0.9798 | 0.9891 | 0.7592 | 0.0155 | 0.9598 | 0.9845 | 0.9659 | 0.9751 |

| N-over-D () | 0.7622 | 0.8025 | 0.6884 | 0.8149 | 0.7995 | 0.8071 | 0.7849 | 0.0326 | 0.9324 | 0.9674 | 0.9491 | 0.9582 | |

| N-over-D () | 0.9288 | 0.7905 | 0.6928 | 0.8177 | 0.8022 | 0.8099 | 0.7882 | 0.0355 | 0.9278 | 0.9645 | 0.9463 | 0.9553 | |

| Iterative Classifier () | 0.9434 | 0.1109 | 0.8818 | 0.8754 | 0.9773 | 0.9236 | 0.0317 | 0.9978 | 0.8005 | 0.8132 | 0.9806 | 0.8891 | |

| Iterative Classifier () | 0.9266 | 1.0000 | 0.9753 | 0.9998 | 0.9708 | 0.9851 | 0.8881 | 0.0654 | 0.3140 | 0.9255 | 0.1727 | 0.2911 | |

6.3. Online Filtering Methods

We perform a detailed evaluation of the online learning filtering methods on the generated mimic attacks, and report the filtering capabilities to identify attack requests from normal traffic. The two online filtering methods are the latest state-of-the-art deep learning approaches to DDoS filtering (Tann et al., 2021b):

-

(1)

N-over-D approach

-

(2)

Iterative classifier approach

that take a principled approach to estimate conditional probabilities and an online iterative two-class classifier design for a specifically formulated machine learning loss function, respectively.

In the N-over-D approach, the technique approximates the likelihood that a network request is an attack, given that it is observed in the attack traffic. It is achieved with a two-step process. The first step applies an unsupervised learning model on the normal-day traffic to obtain a model that learns the distribution of normal traffic. Then, when a DDoS attack is detected, the second step is activated, and it trains another unsupervised learning model to rapidly learn the distribution of the attack traffic. At any time, the conditional probability can be estimated by taking the ratio of the normal-traffic distribution over the attack-traffic distribution. As for the Iterative Classifier approach, a particular machine learning loss function is formulated for fast online learning using one learning model. An online iterative two-class classifier performs joint learning on both the normal and attack operational periods, fully leverages all available information for model training.

Both filtering approaches are evaluated using the online interval of 1 min () and a rejection rate of . Under such settings, it means that each is collected over a period of 1 min for learning and filtering, dropping of the requests. In addition, we also use an offline approach for comparison. In this setting, the interval is set as . We suppose that all normal and attack data is available for training the models. The offline setting effectively shows that it is not easy for models to learn to generalize over the different distributions. By taking an offline approach that trains on all available data, we can (1) study how effective the varying distributions of attack traffic are on online filtering methods, and (2) obtain a reference performance limit for the online filtering results.

6.4. Evaluation Results

We measure the performance of the two filtering approaches in both attack scenarios. As demonstrated in Table 6.2, Figure 3, and Figure 4, both N-over-D and Iterative Classifier result in high false-negative rates and high false-positive rates across the three different attacks, thereby undermining the effectiveness of the filter and reducing it to no better than a random guess. We present both the false-negative and false-positive graphs of the attack with normal traffic as both attack scenarios have similar results (see Appendix for the attack without plots). These results show that unpredictable attack traffic essentially disables online learning filters.

The reported evaluation metrics used are False Negative Rate (FNR), False Positive Rate (FPR), Accuracy (ACC), Precision (Prec.), Recall (Rec.), and F1 Score (F1). The two most relevant metrics are FNR and FPR. False Negative Rate is the rate that attack requests are wrongly classified as legitimate and not dropped by the filtering methods. False Positive Rate reflects the rate that requests are from normal traffic but predicted as attack and rejected by the filters.

In Table 6.2, the results demonstrate a trade-off between letting attack requests through and denying legitimate requests. The N-over-D approach achieves a lower false-negative rate (FNR) than the Iterative Classifier, but it has a much higher false-positive rate (FPR). On the one hand, it means that while the N-over-D allows a smaller proportion of the attack requests through, it rejects many of the normal requests, denying a large portion of the legitimate requests. On the other hand, the iterative classifier has a higher FNR but a lower FPR, indicating it allows more legitimate requests at the expense of more attack requests. In general, although the attacker with normal traffic fares slightly better than an attacker without (higher error rates), the FNR and FPR in both attack scenarios are similar; results suggest that the attacks using representative traffic can be successful.

6.5. Result Comparison

For comparison, we assess the performance of state-of-the-art online DDoS filtering methods, N-over-D and Iterative Classifier approaches, and note the difference. Using the CICIDS2017 and CAIDA07 datasets, we study how our generated fluctuating attack traffic affects the filtering performance. More importantly, we demonstrate that an attacker who can shift its attack traffic distribution can significantly differ from the original attack traffic and effectively inundate online learning filtering methods. Compared to the original attacks, mimic attacks dramatically increase the false positive and false negative error rates.

HULK attack. We compare the online filtering performance on our generated fluctuating attacks and the original attack traffic. As shown in Table 4, the FPR of the filtering methods significantly increased from less than 10% to around 60%. It implies that the filtering systems are systematically classifying normal requests as attacks wrongly, making it worse than a 50% random guess.

| Attack | Model | ACC | FPR | Prec. | Rec. | F1 | FNR |

|---|---|---|---|---|---|---|---|

| Original HULK (Tann et al., 2021b) | N | 0.5903 | 0.3837 | 0.7016 | 0.5739 | 0.6313 | — |

| N/D () | 0.8086 | 0.1030 | 0.9198 | 0.7524 | 0.8277 | — | |

| N/D () | 0.8291 | 0.0766 | 0.9403 | 0.7692 | 0.8462 | — | |

| Iter.() | 0.9088 | 0.0378 | 0.8831 | 0.7659 | 0.8204 | — | |

| Iter. () | 0.9120 | 0.0206 | 0.9299 | 0.7313 | 0.8187 | — | |

| Mimic HULK (with ) | N | 0.9019 | 0.2790 | 0.9196 | 0.9561 | 0.9375 | 0.7824 |

| N/D () | 0.7403 | 0.6295 | 0.8186 | 0.8511 | 0.8345 | 0.8997 | |

| N/D () | 0.6461 | 0.8337 | 0.7597 | 0.7899 | 0.7745 | 0.9693 | |

| Iter. () | 0.8435 | 0.5684 | 0.8780 | 0.9252 | 0.9010 | 0.6802 | |

| Iter. () | 0.7864 | 0.6619 | 0.8702 | 0.8548 | 0.8624 | 0.7006 | |

| Mimic HULK (without ) | N | 0.9045 | 0.2734 | 0.9212 | 0.9578 | 0.9392 | 0.7834 |

| N/D () | 0.7340 | 0.6432 | 0.8147 | 0.8470 | 0.8305 | 0.9009 | |

| N/D () | 0.6427 | 0.8411 | 0.7576 | 0.7877 | 0.7724 | 0.9697 | |

| Iter. () | 0.6514 | 0.6772 | 0.8326 | 0.6846 | 0.7514 | 1.0000 | |

| Iter. () | 0.7930 | 0.4329 | 0.8786 | 0.8482 | 0.8631 | 0.2169 |

Moreover, using the N model as a baseline, the accuracy of both the online filters N-over-D and Iterative Classifier significantly dropped from 90% to as low as 65%. The fluctuating mimic traffic fools the online learning filtering methods. The other metrics, such as Precision, Recall, and F1, are also significantly lower than the N-only model, which serves as our benchmark of a clean model that is not uncorrupted by the attack traffic in its filtering evaluation.

LOIC attack. As for the LOIC attack, it can be seen in Table 5 that the FPR has increased from values close to zero in the original attack traffic to values ranging from (20–97)%. The accuracy of the online filters has also dropped from 90% in the original attack to values less than 50% on the mimic attacks.

| Attack | Model | ACC | FPR | Prec. | Rec. | F1 | FNR |

|---|---|---|---|---|---|---|---|

| Original LOIC (Tann et al., 2021b) | N | 0.3257 | 0.6997 | 0.3889 | 0.3454 | 0.3659 | — |

| N/D () | 0.9330 | 0.0047 | 0.9959 | 0.8846 | 0.9370 | — | |

| N/D () | 0.9330 | 0.0047 | 0.9959 | 0.8846 | 0.9370 | — | |

| Iter. () | 0.8805 | 0.0329 | 0.8808 | 0.6494 | 0.7476 | — | |

| Iter. () | 0.9486 | 0.0220 | 0.9016 | 0.8275 | 0.8630 | — | |

| Mimic LOIC (with ) | N | 0.9062 | 0.2350 | 0.9411 | 0.9416 | 0.9414 | 0.7863 |

| N/D () | 0.7386 | 0.6532 | 0.8364 | 0.8368 | 0.8366 | 0.8882 | |

| N/D () | 0.6100 | 0.9741 | 0.7560 | 0.7564 | 0.7562 | 0.9836 | |

| Iter. () | 0.2085 | 0.2003 | 0.0520 | 0.5538 | 0.0951 | 0.8823 | |

| Iter. () | 0.3798 | 0.4102 | 0.3129 | 0.7795 | 0.4465 | 0.6943 | |

| Mimic LOIC (without ) | N | 0.9028 | 0.2434 | 0.9390 | 0.9395 | 0.9393 | 0.7869 |

| N/D () | 0.6855 | 0.7857 | 0.8032 | 0.8036 | 0.8034 | 0.9269 | |

| N/D () | 0.6078 | 0.9796 | 0.7546 | 0.7550 | 0.7548 | 0.9877 | |

| Iter. () | 0.8330 | 0.8364 | 0.8272 | 1.0000 | 0.9054 | 1.0000 | |

| Iter. () | 0.3402 | 0.2514 | 0.8042 | 0.2312 | 0.3591 | 0.9265 |

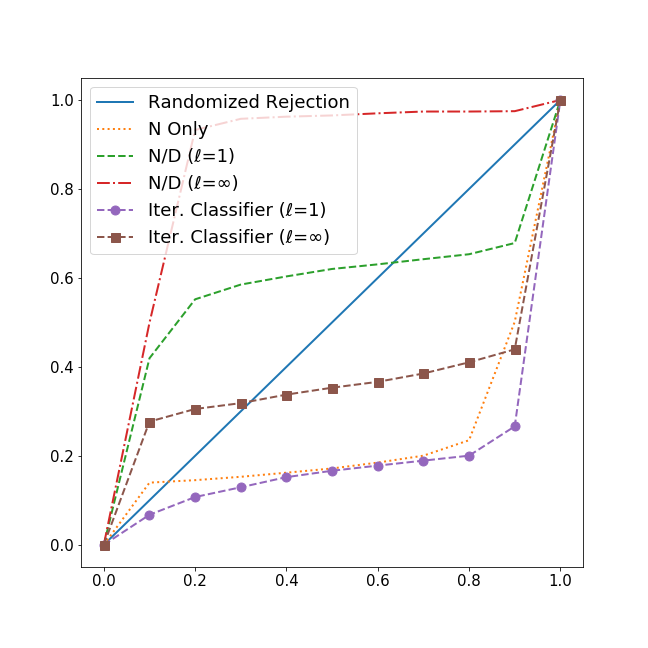

CAIDA07 attack. In the real-world CAIDA07 attack, the results (see Table 6) corroborate with the findings in both the previous attacks, LOIC and HULK. The FPR is high (closer to 1.0), while the accuracy is much lower than the N-only model.

| Attack | Model | ACC | FPR | Prec. | Rec. | F1 | FNR |

|---|---|---|---|---|---|---|---|

| Original CAIDA07 (Tann et al., 2021b) | N | 0.6009 | 0.2639 | 0.8958 | 0.5671 | 0.6945 | — |

| N/D () | 0.6944 | 0.0251 | 0.9900 | 0.6242 | 0.7657 | — | |

| N/D () | 0.6880 | 0.0411 | 0.9837 | 0.6202 | 0.7608 | — | |

| Iter. () | 0.7081 | 0.0208 | 0.9919 | 0.6403 | 0.7783 | — | |

| Iter. () | 0.7088 | 0.0141 | 0.9945 | 0.6396 | 0.7785 | — | |

| Mimic CAIDA07 (with ) | N | 0.9825 | 0.0013 | 0.9987 | 0.9798 | 0.9891 | 0.7555 |

| N/D () | 0.6884 | 0.8025 | 0.8149 | 0.7995 | 0.8071 | 0.7622 | |

| N/D () | 0.6928 | 0.7905 | 0.8177 | 0.8022 | 0.8099 | 0.9288 | |

| Iter () | 0.8818 | 0.1109 | 0.8754 | 0.9773 | 0.9236 | 0.9434 | |

| Iter () | 0.9753 | 1.0000 | 0.9998 | 0.9708 | 0.9851 | 0.9266 | |

| Mimic CAIDA07 (without ) | N | 0.9598 | 0.0155 | 0.9845 | 0.9659 | 0.9751 | 0.7592 |

| N/D () | 0.9324 | 0.0326 | 0.9674 | 0.9491 | 0.9582 | 0.7849 | |

| N/D () | 0.9278 | 0.0355 | 0.9645 | 0.9463 | 0.9553 | 0.7882 | |

| Iter. () | 0.8005 | 0.9978 | 0.8132 | 0.9806 | 0.8891 | 0.0317 | |

| Iter. () | 0.3140 | 0.0654 | 0.9255 | 0.1727 | 0.2911 | 0.8881 |

While the mimic attacks demonstrate that online learning filters are ill-equipped to handle fluctuating traffic distributions, we discover that the N-only model is able to hold up well against such attacks. As the N-only model trains on normal traffic, the attack traffic does not affect the model, thereby enabling it to differentiate between normal and attack traffic effectively.

6.6. Attack Analysis

We further analyze the poisoning attacks, shifting attack traffic generated by MimicShift that eludes online DDoS filters, to better understand the extent of the shifting traffic effects. We study the contrast between the estimated traffic distributions during normal and DDoS periods by analyzing these distributions from an empirical perspective.

The study was conducted by using the N-over-D approach, which estimates the likelihood that a network request is an attack, given that it is observed in the attack traffic. The process takes a two-step process, where the first step trains an N-only model on normal traffic to learn normal traffic characteristics. The next step activates another learning model, D, that takes an online approach to model the attack traffic. The approach then approximates the conditional probability of the traffic by taking . Hence, we are able to clearly examine how the attack traffic affects the online training process by studying the difference between models N and online D.

Using the CAIDA07 dataset for illustration, we study the normalized conditional probability scores of the attack traffic (see Figure 5). Through these distributions, we observe the differences between an anomaly detection method N-only and the online learning approach N-over-D. In Figure LABEL:fig:Nonly_distr, we plot the scores of normal traffic and attack traffic predicted with the N-only model, showing a clear distinction between the two distributions. It can be seen that the attack traffic is assigned a low score. In contrast, the normal traffic is assigned high scores, indicating that the anomaly detector is able to separate the malicious from the benign.

However, when we introduce the online model D into the filtering process, the online filtering approach is deeply poisoned by the shifting traffic. We can clearly see in Figure LABEL:fig:NDonline_distr that the online approach estimates the likelihood of both normal and attack traffic with the same scores, and it is unable to separate the two distributions. Hence, MimicShift can effectively elude online DDoS filters by mimicking normal traffic and injecting erratic attack traffic into the online learning process.

6.7. Countermeasure against Poisoning

While the results in Sections 6.4, 6.5 and 6.6 demonstrate that poisoning attacks are highly effective against online adaptive filtering systems, we introduce a simple protective measure that greatly diminishes the attack efficacy. It is a straightforward defense technique that can be quickly implemented. The idea of the online learning filter enhancement is to vary time interval in the online learning process during the attack period.

We perform the protective countermeasure by first setting the initial attack interval to the interval size mins. As for subsequent intervals (), we randomly set to be between 1–3 mins. By keeping the interval random and unknown to the attacker, online filtering systems are able to improve the estimate of the overall attack traffic distribution while achieving adaptive filtering.

Two-class Iterative Approach. The performance of our improved two-class online approach, the Enhanced Iterative Classifier, demonstrates that the introduced countermeasure successfully reduces its FNR and FPR significantly (see Table 7). In addition to the joint training of the Enhanced Iterative Classifier, this countermeasure effectively mitigates against the poisoning attacks.

| Dataset | Model () | FNR | FPR | ACC | Prec. | Rec. | F1 |

|---|---|---|---|---|---|---|---|

| HULK (with ) | Iter. Classifier | 0.6802 | 0.5684 | 0.8435 | 0.8780 | 0.9252 | 0.9010 |

| Enhanced Iter. | 0.2562 | 0.4489 | 0.6826 | 0.8893 | 0.6710 | 0.7649 | |

| LOIC (with ) | Iter. Classifier | 0.8823 | 0.2003 | 0.2085 | 0.0520 | 0.5538 | 0.0951 |

| Enhanced Iter. | 0.7470 | 0.7570 | 0.3749 | 0.7216 | 0.3553 | 0.4761 | |

| CAIDA07 (with ) | Iter. Classifier | 0.9434 | 0.1109 | 0.8818 | 0.8754 | 0.9773 | 0.9236 |

| Enhanced Iter. | 0.1035 | 0.0305 | 0.9386 | 0.9933 | 0.9310 | 0.9611 | |

| HULK (without ) | Iter. Classifier | 1.0000 | 0.6772 | 0.6514 | 0.8326 | 0.6846 | 0.7514 |

| Enhanced Iter. | 0.7179 | 0.4730 | 0.4915 | 0.8180 | 0.4363 | 0.5691 | |

| LOIC (without ) | Iter. Classifier | 1.0000 | 0.8364 | 0.8330 | 0.8272 | 1.0000 | 0.9054 |

| Enhanced Iter. | 0.7234 | 0.5730 | 0.5328 | 0.8365 | 0.5167 | 0.6388 | |

| CAIDA07 (without ) | Iter. Classifier | 0.0317 | 0.9978 | 0.8005 | 0.8132 | 0.9806 | 0.8891 |

| Enhanced Iter. | 0.9981 | 0.9996 | 0.3311 | 0.9531 | 0.1890 | 0.3154 |

Likelihood Approach. As for the principled N-over-D approach, the performance of this countermeasure shows that it achieves similar a performance to the original approach (see Table 8).

| Dataset | Model () | FNR | FPR | ACC | Prec. | Rec. | F1 |

|---|---|---|---|---|---|---|---|

| HULK (with ) | N-over-D | 0.8997 | 0.6295 | 0.7403 | 0.8186 | 0.8511 | 0.8345 |

| Enhanced N/D | 0.9142 | 0.6756 | 0.7191 | 0.8053 | 0.8373 | 0.8210 | |

| LOIC (with ) | N-over-D | 0.8882 | 0.6532 | 0.7386 | 0.8364 | 0.8368 | 0.8366 |

| Enhanced N/D | 0.9312 | 0.8085 | 0.6764 | 0.7975 | 0.7979 | 0.7977 | |

| CAIDA07 (with ) | N-over-D | 0.7622 | 0.8025 | 0.6884 | 0.8149 | 0.7995 | 0.8071 |

| Enhanced N/D | 0.7622 | 0.1851 | 0.6884 | 0.8149 | 0.7995 | 0.8071 | |

| HULK (without ) | N-over-D | 0.9009 | 0.6432 | 0.7340 | 0.8147 | 0.8470 | 0.8305 |

| Enhanced N/D | 0.9164 | 0.6936 | 0.7108 | 0.8001 | 0.8319 | 0.8157 | |

| LOIC (without ) | N-over-D | 0.9269 | 0.7857 | 0.6855 | 0.8032 | 0.8036 | 0.8034 |

| Enhanced N/D | 0.9385 | 0.8244 | 0.6700 | 0.7935 | 0.7939 | 0.7937 | |

| CAIDA07 (without ) | N-over-D | 0.7849 | 0.0326 | 0.9324 | 0.9674 | 0.9491 | 0.9582 |

| Enhanced N/D | 0.7848 | 0.0330 | 0.9317 | 0.9670 | 0.9487 | 0.9578 |

N-over-D estimates the likelihood that a request is an attack given that it is observed in the attack traffic; the enhanced two-step learning process cannot separate the attack from normal traffic. The attack analysis (see Section 6.6) helps us better understand why it is challenging for this two-step process to differentiate the attacks. The attacks generated by MimicShift are designed to closely mimic the normal traffic, resulting in a very high similarity to the normal traffic. This approach trains two different models to estimate the conditional probabilities of normal and attack traffic separately. Hence, both attack and normal traffic distributions are close, so it learns to assign them with similar likelihoods (see Figure LABEL:fig:NDonline_distr). Hence, it becomes tough for this approach to differentiate normal from attack traffic.

In addition, we plot the false-negative graphs of the implemented enhanced countermeasure for all three attacks (see Figure 6). It demonstrates that while the countermeasure slightly improves the performance of the N-over-D approach, it significantly enhances the performance of the Iterative Classifier.

7. Discussion

In this section, we discuss the current limitations of MimicShift and potential DDoS filtering strategies against non-stationary attack traffic, reviewing the implications.

7.1. Limitations

The limitations of MimicShift arise from the design objective, where the generated attack traffic aims to be real with respect to the attacker. The goal of the generative model is for the poisoning attack to work on the online learning filters. It is designed to fool the learning filters and for the defense to fail. Hence, it is a lower requirement, and the fidelity of the generated traffic is not as essential for this requirement. In addition, MimicShift requires a reasonable estimate of the interval to shift its traffic accordingly to poison the online learning filters effectively. Finally, there is an inherent speed and performance trade-off in the interval size, as a larger interval size reduces the rate of detection and increases the filtering performance. In contrast, a smaller interval results in faster filtering and reduces filter performance. The attacker is subject to the effects of such changes in the interval sizes.

7.2. Existing Online Filtering Methods

Existing approaches to the online filtering of DDoS attacks rely either on simple decision models (He et al., 2017), rudimentary learning algorithms (Daneshgadeh Çakmakçı et al., 2020; Lima Filho et al., 2019), or deep learning models (Tann et al., 2021b) to rank the network requests according to their legitimacy. The filtering methods then set a threshold proportion of the lowest ranking traffic to reject, assuming that the methods assign attack requests a low score accurately and vice-versa for the normal requests. As such, it has been suggested that increasing the threshold to reject a larger proportion of traffic could further mitigate the attacks (Tann et al., 2021b). However, our results show that there is a trade-off between increasing the threshold, which increases the FPR and reduces the FNR, rendering the above-mentioned filtering methods ineffective. For example, a high threshold reduces the number of falsely rejected requests but at the same time falsely classifies more attack traffic as normal, allowing them through to the target server.

7.3. Potential Filtering Strategies

Hardening the Accept Threshold. The previous deep learning online filtering work suggested that the approaches (Tann et al., 2021b) are robust against the choice of rejection threshold (0.5–0.7) and time interval for each period of learning the attack traffic. One reason for the robustness is that volumetric DDoS attacks characteristically make up more than half the traffic, reaching up to 80–90% of the traffic volume, and this large proportion of attack enables a higher threshold to not adversely affect the filtering performance.

In Tann et al. (Tann et al., 2021b), the authors reported that adjusting the rejection threshold from 0.5 to 0.7 into steps of 0.05 has a negligible effect on the false positive rate. However, as we are concerned with attack traffic eluding online filters, we focus on the false-negative rate; our experiments (see Section 6.4) suggest that a change in acceptance threshold could considerably affect the FNR. Thus, a potential filtering strategy would be to set a lower acceptance threshold to increase the chance of stopping attack requests from slipping through the filter. It is a strategy that is worth investigating in future works.

Random Learning of Attack. One other potential filtering strategy is to set the attack traffic learning model to embed some level of randomness. In particular, the learning strategy of a learning model for attack traffic can choose a time interval from a range of short spans for each period , with some probability. Given that the interval between 1 and 10 mins does not have much of an effect on the filtering performance, the strategy that makes a random selection of for attack traffic learning could possibly mitigate the effects of shifting attack traffic. Further study is needed to analyze the impact of such a countermeasure.

Similarity of Distribution. Another potential filtering strategy for shifting attack distributions is to only accept requests during the attack period with the closest probability score to that of normal traffic. Most filtering methods are able to approximate the normal traffic distribution to a high degree of accuracy. Hence, during an attack, another model can be employed to learn the attack traffic of that specific period and estimate the distribution of the attack requests. With both distributions, the filtering mechanism would only accept requests from the attack traffic that falls in the distribution of normal traffic plus some specified error margin, thereby greatly minimizing the FNR. In other words, the filtering system is designed to be very strict that traffic during a detected attack falls within the scope of normal behavior.

7.4. Shifting for Improved Filtering

Optimistically, the controlled synthetic generation of various types of network traffic, most likely unobserved previously, can surprisingly aid in building enhanced DDoS filtering systems robust against poisoning attacks. As this is the first work to suggest poisoning attacks on online deep-learning-based DDoS filtering, the robustness of such filters can be secured by occasionally injecting shifting attack traffic throughout its training process. In particular, the generated shifting attack traffic that retains some level of normal traffic properties, especially the ones that are ranked highly to be normal by the online learning systems, can be included and explicitly labeled in the training dataset of the model training during the normal period. We note that by randomly injecting various shifted attack traffic into the normal period training process, the online filters are capable of learning to adapt attack traffic with a wide range of diversity, thereby enhancing the robustness against shifting attack traffic during an attack.

8. Related Work

In this section, we examine some of the most relevant works that take statistical and machine learning approaches in DDoS attacks and defenses.

8.1. Approaches to DDoS Defense

Statistical. Statistical approaches to DDoS mitigation, widely popular when first introduced in the early s (Zargar et al., 2013), generally involve methods that measure select statistics of network traffic properties, such as entropy scoring of network packets (Wang et al., 2015; Yu et al., 2015; Gaurav and Singh, 2017; Kalkan et al., 2018), applied to IP filtering (Tao Peng et al., 2003; Peng et al., 2002) and IP source traceback (Kuznetsov et al., 2002; Yu et al., 2011). However, given that DDoS attacks vary from previous attacks, statistical methods are not generally appropriate in an online setting as some traffic statistics cannot be computed during attacks. While Kim et al. (Yoohwan Kim et al., 2004) proposed a statistical method to support distributed filtering and automated online attack characterization, which assigns a score to each packet that estimates a packet’s legitimacy given the attributes, it remains especially challenging for statistical techniques to perform online updates and select appropriate model parameters that minimize false detection rates.

Machine Learning. The rapid progress of machine learning has led to the increasingly widespread adoption of deep learning models in DDoS filtering. These learning models can be broadly categorized into two main groups, where one group takes an offline approach (Yin et al., 2017; Cui et al., 2018; Li et al., 2018; Kwon et al., 2018; Yuan et al., 2017; Roopak et al., 2019), where the models are trained on all attack data that is observed and available, and the other group adopts an online learning approach (Tann et al., 2021b; Lima Filho et al., 2019; He et al., 2017; Daneshgadeh Çakmakçı et al., 2020; Doriguzzi-Corin et al., 2020), which trains models that only receive data incrementally, as more attack traffic is observed with time. Offline learning methods (Kwon et al., 2018; Yin et al., 2017; Cui et al., 2018; Li et al., 2018; Yuan et al., 2017; Roopak et al., 2019) utilize various types of deep learning models based on Convolutional Neural Network (CNN) and Recurrent Neural Network (RNN) architectures. It is observed that modeling network traffic through time in a sequential manner, retaining historical information, results in better filtering performance. RNN-based intrusion detection systems (Yin et al., 2017; Cui et al., 2018; Li et al., 2018; Yuan et al., 2017) demonstrate that the sequential learning models demonstrate superior modeling capabilities. They were able to detect sophisticated attacks in various intrusion detection tasks. However, all these methods preprocess the entire training data for model training, and they are not designed for online learning to perform adaptive updates as new data is observed.

Online Learning. There is only a handful of online learning approaches to DDoS filtering. While an online method (Doriguzzi-Corin et al., 2020) uses ground-truth labels for training, the other methods (Tann et al., 2021b; He et al., 2017; Lima Filho et al., 2019; Daneshgadeh Çakmakçı et al., 2020) do not use data labels for training. However, these approaches either employ basic machine learning algorithms, simple decision tree methods, or even a rudimentary kernel-based learning algorithm with some Mahalanobis distance measure and a chi-square test. Their modeling capabilities limit these online approaches. Tann et al. (Tann et al., 2021b) presented one of the only approaches that employ powerful online deep learning models, which does not use data labels for training.

8.2. Attacking DDoS Defenses

There is little literature on adversarial attacks targeted at DDoS detection systems and the corresponding relevant studies. One such analysis (Li et al., 2005) was performed to determine an adaptive statistical filter can effectively defend against attackers that dynamically change their behavior. They found that, in fact, the adaptive filter performs much worse against a static attacker than if the attacker is dynamic. While in some recent works (Ibitoye et al., 2019; Warzyński and Kołaczek, 2018), the methods leverage machine learning to generate adversarial samples to fool DDoS detection on deep-learning intrusion detection systems. These attacks generate adversarial samples in a white-box attack setting, where attackers have complete knowledge of the target model. Other methods (Hashemi et al., 2019; Peng et al., 2019, 2019; Huang et al., 2020; Liu and Yin, 2021) present black-box attacks, where the attacker does not know the details of the target model. They perform various manipulative procedures and perturbations on adversarial samples until a sample successfully evades the target DDoS detector. However, there is no study on the effectiveness of poisoning attacks on online deep-learning DDoS filters.

9. Conclusion

Given the recent success and growing interest in machine learning, we expect increased adoption of online learning models to mitigate and filter prevailing cyber attack issues. We identify a particularly challenging problem, DDoS attacks, a primary concern in present-day network security. Hence, we conduct the first systematic study of data poisoning attacks on online deep-learning-based filtering systems. In this study, we show that online learning filters are not robust against “crafty” attackers that can shift attack traffic distributions and vary the type of attack requests from time to time. When an online-learning filter wrongly estimates the attack distribution in the next period based on the preceding period, its performance would be much worse than that on a fixed attack distribution. We demonstrate that online adaptive filters are vulnerable to attackers who act in an erratic and unpredictable manner, causing the filter much difficulty to update its adaptation accurately.

In addition, we propose a controllable generative method, MimicShift, for generating attack traffic of specific distributions for poisoning online filtering systems. Such an approach assumes that the generative method only observes the given traffic and has no other knowledge about the filtering systems. The effectiveness of our proposed approach is demonstrated by experimental studies conducted against two recent online deep-learning-based DDoS filtering methods. Furthermore, we suggest practical protective countermeasures, which enhance online deep-learning-based defenses against poisoning attacks. Empirical studies verify that our proposed defensive countermeasure can effectively minimize the impact of such attacks, serving as a starting point for the future development of robust defenses.

References

- (1)

- Biggio et al. (2012) Battista Biggio, Blaine Nelson, and Pavel Laskov. 2012. Poisoning Attacks against Support Vector Machines. In Proceedings of the 29th International Conference on International Conference on Machine Learning.

- Cui et al. (2018) Jianjing Cui, Jun Long, Erxue Min, Qiang Liu, and Qian Li. 2018. Comparative Study of CNN and RNN for Deep Learning Based Intrusion Detection System. In Cloud Computing and Security.

- Daneshgadeh Çakmakçı et al. (2020) Salva Daneshgadeh Çakmakçı, Thomas Kemmerich, Tarem Ahmed, and Nazife Baykal. 2020. Online DDoS attack detection using Mahalanobis distance and Kernel-based learning algorithm. Journal of Network and Computer Applications (2020).

- Doriguzzi-Corin et al. (2020) R. Doriguzzi-Corin, S. Millar, S. Scott-Hayward, J. Martinez-del Rincon, and D. Siracusa. 2020. LUCID: A Practical, Lightweight Deep Learning Solution for DDoS Attack Detection. IEEE Transactions on Network and Service Management (2020).

- Gaurav and Singh (2017) A. Gaurav and A. K. Singh. 2017. Entropy-score: A method to detect DDoS attack and flash crowd. In 2017 2nd IEEE International Conference on Recent Trends in Electronics, Information Communication Technology (RTEICT).

- Hashemi et al. (2019) Mohammad J. Hashemi, Greg Cusack, and Eric Keller. 2019. Towards Evaluation of NIDSs in Adversarial Setting. In Proceedings of the 3rd ACM CoNEXT Workshop on Big DAta, Machine Learning and Artificial Intelligence for Data Communication Networks.

- He et al. (2017) Z. He, T. Zhang, and R. B. Lee. 2017. Machine Learning Based DDoS Attack Detection from Source Side in Cloud. In 2017 IEEE 4th International Conference on Cyber Security and Cloud Computing (CSCloud).

- Hick et al. (2007) P. Hick, E. Aben, K. Claffy, and J. Polterock. 2007. The CAIDA DDoS attack 2007 dataset.

- Hochreiter and Schmidhuber (1997) Sepp Hochreiter and Jürgen Schmidhuber. 1997. Long Short-Term Memory. Neural Comput. (1997).

- Huang et al. (2020) Weiqing Huang, Xiao Peng, Zhixin Shi, and Yuru Ma. 2020. Adversarial Attack against LSTM-based DDoS Intrusion Detection System. In 2020 IEEE 32nd International Conference on Tools with Artificial Intelligence (ICTAI).

- Ibitoye et al. (2019) Olakunle Ibitoye, Omair Shafiq, and Ashraf Matrawy. 2019. Analyzing Adversarial Attacks Against Deep Learning for Intrusion Detection in IoT Networks. arXiv preprint 1905.05137 (2019).

- Jagielski et al. (2018) Matthew Jagielski, Alina Oprea, Battista Biggio, Chang Liu, Cristina Nita-Rotaru, and Bo Li. 2018. Manipulating machine learning: Poisoning attacks and countermeasures for regression learning. In 2018 IEEE Symposium on Security and Privacy (SP).

- Kalkan et al. (2018) K. Kalkan, L. Altay, G. Gür, and F. Alagöz. 2018. JESS: Joint Entropy-Based DDoS Defense Scheme in SDN. IEEE Journal on Selected Areas in Communications (2018).

- Kuznetsov et al. (2002) Vadim Kuznetsov, Helena Sandström, and Andrei Simkin. 2002. An Evaluation of Different IP Traceback Approaches. In Information and Communications Security.

- Kwon et al. (2018) D. Kwon, K. Natarajan, S. C. Suh, H. Kim, and J. Kim. 2018. An Empirical Study on Network Anomaly Detection Using Convolutional Neural Networks. In 2018 IEEE 38th International Conference on Distributed Computing Systems (ICDCS).

- Li et al. (2016) Bo Li, Yining Wang, Aarti Singh, and Yevgeniy Vorobeychik. 2016. Data Poisoning Attacks on Factorization-Based Collaborative Filtering. In Proceedings of the 30th International Conference on Neural Information Processing Systems (NIPS’16).

- Li et al. (2018) Chuanhuang Li, Yan Wu, Xiaoyong Yuan, Zhengjun Sun, Weiming Wang, Xiaolin Li, and Liang Gong. 2018. Detection and defense of DDoS attack–based on deep learning in OpenFlow-based SDN. International Journal of Communication Systems (2018).

- Li et al. (2005) Q. Li, E.-C. Chang, and M.C. Chan. 2005. On the effectiveness of DDoS attacks on statistical filtering. In Proceedings IEEE 24th Annual Joint Conference of the IEEE Computer and Communications Societies.

- Lima Filho et al. (2019) Francisco Sales de Lima Filho, Frederico AF Silveira, Agostinho de Medeiros Brito Junior, Genoveva Vargas-Solar, and Luiz F Silveira. 2019. Smart Detection: An Online Approach for DoS/DDoS Attack Detection Using Machine Learning. Security and Communication Networks (2019).

- Liu and Yin (2021) Zengguang Liu and Xiaochun Yin. 2021. LSTM-CGAN: Towards Generating Low-Rate DDoS Adversarial Samples for Blockchain-Based Wireless Network Detection Models. IEEE Access (2021).

- Mei and Zhu (2015) Shike Mei and Xiaojin Zhu. 2015. The Security of Latent Dirichlet Allocation. In Proceedings of the Eighteenth International Conference on Artificial Intelligence and Statistics (Proceedings of Machine Learning Research).

- Mirza and Osindero (2014) Mehdi Mirza and Simon Osindero. 2014. Conditional Generative Adversarial Nets. arXiv preprint 1411.1784 (2014).

- Peng et al. (2002) Tao Peng, Christopher Leckie, and Kotagiri Ramamohanarao. 2002. Detecting Distributed Denial of Service Attacks Using Source IP Address Monitoring. In Proceedings of the Third International IFIP-TC6 Networking Conference.

- Peng et al. (2019) Xiao Peng, Weiqing Huang, and Zhixin Shi. 2019. Adversarial Attack Against DoS Intrusion Detection: An Improved Boundary-Based Method. In 2019 IEEE 31st International Conference on Tools with Artificial Intelligence (ICTAI).

- Roopak et al. (2019) M. Roopak, G. Yun Tian, and J. Chambers. 2019. Deep Learning Models for Cyber Security in IoT Networks. In 2019 IEEE 9th Annual Computing and Communication Workshop and Conference (CCWC).

- Rubinstein et al. (2009) Benjamin IP Rubinstein, Blaine Nelson, Ling Huang, Anthony D Joseph, Shing-hon Lau, Satish Rao, Nina Taft, and J Doug Tygar. 2009. Antidote: understanding and defending against poisoning of anomaly detectors. In Proceedings of the 9th ACM SIGCOMM Conference on Internet Measurement.

- Sharafaldin. et al. (2018) Iman Sharafaldin., Arash Habibi Lashkari., and Ali A. Ghorbani. 2018. Toward Generating a New Intrusion Detection Dataset and Intrusion Traffic Characterization. In Proceedings of the 4th International Conference on Information Systems Security and Privacy - Volume 1: ICISSP.

- Tann et al. (2021a) Wesley Joon-Wie Tann, Ee-Chien Chang, and Bryan Hooi. 2021a. SHADOWCAST: Controllable Graph Generation. arXiv preprint 2006.03774 (2021).

- Tann et al. (2021b) Wesley Joon-Wie Tann, Jackie Jin Wei Tan, Joanna Purba, and Ee-Chien Chang. 2021b. Filtering DDoS Attacks from Unlabeled Network Traffic Data Using Online Deep Learning. In Proceedings of the 2021 ACM Asia Conference on Computer and Communications Security.

- Tao Peng et al. (2003) Tao Peng, C. Leckie, and K. Ramamohanarao. 2003. Protection from distributed denial of service attacks using history-based IP filtering. In IEEE International Conference on Communications. ICC.

- Wang et al. (2015) R. Wang, Z. Jia, and L. Ju. 2015. An Entropy-Based Distributed DDoS Detection Mechanism in Software-Defined Networking. In 2015 IEEE Trustcom/BigDataSE/ISPA.

- Warzyński and Kołaczek (2018) Arkadiusz Warzyński and Grzegorz Kołaczek. 2018. Intrusion detection systems vulnerability on adversarial examples. In 2018 Innovations in Intelligent Systems and Applications (INISTA).

- Xiao et al. (2015) Huang Xiao, Battista Biggio, Gavin Brown, Giorgio Fumera, Claudia Eckert, and Fabio Roli. 2015. Is feature selection secure against training data poisoning?. In International Conference on Machine Learning.

- Yang et al. (2017b) Chaofei Yang, Qing Wu, Hai Li, and Yiran Chen. 2017b. Generative Poisoning Attack Method Against Neural Networks. arXiv preprint 1703.01340 (2017).

- Yang et al. (2017a) Guolei Yang, Neil Zhenqiang Gong, and Ying Cai. 2017a. Fake Co-visitation Injection Attacks to Recommender Systems.. In NDSS.

- Yin et al. (2017) C. Yin, Y. Zhu, J. Fei, and X. He. 2017. A Deep Learning Approach for Intrusion Detection Using Recurrent Neural Networks. IEEE Access (2017).

- Yoohwan Kim et al. (2004) Yoohwan Kim, Wing Cheong Lau, Mooi Choo Chuah, and H. J. Chao. 2004. Packetscore: statistics-based overload control against distributed denial-of-service attacks. In IEEE INFOCOM.

- Yu et al. (2015) S. Yu, S. Guo, and I. Stojmenovic. 2015. Fool Me If You Can: Mimicking Attacks and Anti-Attacks in Cyberspace. IEEE Trans. Comput. (2015).

- Yu et al. (2011) S. Yu, W. Zhou, R. Doss, and W. Jia. 2011. Traceback of DDoS Attacks Using Entropy Variations. IEEE Transactions on Parallel and Distributed Systems (2011).

- Yuan et al. (2017) Xiaoyong Yuan, Chuanhuang Li, and Xiaolin Li. 2017. DeepDefense: Identifying DDoS Attack via Deep Learning. 2017 IEEE International Conference on Smart Computing (SMARTCOMP) (2017).

- Zargar et al. (2013) S. T. Zargar, J. Joshi, and D. Tipper. 2013. A Survey of Defense Mechanisms Against Distributed Denial of Service (DDoS) Flooding Attacks. IEEE Communications Surveys Tutorials (2013).

Appendix

A. Evaluation Metrics

The evaluation metrics are defined as:

-

FNR =

-

FPR =

-

ACC =

-

Prec. =

-

Rec. =

-

F1 =

where , , , . We run all the experiments on NVIDIA Tesla P100 GPUs with 12 GB memory. The models have been implemented in Python v3.7.5 using the Keras v2.2.4 library on top of the Tensorflow v1.14.0 machine learning framework.

B. Feature Analysis

We perform feature analysis on the traffic data of the three datasets to analyze the characteristics of the distributions. A large portion of the requests is typically from only a few top classes of the features, concentrating among the top 3–5 classes.